Velocity and orientation detection of dynamic textures using integrated algorithm

Authors: Shilpa Paygude, Vibha Vyas, Chinmay Khadilkar

Journal: International Journal of Image, Graphics and Signal Processing

Issue: no. 12, vol. 10, 2018.

Free access

Dynamic Texture analysis is an active field in computer vision. Dynamic Textures are temporal extensions of static Textures. There are broadly two categories of Dynamic Textures: natural and manmade. Smoke, fire, water and trees are natural, while traffic and crowds are manmade Dynamic Textures. This paper proposes an integrated, efficient algorithm for detecting two features of objects in Dynamic Textures, namely velocity and orientation. These two features can be used for identifying the velocity of vehicles in traffic, for stampede prediction and for finding the direction of cloud movement. The optical flow technique is used to obtain the velocity of the objects in motion. Since optical flow is computationally expensive, it is applied after background subtraction, which reduces the number of computations. The variance feature of the Gabor filter is used to find the orientation, which gives the direction of movement of the majority of objects in a video. Together, optical flow and the Gabor filter give accurate orientation and velocity of a Dynamic Texture with fewer computations and less processing time. The proposed algorithm can be used in real-time applications. Velocity detection is done using Farneback optical flow, and orientation (angle) detection is done using a bank of Gabor filters. Existing methods calculate either velocity or orientation accurately, but only individually. Varied datasets are used for experimentation, and the results are validated for the selected databases.

Dynamic Texture, Degree of dynamism, Manmade Dynamic Texture, Optical Flow, Gabor Filter

Short URL: https://sciup.org/15016019

IDR: 15016019 | DOI: 10.5815/ijigsp.2018.12.05

Full text of the article: Velocity and orientation detection of dynamic textures using integrated algorithm

Dynamic Textures are sequences of images of moving scenes that exhibit certain stationarity properties in time [1]. They are also regarded as the temporal extension of static Textures. Static Textures are images with a repetitive pattern of pixels; this repetitive pattern forms a kind of Texture. Dynamic Textures are videos with a stationary background and moving objects as foreground. Examples are waving trees, ocean water, smoke, fire and waterfalls. The databases used are verified to contain Dynamic Textures (DT). For example, in an ocean-water video the water is in motion while the rest of the background remains constant. Classification of Texture in general, and of Dynamic Texture in particular, is an open issue. However, for convenience and as required here, Dynamic Textures are classified into two categories, namely natural and manmade. Waterfalls, clouds and ocean water are natural, whereas highway traffic and moving crowds are considered manmade Dynamic Textures. Dynamic Texture processing has two broad approaches: analysis and synthesis. Synthesis generates Dynamic Texture videos from mathematical models and finds applications in gaming and simulation. Analysis processes videos with various techniques to extract useful information; the information extracted depends on the application for which it is going to be used. Since natural and manmade Dynamic Textures have different inherent properties, a given technique tends to give good results on only one of the two types. Techniques that work on natural Dynamic Textures may not give similar results on manmade Dynamic Textures.

The work discussed in this paper segments the Dynamic Texture in a video clip using a combination of optical flow and the Gabor filter. The segmented video is then processed to detect the velocity and orientation of the Dynamic Texture. Optical flow is a natural way of obtaining information about objects in motion. The technique is based on the brightness constancy assumption. In general, optical flow applied to an image sequence performs calculations on all pixels, including stationary ones. To avoid such redundant calculations, background subtraction is applied before the optical flow computations. This makes the system efficient in terms of both computational complexity and time complexity. Optical flow is used to detect velocity, which represents the degree of dynamism of the objects in the video. The Gabor filter is applied to obtain the orientation of the Dynamic Texture. A Gabor filter is basically a Gaussian envelope modulated by an exponential or sinusoidal signal.

The paper is organized as follows. The second section briefly covers previous work on Dynamic Textures based on optical flow. The third section discusses the technical aspects of Farneback optical flow and the Gabor filter, which are used to detect the velocity and orientation of DT. Experiments and results are discussed and analyzed in the fourth section, and the conclusion is presented in the fifth section. The list of references is included at the end.

II. Related Work

Dynamic Texture was first defined by R. Nelson and R. Polana [1]. According to them, people are able to distinguish highly structured motions, such as those produced by walking, running, swimming or flying birds, from more statistical patterns such as blowing snow, flowing water or fluttering leaves. S. Soatto, G. Doretto and Y. Wu further worked on segmenting a sequence of images of natural scenes into disjoint regions with constant spatiotemporal statistics [2]. The spatiotemporal dynamics are modeled by a Gauss-Markov model, which gives good distinction even when the regions differ in their dynamics.

G. Doretto, A. Chiuso, Y. N. Wu, and S. Soatto [3] and G. Doretto, D. Cremers, P. Favaro, and S. Soatto [4] presented a characterization of Dynamic Textures that is useful in modeling, learning, recognizing and synthesizing Dynamic Textures. Tools from system identification were used to capture the "essence" of Dynamic Textures. Models are learned, or identified, that are optimal in the sense of maximum likelihood or minimum prediction-error variance. A first-order ARMA model driven by white zero-mean Gaussian noise can capture complex visual phenomena.

Methods based on optical flow [5,6,7] are currently the most popular. Optical flow is a computationally efficient and natural way to characterize the local dynamics of a temporal Texture, and it gives good results. It reduces Dynamic Texture analysis to the analysis of a sequence of motion patterns of static Textures. Appearance and motion features are combined to accomplish a complete analysis.

H. Quan [8] combined the optical flow technique with a method based on the level set strategy. This method works faster and gives accurate results. Jie Chen, Guoying Zhao and Matti Pietikäinen [9] and Jie Chen, Guoying Zhao, Mikko Salo, Esa Rahtu, and Matti Pietikäinen [10] employed a new spatiotemporal local Texture descriptor that combines the local binary pattern with a differential excitation measure. They combined a technique based on the Local Binary Pattern and the Weber Local Descriptor with optical flow for automatic Dynamic Texture segmentation. The Histogram of Oriented Optical Flow and threshold selection based on supervised statistical learning are included to improve the results of the algorithm. D. Chetverikov and R. Peteri [11] described various techniques used for DT recognition and description. DT recognition is the field of identifying a particular Dynamic Texture by its unique characteristic features.

R. Vidal and A. Ravichandran [12] and A. Rahman and M. Murshed [13] worked on the segmentation of multiple Dynamic Textures. This work focuses on datasets that have multiple Dynamic Textures in a single video. They successfully separated fire from an ocean-water background. T. Amiaz, S. Fazekas, D. Chetverikov, and N. Kiryati [14] detected different regions in DT. T. Ojala and M. Pietikäinen [15] used an unsupervised approach for DT segmentation.

A. B. Chan and N. Vasconcelos [16,17] contributed to the modeling and clustering of mixtures of Dynamic Textures. They also considered multi-layered Dynamic Textures. A dataset of various DTs is processed and clustered into groups. D. Chetverikov, S. Fazekas, and M. Haindl [18] presented a technique that treats Dynamic Texture as background and foreground.

III. Proposed Algorithm

The proposed algorithm has three steps. First, the Dynamic Texture is segmented using background subtraction. Then the angle, or orientation, of the DT is detected using the Gabor filter. Finally, the maximum velocity of the DT is measured using the Farneback optical flow technique. To avoid unnecessary calculations, background subtraction is applied before optical flow. This reduces the computational and time complexity of the system. A. Bruhn and J. Weickert [19] proposed a number of ways of calculating optical flow. There are two broad approaches to optical flow, namely local and global, or sparse and dense. The Horn and Schunck technique is based on the brightness constancy assumption. It is a global method that yields flow fields with high density; however, it is more sensitive to noise. A local technique such as Lucas-Kanade offers high robustness under noise, but it has two shortcomings. First, it does not give dense flow fields. Second, it works with control points in the Dynamic Texture. A Lucas-Kanade based approach computes the direction of pixels individually; it does not compute a unique direction for the Texture. Some other technique is therefore required to find the orientation in which the majority of objects move in videos containing multiple objects. Gunnar Farneback [20] proposed an optical flow algorithm that works well for tracing the Dynamic Texture.
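The saving obtained by running background subtraction before optical flow can be illustrated with a small sketch. It is not the authors' implementation: the paper does not state which background-subtraction method is used, so simple frame differencing against a fixed background frame is assumed here, and the names `foreground_mask`, `count_active` and `threshold` are hypothetical.

```python
def foreground_mask(background, frame, threshold=20):
    """Return a binary mask: 1 where the frame differs from the background.

    Frames are lists of rows of grey levels (0-255). Frame differencing with a
    fixed threshold is an assumption made for illustration only.
    """
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


def count_active(mask):
    """Number of pixels that optical flow would still have to process."""
    return sum(sum(row) for row in mask)


# Toy 4x6 scene: a flat background and a bright 2x2 object in the current frame.
background = [[10] * 6 for _ in range(4)]
frame = [row[:] for row in background]
for r in (1, 2):
    for c in (3, 4):
        frame[r][c] = 200

mask = foreground_mask(background, frame)
total = len(frame) * len(frame[0])
print(count_active(mask), "of", total, "pixels need flow computation")
```

In this toy scene only the pixels covered by the moving object remain, so a flow estimator restricted to the mask would skip the stationary background entirely.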

Studies have shown that the Gabor filter detects orientation accurately. The variance of the Gabor filter response is chosen as the key feature for detecting the angle of an oriented Texture. S. Agarwal, P. K. Verma and M. A. Khan applied a Gabor filter bank to palm print detection [21]. The Texture feature is extracted from each part of the image separately using different orientations of the Gabor filter. Their method gives good results even if the palm is in a slightly different position with respect to the scanner. Velocity is found using the Farneback method. The Laplacian of Gaussian (LoG) is used to detect the edge of the segmented region. This edge of the Dynamic Texture is then tracked using Farneback optical flow, and the dominant velocity is calculated, which in turn provides the overall velocity information of the DT. A Gabor filter bank of sixteen orientations is applied, one orientation at a time, to obtain an accurate orientation.

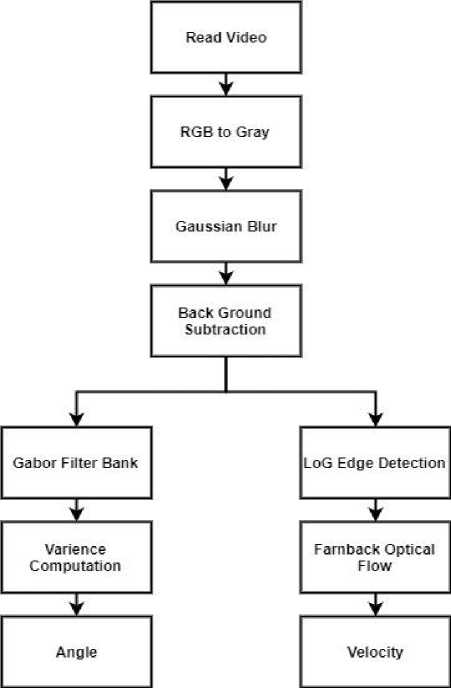

The block diagram of the system is shown in Fig. 1.

Fig. 1. System block diagram

A. Gabor Filter and its Parameters

The Gabor filter is used extensively for Texture detection and in visual systems. Its response approximates that of cells in the human visual cortex. Gabor filters have three important characteristics: they are band-pass, orientation selective and direction selective. Mathematically, the filter is a Gaussian pulse modulated by a complex exponential or sinusoidal function. Hence it gives an optimal response in both the spatial and the spectral domain.

Gabor filters can be configured to have various shapes, bandwidths, center frequencies and orientations by adjusting suitable parameters [22]. Since wavelets can be local in either the time or the frequency domain, one at a time, Gabor observed the need for a representation that is local in both the time and frequency domains and is discrete. He proposed expanding a function into a series of functions constructed from a single building block by translation and modulation. The Gabor filter has also been extended to 3D for bicycle detection by Kazuyuki Takahashi, Yasutaka Kuriya and Takashi Morie [23]. They used shape-based object detection with the Histogram of Oriented Gradients and a Support Vector Machine.

Gabor observed that there should be a representation that is local in both the time and frequency domains, and that such a representation should be discrete so that it is better adapted to various applications.

The Gabor filter is basically a Gaussian band-pass filter with spreads of $\sigma_x$ and $\sigma_y$ along the x and y axes respectively. Equation (1) represents the Fourier transform of the Gabor filter.

[Equation (1): Fourier transform of the Gabor filter; not recoverable from the source text]

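The real part of the Gabor filter can be expressed as shown in equation (2).

$$G(x, y) = \exp\!\left(-\frac{x'^2 + y'^2}{2\sigma^2}\right) \cos\!\left(\frac{2\pi x'}{\lambda} + \psi\right) \tag{2}$$

where

$$x' = x\cos(\theta) + y\sin(\theta) \tag{3}$$

$$y' = -x\sin(\theta) + y\cos(\theta) \tag{4}$$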

Here σ is the standard deviation of the Gaussian envelope, and x and y are the spatial coordinates of the individual pixels in the image to be filtered. The spatial frequency is set by λ. Equations (3) and (4) describe the relation between θ and the rotated coordinates x′ and y′. The direction of the filter is controlled by θ. For the Gabor filter, 0° is the vertical filter, so this filter gives the optimal response to vertical Texture. A statistical feature, the variance of the Gabor response, is used to detect the Texture angle.
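The role of θ and of the response variance can be seen in a minimal pure-Python sketch of a sixteen-orientation filter bank built from equations (2) to (4). It is only an illustration under stated assumptions, not the authors' code: an isotropic envelope with σ = 2, a wavelength λ = 4, a zero phase offset `psi`, a 9×9 kernel and synthetic stripe images are all assumed, and the function names are hypothetical.

```python
import math


def gabor_kernel(theta, sigma=2.0, lam=4.0, psi=0.0, half=4):
    """Real Gabor kernel: isotropic Gaussian envelope times a cosine carrier."""
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)    # equation (3)
            yr = -x * math.sin(theta) + y * math.cos(theta)   # equation (4)
            envelope = math.exp(-(xr * xr + yr * yr) / (2.0 * sigma ** 2))
            row.append(envelope * math.cos(2.0 * math.pi * xr / lam + psi))
        kernel.append(row)
    return kernel


def response_variance(image, kernel):
    """Variance of the filter response over all fully overlapping positions."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    values = []
    for r in range(h - k + 1):
        for c in range(w - k + 1):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    acc += kernel[i][j] * image[r + i][c + j]
            values.append(acc)
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def dominant_orientation(image, n_orientations=16):
    """Angle in degrees of the bank filter whose response variance is largest."""
    best_k = max(
        range(n_orientations),
        key=lambda k: response_variance(
            image, gabor_kernel(k * math.pi / n_orientations)
        ),
    )
    return best_k * 180.0 / n_orientations


# Synthetic 24x24 test patterns with a 4-pixel period (assumed test data).
size, period = 24, 4.0
vertical = [[math.cos(2 * math.pi * x / period) for x in range(size)]
            for _ in range(size)]
horizontal = [[math.cos(2 * math.pi * y / period)] * size for y in range(size)]

print(dominant_orientation(vertical))    # vertical stripes
print(dominant_orientation(horizontal))  # horizontal stripes
```

With these settings the vertical stripes select the 0° filter and the horizontal stripes select the 90° filter, in line with the convention that 0° corresponds to vertical Texture. The bank spacing of 11.25° follows from dividing 180° into sixteen orientations.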


B. Farneback method for calculation of Optical flow

The optical flow method was developed to estimate the motion between frames. J. Hur and S. Roth have recently used optical flow to overcome the occlusion problem. They proposed a model that jointly estimates optical flow in the forward and backward directions, together with consistent occlusion maps [24]. J. Wulff, L. Sevilla-Lara and M. J. Black proposed an optical flow algorithm that estimates an explicit segmentation of moving objects from appearance and physical constraints [25]. The Farneback method approximates the neighborhood of each pixel by a polynomial and builds a motion model from two frames.

The approximating polynomials are expanded to compute the motion. The polynomial for a neighborhood in the first frame is

$$I_1(x) \approx x^T P_1 x + q_1^T x + r_1 \tag{5}$$

where $P_1$ is a symmetric matrix, $q_1$ is a vector and $r_1$ is a scalar. Similarly, for the second frame,

$$I_2(x) \approx x^T P_2 x + q_2^T x + r_2 \tag{6}$$

If the second frame is the first frame displaced by $d$, $I_2$ can also be written in terms of $I_1$, as given in equation (7).

$$I_2(x) = I_1(x - d) = (x - d)^T P_1 (x - d) + q_1^T (x - d) + r_1 \tag{7}$$

$$I_2(x) = x^T P_1 x + (q_1 - 2 P_1 d)^T x + d^T P_1 d - q_1^T d + r_1 \tag{8}$$

Comparing the coefficients of equations (6) and (8) gives

$$P_2 = P_1 \tag{9}$$

$$q_2 = q_1 - 2 P_1 d \tag{10}$$

$$r_2 = d^T P_1 d - q_1^T d + r_1 \tag{11}$$

Considering equations (9), (10) and (11), the displacement is obtained from equation (10), provided $P_1$ is invertible, as shown in equation (12).

$$d = -\frac{1}{2} P_1^{-1} (q_2 - q_1) \tag{12}$$
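As a numerical check of the derivation, the sketch below builds the linear coefficient of the second frame from a known displacement using the relation q2 = q1 − 2·P1·d of equation (10), and then recovers the displacement with the closed form d = −½·P1⁻¹(q2 − q1) of Farneback's polynomial-expansion method. The matrix, vectors and function names are made-up illustration values, not data from the paper, and a single 2×2 neighborhood is assumed.

```python
def solve_displacement(P1, q1, q2):
    """Return d = -1/2 * P1^{-1} (q2 - q1) for a symmetric 2x2 matrix P1."""
    (a, b), (c, e) = P1
    det = a * e - b * c
    if det == 0:
        raise ValueError("P1 is singular; displacement is not determined")
    dq = (q2[0] - q1[0], q2[1] - q1[1])
    # Apply the inverse of the 2x2 matrix to dq, then scale by -1/2.
    inv_dq = ((e * dq[0] - b * dq[1]) / det, (-c * dq[0] + a * dq[1]) / det)
    return (-0.5 * inv_dq[0], -0.5 * inv_dq[1])


def shifted_linear_term(P1, q1, d):
    """Equation (10): q2 = q1 - 2 * P1 * d."""
    return (
        q1[0] - 2.0 * (P1[0][0] * d[0] + P1[0][1] * d[1]),
        q1[1] - 2.0 * (P1[1][0] * d[0] + P1[1][1] * d[1]),
    )


# Hypothetical polynomial coefficients of one neighborhood in frame 1.
P1 = ((2.0, 0.5), (0.5, 1.0))
q1 = (1.0, -3.0)
true_d = (1.5, -2.0)          # displacement applied between the two frames

q2 = shifted_linear_term(P1, q1, true_d)
recovered = solve_displacement(P1, q1, q2)
print(recovered)
```

The recovered vector equals the displacement that was applied, which confirms that the sign and the factor of one half in the closed form are consistent with equation (10). In practice the coefficients are estimated from noisy images, so the pointwise solution is usually averaged over a neighborhood.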


IV. Experimental Result

The proposed algorithm is applied to various datasets consisting of natural and manmade Dynamic Textures [26]. The datasets considered contain smoke, water streams, clouds, highway traffic and crowds. The movement in these videos can be completely described by velocity and orientation. The result of the algorithm is verified on known orientations and velocities before it is applied to a large number of test videos. The Banded category of the Describable Texture Dataset is used to test the algorithm for the orientation of objects in images. Since this dataset contains objects in known orientations, the results of the algorithm can be verified. A technique is developed to verify the velocity of motion based on two image frames. Joel Gibson and Oge Marques discuss various implementations of optical flow [27]. The optical flow algorithm is used to obtain the velocity of objects in the video and to separate moving objects from the stationary background. The bank of Gabor filters is used to obtain the orientation of the segmented part of the video. The following subsections describe in detail the techniques developed for verifying the results.

A. Verification of velocity measured

The velocity obtained is expressed in pixels per second. The technique used to verify the velocity measurement is as follows. The images considered here are of size 640×480. A grid with lines 30 pixels apart is superimposed on the image. Two frames one second apart are observed, and the displacement of the moving object in the horizontal and vertical directions is measured as a number of grid lines. Since consecutive lines are 30 pixels apart, this gives the displacement in pixels, and since the two frames are one second apart, the velocity is obtained in pixels per second. The formula used to calculate the velocity, based on the Pythagorean theorem, is shown in equation (13).

$$\text{Velocity} = \sqrt{(30\, n_h)^2 + (30\, n_v)^2} \tag{13}$$

where $n_h$ and $n_v$ are the numbers of grid lines between the two positions of the object in the horizontal and vertical directions respectively.
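The grid-based check of equation (13) amounts to a few lines of arithmetic. The sketch below is an illustration only; the function name `grid_velocity` and the example line counts are hypothetical, while the 30-pixel grid spacing and the one-second frame gap come from the text.

```python
import math

GRID_SPACING_PX = 30  # grid lines are 30 pixels apart, as stated in the text


def grid_velocity(lines_horizontal, lines_vertical, seconds=1.0):
    """Speed in pixels per second from a displacement counted in grid lines."""
    dx = lines_horizontal * GRID_SPACING_PX
    dy = lines_vertical * GRID_SPACING_PX
    return math.hypot(dx, dy) / seconds


# Hypothetical reading: the object crosses 3 lines horizontally and 4 vertically.
print(grid_velocity(3, 4))  # 150.0
```

Because displacements are counted in whole grid lines, the reference velocity is quantized to the grid spacing, which bounds how closely it can agree with the optical flow estimate.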

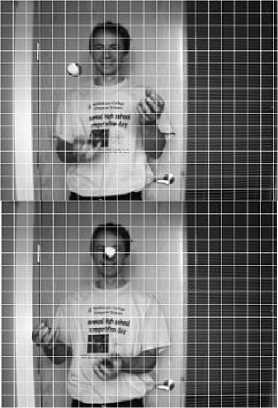

The result obtained using the Farneback optical flow algorithm is verified to be correct. Fig. 2 and Fig. 4 show the grid mechanism used to verify the velocity of objects in the video. Fig. 3 and Fig. 5 are snapshots of the output of the system with the results.

Fig. 2. Hand and ball motion across blocks

[Screenshot of a Python 2.7.13 shell; printed values: (480L, 640L, 3L), (480L, 640L), 131.196]

Fig. 3. Snapshot of the output of a program to verify the result

Fig. 4. Hand and door motion across blocks

(a) Image frames of a machine in motion

[Screenshot of a Python 2.7.13 shell; printed values: (480L, 640L, 3L), (480L, 640L), 104.071]

Fig. 5. Snapshot of the output of a program to verify the result

[Detected output shown with the figure: Velocity: 18.6692, Angle: 010]

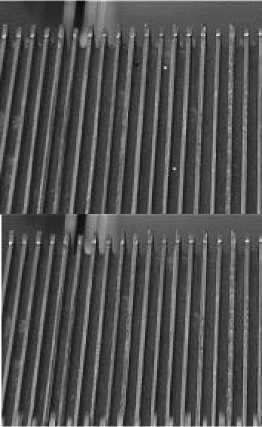

B. Verification of Orientation Measured

The orientation of the Dynamic Texture is measured with respect to the y axis. If the edge of the Texture is vertical, the orientation obtained by the algorithm is 0°. This is verified using images with stripes in different directions. For the verification of orientation, the Banded category of the Describable Texture Dataset is used. It contains images with lines oriented in various standard directions. Fig. 6 shows a sample of the dataset used for orientation measurement. The directions of the lines are horizontal, vertical and diagonal, which correspond to 90°, 0° and 135° after application of the Gabor filter.

[Detected output shown with the figure: Velocity: 27.5273, Angle: 0.0]

Fig. 6. Orientation of Banded dataset images: 90°, 0° and 135°

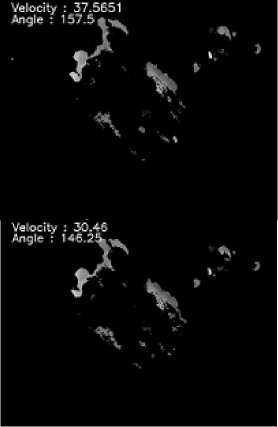

The algorithm is tested on the sample dataset and, after verification, applied to the test datasets. The results of the proposed system on various datasets are given in Fig. 7, Fig. 8 and Fig. 9. The algorithm gives the velocity in pixels per second and the orientation in degrees.

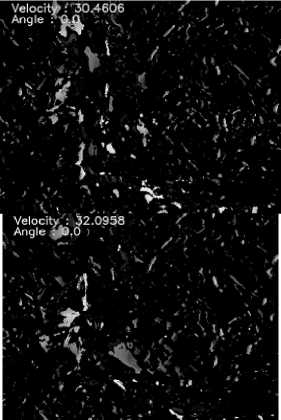

(b) Velocity and angle detected

Fig. 7. Machine results

(a) Image frames of smoke in motion

(b) Velocity and angle detected

C. Accuracy Calculation

After the algorithm is applied, the values of velocity and orientation are compared with the actual values obtained with the grid structure and the standard orientations. Table 1 shows the average accuracy values for different datasets. For each category mentioned in the table, 20 videos are tested. The accuracy is calculated using the formula in equation (14).

$$\text{Percentage Accuracy} = 100 - \left| \frac{x - y}{y} \times 100 \right| \tag{14}$$

where x is the velocity or orientation obtained using the proposed algorithm and y is the velocity or orientation obtained with the measurement technique.
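Equation (14) and the per-category averaging can be written as a short sketch. The function names and the sample values are hypothetical and are not results from the paper. The guard against a zero reference value is an added assumption: the formula is undefined when the reference orientation is 0°, and the paper does not say how that case is handled.

```python
def percentage_accuracy(measured, reference):
    """Equation (14): 100 - |(measured - reference) / reference * 100|."""
    if reference == 0:
        # Assumption: a zero reference (e.g. 0 degrees) needs separate handling.
        raise ValueError("reference value must be non-zero")
    return 100.0 - abs((measured - reference) / reference * 100.0)


def average_accuracy(pairs):
    """Mean accuracy over (measured, reference) pairs of one video category."""
    scores = [percentage_accuracy(m, ref) for m, ref in pairs]
    return sum(scores) / len(scores)


# Hypothetical example: the algorithm reports 75 px/s, the grid check gives 80 px/s.
print(percentage_accuracy(75.0, 80.0))              # 93.75
print(average_accuracy([(75.0, 80.0), (80.0, 80.0)]))
```

Because the error is taken as an absolute value, overestimates and underestimates of the same size give the same accuracy.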

The average accuracies over the videos in each category are listed in Table 1.

Fig. 8. Smoke

(a) Image frames of a waterfall in motion

(b) Velocity and angle detected

Fig. 9. Waterfall

Table 1. Accuracy of Velocity and Orientation Detection on various Datasets

| Database | % Accuracy of Velocity Detection | % Accuracy of Orientation Detection |
|---|---|---|
| Describable Texture Dataset: Banded | -- | 93.23 |
| Dyntex: Cloud | -- | 86.36 |
| Dyntex: Ocean water | 90.90 | 90.00 |
| Dyntex: Waterfall | 92.50 | 93.33 |
| Dyntex: Traffic database | 93.33 | 86.95 |
| Highway Traffic (Single Lane) | 92.00 | 85.71 |
| Highway Traffic (Double Lane) | 90.47 | 82.60 |
| UCLA: Crowd | 91.30 | 86.11 |

V. Conclusion

Experiments were performed on a number of databases. The Farneback implementation of optical flow is used to obtain the velocity of Dynamic Textures. It is observed that the optical flow algorithm works equally well on natural and manmade Dynamic Textures. The Gabor technique, however, gives about 10% more accurate results on natural Dynamic Textures than on traffic and crowd videos, and single-lane traffic gives about 5% more accurate results than double-lane traffic. This is because natural videos have a well-defined, well-connected, homogeneous Texture that helps the Gabor technique detect the orientation accurately. Manmade datasets contain multiple disconnected objects, so obtaining an angle is more difficult.

VI. Future Scope

Texture descriptors can be used in place of, or in addition to, background subtraction. This may help in obtaining the orientation of the motion of the Texture, which is one step beyond obtaining the orientation of the Texture itself.

References

- R. Polana, R. Nelson, "Temporal Texture and activity recognition", Motion-Based Recognition, Norwell, MA: Kluwer, 1997, pp. 87-115.

- S. Soatto, G. Doretto, Y. Wu, "Dynamic Textures", IEEE Xplore, 2001, pp. 439-446.

- G. Doretto, A. Chiuso, Y. N. Wu, and S. Soatto, "Dynamic Textures", Int. J. Computer Vision, vol. 51, no. 2, pp. 91-109, 2003.

- G. Doretto, D. Cremers, P. Favaro, and S. Soatto, "Dynamic Texture segmentation", Proc. IEEE International Conference Computer Vision, Oct. 2003, pp. 1236-1242.

- David J. Fleet and Yair Weiss, "Optical Flow Estimation", Handbook of Mathematical Models in Computer Vision, Springer, 2006. ISBN 0-387-26371-3.

- John L. Barron, David J. Fleet, and Steven Beauchemin, "Performance of optical flow techniques", International Journal of Computer Vision (Springer), vol. 12, issue 1, 1994, pp. 43-77.

- B. Horn and B. Schunck, "Determining optical flow", Artificial Intelligence, vol. 17, pp. 185-204, 1981.

- Hongyan Quan, "A New Method of Dynamic Texture Segmentation based on Optical Flow and Level set Combination", ICISE 2009, pp. 1063-1066.

- Jie Chen, Guoying Zhao and Matti Pietikäinen, "An Improved Local Descriptor and Threshold Learning for Unsupervised Dynamic Texture Segmentation", IEEE International Conference on Computer Vision Workshops, 2009, pp. 460-467.

- Jie Chen, Guoying Zhao, Mikko Salo, Esa Rahtu, and Matti Pietikäinen, "Automatic Dynamic Texture Segmentation using Local Descriptors and Optical Flow", IEEE Transaction On Image Processing, Vol. 22(1), pp. 326-339, 2013.

- D. Chetverikov and R. Peteri, "A brief survey of Dynamic Texture description and recognition", Proc. 4th International Conference Computer Recognition Systems, 2005, pp. 17-26.

- R. Vidal and A. Ravichandran, "Optical flow estimation and segmentation of multiple moving Dynamic Textures", Proc. IEEE International Conference Computer Vision Pattern Recognition, Jun. 2005, pp. 516-521.

- A. Rahman and M. Murshed, "Detection of multiple Dynamic Textures using feature space mapping", IEEE Transaction Circuits Systems Video Technology, vol. 19, no. 5, pp. 766-771, May 2009.

- T. Amiaz, S. Fazekas, D. Chetverikov, and N. Kiryati, "Detecting regions of Dynamic Texture", Proceedings of Conference on Scale Space and Variational Methods in Computer Vision, 2007, pp. 848-859.

- T. Ojala and M. Pietikäinen, "Unsupervised Texture segmentation using feature distributions", Pattern Recognition, Vol. 32, no. 3, pp. 477-486, 1999.

- A. B. Chan and N. Vasconcelos, "Modeling, clustering, and segmenting video with mixtures of Dynamic Textures", IEEE Transaction on Pattern Analysis and Machine Intelligence, Vol. 30, no. 5, pp. 909-926, May 2008.

- A. B. Chan and N. Vasconcelos, "Variational layered Dynamic textures", Proc. IEEE International Conference on Computer Vision and Pattern Recognition, Jun. 2009, pp. 1062-1069.

- D. Chetverikov, S. Fazekas, and M. Haindl, "Dynamic Texture as foreground and background", Mach. Vis. Appl., Vol. 22, no. 5, pp. 741-750, 2011.

- A. Bruhn, J. Weickert, "Lucas/Kanade Meets Horn/Schunck: Combining Local and Global Optic Flow Methods", Springer International Journal of Computer Vision 61 (3), 2005, pp. 211-231.

- Gunnar Farneback, "Two-frame motion estimation based on Polynomial Expansion", Proceedings of the 13th Scandinavian Conference on Image Analysis, Halmstad, Sweden, June 29 - July 02, 2003, pp. 363-370.

- S. Agarwal, P. K. Verma and M. A. Khan, "An optimized palm print recognition approach using Gabor filter", 2017 8th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Delhi, India, 2017, pp. 1-4. doi:10.1109/ICCCNT.2017.8203919

- Vibha S. Vyas and Priti Rege, "Automated Texture Analysis with Gabor Filter", GVIP Journal, Vol. 6, Issue 1, Jul 2006, pp. 35-41.

- Kazuyuki Takahashi, Yasutaka Kuriya and Takashi Morie, "Bicycle Detection Using Pedaling Movement by Spatiotemporal Gabor Filtering", IEEE TENCON 2010, pp. 918-922.

- J. Hur and S. Roth, "MirrorFlow: Exploiting Symmetries in Joint Optical Flow and Occlusion Estimation", 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2018, pp. 312-321. doi:10.1109/ICCV.2017.42

- J. Wulff, L. Sevilla-Lara and M. J. Black, "Optical Flow in Mostly Rigid Scenes", 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, Hawaii, USA, 2017, pp. 6911-6920. doi:10.1109/CVPR.2017.731

- Renaud Péteri, Sándor Fazekas and Mark J. Huiskes, "DynTex: A Comprehensive Database of Dynamic Textures", Pattern Recognition Letters, Vol. 31, Issue 12, 1 September 2010, pp. 1627-1632.

- Joel Gibson, Oge Marques, "Optical Flow and Trajectory Estimation Methods", Springer Briefs in Computer Science, 2016, Springer International Publishing, eBook ISBN: 978-3-319-44941-8.