Video Analytics Algorithm for Automatic Vehicle Classification (Intelligent Transport System)

Authors: Arta Iftikhar, Ali Javed

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Published in: Issue 4, Vol. 5, 2013

Free access

Automated vehicle detection and classification is an important component of intelligent transport systems. Because of its importance in areas such as traffic accident avoidance, toll collection, congestion avoidance, monitoring of terrorist activities, and security and surveillance, the intelligent transport system has become an important field of study. Various technologies have been used to detect and classify vehicles automatically. Automated vehicle detection is broadly divided into two types: hardware-based and software-based detection. Various algorithms have been implemented to classify different vehicles from video. This paper proposes an efficient and economical solution for automatic vehicle detection and classification. The proposed system first isolates the object through background subtraction and then detects vehicles using ontology. Vehicle detection is based on low-level features such as shape, size, and spatial location. Finally, the system classifies each vehicle into one of the known vehicle classes based on size.

ITS, Masking, Ontology, ROI, Vehicle Detection, Vehicle classification

Short URL: https://sciup.org/15012698

IDR: 15012698

Full text of the article: Video Analytics Algorithm for Automatic Vehicle Classification (Intelligent Transport System)

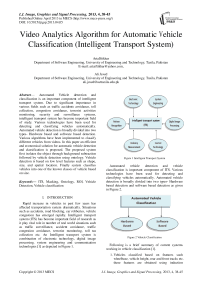

The rapid increase in the number of vehicles over the past few years has affected transportation systems dramatically. Problems such as accidents, road blockages, car robberies, and congestion have grown rapidly. The intelligent transport system (ITS) has become an important field of research because it plays a vital role in a number of real-world situations such as traffic surveillance, accident avoidance, congestion avoidance, terrorist monitoring, and toll collection. An intelligent transport system combines electronic technology, digital image processing, systems engineering, and communication technologies [1], as depicted in Figure 1.

Figure 1 Intelligent Transport System

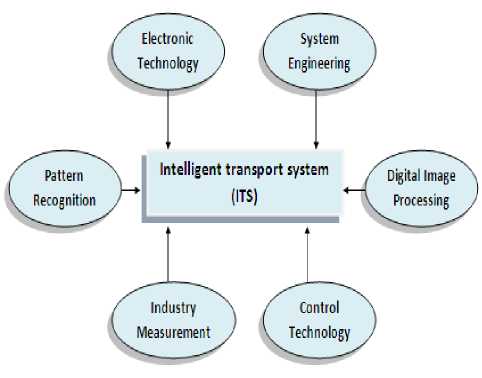

Automated vehicle detection and classification is an important component of ITS. Various technologies have been used to detect and classify vehicles automatically. Automated vehicle detection is broadly divided into two types, hardware-based detection and software-based detection, as shown in Figure 2.

Figure 2 Vehicle Classification

The following is a brief summary of current vehicle classification systems [1].

1. Vehicles are classified based on features such as wheelbase, vehicle height, and rear and front tracks. These features are obtained using induction magnetic coils, infrared transmitters, piezoelectric sensors, etc.

2. An induction coil is used to obtain the induction curves of vehicles. From this information the vehicle's height, length, edges, etc. can be calculated and used in fuzzy classifiers to classify vehicles. This method has high precision and accuracy, but it is costly, and its installation and periodic maintenance damage the road. The device also has a short lifetime and gives limited traffic information. Although it has its uses, it is rarely deployed.

3. Video of vehicles is recorded with video cameras, or photos are taken using infrared, ultrasound, laser, and so on. Several features are then obtained through digital image processing to help classify vehicles. Capturing images with ultrasound or laser technology is very costly, whereas images obtained from a CCD camera are economical and the most widely used. This is an active research area: considerable work is still needed to improve the accuracy and efficiency of vehicle detection and classification using digital image processing techniques.

4. Automated vehicle detection and classification using CCD or video cameras is a passive classification technology. It takes a frame from the captured video and analyzes different characteristics of the vehicles using digital image processing techniques in order to detect and classify them. The algorithm proposed in this paper obtains a frame from the recorded video and uses image processing techniques to extract low-level features, including size, to classify vehicles.

Video-based vehicle detection and classification has the following advantages over hardware-based detection and classification:

1. It is economical, as it needs only a CCD camera rather than costly piezoelectric sensors, etc.

2. Installation is very easy, and the maintenance cost is very low.

3. Installation and maintenance cause no damage to the road and have no effect on traffic.

4. It has no harmful effects on the environment.

5. Hardware-based detectors have low visibility and provide less information than video-based detection.

6. It can be combined with existing surveillance equipment to enhance the advantages of existing surveillance technology.

7. There is no interference between detectors of the same type.

8. Live video produced by the camera can be recorded and replayed to search for a specific vehicle; this information can help in various fields such as motorway surveillance.

Video-based vehicle detection and classification also has some disadvantages, such as low capacity and slow processing, but its advantages outweigh those of hardware-based detection. Because of its easy installation and low maintenance cost, it is more economical than hardware-based detection. Very low-cost CCD cameras can be used, and they provide more information than hardware-based detectors, so video-based detection is the more commonly used technology in ITS. Section II presents a brief survey of current technologies, including video-based vehicle detection and classification algorithms. Our proposed algorithm is presented in Section III. Section IV presents experimental results, and Section V discusses future improvements to the proposed system.

II. SURVEY OF CURRENT VEHICLE DETECTION AND CLASSIFICATION SYSTEMS

Wang, Shang, Guo and Qian [1] defined a vehicle classification method using eigenfaces. Their system performs classification in two steps: training and then classification. In the first step, they use a time-average technique to estimate and subtract the background, followed by extraction of the object's edges or outline using background differencing. The system uses the outline information to obtain the vehicle's borders, from which the vehicle's height is calculated. The images are then normalized to build a library of vehicle frontal views. In the classification step, the input vehicle face is compared with the library of eigenvectors using a minimum-distance technique.
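The minimum-distance comparison can be illustrated with a short nearest-neighbour sketch. The feature vectors, class labels, and the function name `classify_min_distance` are hypothetical; the eigenface projection used in [1] is not reproduced here, only the final comparison step.

```python
import math

def classify_min_distance(sample, library):
    """Return the label of the library vector closest to `sample` (Euclidean distance)."""
    best_label, best_dist = None, math.inf
    for label, vector in library.items():
        dist = math.dist(sample, vector)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label

# Hypothetical projected feature vectors (illustrative values only)
library = {"class_a": [0.9, 0.1, 0.3], "class_b": [0.2, 0.8, 0.5]}
result = classify_min_distance([0.25, 0.7, 0.4], library)
```

Here `result` is the label of whichever stored vector lies nearest to the input, which is the essence of a minimum-distance classifier.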

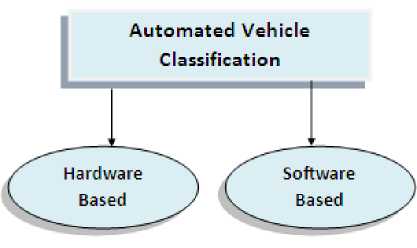

Hsieh, Yu and Chen [2] described most vehicle surveillance systems as consisting of three steps: vehicle detection, vehicle tracking, and finally vehicle classification, as depicted in Figure 3. They proposed a system that uses an optimal classifier to classify vehicles into different types. Their system extracts different features from the given image and feeds them to the optimal classifier to obtain the classification results. They also worked on lane division lines and proposed an algorithm to eliminate shadows.

Figure 3 Vehicle Surveillance Systems [2]

Goyal and Brijesh [3] proposed a technique that classifies a vehicle into one of four known categories using a neural network. The system extracts different features of the vehicle from the given image, normalizes them, and inputs them to the neural network to obtain the classification results. The system is based on structural features. They used a multilayer perceptron (MLP) based classifier with additional neurons and compared the experimental results with a back-propagation (BP) based classifier and a direct solution technique. The comparison showed that the MLP-based classifier has a higher accuracy rate than BP and the direct solution.

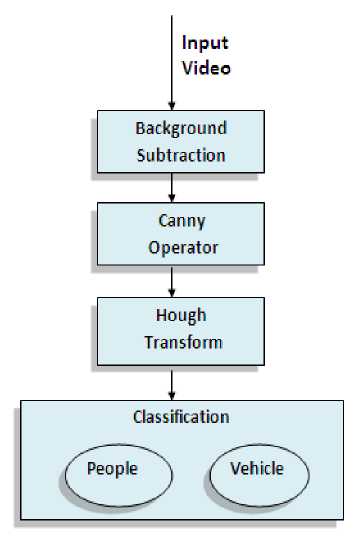

Xu, Liu, Qian and Zhang [4] proposed a simple algorithm that classifies objects in video as people or vehicles based on a Hough feature. The algorithm separates the objects from the static background using background subtraction and then uses the Canny edge detector to extract the edges of the objects. It uses the Hough transform to extract straight lines from the given object and finally classifies the object into one of two categories, people or vehicle. The system architecture is shown in Figure 4. The system is reported to be fast and simple and to achieve a high accuracy rate.

Ma and Grimson [5] argued that object recognition in video is easier than in static images because background subtraction can be achieved easily using background modeling. They extract the object and then normalize it to cope with scale variations. They showed that object classification accuracy increases if features are repeatable, and that discriminability is very important in the selected features. They used various features for vehicle detection, including edge points and SIFT. To achieve discriminability they associated edge points with SIFT descriptors, and to achieve repeatability they used a modified SIFT. Their system works well with poor-quality and small images.

Figure 4 Hough Based Classification [4]

Table 1 summarizes current systems for vehicle detection and classification. Each system uses different low-level features to detect and classify vehicles. Different views of the vehicle are used; most systems use the top view. The classification rate varies with the number of features used.

Table 1 Summary of vehicle classification systems

| Proposed Method | Features Used | Classification Types | Vehicle View | Vehicle Samples | Percentage Accuracy |
| --- | --- | --- | --- | --- | --- |
| Eigenface based Classification | Borders, length, height, eigenvectors | Car types, e.g. Lamborghini, BMW | Frontal face view | 100 vehicle face images | 100% |
| Vehicle Tracking and Classification | Histogram, size, linearity | Car, minivan, van truck, truck | Frontal face and top view | 3 videos of 2 0443 vehicles | 69% without shadow elimination; 82% with shadow elimination |
| Neural network based classifier | Structural features | Bus, Van, Saab 9000 | View from different angles | 400 images | 62% on test set |
| Hough based classifier | Edges, shape, location | 2 types, vehicle and people | Frontal face view | 3 videos | 94% average from 3 videos |
| Edge based classifier | Modified SIFT | Cars vs minivans and sedans vs taxis | Top view | 50 training samples | 98.5% for cars vs minivans and 95% for sedans vs taxis |

III. PROPOSED ALGORITHM ARCHITECTURE

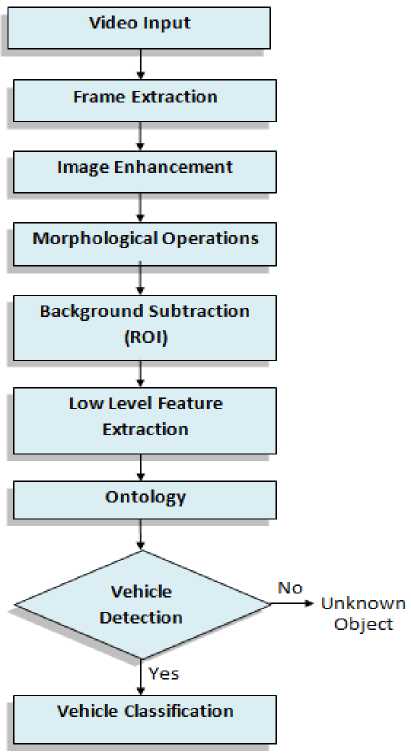

This research presents an efficient algorithm that performs two artificial intelligence tasks: automatic vehicle detection and vehicle classification. It detects the presence of a vehicle in the video and classifies it into one of the known classes. The algorithm detects vehicle tyres from the side view through ontology. The system extracts a frame from the given video, removes noise by smoothing, and converts the RGB image to grayscale so that low-level features can be extracted easily. Pre-processing steps including erosion and dilation (morphological operations) are then performed on the grayscale image. The region of interest, or object, is separated from the static background using background subtraction, and low-level features of the object are extracted using the Canny edge detector and the Hough transform [6]. The system uses shape, spatial location, and size as low-level features and applies ontology (logical reasoning) to detect tyres. A vehicle is detected based on the presence of tyres, and once detected it is classified into one of the known categories. The architecture of the proposed algorithm is presented in Figure 5.

Figure 5 Algorithm Architecture

A. Pre-processing

Noise is removed from the extracted frame by smoothing. The extracted image is then converted from the RGB color model to grayscale to simplify detection.

a. Smoothing

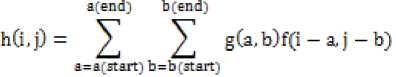

Smoothing is often used to reduce noise within an image or to produce a less pixelated image. Different filters can be used for the smoothing operation. Smoothing filters, or low-pass filters, let low-frequency components pass and block high-frequency components. The proposed system uses a weighted average filter, which removes noise while limiting blurring, as shown in Figure 6. Smoothing reduces noise by replacing every pixel with a weighted average of its neighbouring pixels. The convolution used for smoothing is referred to as Eq. (1), where g is the convolution mask, f is the image, and a(start), a(end), b(start), b(end) define the size of the kernel.
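A standard discrete convolution consistent with these symbol definitions can be written as follows. This is a sketch rather than the authors' exact expression: the output symbol $h$ and the summation indices $a$, $b$ are assumptions, since only $g$, $f$, and the kernel limits are named in the text.

```latex
h(x, y) = \sum_{a = a_{\mathrm{start}}}^{a_{\mathrm{end}}} \; \sum_{b = b_{\mathrm{start}}}^{b_{\mathrm{end}}} g(a, b)\, f(x - a,\; y - b) \tag{1}
```

For a weighted average, the mask weights are normalized so that $\sum_{a}\sum_{b} g(a, b) = 1$, which keeps the overall image brightness unchanged.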


Figure 6 (a) Input Image (b) Enhanced Image
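The weighted-average smoothing step can be sketched in plain Python as below. The 3x3 kernel weights, the border handling (clamping to the image edge), and the function name `smooth` are assumptions; the paper does not state which weights were used.

```python
def smooth(image, kernel):
    """Weighted-average smoothing of a 2-D grayscale image (list of rows).

    The kernel is square and odd-sized. Border pixels are handled by
    clamping coordinates to the image edge. The kernel is symmetric here,
    so this correlation is identical to a convolution.
    """
    rows, cols = len(image), len(image[0])
    k = len(kernel) // 2                       # kernel radius
    total = sum(sum(row) for row in kernel)    # normalising constant
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            acc = 0.0
            for dy in range(-k, k + 1):
                for dx in range(-k, k + 1):
                    yy = min(max(y + dy, 0), rows - 1)
                    xx = min(max(x + dx, 0), cols - 1)
                    acc += kernel[dy + k][dx + k] * image[yy][xx]
            out[y][x] = acc / total
    return out

# Assumed weights: centre pixel counts most, diagonal neighbours least
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]

noisy = [[10, 10, 10],
         [10, 90, 10],
         [10, 10, 10]]
smoothed = smooth(noisy, KERNEL)
```

The isolated bright pixel in `noisy` is pulled toward its neighbours, which is the noise-suppressing effect described above.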


b. Color Conversion

The grayscale color model contains only intensity information. In the proposed system the object is converted from RGB to grayscale so that low-level features can be extracted easily.
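A minimal sketch of the conversion is shown below. The luma weights 0.299, 0.587, and 0.114 are the widely used ITU-R BT.601 coefficients and are an assumption here; the paper does not say which weighting was applied.

```python
def rgb_to_gray(image):
    """Convert an RGB image (rows of (r, g, b) tuples) to grayscale intensities."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]

# Tiny illustrative image: white, black, pure red, pure blue
rgb = [[(255, 255, 255), (0, 0, 0)],
       [(255, 0, 0), (0, 0, 255)]]
gray = rgb_to_gray(rgb)
```

Each pixel is reduced to a single intensity value, so later steps such as edge detection operate on one channel instead of three.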


B. Morphological operations:

The morphological operator erosion acts like a local minimum operator. Grayscale erosion computes the minimum of each pixel's neighbourhood, whereas dilation computes the maximum. The neighbourhood is defined by the structuring element, which determines the shape of the pixel neighbourhood over which the minimum or maximum is taken. Erosion makes objects look smaller, can break a single object into multiple objects, and thins edges, whereas dilation makes objects larger and can merge different objects into a single object. Dilation also makes edges thicker and more prominent. Figure 7 shows the result of erosion and dilation.

Figure 7 (a) Erosion (b) Dilation
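Grayscale erosion and dilation as local minimum and maximum operators can be sketched as follows. The square structuring element and its radius are assumptions, as the paper does not specify the element used.

```python
def _window(image, y, x, radius):
    """Pixel values in the square structuring element centred on (y, x), clipped at the borders."""
    rows, cols = len(image), len(image[0])
    return [image[yy][xx]
            for yy in range(max(0, y - radius), min(rows, y + radius + 1))
            for xx in range(max(0, x - radius), min(cols, x + radius + 1))]

def erode(image, radius=1):
    """Replace each pixel by the minimum of its neighbourhood."""
    return [[min(_window(image, y, x, radius)) for x in range(len(image[0]))]
            for y in range(len(image))]

def dilate(image, radius=1):
    """Replace each pixel by the maximum of its neighbourhood."""
    return [[max(_window(image, y, x, radius)) for x in range(len(image[0]))]
            for y in range(len(image))]

# A 3x3 bright blob on a dark 5x5 background
blob = [[0,   0,   0,   0, 0],
        [0, 255, 255, 255, 0],
        [0, 255, 255, 255, 0],
        [0, 255, 255, 255, 0],
        [0,   0,   0,   0, 0]]
eroded = erode(blob)
dilated = dilate(blob)
```

Erosion shrinks the blob to its single centre pixel, while dilation grows it until it fills the whole 5x5 image.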


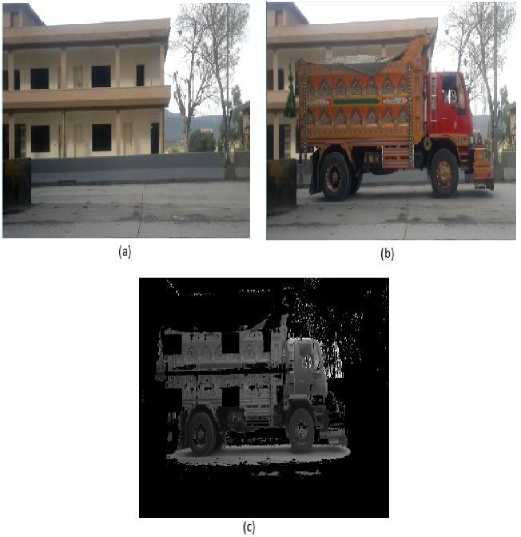

C. Background Subtraction:

The region of interest, or object, is isolated from the static background using background subtraction [7], one of the most useful techniques for extracting a desired object from an image. An object can be segmented using the Gaussian mixture model of background subtraction [8] [9] if the region of interest is moving while the background is static. The proposed system extracts the region of interest by background subtraction [10], which gives the region where the vehicle exists in the scene. A mask is created for this region and convolved over the input image to obtain the vehicle and remove the background. Figure 8 shows the result of object extraction.

Figure 8 (a) Background Image (b) Input Image (c) Extracted Object
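The idea can be sketched with simple frame differencing against a stored background image. This is a deliberately simplified stand-in, not the Gaussian mixture model cited above, and the threshold value and function name are assumptions.

```python
def subtract_background(frame, background, threshold=30):
    """Return (mask, foreground) for two grayscale images of equal size.

    mask is 1 where the frame differs from the background by more than
    `threshold`; foreground keeps the frame's pixels only inside the mask.
    """
    mask = [[1 if abs(f - b) > threshold else 0 for f, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]
    foreground = [[f * m for f, m in zip(frow, mrow)]
                  for frow, mrow in zip(frame, mask)]
    return mask, foreground

# Illustrative 3x4 images: the frame contains a bright two-pixel object
background = [[50, 50, 50, 50],
              [50, 52, 49, 50],
              [50, 50, 50, 50]]
frame = [[50, 51, 50, 50],
         [50, 200, 180, 50],
         [50, 50, 50, 50]]
mask, foreground = subtract_background(frame, background)
```

Small intensity fluctuations stay below the threshold and are treated as background, while the object pixels survive in `foreground`.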


D. Masking:

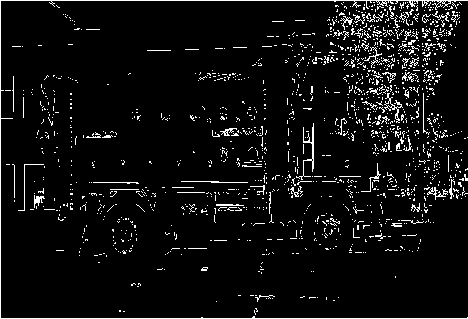

The proposed methodology needs to extract low-level features of an object to detect tyres. Because tyres always appear in the lower half of the image, the algorithm uses a partial sampling technique [11]: it creates a mask that keeps the lower region of the image and removes the upper half. This improves the performance of the algorithm. The result of masking is shown in Figure 9.

Figure 9 (a) Input Frame (b) Mask (c) Convolved Image
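A minimal sketch of the lower-half mask is given below. It applies the mask by element-wise multiplication, which is an assumption about how the mask is combined with the frame; the helper names are hypothetical.

```python
def lower_half_mask(rows, cols):
    """Binary mask that is 1 in the lower half of the image and 0 in the upper half."""
    half = rows // 2
    return [[1 if y >= half else 0 for _ in range(cols)] for y in range(rows)]

def apply_mask(image, mask):
    """Keep pixels where the mask is 1 and zero out the rest."""
    return [[p * m for p, m in zip(irow, mrow)] for irow, mrow in zip(image, mask)]

frame = [[9, 9, 9],
         [8, 8, 8],
         [7, 7, 7],
         [6, 6, 6]]
masked = apply_mask(frame, lower_half_mask(4, 3))
```

Discarding the upper half means the later circle search examines only half as many pixels, which is where the performance gain comes from.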


E. Features Extraction:

Low-level features including size [8], shape, and location are extracted. The Canny edge detector [12] is used to extract edges from the object. The resulting edge image is then searched for circles using the Hough transform [6], applied to the lower half of the image. Pixel coordinate values are processed to find the spatial location of the object. Results of feature extraction are shown in Figure 10.


Figure 10 (a) Edge Image (b) Circle detection
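The circle search can be illustrated with a brute-force Hough voting sketch. It assumes an edge image is already available (here a synthetic ring stands in for Canny output), and the radius range, image size, and function names are all hypothetical. Each edge pixel votes for every circle (a, b, r) it could lie on, and the circle with the most votes is reported.

```python
import math
from collections import Counter

def hough_circles(edge_points, width, height, r_min, r_max):
    """Accumulate votes in (a, b, r) space, where (a, b) is a candidate centre."""
    votes = Counter()
    for (x, y) in edge_points:
        for b in range(height):
            for a in range(width):
                r = round(math.hypot(x - a, y - b))
                if r_min <= r <= r_max:
                    votes[(a, b, r)] += 1
    return votes

def synthetic_circle(cx, cy, radius, width, height):
    """Edge pixels of a one-pixel-wide ring, standing in for an edge-detector output."""
    return [(x, y) for y in range(height) for x in range(width)
            if round(math.hypot(x - cx, y - cy)) == radius]

edges = synthetic_circle(20, 15, 8, 40, 30)
votes = hough_circles(edges, 40, 30, r_min=6, r_max=10)
best_circle, best_votes = votes.most_common(1)[0]
```

Restricting the radius range keeps the accumulator small, which mirrors the text's use of a defined radius range for tyres. A production system would typically use an optimized library routine instead of this exhaustive loop.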


F. Ontology:

Ontology means using low-level features to describe a real-life object. For example, to define sky we say: if the color is blue, the texture is smooth, and the spatial location is the top, then it is sky. The proposed methodology uses ontology to define a vehicle as any object that shows two tyres from the side view. Tyres are defined as two circles in the image whose radii lie within a defined range, whose center points have the same y-coordinate, and which lie in the lower half of the image. A vehicle is detected in the current frame or image on the basis of the presence of tyres.
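The tyre rule can be expressed as a small predicate over detected circles. In this sketch the radius range, the pixel tolerance on "same y-coordinate" (`y_tolerance`), and the function name are assumptions; the paper gives the rule only in words.

```python
def find_tyre_pair(circles, image_height, r_min, r_max, y_tolerance=3):
    """Return the first pair of circles that satisfies the tyre rule, or None.

    Each circle is (x, y, r) with y measured downward from the top of the image.
    A tyre candidate must have a radius in [r_min, r_max] and lie in the lower
    half of the image; a pair must share (approximately) the same y-coordinate.
    """
    candidates = sorted(c for c in circles
                        if r_min <= c[2] <= r_max and c[1] > image_height / 2)
    for i, left in enumerate(candidates):
        for right in candidates[i + 1:]:
            if abs(left[1] - right[1]) <= y_tolerance:
                return left, right
    return None

# Hypothetical Hough output for a 240-pixel-high frame
circles = [(40, 30, 12),     # upper half: rejected
           (60, 170, 20),    # tyre candidate
           (200, 172, 21),   # tyre candidate
           (120, 150, 60)]   # radius out of range: rejected
pair = find_tyre_pair(circles, image_height=240, r_min=15, r_max=30)
```

A non-`None` result corresponds to "vehicle detected" in the sense of the definition above.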


G. Vehicle Detection:

If two or more tyres are detected in the given object, the object is classified as a vehicle; otherwise the system classifies it as an unknown object.

H. Vehicle Classification

If a vehicle is detected in the current frame, the system uses the size feature to classify it into a known type. Size is calculated by measuring the distance between the centers of the two tyres of the vehicle.
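Size-based classification can be sketched as a lookup on the distance between tyre centers. The pixel thresholds below are purely hypothetical, since the paper does not publish its cut-off values, and the class labels are borrowed from the paper's vehicle class table. Fixed thresholds in pixels only make sense when the camera distance is constant.

```python
import math

# Hypothetical upper bounds on tyre-centre distance (pixels) for each class
SIZE_CLASSES = [(150, "A"), (220, "B1"), (300, "B2"), (380, "C1")]

def classify_vehicle(tyre_pair):
    """Classify a vehicle from its two tyre circles, each given as (x, y, r)."""
    if tyre_pair is None:
        return "unknown"            # no tyres found, so no vehicle detected
    (x1, y1, _), (x2, y2, _) = tyre_pair
    size = math.hypot(x2 - x1, y2 - y1)
    for upper, label in SIZE_CLASSES:
        if size <= upper:
            return label
    return "C2"                     # larger than every listed bound

small = classify_vehicle(((60, 170, 20), (200, 172, 21)))
large = classify_vehicle(((10, 170, 20), (260, 170, 20)))
```

In practice the thresholds would be calibrated from measured vehicles at the chosen camera distance.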


IV. EXPERIMENTAL SETUP AND RESULTS

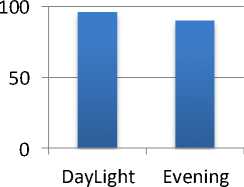

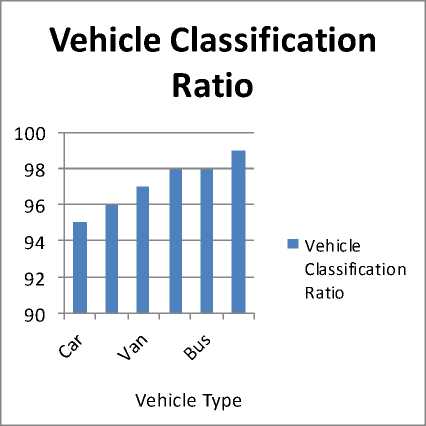

The proposed system uses a Nikon 8 MP camera that captures video at 30 frames per second; this camera was chosen for video acquisition in keeping with the goal of delivering an economical system. The camera was positioned at a fixed distance of 4 meters from the vehicle. We ran our algorithm on several videos containing more than 100 vehicles. The detection and classification rate varies with weather conditions. Figure 11(a) shows the vehicle detection and classification ratio in daylight and in the evening. The daylight results are close to 96%, whereas the evening results lie in the range of 90-92%, which is encouraging given the illumination constraints. The recognition rate of different vehicles, including Car, Bolan, Van, Coaster, Bus, and Truck, is presented in Figure 11(b). The comparison shows that the recognition ratio is highest for Truck and Bus because of their large size, whereas Car and Bolan are small, so their recognition rate lies in the range of 95-96%.

Figure 11 (a) Vehicle Detection and Classification Ratio in Daylight and Evening

Figure 11 (b) Vehicle Detection and Classification Ratio of Different Vehicles

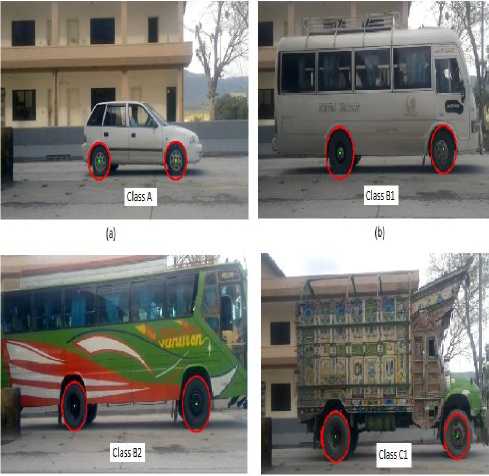

The proposed system classifies vehicles into one of the known classes given in Table 2. Results of vehicle detection and classification are shown in Figure 12.

Table 2 Vehicle Classification Types

| Class | Type | Vehicles |
| --- | --- | --- |
| A | Private Cars | Car, jeep, bolan |
| B1 | Public Transport | Van, coaster |
| B2 | Public Transport | Bus |
| C1 | Heavy Vehicle | Bedford truck, dump truck |
| C2 | Heavy Vehicle | Crane, trailer |

Figure 12 Vehicle Detection and Classification


V. CONCLUSION AND FUTURE WORK

The proposed system provides an economical and efficient solution for vehicle detection and classification using side-view video. It uses low-level features such as size, shape, and location to detect and classify vehicles. The system can be extended to include more vehicle classes. Another possible extension is to consider the frontal and rear views of the vehicle for classification.

References: Video Analytics Algorithm for Automatic Vehicle Classification (Intelligent Transport System)

1. Wei Wang, Yulong Shang, Jinzhi Guo, Zhiwei Qian, "Real-time vehicle classification based on eigenface", Consumer Electronics, Communications and Networks (CECNet), 2011 IEEE International Conference.

2. Jun-Wei Hsieh, "Automatic Traffic Surveillance System for Vehicle Tracking and Classification", Intelligent Transportation Systems, IEEE Transactions, June 2006.

3. Goyal, A., "A Neural Network based Approach for the Vehicle Classification", Computational Intelligence in Image and Signal Processing, 2007. CIISP 2007. IEEE Symposium, 1-5 April 2007.

4. Tao Xu, Hong Liu, Yueliang Qian, Han Zhang, "A novel method for people and vehicle classification based on Hough line feature", Information Science and Technology (ICIST), 2011 International Conference, 26-28 March 2011.

5. Xiaoxu Ma, Grimson, W.E.L., "Edge-based rich representation for vehicle classification", Computer Vision, 2005. ICCV 2005. Tenth IEEE International Conference, 17-21 Oct. 2005.

6. Mazaheri, M., "Real time adaptive background estimation and road segmentation for vehicle classification", Electrical Engineering (ICEE), 2011 19th Iranian Conference, 17-19 May 2011.

7. Jin-Cyuan Lai, Shih-Shinh Huang, Chien-Cheng Tseng, "Image-based vehicle tracking and classification on the highway", Green Circuits and Systems (ICGCS), 2010 International Conference, 21-23 June 2010.

8. Zezhi Chen, Pears, N., Freeman, M., "Road vehicle classification using Support Vector Machines", Intelligent Computing and Intelligent Systems, 2009. ICIS 2009. IEEE International Conference, 20-22 Nov. 2009.

9. Kafai, M., Bhanu, B., "Dynamic Bayesian Networks for Vehicle Classification in Video", Industrial Informatics, IEEE Transactions, Feb. 2012.

10. Zhong Qin, Guangzhou, "Method of vehicle classification based on video", Advanced Intelligent Mechatronics, 2008. IEEE/ASME International Conference, 2-5 July 2008.

11. Peijin Ji, Lianwen Jin, Xutao Li, "Vision-based Vehicle Type Classification Using Partial Gabor Filter Bank", Automation and Logistics, 2007 IEEE International Conference, 18-21 Aug. 2007.

12. Canny edge detection, http://en.wikipedia.org/wiki/Canny_edge_detector