Video Retrieval: An Adaptive Novel Feature Based Approach for Movies

Authors: Viral B. Thakar, Chintan B. Desai, S.K. Hadia

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: Vol. 5, No. 3, 2013.

Open access

Video retrieval is a field in which many techniques and methods have been proposed and claimed to perform reliably on videos such as news broadcasts and sports events. Because a movie contains a large amount of visual information that varies in a random manner, it requires a highly robust algorithm for automatic shot boundary detection as well as retrieval. In this paper, we describe a new adaptive approach for shot boundary detection that detects not only abrupt transitions such as hard cuts but also special effects such as wipes, fades, and dissolves in different movies. To partition a movie into shots and retrieve them, many metrics have been constructed to measure the similarity among video frames based on all the available video features. However, too many features reduce the efficiency of shot boundary detection. Therefore, it is necessary to perform feature reduction for every decision. For this purpose, we follow a minimum-features-based algorithm.

Keywords: Abrupt transitions, Features reduction, Minimum features based algorithm, Video Retrieval

Short URL: https://sciup.org/15012576

IDR: 15012576


I. Introduction

The increasing use of multimedia streams compels the development of efficient and effective methodologies for manipulating and accessing the databases that hold this information. Today, a typical end user of a multimedia system is usually overwhelmed with video collections and faces the problem of organizing them so that they are easily accessible. According to the analysis given in [1], there were more than 1 trillion videos on YouTube during 2011-12, and 201.4 billion videos were viewed online in October 2011. With such gigantic video data resources, sophisticated video database systems are in high demand to enable efficient browsing, searching, and retrieval. However, traditional video indexing methods, which depend entirely on humans, are time-consuming, lack the speed of automation, and are distorted by too much human subjectivity. Therefore, more advanced approaches are needed to support automatic indexing and retrieval based directly on video content, providing efficient search with satisfactory responses to the scenes and objects that the user seeks.

A video is a sequential collection of frames, shots, and scenes. In this paper, we explain some ways to retrieve videos efficiently using minimum-features-based methods so that retrieval is less time-consuming [1-4].

An effective and efficient video retrieval system relies entirely on the effectiveness of the algorithm used for video segmentation and indexing. A video is a hierarchical combination of frames, shots, and scenes. Shot boundary detection is the process of dividing a video into its core component, the shot. A shot is defined as the consecutive frames from the start to the end of a recording in a camera; it shows a continuous action in an image sequence.

A ‘representative frame’ can be chosen from a scene to act as that scene’s representative. Then the comparison between this representative frame and a query frame can be done in different ways. Many methods are available, such as gray-scale or color histograms, pixel comparison, edge detection, and some predefined models.

II. Background

A fade can be identified as a gradual transition between a constant image and a shot, or vice versa. In most cases, a pure black image is used as the constant image. To identify the shot boundary, we have to detect that constant or monotone image.

A dissolve is a special effect that merges two different scenes or shots without producing an eye-catching sequence. Most dissolve-generating algorithms use a linear combination of the two scenes between which the dissolve is to be generated. A dissolve image is therefore generated by the linear combination of the pixel intensities of two different images [4].
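The linear combination described above can be illustrated with a minimal sketch. It assumes 8-bit frames held as NumPy arrays and a blending weight that rises linearly from 0 to 1; the function name `synthesize_dissolve` and the `steps` parameter are hypothetical and do not come from the paper.

```python
import numpy as np

def synthesize_dissolve(frame_a: np.ndarray, frame_b: np.ndarray, steps: int = 5):
    """Blend frame_a into frame_b with a linearly increasing weight (assumed schedule)."""
    a = frame_a.astype(np.float64)
    b = frame_b.astype(np.float64)
    frames = []
    for alpha in np.linspace(0.0, 1.0, steps):
        # Each dissolve frame is a pixel-wise linear combination of the two shots.
        mixed = (1.0 - alpha) * a + alpha * b
        frames.append(np.clip(np.rint(mixed), 0, 255).astype(np.uint8))
    return frames
```

The first and last generated frames equal the two input frames, and the frames in between contain both images overlapping, which is the property a dissolve detector has to recognize.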

In [6], similarity analysis is used, which again performs efficiently for cut detection, but the results for gradual transitions are less impressive.

In [14], the authors use the Scale Invariant Feature Transform (SIFT) as the main feature for retrieval. The retrieval is very effective, but the process is very time-consuming because SIFT must be calculated for every frame and the results compared.

After reviewing the work in [3-5, 7, 10-12], we concluded that the established methods are efficient for cut detection but that their response to gradual transitions is less impressive. In addition, the other methods that show a higher performance index perform poorly on movie videos, which contain a large amount of visual information with random variations. Apart from this, there is also a need for an adaptive approach to the retrieval part.

III. Proposed Algorithm

To examine and understand the suitability of different classes of video indexing algorithms for detecting hard cuts as well as special effects such as wipes, fades, and dissolves, at least one representative of each main approach was implemented and tested on a large variety of sequences. Based on these experimental results, several shot boundary detection approaches were selected to be combined.

a) CUT Detection

As mentioned in the earlier section, a cut is characterized as an abrupt change in the frame sequence. We consider the following individual features of each frame to identify strong cuts and weak cuts.

1) Discrete cosine transform:

The discrete cosine transform (DCT) represents an image as a sum of sinusoids of varying magnitudes and frequencies. In our system, the normalized frame is converted into gray color space and divided equally into non-overlapping 8 x 8 blocks. The DCT operates on X, a block of N x N image samples, and creates Y, an N x N block of coefficients. The action of the DCT can be described in terms of a transform matrix A. The forward DCT is given by $Y = AXA^{T}$, where X is a matrix of samples, Y is a matrix of coefficients, and A is an N x N transform matrix. The elements of A are

$$A_{ij} = C_i \cos\frac{(2j+1)\,i\,\pi}{2N}, \quad \text{where } C_i = \begin{cases}\sqrt{1/N}, & i = 0\\ \sqrt{2/N}, & i > 0\end{cases} \qquad (1)$$

Therefore the DCT can be written as

$$Y_{xy} = C_x C_y \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} X_{ij}\cos\frac{(2j+1)\,y\,\pi}{2N}\cos\frac{(2i+1)\,x\,\pi}{2N} \qquad (2)$$
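As a rough illustration of the transform-matrix form of the block DCT, the following sketch builds A and applies it to each non-overlapping block of a gray frame. The helper names `dct_matrix` and `block_dct` are hypothetical, and dropping incomplete border blocks is an assumption made here for simplicity, not something the paper specifies.

```python
import numpy as np

def dct_matrix(n: int = 8) -> np.ndarray:
    """Build the n x n matrix A with A[i, j] = C_i * cos((2j + 1) * i * pi / (2n))."""
    i = np.arange(n).reshape(-1, 1)   # row index (frequency)
    j = np.arange(n).reshape(1, -1)   # column index (sample position)
    a = np.cos((2 * j + 1) * i * np.pi / (2 * n))
    a[0, :] *= np.sqrt(1.0 / n)       # C_0 = sqrt(1/N)
    a[1:, :] *= np.sqrt(2.0 / n)      # C_i = sqrt(2/N) for i > 0
    return a

def block_dct(gray: np.ndarray, n: int = 8) -> np.ndarray:
    """Apply the forward DCT (A times block times A transposed) to every n x n block."""
    a = dct_matrix(n)
    h, w = gray.shape
    h, w = h - h % n, w - w % n       # assumption: ignore incomplete border blocks
    out = np.empty((h, w), dtype=np.float64)
    for r in range(0, h, n):
        for c in range(0, w, n):
            block = gray[r:r + n, c:c + n].astype(np.float64)
            out[r:r + n, c:c + n] = a @ block @ a.T
    return out
```

Because A is orthonormal, a uniform block produces a single non-zero (DC) coefficient, which is a convenient sanity check for an implementation.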

2) HSV histogram:

A histogram is a function that counts the number of observations that fall into each of the disjoint categories known as bins. Thus, if we let n be the total number of observations and k be the total number of bins, the histogram $m_i$ meets the following condition:

$$n = \sum_{i=1}^{k} m_i \qquad (3)$$

We have also calculated the cumulative HSV histogram. A cumulative histogram is a mapping that counts the cumulative number of observations in all of the bins up to the specified bin. That is, the cumulative histogram $M_i$ of a histogram $m_j$ is defined as:

$$M_i = \sum_{j=1}^{i} m_j \qquad (4)$$
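A minimal sketch of equations (3) and (4) for a single channel follows. It assumes channel values scaled to the range 0 to 1 and a hypothetical bin count `k`; the paper does not state how many bins it uses or how the three HSV channels are combined.

```python
import numpy as np

def histogram_and_cumulative(values: np.ndarray, k: int = 16, value_range=(0.0, 1.0)):
    """Return the k-bin histogram m and its cumulative histogram M for one channel."""
    m, _ = np.histogram(values.ravel(), bins=k, range=value_range)
    cumulative = np.cumsum(m)   # M_i is the sum of m_j over all bins up to bin i
    return m, cumulative
```

The last entry of the cumulative histogram always equals the total number of observations n, which matches equation (3).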

3) Edge change ratio:

The edge change ratio (ECR) is defined as follows. Let $\sigma_n$ be the number of edge pixels in frame n, and let $X_n^{in}$ and $X_{n-1}^{out}$ be the numbers of entering and exiting edge pixels in frames n and n-1, respectively. The following equation then gives the edge change ratio, a value between 0 and 1.

$$ECR_n = \max\left(\frac{X_n^{in}}{\sigma_n}, \frac{X_{n-1}^{out}}{\sigma_{n-1}}\right) \qquad (5)$$
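The sketch below shows one way equation (5) could be evaluated. It assumes that binary edge maps have already been computed by some edge detector, and it assumes the common convention that an edge pixel counts as entering (or exiting) when it lies farther than `r` pixels from every edge pixel of the other frame. The radius `r`, the handling of frames without edges, and the function names are illustrative assumptions rather than details given in the paper.

```python
import numpy as np

def dilate(mask: np.ndarray, r: int = 1) -> np.ndarray:
    """Binary dilation with a (2r + 1) x (2r + 1) square, built from padded shifts."""
    h, w = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out

def edge_change_ratio(edges_prev, edges_cur, r: int = 1) -> float:
    """ECR between frame n-1 (edges_prev) and frame n (edges_cur)."""
    edges_prev = np.asarray(edges_prev, dtype=bool)
    edges_cur = np.asarray(edges_cur, dtype=bool)
    sigma_prev = int(edges_prev.sum())
    sigma_cur = int(edges_cur.sum())
    if sigma_prev == 0 or sigma_cur == 0:
        # Assumed convention: no change if both frames lack edges, full change otherwise.
        return 0.0 if sigma_prev == sigma_cur else 1.0
    entering = int((edges_cur & ~dilate(edges_prev, r)).sum())   # new edges in frame n
    exiting = int((edges_prev & ~dilate(edges_cur, r)).sum())    # edges lost from frame n-1
    return max(entering / sigma_cur, exiting / sigma_prev)
```

The dilation makes the measure tolerant of small object or camera motion, so only edges that genuinely appear or disappear contribute to the ratio.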

Apart from these main features, we also consider the gray-level histogram and gray image correlation as two supporting features for our algorithm. These individual features are not very effective on their own, so we have combined them to define our novel difference index, which has outperformed the other methods we tested.

The difference index between two consecutive frames is calculated as

$$\text{DiffIndex} = \frac{\text{DCTcorr}}{\text{HSVhistocorr} + \text{Graycorr} + \text{Grayhistocorr} + \text{ECR}} \qquad (6)$$

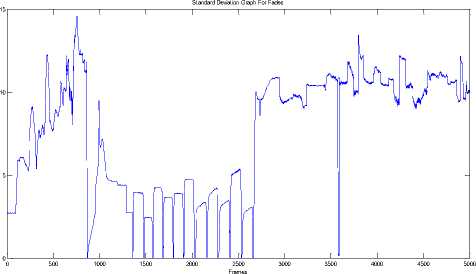

b) Fade Detection

To detect the fade in/out effect, we use entropy as a feature. As explained in the earlier section, a fade starts from or ends in a monotone image. Entropy is a statistical measure of the randomness of an image. It is defined as

$$E = -\sum p \log_2 p \qquad (7)$$

where p represents the normalized histogram counts.
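As a small illustration of the entropy feature, the sketch below computes the entropy of an 8-bit gray frame from its normalized histogram. The 256-bin choice and the function name `frame_entropy` are assumptions; empty bins are skipped so that the logarithm is always defined.

```python
import numpy as np

def frame_entropy(gray: np.ndarray, bins: int = 256) -> float:
    """Entropy of a gray frame, computed from its normalized histogram."""
    counts, _ = np.histogram(gray.ravel(), bins=bins, range=(0, 256))
    p = counts / counts.sum()   # normalize counts to probabilities
    p = p[p > 0]                # skip empty bins (0 * log 0 is taken as 0)
    return float(-(p * np.log2(p)).sum())
```

A monotone frame, such as the pure black image at the end of a fade-out, has an entropy of zero, so a run of frames whose entropy falls towards zero is a natural fade candidate.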


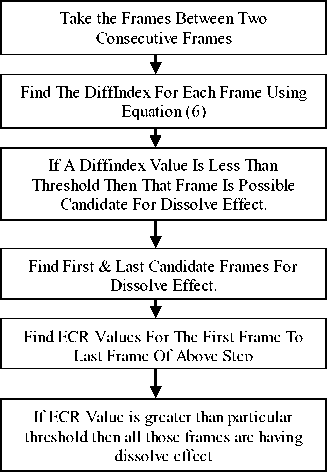

c) Dissolve Detection

Apart from this, another main property of a dissolve is that, during the transition, the quality of the frames gradually decreases compared to the last frame until the next shot has completely replaced the previous one. The frames affected by the dissolve effect therefore contain two different images overlapping each other. To identify this overlapped region, we have deployed the following algorithm.

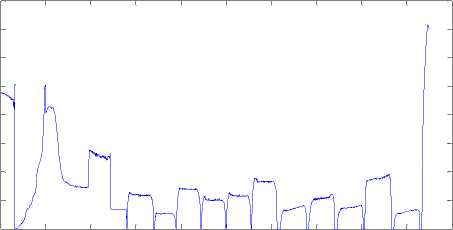

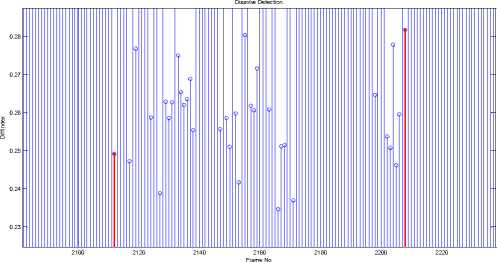

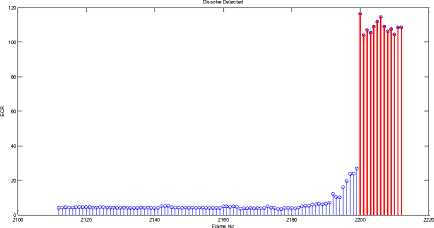

Figure 17 shows example frames that contain a dissolve effect. Figure 18 shows the correlation graph for all the frames between two consecutive cuts for this example; it also shows the start and end points of the dissolve section. Figure 19 shows the ECR graph for those frames, which clearly shows the dissolve effect. The main advantage of this method is that it can also find a number of consecutive dissolves very effectively. Figure 20 shows the result for one special case of three consecutive dissolves in Rajneeti.avi.

By dividing the frames into groups, we reduce the number of false positives compared to calculating a global threshold for the whole video. This kind of clustering-based adaptive approach shows very promising results for video retrieval.

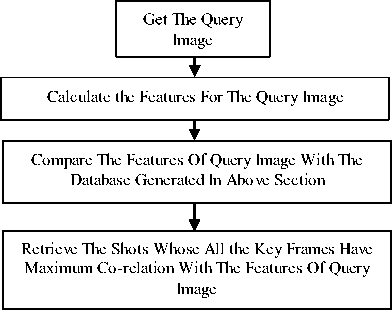

C. Video Retrieval

B. Adaptive Approach

IV. Experiment Simulation And Result Analysis

The adaptive threshold for the above difference index is calculated using the following method.

1. Divide all the frames into groups of a fixed size (clustering or windowing).

2. For each group, calculate the mean and standard deviation of the DiffIndex.

3. Using equation (8), calculate the threshold value for cut detection.

4. If the difference index of a particular frame is greater than the threshold, report the frame as a possible result.

$$\text{Threshold} = \text{mean} + a \cdot \text{standard deviation} \qquad (8)$$

where ‘a’ is an integer constant.
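The four steps above can be sketched as follows. The group size of 100 frames and the value a = 3 are placeholder assumptions, since the paper does not report the values it uses, and the function name `adaptive_cut_candidates` is hypothetical.

```python
import numpy as np

def adaptive_cut_candidates(diff_index, group_size: int = 100, a: int = 3):
    """Return frame indices whose DiffIndex exceeds the per-group threshold of equation (8)."""
    diff_index = np.asarray(diff_index, dtype=np.float64)
    candidates = []
    for start in range(0, len(diff_index), group_size):
        group = diff_index[start:start + group_size]        # step 1: fixed-size window
        threshold = group.mean() + a * group.std()          # steps 2 and 3
        hits = np.nonzero(group > threshold)[0] + start     # step 4: frames above threshold
        candidates.extend(int(i) for i in hits)
    return candidates
```

Because each window gets its own mean and standard deviation, a quiet passage and a busy passage of the same movie are judged against different thresholds, which is the motivation given for avoiding a single global threshold.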

The performances of the implemented algorithms are evaluated based on the recall and precision criteria. Recall is defined as the percentage of desired items that are retrieved. Precision is defined as the percentage of retrieved items that are desired items.

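$$\text{Recall} = \frac{\text{Correct}}{\text{Correct} + \text{Missed}} \qquad (9)$$

$$\text{Precision} = \frac{\text{Correct}}{\text{Correct} + \text{False Positives}} \qquad (10)$$

$$F1(\text{Recall}, \text{Precision}) = \frac{2 \cdot \text{Recall} \cdot \text{Precision}}{\text{Recall} + \text{Precision}} \qquad (11)$$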
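For illustration, the three measures can be computed from raw detection counts as in the sketch below, which uses the standard harmonic-mean form of F1. The function name and the example counts in the usage note are hypothetical and are not taken from the experiments.

```python
def recall_precision_f1(correct: int, missed: int, false_positives: int):
    """Compute recall, precision and F1 from shot boundary detection counts."""
    recall = correct / (correct + missed) if (correct + missed) else 0.0
    precision = correct / (correct + false_positives) if (correct + false_positives) else 0.0
    if recall + precision == 0:
        return recall, precision, 0.0
    f1 = 2 * recall * precision / (recall + precision)
    return recall, precision, f1
```

For example, with 49 correct detections, 1 missed boundary and 5 false positives, `recall_precision_f1(49, 1, 5)` gives a recall of 0.98 and a precision of 49/54.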

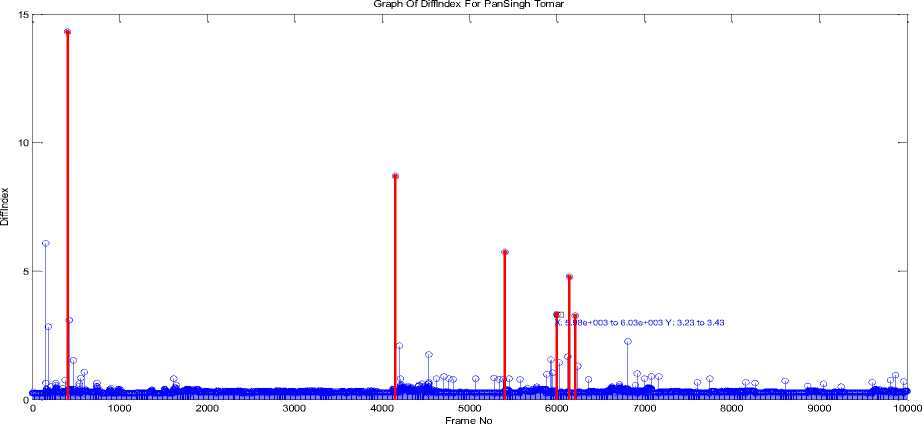

The proposed algorithm for video shot boundary detection is evaluated on three different movie videos that differ in size and nature, one video of a talk show, and one animated video. The values of recall, precision, and F1 for every input video are given in Table 1.

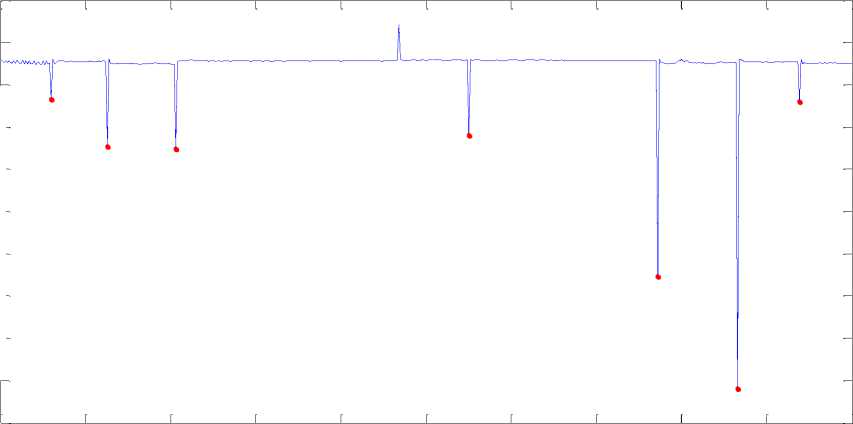

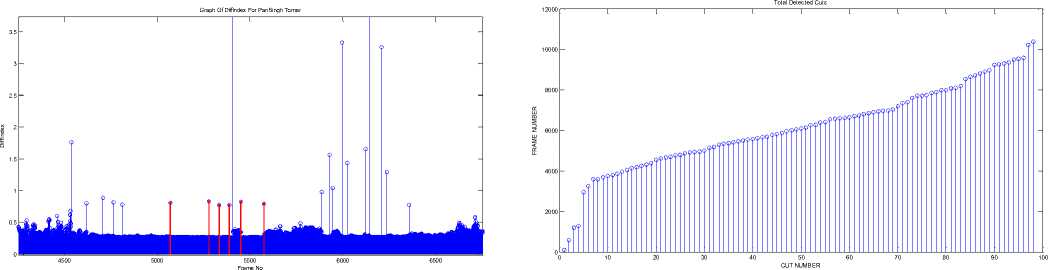

Figure.2. Difference Index for Strong Cuts – Example -1

Plot: Difference Index Graph For Strong Cuts (x-axis: Frame Number)

Figure.4. Difference Index for Strong Cuts – Example -2

A. Strong Cuts:

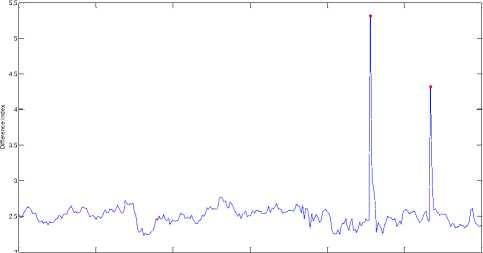

Plot: Difference Index Graph For Weak Cut (x-axis: Frame Number)

B. Weak Cuts:

Figure.8. Difference Index for Weak Cuts – Example – 2

Figure.5. Weak Cuts – Example - 1

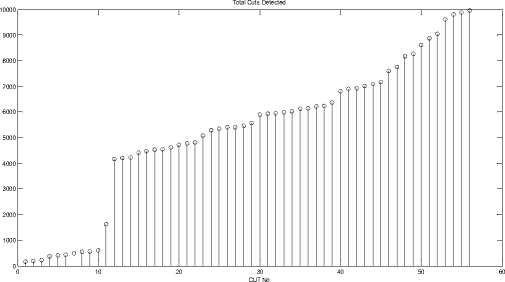

Figure.9. Total Cuts Detected – Example – 1

Figure.6. Difference Index for Weak Cuts – Example – 1

Figure.10. Total Cuts Detected – Example – 2

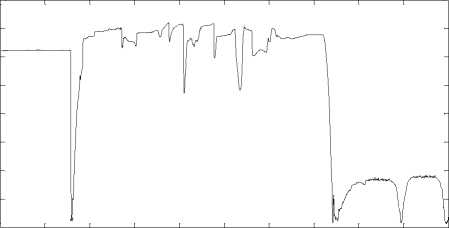

C. Fade In & Out:

Plot: Entropy Graph For Fades (x-axis: Frame Number)

D. Dissolve:

Figure.17. Dissolve Frames – Example - 1

Figure.18. Possible Candidates Dissolves – Example - 1

Figure.19. ECR Graph For Dissolve – Example - 1

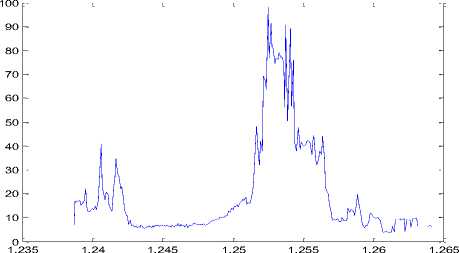

Plot: ECR Plot For Dissolve Effect (x-axis: Frame Number, x 10^4)

Figure.20. ECR Graph for Three Consecutive Dissolves

As described earlier, the effectiveness of a shot boundary detection algorithm depends largely on efficient dissolve detection. Figure 17 shows a few frames that contain dissolve transitions. Figure 19 clearly indicates the detection of the frames under the dissolve effect. During our experimental analysis, we came across a special case in which there are three consecutive dissolve effects. The proposed algorithm successfully detected this special case as well. Figure 20 shows the ECR graph for this particular case.

Table 1. Result Table

| Movie | Frame Number | Effect | Recall | Precision | F1 |
|---|---|---|---|---|---|
| PanSinghTomar | 1 to 15000 | Cut | 0.98 | 0.9 | 0.93 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | 0.95 | 0.88 | 0.92 |
| Rajneeti | 1 to 20,000 | Cut | 0.98 | 1 | 0.98 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | 0.93 | 0.9 | 0.91 |
| Rajneeti | 80000 to 100000 | Cut | 1 | 1 | 1 |
| | | Fade | N/A | N/A | N/A |
| | | Dissolve | 1 | 1 | 1 |
| Swadesh | 1 to 20000 | Cut | 0.96 | 0.92 | 0.93 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | N/A | N/A | N/A |
| Little Manhattan | 10000 to 20000 | Cut | 1 | 1 | 1 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | 1 | 1 | 1 |
| Talk Show | 1 to 10000 | Cut | 1 | 1 | 1 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | 1 | 1 | 1 |
| Tom & Jerry | 1 to 7257 | Cut | 1 | 0.94 | 0.96 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | 0.9 | 0.92 | 0.905 |
| Medonna_4 Mins | 1 to 7000 | Cut | 1 | 1 | 1 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | N/A | N/A | N/A |
| Cricket Match | 1 to 20000 | Cut | 1 | 1 | 1 |
| | | Fade | 1 | 1 | 1 |
| | | Dissolve | 0.8 | 0.73 | 0.76 |

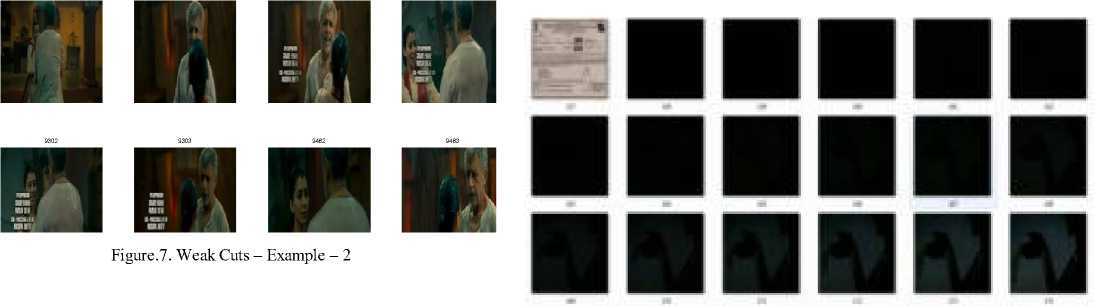

Figure 21 shows the final retrieved images. The first image is the query image, and the rest are the first 20 retrieved key frames. Because the images show only key frames, we can say that we have successfully retrieved the top 20 shots from the movie video based on the query image.

E. Retrieval:

Image labels: Im 3: 0.143, Im 4: 0.151, Im 5: 0.152, Im 6: 0.166, Im 7: 0.176, Im 8: 0.226, Im 9: 0.266, Im 10: 0.276, Im 11: 0.283, Im 12: 0.338, Im 13: 0.341, Im 14: 0.344, Im 15: 0.345, Im 16: 0.434, Im 17: 0.490 (remaining labels illegible)

Figure.21. First 20 Retrieved Key Frames For Query Image

V. Conclusion

In future work, we will try to improve the retrieval algorithm by introducing adaptation parameters into it.

Acknowledgment

References

- http://royal.pingdom.com/2012/01/17/internet-2011-in-numbers/

- Seung-Hoon Han, Kuk-Jin Yoon, and In So Kweon, "A new technique for shot detection and key frames selection in histogram space," 12th Workshop on Image Processing and Image Understanding, pp. 475-479, 2000.

- Naimish Thakar, P.I. Panchal, Upesh Patel, Ketan Chaudhari, and Santosh Sangada, "Analysis and Verification of Shot Boundary Detection in Video using Block Based χ2 Histogram Method," International Journal of Advances in Electronics Engineering.

- Ketan Chaudhari, Santosh Sangada, Upesh Patel, J.P. Chaudhari, and P.I. Panchal, "Hybrid Approach for Shot Boundary Detection from Uncompressed Video Stream," International Journal of Advances in Electronics Engineering.

- Matthew Cooper, Jonathan Foote, John Adcock, and Sandeep Casi, "Shot boundary detection via similarity analysis," FX Palo Alto Laboratory, Palo Alto, CA, USA. http://www.fxpal.com

- A. Hanjalic, "Shot-boundary detection: unraveled and resolved?", IEEE Transactions on Circuits and Systems for Video Technology 12 (2) (2002) 90–105.

- Kazunori Matsumoto, Masaki Naito, Keiichiro Hoashi, and Fumiaki Sugaya, "SVM-based shot boundary detection with a novel feature," KDDI R&D Laboratories, Inc., 2-1-15 Ohara, Fujimino-shi, Saitama 356-8502, Japan.

- Costas Cotsaces, Nikos Nikolaidis, and Ioannis Pitas, "Video Shot Boundary Detection and Condensed Representation: A Review."

- Rainer Lienhart, "Comparison of Automatic Shot Boundary Detection Algorithms," Microcomputer Research Labs, Intel Corporation, Santa Clara, CA 95052-8819.

- A. Miene, A. Dammeyer, Th. Hermes, and O. Herzog, "Advanced and Adaptive Shot Boundary Detection."

- John S. Boreczky and Lawrence A. Rowe, "Comparison of video shot boundary detection techniques."

- Wujieheng, Jinhui Yuan, Huiyi Wang, Fuzong Lin, and Bo Zhang, "A novel shot boundary detection framework."

- A. Anjulan and N. Canagarajah, "Invariant region descriptors for robust shot segmentation," in: Proceedings of the IS&T/SPIE 18th Annual Symposium on Electronic Imaging, California, USA, January 2006.

- D.G. Lowe, "Distinctive image features from scale-invariant keypoints," Internat. J. Comput. Vision 60 (2004) 91–110.