Vision-based Classification of Pakistani Sign Language

Authors: Sumaira Kausar, M. Younus Javed, Samabia Tehsin, Muhammad Riaz

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: Vol. 8, No. 2, 2016

Free access

Automated sign language recognition is an important area of computer vision today because of its applicability in many fields of life. This paper presents automated recognition of signs taken from Pakistani Sign Language (PSL), with an empirical analysis of two statistical shape descriptors and one transformation-based shape descriptor. A purely vision-based, efficient, signer-independent, multi-aspect invariant method is proposed for the recognition of 44 signs of PSL. The method uses a very small shape descriptor and gives promising results for a sign dictionary of reasonable size. The proposed methodology achieved an accuracy of 92%.

Keywords: sign language, shape descriptor, invariance, Pakistani Sign Language

Short URL: https://sciup.org/15013948

IDR: 15013948


Published Online February 2016 in MECS DOI: 10.5815/ijigsp.2016.02.02

In daily life, human beings communicate with each other and interact with computers using gestures. As a kind of gesture, sign language (SL) is the primary communication medium for deaf people. Every day, millions of deaf people around the world use SL to obtain information and exchange ideas. In recent years, therefore, SL recognition has attracted considerable attention and a variety of solutions have been proposed. Sign language can serve as a benchmark for gesture recognition systems because it is the most structured and developed form of gesture. As a tool, it benefits the deaf community in many ways: it encourages deaf people to take part in the community, to develop their identity in society, and to integrate into the hearing society. Sign languages are not uniform throughout the world; they differ widely from country to country and from region to region. Information and communication technologies can play a very effective role in helping deaf people communicate both within the deaf community and with the hearing community, and in raising their level of learning.

Every member of society has the right to access the information around them. Information itself is neither reachable nor unreachable; its form of representation makes it so. The deaf community is often deprived of information because it is not presented in a form they can access. To benefit the deaf community, systems with general accessibility should be developed. Automated Sign Language Recognition (SLR) systems can provide such a general solution.

Signs can be grouped into two categories: static signs and dynamic (continuous) signs. For static signs, only hand shape and orientation are analyzed for recognition. For dynamic or continuous signs, the direction of movement and the sequence of gestures are considered as well, in order to understand the sign completely.

Automated SLR can also be used effectively in robot control, industrial machine control, appliance control, virtual reality, interactive learning and many other areas. The great variety found in spoken languages is equally present in sign languages. Sign languages differ widely in their base language, and each base language in turn has a range of dialects. Every country has its own variant of sign language, and these variants have many dialects in different regions of the country. A lot of work has been done for different sign languages of the world [1-21]. Researchers have focused in particular on Australian Sign Language [1-6], American Sign Language [9-12], Chinese Sign Language [13-15] and Arabic Sign Language [16-19]. Unfortunately, very limited work has been done on the recognition of Pakistani Sign Language (PSL). Aleem Khalid et al. [8] of Sir Syed University, Karachi, used data gloves to recognize one-handed static Urdu sign alphabets, with statistical template matching for classification. Kausar et al. [7] used a fuzzy classifier for the recognition of the alphabets of PSL, with colored gloves used to recognize the PSL symbols. Approximately nine million Pakistanis have some hearing loss [36], which is approximately 5% of the total population of Pakistan. Of these nine million, 1.5 million are completely deaf [36]. PSL is a visual-gestural language that emerged as a blend of Urdu, the national language of Pakistan, and other regional languages. Making PSL easily and widely available in modern technologies can help improve the lives of the deaf community in many respects.

This paper presents an efficient and robust method for automated recognition of PSL. Static one-handed signs are used. Different statistical methods are used to select shape descriptors, and these descriptors are then compared while different parameters of the classification phase are tuned. Two different groups of signs are taken for analysis. Section II reviews sign language recognition from classical approaches to the state of the art. Section III presents the proposed methodology in detail. Section IV presents the results and analysis of the proposed method, and Section V gives the concluding remarks.


II. Related Work

The broad applicability of sign language recognition has made it a focal point for researchers in image processing, machine vision and pattern analysis, and this has led to valuable contributions. Different researchers have focused on different phases of SLR systems, such as segmentation, feature selection, feature extraction or classification. The majority, however, have concentrated on feature selection and feature extraction, because these play a vital role in the performance and accuracy of the complete SLR system. Samir et al. [22] and Philippe Dreuw et al. [25] used multiple cameras to recognize sign language. Samir et al. generated image features with a pulse-coupled neural network (PCNN) from two different viewing angles for recognizing Arabic Sign Language [22]. Two cameras mounted at particular angles are needed to obtain 3D features of the image. This makes the system dependent on multiple cameras, and the complex setup makes it computationally costly, since two images are processed for every sign instead of one. Padam et al. used Krawtchouk moment features for recognizing static hand gestures [23]. Their system is claimed to achieve signer independence and to cope with variations in viewing angle. Yun Li et al. used portable accelerometer (ACC) and surface electromyography (sEMG) sensors for automatic Chinese SLR at the component level [24]. Three basic components of a sign, namely hand shape, hand orientation and movement, are exploited to achieve high accuracy rates. Xu Shijian et al. [31] and Tian Swee et al. [32] also used external data gloves for sign language recognition. Data gloves give good accuracy, but the dependence on external gadgetry and the high cost reduce their value. Rini Akmeliawati [26] and Sumaira et al. [27] used colored gloves for automated SLR. Fingertips and key points of the palm are marked with specific colors so that these points can be identified for recognition. They obtained good accuracy with some external aid for feature extraction, a small and simple price to pay for high accuracy. Many researchers have used a dictionary of limited size. Ershaed et al. [28] used only four signs. Similarly, Wassner et al. [6] used only two French signs for recognition by their system based on artificial neural networks. Zafrulla et al. [29] used only six signs of American Sign Language for automated recognition. Ershaed et al. [28], Zafrulla et al. [29], Xing et al. [1] and Cooper et al. [30] used depth cameras for automated SLR. Depth cameras are quite expensive, which is a barrier to wide applicability.

The works on sign language recognition mentioned above report different accuracy rates and target different sign languages. Each has its own strengths and associated disadvantages, as pointed out in the preceding paragraph. The proposed methodology attempts to address the problems mentioned above, and the experiments show its value in several respects. It does not depend on any external gadgetry: no colored gloves or data gloves are used. It is efficient enough to be implemented in real-time systems, as it is based on simple yet powerful features.

The SLR method presented here does not require expensive depth cameras. A simple, inexpensive digital camera is used, and the method can work even with a simple webcam. Only a single camera is used, since multiple cameras mean higher cost in terms of time, money and complexity, and hence lower performance.

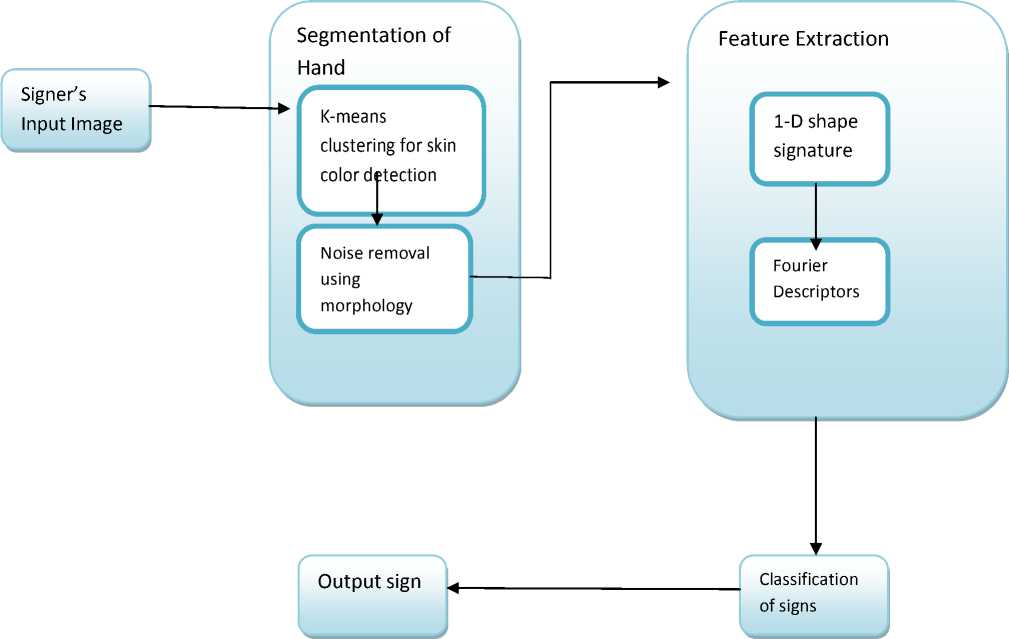

Fig.1. Overview of PSL recognition system

III. Proposed Method

This paper presents a method for automated SLR for PSL. A block diagram of the proposed methodology is shown in Fig. 1. The system takes an image of the signer as input. This image is passed to the segmentation module, which segments the hand of the signer, since the proposed system is meant only for one-handed static signs of PSL. Three different types of features are analyzed to arrive at the shape descriptor proposed for the recognition of PSL in the actual system. The required shape descriptor of the sign is then extracted and passed to the classification module, which classifies the sign and outputs the classification result. Each module of the PSL recognition system is described in the following sections.


A. Input Module

The input module takes an image of the signer. The frame covers only the hand with which the sign is shown; the other hand and the face are not captured. In addition, the signer has to wear a full-sleeved shirt of any color that does not match skin color, and the background should not contain objects that match skin color. These constraints on the input image make the segmentation process simpler. Because the approach is purely vision based and rejects the use of any external gadgetry, skin-based classification systems have to impose some constraints on background and clothing. These constraints are much easier to follow than using expensive and cumbersome external gadgetry such as data gloves. The input image is taken without any special illumination constraints and can come from any ordinary digital camera; even a webcam of moderate quality works well.


B. Segmentation

The input image of the signer is passed to the segmentation module. In the segmentation phase, K-means clustering is used to separate skin-colored objects from non-skin-colored objects. After three iterations of K-means clustering, the non-skin-colored objects are taken as background. K-means cluster assignment is defined by the equation below.

$$C_i = \{\, x_p : |x_p - m_i| \le |x_p - m_j| \;\; \forall\, 1 \le j \le k \,\}$$

where $C_i$ is the $i$th cluster, $k$ is the total number of clusters, $x_p$ is the point to be assigned to a cluster and $m_i$ is the mean of the $i$th cluster. After each iteration, the mean of each cluster must be updated. The new mean of each cluster is calculated as follows:

$$m_i = \frac{1}{|C_i|} \sum_{x_j \in C_i} x_j$$
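The assignment and mean-update steps can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the color space, the initial means and the toy pixel values are assumptions, and the function names are hypothetical. Only the three-iteration count is taken from the text.

```python
import math

def assign_clusters(points, means):
    """Assign each point to the cluster whose mean is nearest (Euclidean)."""
    clusters = [[] for _ in means]
    for p in points:
        distances = [math.dist(p, m) for m in means]
        clusters[distances.index(min(distances))].append(p)
    return clusters

def update_means(clusters, old_means):
    """Recompute each mean as the average of its members.

    An empty cluster keeps its previous mean (an assumption of this sketch).
    """
    new_means = []
    for members, old in zip(clusters, old_means):
        if not members:
            new_means.append(old)
            continue
        dims = len(members[0])
        new_means.append(
            tuple(sum(p[d] for p in members) / len(members) for d in range(dims))
        )
    return new_means

def kmeans(points, initial_means, iterations=3):
    """Run a fixed number of assignment/update iterations."""
    means = list(initial_means)
    for _ in range(iterations):
        clusters = assign_clusters(points, means)
        means = update_means(clusters, means)
    return means, assign_clusters(points, means)

# Toy RGB pixels (made-up values): three skin-like, three dark background.
pixels = [(200, 150, 130), (210, 160, 140), (205, 155, 135),
          (20, 30, 40), (25, 35, 45), (30, 40, 50)]
means, clusters = kmeans(pixels, initial_means=[(255, 200, 180), (0, 0, 0)])
print(means)
```

With two clusters, the cluster whose mean is closer to a skin tone would be kept as foreground and the other treated as background.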

After segmentation, noise in the image is removed by applying a morphological operation to improve the segmentation result. The morphological operation used is as follows:

$$O \ominus S = \{\, x \in I \mid S_x \subseteq O \,\}$$

where $O$ is the object (in our case the segmented PSL sign), $I$ is the image and $S$ is the structuring element. For this paper we have used a 3×3 square structuring element to remove noise from the image. $S_x$ is the translation of $S$ by the vector $x$, defined as:

$$S_x = \{\, s + x \mid s \in S \,\}, \quad \forall x \in I$$
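The set definition above, which keeps a pixel only if the translated structuring element fits entirely inside the object, reads as binary erosion. A minimal sketch follows, assuming the image is a list of 0/1 rows and that pixels outside the image count as background; both are assumptions of this example rather than details given in the paper.

```python
def erode(image, size=3):
    """Binary erosion with a size x size square structuring element.

    A pixel stays 1 only if every pixel under the structuring element,
    centred on it, is 1. Out-of-image pixels are treated as 0.
    """
    rows, cols = len(image), len(image[0])
    r = size // 2
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            fits = True
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy, xx = y + dy, x + dx
                    outside = not (0 <= yy < rows and 0 <= xx < cols)
                    if outside or image[yy][xx] == 0:
                        fits = False
            out[y][x] = 1 if fits else 0
    return out

# A 5x5 blob plus one isolated noise pixel in the top-left corner.
noisy = [
    [1, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
eroded = erode(noisy)
for row in eroded:
    print(row)
```

The isolated pixel disappears, and the blob shrinks by one pixel on each side, which is the expected side effect of erosion on the object boundary.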

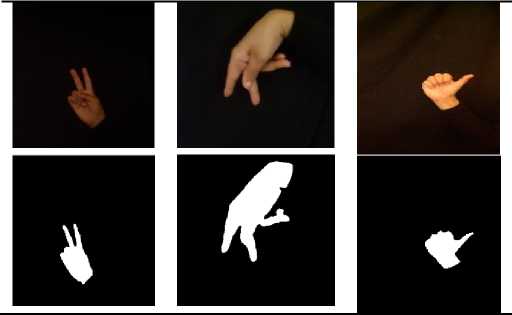

As a result of the segmentation process, a binary image of the hand is produced. Some segmentation results under scaling, illumination and translation variations are shown in Fig. 2.

Fig.2. Segmentation result

This binary image is then processed in the feature extraction phase.


C. Feature Extraction

Once the image has been converted to a binary image, it is processed further for feature extraction. This paper analyzes three types of features: two statistical descriptors and one transformation-based descriptor. Each has inherent properties that are exploited here for the recognition of PSL.


i. Central Moments

Central moments are used in image processing and computer vision to analyze image properties. Moments about zero for one variable are defined by the following equation.

$$m_n = \frac{\sum_{x=1}^{N} x^n f(x)}{\sum_{x=1}^{N} f(x)}$$

For images, the idea of moments must be extended to two variables, as required for the analysis of two-dimensional images. Moments for two variables are given by the following relation. Again, this definition is for moments about zero.

$$m_{ij} = \frac{\sum_{x=1}^{N}\sum_{y=1}^{M} x^i y^j f(x,y)}{\sum_{x=1}^{N}\sum_{y=1}^{M} f(x,y)}$$

Here $m_{00} = 1$, $m_{10}$ is the x component of the mean and $m_{01}$ is the y component of the mean, i.e. $\mu_x$ and $\mu_y$ respectively.

Central moments are moments about the center of gravity, and they are considered good shape descriptors. The following equation gives the definition of central moments.

$$\mu_{ij} = \frac{\sum_{x=1}^{N}\sum_{y=1}^{M} (x-\mu_x)^i (y-\mu_y)^j f(x,y)}{\sum_{x=1}^{N}\sum_{y=1}^{M} f(x,y)}$$

For this paper we have used central moments up to order three. Their definitions follow by substituting values into the general equation above, where

$$\mu_{10} = \mu_{01} = 0.$$

In calculating central moments, we assume that the segmented sign has value 1 and the background has value 0. The central moment $\mu_{20}$ is the variance of x, $\mu_{02}$ is the variance of y, and $\mu_{11}$ is the covariance of x and y. The covariance gives the orientation of the sign. These attributes therefore define the shape. Theoretically, given a large number of central moments, the shape can be fully recovered, which makes central moments a good candidate for the SL descriptor. This is the reason central moments are used in this paper for the recognition of PSL.
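As a small worked example of the normalised definitions above, the following sketch computes $m_{ij}$ and $\mu_{ij}$ for a binary image stored as a list of 0/1 rows. The 1-based pixel coordinates follow the summation limits in the equations; the function names and the test image are assumptions made for illustration.

```python
def raw_moment(image, i, j):
    """m_ij about zero, divided by the foreground pixel count (so m_00 == 1)."""
    total = sum(sum(row) for row in image)
    acc = 0.0
    for y, row in enumerate(image, start=1):
        for x, value in enumerate(row, start=1):
            acc += (x ** i) * (y ** j) * value
    return acc / total

def central_moment(image, i, j):
    """mu_ij about the centre of gravity (mu_x, mu_y), same normalisation."""
    mu_x = raw_moment(image, 1, 0)
    mu_y = raw_moment(image, 0, 1)
    total = sum(sum(row) for row in image)
    acc = 0.0
    for y, row in enumerate(image, start=1):
        for x, value in enumerate(row, start=1):
            acc += ((x - mu_x) ** i) * ((y - mu_y) ** j) * value
    return acc / total

# A horizontal bar of three pixels: it spreads along x but not along y.
bar = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(central_moment(bar, 2, 0))  # variance of x
print(central_moment(bar, 0, 2))  # variance of y
print(central_moment(bar, 1, 1))  # covariance of x and y
```

For the bar, the variance along x is 2/3 while the variance along y and the covariance are both zero, which matches the interpretation of $\mu_{20}$, $\mu_{02}$ and $\mu_{11}$ given in the text.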


ii. Hu Moments

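The set of seven moments known as Hu moments is considered a good choice of shape descriptor because of its invariance properties. For the recognition of PSL, the seven absolute Hu moments are used rather than the principal-axis Hu moments [37]. These seven moments are as follows.

$$\phi_1 = \eta_{20} + \eta_{02}$$

$$\phi_2 = (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2$$

$$\phi_3 = (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2$$

$$\phi_4 = (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2$$

$$\phi_5 = (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right]$$

$$\phi_6 = (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03})$$

$$\phi_7 = (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right]$$

where

$$\eta_{ij} = \frac{\mu_{ij}}{\mu_{00}^{\,1 + \frac{i+j}{2}}}$$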

These Hu moments are derived from central moments. Hu moments one to six are rotation, scale and translation invariant. The seventh moment is skew-orthogonal invariant, a property used to distinguish mirror images of otherwise identical shapes. These inherent properties make Hu moments a suitable candidate shape descriptor for the recognition of PSL.
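To make the rotation invariance concrete, the sketch below computes the first two Hu moments of a small binary shape and of the same shape rotated by 90 degrees. It assumes the common convention in which central moments are unnormalised sums, so that $\mu_{00}$ equals the foreground pixel count; the shape and helper names are hypothetical and chosen only for illustration.

```python
def central_moment(image, i, j):
    """Unnormalised central moment: sum over foreground pixels (mu_00 = pixel count)."""
    pts = [(x, y) for y, row in enumerate(image) for x, v in enumerate(row) if v]
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    return sum((x - cx) ** i * (y - cy) ** j for x, y in pts)

def eta(image, i, j):
    """Scale-normalised central moment eta_ij = mu_ij / mu_00^(1 + (i + j) / 2)."""
    mu00 = central_moment(image, 0, 0)
    return central_moment(image, i, j) / mu00 ** (1 + (i + j) / 2)

def hu_first_two(image):
    """First two Hu moments: phi_1 and phi_2."""
    e20, e02, e11 = eta(image, 2, 0), eta(image, 0, 2), eta(image, 1, 1)
    return e20 + e02, (e20 - e02) ** 2 + 4 * e11 ** 2

def rotate90(image):
    """Rotate a binary image clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]

shape = [
    [1, 1, 1, 1],
    [1, 0, 0, 0],
]
rotated = rotate90(shape)

print(hu_first_two(shape))
print(hu_first_two(rotated))
print(eta(shape, 2, 0), eta(rotated, 2, 0))
```

The two Hu values agree for both orientations, while $\eta_{20}$ alone changes when the shape is rotated. A descriptor that discards orientation in this way cannot separate two signs that differ only by rotation.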


iii. Fourier Descriptors

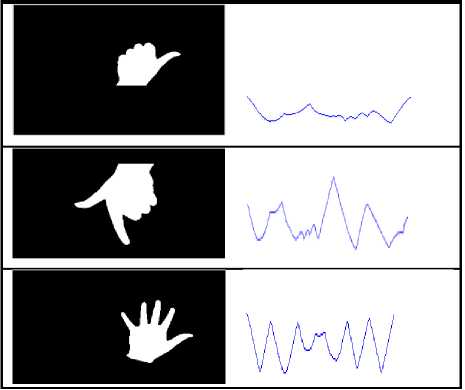

Fourier series have proved their worth in many applications, including image processing and machine vision, and can also be used for shape description. This paper analyzes contour-based Fourier descriptors. The PSL sign is first transformed into its contour, and the contour is then transformed into a 1-D shape signature. Periodic samples are then taken from the signature to obtain the Fourier descriptors, which are the coefficients of the corresponding Fourier series.

The transformation of a sign into its corresponding 1-D signature is based on the centroid distance. Let the binary segmented image of a PSL sign be represented as:

$$f(x,y) = \begin{cases} 1 & \text{if } (x,y) \in I \\ 0 & \text{otherwise} \end{cases}$$

where $I$ is the domain of the binary segmented image of the sign. The centroid $(C(x), C(y))$ of the sign is then obtained as follows:

$$C(x) = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad C(y) = \frac{1}{N}\sum_{i=1}^{N} y_i$$

where $N$ is the total number of points in the segmented sign, i.e. the points $(x_i, y_i)$ with $f(x_i, y_i) = 1$.

After the centroid has been obtained, the 1-D signature is extracted. For this, the contour points are extracted first, and the centroid distance of each contour point is then used as the 1-D shape signature. The signature is obtained with the following relation:

$$dist_i = \left| \left( contp_i(x) - C(x),\; contp_i(y) - C(y) \right) \right|$$

where $dist_i$ is the distance between the centroid and the $i$th contour point, $contp_i$ is the $i$th contour point and $C$ is the centroid of the sign.

Before the signature is extracted, the contour points are sampled to make the system more time efficient. Because the shapes are not all the same size, an adaptive step size is adopted for each image so that the signature vectors have equal size. This adaptive step size, STEP, is defined in terms of the total number of contour points in each image and a constant $n$. We set $n = 300$ to obtain a reasonable step size given the size of the image and the size of the sign in the majority of images.

$$STEP_i = \frac{\text{No. of } contp_i}{n}$$

Results of transforming an image to its 1-D signature are shown in Fig. 3.

Once the 1-D signature is obtained, its Fourier descriptor is obtained by first transforming the signature to a complex function $z_i$ as follows:

$$z_i = x_i + j y_i$$

This relation holds for the boundary points $i = 0, \dots, N$, where $x$ is the horizontal coordinate and $y$ is the vertical coordinate of the signature vector. The complex function is then transformed to the Fourier series $F(x,y)$, and the coefficients of this series are used as Fourier descriptors.

$$F(x,y) = \sum_{n=1}^{N}\sum_{m=1}^{M} f(m,n)\, e^{-j2\pi\left(\frac{xn}{N} + \frac{ym}{M}\right)}$$

Fig.3. 1-D signature of segmented sign (panels: segmented image and 1-D signature)
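The centroid-distance signature with adaptive sampling can be sketched as follows. This is an illustrative reading of the description, assuming the contour is already available as an ordered list of (x, y) points and that the step is rounded down to an integer of at least 1; neither detail is specified in the text, and the function names are hypothetical.

```python
import math

def centroid(points):
    """Mean x and mean y of the foreground points of the sign."""
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n

def centroid_distance_signature(contour, region_points, n=300):
    """Sample the contour with an adaptive step and return centroid distances.

    step = (number of contour points) / n, rounded down, with a minimum of 1.
    """
    cx, cy = centroid(region_points)
    step = max(1, len(contour) // n)
    sampled = contour[::step]
    return [math.hypot(x - cx, y - cy) for x, y in sampled]

# Toy example: a filled 3x3 square and its 8 boundary points in order.
region = [(x, y) for x in range(3) for y in range(3)]
contour = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (1, 2), (0, 2), (0, 1)]

# n=4 gives a step of 2, so only the four corners are sampled.
signature = centroid_distance_signature(contour, region, n=4)
print(signature)
```

With the paper's value of n = 300, contours with fewer than 600 points would be sampled at every point under this rounding rule, so the resulting signature length is only approximately equal across images.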

Experiments, discussed later, show that only the first few Fourier descriptors are needed for effective classification. This property makes the overall classification process very time efficient.

To make the Fourier descriptors translation invariant, we ignore the DC component of the Fourier transform. Similarly, to make the descriptors scale invariant, we use the following formulation.

$$\hat{F}_i = \frac{F_i}{F_0}$$

This holds for all Fourier coefficients $i = 1, \dots, N$, where $F_0$ is the first coefficient, i.e. the DC component.

Starting point invariance can be achieved by ignoring the phase and using only the magnitude of the Fourier coefficients, which is what we have done in this paper. In addition, to make the starting point of the 1-D signature consistent across signatures, the boundary point at the maximum distance from the centroid is taken as the starting point:

$$\max_{i = 1, \dots, N} \; dist(contp_i, C)$$

where $N$ is the total number of contour points of the sign in the segmented image, $contp_i$ is the $i$th contour point and $C$ is the centroid of the sign.
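The invariance steps described above can be illustrated with a short sketch. As a simplification, it applies a plain 1-D discrete Fourier transform to a real-valued centroid-distance signature rather than the complex formulation given earlier, so it should be read as an approximation of the idea and not as the authors' implementation. The function name and the sample signature are hypothetical.

```python
import cmath
import math

def fourier_descriptors(signature, count):
    """Return `count` magnitude-only descriptors, normalised by the DC term.

    Steps: rotate the signature so the farthest point comes first, take the
    DFT, drop the DC coefficient, keep magnitudes only, divide by |DC|.
    """
    start = signature.index(max(signature))
    sig = signature[start:] + signature[:start]
    n = len(sig)
    coeffs = [
        sum(sig[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(count + 1)
    ]
    dc = abs(coeffs[0])
    return [abs(c) / dc for c in coeffs[1:]]

sig = [5, 1, 2, 1, 3, 1, 2, 1]       # made-up centroid distances
shifted = sig[3:] + sig[:3]          # same contour, different starting point
scaled = [2 * v for v in sig]        # same shape at twice the size

base = fourier_descriptors(sig, 3)
print(base)
print(fourier_descriptors(shifted, 3))
print(fourier_descriptors(scaled, 3))
```

All three calls give the same descriptors up to floating-point error, which shows the starting-point and scale invariance. Translation invariance already follows from using distances to the centroid.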

Fourier descriptors, although they form a very small shape descriptor, have proven their worth for the recognition of PSL.

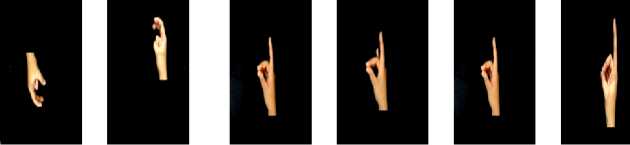

Fig.4. Different signs of PSL with visual similarity (sign labels in the figure: ghain, zaal, tuay, zuay, tuay, dal, aliph, Ttay, ain, ghain, sheen, Choti yay)


IV. Result and Analysis

Experiments were performed to analyze three different types of shape descriptors for the recognition of PSL. All three have inherent properties that make them suitable candidates for PSL recognition. Because very limited work has been done on PSL, no standard dataset is available, so a dataset was developed for this paper. The sign dictionary comprises two distinct groups of signs. Group I has thirty-five signs for the alphabets of PSL, whereas Group II has just nine signs for the numbers 1-9. Two distinct groups are used in the experiment to check the impact of dictionary size on the shape descriptors. All signs from both groups are one-handed static signs. The training set has 387 examples from both groups, posed by 14 different signers, whereas the testing set has 210 examples posed by 5 different signers.

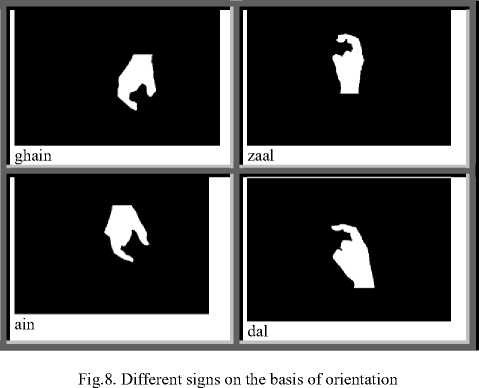

PSL is difficult compared with many other sign languages because it has many signs that are visually quite similar, so achieving very high accuracy in a PSL recognition system is not easy. There are many cases in Group I, and a few in Group II, where the same sign with a different orientation is considered a different sign. For example, “daal” and “ain”, “zaal” and “ghain”, and “no” and “chay” are pairs in which the same sign with a different orientation means a different sign. Other signs in PSL are visually quite similar to each other; a few examples are shown in Fig. 4, and there are other such examples in PSL as well. These factors increase the complexity of the dataset and make PSL recognition a very challenging task. Even so, the proposed methodology has proven its worth and the results are quite encouraging.

Any digital camera of moderate resolution can be used; even a webcam works well. The system needs to be simple to be widely applicable, so no external gadgetry is used and the system is kept purely vision based. Some constraints are applied to the clothing and background of the signer, as already explained in the methodology.

For classification, a minimum distance metric is used. The ranking metric RM used in this paper is the ratio of the number of correctly recognized signs, i.e. the sum of $acc_i$, to the total number of signs tested, $N$.

$$RM = \frac{\sum_{i=1}^{N} acc_i}{N}$$
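A minimal sketch of nearest-neighbour classification and the ranking metric is given below. The Euclidean distance, the majority vote over k neighbours and the two-dimensional feature vectors are assumptions made for illustration; the feature values are invented and only the sign names come from the paper.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Label of the majority among the k training examples nearest to `query`."""
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

def ranking_metric(train, test, k=3):
    """RM = (number of correctly recognised test signs) / (number of test signs)."""
    correct = sum(
        1 for features, label in test if knn_predict(train, features, k) == label
    )
    return correct / len(test)

# Made-up 2-D descriptors for two signs.
train = [
    ((0.0, 0.0), "aliph"), ((0.1, 0.0), "aliph"), ((0.0, 0.1), "aliph"),
    ((1.0, 1.0), "daal"), ((1.1, 1.0), "daal"), ((1.0, 1.1), "daal"),
]
# The third test example sits inside the "daal" cluster, so it is misrecognised.
test = [((0.05, 0.05), "aliph"), ((0.95, 1.0), "daal"), ((0.9, 0.9), "aliph")]

print(ranking_metric(train, test, k=3))
```

Two of the three test examples are recognised correctly, so RM is 2/3 for this toy data. Varying `k` corresponds to the 3-NN, 5-NN and 7-NN settings that appear in the plot legends.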

(Plot legend for panels (a) and (b): 3-NN, 5-NN, 7-NN)

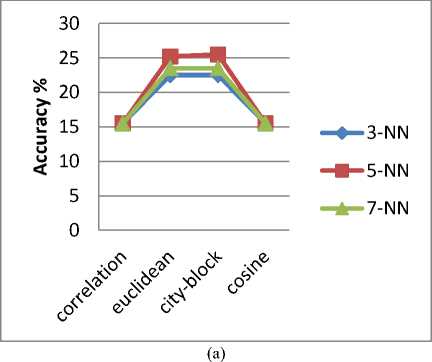

Fig.6(a) and (b). Plot of central moments and Hu moments examples respectively


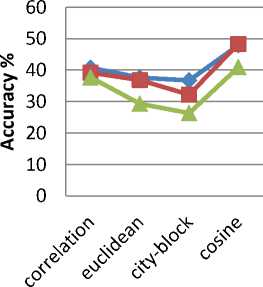

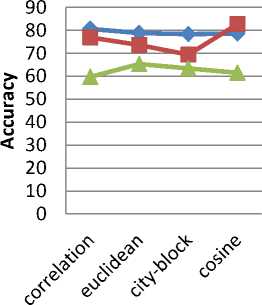

Fig.5(a) and 5(b). Central moments analysis for (a) Group I (b) Group II

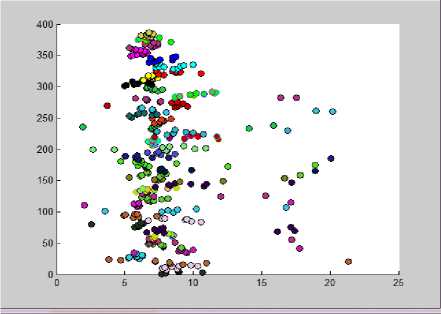

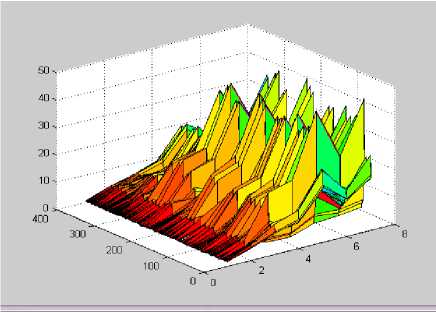

Central moments are analyzed in Fig. 5(a) and 5(b) for Group I and Group II respectively, while tuning different parameters of the classifier for both groups of signs. There was not much difference between the central moments of different shapes. Central moments are computed on binary images, as intensity values are of no importance in our case. The area, variance and orientation of different numeric signs are almost the same, so the classifier was not able to classify signs on the basis of central moments. The centroid plays no role in our shape classification, because signs can appear at any location in the image and identifying the centroid alone conveys nothing. It is evident from the data that there is no significant difference in the values of the central moments for visually quite different signs. Central moments alone are therefore not an appropriate shape descriptor for PSL recognition. Fig. 6(a) shows a scatter plot of the central moments of the 387 examples, where examples belonging to one class are shown in one color. No definite grouping or clustering is visible in the figure. Central moments may provide better results if combined with other shape descriptors.

Fig. 6(b) is a plot of the training data described by Hu moments. Three hundred and eighty-seven examples are plotted along with their values for the seven Hu moments. The figure shows that Hu moments are not a suitable shape descriptor for the recognition of PSL: the values of any particular moment lie in almost the same limited range for all the examples, so it is difficult for the classifier to classify correctly. The classifier used all seven moment values collectively to calculate the distance between points. The testing results for both groups of signs follow.

(a)

3-NN

- ■ -5-NN

—*—7-NN

— ♦ —3-NN

— ■ -5-NN

—*—7-NN

(b)

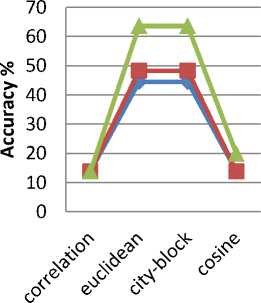

Fig.7(a) and (b). Hu moments analysis for (a) Group I (b) Group II

Fig. 7(a) and (b) show the effect of varying the classifier parameters, namely the distance measure and the number of nearest neighbours. Even after tuning, the accuracy obtained for Group I could not exceed 50%, whereas for Group II it goes up to 82%. Hu moments thus give good results for the smaller and simpler group, but although they are considered a good shape descriptor, they could not prove their worth for Group I. The main strength for which Hu moments are considered superior among shape descriptors is their invariance. Invariance to translation and scaling is very useful for the recognition of PSL, but rotation invariance is a disadvantage rather than an advantage for a PSL recognition system, because in many cases in Group I the same sign with a different orientation is considered a different sign. A good shape descriptor for PSL is therefore one that is orientation sensitive rather than rotation invariant. For example, “daal” and “ain”, “zaal” and “ghain”, and “no” and “chay” are pairs in PSL where the same sign with a different orientation means a different sign, and there are other such examples as well. Fig. 8 shows some signs that differ only in their orientation.

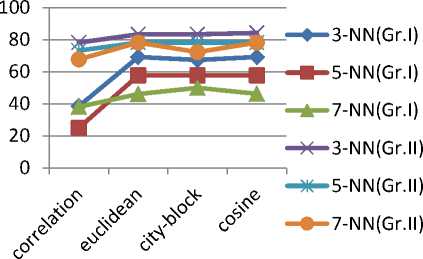

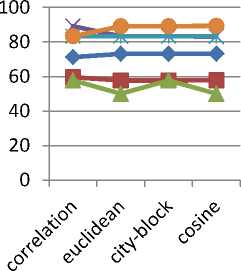

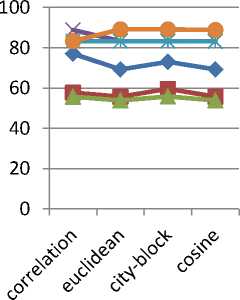

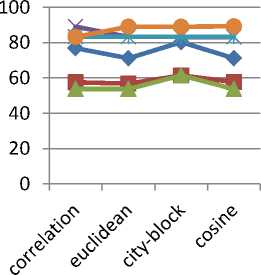

Fourier descriptors are the shape descriptor used in this paper for the recognition of PSL, and they proved to be a reasonable choice for both groups of signs. The analysis according to the ranking metric is shown in Fig. 9(a)-(e). These results are achieved using only the magnitude of the Fourier descriptors, which makes them starting point invariant. If phase information is also included in the shape descriptor, accuracy goes down by 40 to 50% on average. Similarly, if the consistency of the 1-D signature starting point per sign is ignored, a decrease in accuracy of 15% on average is observed. The figure shows the tuning of the classification module with respect to the number of nearest neighbours and the distance measure for both groups, while analyzing the effect of the number of Fourier descriptors used for classification. Results are shown for 5, 8, 10, 30 and 50 Fourier descriptors. Increasing the number of Fourier descriptors further gave no further improvement.

Fig.9 (a)-(e). Analysis of Fourier descriptors for (a) 5 (b) 8 (c) 10 (d) 30 (e) 50 Fourier descriptors (plot legend entries: 3-NN, 5-NN, 7-NN, Gr. I)

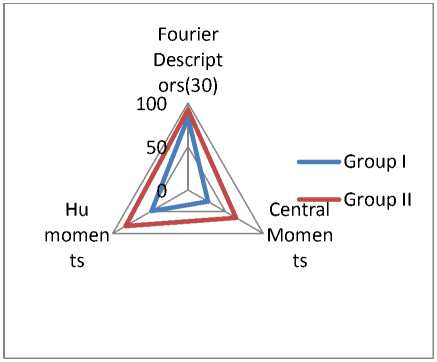

The paper presents Fourier descriptors as the shape descriptor for PSL recognition. The proposed methodology is compared empirically with two statistical shape descriptors, i.e. central moments and Hu moments. The results are shown in the following table and graph, and they indicate the superiority of the proposed methodology.

Table 1. Comparison of different shape Descriptors

| Shape descriptor | Classifier | Accuracy % Group I | Accuracy % Group II |
|---|---|---|---|
| Central moments (3) | NN | 26.6 | 63.8 |
| Hu moments | NN | 48.3 | 82.9 |
| Fourier descriptors (30) | NN | 83.3 | 92.3 |

The empirical analysis indicates that the presented methodology has the potential to be a good shape descriptor for considerably larger and more complex sign dictionaries. PSL is taken as a test case for this claim, and the results support it.

Fig.10. Comparative analysis of different shape descriptors

Fig.11. Analysis of proposed methodology

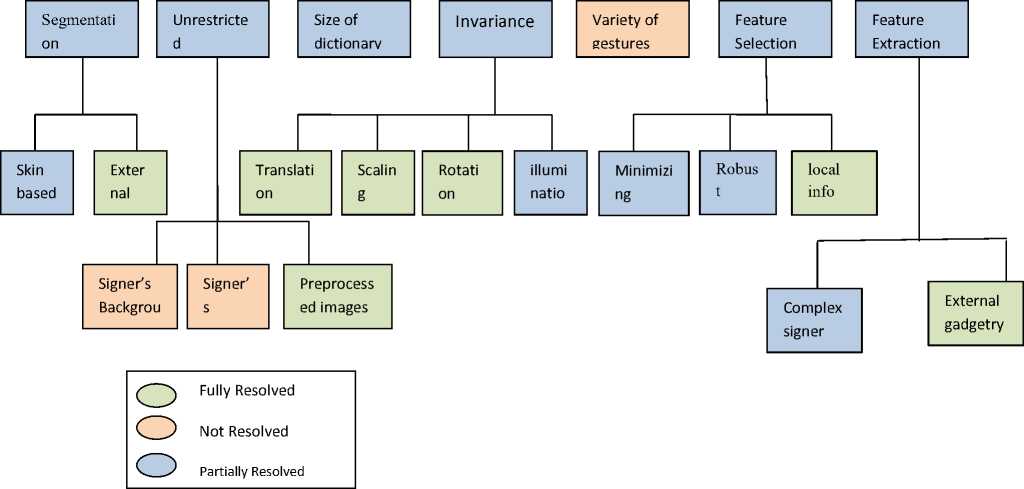

A survey on sign language recognition [19] lists the potential challenges and the main features required of a good automated SLR system. We have assessed our proposed methodology against the criteria provided by the survey [19]. The analysis is depicted in Fig. 11.

The figure shows that the proposed methodology resolves many of the problems identified in the survey [19]. Rotation invariance is achieved, but instead of a positive impact it has a negative impact on the accuracy of the proposed methodology, as discussed earlier in the paper. Variety of gestures, as described in the survey, refers to a dictionary of gestures that includes one-handed and two-handed signs, facial expressions, gait, and static and dynamic signs, whereas the dictionary used here has only one-handed static signs. Similarly, the proposed method places a restriction on the clothing of the signer, i.e. a full-sleeved dress. Apart from these limitations, the presented methodology has given quite encouraging results while coping with many of the challenges of automated SLR.


V. Conclusion

This paper presented an efficient, invariant method for the classification of PSL. It compared different candidate shape descriptors and justified the results in context. Two distinct groups of signs were analyzed. For central moments and Hu moments, Group II, which has fewer and less complex signs, gives better results than the larger and more complex Group I, whereas Fourier descriptors give very encouraging results for both groups. There is still room for improvement. In future work, further shape descriptors can be investigated for PSL, and different shape signatures can be analyzed for classification. Variety in gestures should also be accommodated in further research on automated PSL recognition.

References

- Xing Guo, Zhenyu Lu, Rongbin Xu, Zhengyi Liu , Jianguo Wu, " Big-screen text input system based on gesture recognition", Proceedings of the 2nd International Conference On Systems Engineering and Modeling (ICSEM-13), 2013.

- M. Mohandes, M. Deriche, U. Johar, S. Ilyas, "A signer-independent Arabic Sign Language recognition system using face detection, geometric features, and a Hidden Markov Model ",Computers & Electrical Engineering, Volume 38, Issue 2, March 2012, Pages 422–433.

- Tolba, M. F, Samir, Ahmed , Abul-Ela, Magdy, "3D Arabic sign language recognition using linear combination of multiple 2D views ", 8th International Conference on Informatics and Systems (INFOS), Cairo, 2012 , 14-16 May 2012, pp: MM-6 - MM-13.

- Sumaira Kausar, M. Younus Javed, "A Survey on Sign Language Recognition," 9th International conference on Frontiers of Information Technology, 19-21 December 2011, Islamabad Pakistan, pp.95-98.

- M-K. Hu. Visual pattern recognition by moment invariants. IRE Trans. on Information Theory, IT-8: pp. 179-187, 1962.

- Wassner. kinect + reseau de neurone = reconnaissance de gestes. http://tinyurl.com/5wbteug, May 2011.

- Sumaira Kausar, M. Younus Javed, Shaleeza Sohail, "Recognition of gestures in Pakistani sign language using fuzzy classifier", 8th conference on Signal processing, computational geometry and artificial vision 2008, Rhodes, Greece, pp. 101-105.

- Aleem Khalid Alvi, M. Yousuf Bin Azhar, Mehmood Usman, Suleman Mumtaz, Sameer Rafiq, RaziUr Rehman, Israr Ahmed, "Pakistan Sign Language Recognition Using Statistical Template Matching", World Academy of Science, Engineering and Technology 3 2005.

- Philippe Dreuw, Pascal Steingrube, Thomas Deselaers, Hermann Ney. Smoothed Disparity Maps for Continuous American Sign Language Recognition. In: Pattern Recognition and Image Analysis LECT NOTES COMPUT SC 2009; 5524: 24-31.

- Zafrulla, H. Brashear, T. Starner, H. Hamilton, and P. Presti. American sign language recognition with the kinect. In: Proceedings of the 13th International Conference on Multimodal Interfaces, ICMI '11, 2011; New York, NY, USA. pp. 279–286.

- Jayashree R. Pansare, Shravan H. Gawande, Maya Ingle. Real-Time Static Hand Gesture Recognition for American Sign Language (ASL) in Complex Background. In: Journal of Signal and Information Processing 2012; 3: 364-367 .

- Hervé Lahamy and Derek D. Lichti. Towards Real-Time and Rotation-Invariant American Sign Language Alphabet Recognition Using a Range Camera. In: Sensors 2012; 12: 14416-14441.

- Yun Li, Xiang Chen, Xu Zhang, Kongqiao Wang, and Z. Jane Wang.. A Sign-Component-Based Framework for Chinese Sign Language Recognition Using Accelerometer and sEMG Data. In: IEEE T BIO-MED ENG 2012; 59.

- Xiangwu Zhang, Dehui Kong, Lichun Wang, Jinghua Li, Yanfeng Sun, Qingming Huang. Synthesis of Chinese Sign Language Prosody Based on Head. In: International Conference on Computer Science & Service System (CSSS); 11-13 Aug. 2012; Nanjing: pp.1860 – 1864.

- Xing Guo, Zhenyu Lu, Rongbin Xu, Zhengyi Liu , Jianguo Wu. Big-screen text input system based on gesture recognition. In: Proceedings of the 2nd International Conference On Systems Engineering and Modeling (ICSEM-13), 2013; Paris; France.

- A. Samir Elons , Magdy Abull-ela, M.F. Tolba . A proposed PCNN features quality optimization technique for pose-invariant 3D Arabic sign language recognition. In: APPL SOFT COMPUT April 2013; 13: 1646–1660.

- Ershaed, I. Al-Alali, N. Khasawneh, and M. Fraiwan. An arabic sign language computer interface using the xbox kinect. In: Annual Undergraduate Research Conf. on Applied Computing May 4-5, 2011; Dubai, UAE.

- M. Mohandes, M. Deriche, U. Johar, S. Ilyas. A signer-independent Arabic Sign Language recognition system using face detection, geometric features, and a Hidden Markov Model. In: COMPUT ELECTR ENG March 2012; 38: 422–433.

- Tolba, M. F, Samir, Ahmed, Abul-Ela, Magdy. 3D Arabic sign language recognition using linear combination of multiple 2D views. In: 8th International Conference on Informatics and Systems (INFOS); 14-16 May 2012; Cairo: pp. MM-6 - MM-13.

- Jalilian, Bahare, and Abdolah Chalechale. "Persian Sign Language Recognition Using Radial Distance and Fourier Transform." International Journal of Image, Graphics and Signal Processing (IJIGSP) 6, no. 1 (2013): 40.

- Singh, Amarjot, John Buonassisi, and Sukriti Jain. "Autonomous Multiple Gesture Recognition System for Disabled People." International Journal of Image, Graphics and Signal Processing (IJIGSP) 6, no. 2 (2014): 39.

- A. Samir Elons , Magdy Abull-ela, M.F. Tolba , "A proposed PCNN features quality optimization technique for pose-invariant 3D Arabic sign language recognition", Applied Soft Computing, Volume 13, Issue 4, April 2013, Pages 1646–1660.

- S. Padam Priyal, Prabin Kumar Bora , "A robust static hand gesture recognition system using geometry based normalizations and Krawtchouk moments" , Pattern Recognition, Volume 46, Issue 8, August 2013, Pages 2202–2219

- Yun Li, Xiang Chen, Xu Zhang, Kongqiao Wang, and Z. Jane Wang.," A Sign-Component-Based Framework for Chinese Sign Language Recognition Using Accelerometer and sEMG Data"Ieee Transactions On Biomedical Engineering, Vol. 59, No. 10, October 2012.

- Philippe Dreuw, Pascal Steingrube, Thomas Deselaers, Hermann Ney, "Smoothed Disparity Maps for Continuous American Sign Language Recognition" Pattern Recognition and Image Analysis Lecture Notes in Computer Science Volume 5524, 2009, pp 24-31

- Rini Akmeliawati, Melanie Po-Leen Ooi and Ye Chow Kuang, "Real-Time Malaysian Sign Language Translation using Colour Segmentation and Neural Network", IMTC 2007 - Instrumentation and Measurement Technology Conference Warsaw, Poland, 1-3, May 2007.

- Sumaira Kausar, M. Younus,Javed, Shaleeza,Sohail: "Recognition of gestures in Pakistani sign language using fuzzy classifier" , 8th International conference on Signal processing, computational geometry and artificial vision 2008 (ISCGAV'08), 20-22 August 2008, Rhodes, Greece, Pages 101-105

- Ershaed, I. Al-Alali, N. Khasawneh, and M. Fraiwan. An arabic sign language computer interface using the xbox kinect. In Annual Undergraduate Research Conf. on Applied Computing, May 2011.

- Zafrulla, H. Brashear, T. Starner, H. Hamilton, and P. Presti. American sign language recognition with the kinect. In Proceedings of the 13th International Conference on Multimodal Interfaces, ICMI '11, pages 279–286, New York, NY, USA, 2011. ACM. ISBN 978-1-4503-0641-6. doi: 10.1145/2070481.2070532.

- Helen Cooper, Eng-Jon Ong, Nicolas Pugeault, Richard Bowden, "Sign Language Recognition using Sub-Units", Journal of Machine Learning Research 13 (2012) 2205-2231.

- Xu shijian, Xu jie, Tang yijun, Shen likai, "Design of the Portable Gloves for Sign Language Recognition", Advances in Computer Science and Education Applications Communications in Computer and Information Science Volume 202, 2011, pp 171-177

- Tan Tian Swee, A.K. Ariff, S.-H. Salleh, Siew Kean Seng, Leong Seng Huat "Wireless data gloves Malay sign language recognition system", In proceeding of 6th International Conference on Information, Communications & Signal Processing, 2007;DOI: 10.1109/ICICS.2007.4449599 ISBN: 978-1-4244-0983-9

- Jayashree R. Pansare, Shravan H. Gawande, Maya Ingle, " Real-Time Static Hand Gesture Recognition for American Sign Language (ASL) in Complex Background", Journal of Signal and Information Processing, 2012, 3, 364-367 doi:10.4236/jsip.2012.33047 Published Online August 2012.

- Hervé Lahamy and Derek D. Lichti, "Towards Real-Time and Rotation-Invariant American Sign Language Alphabet Recognition Using a Range Camera", Sensors 2012, 12, 14416-14441; doi:10.3390/s121114416

- Xiangwu Zhang, Dehui Kong, Lichun Wang, Jinghua Li, Yanfeng Sun, Qingming Huang "Synthesis of Chinese Sign Language Prosody Based on Head" International Conference on Computer Science & Service System (CSSS), Nanjing, 2012, 11-13 Aug. 2012, pp:1860 – 1864, Print ISBN:978-1-4673-0721-5.

- Pakistan Association of Deaf, Pakistan, accessed at: http://www.padeaf.org, Accessed on: 20th June 2013.

- M-K. Hu.Visual pattern recognition by moment invariants. IRE Trans. on Information Theory, IT-8:pp. 179-187, 1962.