A Driving Behavior Retrieval Application for Vehicle Surveillance System

Authors: Fu Xianping, Men Yugang, Yuan Guoliang

Journal: International Journal of Modern Education and Computer Science (IJMECS)

Issue: Vol. 3, No. 2, 2011

Free access

A vehicle surveillance system provides a wide range of information services for the driver and the administrator, such as multiview road and driver surveillance videos from multiple cameras mounted on the vehicle, and video shots that monitor driving behavior and highlight traffic conditions on the road. Retrieving a specific driver behavior, such as ignoring a pedestrian, operating the infotainment system, a near collision or running a red light, is difficult in large-scale driving data. Annotation and retrieval of these video streams play an important role in visual aids for safety and in driving behavior assessment. In a vehicle surveillance system, video is the primary data source and requires effective ways of retrieving the desired clips from a database. Data from naturalistic studies allow for an unparalleled breadth and depth of driver behavior analysis that goes beyond the quantification and description of driver distraction into a deeper understanding of how drivers interact with their vehicles. To this end, this paper develops a model that classifies vehicle video data on the basis of traffic information and its semantic properties, which are described by the driver’s eye gaze orientation. Vehicle data from the OBD interface and sensors are also used to annotate the video. The annotated video data are then organized and streamed by a retrieval platform using an adaptive streaming method. The experimental results show that this model is a good example for evidence-based traffic instruction programs and driving behavior assessment.

Driving behavior, vehicle surveillance, eye gaze orientation, retrieval

Short URL: https://sciup.org/15010072

IDR: 15010072

Full text: A Driving Behavior Retrieval Application for Vehicle Surveillance System

Published Online April 2011 in MECS

An active driver safety system must monitor the vehicle state, the vehicle surroundings and driver behavior. Although significant developments have been made in recent years, traffic statistics indicate that significant improvements are still needed in this field. Open problems include, for example, how to transfer road scene video from the vehicle in real time and how to analyze video data in a vehicle surveillance system to find driver inattention and the causes of accidents.

In recent years, advances in data collection technology, data compression, data storage and communication have allowed the collection of driver and driving data from within the vehicle in relatively large-scale studies for extended unmonitored durations. In this paper, we use eye gaze orientation as the retrieval parameter to manage driving behavior data and to retrieve and understand the driver’s intent under hazardous scenarios from a driving behavior database, which includes vehicle data from the ECU and surrounding data from the vehicle surveillance system.

Driving behavior databases have emerged as a natural solution for managing the vast and growing volume of vehicle surveillance information. Real-world driving data collection has been instrumental in aiding the understanding of the driver behaviors and traffic conditions that lead to crashes, and in finding how these factors are present in everyday driving. Understanding and fully utilizing these data by manual searching, however, is extremely time consuming and labor intensive, so it requires unique data treatment and analysis approaches as well as an awareness of their subtle differences. Retrieval is an effective method to extract useful information from large-scale driving data. Typically, video and image retrieval in a multimedia database is based on keywords, features and/or concepts [1]. Given the tremendous amount of video data, capabilities to support efficient and effective video retrieval have become increasingly important. There are two general approaches to video retrieval: text-based approaches apply traditional text retrieval techniques to video annotations or descriptions, whereas content-based approaches apply image processing techniques to extract image features and retrieve relevant video [2]. The latter approach represents video with nonverbal descriptions that can be reliably computed from the video. Such descriptions are image features based on color, shape, and texture. Conventional content-based image retrieval (CBIR) methods use these image features to define image similarity [3, 4]. In general, in both text-based and content-based image retrieval systems, the bottleneck for retrieval efficiency is the semantic gap between the users’ high-level interpretations of images and the low-level image features stored in the database for indexing and querying [5, 6].

In a vehicle surveillance application, the road scene video, which is related to driver behavior, can be used as a connection between video features and driver behavior. In the vehicle, the driver’s eye gaze provides an accurate proxy for determining driver attention, and it is synchronized with the road scene image to monitor the traffic situation and driver intent. GPS coordinates and 3D accelerometer data are also synchronized with the road scene video by time stamp. Therefore, all the driving behavior information is connected together to estimate driver intent.

[Figure 1 labels: 3D accelerometer, eye gaze tracking camera, driver behavior camera, road scene camera, GPS]

Fig. 1. The digital vehicle surveillance system comprises one scene camera aimed at the road ahead, one camera aimed at the driver to record driver behavior, and one camera aimed at the driver’s face to estimate the driver’s eye gaze orientation. A car PC in the trunk calculates the eye gaze orientation and analyzes driving behavior. The OBD interface, GPS and 3D accelerometer are also connected to the car PC to obtain the vehicle position and state.

Because video is the most complex and largest-volume form of driving behavior data in a vehicle surveillance system, developing an application for accessing information (e.g., the videos in which the driver is inattentive) requires managing the properties of digital video data for indexing purposes. In a conventional database management system (DBMS), access to data is based on distinct attributes of well-defined data developed for a specific application [7]. In this paper, the attributes of eye gaze orientation, which represent driver behavior, are extracted to define the video attributes. We propose an adaptive streaming method that separates video into chunks in order to extract the driver’s eye gaze information contained in the video. The chunk information is then modeled to support both user queries for content and data models for storage. From a structural perspective, a road scene image can be treated as traffic data consisting of a finite-length sequence of synchronized GPS and vehicle information. The client can retrieve and view traffic video from an HTTP web server using a client adaptive streaming service application.

The rest of the paper is organized as follows. Section II describes our proposed data collection and annotation method. Section III introduces our proposed video retrieval and streaming scheme. In Section IV, we present our experimental results. We conclude and state our findings in Section V.

II. DATA COLLECTION AND ANNOTATION

A. Driving Surveillance System

Driving behavior data were collected in a real driving environment with an in-car driving surveillance and real-time processing system. This system included a car PC with three video channels: the road scene image, the driver’s behavior, and eye gaze tracking images (Fig. 1). There were three cameras: a wide-view camera mounted in the middle of the windshield in front of the rearview mirror for the traffic scene, an infrared camera mounted on the middle of the dashboard for face and eye gaze tracking, and a third camera mounted on the right side of the windshield for monitoring the driver’s body movements. The eye gaze tracking camera was the main driver-focused sensor, a rectilinear color camera mounted on the middle of the dashboard facing the driver and providing 30 frames/s at 720×576 resolution. The car PC was connected to the OBD interface and to sensors (GPS and 3D accelerometer) that detect the vehicle position and vehicle status. The OBD interface provided most of the data related to the vehicle.

When gathering continuous data from a vehicle, the car PC generally records data to persistent media (hard disk), and these data are periodically harvested for subsequent analysis. Some studies can also send selected subsets of data over cellular wireless media, but in systems with continuous video and sensor data collection, the rate of gathering data far exceeds the capacity of the cellular wireless medium. The data rate is dominated by the compressed video, which typically accounts for 90%-95% of the data. Common video data rates are 6-8 megabytes per minute, so a passenger vehicle can easily record in excess of 20 gigabytes (GB) of data in a month. To accommodate the storage requirements of these large studies, a substantial investment in data center infrastructure is necessary. After the data are copied from the in-car surveillance system, they are available on network file servers for subsequent analysis. At this point, how important segments are retrieved from the volume data in the storage architecture can have considerable implications for the project.

The eye gaze tracking system collects and processes the video in real time to extract eye gaze parameters based on facial features, which can be used to retrieve driving behavior from the stored data.
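The storage figures quoted above can be checked with a short calculation. The sketch below is only illustrative: the 6-8 MB/min video rate and the 90%-95% video share come from the text, while the number of driving hours per month is a hypothetical input that the paper does not state.

```python
# Rough storage estimate for continuous in-car recording.
# The video rate and video share are taken from the text; the driving
# hours per month are an assumed, hypothetical input.

def monthly_storage_gb(video_mb_per_min: float,
                       driving_hours_per_month: float,
                       video_share: float = 0.9) -> float:
    """Return the estimated total data volume per month in gigabytes.

    video_share is the fraction of all recorded data that is video, so the
    total volume is the video volume divided by that fraction.
    """
    if not 0.0 < video_share <= 1.0:
        raise ValueError("video_share must be in (0, 1]")
    video_mb = video_mb_per_min * 60.0 * driving_hours_per_month
    total_mb = video_mb / video_share
    return total_mb / 1024.0


# Example: 7 MB/min and an assumed 50 hours of driving in one month.
estimate = monthly_storage_gb(7.0, 50.0)
print(f"about {estimate:.1f} GB per month")
```

Under these assumptions the total lands slightly above 20 GB, which is consistent with the order of magnitude given in the text.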

B. Eye Gaze Parameters Calculation

The large amount of information in video data makes manual indexing (information extraction) labor intensive, time consuming and prone to errors. To search for and locate meaningful segments related to driving behavior, we use eye gaze parameters to label the video automatically.

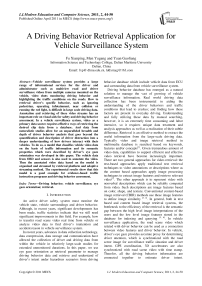

Face detection is the first step in our eye gaze orientation detection system. Its purpose is to localize and extract the face region and facial features from the background; these are then fed into the face recognition system for identification. For this processing we used FDLIB [8], a face detection library for C++. For each frame of the video, the FDLIB library automatically detects facial features, without any preset markers worn on the face, and these features can be used to extract the eye and head movements (e.g., yaw, pitch, and roll) (Fig. 2(a)-(c)).

Fig. 2. Detected facial features and eye gaze parameters. (a) Detected facial features when the driver looks at the left side mirror. (b) Detected facial features when looking at the rearview mirror. (c) Detected facial features when looking at the right side mirror. (d) The driver’s eye gaze parameters are determined by how the eyeball axis and face structure change when the head and eyeballs move.

We adopted a noncontact computer vision gaze tracking system to obtain the driver’s eye gaze parameters [9]. The driver’s gaze direction is determined by the head position and the virtual eyeball position in 3D space (Fig. 2(d)). The virtual eyeball position is the middle point of the axis of the two eyeballs, which is represented by the projection of the middle point of the line that passes through the left and right eyeball centers. In Fig. 2(d), point $Q$ is the middle point of the outside corners of the two eyes, and point $P$ is the middle point of the left and right iris centers in the eye image. The width between the outside corners of the eyes is $W_{eye}$. The height of the nose is $H_{nose}$, the distance from point $Q$ to the nose tip. $M$ is the middle point between the nose tip and the line through the two irises, and $N$ is the middle point between the nose tip and the eyeball axis between the outside corners of the two eyes.

The horizontal view orientation is determined by the iris horizontal displacement vector ParaX, defined by

$$\mathrm{ParaX} = \frac{Q - P}{W_{eye}} \qquad (1)$$

where $Q - P$ represents the iris horizontal displacement and $W_{eye}$ represents the head yaw movement. Because the displacement $Q - P$ is normalized by $W_{eye}$, the effect of horizontal changes in the size of the face in the image is negligible.

The vertical view orientation is determined by the iris vertical displacement vector ParaY, defined by

$$\mathrm{ParaY} = \frac{M - N}{H_{nose}} \qquad (2)$$

where $M - N$ represents the iris vertical displacement and $H_{nose}$ represents the head pitch movement.

In this method, $W_{eye}$ and $H_{nose}$ compensate for head rotation, and the displacement vectors $Q - P$ and $M - N$ represent the eyeball movement. Therefore, the driver’s eye gaze orientation can be estimated from ParaX and ParaY, which are used to annotate the video.
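Equations (1) and (2) can be illustrated with a small sketch that computes ParaX and ParaY from 2D landmark positions. Several details are assumptions rather than the authors' implementation: the `Point` type and function names are hypothetical, the horizontal displacement is taken from the x coordinates and the vertical displacement from the y coordinates, and M and N are interpreted as the midpoints between the nose tip and P and Q, respectively.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Point:
    """Pixel coordinates of a facial landmark (x to the right, y downward)."""
    x: float
    y: float


def midpoint(a: Point, b: Point) -> Point:
    return Point((a.x + b.x) / 2.0, (a.y + b.y) / 2.0)


def gaze_parameters(left_corner: Point, right_corner: Point,
                    left_iris: Point, right_iris: Point,
                    nose_tip: Point) -> Tuple[float, float]:
    """Return (ParaX, ParaY) following equations (1) and (2)."""
    q = midpoint(left_corner, right_corner)   # Q: middle of the outer eye corners
    p = midpoint(left_iris, right_iris)       # P: middle of the iris centers
    w_eye = math.hypot(right_corner.x - left_corner.x,
                       right_corner.y - left_corner.y)
    h_nose = math.hypot(nose_tip.x - q.x, nose_tip.y - q.y)
    if w_eye == 0.0 or h_nose == 0.0:
        raise ValueError("degenerate landmarks: W_eye and H_nose must be non-zero")
    m = midpoint(nose_tip, p)                 # M: assumed midpoint of nose tip and P
    n = midpoint(nose_tip, q)                 # N: assumed midpoint of nose tip and Q
    para_x = (q.x - p.x) / w_eye              # equation (1), horizontal component
    para_y = (m.y - n.y) / h_nose             # equation (2), vertical component
    return para_x, para_y


# Hypothetical landmark positions in pixels, with the irises shifted toward
# the left corner of the image.
print(gaze_parameters(Point(100, 100), Point(200, 100),
                      Point(120, 100), Point(160, 100),
                      Point(150, 160)))
```

Because both parameters are ratios of distances measured in the same image, they do not depend on the pixel scale of the face, which is the normalization property described above.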

C. Driving Behavior Analysis Database

Driving behavior analysis is a real-time procedure that includes data acquisition, processing and evaluation. The driving behavior analysis database provides the interface for collecting, in real time, the vehicle movement, driving operations, driver status and other dynamic data through sensors. Defining the data collection frequency and adopting time and position as the major and minor indexes, respectively, makes it possible to manage and store data from different sources. By setting analysis conditions, the user can analyze the behavior data and draw conclusions for a specific road section and time period.

The driving behavior analysis database was developed as a tool to collect behavior data and is used to support research on driving performance. Therefore, it should cover most of the information involved in driving, such as driver demographics, vehicle position and movement data, driving operation data, road alignment data and other related information. The database includes the following information.

Drivers are the main subjects of the in-car surveillance system. Basic information about each driver, including age, sex, marital status, years of driving experience, occupation, educational background and other personal demographics, was collected by questionnaire before participation in the experiments.

The horizontal (latitude and longitude) and vertical (elevation) data from the GPS and 3D accelerometer are used to measure the spatial position of the vehicle at any moment. The steering angle, velocity, and vertical and lateral acceleration or deceleration are used to measure the movement status of the vehicle. The distance headway or velocity gap is used to measure the movement characteristics between the vehicle and other cars.

The operation data for the gear, throttle, steering wheel, brake, clutch, lights and other in-vehicle controls, obtained from the ECU, make up the data groups used to analyze operation characteristics.

In addition to the fundamental data mentioned above, eye gaze parameters and data from in-vehicle collection devices and other sources are also collected.

III. VIDEO RETRIEVAL AND STREAMING

A. System Architecture

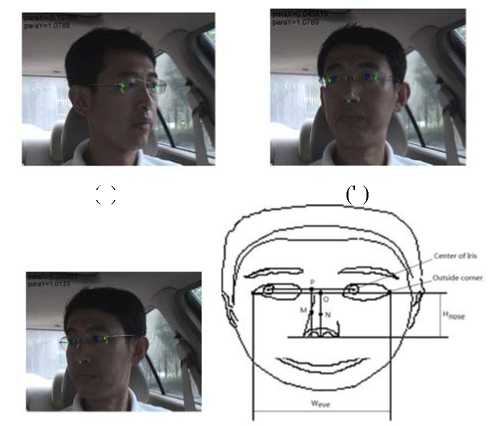

Video annotated with the driver’s eye gaze parameters and vehicle information is managed by the driving behavior database, which is a multimedia database. The architecture of the system is shown in Fig. 3. The driver’s eye gaze parameters are calculated in real time by the control unit of the car PC in the vehicle. The traffic scene image, GPS and vehicle information are synchronized by time stamp. All the vehicle information and video metadata are sent over the cellular wireless network by the communication unit of the surveillance system to the multimedia database and retrieval platform.

Fig. 3. The structure of the vehicle surveillance and retrieval system. Traffic and driver information, such as the vehicle state, driver head and eye gaze orientation, road scene image and GPS coordinates, is sent to the multimedia database. The vehicle can receive traffic and route suggestions from the retrieval platform. The driving behavior database and retrieval platform manage all the information transferred from the vehicle. Users can access this information through an HTTP web server using adaptive streaming technology.
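The time-stamp synchronization described in this section can be pictured as matching each video frame to the sensor sample recorded closest to it in time. The sketch below shows one way to do that with a binary search. The function name and the sample data are hypothetical, and the paper does not specify which matching rule the authors used.

```python
from bisect import bisect_left
from typing import Sequence, TypeVar

T = TypeVar("T")


def nearest_sample(timestamps: Sequence[float], samples: Sequence[T], t: float) -> T:
    """Return the sample whose time stamp is closest to t.

    timestamps must be sorted in ascending order and aligned with samples.
    When t is exactly halfway between two samples, the earlier one is returned.
    """
    if not timestamps or len(timestamps) != len(samples):
        raise ValueError("timestamps and samples must be non-empty and equal in length")
    i = bisect_left(timestamps, t)
    if i == 0:
        return samples[0]
    if i == len(timestamps):
        return samples[-1]
    before, after = timestamps[i - 1], timestamps[i]
    return samples[i] if (after - t) < (t - before) else samples[i - 1]


# Hypothetical GPS fixes recorded once per second (time in seconds).
gps_times = [0.0, 1.0, 2.0, 3.0]
gps_fixes = ["fix0", "fix1", "fix2", "fix3"]

# Attach the nearest GPS fix to three video frame time stamps.
for frame_time in (0.40, 0.60, 2.95):
    print(frame_time, nearest_sample(gps_times, gps_fixes, frame_time))
```

The same lookup could be reused for OBD and accelerometer samples, since each source only needs its own sorted list of time stamps.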

Users can access content such as vehicle status, traffic conditions, driver behavior and suggested travel routes, and retrieve video data, through the HTTP web server.

The video data from the vehicle can be transferred in real time over the Internet using an adaptive streaming strategy. Video from the vehicle is transmitted in a flexible format that can generate prioritized versions of the source data on the fly, e.g., using scalable video coding. To enable adaptive transmission of prioritized content, we propose deploying a multimedia database to manage the metadata of the traffic video, together with track hinting to generate multiple hint tracks, which allows real-time adaptation.

A layered network architecture based on an appropriate network technology such as the Internet makes it possible to transmit several streams over one channel because of its high transmission capacity. Thus, new and flexible data processing mechanisms become realizable. For example, video-based vehicle surveillance systems that currently process the data right at the source and transmit the processed data as short control metadata to the database and retrieval platform can be designed in a more flexible way: data processing can be performed not only in the vehicle but also in a central unit or at the destination, according to the system requirements. Among the present technologies, the best candidate for realizing our goal turned out to be HTTP, the Internet standard. The Internet is the best candidate among frame-based computer networking technologies for wide area networks because of its wide availability, high transmission capacity and low cost. However, some modifications have to be applied to achieve the deterministic behavior required by time-constrained audio/video communication systems. To provide QoS guarantees for real-time and multimedia applications, we apply a priority segmentation concept based on adaptive streaming.

B. Preprocessing and Retrieval

Once data preparation and storage are complete, researchers are tasked with transitioning the real world driving data into a production dataset, a process which is typically composed of two steps. The first one is preprocessing of the data. The second one is the completion of any data annotation efforts that are necessary to answer the research questions.

Data pre-processing is needed for the sensor variables and camera data collected in a real-world driving situation, since the data can be noisy. This pre-processing includes the application of digital filters, the use of heuristic algorithms, and the development of confidence levels for particular metrics. Pre-processing is also useful for combining the information from redundant data sources into a single measure that uses the best information available from the ECU, sensors and cameras. When pre-processing these datasets, it is important to keep in mind that their uses can include the examination of driver skills and training, distraction, responses to critical situations, interactions with advanced vehicle systems, traffic flow, aggressive driving, and algorithm testing, among many others. Similarly, the data analysis approaches may vary, including searches for patterns within the data, summary statistics, visualization of the data, simulation based on extractions from the data, time series analysis, and case studies of particular events.
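As one illustration of the digital filtering step mentioned above, the sketch below removes isolated spikes from a sensor signal with a sliding median. The text does not say which filters the authors applied, so the choice of a median filter, the window size and the sample values are all assumptions.

```python
from statistics import median
from typing import List, Sequence


def sliding_median(values: Sequence[float], window: int = 3) -> List[float]:
    """Smooth a signal with a centered sliding median.

    The window is truncated at both ends of the signal, so the output has
    the same length as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    filtered = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        filtered.append(median(values[lo:hi]))
    return filtered


# Hypothetical speed readings in km/h with one spurious spike.
speed = [40.0, 41.0, 120.0, 42.0, 43.0]
print(sliding_median(speed))
```

A median is used here instead of a moving average because a single outlier does not shift the median of its neighborhood, whereas it would pull an average toward the spike.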

Once data pre-processing is complete, data annotation takes place. Data annotation is supported by the availability of video of the driver and the road scene. There are two complementary approaches. The first is the validation of flagged segments of data, an automated check of whether segments that exhibit particular signatures indeed represent an event of interest (e.g., a crash or near crash). The second is the transformation of the information contained in the video into a format that allows for statistical analyses. For example, the eye gaze orientation parameters can be coded as a function of time, along with the occurrence of distractions or the presence and extent of fatigue. When finalized, these codes are treated as additional data, on par with data collected directly by the in-car surveillance system.

Automated coding of video streams takes the form of machine vision technology, which requires manual validation prior to widespread use. Applications include lane tracking, traffic signal state identification, eye and face monitoring, and drowsiness detection. Automated annotation is very useful when reliable, but its reliability is often low when lighting and environmental conditions deteriorate. In contrast, manual annotation of video spreads the cost of the process over the life of the project; it is time consuming but can remain cost-effective even when employed on very large-scale datasets. Manual annotation requires a good supervision structure, training, scheduling, and quality control procedures. Consequently, its reliability can vary and is a function of many of these factors. Given time constraints, it is often unrealistic to annotate all the video that is available. In this paper, driving events are annotated manually with eye gaze parameters for some critical event segments. Driving events and road scene images can be selected as a proportion of the eye gaze observed. As previously mentioned, a key construct of real driving behavior data collection is the unobtrusive observation of driving behaviors. When appropriately used, this scheme allows researchers to gather data with a high level of validity and to directly control the environment in which the driver is observed, such as the location of adjacent vehicles and obstacles.

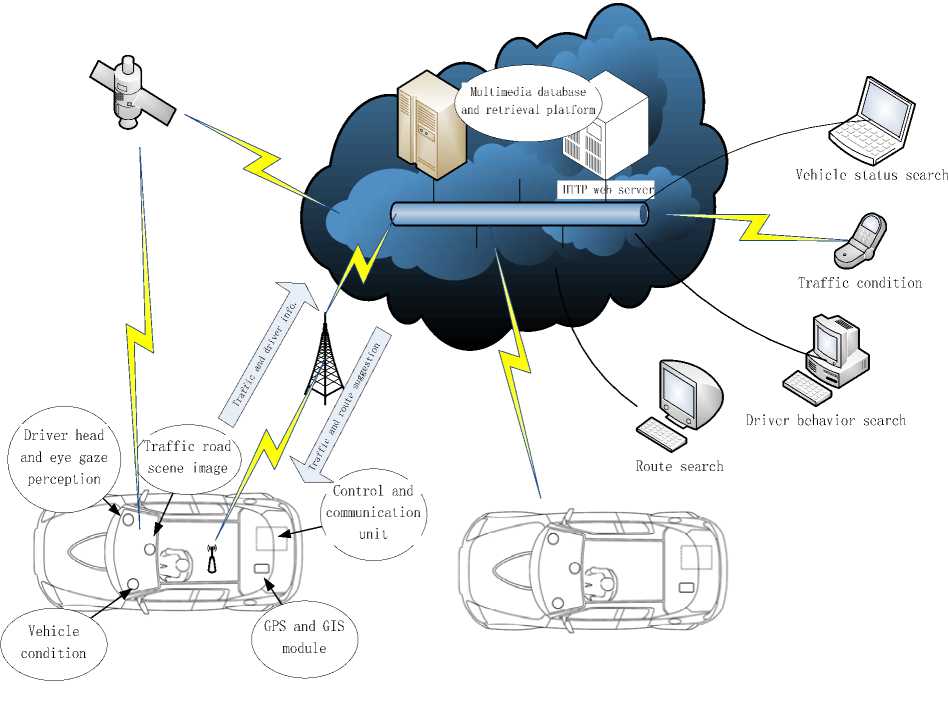

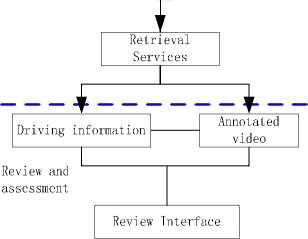

C. Services Delivery Platform

Retrieval services are provided by the traffic multimedia services delivery platform, which manages eye gaze parameters, driving information and video chunks; all of this information is synchronized by time stamp (Fig. 4). The review and assessment module includes the client application interface, vehicle status, driving behavior and annotated video. Adaptive streaming is an important method for delivering video in a vehicle surveillance system.

Adaptive streaming is a hybrid delivery method that acts like streaming but is based on HTTP progressive download, which makes it efficient for transferring video over a cellular wireless network. In this paper, the vehicle cellular wireless network adaptive stream is a form of adaptive streaming specialized for video retrieval in a vehicle surveillance system. Although the codec technologies, formats, and encryption schemes are customized, the adaptive streaming method relies on HTTP as the transport protocol and performs the media download as a long series of very small progressive downloads rather than one big progressive download.

Fig. 4. Data processing framework. Traffic multimedia services are built from data from the vehicle surveillance system, which processes driver eye gaze parameters and traffic data and synchronizes them. The client application can retrieve and access the services based on driving information and annotated video.

In our vehicle surveillance system, video is cut into many short segments ("chunks") according to the eye gaze annotation and the key frames, which are related to vehicle position information, and encoded in the desired delivery format. Chunks are typically 2 to 4 seconds long and are labeled with head direction, eye gaze orientation and GPS coordinates. At the road scene video codec level, this typically means that each chunk is cut along video GOP (Group of Pictures) boundaries (each chunk starts with a key frame of the road scene video) and has no dependencies on past or future chunks/GOPs. This allows each chunk to be decoded later independently of the other chunks.
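The chunking rule described above, in which every chunk starts on a key frame and covers a few seconds of video, can be sketched as follows. This is a simplified illustration that works on key frame time stamps only. The function name, the minimum chunk length parameter and the example time stamps are assumptions, and the eye gaze annotation that also influences the cut points in the paper is not modeled.

```python
from typing import List, Sequence, Tuple


def chunk_boundaries(keyframe_times: Sequence[float],
                     end_time: float,
                     min_len: float = 2.0) -> List[Tuple[float, float]]:
    """Group GOPs into chunks that always start on a key frame.

    keyframe_times are the sorted time stamps (seconds) of the key frames.
    A new chunk is started at the first key frame that lies at least
    min_len seconds after the start of the current chunk. The last chunk
    runs to end_time and may be shorter than min_len.
    """
    chunks: List[Tuple[float, float]] = []
    if not keyframe_times:
        return chunks
    start = keyframe_times[0]
    for t in keyframe_times[1:]:
        if t - start >= min_len:
            chunks.append((start, t))
            start = t
    if end_time > start:
        chunks.append((start, end_time))
    return chunks


# Hypothetical clip: one key frame per second, 7 seconds long.
print(chunk_boundaries([0, 1, 2, 3, 4, 5, 6], end_time=7, min_len=2.0))
```

With this rule the upper bound on chunk length depends on the key frame interval of the encoder, so keeping chunks within 2 to 4 seconds requires key frames at least every 2 seconds.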

The encoded chunks are hosted on the multimedia database and HTTP web server (Fig. 3). A client requests the chunks from the web server in a linear fashion and downloads them using plain HTTP progressive download. The client can request several services, including a vehicle status search, a traffic condition request, a search for driver behavior in a specific vehicle, and a route suggestion request. As the requested chunks are downloaded, the client plays them back in linear order. Because the chunks are carefully encoded without any gaps or overlaps between them, they play back as seamless video.

The "adaptive" part of the solution comes into play when the video/audio source is encoded at multiple bit rates, generating multiple chunks of various sizes for each 2 to 4 seconds of video. The client can choose between chunks of different sizes. Because web servers usually deliver data as fast as the network bandwidth allows, the client can easily estimate the available bandwidth and decide ahead of time whether to download larger or smaller chunks. The size of the playback/download buffer is fully customizable.
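The client-side decision described above can be sketched as two small steps: estimate the bandwidth from the most recent chunk download, then pick the highest encoded bit rate that fits. The three bit rates are the ones used in the experiment section of this paper. The function names, the 0.8 safety margin and the use of 1 Kbps = 1000 bit/s are assumptions made for illustration, not the authors' client logic.

```python
from typing import Sequence

# Encoded bit rates (Kbps) used in the experiment section of the paper.
BITRATES_KBPS = (256, 512, 768)


def estimate_bandwidth_kbps(chunk_bytes: int, download_seconds: float) -> float:
    """Estimate throughput from the size and download time of one chunk."""
    if download_seconds <= 0:
        raise ValueError("download_seconds must be positive")
    return chunk_bytes * 8 / 1000 / download_seconds


def choose_bitrate(bandwidth_kbps: float,
                   bitrates: Sequence[int] = BITRATES_KBPS,
                   safety: float = 0.8) -> int:
    """Pick the highest bit rate that fits within a fraction of the bandwidth.

    safety is a hypothetical margin that leaves headroom for fluctuations.
    If no bit rate fits, the lowest one is returned so playback can continue.
    """
    usable = bandwidth_kbps * safety
    candidates = [b for b in sorted(bitrates) if b <= usable]
    return candidates[-1] if candidates else min(bitrates)


# Example: the previous chunk was 96,000 bytes and took 2 seconds to download.
bandwidth = estimate_bandwidth_kbps(96_000, 2.0)
print(bandwidth, choose_bitrate(bandwidth))
```

Repeating this decision before each chunk request lets the client step down when the cellular link degrades and step back up when it recovers.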

IV. EXPERIMENT AND RESULT

We constructed an experimental environment to verify the driving behavior database architecture. An instrumented car was used to collect and process video data (Fig. 1).

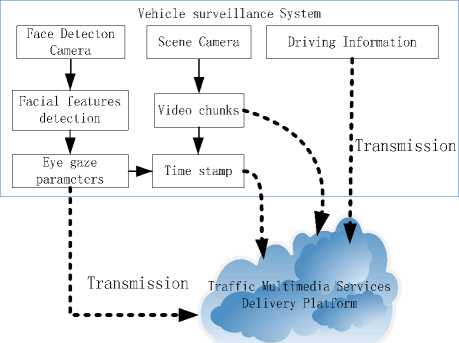

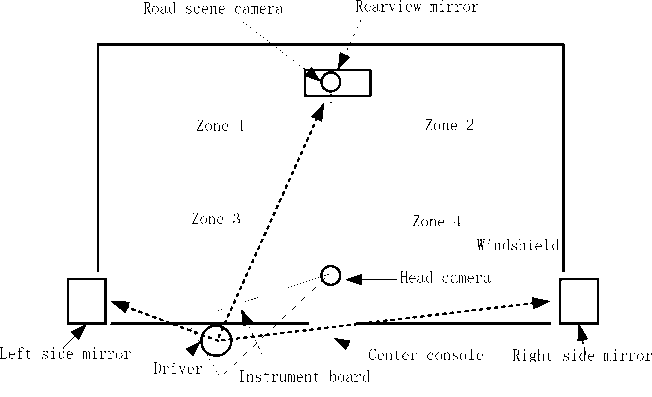

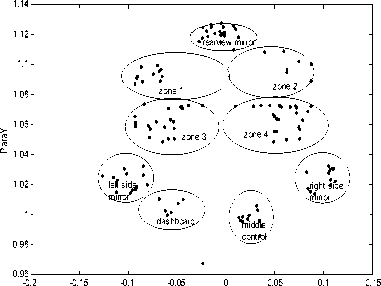

The driver’s eye gaze orientation parameters are calculated using equations (1) and (2). To synchronize the eye gaze parameters with the road scene, we manually label the eye gaze parameters with the eye gaze orientation. The eye gaze orientations used for calibration and annotation are shown in Fig. 5, which presents the most useful fixation locations during driving. The annotated eye gaze orientation results are shown in Fig. 6. In these figures, zone 1 is the top left region of the windshield, and zones 2, 3 and 4 are the top right, bottom left and bottom right regions of the windshield, respectively. We also collected data when the subject looked at the dashboard (instrument panel) and the center console.

In the driving behavior analysis database, every chunk is annotated with a hash code, the key frame sequence number of the scene video, the GPS coordinates, the head orientation and the eye gaze direction. All of the traffic information and videos are synchronized by time stamp.
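The per-chunk annotation listed above maps naturally onto a small record type, and retrieval by eye gaze then becomes a filter over those records. The sketch below is a minimal in-memory illustration. The field names, the use of SHA-256 as the hash code, the zone labels and the sample coordinates are assumptions, and a real deployment would store the records in the multimedia database rather than in a Python list.

```python
import hashlib
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class ChunkAnnotation:
    hash_code: str          # digest of the chunk bytes (SHA-256 assumed here)
    keyframe_seq: int       # key frame sequence number in the scene video
    timestamp: float        # chunk start time in seconds
    latitude: float
    longitude: float
    head_orientation: str
    gaze_zone: str


def annotate_chunk(chunk_bytes: bytes, keyframe_seq: int, timestamp: float,
                   latitude: float, longitude: float,
                   head_orientation: str, gaze_zone: str) -> ChunkAnnotation:
    """Build the annotation record for one encoded video chunk."""
    digest = hashlib.sha256(chunk_bytes).hexdigest()
    return ChunkAnnotation(digest, keyframe_seq, timestamp, latitude,
                           longitude, head_orientation, gaze_zone)


def find_chunks(annotations: Iterable[ChunkAnnotation], gaze_zone: str,
                start: Optional[float] = None,
                end: Optional[float] = None) -> List[ChunkAnnotation]:
    """Return chunks with the given gaze zone, optionally limited to a time range."""
    hits = [a for a in annotations
            if a.gaze_zone == gaze_zone
            and (start is None or a.timestamp >= start)
            and (end is None or a.timestamp <= end)]
    return sorted(hits, key=lambda a: a.timestamp)


# Hypothetical index of three chunks; all values are made up for illustration.
index = [
    annotate_chunk(b"chunk-0", 0, 0.0, 38.870, 121.530, "front", "zone 1"),
    annotate_chunk(b"chunk-1", 60, 2.0, 38.871, 121.531, "left", "left side mirror"),
    annotate_chunk(b"chunk-2", 120, 4.0, 38.872, 121.532, "front", "zone 1"),
]
print([a.keyframe_seq for a in find_chunks(index, "zone 1")])
```

The key frame sequence numbers returned by such a query identify which chunks the client should request from the web server.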

Real-time index acquisition is more complicated than static index acquisition. The GPS equipment provided vehicle position data in the real-road experiment, and the real-time coordinates and elevation were used to describe the driving environment. The velocity, acceleration and steering angle can be collected using different sensors under real-road conditions. These data can be obtained directly as annotation results during driving. The lateral deviation can be obtained by processing the image of the road lane and edge, together with data from the 3D accelerometer.

To test the adaptive streaming performance over a cellular wireless network while moving, the same clip of road scene video was encoded at bit rates of 256 Kbps, 512 Kbps and 768 Kbps. The image format is CIF. We uploaded these videos to the server over a TD-SCDMA wireless network and accessed them via HTTP. The experimental results for the bit rate and quality of adaptive streaming are shown in Table I.

TABLE I

Adaptive streaming via TD-SCDMA wireless network

| No. | Encoded bit rate (Kbps) | Resolution | Upload (Kbps) | Adaptive streaming (Kbps) |
|---|---|---|---|---|
| 1 | 256 | CIF | 150-200 | 150-250 |
| 2 | 512 | CIF | 200-300 | 200-500 |
| 3 | 768 | CIF | 200-500 | 200-600 |

Fig. 5. Illustration of the eye gaze orientations in the in-car driving environment. The road scene camera is fixed on the back of the rearview mirror. The left side mirror, right side mirror and rearview mirror are used to annotate and calibrate the driver’s eye gaze orientation. In this paper, eye gaze parameters are labeled manually with these eye gaze orientations.

In vehicle surveillance scenarios, it is useful to play back the metadata together with the video data so that a client application can get more information about the surveillance system, such as the speed limit, vehicle position on the map, turn signal status and accelerator state. When a client requests a video slice from the IIS Web server, the server retrieves the appropriate starting chunk in the multimedia database, then lifts the chunks out of the file and sends them over the wire to the client.

[Figure 6 axis label: ParaX]

Fig. 6. The annotated eye gaze results. The eye gaze measured when the driver looks at the rearview mirror, left side mirror and right side mirror is used to calibrate the system. Zone 1 is the top left region of the windshield, and zones 2, 3 and 4 are the top right, bottom left and bottom right regions of the windshield, respectively. Data recorded when the subject looks at the instrument panel and center console are also collected.

V. CONCLUSION

We have developed and implemented a vehicle video surveillance system that includes video annotation and driving behavior retrieval based on a driving behavior database. An adaptive streaming method for the vehicle surveillance system is also presented. The streaming and video annotation methods meet the needs of our driving behavior studies. Our vehicle surveillance architecture has several advantages: it tracks the driver’s view orientation in a real-world driving environment, and the road scene images are synchronized with the eye gaze orientation. Client applications retrieve and play back video and vehicle information using the adaptive streaming method. Although these techniques were developed for vehicle status and driving intent applications, they can be applied in many other driving behavior studies.

In future studies, the system will provide valuable objective data to help us better understand what the driver is looking at and what the traffic situation is. Based on those data and analyses, we expect to establish associations between certain patterns of driving behavior and driving performance in order to develop evidence-based traffic instruction programs.

Acknowledgment

Supported in part by “the Fundamental Research Funds for the Central Universities”.

References

[1] Y. Alemu, K. Jong-bin, M. Ikram, and K. Dong-Kyoo, "Image Retrieval in Multimedia Databases: A Survey," in Intelligent Information Hiding and Multimedia Signal Processing, Fifth International Conference on, 2009, pp. 681-689.

[2] H. Jiang and A. K. Elmagarmid, "WVTDB-a semantic content-based video database system on the World Wide Web," Knowledge and Data Engineering, IEEE Transactions on, vol. 10, pp. 947-966, 1998.

[3] C. Shu-Ching and R. L. Kashyap, "A spatio-temporal semantic model for multimedia database systems and multimedia information systems," Knowledge and Data Engineering, IEEE Transactions on, vol. 13, pp. 607-622, 2001.

[4] S. Clippingdale, M. Fujii, and M. Shibata, "Multimedia Databases for Video Indexing: Toward Automatic Face Image Registration," in Multimedia, 11th IEEE International Symposium on, 2009, pp. 639-644.

[5] H. Weiming, D. Xie, F. Zhouyu, Z. Wenrong, and S. Maybank, "Semantic-Based Surveillance Video Retrieval," Image Processing, IEEE Transactions on, vol. 16, pp. 1168-1181, 2007.

[6] L. Hung-Yi and C. Shih-Ying, "Indexing and Querying in Multimedia Databases," in Intelligent Information Hiding and Multimedia Signal Processing, Fifth International Conference on, 2009, pp. 475-478.

[7] C. Shi-Kuo, V. Deufemia, G. Polese, and M. Vacca, "A Normalization Framework for Multimedia Databases," Knowledge and Data Engineering, IEEE Transactions on, vol. 19, pp. 1666-1679, 2007.

[8] W. Kienzle, G. Bakir, M. Franz, and B. Schölkopf, "Face Detection - Efficient and Rank Deficient," Advances in Neural Information Processing Systems, vol. 17, pp. 673-680, 2005.

[9] T. Miyake, T. Asakawa, T. Yoshida, T. Imamura, and Z. Zhong, "Detection of view direction with a single camera and its application using eye gaze," in Industrial Electronics, 2009. IECON '09. 35th Annual Conference of IEEE, 2009, pp. 2037-2043.