A Novel Medical Image Registration Algorithm for Combined PET-CT Scanners Based on Improved Mutual Information of Feature Points

Authors: Shuo JIN, Hongjun WANG, Dengwang LI, Yong YIN

Journal: International Journal of Engineering and Manufacturing (IJEM) @ijem

Article in issue: 5, vol. 1, 2011.

Free access

Accurate registration of PET and CT images is an important component in oncology, so we aim to develop an automated registration algorithm for PET and CT images acquired by different systems. These two modalities offer rich complementary information: CT provides specificity to anatomic findings, and PET provides precise localization of metabolic activity. In this paper, we propose an improved registration method that can accurately align PET and CT images. The registration algorithm includes two stages. The first stage deforms the PET image using B-spline Free Form Deformation (FFD) and consists of three independent steps: the feature points of the PET and CT images are first extracted in two preprocessing steps, and the PET image is then deformed by B-spline FFD guided by the feature points of the CT image. The second stage registers the PET and CT images using the Mutual Information (MI) of feature points, with a Particle Swarm Optimization (PSO) algorithm combined with the Powell algorithm to search for the optimal registration parameters.

Positron Emission Tomography (PET), Computed Tomography (CT), Registration, Feature Points, Freeform Deformation, Mutual Information

Short URL: https://sciup.org/15014211

IDR: 15014211

Text of the scientific article: A Novel Medical Image Registration Algorithm for Combined PET-CT Scanners Based on Improved Mutual Information of Feature Points

Noninvasive imaging technologies capture different aspects of the disease process for clinical diagnosis and fall into two types: structural and functional imaging. Computed tomography (CT) and magnetic resonance (MR) imaging provide high-resolution images with anatomical information, whereas single-photon emission computed tomography (SPECT) and positron emission tomography (PET) provide functional information, but with coarser resolution[1]. Registering these two types of images addresses the insufficiency of the information provided by a single modality[2]. Integrating anatomical and functional datasets to improve both qualitative detection and quantitative determination in patients with a suspected cancerous lesion is expected to provide much more information.

Recently, the use of combined PET/CT systems has increased sharply in many radiological applications because they offer better image quality and higher throughput than conventional standalone PET scanners. PET-CT using FDG, which combines anatomical and functional imaging, is now recognized as an effective tool for diagnosis, prognosis, staging and assessment of response to therapy in oncological imaging. Precise image registration can help medical experts diagnose disease more efficiently and supports research on long-term treatment and surgery planning. To achieve this objective, registration of images from two modalities is a very important issue. A variety of methods have been proposed to register multimodal images. For instance, B-spline based deformable registration algorithms are used to estimate deformation fields in [3]. The tower algorithm based on B-splines[4] registers medical images from rigid to elastic, proceeding from the whole image to its details. A finite element model (FEM) has been used for non-rigid registration of medical images[5], significantly improving the accuracy of the coregistered images. The adaptive combination of intensity and gradient field mutual information (ACMI)[6] combines the two kinds of information through a nonlinear weighting function and is more robust than traditional methods.

Currently, the main disadvantage of registration is the potential misalignment between the structures of the cancer or the defined region of interest (ROI) in the PET and CT data[7]. This misalignment can significantly affect the results and increase the chance of diagnostic misinterpretation. The main cause of this misregistration is respiratory motion or patient setup error during radiotherapy.

In this paper, we propose an automated registration method for CT and PET images, aiming to improve the accuracy and robustness of the coregistered image. The proposed method combines B-spline free form deformation based on feature points of the PET and CT images with mutual information optimized by the Particle Swarm Optimization (PSO) algorithm[8] and the Powell algorithm.

2. Methods

The proposed registration method includes two major stages based on our recent research, and each stage is based on the information and features of the images[9]. The method takes advantage of the local control of free form deformation[10]; in the mutual information stage, the PSO algorithm[8] and the Powell algorithm are used to search for the best registration parameters. As a result, a more accurate and robust coregistered image can be achieved.

2.1. Free Form Deformation Based on Feature Points of PET and CT Images

The first stage, free form deformation based on feature points of the PET and CT images, consists of three steps. The quality of the FFD deformation relies on accurate extraction of the feature points of the PET and CT images. The CT image has higher resolution than the PET image and best reflects the anatomical details of the human body, so we adopt the CT image as the benchmark to deform the PET image. The first two steps extract the feature points of the PET and CT images independently, and the last step deforms the PET image using B-spline free form deformation.

2.1.1 Contour Extraction Algorithm of CT image

• The first step: According to the window width and window level of the CT image, transform the original pixel value of each point into the range 0~255.

• The second step: Threshold-segment the image obtained in the first step. The threshold is defined as f_max × 0.85, where f_max = 255 is the maximum gray value.

• The third step: Use the Roberts edge detection operator[11] to find all edge structures in the image obtained in the second step. The Roberts operator is defined as:

$$g(x, y) = \left\{ [f(x, y) - f(x+1, y+1)]^2 + [f(x+1, y) - f(x, y+1)]^2 \right\}^{1/2}$$

where f(x,y) is the gray value at (x,y), and g(x,y) is the new gray value of that point.

• The last step: Use the 3×3 structure shown in Fig. 1 to search the image processed in the third step for the contour line.

|   |   |   |
|---|---|---|
| 5 | 6 | 7 |
| 4 | P | 0 |
| 3 | 2 | 1 |

Fig. 1. 3×3 structure used to search for the contour line

2.1.2 Contour Extraction Algorithm of PET image

• The first step: Transform the original pixel value of each point into the range 0~255 by a linear transformation.

• The second step: Threshold-segment the image obtained in the first step. The threshold is defined as f_max × 0.05, where f_max = 255 is the maximum gray value.

• The third and fourth steps for the PET image are the same as those for the CT image.
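The first three contour-extraction steps for CT and PET can be sketched as follows. This is a minimal illustration and not the authors' implementation: the function names are hypothetical, images are plain lists of rows, and two details the text leaves open are assumed here, namely that thresholding binarizes the image and that a pixel equal to the threshold counts as foreground.

```python
import math

def window_to_gray(value, level, width):
    """Map a raw CT value to 0..255 using a window level/width (clamped)."""
    low = level - width / 2.0
    scaled = (value - low) / width * 255.0
    return int(round(min(255.0, max(0.0, scaled))))

def linear_to_gray(image):
    """Linearly rescale a PET image (list of rows) to 0..255."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant image
    return [[int(round((v - lo) / span * 255.0)) for v in row] for row in image]

def threshold(image, fraction, f_max=255):
    """Binarize (assumption): pixels >= fraction * f_max become f_max, others 0."""
    t = fraction * f_max
    return [[f_max if v >= t else 0 for v in row] for row in image]

def roberts(image):
    """Roberts cross magnitude g(x, y); the last row and column stay 0."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h - 1):
        for x in range(w - 1):
            d1 = image[y][x] - image[y + 1][x + 1]      # f(x, y) - f(x+1, y+1)
            d2 = image[y][x + 1] - image[y + 1][x]      # f(x+1, y) - f(x, y+1)
            out[y][x] = math.sqrt(d1 * d1 + d2 * d2)
    return out

# CT uses fraction 0.85, PET uses fraction 0.05 (values from the text).
ct_gray = [[0, 0, 0, 0], [0, 255, 255, 0], [0, 255, 255, 0], [0, 0, 0, 0]]
ct_edges = roberts(threshold(ct_gray, 0.85))
pet_mask = threshold(linear_to_gray([[0, 1], [2, 20]]), 0.05)
```

Only the threshold fraction and the gray-level mapping differ between the two modalities, so the same `roberts` pass serves both; the contour search with the 3×3 structure would then run on its output.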

2.1.3 Deform PET Image Based on B-Spline Free Form Deformation

The B-spline based FFD method[12] is a powerful tool for three-dimensional deformation modeling and has been applied to cardiac image tracking and motion analysis. Because B-splines have a local deformation (interpolation) effect, moving some feature points of a two-dimensional image affects only the nearby points and does not deform the whole image. This section is divided into four steps:

• The first step: Extract the feature points of the PET and CT images respectively with the automatic recognition algorithm, and then calculate the contour mass center of each image.

• The second step: Move the mass center of the PET image to the same location as that of the CT image, and then register the images in rotation about the mass center by maximizing mutual information.

• The third step: Calculate the corresponding feature points of the PET and CT images for deformation, pairing the contour points that lie at the same angle on the two contour lines.

• The last step: Deform the PET image by B-spline free form deformation, so that the resulting PET image has nearly the same shape as the CT image.
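The mass-center computation and the local nature of a B-spline FFD can be illustrated with a short sketch. It assumes the commonly used uniform cubic B-spline formulation on a regular control lattice; the lattice layout, the spacing and all function names are assumptions for illustration, since the paper does not give these details.

```python
import math

def mass_center(points):
    """Centroid of a list of (x, y) contour points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def bspline_basis(u):
    """The four uniform cubic B-spline blending weights for u in [0, 1)."""
    return (
        (1 - u) ** 3 / 6.0,
        (3 * u ** 3 - 6 * u ** 2 + 4) / 6.0,
        (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6.0,
        u ** 3 / 6.0,
    )

def ffd_displacement(x, y, grid, spacing):
    """Displacement at (x, y) from a lattice of control-point offsets.

    grid[j][i] holds the (dx, dy) offset of control point (i, j), which sits
    at ((i - 1) * spacing, (j - 1) * spacing). Only the 4 x 4 neighbourhood
    around (x, y) contributes, which is the local-control property.
    """
    i0 = int(math.floor(x / spacing))
    j0 = int(math.floor(y / spacing))
    bu = bspline_basis(x / spacing - i0)
    bv = bspline_basis(y / spacing - j0)
    dx = dy = 0.0
    for m in range(4):
        for l in range(4):
            cx, cy = grid[j0 + m][i0 + l]
            weight = bu[l] * bv[m]
            dx += weight * cx
            dy += weight * cy
    return dx, dy

# Move a single control point and observe that only nearby pixels are displaced.
grid = [[(0.0, 0.0)] * 8 for _ in range(8)]
grid[1][1] = (5.0, 0.0)
near = ffd_displacement(0.0, 0.0, grid, 10.0)
far = ffd_displacement(35.0, 35.0, grid, 10.0)
```

In this sketch `near` is nonzero while `far` is exactly zero, which matches the statement that moving some feature points affects only the nearby points.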

2.2. Mutual Information Combined with PSO and Powell Algorithm

A B-spline function can only generate a smooth curve that approaches the control points; in most cases the curve does not pass through them. The deformed image and the reference image may therefore not match completely, so mutual information is needed for accurate registration.

2.2.1 Theoretical Basis of Mutual Information

Mutual information is a fundamental concept in information theory[13], usually used to describe the statistical correlation between two random variables, or to measure how much information one variable contains about another. Mutual information can be expressed in terms of entropy.

Let A and B be two random variables, with marginal probability distributions p_A(a) and p_B(b) and joint probability distribution p_AB(a,b). If p_AB(a,b) = p_A(a) · p_B(b), then A and B are statistically independent. Mutual information I(A,B) measures the distance between the joint distribution p_AB(a,b) of the image gray values and the distribution p_A(a) · p_B(b) that would hold if the two images were independent; that is, it measures the dependence between the two images. When the images are precisely matched, their dependence is high and the mutual information is large; when they are misaligned, the dependence decreases and the mutual information is lower. In other words, when two images are geometrically aligned, the mutual information of the intensities of corresponding voxels is maximal.

The mutual information is formulated as:

$$I(A, B) = \sum_{a,b} p_{AB}(a, b) \log \frac{p_{AB}(a, b)}{p_A(a)\, p_B(b)}$$

The relationship between mutual information and entropy is given by the following equation:

$$I(A, B) = \begin{cases} H(A) + H(B) - H(A, B) \\ H(A) - H(A|B) \\ H(B) - H(B|A) \end{cases}$$

where H(A) and H(B) are the entropies of A and B respectively, H(A,B) is the joint entropy of A and B, and H(A|B) and H(B|A) are the conditional entropies when B or A is known, respectively.
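As a small worked illustration of these definitions, the sketch below estimates mutual information from the joint gray-level histogram of two images. The bin count, the natural logarithm and the function names are assumptions; the paper does not state how the probabilities are estimated.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in nats) of a list of hashable labels."""
    n = len(labels)
    return -sum(c / n * math.log(c / n) for c in Counter(labels).values())

def mutual_information(a, b, bins=16, f_max=255):
    """I(A, B) of two equally sized gray images given as flat integer lists."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    n = len(a)

    def quantize(v):
        # Map a gray value in 0..f_max to one of `bins` histogram bins.
        return min(bins - 1, int(v) * bins // (f_max + 1))

    qa = [quantize(v) for v in a]
    qb = [quantize(v) for v in b]
    p_a, p_b, p_ab = Counter(qa), Counter(qb), Counter(zip(qa, qb))
    mi = 0.0
    for (i, j), count in p_ab.items():
        joint = count / n
        mi += joint * math.log(joint / ((p_a[i] / n) * (p_b[j] / n)))
    return mi

a = [0, 0, 255, 255]
b = [0, 255, 0, 255]
mi_aligned = mutual_information(a, a)      # equals H(A) = log 2
mi_independent = mutual_information(a, b)  # independent patterns give 0
```

With `bins=256` no quantization takes place, and the result agrees with `entropy(a) + entropy(b) - entropy(list(zip(a, b)))`, the first form of the entropy relation.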

2.2.2 Space Transformation Model

In the second stage, the images preprocessed by B-spline FFD[10] can be regarded as rigid bodies, so the transform can be decomposed into a rotation and a translation. The transformation model is

$$P(x) = Rx + T$$

where x = (x, y, z) is the spatial location of a pixel, R is a 3×3 rotation matrix, and T is a 3×1 translation vector. The matrix R is subject to the constraints R^T R = I and det R = 1, where R^T is the transpose of R and I is the identity matrix.

Assume the rotation angles of the image about the x, y and z axes are α, β and γ, and the translations are T_x, T_y and T_z, respectively.

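$$R = R_\gamma R_\beta R_\alpha = \begin{bmatrix} \cos\gamma & -\sin\gamma & 0 \\ \sin\gamma & \cos\gamma & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} \cos\beta & 0 & \sin\beta \\ 0 & 1 & 0 \\ -\sin\beta & 0 & \cos\beta \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\alpha & -\sin\alpha \\ 0 & \sin\alpha & \cos\alpha \end{bmatrix}$$

$$T = [T_x, T_y, T_z]^T$$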

In the two-dimensional case, there are three parameters in the image plane: the translations T_x and T_y, and the rotation angle φ about the origin. We aim to find the three parameters T_x, T_y and φ that maximize the mutual information of the PET and CT images.
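The rigid model P(x) = Rx + T and its constraints can be checked numerically with a short sketch. The composition order (z rotation, then y, then x, applied right to left) and the helper names are assumptions made for illustration.

```python
import math

def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation(alpha, beta, gamma):
    """R = R_gamma R_beta R_alpha for angles (radians) about x, y, z."""
    ca, sa = math.cos(alpha), math.sin(alpha)
    cb, sb = math.cos(beta), math.sin(beta)
    cg, sg = math.cos(gamma), math.sin(gamma)
    r_alpha = [[1, 0, 0], [0, ca, -sa], [0, sa, ca]]
    r_beta = [[cb, 0, sb], [0, 1, 0], [-sb, 0, cb]]
    r_gamma = [[cg, -sg, 0], [sg, cg, 0], [0, 0, 1]]
    return matmul(r_gamma, matmul(r_beta, r_alpha))

def transform(point, r, t):
    """Apply P(x) = R x + T to a 3-D point."""
    return tuple(sum(r[i][k] * point[k] for k in range(3)) + t[i]
                 for i in range(3))

def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def is_rotation(m, tol=1e-9):
    """Check the constraints R^T R = I and det R = 1."""
    transpose = [[m[j][i] for j in range(3)] for i in range(3)]
    product = matmul(transpose, m)
    orthogonal = all(abs(product[i][j] - (1.0 if i == j else 0.0)) < tol
                     for i in range(3) for j in range(3))
    return orthogonal and abs(det3(m) - 1.0) < tol

# A 90 degree rotation about z followed by a translation of (1, 2, 3).
r = rotation(0.0, 0.0, math.pi / 2)
moved = transform((1.0, 0.0, 0.0), r, (1.0, 2.0, 3.0))
```

Setting α = β = 0 and T_z = 0 reduces this to the two-dimensional case with the three parameters T_x, T_y and φ = γ.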

2.2.3 Optimization Algorithm of Best Transformation Parameters

Medical image registration is essentially a multi-parameter optimization problem: the registration process searches for the spatial transformation parameter values that maximize the mutual information. The registration problem is therefore to optimize the registration function, and the optimal transformation parameters are obtained at the extremum of the registration cost function found by the optimization algorithm. In this stage, the Particle Swarm Optimization (PSO) algorithm[14] and the Powell algorithm are combined: after each iteration of the PSO algorithm, a local optimization is applied to the current global optimal solution.

a) PSO Algorithm Theory

PSO is an evolutionary computation technique based on swarm intelligence, primarily used to search for the global optimal solution. The basic idea is that each potential solution of the optimization problem is seen as a bird in the search space, called a particle, whose fitness is determined by the objective function. In addition, each particle has a velocity that determines its flying direction and distance, and the particles search the solution space by following the current optimal particle.

To start, a group of random particles (random solutions) is initialized. The optimal solution is then found through iteration. Each particle updates itself by tracking two extreme values: one is the best position found by the particle itself, called the individual extremum pBest; the other is the best position found by the whole swarm, called the global extremum gBest.
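The text does not spell out the update rule. For orientation, the widely used inertia-weight form of PSO is given here; the inertia weight $w$, the acceleration coefficients $c_1, c_2$ and the uniform random numbers $r_1, r_2 \in [0, 1]$ are assumptions and are not values taken from this paper. For particle $i$ with position $x_i^k$ and velocity $v_i^k$ at iteration $k$:

$$v_i^{k+1} = w\, v_i^k + c_1 r_1 \left(pBest_i - x_i^k\right) + c_2 r_2 \left(gBest - x_i^k\right), \qquad x_i^{k+1} = x_i^k + v_i^{k+1}$$

The first term keeps the particle moving in its current direction, the second pulls it toward its own best position, and the third pulls it toward the best position of the swarm.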

b) Algorithm Process for Searching the Optimal Parameters

• Step 1: The solution space is spanned by the rotation φ, the translation component T_x in the X direction and the translation component T_y in the Y direction. Choose the particle number n and the maximum number of iteration steps S appropriately, and randomly assign the n particles to positions in the solution space.

• Step 2: For one iteration step, calculate the speed and position of each particle.

• Step 3: Refine the obtained global optimal solution locally with the Powell algorithm. If the error of the global optimal solution is less than the minimum allowable error, or the number of iteration steps exceeds S, end the iteration, and the corresponding solution is the final solution; otherwise return to Step 2.
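The three steps can be sketched as a hybrid loop. This is an illustration under stated assumptions and not the authors' code: a coordinate-wise pattern search stands in for the Powell algorithm, `toy_similarity` is a hypothetical substitute for the mutual information of the transformed images, and the PSO coefficients and the stopping tolerance are assumed values.

```python
import math
import random

def local_refine(f, x, step=1.0, tol=1e-6):
    """Coordinate-wise pattern search (a simple stand-in for Powell's method)."""
    x = list(x)
    best = f(x)
    while step > tol:
        improved = False
        for d in range(len(x)):
            for delta in (step, -step):
                trial = list(x)
                trial[d] += delta
                value = f(trial)
                if value > best:
                    x, best, improved = trial, value, True
                    break
        if not improved:
            step *= 0.5  # no axis move helped, so search on a finer scale
    return x, best

def pso_with_local_search(f, bounds, n=20, max_steps=50, eps=1e-9,
                          w=0.7, c1=1.5, c2=1.5, seed=0):
    """Maximize f over (phi, T_x, T_y) with PSO plus local refinement."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Step 1: random initial positions inside the solution space.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n)]
    vel = [[0.0] * dim for _ in range(n)]
    p_best = [list(p) for p in pos]
    p_val = [f(p) for p in pos]
    g = max(range(n), key=lambda i: p_val[i])
    g_best, g_val = list(p_best[g]), p_val[g]
    for _ in range(max_steps):
        previous = g_val
        # Step 2: update the speed and position of each particle.
        for i in range(n):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (p_best[i][d] - pos[i][d])
                             + c2 * r2 * (g_best[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            value = f(pos[i])
            if value > p_val[i]:
                p_best[i], p_val[i] = list(pos[i]), value
                if value > g_val:
                    g_best, g_val = list(pos[i]), value
        # Step 3: refine the global best locally, then test for convergence.
        g_best, g_val = local_refine(f, g_best)
        if g_val - previous < eps:
            break
    return g_best, g_val

OPTIMUM = (0.2, 3.0, -1.5)  # hypothetical "true" phi, T_x, T_y

def toy_similarity(p):
    """Hypothetical smooth similarity with its maximum at OPTIMUM."""
    return -sum((v - o) ** 2 for v, o in zip(p, OPTIMUM))

best, best_value = pso_with_local_search(
    toy_similarity, [(-math.pi, math.pi), (-20.0, 20.0), (-20.0, 20.0)])
```

In a real registration, `toy_similarity` would be replaced by a function that applies the rigid transform for a given (φ, T_x, T_y) and returns the mutual information of the two images. Positions are not clamped to the bounds in this sketch.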

-

3. Relusts and Discussion

-

3.1. Qualitative Analysis

-

3.2. Quantitive Analysis

We proposed the registration algorithm based on the feature of images, which can reflect the significant information; and in this method deform PET image using B-splines FFD algorithm according feature points of CT image that has the property of local deformation, only affects the nearby points and will not make the whole points to be deformed when moving of some feature points of two-dimension image; then registration the multimode images based on mutual information combined with PSO and Powell as optimization algorithm, aimed to search the best transformation parameters. It can be confirmed that the proposed algorithm has a better effect in the registration process by the following qualitative and quantitative analysis.

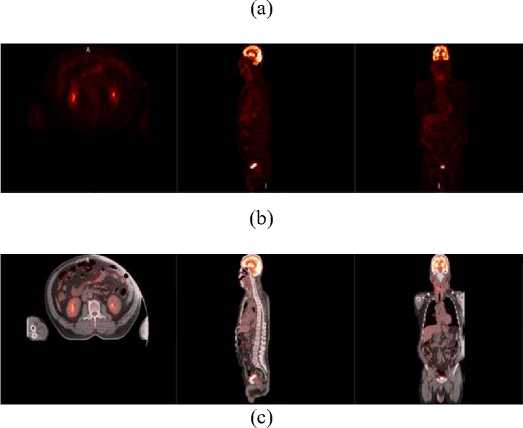

Original datasets for the experiments are obtained from Shandong Tumor Hospital. Fig. 2 shows the results of proposed algorithm qualitatively. Fig. 2(a) is CT images which can provide high-resolution images with anatomic information. Fig. 2(b) is PET images which can provide functional information, but with coarser resolution. So we proposed the registration method to integrate information of PET and CT. Fig. 2(c) is result of PET and CT registration. As shown in Figure 2, the structure is successfully matched and the coregistered images provide both anatomic and functional information.

Fig. 2. Registration images of proposed algorithm (a) CT images, (b) PET images and (c) coregistered PET/CT images

The quantitative evaluation results compared our proposed algorithm with other registration method including maximum mutual information and multi-level B-splines are shown in Tab. 1. The maximum information entropy (MI entropy), correlation coefficient (CC) and root mean square error (RMS error) are used to evaluate registration method. Analyzing the qualitative indexes for each method, we can concluded that our proposed registration algorithm outperform other traditional methods.

Table 1. Comparison of different registration methods (quantitative indexes)

| Registration method | MI entropy | CC | RMS error |
|---|---|---|---|
| Before registration | 0.3084 | 0.6484 | 51.8244 |
| Maximum MI | 0.3154 | 0.6717 | 51.7608 |
| Multi-level B-splines | 0.3425 | 0.6923 | 50.7538 |
| The proposed method | 0.3725 | 0.7012 | 50.5542 |
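The paper does not define how CC and RMS error are computed. The sketch below uses the standard Pearson correlation coefficient and root mean square difference between two gray images given as flat lists; both the definitions and the function names are assumptions.

```python
import math

def correlation_coefficient(a, b):
    """Pearson correlation of two equally sized flat gray-value lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def rms_error(a, b):
    """Root mean square of the pixel-wise gray-value differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

cc = correlation_coefficient([1, 2, 3], [2, 4, 6])  # perfectly correlated
rms = rms_error([0, 0], [3, 4])
```

Under these definitions a better registration gives a higher CC and a lower RMS error, which is the direction of change seen in Table 1.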

4. Conclusion

We developed an automated registration algorithm for PET and CT images. Because B-spline FFD offers more degrees of freedom and a better local deformation effect, we adopted it to deform the PET image based on the CT feature points, and then used mutual information, optimized with the combined PSO and Powell algorithm, to register the PET and CT images.

The preliminary results indicate that the proposed algorithm is an effective and automated registration method for PET and CT images, with higher accuracy, faster speed and better robustness.

Acknowledgements

This work is supported by the Shandong Natural Science Foundation (ZR2010HM010 and ZR2010HM071).

References

- Schöder, H., et al., PET/CT: a new imaging technology in nuclear medicine. European Journal of Nuclear Medicine and Molecular Imaging, 2003. 30(10): p. 1419-1437.

- Grosu, A.-L. and W.A. Weber, PET for radiation treatment planning of brain tumours. Radiotherapy and Oncology, 2010. 96(3): p. 325-327.

- Paquin, D.C., D. Levy, and L. Xing. 2009, DigitalCommons@CalPoly.

- Xingang, L., C. Wufan, and C. Guangjie. A New Hybridized Rigid-Elastic Multiresolution Algorithm for Medical Image Registration. in Engineering in Medicine and Biology Society, 2005. IEEE-EMBS 2005. 27th Annual International Conference of the. 2005.

- Archip, N., et al., Non-rigid Registration of Pre-procedural MR Images with Intra-procedural Unenhanced CT Images for Improved Targeting of Tumors During Liver Radiofrequency Ablations, in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2007, N. Ayache, S. Ourselin, and A. Maeder, Editors. 2007, Springer Berlin / Heidelberg. p. 969-977.

- Jiangang, L., T. Jie, and D. Yakang. Multi-modal Medical Image Registration Based on Adaptive Combination of Intensity and Gradient Field Mutual Information. in Engineering in Medicine and Biology Society, 2006. EMBS '06. 28th Annual International Conference of the IEEE. 2006.

- Khurshid, K., K.L. Berger, and R.J. McGough. Automated PET/CT brain registration for accurate attenuation correction. in Engineering in Medicine and Biology Society, 2009. EMBC 2009. Annual International Conference of the IEEE. 2009.

- Hakjae, L., et al. Image registration for PET/CT and CT images with particle swarm optimization. in Nuclear Science Symposium Conference Record (NSS/MIC), 2009 IEEE. 2009.

- Takahashi, Y., et al. Feature Point Extraction in Face Image by Neural Network. in SICE-ICASE, 2006. International Joint Conference. 2006.

- Jieqing, F. and P. Qunsheng. B-spline free-form deformation of polygonal objects through fast functional composition. in Geometric Modeling and Processing 2000. Theory and Applications. Proceedings. 2000.

- Rosenfeld, A., The Max Roberts Operator is a Hueckel-Type Edge Detector. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 1981. PAMI-3(1): p. 101-103.

- Tustison, N.J., B.A. Avants, and J.C. Gee. Improved FFD B-Spline Image Registration. in Computer Vision, 2007. ICCV 2007. IEEE 11th International Conference on. 2007.

- Butz, T. and J.-P. Thiran, Affine Registration with Feature Space Mutual Information, in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2001, W. Niessen and M. Viergever, Editors. 2001, Springer Berlin / Heidelberg. p. 549-556.

- Lu, Z.-s., Z.-r. Hou, and J. Du, Particle Swarm Optimization with Adaptive Mutation. Frontiers of Electrical and Electronic Engineering in China, 2006. 1(1): p. 99-104.