A Real-time Light-weight Computer Vision Application for Driver’s Drowsiness Detection

Authors: Saikat Baul, Md. Ratan Rana, Farzana Bente Alam

Journal: International Journal of Engineering and Manufacturing (IJEM)

Issue: vol. 14, no. 2, 2024.


Drowsy driving is an increasingly common problem that contributes to a substantial number of fatal accidents every year, and solutions that reduce these accidents and fatalities are urgently needed. In contrast to the complex systems developed so far, this study investigates a simpler, less expensive, yet highly efficient approach to detecting driver drowsiness. We develop a simple drowsy-driver detection system in Python using the OpenCV and Dlib libraries. Dlib's shape predictor locates the coordinates of facial landmarks in the input video, and drowsiness is detected by monitoring the eye aspect ratio, the mouth aspect ratio, and the head tilt angle. The system is evaluated on standard public datasets and on real-time video footage. On dataset images it achieves high recognition accuracy (98.39% for blink detection and 99.11% for yawn detection), and a comparison with related work demonstrates the efficacy of the proposed approach. Combining the proposed system with other safety features and automation technology in cars can make travel safer and more efficient.

Keywords: drowsiness detection, computer vision, Dlib, OpenCV, EAR, MAR, head tilt angle

Short URL: https://sciup.org/15019325

IDR: 15019325 | DOI: 10.5815/ijem.2024.02.02

1. Introduction

Drowsiness and fatigue impair a driver's attention, particularly during long journeys or night driving [1]; both are brought on by long periods at the wheel and exhaustion. The growing number of road collisions caused by reduced driver attentiveness has become a significant challenge for modern society. Some of these collisions result directly from drivers' existing health conditions, but most are caused by driver tiredness and sleepiness [1]. Accidents caused by fatigued drivers tend to be more severe and can result in serious injuries and even deaths.

The Road Safety Foundation estimates that 7,713 people died in traffic accidents in Bangladesh in 2022 [2]. On average, at least three students were killed every day, so students accounted for 16% of road fatalities, a significant loss for the nation. Adults made up 81% of those killed. Most people killed or seriously injured were their household's primary or only earners, and nearly Tk 350 billion worth of human resources is lost every year to road accidents [2].

Mohammad Mahbub Alam Talukder, Md. Shahidul Islam, Ishtiaque Ahmed, and Md. Asif Raihan identified fourteen distinct causes of driver fatigue [3]. The most frequently reported were malnutrition from an improper diet, pressure from business owners and executives, long driving distances, a lack of rest breaks during long trips, overlapping and roster duties, and concern about income. In a recent case, a bus driver who crashed into a ditch, killing 19 passengers, had not been taking breaks: after driving for 33 hours, he was exhausted and reportedly lost control of the bus because of drowsiness [4].

Numerous studies detect driver drowsiness and fatigue with a variety of computer vision techniques, machine learning algorithms, and feature analysis methods that rely solely on the eyes [5,6,7,8,9,10,11,12,13,14,15]. Several others use both eye and mouth movements [16,17,18,19,20]. Many of these models perform poorly under suboptimal lighting, and some fail to detect the eyes, particularly when the driver wears glasses. Head tilt is a notable indicator of drowsiness that most studies have not explored in depth; some explicitly list it as an area for future investigation [16]. Our system incorporates the head tilt angle to address this gap and improve the efficacy of drowsiness detection.

Drowsiness manifests through a limited set of distinct movements and facial expressions: gradual closure of the eyes, yawning, relaxation of the jaw, and tilting of the head. The primary objective of this study is to use eye tracking, mouth tracking, and head tilt angle analysis to detect drowsiness and classify drivers as drowsy. For real-time use, the input video can come from a camera mounted on the vehicle's dashboard that captures the driver's face, hands, and upper body, as well as obstructions such as non-tinted eyeglasses.

Combining eye tracking, mouth tracking, and head tilt angle analysis has the potential to substantially improve road safety. Monitoring physiological and behavioral signals makes it possible to detect signs of sleepiness in drivers and take suitable measures before fatigue causes an accident. Existing real-time systems rely primarily on the eyes, sometimes together with the mouth; adding the head tilt angle, which earlier work identified as a topic for further investigation, is the key difference between our system and other real-time systems. Its focus on driving safety, non-intrusiveness, real-time and continuous monitoring, cost-effectiveness, integration potential, and customizability makes the proposed approach a good choice for drowsiness detection.

Drowsiness detection systems can identify signs of driver fatigue early so actions can be taken quickly to avoid accidents. It helps protect the driver, passengers, and other road users. The proposed system can help to keep the roads safer for everyone by alerting a driver who is falling asleep. The data collected by the system can contribute to a better understanding of the prevalence and patterns of drowsy driving. More effective road safety programs and policies can be made with this knowledge. Combining the proposed system with other safety features and automation technologies in vehicles can make traveling safer and more efficient.

The remaining portion of the paper is structured as follows. Section 2 discusses the relevant literature; Section 3 outlines the methodology used in the system, followed by Section 4, which presents the experimental results, comparison, and discussion. Finally, Section 5 offers the conclusion of the study.

2. Related Work

Over the past two decades, many research papers have proposed methods for identifying signs of fatigue and drowsiness in drivers. This section reviews innovations in real-time drowsiness detection systems.

Mehrdad Sabet, Reza A. Zoroofi, Khosro Sadeghniiat-Haghighi, and Maryam Sabbaghian developed a system that monitors drivers and identifies occurrences of fatigue and inattention [5]. The system combines computer vision and AI techniques. A feedback loop based on Local Binary Patterns (LBP) links the detection and tracking modules, keeping face tracking accurate and reducing the risk of divergence and target loss. Eye state is classified by a Support Vector Machine (SVM) using features extracted with the LBP operator.

Antoine Picot, Sylvie Charbonnier, and Alice Caplier proposed an algorithm to detect drivers’ drowsiness by analyzing visual signs extracted from high-frame-rate videos [6]. This study examines various visual features using a standard database to assess their significance in detecting drowsiness by applying data mining. The study incorporates fuzzy logic to integrate notable blinking attributes, encompassing duration, amount of eye closure, frequency of the eye blinks, and amplitude-velocity ratio.

According to Vandna Saini and Rekha Saini, eye sensor systems usually use IR or near-IR LEDs to illuminate the driver’s pupils, allowing eye detection [21]. The computer algorithms analyzed the frequency and length of eye blinking to ascertain the state of drowsiness. The surveillance camera system was used to identify symptoms of fatigue, like yawning and sudden head nods, by monitoring facial expressions and head angles.

Jay D. Fuletra and Dulari Bosamiya identify drowsiness and falling asleep at the wheel as primary causes of fatal accidents [7]. They categorize existing drowsiness detection methods and compare their characteristics. Among these, computer vision-based methods rely on image processing: by tracking the driver's eye states and facial movements, the system identifies drowsy states from several categories of driver images.

Yong Du, Peijun Ma, Xiaohong Su, and Yingjun Zhang proposed a driver fatigue detection method based on eye state analysis [8]. Inter-frame variance combined with color information is used to locate the facial region, which is then segmented using a skin-color model. A crystallization method locates the eyes precisely within the face region, after which features of each eye are used to evaluate its state. Driver fatigue is confirmed by examining changes in the eye states.

Shinfeng D. Lin, Jia-Jen Lin, and Chin-Yao Chung proposed a system for recognizing sleepy eyes to detect drowsiness [9]. The determination of the facial region is initially conducted by utilizing a cascaded AdaBoost classifier that incorporates Haar-like features. In addition, the eye region’s localization is achieved by using the Active Shape Models search algorithm. The binary pattern is subsequently used, and edge detection techniques are employed to extract the distinct characteristics of the eyes and figure out their present state.

Shruti Mohanty, Shruti V Hegde, Supriya Prasad, and J. Manikandan analyzed eye and mouth activity to detect signs of fatigue and classify drivers as fatigued [16]. For real-time operation, the input video came from a camera on the vehicle's dashboard that captured the driver's facial features. They used the pre-trained 68-point facial landmark detector from the Dlib library, with a face detector based on the HOG technique. The driver's blinking pattern was monitored with the eye aspect ratio (EAR), and yawning in the frames of the continuous video stream was detected with the mouth aspect ratio (MAR).

Jithina Jose et al. used Python, Dlib, and OpenCV to build a real-time system in which a camera tracks the driver's eye movements, blinks, and yawns [17]. The system estimates the driver's level of drowsiness and triggers a warning alert when eye closures and yawns are closely correlated within a specific timeframe.

Wanghua Deng and Ruoxue Wu introduced DriCare, a system that identifies drivers' fatigue levels by analyzing video images [18]. It detects indicators such as yawning, blinking, and the duration of eye closure, without requiring drivers to wear any additional device. To overcome limitations of earlier algorithms, the authors proposed a new face-tracking algorithm with improved precision and a facial-region detection method based on 68 key points. The facial regions are then used to assess the driver's state, and by combining eye and mouth features, DriCare warns drivers of their fatigue.

Several issues limit the accuracy of drowsiness detection systems. Changes in lighting, background, and face orientation make it difficult to identify drowsy states precisely. Detecting the head tilt angle, an important cue for drowsiness, is one area that could be improved, as is the ability of these systems to alert drivers once drowsiness is detected. Imprecision and computational complexity add further difficulty. Precisely determining the location and size of the eyes is another challenge, since it directly affects how well drowsiness is detected, and eyeglasses worn by the driver can further hinder accurate detection.

3. Methodology

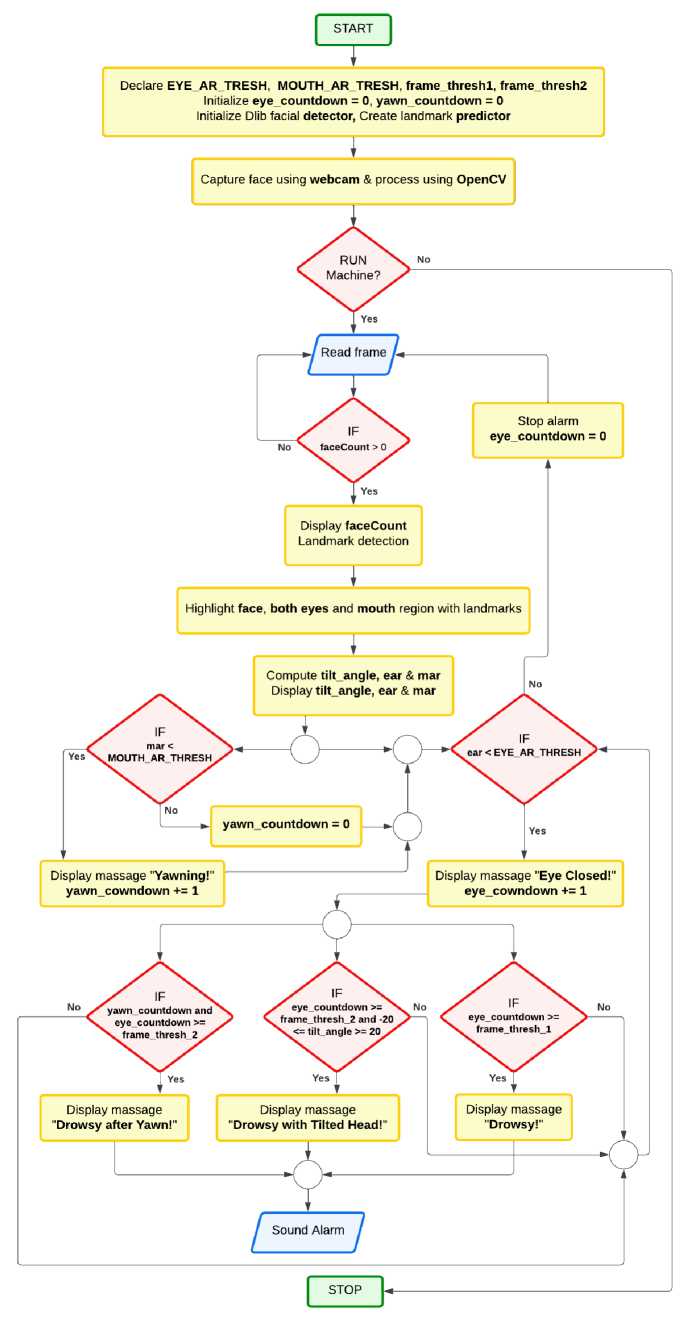

Drowsiness while driving has been identified as a significant contributor to severe vehicle accidents, and the model described here was developed to mitigate this risk. Fig. 1 shows the flowchart of the proposed driver fatigue detection system.


3.1. System Workflow

The system works as follows:

• Each frame of the input video, whether pre-recorded or real-time, is resized and converted to grayscale.

• Dlib detects the face and its facial features in the frame.

• The (x, y) coordinates of 68 facial landmarks are identified.

• The eye and mouth regions are localized from these landmarks.

• The head tilt angle is calculated with the “atan2” function from the coordinates of the left eye's leftmost corner and the right eye's rightmost corner.

• The eye aspect ratio (EAR) of each eye and the mouth aspect ratio (MAR) are calculated from Euclidean distances between the eye and mouth landmarks.

• Depending on which of three conditions is met, the system sounds an alarm and displays one of three messages, one per state.
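The first workflow step (resize each frame and convert it to grayscale) can be sketched as follows. This is a minimal illustration, not the authors' code: the 450-pixel target width and the helper names `scaled_size` and `preprocess_frame` are assumptions, and OpenCV is imported inside `preprocess_frame` only so that the size computation can run without it.

```python
def scaled_size(width, height, target_width=450):
    """Return (new_width, new_height) that scales a frame to target_width
    pixels wide while preserving its aspect ratio (450 is an assumed value)."""
    if width <= 0 or height <= 0:
        raise ValueError("frame dimensions must be positive")
    new_height = max(1, round(height * target_width / width))
    return target_width, new_height


def preprocess_frame(frame, target_width=450):
    """Resize a BGR frame (e.g. from cv2.VideoCapture.read) and return the
    resized color frame together with its grayscale version."""
    import cv2  # OpenCV, assumed installed; imported lazily

    height, width = frame.shape[:2]
    # cv2.resize expects the target size as (width, height).
    resized = cv2.resize(frame, scaled_size(width, height, target_width))
    gray = cv2.cvtColor(resized, cv2.COLOR_BGR2GRAY)
    return resized, gray
```

In a capture loop, frames would come from `cv2.VideoCapture(0).read()` for a live camera or from a `cv2.VideoCapture` opened on a video file; the grayscale image is what the face detector and landmark predictor then consume, while the resized color frame can be used for drawing messages.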

3.2. Facial Landmark Detection

Fig. 1. Proposed driver drowsiness detection system.

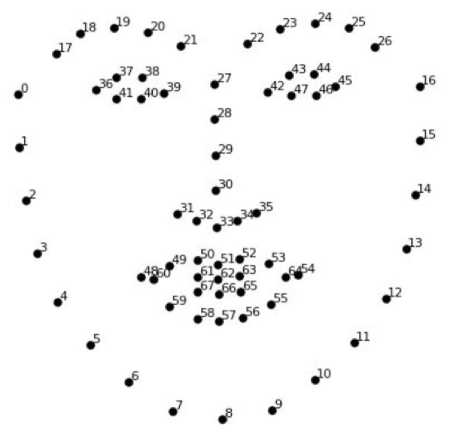

The drowsiness detector is implemented in Python with OpenCV. Facial landmarks are detected and localized with Dlib's pre-trained facial landmark detector, which offers two shape predictor models that localize 5 and 68 landmark points, respectively, in an image of a human face. A variant of the model capable of localizing 68 landmark points has been developed, employing the GTX model. The 68-point model was trained on the iBUG 300-W dataset [22]. This study uses the 68 facial landmarks shown in Fig. 2.

Fig. 2. Visualization of the 68 facial landmark coordinates, as shown in [23].

Dlib's face detector uses a Histogram of Oriented Gradients (HOG) descriptor with a linear Support Vector Machine (SVM) classifier [16]. HOG divides the image into small local regions and builds, for each, a histogram of gradient directions. In many cases it is more accurate than Haar cascades because it produces comparatively few false positives [24], and it has fewer parameters to tune at test time. It is well suited to face detection because it describes contour and edge features well, and because it operates on local cells, it tolerates small movements of the object. The HOG descriptor was originally shown to be effective for detecting humans in images [24].
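To illustrate the core HOG idea described above (a histogram of gradient directions per local cell), the sketch below computes one cell's orientation histogram in pure Python. It is a simplified illustration, not Dlib's implementation: it assumes central-difference gradients, 9 unsigned orientation bins covering 0 to 180 degrees, and magnitude-weighted votes, and it omits the bin interpolation and block normalization of the full descriptor [24]. The function name `cell_histogram` is hypothetical.

```python
import math


def cell_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations
    (0 to 180 degrees) for one cell, given as a list of rows of intensities.
    Border pixels are skipped so central differences stay inside the cell."""
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    rows, cols = len(cell), len(cell[0])
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal derivative
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical derivative
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Fold the signed angle into [0, 180) so opposite directions share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist
```

A cell whose intensity rises steadily from left to right puts all of its weight in the first bin (horizontal gradients), while a top-to-bottom ramp puts it in the 80 to 100 degree bin; a face detector concatenates and normalizes many such histograms before the SVM scores them.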

Fig. 3. Facial landmark mapping for dataset and real-time video.

The predefined landmarks support shape prediction by dividing the face into specific regions: eyebrows, eyes, nose, mouth, and jawline. Changes in the positions of these points reflect changes in an individual's facial expression.
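A sketch of the landmark step, under stated assumptions: the face detector and shape predictor are passed in as arguments so the helpers can be exercised without Dlib; in practice they would be `dlib.get_frontal_face_detector()` (Dlib's HOG + linear SVM detector) and `dlib.shape_predictor(...)` loaded with the 68-point model from [22]. The region index ranges follow the 0-based 68-point iBUG 300-W markup; the names `FACIAL_REGIONS`, `shape_to_points`, `detect_landmarks`, and `region` are hypothetical.

```python
# 0-based index ranges (start inclusive, end exclusive) of the 68-point
# markup. "right"/"left" refer to the subject's own sides, so the subject's
# right eye (36-41) appears on the left of a non-mirrored image.
FACIAL_REGIONS = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),
}


def shape_to_points(shape):
    """Convert a Dlib full_object_detection into a list of (x, y) tuples."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]


def detect_landmarks(gray, detector, predictor):
    """Return one landmark list per face found in a grayscale frame.

    detector:  e.g. dlib.get_frontal_face_detector()
    predictor: e.g. dlib.shape_predictor(<path to the 68-point model>)
    """
    faces = detector(gray, 0)  # 0 = no upsampling (an assumed setting)
    return [shape_to_points(predictor(gray, rect)) for rect in faces]


def region(points, name):
    """Slice the landmarks that belong to one named facial region."""
    start, end = FACIAL_REGIONS[name]
    return points[start:end]
```

With Dlib installed and the `shape_predictor_68_face_landmarks.dat` file from [22] decompressed locally (an assumption about the local setup), `detect_landmarks(gray, dlib.get_frontal_face_detector(), dlib.shape_predictor("shape_predictor_68_face_landmarks.dat"))` would return the landmark lists used by the later steps.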


3.3. Head Tilt Angle Detection

-

3.4. EAR Calculation

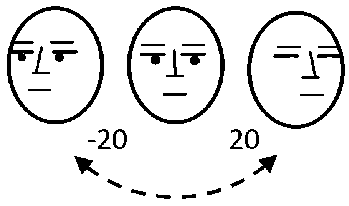

The detection of head tilt angle involves the calculation of the angle of tilt of an individual’s head in comparison with a fixed point or axis. The face’s tilt angle was determined by using detected landmarks. Landmarks at indexes 36 and 45 correspond to the left eye’s left corner and the right eye’s right corner, respectively. These points have been derived from the 68-point facial landmark detection model made available by Dlib.

Fig 4. Head tilt angle visual representation.

Calculating the tilt angle involves calculating the differences in the x-coordinates (dx) and y-coordinates (dy) of the left eye’s leftmost corner and the right eye’s rightmost corner. Subsequently, the angle is computed utilizing the “math.atan2” function, which considers the dx and dy values. The “math.degrees” function converts the angle from radians to degrees.

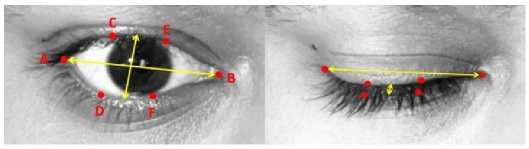

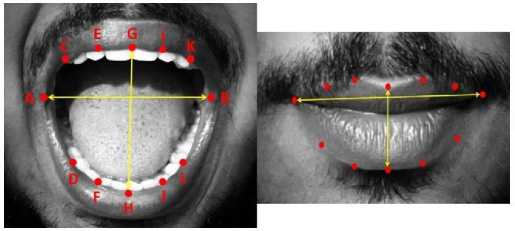

The study utilized the eye aspect ratio (EAR) metric to detect eye blinking. It is calculated as the vertical span between both superior and inferior eyelids divided by the horizontal span of the eye. The physiological process of blinking is characterized by a decrease in the vertical distance separating the superior and inferior eyelids. There is a tendency to increase the span between both eyelids in an open eye.

$$\mathrm{EAR} = \frac{|CD| + |EF|}{2\,|AB|} \qquad (1)$$

Equation (1) defines EAR [16]. EAR is computed for the left and right eyes and then averaged. Whenever the average EAR falls below a fixed threshold of 0.23, the blink count (the eye count used in Section 3.6) is incremented and the message “Eye Closed!” is displayed; otherwise, the blink count is reset to 0.

Fig. 5. Coordinates used to calculate EAR.
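The head tilt computation of Section 3.3 and the EAR of Equation (1) can be sketched together, since both operate on the same landmark list. This is an illustration under assumptions, not the authors' source: landmarks are 0-based (x, y) tuples from the 68-point model; dx and dy are taken from point 36 to point 45 so that a level head gives 0 degrees; each eye's six points are taken in the usual order p0..p5 (corners p0 and p3, upper lid p1 and p2, lower lid p4 and p5), so |AB| in Fig. 5 is assumed to be the corner-to-corner span and |CD|, |EF| the two lid-to-lid spans. The 0.23 threshold is the value given in the text; the function names are hypothetical.

```python
import math

EAR_THRESHOLD = 0.23  # threshold stated in Section 3.4


def head_tilt_angle(points):
    """Head roll angle in degrees from landmarks 36 and 45 (outer eye corners).

    dx and dy are measured from point 36 to point 45, so a level head gives 0.
    Image y grows downward, so a positive angle means point 45 is lower in
    the image than point 36.
    """
    (x36, y36), (x45, y45) = points[36], points[45]
    return math.degrees(math.atan2(y45 - y36, x45 - x36))


def eye_aspect_ratio(eye):
    """EAR of six eye landmarks p0..p5 (corners p0 and p3): the two vertical
    spans |p1 p5| and |p2 p4| over twice the horizontal span |p0 p3|."""
    p0, p1, p2, p3, p4, p5 = eye
    vertical = math.dist(p1, p5) + math.dist(p2, p4)
    return vertical / (2.0 * math.dist(p0, p3))


def eyes_closed(points, threshold=EAR_THRESHOLD):
    """True when the EAR averaged over both eyes is below the threshold."""
    ear_a = eye_aspect_ratio(points[36:42])  # eye on the image's left
    ear_b = eye_aspect_ratio(points[42:48])  # eye on the image's right
    return (ear_a + ear_b) / 2.0 < threshold
```

Using `atan2` rather than `atan(dy / dx)` avoids a division by zero and keeps the sign of the tilt, which matters because the alarm in Section 3.6 checks both tilt angle >= 20 and tilt angle <= -20.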


3.5. MAR Calculation

The mouth aspect ratio (MAR) is used to detect yawns. It is the vertical distance between the upper and lower lips divided by the horizontal width of the mouth. Yawning markedly increases the vertical distance between the upper and lower lips, whereas in a closed mouth this distance is small.

$$\mathrm{MAR} = \frac{|CD| + |EF| + |GH| + |IJ| + |KL|}{2\,|AB|} \qquad (2)$$

Equation (2) defines MAR [13]. Whenever MAR exceeds a fixed threshold of 0.5, the yawn count is incremented and the message “Yawning!” is displayed; when MAR falls below the threshold, the yawn count is reset to 0.

Fig. 6. Coordinates used to calculate MAR.
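A minimal sketch of Equation (2), assuming 0-based 68-point landmarks. The text does not list which points Fig. 6 labels A to L, so the mapping here is an assumption: |AB| is taken as the span between the outer mouth corners (48 and 54), and the five vertical spans as the outer-lip pairs directly above each other. Because the 0.5 threshold was set for the authors' own choice of points, it may need re-tuning for this mapping. All names are hypothetical.

```python
import math

MAR_THRESHOLD = 0.5  # threshold stated in Section 3.5

# Assumed landmark pairs for Equation (2) (Fig. 6 labels are not given in the text).
MOUTH_CORNERS = (48, 54)  # horizontal span AB: outer mouth corners
# Vertical spans CD, EF, GH, IJ, KL: each upper outer-lip point paired with
# the lower outer-lip point beneath it.
MOUTH_VERTICAL_PAIRS = [(49, 59), (50, 58), (51, 57), (52, 56), (53, 55)]


def mouth_aspect_ratio(points):
    """MAR as in Equation (2): sum of five vertical spans over 2 * |AB|."""
    vertical = sum(math.dist(points[a], points[b]) for a, b in MOUTH_VERTICAL_PAIRS)
    left, right = MOUTH_CORNERS
    return vertical / (2.0 * math.dist(points[left], points[right]))


def is_yawning(points, threshold=MAR_THRESHOLD):
    """True when MAR exceeds the yawn threshold."""
    return mouth_aspect_ratio(points) > threshold
```

Keeping the point pairs in module-level constants makes it easy to swap in a different mapping, for example inner-lip points, once the exact landmarks of Fig. 6 are known.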


3.6. Alarm Activation

The proposed system sounds an alarm to wake the driver when the eye count, yawn count, and head tilt angle exceed predetermined thresholds over a specific number of consecutive frames, which is interpreted as a sign that the driver may have fallen asleep. The alarm is triggered under three distinct conditions:

• yawn count >= 3 AND eye count >= 5

• eye count >= 5 AND (tilt angle >= 20 OR tilt angle <= -20)

• eye count >= 10
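The three alarm conditions above can be sketched as a small per-frame state machine. This is an illustration under assumptions, not the authors' code: the eye and yawn counts are treated as consecutive-frame counters that reset when the feature returns below (or above) its threshold, as described in Sections 3.4 and 3.5; the conditions are checked in the order listed, with the first match deciding the message; and the messages are those reported in Section 4. The class name `DrowsinessMonitor` is hypothetical.

```python
from dataclasses import dataclass

EAR_THRESHOLD = 0.23   # Section 3.4
MAR_THRESHOLD = 0.5    # Section 3.5
TILT_LIMIT_DEG = 20.0  # Section 3.6: tilt >= 20 or tilt <= -20


@dataclass
class DrowsinessMonitor:
    """Per-frame counters and the three alarm conditions of Section 3.6."""

    eye_count: int = 0   # consecutive frames with average EAR below threshold
    yawn_count: int = 0  # consecutive frames with MAR above threshold

    def update(self, ear, mar, tilt_deg):
        """Update the counters from one frame's features and return an alarm
        message, or None if no condition is met."""
        self.eye_count = self.eye_count + 1 if ear < EAR_THRESHOLD else 0
        self.yawn_count = self.yawn_count + 1 if mar > MAR_THRESHOLD else 0

        # Checked in the listed order; the first match wins (an assumption).
        if self.yawn_count >= 3 and self.eye_count >= 5:
            return "Drowsy after Yawn!"
        if self.eye_count >= 5 and abs(tilt_deg) >= TILT_LIMIT_DEG:
            return "Drowsy with Tilted Head!"
        if self.eye_count >= 10:
            return "Drowsy!"
        return None
```

In a capture loop, the caller would feed each frame's averaged EAR, MAR, and tilt angle to `update` and, whenever it returns a message, play the alarm sound and draw the message on the frame.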

4. Results and Discussion

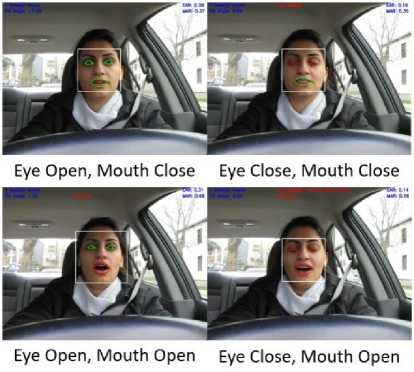

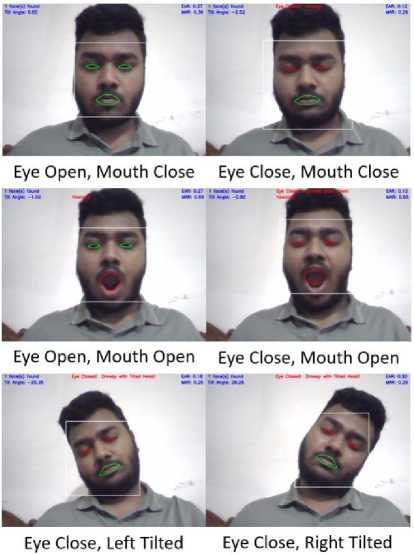

When the first condition is met, the screen shows the warning “Drowsy after Yawn!”; when the second is met, it shows “Drowsy with Tilted Head!”; and when the third is met, it shows “Drowsy!”. These conditions and their outputs are shown in Fig. 7 and Fig. 8.

Fig. 7. Four separate cases of drowsiness detection on a dataset video.

Fig. 8. Six separate cases of drowsiness detection on a real-time video.

This section describes the data used for testing and analysis. The YawDD dataset [25] was the primary data source for evaluating the system. A subset of 28 YawDD videos was used, and ten images were extracted from each video for each of four conditions (Yawn, No Yawn, Open Mouth, and Closed Mouth), giving 1120 images in total (28 × 4 × 10).

Table 1. Obtained results.

| Feature | Accuracy (dataset) | Accuracy (real-time) |
|---|---|---|
| Blink detection | 98.39% | 89.48% |
| Yawn detection | 99.11% | 90.83% |

Table 1 reports the recognition accuracy of blink (eye closure) detection and yawn detection, evaluated on both a standard dataset and in real-time scenarios. The head tilt angle was evaluated only in real-time scenarios.

Real-time results were computed from recordings of eleven participants, nine male and two female, each recorded at a different location. The reported mean covers the entire sample, including participants with and without glasses. In each trial, the video frames contain both drowsy and non-drowsy states.

Table 2. Comparison of the proposed method with similar techniques.

| Article | Year | Facial features | Model | Results |
|---|---|---|---|---|
| [10] | 2019 | Eye | Dlib | Eye detection average accuracy rate of 94%. |
| [11] | 2023 | Eye | Dlib | Eye detection average accuracy rate of 94.80%. |
| [12] | 2023 | Eye | Dlib | Blink detection rate of 81% on the dataset. |
| [13] | 2023 | Eye | Dlib | Eye detection with 99% accuracy. |
| [14] | 2021 | Eye | Dlib | Eye detection accuracy of 98%. |
| [15] | 2023 | Eye | Dlib | Eye detection accuracy of approx. 92.5% on the yawning dataset (YawDD). |
| [16] | 2019 | Eye and mouth | Dlib | Blink detection rate of 93.25% (dataset) and 82.02% (real-time); yawn detection rate of 96.71% (dataset) and 85.44% (real-time). |
| [17] | 2021 | Eye and mouth | Dlib | Fatigue accuracy of 97% in real-time scenarios. |
| [18] | 2019 | Eye and mouth | Dlib | The proposed app (DriCare) achieved around 92% accuracy. |
| [19] | 2018 | Eye and mouth | Dlib | Fatigue detection accuracy of up to 97.93%. |
| [20] | 2018 | Eye and mouth | Dlib | Eye and mouth accuracy ranges from 85% to 95%. |
| Proposed system | 2024 | Eye, mouth, and head pose | Dlib | Blink detection rate of 98.39% (dataset) and 89.48% (real-time); yawn detection rate of 99.11% (dataset) and 90.83% (real-time). |

Table 2 compares the proposed system with related work, which mainly focuses on eye or mouth detection using the Dlib library. Some of these papers use only eye features, while others use both eye and mouth features to detect blinking and yawning; none combines eye, mouth, and head pose features. Many of them evaluate drowsiness detection only on real-time video, whereas the proposed Dlib-based system is evaluated on both real-time and dataset videos. The proposed system's dataset accuracies (98.39% for blinks and 99.11% for yawns) are higher than most results in Table 2, and it is the only listed system that also uses head pose.

We attempted several techniques to fix the failure shown in Fig. 9, but none worked. First, we computed a face aspect ratio from the landmarks of the left and right eyebrows and the jawline; however, the threshold for this ratio differs between males and females. We also tried measuring the gap between the jawline and the upper lip, but its threshold varies with the camera distance.

Fig. 9. The system failed to detect yawning when the mouth was opened too much.

5. Conclusion

This study used Dlib's pre-trained 68-point facial landmark detector together with a face detector based on the Histogram of Oriented Gradients (HOG). The driver's blinking pattern was tracked with the Eye Aspect Ratio (EAR), yawning was detected with the Mouth Aspect Ratio (MAR), and the head tilt angle was measured in each frame of the continuous video stream. On real-time videos, Dlib-based blink and yawn detection achieved average test accuracies of 89.48% and 90.83%, respectively; on dataset images, the same approach reached 98.39% and 99.11%.

Detection rates are lower in real time than on the dataset. The model performs well under optimal, near-optimal, and moderate lighting; the lower real-time rates are likely due to the varied lighting encountered during real-time testing, whereas the dataset images were captured under optimal lighting, which likely explains their higher detection rate.

Future work will focus on making the model effective in sub-optimal lighting, both poor and mediocre, by incorporating infrared or night-vision camera technology. We also plan to compare alternative libraries, such as MediaPipe and Keras, to identify differences and similarities in their performance. Ultimately, we aim to develop an optimal algorithm for an IoT device with advanced software capabilities that provides valuable services to drivers and improves road safety.

References

[1] K. Sakthidasan Sankaran, N. Vasudevan, and V. Nagarajan. Driver Drowsiness Detection using Percentage Eye Closure Method. In 2020 International Conference on Communication and Signal Processing (ICCSP), pages 1422–1425, July 2020.

[2] Samsur Rahman. Road accidents kill highest number of students in 2022, January 2023. Available: https://en.prothomalo.com/bangladesh/accident/nr3n0krxfx

[3] Mohammad Mahbub Alam Talukder, Md Shahidul Islam, Ishtiaque Ahmed, and Md Asif Raihan. Causes of Truck and Cargo Drivers’ Fatigue in Bangladesh. Jurnal Teknologi, 65(3), October 2013.

[4] Madaripur crash: Exhaustion from driving 33 hours led to accident. Available: https://www.dhakatribune.com/nation/2023/03/20/madaripur-crash-exhaustion-from-driving-33-hours-led-to-accident

[5] Mehrdad Sabet, Reza A. Zoroofi, Khosro Sadeghniiat-Haghighi, and Maryam Sabbaghian. A new system for driver drowsiness and distraction detection. In 20th Iranian Conference on Electrical Engineering (ICEE2012), pages 1247–1251, May 2012. ISSN: 2164-7054.

[6] Antoine Picot, Sylvie Charbonnier, and Alice Caplier. Drowsiness detection based on visual signs: blinking analysis based on high frame rate video. In 2010 IEEE Instrumentation & Measurement Technology Conference Proceedings, pages 801–804, May 2010. ISSN: 1091-5281.

[7] Jay D. Fuletra and Dulari Bosamiya. A Survey on Drivers Drowsiness Detection Techniques. International Journal on Recent and Innovation Trends in Computing and Communication, 1(11):816–819, November 2013.

[8] Yong Du, Peijun Ma, Xiaohong Su, and Yingjun Zhang. Driver Fatigue Detection based on Eye State Analysis. Pages 132–137. Atlantis Press, December 2008. ISSN: 1951-6851.

[9] Shinfeng D. Lin, Jia-Jen Lin, and Chin-Yao Chung. Sleepy Eye’s Recognition for Drowsiness Detection. In 2013 International Symposium on Biometrics and Security Technologies, pages 176–179, July 2013.

[10] Feng You, Xiaolong Li, Yunbo Gong, Hailwei Wang, and Hongyi Li. A Real-time Driving Drowsiness Detection Algorithm With Individual Differences Consideration. IEEE Access, 7:179396–179408, 2019.

[11] Ruben Florez, Facundo Palomino-Quispe, Roger Jesus Coaquira-Castillo, Julio Cesar Herrera-Levano, Thuanne Paixão, and Ana Beatriz Alvarez. A CNN-Based Approach for Driver Drowsiness Detection by Real-Time Eye State Identification. Applied Sciences, 13(13):7849, January 2023.

[12] Jafirul Islam Jewel, Md. Mahabub Hossain, and Md. Dulal Haque. Design and Implementation of a Drowsiness Detection System Up to Extended Head Angle Using FaceMesh Machine Learning Solution. In Md. Shahriare Satu, Mohammad Ali Moni, M. Shamim Kaiser, and Mohammad Shamsul Arefin, editors, Machine Intelligence and Emerging Technologies, Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, pages 79–90, Cham, 2023. Springer Nature Switzerland.

[13] Khuzaimah Rabiah Mahamad Khariol Nizar and Mohamad Hairol Jabbar. Driver Drowsiness Detection with an Alarm System using a Webcam. Evolution in Electrical and Electronic Engineering, 4(1):87–96, May 2023.

[14] Nageshwar Nath Pandey and Naresh Babu Muppalaneni. Real-Time Drowsiness Identification based on Eye State Analysis. In 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), pages 1182–1187, March 2021.

[15] Tasawor Ahmed Sofi and Shabana Mehfuz. Drowsiness and fatigue detection using multi-feature fusion. In 2023 International Conference on Recent Advances in Electrical, Electronics & Digital Healthcare Technologies (REEDCON), pages 672–675, May 2023.

[16] Shruti Mohanty, Shruti V Hegde, Supriya Prasad, and J. Manikandan. Design of Real-time Drowsiness Detection System using Dlib. In 2019 IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), pages 1–4, November 2019.

[17] Jithina Jose, J S Vimali, P Ajitha, S Gowri, A Sivasangari, and Bevish Jinila. Drowsiness Detection System for Drivers Using Image Processing Technique. In 2021 5th International Conference on Trends in Electronics and Informatics (ICOEI), pages 1527–1530, June 2021.

[18] Wanghua Deng and Ruoxue Wu. Real-Time Driver-Drowsiness Detection System Using Facial Features. IEEE Access, 7:118727–118738, 2019.

[19] Burcu Kır Savaş and Yaşar Becerikli. Real Time Driver Fatigue Detection Based on SVM Algorithm. In 2018 6th International Conference on Control Engineering & Information Technology (CEIT), pages 1–4, October 2018.

[20] Rateb Jabbar, Khalifa Al-Khalifa, Mohamed Kharbeche, Wael Alhajyaseen, Mohsen Jafari, and Shan Jiang. Realtime Driver Drowsiness Detection for Android Application Using Deep Neural Networks Techniques. Procedia Computer Science, 130:400–407, January 2018.

[21] Vandna Saini and Rekha Saini. Driver drowsiness detection system and techniques: a review. International Journal of Computer Science and Information Technologies, 5(3):4245–4249, 2014.

[22] Davis E. King. davisking/dlib-models (GitHub repository), March 2024. Available: https://github.com/davisking/dlib-models.

[23] Pavel Korshunov and Sébastien Marcel. Speaker Inconsistency Detection in Tampered Video. In 2018 26th European Signal Processing Conference (EUSIPCO), pages 2375–2379, September 2018. ISSN: 2076-1465.

[24] N. Dalal and B. Triggs. Histograms of Oriented Gradients for Human Detection. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), volume 1, pages 886–893, San Diego, CA, USA, 2005. IEEE.

[25] Shabnam Abtahi, Mona Omidyeganeh, Shervin Shirmohammadi, and Behnoosh Hariri. YawDD: a yawning detection dataset. In Proceedings of the 5th ACM Multimedia Systems Conference, MMSys ’14, pages 24–28, New York, NY, USA, March 2014. Association for Computing Machinery.