Advanced Mobile Surveillance System for Multiple People Tracking

Authors: Sridhar Bandaru, Amarjot Singh

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Published in issue: No. 5, Vol. 5, 2013.

Open access

The paper develops an efficient people surveillance system capable of tracking multiple people on different terrains. Video recorded on rough terrain is affected by jitter, which introduces a significant error between the desired and the captured video flow. Video stabilization is achieved by calculating the motion and compensation parameters using the LSE analytical solution, which minimizes the error between the present and the desired output video captured by the camera of an autonomous robot moving over rough terrain for surveillance of unidentified people. To the best of our knowledge, this is the first paper to use this method to design a mobile wireless robot for human surveillance applications. Because the method is faster than conventional methods, the proposed system is more efficient than previous surveillance systems. The superiority of the method is demonstrated using evaluation parameters such as RMSCD, variability and reliability. The system can be used for surveillance of people under different environmental conditions.

Video-Stabilization, Motion Parameters, Compensation, Error Minimization

Short URL: https://sciup.org/15010423

IDR: 15010423


Published Online April 2013 in MECS

Intelligent image processing systems are becoming increasingly important and have been applied in fields such as biomechanics [1], [2], education [3], [4], [5], [6], medicine [7], [8], [9], photography [10], biometrics [11], [12], [13], [14], [15], [16], [17], motion tracking [18], [19], [20], [21], [22], [23], [24], defense [25], surveillance [26], character recognition [27], [28], [29] and bioinformatics [30], [31]. The development of intelligent systems based on mobile sensors has been driven by the increasing need for automated surveillance of indoor environments such as airports [32], warehouses [33] and production plants [34]. Unlike conventional fixed surveillance devices, systems based on mobile robots are still at an early stage of development. The potential of surveillance systems is considerably increased by the use of robots, which can not only detect events and trigger alarms but can also interact with the environment, with humans or with other robots for more complex cooperative [35] and active surveillance.

A number of mobile security platforms have been introduced in the past for applications in different areas. The Mobile Detection Assessment and Response System (MDARS) [34] is a multi-robot system used to inspect warehouses and storage sites, identify anomalous situations such as flooding and fire, detect intruders, and determine the status of inventoried objects using specified RF transponders. The Airport Night Surveillance Expert Robot (ANSER) [32] is an Unmanned Ground Vehicle (UGV) that uses a non-differential GPS unit for night patrols in civilian airports and similar wide areas, communicating with a fixed supervision station under the control of a human operator. At the Learning Systems Laboratory of AASS, a research project has focused on a Robot Security Guard [34], aimed at developing a mobile robot platform for remote surveillance of indoor environments. The system is able to patrol a given environment, acquire and update maps, keep watch over valuable objects, recognize people, discriminate intruders from known persons, and provide remote human operators with a detailed sensory analysis. Autonomous robots have also been used to deliver crucial information such as the location of fissures [35], [36] and target locations after dynamite explosions [37], and to access areas where human survival is difficult.

A great need for mobile human surveillance has emerged owing to the increasing requirement to track and follow criminals [38] and to support social control [39].

In many applications, the camera mounted on the robot produces jitter in the streamed video because the robot moves over uneven, random terrain. Further complications arise on rough terrain from its geography and from obstructions such as slopes, steps and turns. These problems cause the camera to shake severely and disturb the video flow. To extract the most information from the video stream, a stabilized video is required.

Video stabilization is an important technique for reducing translational and rotational distortions in moving-platform applications. The algorithm searches for the object within a specified region of each frame and reduces the displacement by fixing the view on the object. The stabilization process thus aims to compensate for the disturbing motions in the video frames.

A number of methods have been used for video stabilization in the past. Previous methods include motion imprinting, which is based on mosaicking with a consistency constraint [37]; video stabilization based on a 3D perspective model, which computes the motion parameters, stabilizes them using filters and warps the resultant video to produce the output [40]; and Non-Metric Image-Based Rendering for Video Stabilization [41].

In this paper, we develop an efficient people surveillance system capable of tracking multiple people on different terrains. Video stabilization is achieved by calculating the motion and compensation parameters using the LSE analytical solution, which minimizes the error between the present and the desired output video captured by the camera of an autonomous robot moving over rough terrain for surveillance of unidentified people. A real-time scheme is used to stabilize the undesired fluctuations in the position of the surveillance video.

The rest of the paper is organized as follows. Section II describes the design of the robot, and Section III explains the video stabilization algorithm. Section IV presents the evaluation metrics used for the algorithm, Section V discusses the results, and Section VI gives a brief summary of the paper.


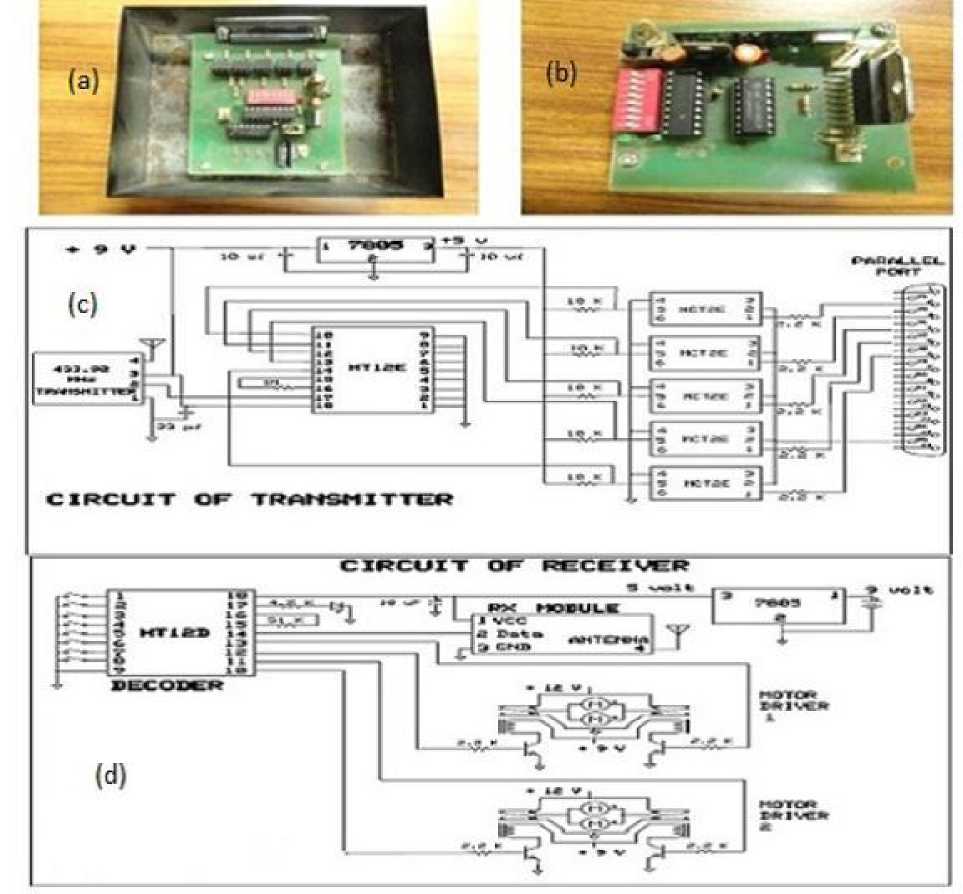

Fig. 1: (a) Transmitter Circuit of Wireless Robot (b) Receiver Circuit of Wireless Robot (c) Datasheet of Transmitter Circuit of Wireless Robot (d) Datasheet of Receiver Circuit of Wireless Robot


II. Robot Design

The mobile surveillance robot has a 16 cm by 16 cm chassis with a camera mounted on it. It is controlled through a PC-controlled transmitter and receiver pair: the transmitter is connected to the personal computer, while the receiver is placed on the robot. Once the target person is recognized, video is transmitted to the control room, and the controller steers the robot using the transmitter. The transmitter (Fig. 1 (a)) uses an HT12E, which encodes the signal to be sent to the receiver (Fig. 1 (b)), and is connected to the PC through MCT2E integrated circuit chips. The information is encoded as a 12-bit signal sent over a serial channel; the signal combines 8 address bits and 4 data bits. It is received by the HT12D receiver, which interprets it as 8 address bits and 4 data bits. If the address bits of the receiver match the address bits sent by the transmitter, the data bits are processed further and drive the movement of the robot. The detailed circuit diagrams of the transmitter and the receiver are shown in Fig. 1 (c) and Fig. 1 (d).
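The address check described above can be modelled in software. The following is a minimal sketch rather than the circuit's actual logic, since the HT12E and HT12D perform the encoding and comparison in hardware. The function names `encode_frame` and `decode_frame` and the example address are illustrative assumptions, and the address bits are assumed to occupy the most significant positions of the 12-bit word.

```python
ADDRESS_BITS = 8  # address field width stated in the text
DATA_BITS = 4     # data field width stated in the text


def encode_frame(address: int, data: int) -> int:
    """Pack an 8-bit address and a 4-bit data value into one 12-bit word.

    Assumption: the address occupies the 8 most significant bits.
    """
    if not 0 <= address < (1 << ADDRESS_BITS):
        raise ValueError("address must fit in 8 bits")
    if not 0 <= data < (1 << DATA_BITS):
        raise ValueError("data must fit in 4 bits")
    return (address << DATA_BITS) | data


def decode_frame(word: int, receiver_address: int):
    """Return the 4 data bits if the address matches, otherwise None."""
    address = word >> DATA_BITS
    data = word & ((1 << DATA_BITS) - 1)
    return data if address == receiver_address else None


# Hypothetical example values: address 0b11110011, data 0b1111.
word = encode_frame(0b11110011, 0b1111)
print(format(word, "012b"))
print(decode_frame(word, receiver_address=0b11110011))  # address matches
print(decode_frame(word, receiver_address=0b00000000))  # address differs
```

A receiver configured with a different address ignores the frame, which is what allows several robots to share one radio channel.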

III. Video Stabilization Algorithm

3.1 Motion Model

The stabilization algorithm [42] consists of image segmentation blocks that compute the local and global motion vectors. The parameter estimation block takes its input from the image segmentation block. Once the parameters required by the mathematical model have been computed, a smoothing procedure corrects the images, producing the desired stabilized video flow. The key components of the algorithm are therefore the model parameter, smoothing and correction blocks. In this section, we describe the motion model that represents the camera trajectory for estimating the motion parameters.

Motion model selection aims to find the best description of the differences between two successive frames. The motion model uses these differences to compute the changes in the scene in order to align two adjacent frames.

The method adopted uses an affine model to describe the rotations, translations and panning between successive video frames, owing to its computational simplicity and satisfactory performance under operating conditions. The difference in pixel location between successive frames is given by the transformation:

$$
\begin{bmatrix} u \\ v \end{bmatrix} = \delta \begin{bmatrix} \cos\alpha & -\sin\alpha \\ \sin\alpha & \cos\alpha \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} + \begin{bmatrix} d_u \\ d_v \end{bmatrix} \qquad (1)
$$

where $(u, v)$ are the coordinates of the pixel in the present frame, $(x, y)$ are the coordinates of the respective pixel in the reference frame, $\alpha$ and $(d_u, d_v)$ are the global rotational and translational parameters between the two corresponding images, and $\delta$ represents the depth of focus. The trajectory of the camera can be modeled by computing these 4 unknown parameters.

To calculate these motion parameters, the corresponding pixels must be determined through the global motion vectors, which are described at length below. The global motion compensation parameters do not sufficiently represent the moving objects within a frame: they describe the motion of the camera along its trajectory but do not account for objects moving within the frames.

Local motion estimation is used to calculate the change in position of objects within the frame, since this cannot be obtained from the global motion parameters. It detects the moving objects in the frames and estimates the compensation parameters for stabilization. Together, the two sets of parameters compensate for the motion of the camera as well as that of the objects.

The camera motion can be represented by three types of motion: rotations, translations and panning. The global motion vector represents all three camera motions if the camera is treated as a single object. It is not measured with extra instruments, because add-ons increase the weight on the robot, which is unacceptable as it increases the complexity of the system. The best way to compute the global motion vectors is from successive frames of the video flow. Further, the global motion vectors equal the local motion vectors when there are no moving parts in the frames, because any change in the position of an object within a frame is then due only to the change in position of the camera, which is captured by the global motion parameters. The local motion vectors are calculated by dividing the whole image into small regions, and the global motion vectors are then deduced from the local motion vectors. For example, the whole image can be divided into N equal parts, with the motion vector of each part represented by a search block S. Note that N should be larger than the number of parameters in the affine model. To calculate the local motion vector, we use the sum of absolute differences (SAD) as the matching criterion. The local motion vector is evaluated as:

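$$
\min_{(u,v)} \mathrm{SAD}(u, v) = \min_{(u,v)} \sum_{i=1}^{w} \sum_{j=1}^{w} \left| f(i, j, t) - f(i-u,\, j-v,\, t-1) \right| \qquad (2)
$$

In the above equation, $f(i, j, t)$ represents the pixel value at $(i, j)$ in the frame at time $t$, and the size of each block is given by $w$.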
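The SAD criterion above can be sketched as an exhaustive block search. This is a minimal illustration under stated assumptions, not the authors' implementation: frames are plain nested lists of gray values, `i` indexes rows and `j` indexes columns, and the function names, the search range `search` and the synthetic test frames are all hypothetical.

```python
import random


def sad(curr, prev, top, left, w, u, v):
    """Sum of absolute differences between the w-by-w block of `curr` at
    (top, left) and the block of `prev` displaced by (-u, -v)."""
    return sum(
        abs(curr[i][j] - prev[i - u][j - v])
        for i in range(top, top + w)
        for j in range(left, left + w)
    )


def local_motion_vector(curr, prev, top, left, w, search):
    """Test every displacement in [-search, search]^2 and return the (u, v)
    with minimum SAD together with that SAD value."""
    rows, cols = len(prev), len(prev[0])
    best_uv, best_sad = None, None
    for u in range(-search, search + 1):
        for v in range(-search, search + 1):
            # Skip displacements whose reference block leaves the frame.
            if not (0 <= top - u and top - u + w <= rows):
                continue
            if not (0 <= left - v and left - v + w <= cols):
                continue
            score = sad(curr, prev, top, left, w, u, v)
            if best_sad is None or score < best_sad:
                best_uv, best_sad = (u, v), score
    return best_uv, best_sad


# Synthetic frames: the current frame is the previous one moved by
# u = 2 rows and v = -3 columns (wrapping at the borders).
rng = random.Random(0)
prev_frame = [[rng.randrange(256) for _ in range(32)] for _ in range(32)]
curr_frame = [[prev_frame[(i - 2) % 32][(j + 3) % 32] for j in range(32)]
              for i in range(32)]
mv, score = local_motion_vector(curr_frame, prev_frame,
                                top=10, left=10, w=8, search=4)
print(mv, score)
```

Exhaustive search is the simplest choice and costs on the order of `(2 * search + 1)**2 * w**2` operations per block; a real-time system would typically restrict the search range or use a faster search pattern.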

3.2 Calculating the Global Motion Vectors using LSE

Equation (2) gives the local motion vector as the coordinates of the point at which the SAD is minimum. This estimation of motion vectors generates errors when there are moving objects in the video flow. The LSE analytical solution has therefore been adopted to obtain more precise results: it selects the global motion parameters that give the least error. Let

$$
F_n = \begin{bmatrix} u \\ v \end{bmatrix}, \qquad F_{n-1} = \begin{bmatrix} x \\ y \end{bmatrix}, \qquad P = \begin{bmatrix} \delta\cos\alpha & -\delta\sin\alpha \\ \delta\sin\alpha & \delta\cos\alpha \end{bmatrix}
$$

With $c = \delta\cos\alpha$ and $b = \delta\sin\alpha$, the parameters are obtained as:

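$$
\begin{aligned}
d_u &= (U' - c \cdot U + b \cdot V) / N \\
d_v &= (V' - c \cdot V - b \cdot U) / N \\
\delta &= \sqrt{c^2 + b^2} \\
\alpha &= \arctan(b / c)
\end{aligned} \qquad (3)
$$

$$ c = \frac{N \cdot UU' - U \cdot U' + N \cdot VV' - V \cdot V'}{N \cdot UU - U \cdot U + N \cdot VV - V \cdot V} \qquad (4) $$

$$ b = \frac{N \cdot UV' - U \cdot V' - N \cdot U'V + U' \cdot V}{N \cdot UU - U \cdot U + N \cdot VV - V \cdot V} \qquad (5) $$

$$ N = \sum 1 \qquad (6) $$

$$ U = \sum u \qquad (7) $$

$$ V = \sum v \qquad (8) $$

$$ U' = \sum \left( u + mv^{u}_{u,v} \right) \qquad (9) $$

$$ V' = \sum \left( v + mv^{v}_{u,v} \right) \qquad (10) $$

$$ UU = \sum u \cdot u \qquad (11) $$

$$ VV = \sum v \cdot v \qquad (12) $$

$$ UU' = \sum u \left( u + mv^{u}_{u,v} \right) \qquad (13) $$

$$ VV' = \sum v \left( v + mv^{v}_{u,v} \right) \qquad (14) $$

$$ UV' = \sum u \left( v + mv^{v}_{u,v} \right) \qquad (15) $$

$$ U'V = \sum v \left( u + mv^{u}_{u,v} \right) \qquad (16) $$

where $u$ and $v$ are the central pixel coordinates of a search block in the previous frame, and $mv^{u}_{u,v}$ and $mv^{v}_{u,v}$ are the local motion vectors in the $u$ and $v$ directions. In these sums, $(u, v)$ play the role of the reference-frame coordinates $(x, y)$ of Eq. (1), and the displaced coordinates $(u + mv^{u}_{u,v},\, v + mv^{v}_{u,v})$ play the role of the present-frame coordinates.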
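The closed-form solution in Eqs. (3) to (16) can be checked numerically. The sketch below is an illustration under assumptions, not the authors' code: the function name `fit_global_motion`, the 3-by-3 grid of block centres and the "true" parameter values are hypothetical, and the local motion vectors are generated synthetically from the affine model so that the fit should recover the parameters exactly.

```python
import math


def fit_global_motion(points, vectors):
    """Closed-form LSE fit of (delta, alpha, d_u, d_v).

    points  -- list of (u, v) block centres in the previous frame
    vectors -- list of (mv_u, mv_v) local motion vectors for those blocks
    """
    # Displaced block centres (u + mv_u, v + mv_v).
    moved = [(u + mu, v + mv) for (u, v), (mu, mv) in zip(points, vectors)]

    N = len(points)
    U = sum(u for u, _ in points)
    V = sum(v for _, v in points)
    Up = sum(up for up, _ in moved)
    Vp = sum(vp for _, vp in moved)
    UU = sum(u * u for u, _ in points)
    VV = sum(v * v for _, v in points)
    UUp = sum(u * up for (u, _), (up, _) in zip(points, moved))
    VVp = sum(v * vp for (_, v), (_, vp) in zip(points, moved))
    UVp = sum(u * vp for (u, _), (_, vp) in zip(points, moved))
    UpV = sum(v * up for (_, v), (up, _) in zip(points, moved))

    # The denominator is zero only if all block centres coincide.
    denom = N * UU - U * U + N * VV - V * V
    c = (N * UUp - U * Up + N * VVp - V * Vp) / denom
    b = (N * UVp - U * Vp - N * UpV + Up * V) / denom
    d_u = (Up - c * U + b * V) / N
    d_v = (Vp - c * V - b * U) / N
    delta = math.hypot(c, b)
    alpha = math.atan2(b, c)  # equals arctan(b / c) when c > 0
    return delta, alpha, d_u, d_v


# Synthetic check with hypothetical "true" parameters.
true_delta, true_alpha, true_du, true_dv = 1.02, 0.05, 3.0, -2.0
c0 = true_delta * math.cos(true_alpha)
b0 = true_delta * math.sin(true_alpha)
points = [(float(u), float(v)) for u in (16, 48, 80) for v in (16, 48, 80)]
vectors = [(c0 * u - b0 * v + true_du - u, b0 * u + c0 * v + true_dv - v)
           for u, v in points]
delta, alpha, d_u, d_v = fit_global_motion(points, vectors)
print(delta, alpha, d_u, d_v)
```

With noise-free vectors the four parameters are recovered up to rounding error; with moving objects in the scene some vectors become outliers and the fit degrades, which is the error source the text describes.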

3.3 Parameter Estimation and Error Optimization

The LSE analytical solution returns the best-fit curve, that is, the curve for which the sum of the squared deviations (the least square error) is minimal for a given set of data. This minimization of the error gives the global motion parameters. With

$$ Q = \begin{bmatrix} d_u \\ d_v \end{bmatrix} $$

Equation (1) can be represented as

$$ F_n = P \cdot F_{n-1} + Q $$

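This equation represents only the motion between the previous frame and the current frame. To represent the motion of the video flow from the first frame to the present frame $n$, the equation is modified as

$$
F_n = P_n \cdot F_{n-1} + Q_n = \left( \prod_{k=n}^{1} P_k \right) F_0 + \sum_{k=1}^{n} \left( \prod_{m=n}^{k+1} P_m \right) Q_k = \bar{P}_n F_0 + \bar{Q}_n
$$

where $\bar{P}_n$ and $\bar{Q}_n$ are the accumulated transformation matrix and translation from the first frame $F_0$, and $\bar{Q}_n$ represents the translational movement in the $u$ and $v$ directions. The products are taken in descending index order, and the empty product (for $k = n$) is the identity.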
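The accumulation from the first frame can be written as a simple running update, which avoids recomputing the products for every frame. This is a sketch under assumptions: the helper names `mat_mul`, `mat_vec` and `accumulate` are hypothetical, matrices are 2-by-2 nested tuples, and the three per-frame steps are made-up values chosen so the result is easy to verify by hand.

```python
def mat_mul(A, B):
    """Product of two 2x2 matrices given as nested tuples."""
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def mat_vec(A, x):
    """Product of a 2x2 matrix and a 2-vector."""
    return tuple(sum(A[i][k] * x[k] for k in range(2)) for i in range(2))


def accumulate(steps):
    """Fold per-frame (P_k, Q_k), k = 1..n, into (P_bar, Q_bar) such that
    F_n = P_bar F_0 + Q_bar."""
    P_bar = ((1.0, 0.0), (0.0, 1.0))
    Q_bar = (0.0, 0.0)
    for P, Q in steps:
        # F_k = P_k (P_bar F_0 + Q_bar) + Q_k
        Q_bar = tuple(a + b for a, b in zip(mat_vec(P, Q_bar), Q))
        P_bar = mat_mul(P, P_bar)
    return P_bar, Q_bar


# Hypothetical per-frame motions.
steps = [
    (((0.0, -1.0), (1.0, 0.0)), (1.0, 2.0)),  # 90-degree rotation plus shift
    (((2.0, 0.0), (0.0, 2.0)), (0.0, -1.0)),  # scale by 2 plus shift
    (((1.0, 0.0), (0.0, 1.0)), (3.0, 3.0)),   # pure translation
]
F0 = (10.0, 20.0)

# Frame-by-frame application of F_k = P_k F_{k-1} + Q_k.
F = F0
for P, Q in steps:
    F = tuple(a + b for a, b in zip(mat_vec(P, F), Q))

# Single application of the accumulated transform.
P_bar, Q_bar = accumulate(steps)
F_direct = tuple(a + b for a, b in zip(mat_vec(P_bar, F0), Q_bar))
print(F, F_direct)
```

Both routes give the same point, confirming that the running update reproduces the product and sum in the accumulated equation.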

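To minimize the error, we use the weighted least squares algorithm and calculate the compensation parameters by minimizing

$$ E = \sum_{i=0}^{n} w_i \left( p\,u_i + q - v_i \right)^2 $$

which gives

$$ p = \frac{\sum_i w_i \sum_i w_i u_i v_i - \sum_i w_i u_i \sum_i w_i v_i}{\sum_i w_i \sum_i w_i u_i^2 - \left( \sum_i w_i u_i \right)^2} $$

$$ q = \frac{\sum_i w_i v_i \sum_i w_i u_i^2 - \sum_i w_i u_i \sum_i w_i u_i v_i}{\sum_i w_i \sum_i w_i u_i^2 - \left( \sum_i w_i u_i \right)^2} $$

Using these expressions for $p$ and $q$, we compensate the error in the $u$ and $v$ directions; the same compensation method can be used for rotational movement.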
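The weighted fit above amounts to a weighted straight-line regression of $v$ on $u$. The following sketch evaluates the closed-form expressions for $p$ and $q$ directly; the function name `weighted_line_fit`, the sample data and the weights are hypothetical, since the text does not state how the weights are chosen.

```python
def weighted_line_fit(u, v, w):
    """Return (p, q) minimising sum_i w_i * (p * u_i + q - v_i) ** 2."""
    Sw = sum(w)
    Swu = sum(wi * ui for wi, ui in zip(w, u))
    Swv = sum(wi * vi for wi, vi in zip(w, v))
    Swuu = sum(wi * ui * ui for wi, ui in zip(w, u))
    Swuv = sum(wi * ui * vi for wi, ui, vi in zip(w, u, v))

    # The denominator is zero only if all u_i with non-zero weight coincide.
    denom = Sw * Swuu - Swu ** 2
    p = (Sw * Swuv - Swu * Swv) / denom
    q = (Swv * Swuu - Swu * Swuv) / denom
    return p, q


# Hypothetical data lying exactly on v = 2u + 1, with arbitrary weights.
u = [0.0, 1.0, 2.0, 3.0, 4.0]
v = [2.0 * ui + 1.0 for ui in u]
w = [1.0, 2.0, 1.0, 0.5, 0.5]
p, q = weighted_line_fit(u, v, w)
print(p, q)
```

Because the sample points lie exactly on a line, any positive weights recover the same slope and intercept; the weights only matter once the data are noisy, where larger weights pull the fit toward the corresponding samples.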

IV. Evaluation Measures

To quantify the registration accuracy of the method, the root-mean-squared color difference (RMSCD) between registered images is used [43]:

$$
\mathrm{RMSCD} = \left\{ \frac{1}{rc} \sum_{i=1}^{r} \sum_{j=1}^{c} \left[ \left( R_{k+1}[i,j] - R_k[i,j] \right)^2 + \left( G_{k+1}[i,j] - G_k[i,j] \right)^2 + \left( B_{k+1}[i,j] - B_k[i,j] \right)^2 \right] \right\}^{1/2}
$$

Here, $R_k$, $G_k$ and $B_k$ are the red, green and blue components of frame $k$, $V_k$, and $r$ and $c$ are the numbers of rows and columns of the image. If the images to be registered are gray-scale, the root-mean-squared intensity difference between the images is used instead to quantify the registration accuracy. In general, a smaller RMSCD indicates a more accurate registration, provided the area covered by the moving targets is much smaller than the background area. Further, we denote $V_k$ as the reference image and $V_{k+1}$ as the test image. Note that $V_k$ and $V_{k+1}$ need not be consecutive frames; $V_{k+1}$ may be any frame that appears after $V_k$ in the sequence.

The standard deviation of the RMSCD over several registrations is calculated to ascertain the variability of the registration method. The smaller the variability, the more stable the registration. The behavior of a stable registration is predictable, which is desirable.

The registered images are then visually examined to determine the registration reliability, and incorrectly registered images are identified. Reliability is quantified as the number of correctly registered images divided by the number of registrations attempted in a video. A reliability of 1 is required for successful tracking.
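The three evaluation measures can be sketched in a few lines. This is an illustration under assumptions rather than the evaluation code used in the paper: frames are nested lists of (R, G, B) tuples, the function names are hypothetical, and the population standard deviation is assumed for variability because the text does not say which form is used.

```python
import math
import statistics


def rmscd(frame_a, frame_b):
    """Root-mean-squared colour difference between two equally sized frames.

    Each frame is a list of rows; each pixel is an (R, G, B) tuple.
    """
    r, c = len(frame_a), len(frame_a[0])
    total = sum(
        (pa[ch] - pb[ch]) ** 2
        for row_a, row_b in zip(frame_a, frame_b)
        for pa, pb in zip(row_a, row_b)
        for ch in range(3)
    )
    return math.sqrt(total / (r * c))


def variability(rmscd_values):
    """Standard deviation of the RMSCD over several registrations.

    Assumption: population standard deviation.
    """
    return statistics.pstdev(rmscd_values)


def reliability(correct, attempted):
    """Fraction of registrations judged correct by visual inspection."""
    return correct / attempted


# Tiny hypothetical 2x1 frames.
frame_k = [[(0, 0, 0)], [(10, 10, 10)]]
frame_k1 = [[(3, 4, 0)], [(10, 13, 14)]]
print(rmscd(frame_k, frame_k1))
print(variability([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]))
print(reliability(97, 100))
```

Only RMSCD and variability can be computed automatically; the count of correct registrations passed to `reliability` still comes from the visual examination described above.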


V. Results

The results obtained from the simulation allow us to evaluate the capability of the surveillance system. A four-wheeled, camera-mounted robot is used for surveillance of people on random terrains. The system operates at 30 frames per second with a resolution of 640×480 pixels. The stabilization results are simulated on a captured video clip with a frame rate of 25, and the results for different frames are shown in the figure.

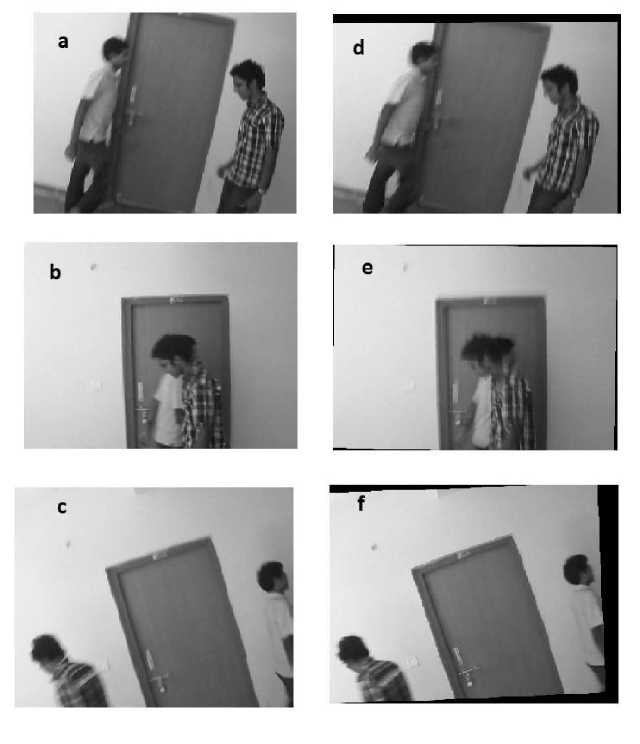

The video stabilization algorithm is applied at frames 10, 40 and 65, as shown in Fig. 2. It is evident from Fig. 2 (a), 2 (b) and 2 (c) that the disturbance in the video increases with the number of video frames. The results obtained by applying the algorithm, shown in Fig. 2 (d), 2 (e) and 2 (f), demonstrate the decrease in the rotational and translational disturbances.

Fig. 2: (a) input video frame 10 (b) input video frame 40 (c) input video frame 65 (d) stabilized video frame 10 (e) stabilized video frame 40 (f) stabilized video frame 65

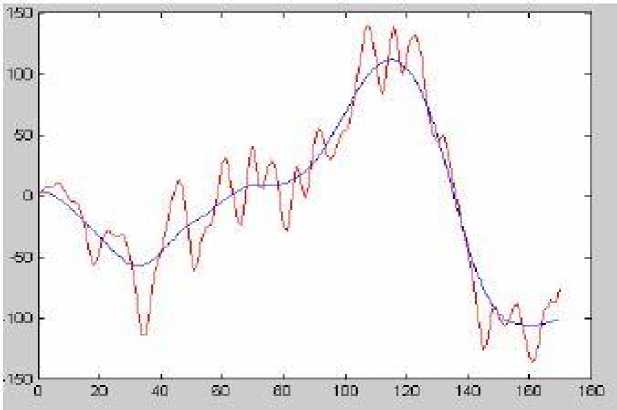

Fig. 3 illustrates the efficiency of the algorithm in smoothing the parameters: the parameters calculated from the motion model are shown in red and the compensated parameters in blue. The compensation result is for the robot moving on a smooth terrain, where the captured video is affected by jitter. As the terrain gets rougher, the curve becomes more deformed and its magnitude changes; however, the compensation parameters are smoothed, giving the user a better view of the video.

Fig. 3: Compensation of the motion parameters

The averages of the average RMSCD, the variability and the reliability were calculated as 8.7, 1.516 and 0.97 respectively, as shown in Table 1.

Table 1: Registration results

| Video No. | Average RMSCD | Variability | Reliability |
|---|---|---|---|
| 1 | 9.3 | 0.9 | 0.99 |
| 2 | 9.2 | 0.95 | 0.97 |
| 3 | 7.6 | 2.8 | 0.95 |
| Average | 8.7 | 1.516 | 0.97 |

Once the video is stabilized, it is transmitted to the controller in the control room. The controller tracks people by sending wireless signals to the robot from the control room; the robot is controlled manually using the transmitter connected to the computer. The signal 111100111111 was transmitted to the wireless robot to command it to move forward. The first 8 bits of the command are the address bits and the last 4 bits are the data bits. Only once the address bits were matched by the HT12D receiver was the command corresponding to the data bits executed.


VI. Conclusion

The mobile surveillance system has been shown to track people efficiently on different terrains with the help of the controller. The video stabilization algorithm has proved effective in reducing jitter in video recorded on random terrains; jitter is decreased to a great extent by adopting the LSE analytical solution. The smoothness of the video flow is ensured by the weighted least-error fitting algorithm, which provides better stabilization results. The system has multiple applications in social and criminal areas.

References

- Amarjot Singh, S.N. Omkar, “Digital Image Correlation using GPU Computing”, in International Journal of Computational Vision and Bio-Mechanics , Vol. 4, No. 1, June-December 2011.

- Amarjot Singh, S.N. Omkar, “Analysis of Wrist Extension using Digital Image Correlation”, in International Journal on Image and Video Processing (ICTACT), Vol. 2, Issue-3, pp: 343-351, February, 2012

- Amarjot Singh, Srikrishna Karanam, Devinder Kumar, “Constructive Learning for Human-Robot Interaction”, in IEEE Potentials Magazine . (Best Paper Award (3rd (Undergraduate)) in IEEE Region 10 Paper Contest), 2013 Issue.

- Srikrishna Karanam, Amarjot Singh, Devinder Kumar, “Karate with Constructive Learning”, in International Journal on Image and Video Processing (ICTACT), Vol. 2, Issue-3, pp: 382-386, February, 2012

- Sai Sruthi Kotamraju, Amarjot Singh, N Sumanth Kumar and Arundathy Reddy, “Perception of Emotions Using Constructive Learning Through Speech”, in International Journal of Computer and Electrical Engineering , Vol. 2, No. 4, pp (s): 321-325, June, 2012

- Sai Sruthi Kotamraju, Arundatty Reddy, Amarjot Singh, "Augmenting Emotion Recognition Accuracy using Constructive Learning", in International Journal of Image, Graphics and Signal Processing (IJIGSP) 2013.

- Cyn Dwith, Vivek Angoth, Amarjot Singh, “Wavelet Based Image Fusion Algorithm for Malignant Brain Tumor Detection ” in International Journal of Image, Graphics and Signal Processing (IJIGSP) Vol. 5, No. 1, pp(s): 25-31, January, 2013.

- Amarjot Singh, Srikrishna Karanam, Shivesh Bajpai, Akash Choubey, Thaluru Raviteja, “Malignant Brain Tumor Detection”, in International Journal of Computer Theory and Engineering, Vol. 4, No. 6, pp(s): 1002-1006, December, 2012.

- Vivek Angoth, Cyn Dwith, Amarjot Singh, "A Novel Wavelet Based Image Fusion for Brain Tumor Detection" in International Journal of Computer Vision & Signal Processing, 2013.

- Srikrishna Karanam, Amarjot Singh, Devinder Kumar, Akash Choubey, Ketan Bacchuwar, "Analysis and Improvement of SNR using Time Slicing" in 3rd International Conference on Digital Image Processing (ICDIP 2011) Proc. SPIE 8009, 800912 (2011); doi:10.1117/12.896180.

- Shivesh Bajpai, Amarjot Singh, K.V. Karthik, “An Experimental Comparison of Face Detection Algorithms”, in International Journal of Computer Theory and Engineering , (ICMV) pp(s): 196-200

- Akash Choubey, Amarjot Singh, Srikrishna Karanam, Devinder Kumar, Ketan Bacchuwar, “A Novel Signature Verification based Automatic Teller Machine”, in International Journal of Information and Electronics Engineering , Vol. 2, No. 4, pp (s): 570-574 , July 2012

- Devinder Kumar, Amarjot Singh, “Occluded Human Tracking and Identification using Image Annotation”, in International Journal of Image, Graphics and Signal Processing (IJIGSP), Vol. 4, No. 12, November 2012.

- Devinder Kumar , Amarjot Singh, S.N. Omkar "A Novel Visual Cryptographic Method for Color Images", in International Journal of Image, Graphics and Signal Processing (IJIGSP) , 2013.

- Amarjot Singh, Akash Choubey, Sreedhar Bandaru, Lalit Mohan, Mohit Dhiman, “The Ultimate Signature Identifier?” in 3rd International Conference on Electronics Computer Technology (ICECT 2011) (IEEE Xplore) Vol. 2, pp. 52-56, 2011

- Arundathy Reddy, Amarjot Singh, N Sumanth Kumar, Sai Sruthi Kotamraju, “The Decisive Emotion Identifier?”, in 3rd International Conference on Electronics Computer Technology (ICECT 2011) (IEEE Xplore)Vol. 2, pp. 28-32, 2011

- Amarjot Singh, Ketan Bacchuwar, Akash Choubey, Srikrishna Karanam, Devinder Kumar, “An OMR Based Automatic Music Player”, in 3rd International Conference on Computer Research and Development (ICCRD 2011) (IEEE Xplore), Vol. 1, pp. 174-178, 2011.

- Amarjot Singh, Devinder Kumar, "Integrating Occlusion and Illumination Modeling for Object Tracking using Image Annotation", in International Journal of Image, Graphics and Signal Processing (IJIGSP) , Vol. 4, Number. 10, pp: 40-47, September 2012.

- Amarjot Singh, Devinder Kumar, Ketan Bacchuwar, Akash Choubey, Srikrishna Karanam, “Annotation Supported Contour Based Object Tracking With Frame Based Error”, in International Journal of Machine Learning and Computing (IJMLC) , Vol. 2, No. 4, pp (s): 521-525, August 2012

- Amarjot Singh, Devinder Kumar, Phani Srikanth, Srikrishna Karanam, Niraj Acharya, “An Intelligent Multi-Gesture Spotting Robot to Assist Persons with Disabilities”, in International Journal of Computer Theory and Engineering , Vol. 4, No. 6, pp(s): 998-1001, December, 2012.

- Devinder Kumar, Amarjot Singh, "Annotation Supported Occluded Object Tracking", in International Journal on Image and Video Processing (ICTACT) , Vol. 3, Issue: 1, August, 2012.

- Vinay Jeengar, S.N. Omkar, Amarjot Singh, Manish Kumar Yadav, Saksham Keshri , "A Comparison of DCT and DWT applied to Image Compression", in International Journal of Image, Graphics and Signal Processing (IJIGSP) , Vol.4, No.11, September 2012.

- Amarjot Singh, Sumanth Kumar, "An Advanced Convexity Based Image Segmentation Algorithm", in International Journal of Image, Graphics and Signal Processing (IJIGSP), 2013

- Sridhar Bandaru, Amarjot Singh, S.N. Omkar, "An Advanced Video Stabilization Algorithm for UAV", in International Journal of Computer Vision & Signal Processing, 2013

- Sumedh Mannar, Amarjot Singh, “Reflection based Stealth Design Technique for Army Units”, in 17th international biennial conference of the Society for Philosophy and Technology (SPT 2011) in Texas, USA [Oral Presentation]

- Saksham Keshri, S.N. Omkar, Amarjot Singh, Vinay Jeengar, Manish Kumar Yadav, "An Advanced Mobile Surveillance System", in International Journal of Computer Vision & Signal Processing, 2013

- Amarjot Singh, Ketan Bacchuwar, Akshay Bhasin, "A Survey of OCR Applications " in International Journal of Machine Learning and Computing (IJMLC) Vol. 2, Issue-3, pp: 314-318, june, 2012

- Amarjot Singh, Ketan Bacchuwar and Akash Choubey, “A Novel GA Based OCR Enhancement and Segmentation Methodology for Marathi Script in Bimodal Framework”, in International Conference on Information Systems for Indian Languages (ICISIL-2011), Conf, Proc. of Springer, CCIS 139, pp. 271–277, 2011

- Ketan Bachuwar, Amarjot Singh, Gaurav Bansal, Saurav Tiwari, “An Experimental Evaluation of Preprocessing Parameters for GA Based OCR Segmentation” in 3rd International Conference on Computational Intelligence and Industrial Applications (PACIIA 2010) , proceedings, Vol. 2, pp. 417 -420, 2010

- Aditya Nagrare, Amarjot Singh, Phani Srikanth, Devinder Kumar, Cyn Dwith, "A Comparison of Biclustering with Clustering Algorithms" in 3rd Pacific-Asia Conference on Circuits, Communications and System (PACCS 2011) (IEEE Xplore) Vol. 1, pp. 1-4, 2011

- Nishchal K. Verma, Shruti Bajpai, Amarjot Singh, Aditya Nagrare, Sheela Meena, Yan Cui, “A Comparison of Biclustering Algorithms” in International conference on Systems in Medicine and Biology (ICSMB 2010) (IEEE Xplore) Vol. 1, pp. 95-104, 2010

- Capezio, F.; Sgorbissa, A. & Zaccaria, R. (2005). GPS Based Localization for a Surveillance UGV in Outdoor Areas, Proceedings of the Fifth International Workshop on Robot Motion and Control(RoMoCo’05), pp 157-162, ISBN: 83-7143-266-6, Dymaczewo Poland, June 2005

- Everett, H & Gage, D.W (1999), From Laboratory to Warehouse: Security Robots Meet the Real World, International Journal of Robots Research, Special Issue on Field and Service Robotics, Vol 18, No. 7, pp 760-768.

- Duckett, T; Cielniak G.; Andreasson, H; Jun L; Lilienthal, A; Biber, P. & Martinez, T (2004), Robotic Security Guard- Autonomous Surveillance and Remote Perception, Proceedings of IEEE International Workshop on Safety, Security, and Rescue Robotics, Bonn, Germany, May 2004

- Burgard, W. et al. 2000. "Collaborative Multi-Robot Exploration." Proc. IEEE International Conference on Robotics and Automation (ICRA), San Francisco CA.

- Yoerger, D.R., Bradley, A.M., Walden, B.B., Cormier, M.H., Ryan, W.B.F., “Fine-scale seafloor survey in rugged deep-ocean terrain with an autonomous robot”, Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2000, Vol. 2, pp. 1787-1792

- Telea, “An Image Inpainting Technique Based on the Fast Marching Method,” J. Graphics Tools, vol. 9, no. 1, pp. 23-34, 2004

- Morris Janowitz, “Sociological Theory and Social Control”, American Journal of Sociology, Vol. 81, No. 1 (Jul., 1975), pp. 82-108, The University of Chicago Press.

- Bakaoukas, Michael. "The conceptualisation of 'Crime' in Classical Greek Antiquity: From the ancient Greek 'crime' (krima) as an intellectual error to the christian 'crime' (crimen) as a moral sin." ERCES ( European and International research group on crime, Social Philosophy and Ethics). 2005.

- Chris Buehler, Michael Bosse, Leonard McMillan MIT Laboratory for Computer Science Cambridge, MA 02 139.

- Eddy Vermeulen, Barco nv “Real-time Video Stabilization For Moving Platforms” 21st Bristol UAV Systems Conference – April 2007

- Wei Zhu, Kejie Li, Xueshan Gao, Junyao Gao, Jize Li, Xiaolei Zhang, “A Real-time Scheme of Video Stabilization for Mine Tunnel Inspectional Robot*”, Proceedings of the 2007 IEEE International Conference on Robotics and Biomimetics December 15 -18, 2007, Sanya, China, Pg. 702-705

- Fischler, M., Bolles, R.: Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Comm. ACM, 24(6):381-395 (1981)