Advancing Blood Cancer Diagnostics: A Comprehensive Deep Learning Framework for Automated and Precise Classification

Authors: Md. Samrat Ali Abu Kawser, Md. Showrov Hossen

Journal: International Journal of Engineering and Manufacturing @ijem

Published in: Issue 2, Vol. 15, 2025.

Free access

Diagnosing blood cancer is a vital component of patient care and requires prompt, correct classification for effective treatment planning. Manual classification is subjective and depends on the examiner's level of skill, which highlights the need for automated systems. This study improves the identification and categorization of blood cancer cells using deep learning, a subset of artificial intelligence. Our technique uses three neural networks, a bespoke U-Net, MobileNet V2, and VGG-16, to address the problems of manual classification. The U-Net architecture is used for biomedical image segmentation, MobileNet V2 provides a lightweight network design, and VGG-16 is used for image classification. A curated dataset from Taleqani Hospital in Iran is used for training, validating, and testing the proposed models. The dataset is refined using denoising, augmentation, and linear normalization, which improves model adaptability. The results show that the MobileNet V2 model reaches 97.42% accuracy in identifying and categorizing blast cells from acute lymphoblastic leukemia, outperforming the related studies considered. This work adds to the evidence for the potential of artificial intelligence in medical diagnosis.

Blood Cancer, Acute Lymphoblastic Leukemia, Deep Learning, Medical Image Analysis, Automated Diagnosis, Neural Networks

Short URL: https://sciup.org/15019702

IDR: 15019702 | DOI: 10.5815/ijem.2025.02.04

Text of the research article: Advancing Blood Cancer Diagnostics: A Comprehensive Deep Learning Framework for Automated and Precise Classification

Identifying and categorizing blood cancer cells is a crucial step in the diagnostic procedure, with a significant impact on treatment strategies and patient outcomes. Manual classification is widely used, but its drawbacks have prompted the investigation of automated systems that can deliver precise and timely results. Categorizing blast cell images precisely is intricate, and the results vary with the proficiency of the medical practitioner, which underscores the need for more sophisticated technologies [1]. In response, this study explores the use of deep learning to automate blood cancer classification. The main issues at hand are the constraints and subjectivity of manual classification: differences in healthcare workers' training and areas of competence make accurate and consistent classification harder.

Manual techniques are not efficient enough for accurate and timely diagnosis. This matters because the accuracy and timeliness of diagnosis directly affect treatment choices and patient outcomes. Automatic classification of blood cancer improves the dependability and effectiveness of the diagnostic process, enabling prompt interventions and a better prognosis [2]. The challenge stems from the complexity of reliably identifying and categorizing images of blast cells. These images frequently contain minute details that a manual check could miss, and detecting such subtle patterns requires complex algorithms, which makes high accuracy and efficiency difficult to achieve.

Our strategy responds to these difficulties by using deep neural networks to classify blood cancer automatically. Deep learning is a promising way to increase the effectiveness and precision of blood cancer diagnostics because of its strong image processing capabilities. Our approach centers on careful data preparation and on deep learning models adapted to the requirements of detecting and categorizing blast cell images. It is distinguished by a full data preparation pipeline, deliberate architectural decisions, and structures adjusted to the use case. Our goal is to develop a more reliable, accurate, and efficient solution for categorizing acute lymphoblastic leukemia blast cells using deep neural networks, notably VGG-16, MobileNet V2, and a custom U-Net architecture. This approach departs from conventional techniques by providing a framework based on transfer learning and customized to the specifics of blood cancer blast cell images.

2. Literature Review

This review covers studies that highlight the potential of AI-driven medical image analysis. The work in [2] emphasizes the potential of AI-driven automated medical image analysis to improve modern healthcare through accurate and timely diagnosis. Because medical professionals differ in background and level of experience, traditional leukemia screening techniques are frequently subjective. The authors developed a pipeline for the automatic diagnosis and classification of leukemia across distinct stages using convolutional neural networks and a three-stage transfer learning approach. With the InceptionResNetV2 architecture, their experiments show a notable improvement in accuracy, with reductions in error rates of 1.65% and 6.05%, respectively. A T-test supports the robustness of their framework, yielding a positive difference of 4.71% compared with the standard transfer learning mechanism.

The author of [3] describes the characteristics of Chronic Lymphocytic Leukemia (CLL), noting that it is a B cell neoplasm and the most common type of leukemia in Western countries, accounting for 25% of cases. A thorough analysis of the benefits and drawbacks of modern machine learning (ML) algorithms for diagnosing and evaluating CLL patients can be found in [4]. That work attributes the success of artificial intelligence (AI) in healthcare to the development of high-performance computing and the availability of massive annotated datasets. It also describes the unique characteristics of medical imaging, together with clinical requirements and technical difficulties, and clarifies how current deep learning trends address these problems. An overview of machine learning, its current applications, and its requirements in both traditional and emergent sectors is given in [5], which includes an example that uses deep learning to understand the swarm behavior of molecular shuttles. The author of [6] draws attention to a rise in leukemia cases over the previous 22 years. In [7], the growing application of AI in ophthalmology is examined, with emphasis on the use of DL models in the diagnosis and treatment of ocular disorders, especially hereditary optic neuropathies such as Leber's hereditary optic neuropathy (LHON). That study examines the possible advantages of DL in providing prompt and accurate diagnosis of LHON.

The strategy proposed in [9] outperforms earlier efforts and produces state-of-the-art results. It achieves a Dice score of 83.33, supported by in-depth discussion and comparison with other published strategies. Another study explores the creation of artificial neural networks and offers a thorough examination of Deep Learning Architectures (DLA), emphasizing their possible uses across a range of medical imaging modalities. Its comprehensive literature review covers the use of DLA for medical image classification, detection, and segmentation.

In summary, this study addresses the pressing need for automated identification and categorization of blood cancer blast cells, using deep learning to overcome the constraints of manual techniques. Our technique is distinguished by its architectural decisions and validation procedures, which pave the way for blood cancer diagnosis that is both accurate and efficient. The dataset, preprocessing methods, and proposed models are described in detail in the next sections.

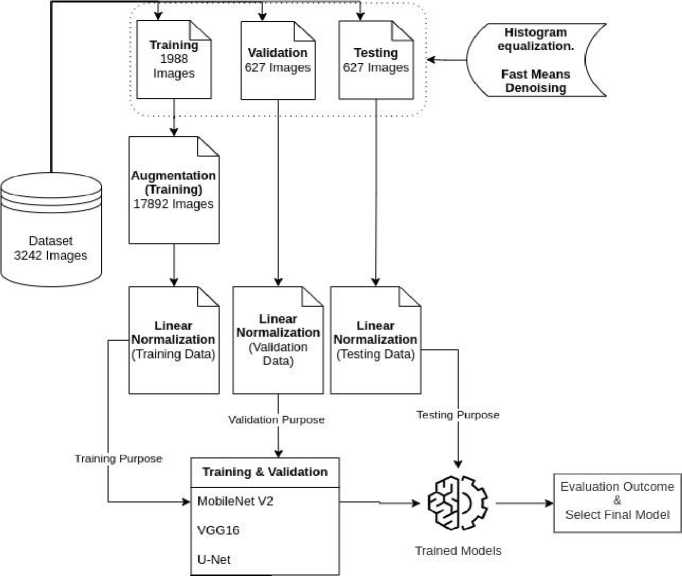

3. Methodology

This technique is based on a carefully selected dataset from Taleqani Hospital that consists of peripheral blood smear images segmented using color thresholding. The preprocessing applied to the dataset includes data augmentation, linear normalization, and denoising using Fast Means, Gaussian Blur, and Median Blur. The central idea of the methodology is transfer learning, which makes use of pre-trained models such as VGG-16 and MobileNet V2, together with a specially designed U-Net, all tailored to blast cell image recognition and classification. This strategy is intended to make the models flexible, resilient, and effective at identifying the complex characteristics relevant to blood cancer diagnosis. The workflow of the proposed method is shown in Fig. 1.

Fig. 1. Workflow of the proposed method
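The linear normalization and augmentation steps named above can be illustrated with a minimal, dependency-free sketch. It assumes a grayscale image stored as a list of rows, a target range of [0, 1], and random flips as the augmentation; the source does not state which range or which augmentations were used, so the function names and choices below are hypothetical.

```python
import random

def linear_normalize(image, new_min=0.0, new_max=1.0):
    """Linearly rescale pixel values so the darkest pixel maps to new_min
    and the brightest to new_max (assumed target range, not from the paper)."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[new_min for _ in row] for row in image]
    span = new_max - new_min
    return [[new_min + (p - lo) / (hi - lo) * span for p in row] for row in image]

def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def vertical_flip(image):
    """Reverse the order of the rows."""
    return [row[:] for row in reversed(image)]

def augment(image, rng):
    """Return a copy of the image with each flip applied with probability 0.5.
    Flips are an assumed, illustrative choice of augmentation."""
    out = [row[:] for row in image]
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    if rng.random() < 0.5:
        out = vertical_flip(out)
    return out

image = [[0, 64], [128, 255]]
normalized = linear_normalize(image)
flipped = horizontal_flip(image)
augmented = augment(image, random.Random(0))
```

In practice an image library would do this on whole arrays; the sketch only makes the arithmetic of the rescaling explicit.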


3.1. Dataset Description

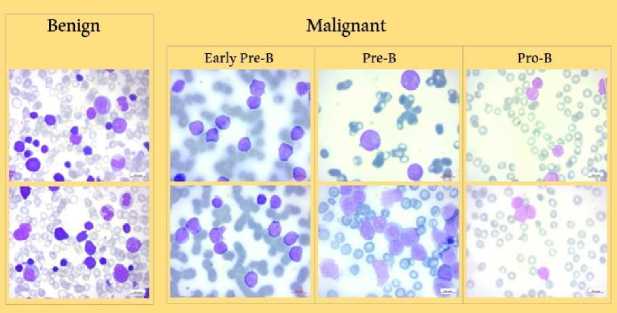

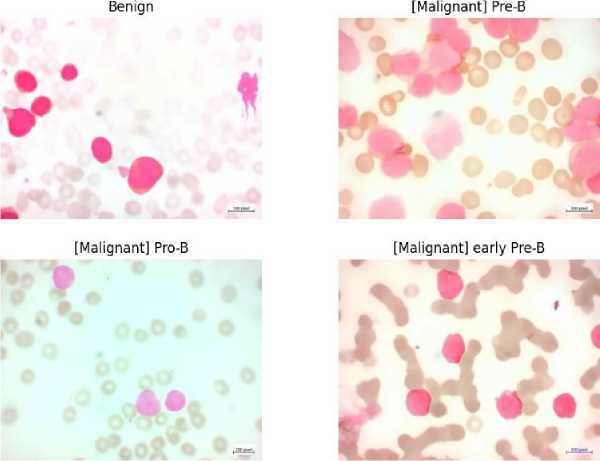

The images used in this study are taken from the Acute Lymphoblastic Leukemia (ALL) image collection [11, 12], which was prepared at the Bone Marrow Laboratory of Taleqani Hospital in Tehran, Iran. The dataset consists of 3242 peripheral blood smear images from 89 patients suspected of having ALL. Skilled laboratory staff prepared and stained the blood samples. The dataset includes 64 patients with a confirmed diagnosis of an ALL subtype (Early Pre-B, Pre-B, or Pro-B ALL) and 25 patients with a benign diagnosis (hematogone).

The images were captured at 100x magnification with a Zeiss camera attached to a microscope and saved in JPG format. The cell types and subtypes were definitively determined by a specialist using flow cytometry. To facilitate further analysis, the dataset was segmented using color thresholding in the HSV color space, and the dataset curators made the segmented images available. Fig. 2 shows a sample of these images: Early Pre-B ALL cells, Pro-B ALL cells, benign (hematogone) cells, and Pre-B ALL cells, in that order.

Fig. 2. Dataset Samples
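Color thresholding in HSV space, as used to segment the dataset, can be sketched as follows. This is an illustration only: the hue, saturation, and value bounds and the example pixel colors are hypothetical, since the source does not report the thresholds the curators used. The sketch relies on `colorsys.rgb_to_hsv` from the Python standard library, which works on channel values in [0, 1].

```python
import colorsys

def hsv_mask(image, h_range, s_min, v_min):
    """Build a binary mask (1 = keep) for pixels whose hue lies in h_range
    and whose saturation and value are at least s_min and v_min.
    image is a list of rows of (R, G, B) tuples with channels in 0..255."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            keep = h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min
            mask_row.append(1 if keep else 0)
        mask.append(mask_row)
    return mask

def apply_mask(image, mask, background=(0, 0, 0)):
    """Replace every pixel outside the mask with the background color."""
    return [[px if m else background for px, m in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]

# Hypothetical colors: a purple-stained cell pixel and a pale smear background.
cell = (120, 40, 160)
smear = (240, 220, 225)
image = [[cell, smear],
         [smear, cell]]

# Illustrative thresholds only (hue in [0, 1]); not taken from the paper.
mask = hsv_mask(image, h_range=(0.6, 0.9), s_min=0.3, v_min=0.2)
segmented = apply_mask(image, mask)
```

Thresholding on hue and saturation rather than on raw RGB makes the selection less sensitive to brightness differences between slides, which is a common reason for working in HSV.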

This ALL dataset was the basis for training, validating, and testing the deep learning models described in this work. It has a multiclass structure with three blast cell subtypes (Early Pre-B, Pre-B, and Pro-B) and a benign class. To provide a reliable evaluation, the dataset was divided into approximately 60% for training, 20% for validation, and 20% for testing. Table 1 shows the sample distribution.

Table 1. Distribution in the ALL dataset

| Dataset | Benign | Early Pre-B | Pre-B | Pro-B | Total |
|---|---|---|---|---|---|
| Original | 512 | 979 | 955 | 796 | 3242 |
| Training | 318 | 599 | 583 | 488 | 1988 |
| Validation | 97 | 190 | 186 | 154 | 627 |
| Testing | 97 | 190 | 186 | 154 | 627 |

After this partitioning, several preprocessing methods were applied to the dataset. The models were then trained using the training and validation sets, and their performance was assessed on the held-out test set. Keeping the training and testing stages separate is essential for a sound evaluation of the proposed deep learning models.
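A class-wise (stratified) 60/20/20 split of the kind described above can be sketched as below. This is a minimal illustration under stated assumptions: the source does not say whether the split was stratified or how it was randomized, the `stratified_split` helper and its seed are hypothetical, and the resulting subset sizes will not reproduce the exact counts in Table 1, which correspond to only approximately 60/20/20.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.6, 0.2, 0.2), seed=42):
    """Split sample indices into train/validation/test lists while keeping
    each class's share roughly equal across the three subsets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_val = round(len(indices) * fractions[1])
        n_test = round(len(indices) * fractions[2])
        n_train = len(indices) - n_val - n_test  # remainder goes to training
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test

# Class sizes from the "Original" row of Table 1.
class_counts = {"Benign": 512, "Early Pre-B": 979, "Pre-B": 955, "Pro-B": 796}
labels = [name for name, n in class_counts.items() for _ in range(n)]

train_idx, val_idx, test_idx = stratified_split(labels)
```

Splitting per class keeps the smallest class (benign) represented in all three subsets, which matters when class sizes differ as much as they do here.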


3.2. Data Preparation


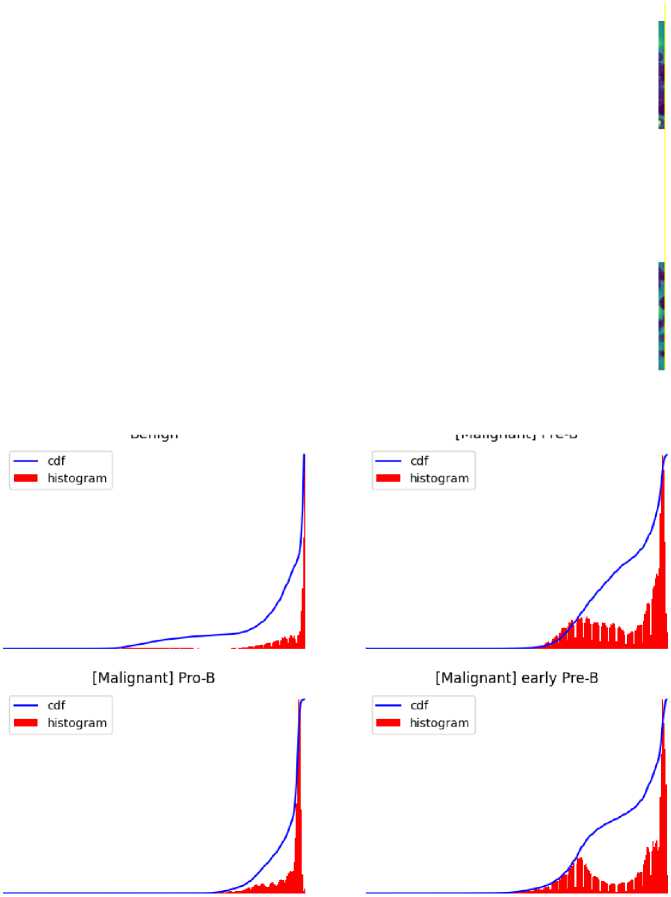

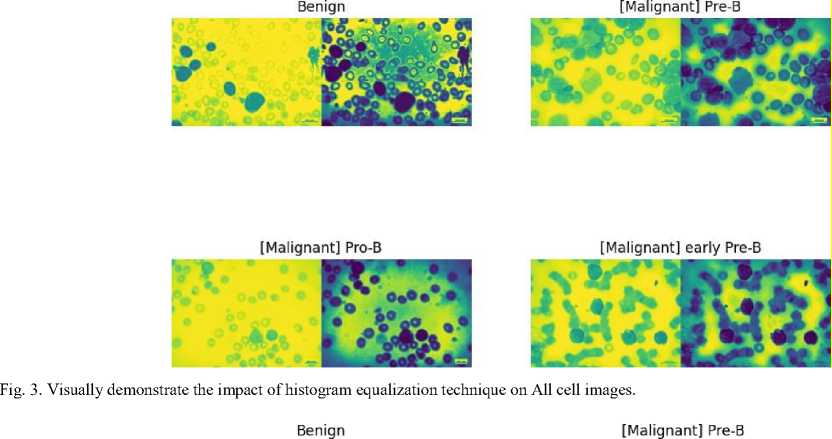

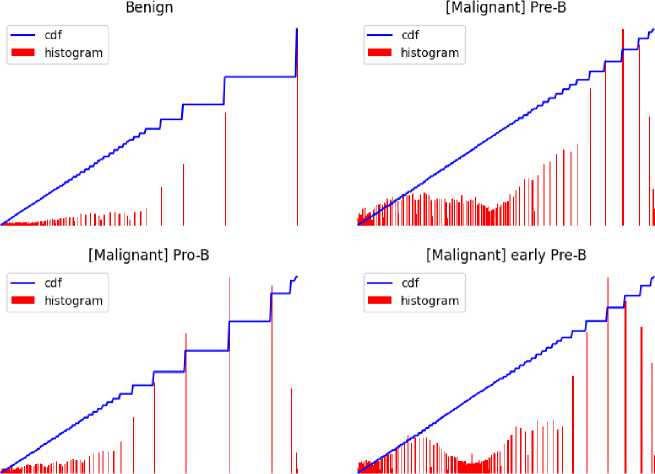

3.2.1 Histogram Equalization


Histogram equalization is an image processing method that enhances the visual quality of medical images to support precise disease detection. As a preprocessing step for blood cancer images, notably Acute Lymphoblastic Leukemia (ALL), it improves overall contrast by redistributing pixel intensity values. This highlights minute details in cell architecture and helps in interpreting important features of blood cancer cell images. It also reduces intensity variations between images, so that image features are represented consistently. In particular, it draws attention to relevant structures such as blast cells, which helps distinguish between benign and malignant cell types.

Fig. 4. Impact of the histogram equalization technique on ALL cell images.

Fig. 5. Impact of the histogram equalization technique on ALL cell images.
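The redistribution of pixel intensities performed by histogram equalization can be sketched with the standard cumulative-distribution mapping. The example assumes an 8-bit grayscale image stored as a list of rows; how the authors handled the color channels is not stated in the source, so this is an illustration of the general technique rather than their implementation.

```python
def equalize_histogram(image, levels=256):
    """Equalize a grayscale image using the cumulative distribution of its
    intensities, so the occupied intensity range is stretched to 0..levels-1."""
    flat = [p for row in image for p in row]

    # Histogram of intensity values.
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution function (running pixel count).
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:  # single-intensity image: nothing to spread out
        return [row[:] for row in image]

    # Lookup table mapping each old intensity to its equalized value.
    lut = [max(0, round((c - cdf_min) / (total - cdf_min) * (levels - 1)))
           for c in cdf]
    return [[lut[p] for p in row] for row in image]

# A low-contrast patch: all values sit within a narrow band.
low_contrast = [[100, 101],
                [102, 103]]
equalized = equalize_histogram(low_contrast)
# equalized == [[0, 85], [170, 255]]
```

The four nearly identical input intensities are spread across the full 0 to 255 range, which is the contrast gain the section describes.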


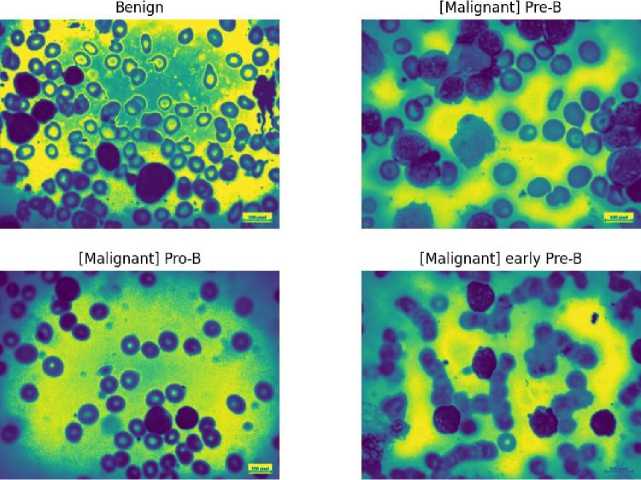

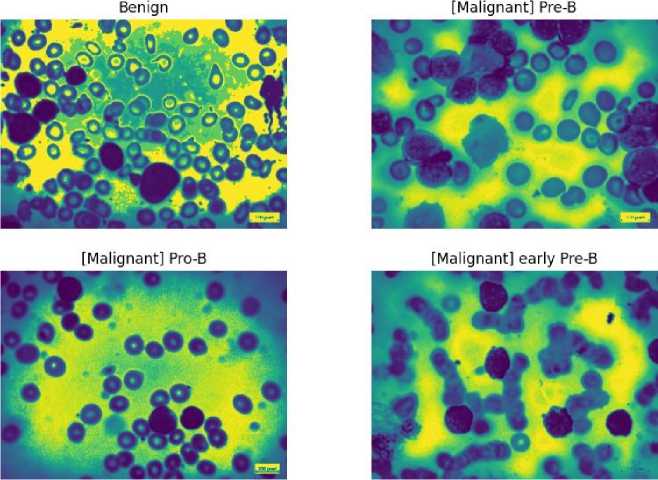

3.2.2 Denoising

Several error sources affect the quality of microscopic images, noise in particular. Because different types of noise affect image processing differently, it is not necessary to remove them all [13]. To improve image quality, we compared three denoising methods, Fast Means, Gaussian Blur, and Median Blur, applying each to the images in turn. After evaluation, we selected Fast Means because it performed better than the other two, as shown by the histograms of the denoised images.

Fast Means is based on averaging pixel values over a local neighbourhood. It computes mean values quickly, lowering noise while keeping important information in the image. Gaussian Blur uses weighted averaging that gives the most weight to the central pixels. Smoothing is achieved by convolution with a Gaussian kernel, which reduces noise and produces a visually smoother image.

Median Blur replaces each pixel value with the median of the values in its immediate neighbourhood. Because this non-linear filter removes outliers, it produces a denoised image in which edges are retained.
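The three filters described above can be compared on a small example. The sketch below is a simplified illustration, not the authors' implementation: it models Fast Means as a plain 3x3 local mean (following the description in the text), uses a fixed 3x3 binomial kernel as the Gaussian approximation, and replicates border pixels. The kernel sizes and helper names are assumptions.

```python
from statistics import median

# 3x3 binomial approximation of a Gaussian kernel, row-major; weights sum to 16.
GAUSSIAN_3X3 = [1, 2, 1,
                2, 4, 2,
                1, 2, 1]

def neighbourhood(image, r, c):
    """Return the 3x3 neighbourhood around (r, c) in row-major order,
    replicating border pixels where the window leaves the image."""
    h, w = len(image), len(image[0])
    return [image[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)]

def filter_image(image, reducer):
    """Apply reducer to every pixel's 3x3 neighbourhood."""
    return [[reducer(neighbourhood(image, r, c)) for c in range(len(image[0]))]
            for r in range(len(image))]

def mean_filter(image):
    """Unweighted local average."""
    return filter_image(image, lambda v: sum(v) / len(v))

def gaussian_filter(image):
    """Weighted average that emphasizes the central pixel."""
    return filter_image(
        image, lambda v: sum(w * x for w, x in zip(GAUSSIAN_3X3, v)) / 16)

def median_filter(image):
    """Replace each pixel with the median of its neighbourhood."""
    return filter_image(image, median)

# A flat patch with a single impulse-noise pixel in the center.
noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]

center_mean = mean_filter(noisy)[1][1]          # (8 * 10 + 255) / 9
center_gaussian = gaussian_filter(noisy)[1][1]  # (12 * 10 + 4 * 255) / 16 = 71.25
center_median = median_filter(noisy)[1][1]      # 10, the outlier is discarded
```

On this impulse-noise example the median removes the outlier completely, while both averaging filters only dilute it. Which filter works best on real smear images depends on the noise present, so this toy case does not contradict the evaluation reported above.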

Fig. 6. Denoised images using Fast Means

Panels: [Malignant] Pre-B, Benign, [Malignant] Pro-B, [Malignant] Early Pre-B

Fig. 7. Denoised Image using Gaussian Blur

Fig. 8. Denoised Images using Median Blur

4. Results and Discussion

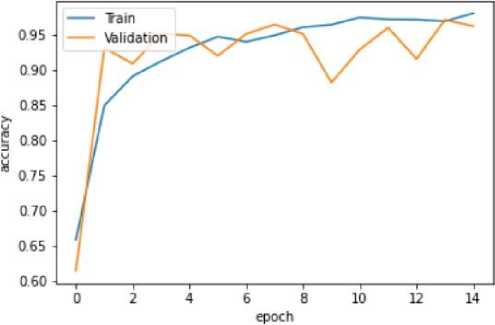

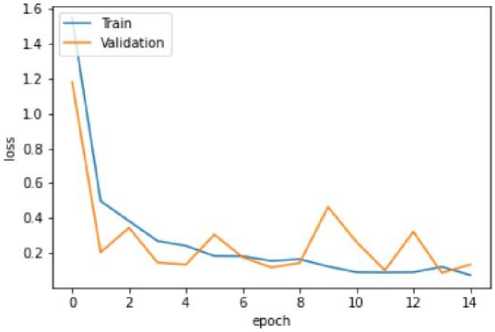

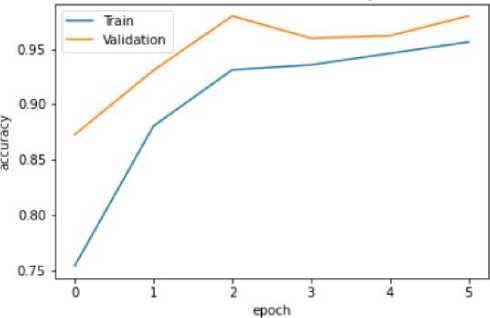

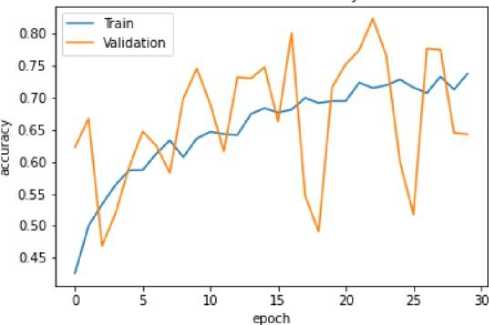

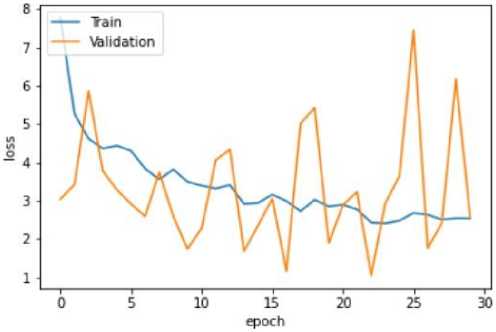

The proposed methodology is evaluated using training, validation, and testing metrics for three models: VGG-16, MobileNet V2, and U-Net. The training and validation accuracy graphs (Figs. 9, 11, 13) illustrate the models' learning processes: the U-Net model shows relatively lower accuracy during training, while VGG-16 and MobileNet V2 reach high accuracy. The loss graphs (Figs. 10, 12, 14) likewise show how well each model reduces its error during learning.

Fig. 9. Training and Validation Accuracy Graph of the VGG16 model

Fig. 10. Training and Validation Loss Graph of the VGG16 model

Fig. 11. Training and Validation Accuracy Graph of the Mobile Net V2 model

Fig. 12. Training and Validation Loss Graph of the Mobile Net V2 model

Fig. 13. Training and Validation Accuracy Graph of the U-Net model

Fig. 14. Training and Validation Loss Graph of the U-Net model

In the quantitative results (Table 2), the VGG-16 model reaches 96.13% accuracy on the testing set with correspondingly low loss values. The MobileNet V2 model performs best, with 97.42% testing accuracy, demonstrating its effectiveness in classifying blood cancer images. The U-Net model shows lower accuracy, which is still reasonable given that its architecture is designed for segmentation rather than classification.

Table 2. Training, Validation, and Testing Result of the Proposed Methods

| Model | Training Accuracy | Training Loss | Validation Accuracy | Validation Loss | Testing Accuracy | Testing Loss |
|---|---|---|---|---|---|---|
| VGG-16 | 94.88% | 0.15 | 96.43% | 0.11 | 96.13% | 0.09 |
| MobileNet V2 | 93.12% | 0.77 | 97.99% | 0.1792 | 97.42% | 0.23 |
| U-Net | 71.51% | 2.43 | 82.37% | 1.05 | 85.26% | 0.84 |
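The accuracy and loss columns reported for each model can be related to per-sample predictions with a short sketch. It assumes the loss is categorical cross-entropy over four classes, which is a common choice for multiclass classification but is not stated in the source; the class indices and probabilities below are made-up illustrative values, not results from the study.

```python
import math

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def categorical_cross_entropy(y_true, probs, eps=1e-12):
    """Mean negative log-probability assigned to the true class.
    The loss type is an assumption; the paper does not name it."""
    total = 0.0
    for t, p in zip(y_true, probs):
        total += -math.log(max(p[t], eps))  # eps guards against log(0)
    return total / len(y_true)

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)

# Hypothetical class indices: 0 = Benign, 1 = Early Pre-B, 2 = Pre-B, 3 = Pro-B.
y_true = [0, 1, 2, 3]
probs = [
    [0.70, 0.10, 0.10, 0.10],
    [0.10, 0.60, 0.20, 0.10],
    [0.20, 0.50, 0.20, 0.10],  # misclassified: true class 2, predicted 1
    [0.05, 0.05, 0.10, 0.80],
]

y_pred = [argmax(p) for p in probs]
acc = accuracy(y_true, y_pred)
loss = categorical_cross_entropy(y_true, probs)
```

Accuracy counts only whether the top prediction is right, while the loss also reflects how confident the model was, so a model can have higher accuracy and higher loss at the same time.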

A comparative analysis with related work (Table 3) demonstrates the competitiveness of the proposed approach. The MobileNet V2 model achieves the best accuracy, 97.42% on the testing set, exceeding the accuracies reported in the related studies, which used traditional or alternative deep learning approaches. The dataset, curated at Taleqani Hospital in Iran, was preprocessed with denoising, augmentation, and linear normalization to boost model performance. This comparison underscores the suitability of MobileNet V2 for the automated categorization of acute lymphoblastic leukemia and points toward more accurate and accessible cancer detection.

Table 3. Comparative Study with related works

| Title and Year | Best Algorithm | Accuracy | Loss |
|---|---|---|---|
| Automated blast cell detection for Acute Lymphoblastic Leukemia diagnosis (2021) [21] | YOLOv4 | 96.00% | 4.00 |
| Feature Extraction of White Blood Cells Using CMYK-Moment localization and Deep Learning in Acute Myeloid Leukemia Blood Smear Microscopic Image (2022) [22] | SVM | 96.41% | 3.59 |
| Executing Spark BigDl for Leukemia Detection from Microscopic Image using Transfer Learning (2021) [23] | GoogleNet | 96.06% | 3.99 |
| Hybrid Inception v3 XGBoost Model for Acute lymphoblastic Leukemia Classification (2021) [24] | MobileNetV2 | 95.80% | 4.2 |
| Approach of this Study | MobileNet V2 | 97.42% | 0.23 |

5. Conclusion

This work offers a new method for automatically classifying blood cancer cells using three deep learning models: VGG-16, MobileNet V2, and U-Net. The results demonstrate the efficacy of the MobileNet V2 model, which achieved 97.42% accuracy in classifying blood cancer cell images. Combining augmentation and denoising improves the robustness of the dataset and the generalization of the models. The study has limitations, such as the segmentation focus of the U-Net model, which leads to comparatively lower accuracy. Future work may focus on adapting segmentation-oriented architectures to achieve better classification outcomes. In addition, because the study depends on a single dataset, further research is needed on how well the models perform on other datasets, which would help address potential biases. Despite these limitations, the study contributes to automated diagnostics and medical image processing and demonstrates the potential of deep learning to improve vital diagnostic procedures.