An Efficient Gait Recognition Approach for Human Identification Using Energy Blocks

Authors: Manjunatha Guru V G, Kamalesh V N, Dinesh R

Journal: International Journal of Image, Graphics and Signal Processing (IJIGSP)

Issue: No. 7, Vol. 9, 2017.

Open access

Human gait recognition is an emerging research topic in biometrics. It has recently gained wider interest from the machine vision research community because of its many merits. In this paper, a robust energy-blocks-based approach is proposed. For each silhouette sequence, a gait energy image (GEI) is generated. It is then split into three blocks, namely lower legs, upper-half and head. The Radon transform is applied to the three energy blocks separately, and the standard deviation is used to capture the variation along the radial axis for each angle. Finally, a support vector machine (SVM) classifier is used for classification. The more prominent gait covariates, such as multiple views, backpack, carrying, a small number of frames, clothing and different walking speeds, are addressed in this work by choosing sequential, even, odd and multiples-of-three frame orderings for each sequence. Extensive experiments are conducted on four considerably large, publicly available standard datasets, and promising results are obtained.

Energy blocks, Fusion, Human gait, Part-based, Radon, Support vector machine

Short URL: https://sciup.org/15014204

IDR: 15014204

Full text of the article: An Efficient Gait Recognition Approach for Human Identification Using Energy Blocks

Published Online July 2017 in MECS DOI: 10.5815/ijigsp.2017.07.05

Gait is a non-invasive biometric source. It does not require the subject's cooperation, or even the awareness of the individual under observation, and it can be recognized at a distance. Because of this rich set of attractive properties, gait is becoming a popular biometric trait in security-sensitive environments such as banks, airports, government secretariats and public transport stations.

Gait can be a good biometric trait when other traditional biometric sources such as face, fingerprint, speech, signature, hand geometry and iris fail. This has led to the development of multimodal biometric systems for efficient human identification. Gait reflects both the psychological and physiological states of the person; hence, it is also referred to as a behavioral biometric source. Even though bipedal locomotion is similar across humans, gait differs greatly in certain details such as the relative timing of head, hand and leg movements, stride length, step width, cycle time and motion-based features. [5][13][17][34][36]

Gait patterns are often influenced by external challenges such as random view, backpack, clothing, carried objects and different walking speeds, i.e. normal, slow and fast. Gait variation is also influenced by the psychological state of the person, surface conditions, elapsed time and so on. Therefore, gait researchers have to take all these challenges into account to make gait recognition systems robust to practical conditions. Driven by demanding security requirements in a variety of situations, many researchers are working on vision-based systems that use video cameras to analyze human gait. Gait is useful not only in visual surveillance applications; it also supports many other fields such as early detection of Parkinson's disease, human action recognition, gender recognition and optimal sport training. [13] [34]

Human gait recognition systems are broadly classified into three research fields: machine vision, wearable devices and floor sensors. Because of the merits of the machine vision approach, such as continuous authentication, cost effectiveness and simplicity, this paper focuses on a machine-vision-based human gait recognition system. We use video images of a person to construct a binarized silhouette, because binarized silhouettes are insensitive to the color and texture of the object. [5][13][34][36]

Many silhouette-based recognition methods have been built on the assumption of a fixed camera observing a mostly static scene. This enables silhouettes to be obtained through simple background modeling and subtraction methods. [17]

The merits of gait recognition technology have motivated many machine vision researchers to build a new generation of biometric-enabled security systems. Current developments also suggest that automated gait recognition systems might be ready for commercial use in the near future. As indicated in the literature, vision-based human gait recognition is an emerging research area in non-invasive biometrics, and current state-of-the-art methods have shown that gait can identify a person uniquely in restricted environments. The algorithmic details of more recent attempts at gait recognition are highlighted below.

Appearance-based approaches are affected to a great extent by gait covariate conditions. This problem can be mitigated by partitioning the human silhouette into a number of parts. Some previous attempts at part-based gait classification considered a larger number of partitions, which led to redundant parts and required extra care and computational cost to reduce them. The paragraph below highlights some part-based approaches.

Altab Hossain et al [18] divide the human body into eight sections, including four overlapping sections. BEN Xianye et al [22] partitioned the human body into several equal size parts of smaller size. Rokanujjaman et al [26] segment the human body into five parts and used frequency domain gait entropy features for the classification.

Lee et al [15] have proposed a recursive principal component analysis based algorithm to eliminate the effect of backpack. Further, they have conducted experiments on CASIA-C dataset by incorporating simple nearest neighbor classifier and reported 82.68% of recognition rate in their work.

Ali et al [23] have conducted two kinds of experiments on GEI, i.e. gait energy image. The first is PCA (i.e. principal component analysis) without Radon and the second is PCA with Radon. Further, a simple matching criterion based on a specified threshold value was employed, and recognition rates of 94% and 96%, respectively, were reported for the two experiments.

Hofmann et al [24] have proposed a gradient histogram energy image based gait recognition algorithm. Further, a simple nearest neighbor classifier was used for the classification procedure. They have reported 75.36% of recognition rate on side view sequences of HumanID gait challenge dataset.

Theekhanont et al [25] have proposed a trace transformation based gait recognition algorithm. Further, template matching based classifier was employed on small CASIA-A dataset and reported 91.25% recognition rate in their work.

Rohit Katiyar and Vinay Kumar Pathak [27] have proposed an energy deviation image as a gait representation method. Further, fuzzy principal component analysis was incorporated to reduce the dimension and a simple nearest neighbor classifier was employed for the classification procedures. They have reported 90.01% of recognition rate for the first 45 subjects from CASIA-B dataset.

Yumi et al [29] have applied affine moment invariants on multiple blocks of GEI. They have reported 97.7% and 94% of recognition rates, respectively for CASIA B and CASIA C datasets.

LI et al [28] have proposed a structural gait energy image based algorithm. Further, a combination of PCA and LDA was incorporated to achieve the best class separability. A simple NN classifier was employed on side view sequences of CASIA-B dataset and reported 89.29% of recognition rate.

Mohan Kumar et al [30] have proposed a symbolic approach based gait recognition algorithm. A simple symbolic approach based similarity matching was employed in their work. They have reported 88.99% and 92.79% of recognition rates, respectively, for CASIA -B and UOM dataset. They have considered only side view sequences in their work.

Suvarna Shirke et al [32] have extracted the simple features for each silhouette. Further, NN classifier was employed on CASIA-A dataset and reported 90% of recognition rate. They have addressed only side view sequences in their experiments.

Deepak et al [35] have proposed LDA (latent Dirichlet allocation) based algorithm which transforms the gait sequences into word representation. They have reported satisfactory results on three data sets.

The rest of the paper is organized as follows. Section II presents the algorithmic procedure of the proposed methodology. Sections III to IX discuss the experimental results and comparative analysis. Lastly, Section X concludes the discussion.


II. Proposed Methodology

The proposed methodology consists of three main phases, i.e. gait representation, feature extraction and classification. For the implementation, the publicly available datasets [4] [7] [9] [10] are used. These datasets provide the gait sequences in silhouette format, in which the background is black and the human region is white.

A. Gait Representation

For each silhouette image, morphological operators are used to remove spurious pixels from the background area. Then, a bounding rectangle is used to extract the largest silhouette region, so that all residual noise is ignored. The same operations are applied to all silhouettes in a sequence, and the silhouettes are aligned to the same centre in order to get a better gait representation.

A study of gait energy image (GEI) based approaches shows that GEI [6] captures both the motion and static characteristics of gait well. It also mitigates practical problems such as silhouette noise, distortion, poor illumination, a small number of frames and different lighting conditions. Therefore, the proposed approach adopts GEI as the gait representation method. For each aligned sequence of an individual, the gait energy image is obtained using the formula in (1).
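As an illustration of formula (1), the following is a minimal sketch that reads µ as the pixel-wise mean over the aligned silhouettes of one sequence. The function name `gait_energy_image` and the toy frames are hypothetical, and the silhouettes are assumed to be already cleaned, cropped and aligned; this is not the authors' implementation.

```python
import numpy as np

def gait_energy_image(silhouettes):
    """Pixel-wise mean of N aligned binary silhouettes (background 0, body 1)."""
    stack = np.asarray(silhouettes, dtype=float)
    if stack.ndim != 3 or stack.shape[0] == 0:
        raise ValueError("expected a non-empty stack of shape (N, height, width)")
    # Averaging over the frame axis gives one grey-level image per sequence.
    return stack.mean(axis=0)

# Toy sequence of three 2x2 silhouettes (hypothetical data).
frames = [
    [[1, 0], [1, 1]],
    [[1, 0], [0, 1]],
    [[1, 1], [0, 1]],
]
gei = gait_energy_image(frames)
```

Pixels that are white in every frame keep the value 1 (static body regions), while pixels that are white only in some frames take intermediate values (moving regions).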


B. Energy Blocks Based Gait Representation

Larger blocks are more sensitive to clothing, carrying and backpack conditions, but their discrimination capability is high; the opposite holds for smaller blocks. Hence, the proposed approach uses two smaller blocks and one larger block to balance this tradeoff.

People have different anatomical proportions of body parts with regard to height (H). According to the anatomical literature, measured from the foot, the neck is at 0.870H, the waist at 0.535H and the knee at 0.285H. Based on these proportions, the proposed approach splits the energy image into three blocks (i.e. head, upper-half and lower legs), since human ambulation shows regular periodic motion, especially in the lower legs, hands and head, which reflects the individual's unique movement pattern. This form of segmentation helps to address the lateral and oblique view conditions, since these viewing conditions show more dynamic characteristics in the three body components mentioned above. It also helps to address frontal and back view conditions, because these views show considerably more dynamic characteristics in the upper portion of the human body than in the lower portion. Fig. 1 depicts the proposed energy-blocks-based representation.

The proposed energy-blocks-based representation captures the local dynamic characteristics of the GEI. Hence, it can address the speed variation condition, since the movement of the legs, head and hands may be considerably smaller when a person walks in a relaxed mood and considerably larger when the person is busy, hurried or stressed.

As identified in the above paragraphs, each of the three cropped body components makes its own contribution to gait recognition. Even though the bipedal locomotion of humans looks similar, gaits vary greatly in the apparent movements of these three body components. Thus, a feature-level fusion of the head, upper-half and lower legs is proposed in this work in order to achieve robust gait recognition.
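The split into three energy blocks can be sketched as follows. The proportions 0.870H, 0.535H and 0.285H come from the text; everything else is an assumption made for illustration: the GEI is taken to be cropped so that its rows span the full body height, the head block runs from the top to the neck, the upper-half block from the neck to the waist, and the lower-legs block from the knee to the foot. The text does not state the exact block boundaries, so `split_energy_blocks` is a hypothetical helper rather than the authors' procedure.

```python
import numpy as np

# Heights above the foot as fractions of body height H (values from the text).
NECK, WAIST, KNEE = 0.870, 0.535, 0.285

def split_energy_blocks(gei):
    """Return (head, upper_half, lower_legs) row slices of a cropped GEI."""
    gei = np.asarray(gei, dtype=float)
    height = gei.shape[0]
    # Image rows count downwards from the head, so convert "above the foot".
    neck_row = int(round((1 - NECK) * height))
    waist_row = int(round((1 - WAIST) * height))
    knee_row = int(round((1 - KNEE) * height))
    head = gei[:neck_row]
    upper_half = gei[neck_row:waist_row]  # assumed boundaries: neck to waist
    lower_legs = gei[knee_row:]           # assumed boundaries: knee to foot
    return head, upper_half, lower_legs

head, upper_half, lower_legs = split_energy_blocks(np.zeros((200, 120)))
```

For a 200-row GEI this gives blocks of 26, 67 and 57 rows under the stated assumptions.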

$$\mathrm{GEI} = \mu(I_1, I_2, \ldots, I_N) \tag{1}$$

where N represents the total number of frames in a sequence, I_1, ..., I_N represent the silhouette images and µ denotes the mean.

Fig.1. (a) A sample GEI (b) Head (c) Upper-Half (d) Lower Legs


C. Block Based Radon Approach for Feature Extraction

The Radon transform maps the 2-D image in Cartesian coordinate system (x, y) into polar coordinate system (ρ, θ). The Radon transform on an image f (x, y) for a given set of angles can be considered as the projection of the image along the given angles. The result is a new image R (ρ, θ).

This can be written mathematically by defining

$$\rho = x\cos\theta + y\sin\theta \tag{2}$$

After which the Radon transform can be written as

$$R(\rho, \theta) = \iint f(x, y)\, \delta(\rho - x\cos\theta - y\sin\theta)\, dx\, dy \tag{3}$$

where δ(·) is the Dirac delta function.

Radon transform based features are invariant to translation and to other geometric transformations such as scaling. A more theoretical description of the Radon transform is found in [11]. In this paper, a block-based Radon approach is employed: the Radon transform is applied separately to the three energy blocks (i.e. parts), and the resulting features are fused (i.e. concatenated) for the classification procedure.

After the Radon transform, the standard deviation σ(R) is computed from the Radon-transformed vector R for a given angle. The set of angles is usually taken from θ ∈ [0°, 180°); 180° is not included since the result would be identical to that at 0°. However, using all radial lines oriented from 0° to 179° may mislead the classifier and also increases the dimension of the feature vector. Hence, the angles chosen here are 0°, 30°, 60°, 90°, 120° and 150°, since these angles cover the vertical, horizontal and diagonal directions.
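The per-angle feature can be sketched with a simple discrete Radon projection. This is a minimal NumPy approximation, not the authors' implementation: each pixel is assigned to the nearest integer value of ρ = x cos θ + y sin θ (coordinates measured from the block centre, with y pointing down the image rows), and the pixel intensities are summed per ρ bin. The names `radon_projection` and `radon_std_features` are hypothetical.

```python
import numpy as np

ANGLES_DEG = (0, 30, 60, 90, 120, 150)  # the six angles chosen in the text

def radon_projection(block, theta_deg):
    """Nearest-bin discrete projection of a 2-D block at one angle."""
    block = np.asarray(block, dtype=float)
    h, w = block.shape
    ys, xs = np.mgrid[0:h, 0:w]
    xc = xs - (w - 1) / 2.0          # x relative to the block centre
    yc = ys - (h - 1) / 2.0          # y relative to the block centre
    t = np.deg2rad(theta_deg)
    rho = xc * np.cos(t) + yc * np.sin(t)
    half = int(np.ceil(np.hypot(h, w) / 2.0))   # bound on |rho|
    bins = np.rint(rho).astype(int) + half      # shift to non-negative indices
    return np.bincount(bins.ravel(), weights=block.ravel(),
                       minlength=2 * half + 1)

def radon_std_features(block, angles=ANGLES_DEG):
    """One standard deviation per angle, i.e. six features per block."""
    return np.array([radon_projection(block, a).std() for a in angles])

features = radon_std_features(np.ones((8, 6)))
```

A library routine with interpolation (for example a dedicated Radon function from an image-processing package) would give smoother projections; the binning above is chosen only to keep the sketch self-contained.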


D. Feature Level Fusion of Three Body Components

As described in the above section, six features (one for each of the angles 0°, 30°, 60°, 90°, 120° and 150°) are selected for each cropped part. Then, the features of the three parts are fused at the feature level, which means that a total of 18 features (i.e. 3 cropped parts × 6 features) are used for the classification procedure.
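Feature-level fusion here amounts to concatenating the three six-element vectors. The sketch below assumes the order head, upper-half, lower legs, which the text does not specify; `fuse_features` is a hypothetical helper.

```python
import numpy as np

def fuse_features(head_feats, upper_feats, lower_feats):
    """Concatenate three 6-element part vectors into one 18-element vector."""
    parts = [np.asarray(p, dtype=float).ravel()
             for p in (head_feats, upper_feats, lower_feats)]
    if any(p.size != 6 for p in parts):
        raise ValueError("each body part must contribute exactly 6 features")
    return np.concatenate(parts)

# Placeholder feature values, used only to show the resulting layout.
fused = fuse_features(np.arange(6), np.arange(6, 12), np.arange(12, 18))
```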

Table 1 demonstrates that a feature-level fusion of the head, upper-half and lower leg parts contributes more to gait recognition than the whole energy image. It is also better than any individual body component and any combination of two. For this experiment, a total of 960 sequences from the CASIA A dataset (i.e. 240 sequences × 4 frame orders) are used with a leave-one-out procedure. Extensive experiments on the other datasets using the proposed fusion approach have also shown promising results. The detailed experimental results are given in Sections III to VIII.


E. Normalization

Standardization of the features improves the support vector machine classifier in terms of both speed and results, since it helps the classifier to find the support vectors in less time. To achieve this, the mean (µ) and standard deviation (σ) are computed from the feature vector. Then, the mean is subtracted from each feature value (X_i), and the result is divided by the standard deviation, as indicated in (4), where X represents the feature vector and X_i represents the i-th feature value for i = 1...18.

$$\frac{X_i - \mu}{\sigma} \tag{4}$$
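A minimal sketch of this standardization follows. As the text describes, the mean and standard deviation are taken over the feature vector itself; the use of the population standard deviation (NumPy's default) is an assumption, and `standardize` is a hypothetical name.

```python
import numpy as np

def standardize(features):
    """Subtract the vector mean and divide by the vector standard deviation."""
    x = np.asarray(features, dtype=float)
    mu = x.mean()
    sigma = x.std()  # population standard deviation (assumption)
    if sigma == 0:
        raise ValueError("standard deviation is zero; cannot standardize")
    return (x - mu) / sigma

# Short toy vector (the real feature vector has 18 entries).
z = standardize([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
```

For this toy vector the mean is 5 and the standard deviation is 2, so the first entry becomes -1.5 and the last becomes 2.0.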


F. Classifier

The proposed method uses a general and powerful classifier, the SVM. Support vector machines have already proven their value in many recognition systems, including gait recognition systems.

The experiments show that the proposed gait patterns are not linearly separable. Therefore, experiments are carried out with two widely used non-linear kernels, namely the polynomial and radial basis function (RBF) kernels, to verify each kernel's contribution for the proposed gait patterns. It is found that the polynomial kernel (order 3) with the sequential minimal optimization (SMO) procedure finds the hyperplane that best separates the data, and it yields more promising results than RBF. Extensive experiments show that the proposed features are robust and work well with the SVM classifier.
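To make the order-3 polynomial kernel concrete, the sketch below evaluates one common form, K(a, b) = (γ a·b + c)^3, on a pair of sample sets. Only the order 3 comes from the text; the scale `gamma` and offset `coef0` are assumed values, since the kernel parameters are not reported, and the SMO training step itself is not shown.

```python
import numpy as np

def polynomial_kernel(a, b, degree=3, gamma=1.0, coef0=1.0):
    """Gram matrix of (gamma * <a_i, b_j> + coef0) ** degree."""
    a = np.atleast_2d(np.asarray(a, dtype=float))
    b = np.atleast_2d(np.asarray(b, dtype=float))
    return (gamma * (a @ b.T) + coef0) ** degree

# Two toy 2-D samples (hypothetical data).
samples = [[1.0, 0.0], [0.0, 2.0]]
gram = polynomial_kernel(samples, samples)
```

The resulting Gram matrix is symmetric, as expected for a valid kernel evaluated on one set of samples.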


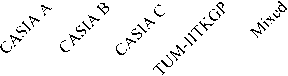

III. Datasets and Utilization Scheme

In this work, extensive experiments are carried out on four publicly available standard datasets, namely CASIA A [4], CASIA B [7], CASIA C [9] and TUM-IITKGP [10]. Among the four, CASIA A dataset is used to verify the effectiveness of the fusion of lower legs, upper-half and head. Also, it is used to address multi view, different walking speed and least number of frames conditions. CASIA B is utilized to address multi view, clothing and carrying conditions. CASIA C is used to address backpack and different walking speed conditions. Further, TUM-IITKGP is used to address view, backpack and hands in pocket condition. The extensive experimental results on these publicly available datasets are clearly depicted in Table 1, Fig. 2 and Fig. 3.

In a real-time scenario, one test sample has to be matched against a huge number of samples. Hence, a leave-one-out experimental procedure is employed for the experiments. Hold-out and k-fold validation procedures are also used to evaluate the performance of the proposed approach.


A. Effective Dataset Utilization Scheme

Evaluating the method on a large number of samples, subjects and speed variations is essential. Therefore, four different frame orderings (i.e. sequential, odd, even and multiples of three) are selected for each sequence in order to increase the number of samples [36]. These orderings also resemble the normal walk, normal fast and quick fast walking scenarios. Further, datasets from two different institutions are combined in order to increase the number of subjects: the TUM-IITKGP dataset (35 subjects) and the CASIA C dataset, which has more subjects (153) than the other Chinese Academy datasets, are combined to build a considerably larger dataset (188 subjects).
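The four frame orderings can be sketched as simple index selections. The sketch assumes that frames are numbered from 1 within a sequence, which the text does not state explicitly; `frame_orders` is a hypothetical helper.

```python
def frame_orders(frames):
    """Return the four frame subsets used to multiply the number of samples."""
    numbered = list(enumerate(frames, start=1))  # 1-based numbering (assumption)
    return {
        "sequential": [f for _, f in numbered],
        "odd": [f for i, f in numbered if i % 2 == 1],
        "even": [f for i, f in numbered if i % 2 == 0],
        "multiples_of_three": [f for i, f in numbered if i % 3 == 0],
    }

# Frame numbers stand in for the silhouette images of a 12-frame sequence.
orders = frame_orders(list(range(1, 13)))
```

Each ordering then yields its own GEI, so one recorded sequence contributes four samples.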

IV. Experimental Results on CASIA-A Dataset

CASIA A dataset consists of 20 subjects, and each subject walked in three views, namely lateral, oblique and frontal. Each view of a subject consists of four sequences. Hence, each subject has 12 sequences, giving 240 sequences in total. [3] [4]

For the experiments, each view of a subject is considered as a class. Hence, 60 classes, i.e. 20 subjects × 3 views, are considered for the experiments instead of the 20 subjects.


A. Experiment 1

Leaving one out type experimental procedure is employed in this experiment. Total 960 multi view sequences (i.e. 240 sequences × 4 frame orders) are used for this experimentation. In each turn, 959 sequences are used as a gallery and the rest is used as a probe.
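The leave-one-out protocol described above can be sketched as an index generator. Only the split logic is shown; feature extraction and classification are omitted, and `leave_one_out` is a hypothetical helper.

```python
def leave_one_out(n_samples):
    """Yield (gallery_indices, probe_index) with each sample as probe once."""
    for probe in range(n_samples):
        gallery = [i for i in range(n_samples) if i != probe]
        yield gallery, probe

n_sequences = 240 * 4  # 240 sequences x 4 frame orders
splits = list(leave_one_out(n_sequences))
```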


B. Experiment 2

This experimental setup uses a hold-out procedure. Each view of an individual is partitioned into two sets of two sequences each. A total of 480 sequences (i.e. 20 subjects × 3 views × 2 sequences × 4 frame orders) are used as the training set. The remaining sequences are used as the testing set (i.e. 20 subjects × 3 views × 2 sequences × 4 frame orders).


C. Experiment 3

In a real-time scenario, a sufficient number of frames may not be available, which means that less of the subject's gait information is recorded. Hence, a prominent practical condition (i.e. a small number of frames) is newly addressed in this work compared with earlier attempts in the gait literature. Extensive experiments are conducted with a small number of frames, i.e. 12 frames (approximately one gait cycle) and 6 frames (approximately half a gait cycle).

For the 12-frame experiment, a total of 720 sequences (i.e. 240 sequences × 3 cycles per sequence) are used. In each turn, 719 sequences are used as the training set and the remaining one is used as a test sample. This partitioning is repeated 720 times, so that each sequence of a person is considered as a test sample.

For the 6-frame experiment, a total of 960 sequences (i.e. 240 sequences × 4 half cycles per sequence) are used. In each turn, 959 sequences are used as the training set and the remaining one is used as a test sample. This partitioning is repeated 960 times, so that each sequence of a person is considered as a test sample.

The proposed approach achieved correct classification rates of 51.66% and 37.08% for the 12-frame and 6-frame experiments, respectively. The 12-frame experimental result is depicted in Fig. 3.

V. Experimental Results on CASIA-B Dataset

CASIA B dataset consists of 124 subjects, and each subject walked in 11 viewing angles from 0° to 180° at 18° intervals. The 11 views comprise frontal views (0° and 180°), oblique views (18°, 36°, 54°, 126°, 144° and 162°) and approximately lateral views (72°, 90° and 108°). Each subject is also recorded under three gait covariates, namely normal walk, clothing and carrying condition. Each view of an individual consists of 10 sequences (2 carrying bag sequences + 2 wearing coat sequences + 6 normal walking sequences). Hence, each view of the 124 subjects consists of 1,240 sequences (i.e. 124 subjects × 10 sequences), giving 13,640 sequences in total (1,240 sequences per view × 11 views). [7]

From general observations in the literature, gait information is found to be the same when the same subject walks in frontal and back views, whereas gait varies in shape and in static and dynamic characteristics when the same subject walks in lateral, oblique and frontal views. Therefore, each subject is treated as three classes with regard to the views (i.e. lateral, oblique and frontal). Hence, 372 classes (i.e. 124 subjects × 3 classes) are considered for the experiments below instead of 124 subjects, in order to verify the proposed method effectively.


A. Experiment 1

A leave-one-out experimental procedure is employed in this experiment. A total of 54,560 samples (i.e. 13,640 sequences × 4 frame orders) are used, including the sequences of all 11 viewing angles (i.e. 0°, 18°, 36°, 54°, 72°, 90°, 108°, 126°, 144°, 162° and 180°). In each turn, 54,559 sequences are used as a gallery and the remaining one is used as a test sample. This partitioning is repeated 54,560 times, so that each sequence of a person is considered as a test sample.


B. Experiment 2

This experimental setup uses a hold-out validation procedure. The normal walking sequences are used as the training set. The sequences with the carrying and clothing conditions are used as the test set in order to verify the robustness of the proposed approach with respect to the gait covariates. These experimental settings use 60% of the samples as a gallery set and 40% of the samples as a probe set.

A total of 66 sequences (i.e. 6 normal walk sequences × 11 views) are selected for each subject. Hence, a total of 32,736 sequences (i.e. 66 sequences × 124 subjects × 4 frame orders) are used as the training set, including all 11 views. Further, 44 sequences (i.e. (2 carrying bag sequences + 2 wearing coat sequences) × 11 views) are selected for each subject. Hence, a total of 21,824 sequences (i.e. 44 sequences × 124 subjects × 4 frame orders) are used as the test set.


C. Experiment 3

CASIA B is popular for evaluating cross-view gait recognition, in which the observation views of the probe and gallery are different. Hence, this experimental setup uses a k-fold cross validation procedure in order to evaluate the proposed approach with respect to the view condition. The whole dataset is partitioned into 11 subsets according to the viewing angles (i.e. 0°, 18°, 36°, 54°, 72°, 90°, 108°, 126°, 144°, 162° and 180°). Each subset consists of the sequences of one particular view. Each time, one of the k subsets is used as a probe and the other k-1 subsets are put together to form a gallery.

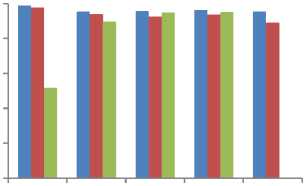

In each turn, total 49,600 sequences (i.e. 1240 sequences per view X 10 views X 4 frame orders) are used as the training set and the rest 4,960 sequences (i.e. 1240 sequences X 1 view X 4 frame orders) are used as the testing set. This form of the experimental setting covers 90% samples as a gallery and 10% samples as a probe. The cross view experimental results and average CCR are pictorially depicted in Fig. 2 and Fig. 3 respectively.
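The cross-view protocol and its sample counts can be checked with a short sketch. The view angles and counts come from the text; `leave_one_view_out` is a hypothetical helper that only enumerates the folds.

```python
VIEWS = list(range(0, 181, 18))   # 0, 18, ..., 180 degrees
SEQ_PER_VIEW = 124 * 10           # 124 subjects x 10 sequences per view
FRAME_ORDERS = 4

def leave_one_view_out(views):
    """Yield (gallery_views, probe_view) with each view as probe once."""
    for probe_view in views:
        gallery_views = [v for v in views if v != probe_view]
        yield gallery_views, probe_view

folds = list(leave_one_view_out(VIEWS))
gallery_size = SEQ_PER_VIEW * len(folds[0][0]) * FRAME_ORDERS
probe_size = SEQ_PER_VIEW * 1 * FRAME_ORDERS
```

With 11 views this gives 11 folds, each with 49,600 gallery sequences and 4,960 probe sequences, which matches the roughly 90%/10% split stated above.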

VI. Experimental Results on CASIA-C Dataset

CASIA C consists of 153 subjects, and each subject is recorded under two prominent practical conditions, namely backpack and different walking speeds. Each subject has 10 sequences, i.e. 2 backpack sequences + 4 normal walking sequences + 2 fast walking sequences + 2 slow walking sequences. Hence, there are 1,530 sequences in total. [9]


A. Experiment 1

Leaving one out type experimental procedure is employed in this experiment. Total 6,120 sequences (i.e. 1,530 sequences X 4 frame orders) are used in this experiment by including both backpack and different walking speed variations. In each turn, 6,119 sequences are used as a gallery set and the rest is used as a test sample. This partitioning is repeated for 6,120 different times, so that each sequence of a person is considered as a test sample.


B. Experiment 2

This experimental setup includes hold out type procedure. For this, normal walking sequences are used as the training set. The sequences with gait covariate factors (i.e. backpack, fast and slow walking conditions) are used as the testing samples in order to verify the robustness of the proposed approach with respect to the gait covariates. This form of hold out type experimental setting covers 40% samples as a gallery and 60% samples as a probe.

A total of 16 sequences (i.e. 4 normal walking sequences × 4 frame orders) are selected for each subject. Hence, a total of 2,448 sequences (i.e. 16 sequences × 153 subjects) are used as the training set. Further, 24 sequences (i.e. (2 backpack sequences + 2 slow walking sequences + 2 fast walking sequences) × 4 frame orders) are selected for each subject. Hence, a total of 3,672 sequences (i.e. 24 sequences × 153 subjects) are used as the testing set.


C. Experiment 3

A hold-out procedure is employed in this experiment. For each subject, the first five sequences are selected as the training samples and the remaining five sequences are used for testing. Hence, a total of 3,060 sequences (i.e. 153 subjects × 5 sequences × 4 frame orders) are used as a gallery, and the same number of sequences is used as a probe.

VII. Experimental Results on TUM-IITKGP Dataset

TUM-IITKGP dataset consists of lateral view gait sequences of 35 persons in six configurations. Three configurations from each person are used for the experiments below. For each subject, 12 sequences (i.e. 4 normal walking sequences + 4 backpack sequences + 4 hands in pocket sequences) are selected. Hence, there are 420 sequences in total, i.e. 35 subjects × 12 sequences. [10]


A. Experiment 1

Total 1,680 sequences (i.e. 35 people X 12 sequences X 4 frame orders) are used for the combined gait covariates experiment. In each turn, 1679 sequences are used as a gallery set and the rest is used as a test sample. This partitioning is repeated for 1,680 different times, so that each sequence of a person is considered as a test sample.


B. Experiment 2

This experimental setup includes the hold out type validation procedure. For this, normal walking sequences are used as the training set. The sequences with backpack and hands in pocket conditions are used as the testing set. The settings of this experiment cover 40% samples as a gallery set and 60% samples as a probe set.

Total 560 sequences (i.e. 35 subjects X 4 normal walking sequences X 4 frame orders) are used as the training set and 1,120 sequences (i.e. 35 subjects X 8 sequences with backpack and hands in pocket conditions X 4 frame orders) are used as the testing set.


C. Experiment 3

The proposed algorithm is tested by considering the three configurations, i.e. normal walk, hands in pocket and backpack. For each configuration, two sequences are selected as the training set and the rest two sequences are considered as the testing set.

Total 840 sequences (i.e. 35 subjects X 3 configurations per subject X 2 sequences per configurations X 4 frame orders) are used as a gallery. The same number of sequences is used as a probe, i.e. 35 subjects X 3 configurations X 2 sequence X 4 frame orders.

VIII. Experimental Results on Combined/Mixed Dataset

CASIA C (153 subjects) and TUM-IITKGP (35 subjects) datasets are combined to form a mixed dataset (188 subjects) in order to evaluate the proposed approach on a considerably large number of subjects. These datasets consist of lateral view sequences with some gait covariates, i.e. backpack, slow walking, fast walking and hands in pocket. CASIA C consists of 1,530 sequences and the TUM-IITKGP dataset consists of 420 sequences in total.


A. Experiment 1

A total of 7,800 sequences (i.e. (1,530 + 420) × 4 frame orders) are used for the combined gait covariates experiment. In each turn, 7,799 sequences are used as a gallery set and the remaining one is used as a test sample. This experimental setting is repeated 7,800 times, so that each sequence of a person is used as a test sample.


B. Experiment 2

For this experiment, normal walking sequences of each subject are selected as the training set and the sequences with gait covariates (i.e. backpack, slow walking, fast walking and hands in pocket) are selected as the testing. This form of the experimental setting covers 39% samples as a gallery and 61% samples as a test set.

Total 3,008 sequences are used as a gallery set, i.e. 188 subjects X 4 normal walking sequences per subject X 4 frame orders. The rest 4,792 sequences are used as a probe set, i.e. 153 subjects X 6 sequences per subject X 4 frame orders + 35 subjects X 8 sequences per subject X 4 frame orders.
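The gallery and probe sizes quoted for the mixed dataset can be verified with a few lines of arithmetic. All counts are taken from the text; the variable names are illustrative only.

```python
FRAME_ORDERS = 4
casia_c_subjects, tum_subjects = 153, 35

# Gallery: 4 normal walking sequences per subject, for all 188 subjects.
gallery = (casia_c_subjects + tum_subjects) * 4 * FRAME_ORDERS
# Probe: 6 covariate sequences per CASIA C subject, 8 per TUM-IITKGP subject.
probe = (casia_c_subjects * 6 + tum_subjects * 8) * FRAME_ORDERS
total = (1530 + 420) * FRAME_ORDERS

gallery_share = round(100 * gallery / total, 1)
probe_share = round(100 * probe / total, 1)
```

The shares come out at 38.6% and 61.4%, consistent with the rounded 39%/61% split stated above.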

Table 1. Experimental results on body parts and fusion

| Body Components | CCR |
| --- | --- |
| Whole GEI | 65% |
| Lower legs | 47.91% |
| Upper-half | 36.87% |
| Head | 27.91% |
| Lower legs + Upper-half | 81.25% |
| Lower legs + Head | 71.97% |
| Upper-half + Head | 59.27% |
| Fusion of Lower legs, Upper-half and Head | 98.95% |

Fig.2. Cross view experimental results on CASIA-B

Fig.3. Correct classification rates on CASIA (A, B and C), TUM-IITKGP and Mixed dataset (legend: Experiment 1, Experiment 2, Experiment 3)


IX. Comparative Analysis and Discussions

We made extensive comparisons with the current state of the art methods by utilizing the same pattern of experimental procedure and the same setting of the gallery and probe set. The prominent comparisons with the current state of the art algorithms are highlighted in the below paragraphs.

Some of the previous works [25] [28] [29] [30] [32] [33] have addressed only a few gait covariates with a single restricted viewing angle. Most of the attempts [23] [25] [27] were tested on a single dataset with a small number of samples and subjects. Some part-based approaches in the literature divide the human body into a large number of segments, and processing many parts may incur a high computational cost in both space and time. [18] [26] [29] [30]

Firstly, the proposed approach is compared with similar part-based approaches. LI et al [28] used only side view sequences from the CASIA B dataset. In their experiment, three normal walking sequences of each person were used as a gallery set and the remaining seven sequences as probes, i.e. three normal walking sequences, two carrying bag sequences and two wearing coat sequences. They reported a recognition rate of 89.29%. Using the same experimental settings, our proposed approach achieved a classification rate of 92.39%.

The part-based attempt [30] used only side view sequences from the CASIA B dataset. In their experiment, the first four normal walking sequences of each person were used as a gallery set and the remaining six sequences as probes, i.e. the last two normal walking sequences, two carrying bag sequences and two sequences with the coat. They reported a correct classification rate of 79.01%. Using the same experimental setup, our proposed approach achieved a correct classification rate (CCR) of 93.43%.

The multi-block based attempt [29] was tested on two benchmark datasets, i.e. CASIA B and CASIA C. For the CASIA B experiment, six lateral view sequences under the normal walking condition were used. The method was tested by 2-fold cross validation, i.e. 124 subjects × 3 sequences were used for training and the rest for testing. For the CASIA C experiment, four normal walk sequences of each subject were used. The method was tested by 4-fold cross validation, i.e. 153 subjects × 3 sequences were used for training and the rest for testing. They reported correct classification rates of 97.7% and 94% for the CASIA B and C datasets, respectively. Using the same experimental setup, our proposed approach achieved correct classification rates of 98.92% and 97.38% for the CASIA B and C datasets, respectively.

Lee et al. [15] evaluated their method on the CASIA C dataset. They used four normal walk sequences of each person as the gallery set and two backpack sequences as the probe set, and reported a CCR of 82.68%. Using the same experimental setup, our proposed approach achieved a CCR of 88.23%.

Deepak et al. [35] conducted experiments on three datasets, namely CASIA A, CASIA B and TUM-IITKGP. For the CASIA A dataset, they conducted separate experiments on three viewing angles. Their algorithm was tested by a 4-fold cross validation procedure, i.e. 20 subjects × 3 sequences were used for training and the rest for testing. They reported average recognition rates of 85%, 85% and 85% for the lateral, frontal and oblique views, respectively. Using the same experimental setting, we achieved average recognition rates of 100%, 98.75% and 98.75%, respectively, for the three views. For the CASIA B dataset, they used six normal walking sequences for each viewing angle. Their method was tested by a 3-fold cross validation procedure, i.e. 124 subjects × 4 normal walking sequences were used for training and the remaining two sequences for testing. They reported an average recognition rate of 82.2% over all 11 viewing angles. With the same setup, our proposed method achieved an average CCR of 92.74%. For the TUM-IITKGP dataset, they used four configurations, i.e. normal walk, hands in pocket, backpack and clothing condition. For each configuration, the first walk sequence (either from left to right or from right to left) of 35 individuals was used as the testing set and the remaining three sequences as the training set. They reported an average recognition rate of 90%. With the same setup, our proposed method achieved an average CCR of 92.85%.
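The cross validation protocols quoted in these comparisons split each subject's sequences into training and test folds. The sketch below illustrates one way to generate such splits and to compute a correct classification rate. It assumes contiguous hold-out folds, which the compared works may not use, and the names `sequence_folds` and `ccr` are hypothetical.

```python
def sequence_folds(n_sequences, k):
    """Yield (train_indices, test_indices) for k-fold cross validation.

    Assumption: folds are contiguous blocks of sequence indices.
    """
    if k <= 0 or n_sequences % k != 0:
        raise ValueError("n_sequences must be a positive multiple of k")
    fold_size = n_sequences // k
    for fold in range(k):
        test = list(range(fold * fold_size, (fold + 1) * fold_size))
        train = [i for i in range(n_sequences) if i not in test]
        yield train, test


def ccr(predicted, actual):
    """Correct classification rate in percent."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("predicted and actual must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return 100.0 * correct / len(actual)


# Six sequences per subject, 3-fold: four for training and two for testing.
for train, test in sequence_folds(6, 3):
    print("train", train, "test", test)

# Toy example: three of four probe sequences assigned to the right subject.
print(ccr(["s1", "s2", "s3", "s9"], ["s1", "s2", "s3", "s4"]))
```

With six sequences, `k=2` gives three training and three test sequences per subject, and with four sequences, `k=4` gives three training sequences and one test sequence, matching the fold sizes quoted in this section. An average CCR is then the mean of the per-fold rates.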

Some recent attempts [2] [3] [8] [12] [14] [19] [20] [21] [31] extract features directly from the binarized silhouette. Practical conditions such as varying lighting, noise and poor illumination degrade binarized silhouettes. Therefore, the proposed approach uses the GEI as the gait representation to mitigate these practical problems.

Compared with some feature-level fusion attempts [28] [29] [30], the proposed feature-level fusion approach is tested on a considerably larger number of samples and subjects.

The extensive comparisons and promising experimental results show that the proposed approach outperforms the compared state-of-the-art methods under the same experimental settings and is also suited to real-time scenarios.
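The comparisons in this section can be summarized by tabulating the reported and achieved rates. The sketch below only restates figures quoted above and computes the difference in percentage points. The variable and function names are illustrative.

```python
# (reference, dataset or condition, reported rate %, proposed approach rate %)
COMPARISONS = [
    ("[28]", "CASIA B", 89.29, 92.39),
    ("[30]", "CASIA B", 79.01, 93.43),
    ("[29]", "CASIA B", 97.7, 98.92),
    ("[29]", "CASIA C", 94.0, 97.38),
    ("[15]", "CASIA C", 82.68, 88.23),
    ("[35]", "CASIA A lateral", 85.0, 100.0),
    ("[35]", "CASIA A frontal", 85.0, 98.75),
    ("[35]", "CASIA A oblique", 85.0, 98.75),
    ("[35]", "CASIA B", 82.2, 92.74),
    ("[35]", "TUM-IITKGP", 90.0, 92.85),
]


def gains(rows):
    """Return (reference, setting, gain in percentage points) for each row."""
    return [
        (ref, setting, round(ours - theirs, 2))
        for ref, setting, theirs, ours in rows
    ]


for ref, setting, gain in gains(COMPARISONS):
    print(f"{ref:>4}  {setting:<16}  +{gain:.2f} points")
```

Each row uses the experimental setup of the cited work, so the gains are comparable within a row but not across rows.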


X. Conclusion

This paper has explored a gait recognition approach based on the fusion of three human body components. To verify its efficiency, extensive experiments were carried out on four considerably large datasets and one mixed dataset. The more prominent gait covariates, such as multiple views, backpack, carrying, a small number of frames, clothing and different walking speeds, are addressed by considering different frame numbering sequences. The experimental results demonstrate the effectiveness and merits of the proposed algorithm under all of these covariates. Comparisons with current gait recognition methods show that the proposed method is a promising approach. In addition, the experimental results on indoor, outdoor, day and night sequences show that the proposed algorithm outperforms the current prominent attempts in the gait recognition literature.

Acknowledgement

The authors would like to thank the creators of the CASIA A, B and C and TUM-IITKGP datasets for making them publicly available.

References

[1] Liang Wang, Weiming Hu, Tieniu Tan, 2002. A New Attempt to Gait-based Human Identification. 1051-4651/02 © IEEE.

[2] A. Kale, N. Cuntoor, B. Yegnanarayana, A. N. Rajagopalan, and R. Chellappa, 2003. Gait analysis for human identification. In: AVBPA, pp. 706-14.

[3] Liang Wang, Tieniu Tan, Weiming Hu, and Huazhong Ning, 2003. Automatic Gait Recognition Based on Statistical Shape Analysis. IEEE Transactions on Image Processing, Vol. 12, No. 9.

[4] Liang Wang, Tieniu Tan, Huazhong Ning, Weiming Hu, 2003. Silhouette analysis based gait recognition for human identification. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), Vol. 25, No. 12, pp. 1505-1518.

[5] Jeffrey E. Boyd and James J. Little, 2005. Biometric Gait Recognition. M. Tistarelli, J. Bigun, and E. Grosso (Eds.): Biometrics School, LNCS 3161, pp. 19–42, © Springer-Verlag Berlin Heidelberg.

[6] J. Han and B. Bhanu, 2006. Individual recognition using gait energy image. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, pp. 316–322.

[7] Shiqi Yu, Daoliang Tan, and Tieniu Tan, 2006. A Framework for Evaluating the Effect of View Angle, Clothing and Carrying Condition on Gait Recognition. In Proc. of the 18th International Conference on Pattern Recognition (ICPR). Hong Kong, China.

[8] Bo Ye, Yumei Wen, 2006. A New Gait Recognition Method Based on Body Contour. In Proceedings of ICARCV, 1-4244-0342-1/06 © IEEE.

[9] Daoliang Tan, Kaiqi Huang, Shiqi Yu, and Tieniu Tan, 2006. Efficient Night Gait Recognition Based on Template Matching. In Proc. of the 18th International Conference on Pattern Recognition (ICPR06). Hong Kong, China.

[10] Martin Hofmann, Shamik Sural, Gerhard Rigoll, 2007. Gait Recognition in the Presence of Occlusion: A New Dataset and Baseline Algorithms. IEEE Conference on Computer Vision and Pattern Recognition, CVPR '07, ISSN: 1063-6919, pp. 1–8.

[11] Carsten Hoilund, 2007. The Radon Transform. Aalborg University. VGIS, 07gr721, November 12, 2007.

[12] Sungjun Hong, Heesung Lee, Imran Fareed Nizami, and Euntai Kim, 2007. A New Gait Representation for Human Identification: Mass Vector. 1-4244-0737-0/07, IEEE.

[13] D. Gafurov, 2007. A Survey of Biometric Gait Recognition: Approaches, Security and Challenges. NIK-2007 conferences.

[14] Edward Guillen, Daniel Padilla, Adriana Hernandez, Kenneth Barner, 2009. Gait Recognition System: Bundle Rectangle Approach. World Academy of Science, Engineering and Technology 34.

[15] Lee H., Hong S., and Kim E., 2009. An Efficient Gait Recognition with Backpack Removal. Hindawi Publishing Corporation. EURASIP Journal on Advances in Signal Processing. Article ID 384384, 7 pages, pp. 1-7, doi:10.1155/2009/384384.

[16] Wang, Liang, 2009. Behavioral Biometrics for Human Identification: Intelligent Applications. IGI Global, 530 pages, 31.

[17] Craig Martek, 2010. A Survey of Silhouette-Based Gait Recognition Methods.

[18] Md. Altab Hossain, Yasushi Makihara, Junqiu Wang, Yasushi Yagi, 2010. Clothing invariant gait identification using part based clothing categorization and adaptive weight control. Pattern Recognition, 43(6): 2281-2291, DOI: 10.1016/j.patcog.2009.12.020.

[19] M. Pushpa Rani and G. Arumugam, 2010. An Efficient Gait Recognition System for Human Identification Using Modified ICA. International Journal of Computer Science and Information Technology, Vol. 2, No. 1.

[20] Lili Liu, Yilong Yin, and Wei Qin, 2010. Gait Recognition Based on Outermost Contour. J. Yu et al. (Eds.): RSKT 2010, LNAI 6401, pp. 395–402 © Springer-Verlag Berlin Heidelberg.

[21] Jyoti Bharti, M. K. Gupta, 2011. Human Gait Recognition using All Pair Shortest Path. 2011 International Conference on Software and Computer Applications, IPCSIT vol. 9 © IACSIT Press, Singapore.

[22] BEN Xianye, AN Shi, WANG Jian, LIU Haiyang, 2011. A Subblock Matrix Approach to Data-reduction in Gait Recognition. Advanced Materials Research, Vols. 181-182, pp. 902-907. Trans Tech Publications.

[23] Hayder Ali, Jamal Dargham, Chekima Ali, Ervin Gobin Moung, 2011. Gait Recognition using Gait Energy Image. International Journal of Signal Processing, Image Processing and Pattern Recognition, Vol. 4, No. 3.

[24] M. Hofmann and G. Rigoll, 2012. Improved Gait Recognition Using Gradient Histogram Energy Image. IEEE International Conference on Image Processing. Orlando, FL, USA, pp. 1389-1392.

[25] P. Theekhanont, W. Kurutach, and S. Miguet, 2012. Gait Recognition using GEI and Pattern Trace Transform. International Symposium on Information Technology in Medicine and Education (ITME), vol. 2, pp. 936-940.

[26] Md. Rokanujjaman, Md. Shariful Islam, Md. Altab Hossain, Yasushi Yagi, 2013. Effective part based gait identification using frequency domain gait entropy features. Multimedia Tools and Applications, 74(9), DOI: 10.1007/s11042-013-1770-8.

[27] Rohit Katiyar and Vinay Kumar Pathak, 2013. Gait Recognition Based on Energy Deviation Image Using Fuzzy Component Analysis. International Journal of Innovation, Management and Technology, Vol. 4, No. 1.

[28] Xiaoxiang LI and Youbin CHEN, 2013. Gait Recognition Based on Structural Gait Energy Image. Journal of Computational Information Systems 9: 1 (2013), pp. 121–126.

[29] Yumi Iwashita, Koji Uchino and Ryo Kurazume, 2013. Gait Based Person Identification Robust to Changes in Appearance. Sensors, 13 (6) (2013), pp.7884-7

[30] Mohan Kumar H P and Nagendraswamy H S, 2014. Symbolic Representation and Recognition of Gait: An Approach Based on LBP of Split Gait Energy Images. Signal & Image Processing: An International Journal (SIPIJ), Vol. 5, No. 4.

[31] Mohan Kumar H P, Nagendraswamy H S, 2014. Fusion of Silhouette Based Gait Features for Gait Recognition. International Journal of Engineering and Technical Research (IJETR), ISSN: 2321-0869, Volume 2, Issue 8.

[32] Suvarna Shirke and Soudamini Pawar, 2014. Human Gait Recognition for Different Viewing Angles using PCA. International Journal of Computer Science and Information Technologies, Vol. 5 (4), pp. 4958-4961.

[33] Mohan Kumar H P, Nagendraswamy H S, 2014. Change Energy Image for Gait Recognition: An Approach Based on Symbolic Representation. IJIGSP, vol. 6, no. 4, pp. 1-8. DOI: 10.5815/ijigsp.2014.04.01

[34] V. G. Manjunatha Guru and V. N. Kamalesh, 2015. Vision based Human Gait Recognition System: Observations, Pragmatic Conditions and Datasets. Indian Journal of Science and Technology, ISSN (Print): 0974-6846, ISSN (Online): 0974-5645, Vol. 8(15), 71237.

[35] Deepak N.A., Sinha U.N., 2016. Topic Model for Person Identification using Gait Sequence Analysis. International Journal of Computer Applications, ISSN 0975-8887, Volume 133, No. 7.

[36] Manjunatha Guru V G, Kamalesh V N, Dinesh R, 2016. Human Gait Recognition Using Four Directional Variations of Gradient Gait Energy Image. In Proc. of the IEEE International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India.