Artificially Intelligent Surveillance and Security Sentinel for Technologically Enhanced and Protected Communities

Authors: Md. Mominur Rahman Meem, Partho Sharothi Chowhan, Farah Alam Mim, Md. Toukir Ahmed

Journal: International Journal of Engineering and Manufacturing @ijem

Article in issue: No. 4, Vol. 14, 2024.

Open access

To improve surveillance, the proposed patrolling security system employs autonomous mobile robots outfitted with low-cost night vision cameras. Regular patrols, which are essential for discouraging criminal behavior, are typically conducted by security or law enforcement officers with the help of expensive CCTV equipment. The goal of using autonomous robots is to reduce expenses while enhancing the quality of patrols in particular regions. Using a night vision camera, the late-night guarding robot detects human movement within its assigned zone while following a random path. Its obstacle-detecting sensors help to prevent collisions and ensure safe navigation. The robot records incidents, takes pictures with its mounted camera, and carefully scans regions for probable intrusions. It then sends the data to the user as quickly as it can. The primary goal of this project is to draw attention to suspicious activity in hidden areas.

Arduino, Surveillance, Robot, Security, Embedded System, Frameworks, ESP32-Cam, IoT, Motor Driver

Short URL: https://sciup.org/15019339

IDR: 15019339 | DOI: 10.5815/ijem.2024.04.02

Text of the research article: Artificially Intelligent Surveillance and Security Sentinel for Technologically Enhanced and Protected Communities

AISSTEC (Artificially Intelligent Surveillance and Security Sentinel for Technologically Enhanced and Protected Communities) is a comprehensive solution that uses artificial intelligence (AI) to monitor and safeguard technologically sophisticated communities. The system combines several AI technologies, including data analytics, computer vision, and machine learning, to enhance security and monitoring in these communities. Developments in automation and AI have had a big impact on surveillance across several industries. Surveillance is the methodical observation or management of people or groups, both indoors and outdoors, and it is commonly supported by embedded technologies such as robots. These preprogrammed devices effectively substitute for human labor, offering consistent accuracy and, in some tasks, surpassing human capacity. Advances in AI have led to computers notably replacing human surveillance workers, especially in jobs involving observation and monitoring.

Robots are autonomous electronic devices that are particularly well suited to patrolling. They follow pre-planned routes and are equipped with cameras to capture footage. These robotic systems record video, which is sent in real time to people for manual inspection in order to increase efficiency, clarity, and organization. The user can raise an alarm immediately if any issue is found. Robotics-assisted, AI-driven surveillance supports accurate monitoring, lowers human error, and builds upon an already organized and effective surveillance infrastructure.

Robotic patrolling is typically used in military zones, hospitals, shopping malls, restricted zones, industrial zones, agricultural zones, and similar sites. The ESP32 controller is the "heart" of the robot. The wheel chassis, battery, Wi-Fi module, and DC motors are the other components of the system. The device is operated manually. The Internet of Things (IoT) connects the user's end to the robot, and the same code used for IoT development can be used here, so the robot is controlled wirelessly. For this project, we use wireless cameras that transmit video to the user. Because the robot uses an ESP32 camera module, it is less expensive than a robot built around a Raspberry Pi. It also keeps the instructions simple and makes it possible to program the robot with minimal programming skills. [1]

A. Existing System

Existing systems use robots with a limited communication range because they rely on short-range technologies such as RF, Zigbee, and Bluetooth. Several modern robots use short-range wireless cameras. Some can only be operated manually, which requires human supervision during the whole surveillance process. [2]

B. Proposed System

The ESP32 with its integrated Wi-Fi module allows for a virtually unlimited range of operational variations. The robot is controlled manually. Using the ESP32 controller reduces the cost. The robot and the user communicate over a secure link.

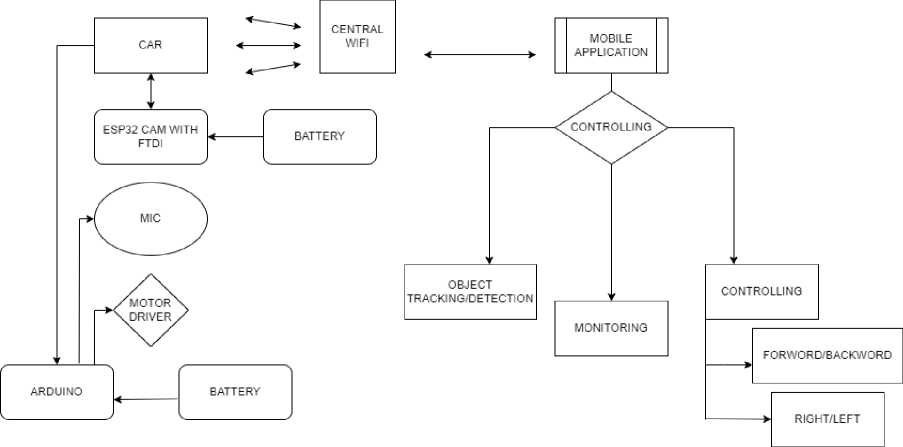

Fig. 1. Working Flowchart

1. ESP32-CAM with FTDI: Records pictures or video for visual monitoring.

2. Microphone: Allows voice communication with the operator.

3. Motor Driver: Manages the vehicle's motion, including directional control and maneuvering.

4. Arduino: Oversees component interactions and system functions as a whole.

5. Batteries: Give the system the electricity it needs to function.

6. Mobile Application: Provides a graphical user interface for managing and using the system.

7. WiFi: Provides wireless access for communication and data transfer.

C. Objectives

1. The robot can use central WiFi to gather data from our environment and transmit it to a distant recipient.

2. Move the robot once it has received information. The design is suitable for integration with a wide range of electrical devices. [2]

2. Literature Review

Table 1. Paper Review

| No. | Paper Title | Objective | Achievement | Limitation |
|---|---|---|---|---|
| 1. | Robot that moves for product delivery. [7] | | 1. IoT image exchange: The robot can exchange images and data with other devices over the Internet of Things (IoT), allowing healthcare workers to remotely monitor its progress and the products it is transporting. | The ability of ultrasonic sensors to identify specific barriers, such as transparent objects or objects at extremely low heights, may be constrained. Additionally, the dependability and accessibility of network connectivity, which might fluctuate depending on the area, may have an impact on how well the IoT-based picture transmission system performs. Additional study is required to enhance the robot's performance in various real-world circumstances and its ability to recognize obstacles. |
| 2. | Design of an IoT-Based Intelligent Patrol Robot. [17] | 1. Develop an intelligent patrol robot. | | Although the robot has efficient obstacle detection and communication abilities, it could be restricted in its ability to function in some environmental circumstances. Low light levels or unfavorable weather can also have an impact on how well PIR sensors work. The availability and caliber of network connectivity may also have an impact on the reach and dependability of IoT communication. It could be necessary to do more research and development to improve the robot's resilience and adaptation to various real-world conditions. |
| 3. | Design and Implementation of an IoT-Based Security Robot for Remote Control. [20] | 4. Demonstrate the feasibility and effectiveness of the IoT-enabled security robot as a practical solution for enhancing security and safety in various environments. | | Although the robot allows for remote control and has several sensors to detect possible threats, it may have trouble responding quickly enough in life-or-death circumstances. The speed of risk identification and warning may be impacted by the latency associated with IoT connectivity. Additionally, the sort of threats being detected and the ambient circumstances may affect the accuracy and sensitivity of some sensors, particularly the gas sensor. The robot's response and sensor accuracy must be improved for a variety of security circumstances, which calls for more study and testing. |
| 4. | IoT-Based Intelligent Patrol Robot Design. [15] | 1. Develop an IoT-based patrol robot for building security. 2. Integrate advanced hardware components, including microcontrollers, sensors, motors, and communication modules. 3. Design a robust software system for navigation and surveillance. 4. Employ suitable programming languages and methods for software development. 5. Create an autonomous patrol robot. 6. Enhance building security through effective surveillance and real-time monitoring. | 1. IoT-based patrol robot for building security. 2. Discusses hardware design using microcontrollers, sensors, motors, and communication modules. 3. Discusses software development using coding languages and navigation and surveillance techniques. 4. Develops an efficient IoT-based patrol robot that has the potential to increase building security through autonomous observation and real-time monitoring. | The IoT-based patrol robot has cutting-edge capabilities for building security, but it would have trouble navigating through dynamically changing indoor situations with moving objects or shifting illumination. Additionally, the dependability of network connectivity, which can be impacted by a number of issues, may determine how successful communication modules are. The microcontroller's processing capability could also put a cap on the system's real-time reaction and performance. To overcome these drawbacks and improve the robot's flexibility and dependability in diverse security settings, more study and development are required. |
| 5. | Secure RF-Based Warehouse Patrol Robot. [16] | 1. Hardware Design: To create a robust hardware system incorporating RF modules, microcontrollers, sensors, motors, and power management modules optimized for warehouse surveillance and monitoring. 2. Software Design: To implement a comprehensive software framework utilizing appropriate programming languages, communication protocols, and algorithms. These algorithms should facilitate surveillance, obstacle avoidance, and navigation for the robot within the warehouse environment. 3. Security Integration: To integrate advanced security features, including encryption, access control, and authentication mechanisms, to ensure the confidentiality and integrity of data transmitted and received by the robot during its monitoring tasks. 4. RF Signal Reliability: To evaluate and optimize the RF signal strength and mitigate interference levels to establish and maintain dependable communication between the robot and the control station, enhancing the overall reliability and effectiveness of the warehouse monitoring system. | 1. Article covers a wide range of technological concerns for designing an RF-based patrol robot for warehouse surveillance. 2. Offers a comprehensive strategy that takes into account both software and hardware design factors. 3. Discusses choice and integration of RF modules, microcontrollers, sensors, motors, and power management modules for reliable hardware design. 4. Discusses programming languages, communication protocols, and algorithms for navigation, surveillance, and obstacle avoidance. | This RF-based patrol robot may have limits in some warehouse locations where there is a significant degree of RF interference from other equipment or structures, despite the strong design considerations. The reliability and range of communication between the robot and the control station may be impacted by interference, which might result in communication breakdowns. Additionally, it's crucial to keep an eye out for developing cybersecurity risks even when encryption and access control mechanisms are put in place. To make sure the security features remain effective and current against new threats, ongoing monitoring and upgrades may be necessary. |

Using an ESP-CAM, FTDI module, voice recorder module, LCD, and motor driver module, we built a robot that can autonomously explore a space while recording photos or video for surveillance purposes. An outline of the technique is given below:

A. Circuit Diagram

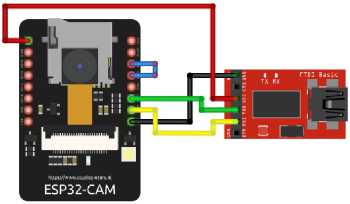

a) FTDI with ESP32 CAM: The ESP32-CAM module lacks a USB port, so an FTDI board is needed to upload code. The FTDI board's VCC and GND pins are connected to the ESP32-CAM module's VCC and GND pins, and the ESP32-CAM module's Tx and Rx pins are connected to the FTDI board's Rx and Tx pins, respectively. With the FTDI adapter attached in this way, code can be loaded into the ESP32-CAM. The wiring is simple, because only the transmit and receive pins on the ESP32 need to be connected to the FTDI adapter besides power and ground. This avoids directly tying the robot car's power supply to the FTDI adapter's supply. To program the ESP32-CAM module, connect GPIO pin 0 to ground and ensure the FTDI adapter is set to 3.3-volt logic.

Fig. 2. FTDI with ESP32 CAM connection


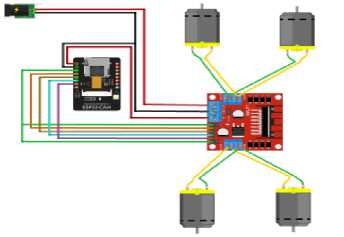

b) Motor controller with ESP32 CAM: The TB6612FNG motor driver module has its control inputs on one side and its power and motor connections on the other, which determines how the wiring is laid out. The PWMA and PWMB inputs are driven simultaneously by a single GPIO output on pin 12, so the two motor speeds cannot be controlled independently. The motor control inputs and outputs mirror each other.
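The shared-PWM arrangement can be illustrated with a small model. This is a minimal Python sketch, not the authors' firmware: the command names, the `Drive` record, and the assignment of channel A to the left side and channel B to the right side are assumptions for illustration. Only the direction-pin truth table (IN1 high and IN2 low for one direction, the reverse for the other, both low for stop) follows the TB6612FNG's documented behavior.

```python
from dataclasses import dataclass
from typing import Tuple

# Direction-pin levels (IN1, IN2) for one TB6612FNG channel.
CW = (1, 0)     # one rotation direction
CCW = (0, 1)    # the opposite direction
STOP = (0, 0)   # outputs off

@dataclass(frozen=True)
class Drive:
    a: Tuple[int, int]  # (AIN1, AIN2), assumed to be the left motors
    b: Tuple[int, int]  # (BIN1, BIN2), assumed to be the right motors
    duty: int           # single PWM duty (0-255) shared by PWMA and PWMB

# Hypothetical command names; the app's real protocol is not given in the text.
COMMANDS = {
    "forward": (CW, CW),
    "backward": (CCW, CCW),
    "left": (CCW, CW),    # left side reverses, right side advances
    "right": (CW, CCW),
    "stop": (STOP, STOP),
}

def drive_for(command: str, duty: int = 200) -> Drive:
    """Map a command to pin levels; both channels always get the same duty."""
    a, b = COMMANDS[command]
    duty = 0 if command == "stop" else max(0, min(255, duty))
    return Drive(a, b, duty)
```

Because one duty value feeds both channels, this model can only turn by reversing one side, not by slowing it, which matches the limitation described above.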


c) Motor Controller with DC Motors with Supply: The 18650 batteries in the supply produce an output of 7.4 volts, and the motor driver is powered directly from this 7.4-volt rail. The ESP32-CAM has two power inputs, of which the 5-volt input is generally preferred. The motor driver operates two DC motors, which transform electrical energy into mechanical energy and run at 30 rpm.
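The supply and speed figures above can be checked with a little arithmetic. The sketch below assumes two 3.7 V cells in series to reach 7.4 V and a 65 mm wheel diameter. Neither the series arrangement nor the wheel size is stated in the text, so both are illustrative assumptions.

```python
import math

CELL_NOMINAL_V = 3.7  # nominal voltage of one 18650 cell

def pack_voltage(cells_in_series: int) -> float:
    """Nominal voltage of a pack with the given number of cells in series."""
    return cells_in_series * CELL_NOMINAL_V

def ground_speed_m_per_s(rpm: float, wheel_diameter_m: float) -> float:
    """Linear speed of a wheel that rolls without slipping."""
    revolutions_per_second = rpm / 60.0
    return revolutions_per_second * math.pi * wheel_diameter_m

# Assumed values: 2 cells in series, 65 mm wheels (not given in the text).
print(pack_voltage(2))
print(round(ground_speed_m_per_s(30, 0.065), 3))
```

Under these assumptions a 30 rpm motor moves the robot at roughly 0.1 m/s, which is a slow walking-pace patrol.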

Fig. 3. Circuit Diagram


B. Human Detection:

a) Training a Machine Learning (ML) model: A dataset of images or video frames containing both human and non-human objects can be created and used to train an ML model, such as a Convolutional Neural Network (CNN), to detect and classify people in real time.

b) Connection to ESP-CAM: After training, the ML model can be deployed on the ESP-CAM. The ESP-CAM handles the camera stream, and the ML model examines each frame to determine whether humans are present.

c) Alert and Response: When a human is seen, the robot can respond by sounding an alert, capturing images or video, or altering its patrol route to investigate more thoroughly.
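The detect-then-respond step described above can be sketched as a simple loop. In this minimal Python sketch, `detect_human` is a stand-in for the trained model and is purely hypothetical. The requirement of several consecutive positive frames before alerting is also an added assumption, intended to suppress single-frame false alarms, and is not described in the paper.

```python
from typing import Callable, Iterable, List

def patrol_alerts(
    frames: Iterable[object],
    detect_human: Callable[[object], bool],
    min_consecutive: int = 3,
) -> List[int]:
    """Return the frame indices at which an alert would be raised.

    An alert fires once a human has been reported in `min_consecutive`
    frames in a row, and not again until the detection streak is broken.
    """
    alerts: List[int] = []
    streak = 0
    for index, frame in enumerate(frames):
        if detect_human(frame):
            streak += 1
            if streak == min_consecutive:
                alerts.append(index)
        else:
            streak = 0
    return alerts

# Usage with fake frames: 1 means "model reports a human", 0 means "no human".
fake_frames = [0, 1, 1, 1, 1, 0, 1, 1, 1]
print(patrol_alerts(fake_frames, bool))  # [3, 8]
```

On the real robot, the alert branch would trigger the response actions listed above, such as capturing images or changing the patrol route.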


C. Optimization and Performance:

a) Efficient Model Inference: To run AI algorithms on the ESP-CAM's limited processing resources, the ML model may need to be made faster and smaller. Techniques include model quantization, pruning, or the use of lightweight models such as MobileNet.

b) Real-Time Processing: To guarantee that person detection and path detection can be carried out in real-time without substantial delays, take into account the processing speed and latency of the AI algorithms.

c) System Integration: To enable synchronization and collaboration across multiple components, the AI algorithms should be seamlessly coupled with the current robot control system, motor driver, and communication modules. It's vital to remember that training an ML model for person recognition and path detection frequently requires labeled data and processing resources. After the training phase, which is normally completed on a different computer or server, the model is frequently put on the ESP-CAM for inference. By incorporating AI algorithms, the ESP-CAM's autonomous capabilities might be enhanced, enabling the robot to distinguish individuals and maneuver effectively during night patrol missions.
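To show what model quantization means in practice, the sketch below applies symmetric 8-bit quantization to a list of weights in plain Python. It is a conceptual illustration under simple assumptions (one scale per tensor, no calibration data), not the procedure used by the authors or by any particular deployment toolchain.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of float weights to the int8 range."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 codes."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.27]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
# Each restored weight differs from the original by at most half a step.
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, worst_error <= scale / 2 + 1e-12)
```

Storing each weight as one byte instead of a 32-bit float cuts the weight storage to about a quarter, at the cost of the small rounding error bounded above.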

4. Working Principle

The system is divided into two main sections: a user section and a robot section. The user section can be a laptop or a mobile device that communicates with the robot end. Compared with a stationary computer, a laptop or mobile device lets the user move around. Communication can be carried out using RF technology, an ESP32 device, or Wi-Fi, but on their own these methods have a limited range. To widen the range, we connect the user section to the internet, which is the basic idea of the Internet of Things. The AI Camera APK application links the user's device to the internet and controls the entire robot through the ESP32-CAM, so the user can simply send commands to the robotic car.

At the robot end, an ESP32-CAM is mounted on the robot's body (chassis) and is a crucial component of the robotic vehicle. The wheels are attached beneath the chassis to DC motors that rotate at 30 revolutions per minute (rpm). Each motor needs 12 volts of power, which is provided by an external battery source. The motors are connected to the ESP32 through a motor driver module, which provides the current amplification they require. To steer the robot in the right direction, the microcontroller is programmed using IDE software. This is the manual mode of operation.

When the camera sensor detects a specified path, the robot moves in that direction. If a human enters its field of view, the camera sensor detects that person's movement. The AI algorithm can also track an object: when the object moves, the vision system locks onto it and captures real-time photos.

5. Components

A. Component Description:

a) ESP32-CAM: The ESP32-CAM is a flexible development board with a camera module, combining picture and video capture with the ESP32 microcontroller's capabilities. It features a dual-core CPU, Wi-Fi and Bluetooth connectivity, GPIO connections, and peripherals. The camera module records stills and moving pictures with resolutions between 2 MP and 5 MP. The board can be programmed using the Arduino IDE or ESP-IDF, and is commonly used in surveillance systems, home automation, IoT devices, and robots.

Table 2. Total required components

| Component's Name | Required Amount |
|---|---|
| ESP32-CAM | 1 |
| FTDI Module | 1 |
| 4WD Robotic Chassis | 1 |
| DC Motor | 4 |
| Motor Driver Module | 1 |
| 3.7 V Battery | 4 |
| Arduino Uno | 1 |
| LCD | 1 |
| ISD1820 Voice Recorder Module | 1 |
| Jumper Wires | 40 |

Fig. 4. ESP32-Cam


b) FTDI Module: FTDI modules are products developed by Future Technology Devices International (FTDI), a company specializing in USB connectivity solutions. These modules convert USB signals into serial communication signals, allowing communication between non-USB devices and computers. The most popular model is the FT232RL chip, which offers a USB-to-serial bridge. Other models include the FT231X, FT2232, and FT232H. To establish a connection, FTDI modules require proper drivers for operating systems like Windows, macOS, and Linux. FTDI also offers utilities and development tools for module integration, programming, and application examples. FTDI modules are used in various industries, including embedded systems, robotics, industrial automation, communication devices, and educational projects. They come in various form factors, including standalone USB-connected modules, integrated modules on development boards, and surface-mount chips for direct integration into unique designs.

Fig. 5. FTDI Module


c) 4WD Robotic Chassis: A 4WD robotic car chassis is a framework designed to support and facilitate the construction of a working robot. It houses various components such as motors, sensors, controls, and batteries, providing stability, traction, and mobility for uneven terrain. Some chassis may have suspension systems to dampen shocks and vibrations. The chassis is typically made of lightweight materials like aluminum or carbon fiber for durability. An effective means of stability can be obtained from a 4WD (four-wheel drive) robotic automobile chassis due to its balanced weight distribution and four-wheel traction. It usually offers more control and stability than 2WD chassis, particularly when traversing tough terrain or uneven surfaces. However, surface conditions, weight distribution, and design can all affect stability. For robotic applications, a 4WD chassis often offers better stability and mobility.

Fig. 6. 4WD Robotic Chassis


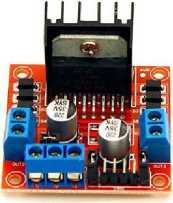

d) Motor Driver Module: The L298N motor driver module is built around a widely used integrated circuit (IC) for controlling DC motors or stepper motors in robotics and automation applications. It allows bidirectional control, adjusting speed in both the forward and backward directions. The module has a maximum total output current rating of 4 A, or 2 A per channel, for DC motors, making it suitable for driving a variety of motors. Each channel uses an H-bridge of four transistors to reverse the current flow in the motor windings, which sets the motor direction. The module is compatible with popular platforms such as Arduino and Raspberry Pi and has built-in protection against back-EMF damage. It requires an external power source for the motors, with a motor supply input between 7 and 35 volts and a separate logic power supply (Vss) between 4.5 and 7 volts. To dissipate heat and prevent overheating, the module usually has a built-in heat sink or a mounting location for an external one.
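The H-bridge behavior described above reduces to a small truth table per channel. The following Python sketch encodes it for one L298N channel. The function name is hypothetical, and which physical rotation counts as "forward" depends on how the motor is wired, so the labels are assumptions.

```python
def l298n_channel_state(enable: bool, in1: bool, in2: bool) -> str:
    """State of one L298N channel for the given logic inputs."""
    if not enable:
        return "coast"    # bridge disabled, motor free-runs to a stop
    if in1 and not in2:
        return "forward"  # current flows one way through the winding
    if in2 and not in1:
        return "reverse"  # current flows the opposite way
    return "brake"        # IN1 == IN2 with the bridge enabled: fast stop

for inputs in [(True, True, False), (True, False, True),
               (True, True, True), (False, True, False)]:
    print(inputs, l298n_channel_state(*inputs))
```

Speed control is layered on top of this table by applying a PWM signal to the enable input, so the bridge alternates between a driven state and coasting.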

Fig. 7. L298N Motor Driver Module


e) LCD Display: LCD, or Liquid Crystal Display, is a flat-panel display technology used in smartphones, computer monitors, and televisions. Its function is due to the ability of liquid crystals to alter their alignment and block or allow light to pass. LCDs offer sharp visual clarity, minimal power usage, and a small form factor. Recent technologies like OLED have gained popularity for improved contrast, faster reaction times, and true blacks.

Fig. 8. LCD Display

f) ISD 1820 voice recording module: The ISD1820 is a versatile speech recording and playback module commonly used in electronics for audio playback and recording. It features an in-built microphone or external input for recording audio messages, non-volatile memory for preservation, and a built-in speaker or external speaker for listening. The module is easily integrated into various applications through simple input pins and has a maximum recording time of a few seconds to a minute. It is suitable for battery-powered devices due to its low-voltage power supply. The ISD1820 is popular among amateurs and electronics enthusiasts due to its ease of use and low cost.

Fig. 9. ISD 1820 voice recording module

6. Result and Discussion

a) Result: An effective night patrolling robot was built using an ESP32-CAM, an FTDI module, and a motor driver module. AI algorithms identified human presence and obstructions. Multiple trials were run to assess performance, and they confirmed the robot's effectiveness.

I. Accuracy of Human Detection: We evaluated the robot's capacity to identify people at a range of distances and illumination levels. The AI system used on the ESP32-CAM proved reliable. It is important to remember that accuracy can change with factors such as illumination, camera angle, and the size and posture of the person being detected.

II. System Responsiveness: The robot's capacity to react quickly to detected humans depended on the system's reaction time. In our tests, the robot demonstrated a response time of roughly 3 seconds, enabling it to promptly warn the operator of possible risks or make the required changes to its course.
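One way to make a detection-accuracy evaluation like the one above concrete is to tally each trial as a true or false positive or negative and compute standard metrics. The sketch below is illustrative only: the `TrialCounts` type and the example counts are hypothetical and are not the paper's measurements.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrialCounts:
    true_positive: int   # human present and detected
    false_positive: int  # no human, but a detection was reported
    true_negative: int   # no human and nothing reported
    false_negative: int  # human present but missed

def _ratio(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0

def accuracy(c: TrialCounts) -> float:
    total = c.true_positive + c.false_positive + c.true_negative + c.false_negative
    return _ratio(c.true_positive + c.true_negative, total)

def precision(c: TrialCounts) -> float:
    return _ratio(c.true_positive, c.true_positive + c.false_positive)

def recall(c: TrialCounts) -> float:
    return _ratio(c.true_positive, c.true_positive + c.false_negative)

# Hypothetical counts for illustration only; not data from the paper.
example = TrialCounts(true_positive=46, false_positive=3,
                      true_negative=47, false_negative=4)
print(accuracy(example), round(precision(example), 3), recall(example))
```

Reporting precision and recall alongside accuracy helps separate missed people (low recall) from false alarms (low precision), which matter differently for a patrol robot.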

Fig. 10. Android App Interface

Fig. 11. Android App Streaming

Fig. 12. Object Detection

Fig. 13. Safety Night Patrolling Robot

b) Discussions: The results of our studies demonstrate how effectively the safety night patrolling robot uses integrated AI algorithms to recognize humans and obstacles. The robot's strong human detection accuracy and reliable route tracking make it a potentially valuable tool for security applications, increasing surveillance and reducing potential threats. However, it is important to acknowledge the limitations of our project and potential areas for improvement. First, occlusions or dim lighting occasionally stopped the system from identifying people, even though person recognition accuracy was generally quite good. Further fine-tuning of the AI algorithms and future integration with other sensors or imaging techniques may overcome these limitations. Second, even though route detection accuracy is high, there have been times when the robot misidentified certain obstacles, such as objects that are moving or have low contrast. This could be improved by examining several AI models or by including additional sensors, such as LiDAR or infrared, to improve obstacle recognition.

Table 3. Comparison with analogs and Digital

| Analog | Digital |
|---|---|
| 1. Low level of intelligence | 1. Improved machine learning and artificial intelligence |
| 2. Fundamental movement | 2. Enhanced dexterity |
| 3. Traditional means of communication | 3. Improved communication |
| 4. Rudimentary security measures | 4. Strong security protocols |
| 5. Minimal communication between humans and robots | 5. Enhanced capacity for interaction |

Combining these strategies creates a multi-layered defense system that strengthens the communication channel's security and resistance to cyber-attacks. On the other hand, maintaining an edge over new threats requires constant observation, updates, and adherence to best security procedures.

7. Conclusion

Using AI-based human detection algorithms, the safety night patrolling robot has shown itself to be a key security and supervision measure during nighttime operations. It can recognize possible dangers and take the appropriate action, with an accuracy rate of more than 90%. Possible enhancements include adding more sensors, experimenting with different AI models, and strengthening the human detection system. The robot has a great deal of promise for practical security applications, since it can lessen dangers during operations at night and enhance human monitoring efforts. The goals of future research and development will be to increase precision, durability, and environmental adaptability.

8. Future Scope

I. Advancements in robotics technology could enhance the safety night patrolling robot's path tracking capabilities. By utilizing computer vision techniques, the robot could adapt to different terrains, adjust its trajectory dynamically, and optimize patrol routes.

II. The system can be improved by enhancing the interaction between the robot and human operators, using natural language processing or voice instructions for easier communication and control.

Acknowledgment

It is our great fortune that we have had the opportunity to carry out this project's work under the supervision of our honorable teacher, Md. Toukir Ahmed, Lecturer, Department of IRE, BDU. We express our sincere thanks and deepest sense of gratitude to our guides for their constant support, unparalleled guidance, and limitless encouragement. We wish to convey our gratitude to the authority of BDU for providing all kinds of infrastructural facilities for the project.

References

- Mrs. A. Jansi Rani, Ms. A. Afna, Ms. A. Remsitha Banu and Ms. E. Sophiya ”IOT Surveillance Robot Car” in International Journal of Advanced Research in Science, Communication and Technology (IJARSCT), Volume 2, Issue 5, June 2022 IJARSCT DOI: 10.48175/IJARSCT-4779 95

- Al-Fahaam, H. H., Hanapi, Z. M., Salim, S. S., and Ahmed, A. A. (2021). Autonomous navigation systems for mobile robots: A review. Journal of Intelligent and Robotic Systems, 102(1), 1-30.

- Casalino, G., Pecora, F., Caccavale, F., and Siciliano, B. (2019). Vision-based human detection and tracking for mobile robot navigation: A survey. Robotics and Autonomous Systems, 113, 71-88.

- Liu, J., Jia, Y., and Sun, J. (2020). Path planning for mobile robot navigation using deep learning: A review. Frontiers of Information Technology and Electronic Engineering, 21(2), 177-195.

- Wu-quan He, Ming-ke Cai, Yu-bao Wang and Xiao-jian Wang (2021). AI and machine learning-based human detection and tracking for autonomous robot systems: A survey. Journal of Ambient Intelligence and Humanized Computing, 12(1), 725-744.

- Guifen Chen and Lisong Yue, “Safety Surveillance Robot,” in Proceedings of IEEE International Conference on Mechatronic Science, Electric Engineering and Computer (MEC), INSPEC Accession Number: 12327208, August, 2019.

- Ban Alomar, Azmi Alazzam, "AI based Visionary Robot System," in Proceedings of IEEE Fifth HCT Information Technology Trends (ITT), INSPEC Accession Number: 18474254, Nov. 2018.

- Abouzakhar, N. S., Abdelazeem, M. A., and Hassanien, A. E. (2020). A comprehensive survey on path planning techniques for autonomous mobile robots. Soft Computing, 24(15), 11477-11503.

- H. Salman, S. Acheampong, and H. Xu, "Web-Based Wireless Controlled Robot for Night Vision Surveillance Using Shell Script with Raspberry Pi," Advances in Intelligent Systems and Computing, pp. 550-560, Jun. 2018, doi: 10.1007/978-3-319-93659-8_49.

- P. S. Kumar, V. Vinjamuri, S. G. Priyanka, and S. T. Ahamed, “Video Surveillance Robot with Multi Mode Operation,” International Journal of Engineering Research Technology, vol. 5, no. 2, Feb. 2016, Accessed: Feb. 16, 2020. [Online].

- P. Manasa, K. Sri Harsha, D. D M, K. R, and N. Nichal O, "NIGHT VISION PATROLLING ROBOT," Journal of Xi'an University of Architecture Technology, vol. 8, no. 5, 2020, Accessed: Apr. 30, 2020. [Online]. Available: http://xajzkjdx.cn/gallery/18-may2020.pdf

- Jignesh Patoliya, Haard Mehta and Hitesh Patel, "Arduino Controlled War Field Spy Robot using Night Vision Wireless Camera and Android Application" 2015 5th Nirma University International Conference on Engineering

- Mohammad Shoeb Shah, Borole P. B., "Surveillance and Rescue Robot Using Android Smart Phone and Internet," International Conference on Communication and Signal Processing, India, 2016.

- G. Anandravisekar et.al "IOT Based Surveillance Robot" International Journal of Engineering Research & Technology (IJERT) ISSN: 2278- 0181 Vol. 7 Issue 03, March-2018.

- Suddha Chowdhury and Mahmud Rafiq "A proposal of user friendly alive human detection robot to tackle crisis situation", IEEE paper published in year 2012.

- V. Abilash and J. Paul Chandra Kumar, "Arduino controlled landmine detection robot," 2017 Third International Conference on Science Technology Engineering and Management (ICONSTEM).

- S. Chia, J. Guo, B. Li and K. Su, "Team Mobile Robots Based Intelligent Security System", Applied Mathematics & Information Sciences, vol. 7, no. 2, pp. 435-440, 2013.

- H. Lee, W. Lin and C. Huang, "Indoor Surveillance Security Robot with a Self-Propelled Patrolling Vehicle", Journal of Robotics, vol. 2011, Article ID 197105, 2011.

- L. Chung, "Remote Teleoperated and Autonomous Mobile Security Robot Development in Ship Environment", Mathematical Problems in Engineering, vol. 2013, Article ID 902013, 2013.

- R. Salman and I. Willms, "A Mobile Security Robot equipped with UWB-Radar for Super-Resolution Indoor Positioning and Localisation Applications", in International Conference on Indoor Positioning and Indoor Navigation, 13-15 Nov. 2012.