Automated Wall Painting Robot for Mixing Colors based on Mobile Application

Authors: Ayman Abdullah Ahmed Al Mawali, Shaik Mazhar Hussain

Journal: International Journal of Engineering and Manufacturing @ijem

Issue: No. 1, Vol. 13, 2023.

Free access

The final stage of a building project, painting the building according to the adopted design, is where most real estate developers and contractors struggle. Extensive painting takes a great deal of time, effort, and accuracy from the firm doing the work. It can also be difficult to decide on the precise color grades for the design and to calculate the right amount of paint for the job. These activities are extremely expensive, and their complex implementation is accompanied by worry and skepticism. These are the motivations behind the development of a painting machine that blends colors. Artificial intelligence is used in the machine's design to make it efficient and quick. Selecting the proper colors requires high accuracy, and this machine is distinguished by its ability to select the proper color tone. The color sensor (TCS34725 RGB) determines the relevance and accuracy of the desired color by comparison with the system database, with the assistance of the light sensor (STM32), which measures the degree of illumination of the chosen place. By combining basic colors, this technique saves the customer the trouble of searching specialized stores for the shade they require. The system can also blend colors when given the codes assigned to each color. Finally, the machine can be controlled remotely through a smartphone application over Bluetooth and Wi-Fi.

TCS34725 RGB, STM32, Bluetooth, Wi-Fi, Artificial intelligence

Short URL: https://sciup.org/15018607

IDR: 15018607 | DOI: 10.5815/ijem.2023.01.04

Full text of the article: Automated Wall Painting Robot for Mixing Colors based on Mobile Application

Urban development, final design, and the process of choosing colors are among the main problems faced by real estate developers and contractors. According to the Occupational Safety and Health Administration, 20.5% of worker deaths occurred in buildings under construction in 2019 [1,2,3]. In addition, it is very difficult to choose the right color and to find it in local markets, and orders from international markets take longer to reach the project site. These works cost large amounts of money and time amid concerns and doubts about the accuracy of implementation. This is the main reason for choosing a painting machine that mixes colors.

Urban growth has increased the cost of the manpower needed to paint buildings and to supply the appropriate colors on time, which makes painting costly for property developers and owners. Many ideas have been put forward, each with its own advantages and limitations, but none has delivered the desired results. This makes the project important: according to studies conducted with many real estate developers and owners, it helps reduce the time of the painting process by 30% and the cost by up to 50%. Furthermore, no existing machine combines on-site color mixing based on the wishes of developers and owners with the ability to paint the building automatically under remote smartphone control, which makes the project all the more important at present.

The main purpose of this work is to review the existing works, gaps, and inconsistencies related to the painting machine and propose a mechanism to design and implement a Painting Machine with Mixing Colors using the latest technologies and control it through smart phone applications.

The following are the objectives of this work:

(i) To create a smartphone application that connects to an Arduino Mega and allows remote operation of the painting machine.

(ii) To orient the painting machine's movement in four directions using two stepper motors so that it paints precisely.

(iii) To interface the TCS34725 RGB color sensor and the STM32 light sensor with the Arduino Mega so that the correct color is obtained with high accuracy.
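Objective (iii) depends on matching a sensor reading against stored reference colors. The sketch below is one possible way to do that, not the authors' implementation. It assumes the TCS34725 reports raw red, green, blue, and clear counts, scales the color channels by the clear channel to reduce the influence of the illumination level, and picks the nearest entry in a small reference table by Euclidean distance in RGB. The table `REFERENCE_COLORS` and the functions `normalize_reading` and `nearest_color` are hypothetical names introduced only for illustration.

```python
import math

# Hypothetical reference database: color name -> (R, G, B), each 0-255.
REFERENCE_COLORS = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "white": (255, 255, 255),
    "black": (0, 0, 0),
}


def normalize_reading(r, g, b, clear):
    """Scale raw channel counts by the clear channel to a 0-255 range.

    Assumption: dividing by the clear count roughly cancels changes in
    overall brightness, so the same surface reads similarly in dim and
    bright rooms.
    """
    if clear <= 0:
        raise ValueError("clear channel must be positive")
    return tuple(min(255, round(255 * c / clear)) for c in (r, g, b))


def nearest_color(rgb, database=REFERENCE_COLORS):
    """Return the name of the database color closest to rgb (Euclidean distance)."""
    return min(database, key=lambda name: math.dist(rgb, database[name]))


# Example: a reading dominated by the red channel.
reading = normalize_reading(800, 100, 100, 1000)
print(nearest_color(reading))
```

Plain RGB distance is used here only to keep the sketch short; a perceptual color space would likely give matches closer to what a viewer expects.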

2. Methodology

The system is applicable to all buildings under construction, as well as existing buildings that need repainting, such as homes, schools, colleges, universities, and company premises.

This paper is organized as follows: The background, aim, objectives, and applications of the project are included in the first section of the work that is being presented; the project methodology is included in the second section; literature review and theory are included in the third section; and design and analysis, which includes system analysis, requirements analysis, system design, system test plan, and the conclusion, are included in the final section.

The methodology is a set of fundamental strategies for completing the project. It includes numerous methods for studying the project so that it is developed properly, and it helps with project control and management. Its importance falls into several categories, including the following:

- Creating project specifications.

- Offering a workable strategy.

- Dispensing information at a specific time.

- Designing a system that is dependable, well-thought-out, and simple to manage.

3. Literature Review

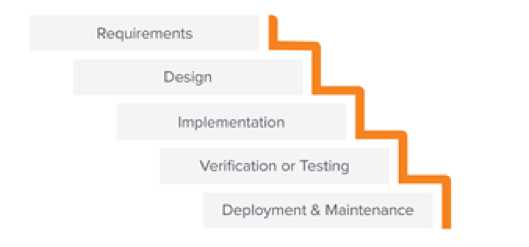

The methodology adopted in this work is the waterfall model. It is used in this project because it is straightforward to implement, has an easy-to-understand concept, and has stages that can each be completed independently. This allows the project to be implemented correctly, successfully, and without gaps, and points can easily be added to each step. The spiral model, by contrast, is complex, has multiple interconnected stages, and is more expensive to implement for small projects. Fig. 1 shows the steps of the waterfall methodology. The waterfall method's primary goal is to gather and clarify all requirements upfront, so that development does not proceed 'downhill' without the ability to make changes. Hence, this methodology makes it easier to achieve the research objectives.

Fig. 1. Waterfall Methodology

A modular wall-painting robot (WPR) with a built-in remote control for use in potentially dangerous environments has been demonstrated in [3] and is shown in Fig. 2. The mechanical system was designed and built from locally available materials. The setup includes steel for the robot's frame, wood for the arm, a waste water-dispenser bottle for the paint reservoir, 12 V and 24 V DC electric motors, a surface pump (0.5 hp), hose, tires, and rollers, as well as a microcontroller unit, cables, and the bottom frame. When the robotic arm is in use, a pump raises the paint at regular intervals and feeds the roller while the arm performs its reciprocating up-and-down motion. A programmable microcontroller was used to automate and synchronize the setup. The robot's performance was evaluated using the conventional metric system and rated as outstanding. The research is limited in terms of repeatability and precision during the painting process because of the arm's dexterity, and more research can be done on the arm's control. The distinctive elements of the research include the reuse of waste plastic water-dispenser bottles as paint reservoirs, light wood for the robot's upper and lower arms, a DC motor from a wrecked Benz automobile as a programmed stepper, and waste tubeless tires from disused trolleys as tires for the WPR. The developed method can be used to paint oil and gas infrastructure and equipment in small spaces. It reduces the time needed to accomplish the task, lowers labor expenses significantly, eliminates potential health hazards, and enhances dependability, productivity, and surface finish. The outcome of this investigation is distinctive, durable, and advantageous to the environment. It demonstrates that turning trash into useful robots is feasible in the era of Industry 4.0 [4,5,6].

Fig. 2. WPR

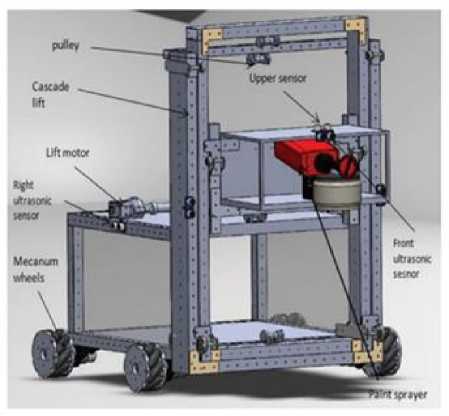

An autonomous wall painting robot [5], shown in Fig. 3, uses a paint sprayer and a cascade lift mechanism to paint the interior walls of a room. The cascade lift enables the paint sprayer to ascend to the required heights. Wheels driven by DC motors and attached to the robot's base allow easy movement in all six directions with two degrees of freedom. The robot uses ultrasonic sensors to gauge its distance from the walls, adjust to them, and check whether the sprayer has reached their tops. The master controller manages the ultrasonic sensors, the wheels, and every other component of the robot. The robot was implemented as a prototype created by combining the base and the cascade lift. The base is a wooden box with four wheels attached. Ultrasonic sensors are placed at suitable locations to measure the distance from the wall. The cascade lift, which is attached to the front of the base, has a holder in which the paint sprayer is placed. The cascade lift was built from aluminum profiles of 4x4 and 2x2 size. It is a single-stage lift that moves the paint sprayer up and down with the help of a DC motor attached to the top of the wooden box. The lift motor raises the sprayer by pulling the load's rope through a pulley. A second ultrasonic sensor at the front of the sprayer detects and maintains a constant distance between the robot and the front wall. The master controller for this prototype is a Raspberry Pi 3 powered by an SMPS [5-8].

Fig. 3. Autonomous Wall Painting Robot

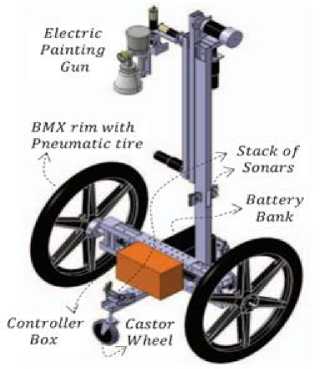
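The ultrasonic ranging described for the autonomous wall painting robot can be illustrated with a short sketch. It is only an assumption about how such a controller might work, not code from the cited prototype: the echo time is converted to distance using the speed of sound, and a simple dead-band rule decides whether the base should move toward or away from the wall. The function names, the 1 cm tolerance, and the 0.30 m target are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for dry air at about 20 degrees C


def echo_to_distance_m(echo_time_s):
    """Convert a round-trip ultrasonic echo time to a one-way distance in meters."""
    if echo_time_s < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the wall and back, so halve the path length.
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def wall_correction(distance_m, target_m, tolerance_m=0.01):
    """Return the base motion needed to hold the target distance from the wall."""
    error = distance_m - target_m
    if error > tolerance_m:
        return "forward"   # too far from the wall
    if error < -tolerance_m:
        return "backward"  # too close to the wall
    return "hold"


# Example: a 2 ms echo with a hypothetical 0.30 m spraying distance.
distance = echo_to_distance_m(0.002)
print(round(distance, 3), wall_correction(distance, target_m=0.30))
```

The dead band keeps the base from oscillating when the measured distance is already within tolerance of the target.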

The development of an automatic wall painting machine prototype, AGWallP (Fig. 4), has also been described. The prototype is controlled by the user's input of the wall's dimensions and paint color via a camera-embedded Raspberry Pi 2 module. The Raspberry Pi module captures the wall image and uses image processing tools to calculate the dimensions, which it then transmits over Bluetooth to the base painter system. The mechanical design comprises a 2-D plotter with a painting joint connected to a DCV (Directional Control Valve) spray painter that moves line by line. Two Nema 17 stepper motors power the plotter, which is managed by an STM32 slave controller that receives the dimensions from a Bluetooth module. Everything in the system is powered by a double-shaft Nema 23 stepper motor [9,10,11].

Fig. 4. AGWallP
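The line-by-line motion of a 2-D plotter such as the one in AGWallP can be sketched as a serpentine sweep over the wall rectangle. The function below is a minimal illustration under stated assumptions, not the cited system's firmware: `raster_path` is a hypothetical name, the spray strip is assumed to have a fixed height `strip_m`, and each pass is centered on its strip with the direction alternating so the sprayer never travels back empty.

```python
import math


def raster_path(width_m, height_m, strip_m):
    """Return (x, y) waypoints for a serpentine sweep of a width x height wall.

    Each horizontal pass is centered on a strip of height strip_m, and
    passes alternate left-to-right and right-to-left.
    """
    if width_m <= 0 or height_m <= 0 or strip_m <= 0:
        raise ValueError("all dimensions must be positive")
    rows = math.ceil(height_m / strip_m)
    path = []
    for i in range(rows):
        # Clamp the last pass so it never leaves the wall area.
        y = min((i + 0.5) * strip_m, height_m)
        xs = (0.0, width_m) if i % 2 == 0 else (width_m, 0.0)
        path.extend((x, y) for x in xs)
    return path


# Example: a 2 m x 1 m area painted in 0.25 m strips.
for waypoint in raster_path(2.0, 1.0, 0.25):
    print(waypoint)
```

In a real controller each waypoint would still have to be converted into stepper motor steps, which depends on the drive mechanics and is outside this sketch.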

The camera module is attached to the Raspberry Pi 3 module. The user specifies the color to be painted on the wall, which is also the subject of the photograph. The base wall painting system, where steppers and spray systems work in tandem, is the other component in which electronics are used. Before Bluetooth was adopted, an IoT (Internet of Things) system with Raspberry Pi units on either side of the setup was considered. The systems would have been connected via a shared network, but this proved too expensive and beyond the scope of the project's goal. It was therefore decided to set up a Bluetooth connection between the remote user and the painting system. The slave controller STM32F103C8T6, a 32-bit Cortex-M3 microcontroller, is incorporated in the base painting station, as is the HC-05 Bluetooth transceiver module. The main rationale for selecting this controller was to obtain a less expensive but more powerful controller. Furthermore, because the STM32 controller has a large online support community, it is easy to troubleshoot and debug any faults that occur during programming [12-15].
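Sending the wall dimensions and the chosen color over a serial Bluetooth link requires some agreed message format. The source does not describe the format actually used, so the sketch below is purely hypothetical: a one-line ASCII frame with a one-byte additive checksum, which the receiving controller can verify before it starts painting. The frame layout and the names `encode_job` and `decode_job` are assumptions made for illustration.

```python
def encode_job(width_mm, height_mm, rgb):
    """Build a frame such as b'$W3000,H2450,CFF8000*5B\\n' (hypothetical format)."""
    r, g, b = rgb
    payload = f"W{width_mm},H{height_mm},C{r:02X}{g:02X}{b:02X}"
    checksum = sum(payload.encode("ascii")) % 256
    return f"${payload}*{checksum:02X}\n".encode("ascii")


def decode_job(frame):
    """Parse a frame and return (width_mm, height_mm, (r, g, b)).

    Raises ValueError if the frame is malformed or the checksum differs.
    """
    text = frame.decode("ascii").strip()
    if not text.startswith("$") or "*" not in text:
        raise ValueError("malformed frame")
    payload, _, check = text[1:].rpartition("*")
    if sum(payload.encode("ascii")) % 256 != int(check, 16):
        raise ValueError("checksum mismatch")
    width, height, color = payload.split(",")
    rgb = tuple(int(color[i:i + 2], 16) for i in (1, 3, 5))
    return int(width[1:]), int(height[1:]), rgb


# Round trip for a hypothetical 3.0 m x 2.45 m wall in orange.
frame = encode_job(3000, 2450, (255, 128, 0))
print(decode_job(frame))
```

A checksum of this kind only detects simple corruption; it is shown to illustrate the idea of validating a job before acting on it, not as a recommendation for a specific scheme.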

A detailed computer-aided design (CAD) model of a fully working wall painting robot for interior finishes, the RoboPainter, has also been presented. In addition to beautiful wall drawings, the RoboPainter can paint full-scale wall-ceiling murals. It is a multi-degree-of-freedom (DOF) mobile robot system in which a spray painting arm is installed on a differentially driven mobile base. Compared with previous literature, the proposed design achieves several improvements in overall robot mass and painting pace. Detailed dynamic model parameters are supplied to allow further improvement of the robot's motion control. Fig. 5 shows the RoboPainter.

Fig. 5. RoboPainter

The RoboPainter is a mobile painting robot with a spray painting gun at the end of its painting arm. Thanks to the arm tip's generic fixation assembly, a variety of commercially available painting guns can be mounted on it, including sprayers that run on 220 V mains electricity and sprayers that run on 110 V mains electricity. It was designed around off-the-shelf components to reduce development time and to allow easy, quick component replacement in the event of failure. RoboPainter's joints are controlled by ESCON servo controllers and Maxon DC geared motors, all of which are equipped with position encoders. Despite the painting arm's considerable reach (1.29 m), its lateral motion is limited to the breadth of two paint strips in either direction from the robot centerline. During the motion planning phase of the spray-gun path, the wall is divided into two sections: the core and the outline. In core-section painting, the painting workspace per fixed mobile base pose is restricted to a maximum permissible size. The goal of this constraint is twofold; in particular, by reducing the volume of space occupied by the robot structure, it improves human-robot safety. On average, the painting arm can deliver a strip of paint measuring 0.25 m wide by 2.45 m high in 10 seconds.
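The strip figures quoted for the RoboPainter imply an upper bound on coverage rate, which the short calculation below works out. It uses only the stated numbers (0.25 m by 2.45 m per strip, 10 s per strip) and deliberately ignores the time needed to reposition the mobile base, so real throughput would be lower. The function names and the 4 m example wall are hypothetical.

```python
import math


def coverage_rate_m2_per_h(strip_width_m, strip_height_m, seconds_per_strip):
    """Painted area per hour if strips are laid back to back with no pauses."""
    area_m2 = strip_width_m * strip_height_m
    return area_m2 / seconds_per_strip * 3600.0


def wall_time_s(wall_width_m, strip_width_m, seconds_per_strip):
    """Spraying time for a wall one strip high, excluding base repositioning."""
    strips = math.ceil(wall_width_m / strip_width_m)
    return strips * seconds_per_strip


rate = coverage_rate_m2_per_h(0.25, 2.45, 10.0)
print(f"{rate:.1f} m^2/h")
print(wall_time_s(4.0, 0.25, 10.0), "s of spraying for a 4 m wide wall")
```

With the quoted values, one strip covers 0.6125 m², which corresponds to about 220.5 m² per hour of continuous spraying.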

Table 1. Comparison of existing approaches

References

- AlSakiti, Shaik Mazhar Hussain Mohammed Khalifa. "IoT based monitoring and tracing of COVID-19 contact persons." Journal of Student Research (2020).

- Isehaq Al Balushi, Shaik Mazhar Hussain. "IoT BASED AIR QUALITY MONITORING AND CONTROLLING IN UNDERGROUND MINES." Journal of Student Research (2022).

- Madhira, Kartik, et al. "AGWallP — Automatic guided wall painting system." 2017 Nirma University International Conference on Engineering (NUiCONE). IEEE, 23-25 November 2017.

- Nowf Sulaiman Nasser Sulaiman Al Habsi, Shaik Mazhar Hussain. "Protection of foodstuffs in storage warehouses system." Journal of Student Research (2022).

- Okwu, Modestus, et al. "Development of a Modular Wall Painting Robot for Hazardous Environment." 2021 International Conference on Artificial Intelligence, Big Data, Computing and Data Communication Systems (icABCD). Durban, South Africa: IEEE, 05-06 August 2021.

- Shaik Mazhar Hussain, Elham Ahmed Shinoon Nasser Al Saadi. "Voice enabled Elevator system." Journal of Student Research (2022).

- Sorour, Mohamed. "RoboPainter — A detailed robot design for interior wall painting." 2015 IEEE International Workshop on Advanced Robotics and its Social Impacts (ARSO). IEEE, 2015.

- N. M. Borodin, S. Martin and R. Sokolowsky, "Roy. G. Biv: The Color Matching Application for Artists With Limited Pigments," 2021 IEEE Integrated STEM Education Conference (ISEC), 2021, pp. 212-212, doi: 10.1109/ISEC52395.2021.9763974.

- R. Radharamanan and H. E. Jenkins, "Robot Applications in Laboratory-Learning Environment," 2007 Thirty-Ninth Southeastern Symposium on System Theory, 2007, pp. 80-84, doi: 10.1109/SSST.2007.352322.

- H. Rhee, M. -R. Jung and S. -J. Kim, "Live colors: Visualizing celluar automata," 2017 4th International Conference on Computer Applications and Information Processing Technology (CAIPT), 2017, pp. 1-5, doi: 10.1109/CAIPT.2017.8320744.

- S. H. Choi, H. Kim, K. -C. Shin, H. Kim and J. -K. Song, "Perceived Color Impression for Spatially Mixed Colors," in Journal of Display Technology, vol. 10, no. 4, pp. 282-287, April 2014, doi: 10.1109/JDT.2014.2300488.

- A. Fairuzabadi and A. A. Supianto, "CoMiG: a Color Mix Game as a Learning Media for Color Mixing Theory," 2018 International Conference on Sustainable Information Engineering and Technology (SIET), 2018, pp. 197-201, doi: 10.1109/SIET.2018.8693166.

- N. Gossett and Baoquan Chen, "Paint Inspired Color Mixing and Compositing for Visualization," IEEE Symposium on Information Visualization, 2004, pp. 113-118, doi: 10.1109/INFVIS.2004.52.

- L. Lin, K. Zeng, Y. Wang, Y. -Q. Xu and S. -C. Zhu, "Video Stylization: Painterly Rendering and Optimization With Content Extraction," in IEEE Transactions on Circuits and Systems for Video Technology, vol. 23, no. 4, pp. 577-590, April 2013, doi: 10.1109/TCSVT.2012.2210804.

- S. N and S. Feiner, "Synesthesia AR: Creating Sound-to-Color Synesthesia in Augmented Reality," 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2022, pp. 874-875, doi: 10.1109/VRW55335.2022.00288.