Automatic System Recognition of License Plates using Neural Networks

Author: Kalid A. Smadi, Takialddin Al Smadi

Journal: International Journal of Engineering and Manufacturing (IJEM)

Issue: 4, 2017.


The urgent need to increase the efficiency of car number plate recognition in images with complex backgrounds calls for the development of methods, algorithms and programs that ensure high efficiency. To solve this task, the authors used methods of artificial intelligence, identification and pattern recognition in images, the theory of artificial neural networks, convolution neural networks, evolutionary algorithms and mathematical modeling; the character models were then processed using feed-forward multilayer perceptron neural networks trained by back-propagation. The aim of this work is to present a system that solves the practical problem of car identification in real scenes. All steps of the process, from image acquisition to optical character recognition, are considered in order to achieve automatic plate identification.

Keywords: automatic system, neural networks, recognition of license plates


not need to continuously view data from multiple cameras to confirm that the system has detected "special" situations. A subcategory of intelligent video surveillance systems is systems for the automatic recording of traffic rule violations, capable of identifying violations such as speeding, crossing a solid line or stop line, and running a red light. In this article, the study aims at developing a vehicle detection unit for recording failures to yield at unregulated pedestrian crossings [2]. Identifying such violations requires simultaneous trajectory tracking of pedestrians and vehicles, which is harder than for the recording systems cited above. It was therefore decided to start by examining the applicability of algorithms based on background subtraction and tracking to filter the areas to be processed.

Object recognition in images is one of the most difficult tasks in information technology. Due to the importance of this issue, research on recognition and on image and speech analysis is included in the list of priority directions of science, technology and engineering and of Federal critical technologies.

The proposed system acquires a car image with a digital camera. The snapshot is improved by removing noise with a median filter at the preprocessing stage. Contrast enhancement is then carried out on the filtered image to reduce the effect of varying daylight; it also improves the contrast between the text and the background of the registration number. An edge detector is applied to the test image, and the regions with the maximum amount of edges are found, with the remaining edges rejected, using the proposed method, which gives the location of the plate. At the last stage, the proposed growing-window algorithm is used to remove false number plate areas. Finally, the plate area is marked for convenience of use [3,4,5].
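The preprocessing chain described above (median filtering, contrast enhancement, edge detection) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the 3x3 window, the percentile-based contrast stretch and the gradient-magnitude edge map are our own choices, since the text does not specify the filter size, the enhancement method or the edge detector, and the growing-window step is not reproduced. All function names are hypothetical.

```python
import numpy as np

def median_filter3(img):
    """3x3 median filter with edge replication, used here for noise removal."""
    padded = np.pad(img.astype(float), 1, mode="edge")
    h, w = img.shape
    # Stack the nine shifted views that make up each pixel's 3x3 neighbourhood.
    stack = np.stack([padded[dy:dy + h, dx:dx + w]
                      for dy in range(3) for dx in range(3)])
    return np.median(stack, axis=0)

def stretch_contrast(img, low_pct=2.0, high_pct=98.0):
    """Assumed enhancement: linear stretch between two percentiles to [0, 1]."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros(img.shape)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)

def edge_magnitude(img):
    """Assumed edge detector: gradient magnitude from central differences."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy)

def preprocess(img):
    """Median filter -> contrast stretch -> edge map (hypothetical pipeline)."""
    enhanced = stretch_contrast(median_filter3(img))
    return enhanced, edge_magnitude(enhanced)

# Example: a single "salt" pixel is removed by the median filter.
noisy = np.full((5, 5), 10.0)
noisy[2, 2] = 255.0
clean = median_filter3(noisy)   # clean[2, 2] == 10.0
```

The median filter is preferred over plain averaging for this step because it removes isolated outliers without blurring the sharp character edges that the later edge analysis relies on.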

Modern methods of character recognition in images are used in a wide range of applications, such as text recognition.

Infrastructure of Implementation

The work comprises several stages; the corresponding overall block diagram is given in Fig. 1.

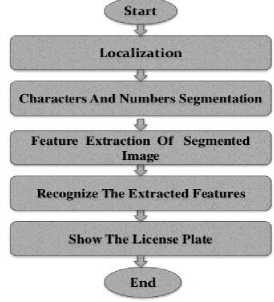

The steps of the algorithm, shown in Fig. 1, are as follows:

- Start and localization

- Character and number segmentation

- Feature extraction from the segmented image

- Recognition of the extracted features

- Display of the license plate

- End

Fig.1. Character Segmentation for Extraction of Number Plates

Currently, these technologies are implemented by three traditional methods: structural, feature-based and template-based. Each method is effective under its own conditions of use; however, all of them have disadvantages. When an image is recorded, the transformations that most affect the recognition result are affine and projective transformations caused by changes in the imaging angle and scale, as well as by weather conditions. In addition, foreign objects in images with complex backgrounds significantly reduce the reliability of the recognition methods used in modern systems for recognizing car number plates in images and video sequences [6].

3. Proposed Method for License Plate Location

Classical neural network architectures (multilayer perceptrons, radial basis function networks, etc.) are most frequently used for image recognition and identification. However, an analysis of these works and experimental studies shows that classical architectures are ineffective for this problem for the following reasons:

• Images have a high dimension, so the size of the neural network increases accordingly;

• A large number of parameters increases the capacity of the system and therefore requires a larger training sample and increases the time and computational complexity of training;

• To improve the efficiency of the system, it is desirable to use several neural networks (trained with different initial values of the synaptic coefficients and different orders of image presentation), but this increases the computational complexity and the run time;

• There is no invariance to changes in image scale, camera shooting angle and other geometric transformations of the input signal.

Therefore, to solve the problem of extracting the character area, we chose convolution neural networks, because they provide partial resistance to changes in scale, shift, rotation, viewing angle and other transformations [7].

Each layer of a convolution neural network is a set of planes made up of neurons. Neurons in one plane share the same synaptic coefficients, which connect them to all local areas of the previous layer. Each neuron of a layer receives inputs from a small area of the previous layer (its local receptive field); that is, the image of the previous layer is scanned by a small window and passed through a set of synaptic coefficients, and the result is assigned to the corresponding neuron of the current layer. Thus, the set of planes forms feature maps, and each plane finds "its own" image fragments anywhere in the previous layer. The size of the local receptive field is chosen independently when the neural network is designed [8].

The layers are divided into two types: convolution layers and subsampling layers. In convolution layers, the receptive fields of neighbouring neurons partially overlap, like shingles, whereas in subsampling layers they do not overlap. A subsampling layer reduces the scale of the planes by locally averaging neuron outputs, which produces a hierarchical organization: subsequent layers extract more general characteristics that depend less on image transformations. After several layers, the feature maps degenerate into a vector [9].

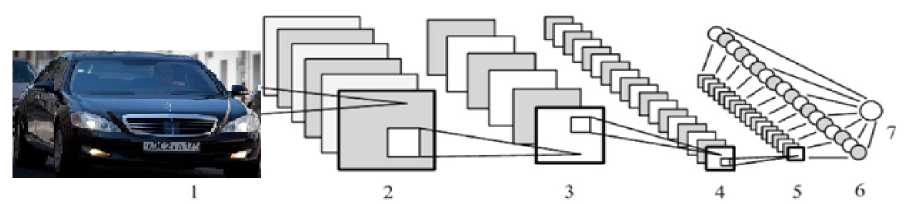

During training, the neural network gradually learns to extract the key characteristics of car number plates in the input images. A seven-layer convolution neural network is proposed for extracting the character area in images (Fig. 2).

The input layer of 28x44 neurons consists of 1232 neurons. It performs no computation and serves only to feed the input image to the neural network.

Following the input layer is the first hidden layer, which is a convolution one. This layer consists of 6 convolution planes. The size of each plane of this layer is 24x40 = 960 neurons.

The second hidden layer is a subsampling layer, which also consists of six planes, each with a 2x2 synaptic mask. Each plane of this layer has 12x20 = 240 neurons: each side is half that of the previous layer's planes, so a plane holds a quarter as many neurons.

The third hidden layer is the convolution layer. It consists of 18 planes with a size of 16x8 = 128 neurons.

The fourth hidden layer is a sub sampling layer and it consists of 18 planes with a size of 4x12 = 48 neurons.

The fifth hidden layer consists of 18 simple sigmoid neurons, one for each plane of the previous layer. Its role is to perform classification once feature extraction and reduction of the input dimension have been carried out. Each neuron in this layer is fully connected to all neurons of exactly one plane of the previous layer.

The sixth layer is the output layer. It consists of a single neuron that is fully connected to all neurons of the previous layer. Since the task only requires deciding whether a plate is present, one output is sufficient. The output value of the neural network lies within [-1; 1], with values toward 1 and -1 indicating, respectively, the presence or absence of a car number plate in the image under classification.

Thus, when the input image is scanned, the responses of the neural network form peaks at the locations of car number plates. The responses lie within [-1; 1], in accordance with the selected activation function.
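The scanning step can be illustrated with a short sketch: a 28x44 window (the input size of the network above) slides over the image, each window is scored, and the strongest response is taken as the plate location. The scoring function `toy_classifier` is a hypothetical stand-in for the trained network, and the 4-pixel stride and the single-peak rule are assumptions; a practical detector would more likely keep every peak above a threshold.

```python
import numpy as np

WIN_H, WIN_W = 28, 44  # input window of the plate-localization network

def response_map(image, classify, stride=4):
    """Score every WIN_H x WIN_W window; scores are expected in [-1, 1]."""
    rows = list(range(0, image.shape[0] - WIN_H + 1, stride))
    cols = list(range(0, image.shape[1] - WIN_W + 1, stride))
    resp = np.empty((len(rows), len(cols)))
    for i, r in enumerate(rows):
        for j, c in enumerate(cols):
            resp[i, j] = classify(image[r:r + WIN_H, c:c + WIN_W])
    return resp, rows, cols

def strongest_peak(image, classify, stride=4):
    """Return (row, col, score) of the highest-scoring window."""
    resp, rows, cols = response_map(image, classify, stride)
    i, j = np.unravel_index(np.argmax(resp), resp.shape)
    return rows[i], cols[j], float(resp[i, j])

def toy_classifier(window):
    """Hypothetical stand-in for the trained network: bright windows score high."""
    return float(np.tanh(4.0 * (window.mean() - 0.5)))

# Synthetic scene: a bright 28 x 44 "plate" on a dark background.
scene = np.zeros((120, 200))
scene[40:68, 80:124] = 1.0
row, col, score = strongest_peak(scene, toy_classifier)  # -> (40, 80, ~0.96)
```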

The synaptic mask is 5x5 in the convolution layers and 2x2 in the subsampling layers.

Sharing the same synaptic coefficients among all neurons of a plane (weight sharing) reduces the number of adjustable parameters of the neural network.
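A quick count shows how much weight sharing saves in the first convolution layer of the plate network (6 planes of 24x40 neurons, 5x5 masks). The sketch assumes one bias per plane, as in the convolution formula of this section; the "unshared" figure is a hypothetical layer of the same shape in which every neuron owns its own 5x5 mask and bias.

```python
def shared_params(planes, k):
    """Weight sharing: one K x K mask plus one bias per plane."""
    return planes * (k * k + 1)

def unshared_params(planes, out_h, out_w, k):
    """Same connectivity, but every neuron owns its K x K mask and bias."""
    return planes * out_h * out_w * (k * k + 1)

shared = shared_params(6, 5)              # 156
unshared = unshared_params(6, 24, 40, 5)  # 149,760
ratio = unshared // shared                # 960 = neurons per plane
```

The ratio equals the number of neurons in a plane, because sharing replaces one mask per neuron with one mask per plane.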

The size of a convolution plane is determined by the following expressions:

$$w_c = w_u - K + 1, \tag{1}$$

$$h_c = h_u - K + 1, \tag{2}$$

where $w_c$ and $h_c$ are the width and height of the convolution layer's plane; $w_u$ and $h_u$ are the width and height of the previous layer's plane; and $K$ is the width (height) of the scanning window.
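Formulas (1) and (2), together with the halving performed by 2x2 subsampling, let the plane sizes be checked mechanically. The sketch assumes 5x5 masks and non-overlapping 2x2 subsampling, as stated in the text, and reports sizes as (height, width). Applied to the 28x44 input it reproduces the 24x40, 12x20 and 16x8 planes quoted in the text, and one further subsampling step then gives 4x8 planes; the 28x28 input of the character network gives 24x24, 12x12 and 8x8 planes.

```python
def conv_size(h, w, k=5):
    """Formulas (1) and (2): each side shrinks by K - 1."""
    return h - k + 1, w - k + 1

def subsample_size(h, w, m=2):
    """Non-overlapping m x m subsampling divides each side by m."""
    return h // m, w // m

def plane_sizes(h, w, layers):
    """Apply a sequence of 'conv'/'sub' layers and record (height, width)."""
    sizes = [(h, w)]
    for kind in layers:
        h, w = conv_size(h, w) if kind == "conv" else subsample_size(h, w)
        sizes.append((h, w))
    return sizes

plate_net = plane_sizes(28, 44, ["conv", "sub", "conv", "sub"])
# [(28, 44), (24, 40), (12, 20), (8, 16), (4, 8)]
char_net = plane_sizes(28, 28, ["conv", "sub", "conv"])
# [(28, 28), (24, 24), (12, 12), (8, 8)]
```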

We chose the hyperbolic tangent as the activation function:

$$f(a) = A \tanh(S a)$$

Fig.2. Modified Structure Convolution Neural Network for Character Area Extraction: 1) input; 2, 4) convolution layers; 3, 5) sub sampling layers; 6, 7) layers of the usual neurons


Here $f(a)$ is the output value of the neuron, $a$ is the weighted sum of the signals from the previous layer, $A$ is the amplitude of the function, and $S$ determines its position relative to the reference point.

This activation function is odd, with horizontal asymptotes +A and -A.

This function has a number of advantages for the problem at hand:

• Symmetric activation functions such as the hyperbolic tangent provide faster convergence than the standard logistic function;

• The function has a continuous and simple first derivative, which can be computed from the function's value, saving computation.
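The second advantage can be made concrete. Assuming the form f(a) = A·tanh(S·a), the derivative is f'(a) = A·S·(1 - tanh²(S·a)) = (S/A)·(A² - f(a)²), so back-propagation can reuse the output that was already computed in the forward pass. The constants A = 1.7159 and S = 2/3 below are an assumption borrowed from LeCun et al.'s recommendations (they make f(±1) ≈ ±1); the text does not state the values actually used.

```python
import numpy as np

A = 1.7159      # assumed amplitude
S = 2.0 / 3.0   # assumed slope parameter

def f(a):
    """Scaled hyperbolic tangent activation f(a) = A * tanh(S * a)."""
    return A * np.tanh(S * a)

def df_from_output(fa):
    """Derivative expressed through the output value fa = f(a)."""
    return (S / A) * (A * A - fa * fa)

a = np.linspace(-3.0, 3.0, 13)
h = 1e-6
numeric = (f(a + h) - f(a - h)) / (2 * h)   # central-difference check
analytic = df_from_output(f(a))             # no extra tanh evaluation needed
```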

The operation of a convolution layer neuron is described by:

$$y_k^{(i,j)} = b_k + \sum_{s=1}^{K}\sum_{t=1}^{K} w_{k,s,t}\, x^{((i-1)+s,\,(j-1)+t)} \tag{3}$$

where $y_k^{(i,j)}$ is neuron $(i, j)$ of the $k$-th plane of the convolution layer; $b_k$ is the bias (neural displacement) of the $k$-th plane; $K$ is the size of the neuron's receptive field; $w_{k,s,t}$ is the matrix of synaptic coefficients; and $x$ denotes the outputs of the neurons of the previous layer.
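Formula (3) can be transcribed almost literally. The sketch uses 0-based indices, a single input plane and a "valid" scan without padding, so the output size follows formulas (1) and (2); how planes of the second convolution layer combine several input planes is not specified in the text and is left out. Note that formula (3) is a correlation: the mask is not flipped.

```python
import numpy as np

def conv_plane(x, w, b):
    """Formula (3): y[i, j] = b + sum_{s,t} w[s, t] * x[i + s, j + t] (0-based)."""
    K = w.shape[0]
    out_h, out_w = x.shape[0] - K + 1, x.shape[1] - K + 1
    y = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # The same mask w (shared synaptic coefficients) is used at every position.
            y[i, j] = b + np.sum(w * x[i:i + K, j:j + K])
    return y

x = np.arange(16.0).reshape(4, 4)
y = conv_plane(x, np.ones((3, 3)), 1.0)   # shape (2, 2); y[0, 0] == 46.0
```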

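The operation of a subsampling layer neuron is described by:

$$y_k^{(i,j)} = b_k + w_k \sum_{s=1}^{2}\sum_{t=1}^{2} x^{(2(i-1)+s,\,2(j-1)+t)} \tag{4}$$

where $w_k$ is the synaptic coefficient of the $k$-th plane.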
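Formula (4) can be sketched with a reshape: the plane is cut into non-overlapping 2x2 blocks, each block is summed, and the sum is scaled by the plane's coefficient and shifted by its bias. With w_k = 1/4 and b_k = 0 this is exactly the local averaging mentioned earlier. Dropping a trailing odd row or column is our assumption; the text does not say how odd sizes are handled.

```python
import numpy as np

def subsample_plane(x, w, b, m=2):
    """Formula (4): y = b + w * (sum over each non-overlapping m x m block)."""
    h, wd = (x.shape[0] // m) * m, (x.shape[1] // m) * m
    blocks = x[:h, :wd].reshape(h // m, m, wd // m, m)
    return b + w * blocks.sum(axis=(1, 3))

x = np.arange(16.0).reshape(4, 4)
y = subsample_plane(x, 0.25, 0.0)  # w = 1/4 gives plain 2x2 averaging
# [[ 2.5,  4.5],
#  [10.5, 12.5]]
```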

The standard error back-propagation algorithm is used for training. To measure the quality of recognition, we used the mean squared error function:

$$E_p = \frac{1}{2}\sum_j \left(t_{pj} - o_{pj}\right)^2 \tag{5}$$

where $E_p$ is the value of the error function for image $p$, $t_{pj}$ is the desired output of neuron $j$ for image $p$, and $o_{pj}$ is the actual output of neuron $j$ for image $p$.

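The synaptic coefficients are finally corrected according to the formula:

$$w_{ij}(t+1) = w_{ij}(t) + \eta\,\delta_{pj}\,o_{pi} \tag{6}$$

where $\delta_{pj}$ is the error signal of neuron $j$ for image $p$ and $o_{pi}$ is the output of neuron $i$ feeding the connection.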

Here η is a proportionality factor (the learning rate). The back-propagation of error is calculated over the entire training set to obtain the average, or true, gradient. When an untrained network is given an input image, it produces some random output. The error function measures the difference between the current network output and the ideal output to be obtained. Successful training requires bringing the network output to the desired output, i.e., successively reducing the value of the error function. This is achieved by adjusting the interneuron connections: each connection in the network has its own weight, and the weights are adjusted to reduce the value of the error function.

The initial values of the weights were drawn at random from a normal distribution with zero mean and standard deviation

$$\sigma_w = \frac{1}{\sqrt{m}},$$

where $m$ is the number of connections entering the neuron.
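To illustrate the error function (5), the correction rule (6) and the initialization rule together, the sketch trains a single layer of tanh neurons by batch gradient descent, averaging the correction over the whole training set as described above. It is a toy under stated assumptions: one layer instead of the full convolution network, a plain tanh (A = S = 1), the bias handled as an extra constant input, and made-up one-dimensional data; eta = 0.1 and 200 epochs are arbitrary choices.

```python
import numpy as np

def init_weights(n_out, m, rng):
    """Zero-mean normal weights with standard deviation 1/sqrt(m), m = fan-in."""
    return rng.normal(0.0, 1.0 / np.sqrt(m), size=(n_out, m))

def train_tanh_layer(X, T, eta=0.1, epochs=200, seed=0):
    """Batch training of one tanh layer on E_p = 1/2 * sum_j (t_pj - o_pj)^2."""
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])   # constant input acts as the bias
    W = init_weights(T.shape[1], Xb.shape[1], rng)
    losses = []
    for _ in range(epochs):
        O = np.tanh(Xb @ W.T)                   # outputs o_pj
        losses.append(float(np.mean(0.5 * np.sum((T - O) ** 2, axis=1))))
        delta = (T - O) * (1.0 - O ** 2)        # error signals delta_pj of tanh units
        W += eta * delta.T @ Xb / len(X)        # formula (6), averaged over all p
    return W, losses

X = np.array([[-1.0], [-0.5], [0.5], [1.0]])
T = np.array([[-0.8], [-0.8], [0.8], [0.8]])
W, losses = train_tanh_layer(X, T)
```

Averaging over all images before each correction gives the "true gradient" mentioned above; updating after every single image would instead give the stochastic variant of the same rule.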

To train the network we developed a database with 1000 images of car number plates.

To create more examples and to increase the invariance of the neural network to various rotations, we developed a set of images of car number plates positioned at different angles in the vertical and horizontal directions and rotated in the plane relative to the recording device (Fig. 3). The images were obtained under different weather conditions, at different times of day, and with different lighting and contrast.

Fig.3. Pictures of Car Plates from the Training Sample, Arranged Frontally with Respect to the Recording Device.

Fig.4. Horizontal Histogram. Lines 1 and 2 Correspond to the Two Largest Peaks. X Is the Number of the Image Line, Y Is the Average Intensity of the Image Line

Next, we build a vertical histogram in the direction perpendicular to that of the selected histogram; it shows about 10 peaks in the intervals between characters. Thus, the areas of the individual characters on the car number plate are extracted (Fig. 5).
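The two histogram operations of Section 3.1 can be sketched together. `best_tilt` builds n = 41 average-intensity line histograms for tilts from -20° to 20° in 1° steps and keeps the angle whose histogram reaches the largest value; `split_characters` uses the column (vertical) histogram of a deskewed plate region to cut it into character spans. Assumptions: intensities in [0, 1], dark characters on a bright background, and a midpoint threshold; these details, the function names and the shear-based way of tilting the histogram are ours, not the authors'.

```python
import numpy as np

def best_tilt(image, angles_deg=np.arange(-20, 21)):
    """Return the tilt (degrees) whose line-average histogram has the largest value."""
    h, w = image.shape
    ys, xs = np.mgrid[0:h, 0:w]
    best_angle, best_value = None, -np.inf
    for ang in angles_deg:
        # Pixels on one line tilted by `ang` share the same shifted row index.
        rows = np.round(ys - xs * np.tan(np.radians(ang))).astype(int).ravel()
        rows -= rows.min()
        sums = np.bincount(rows, weights=image.ravel())
        counts = np.bincount(rows)
        hist = sums[counts > 0] / counts[counts > 0]   # average intensity per line
        if hist.max() > best_value:
            best_angle, best_value = ang, hist.max()
    return best_angle

def split_characters(region, thresh=None):
    """Cut a plate region into (start, stop) column spans of dark characters."""
    profile = region.mean(axis=0)                # vertical histogram
    if thresh is None:
        thresh = 0.5 * (profile.min() + profile.max())
    is_char = profile < thresh
    spans, start = [], None
    for x, flag in enumerate(is_char):
        if flag and start is None:
            start = x
        elif not flag and start is not None:
            spans.append((start, x))
            start = None
    if start is not None:
        spans.append((start, len(is_char)))
    return spans

plate = np.ones((10, 20))
plate[:, 3:6] = 0.0
plate[:, 10:14] = 0.0
spans = split_characters(plate)       # [(3, 6), (10, 14)]

scene = np.zeros((40, 60))
scene[20, :] = 1.0                    # one bright horizontal line
angle = best_tilt(scene)              # 0
```

The count n = 41 follows from covering ±20° in whole degrees (2 · 20 + 1 angles); a finer step would give more histograms at proportionally higher cost.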

Fig.5. Pictures of Car Plates from the Training Sample Inclined with Respect to the Recording Device.

-

3.1. Extraction of separate characters using the average intensity histograms

-

3.2. Convolution neural network for characters recognition on images

After extraction the characters’ area in the image it is necessary to extract the separate characters for further recognition. To this end we propose to use a method based on average intensity histograms.

The characters’ area extracted in the previous stage is scanned pixel by pixel from left to right, top to bottom, while the average intensity of pixels in each column is calculated. In those places where there is no character, the average intensity will be significantly different from the intensity of the places where the characters are. Further, by performing the same operation row wise, we get a set of separate characters, which may be already analyzed In order to extract the character line from the entire image, we propose to calculate the horizontal histogram at first. Since the background of the car number plate is the brightest area in the image, the two largest peaks will correspond to areas 1 and 2 (Fig. 4).

When recording, the plate’s image is exposed to various mixings and transformations therefore the lines corresponding to regions 1 and 2 will not be positioned horizontally, but with an unknown angle. In this connection, we propose to build not one but n histograms of an average intensity each of which is not built horizontally but at a predetermined angle [11].

The required amount of histograms of an average intensity is determined from the technical specifications of image recording. As per this conditions the rotation angle of the image does not exceed 20 ° horizontally in the right and the left side, therefore, n = 41. Among

Constructed n histograms it hall be chosen that one which contains the largest value of y-direction because the largest value will correspond to area 1 or 2 (fig. 4) [12].

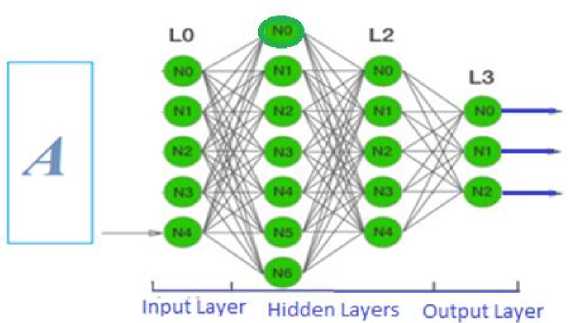

We have developed the convolution neural network with 4 hidden layers in order to extract the selected characters (Fig. 6).

The first layer is the input layer and consists of 28x28 = 784 neurons.

The second layer is a convolution layer composed of six planes of 24x24 = 576 neurons.

The size of the convolution plane is determined in accordance with the formulas (1, 2).

The third layer is a subsampling layer and also consists of six planes of 12x12 = 144 neurons.

Fig.6. The Convolution Neural Network for Character Recognition: 1) input; 2, 4) convolution layers; 3) sub sampling layers; 5, 6) layers of the usual neurons

The fourth layer is a convolution layer and it consists of 50 planes with a size of 8x8 = 64 neurons.

The fifth layer consists of 126 simple sigmoid neurons. Its role is to perform classification once feature extraction and reduction of the input data dimension have been carried out.

The sixth layer is the output layer and consists of 21 neurons.

According to standard 50577-11, car number plates may include the following characters: A, B, E, K, M, H, O, P, C, T, X, Y and the digits 0 to 9. Therefore, the output layer consists of 21 neurons, because 21 characters are to be recognized.

For network training, we used a database of 60,000 images of handwritten digits and created a database of 20,000 letter images. The size of the test sample is 10,000 characters.

On the basis of the above algorithms, we developed a software system that recognizes car number plates in images with complex backgrounds with a probability of at least 98% under the following recording conditions:

• Processing time: 35 milliseconds;

• Character height: not less than 14 pixels;

• Car number plate illumination: from 50 to 1000 lux;

• Horizontal deviation angle of the car number plate relative to the recording device: up to ±60°;

• Vertical deviation angle of the car number plate relative to the recording device: up to ±65°;

• Rotation angle of the car number plate in the image plane: up to ±25°.

3.3. Comparison of technical specifications of car number plate recognition systems

As Table 1 shows, the developed software system is not inferior to the systems available on the market in any characteristic and, on the contrary, surpasses them in some respects.

As we can see from Table 3, the developed software system is able to recognize car number plates located at the largest deviation angles in comparison with other systems.

Table 1. Comparison of technical specifications of car number plate recognition systems

| System name | Recognition probability, % | Recognition time | Illumination, lux | Minimum character height in image |
|---|---|---|---|---|
| "Auto-Inspector" | 95 | not specified | at least 50 | not specified |
| "Auto-Intellect" | 90 | not specified | not specified | |
| «SL-Traffic» | 90 | not specified | | 25 pixels |
| "Dignum-auto" | 90 | not specified | not specified | |
| «CarFlow II» | 93.98 | 60 ms | not specified | |
| Developed software | 98 | 35 ms | from 50 to 1000 | 12 pixels |

4. Conclusion

In this paper, a general algorithmic model is proposed in which individual characters are selected using average pixel intensity histograms, and a six-layer convolution neural network for character recognition in images is implemented. The presented software system can recognize car number plates deviated horizontally, vertically and in the image plane, at high speed.

Further work is planned to obtain scientific results in the following areas:

• A parametric model of a multilayer neural network.

• A programming model in an object-oriented representation.

• Quality evaluation of neural network recognition of human faces.

• Connecting image input blocks from video cameras for face detection and recognition.

• Analysis of the effectiveness of real-time face recognition.


References

- Al Smadi, T.A. Computing Simulation for Traffic Control over Two Intersections, Journal of Advanced Computer Science and Technology Research 1 (2011) 10-24. https://www.sign-ific-ance.co.uk/index.php/JACSTR/article/viewFile/379/382

- Sonka, Milan, Vaclav Hlavac, and Roger Boyle. Image processing, analysis, and machine vision. Cengage Learning, 2014.

- Ibrahim, Nuzulha Khilwani, et al. "License plate recognition (LPR): a review with experiments for Malaysia case study." arXiv preprint arXiv:1401.5559 (2014).

- Yan, Jianqiang, Jie Li, and Xinbo Gao. "Chinese text location under complex background using Gabor filter and SVM." Neurocomputing 74.17 (2011): 2998-3008.

- Wang, Runmin, et al. "License plate detection using gradient information and cascade detectors." Optik - International Journal for Light and Electron Optics 125.1 (2014): 186-190.

- Uchida, Seiichi. "Text localization and recognition in images and video." Handbook of Document Image Processing and Recognition. Springer London, 2014. 843-883.

- Das, Kaushik, Dipjyoti Pathak, and Asish Datta. "Number Plate Recognition and Number Identification-A Survey." Communication, Cloud and Big Data: Proceedings of CCB 2014 (2014).

- Ashtari, Amir Hossein, Md Jan Nordin, and Mahmood Fathy. "An Iranian License Plate Recognition System Based on Color Features." (2014): 1-16.

- Giannoukos, Ioannis, et al. "Operator context scanning to support high segmentation rates for real time license plate recognition." Pattern Recognition 43.11 (2010): 3866-3878.

- Sedighi, Amir, and Mansur Vafadust. "A new and robust method for character segmentation and recognition in license plate images." Expert Systems with Applications 38.11 (2011): 13497-13504.

- Ibrahim, Nuzulha Khilwani, et al. "License plate recognition (LPR): a review with experiments for Malaysia case study." arXiv preprint arXiv:1401.5559 (2014).

- Al-Hmouz, Rami, and Khalid Aboura. "License plate localization using a statistical analysis of Discrete Fourier Transform signal." Computers & Electrical Engineering 40.3 (2014): 982-992.

- Al Smadi, T.A. Design and Implementation of Double Base Integer Encoder of Term Metrical to Direct Binary, Journal of Signal and Information Processing, 4, 370. (2013) http://dx.doi.org/10.4236/jsip.2013.44047

- Al Smadi, Takialddin. An Improved Real-Time Speech Signal in Case of Isolated Word Recognition. Int. Journal of Engineering Research and Applications, 3, 1748-1754. http://www.ijera.com/papers/Vol3_issue5/KC3517481754.pdf