Biometric Person Identification System: A Multimodal Approach Employing Spectral Graph Characteristics of Hand Geometry and Palmprint

Authors: Shanmukhappa A. Angadi, Sanjeevakumar M. Hatture

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Issue: No. 3, Vol. 8, 2016

Free access

Biometric authentication systems that operate in real-world environments with a single modality are found to be insecure and unreliable due to numerous limitations. Multimodal biometric systems offer better accuracy and reliability because they use multiple biometric traits to authenticate a claimed identity or perform identification. In this paper, a novel method for person identification using multimodal biometrics with hand geometry and palmprint traits is proposed. The geometrical information embedded in the user's hand and palmprint images is brought out through graph representations. The topological characterization of the image moments, represented as virtual nodes of the palmprint image graph, is a novel feature of this work. The user's hand and palmprint images are represented as weighted undirected graphs, and the spectral characteristics of the graphs are extracted as feature vectors. The feature vectors of the hand geometry and palmprint are fused at the feature level to obtain a graph spectral feature vector that represents the person. User identification is performed using a multiclass support vector machine (SVM) classifier. The experimental results demonstrate appreciable performance, with an identification rate of 99.19% for the multimodal system after feature level fusion of the hand geometry and palmprint modalities. The performance is investigated by conducting experiments separately on the hand geometry, palmprint and fused feature vectors. The results show that the proposed multimodal system performs better than the unimodal cues and can be used in high-security applications. Further comparison shows that it performs better than other similar multimodal techniques.

Hand geometry, Palmprint, Feature level fusion, Graph representation, Spectral graph properties, Hu moments, Support vector machine

Short URL: https://sciup.org/15010805

IDR: 15010805

Text of the scientific article

Published Online March 2016 in MECS

Multimodal biometric systems are expected to be more reliable than unimodal biometric systems because they draw on multiple, fairly independent pieces of evidence [1]. Unimodal systems have to contend with a variety of problems, such as noise in sensed data, intra-class variations, inter-class similarities, non-universality and the possibility of spoof attacks, especially as they rely on a single source of information for authentication. Multimodal biometric systems address the problem of non-universality by covering a sufficient population, and they also provide a better deterrent against spoof attacks. A multimodal system can operate in one of three modes: serial, parallel or hierarchical [2]. Integration in a multimodal biometric system can be performed at the sensor, feature, score or decision level [3] to provide a greater level of security. Fusion at the sensor and feature levels is carried out prior to matching, while fusion at the score and decision levels takes place after matching. Fusion after matching is divided into four categories: dynamic classifier fusion, rank level fusion, score level fusion and decision level fusion. Feature level fusion, in contrast, combines different feature vectors obtained either by using multiple sensors or by applying multiple feature extraction algorithms to the same sensor data. Biometric systems that integrate information at an early stage of processing are believed to be more effective than those that integrate it at a later stage [4], because some of the information associated with each trait is lost at every processing step. Since the features contain richer information about the input biometric data than match scores or decisions, fusion at the feature level is expected to provide better recognition results than fusion at the later levels [5].

Graph theoretic techniques have been widely used in biometric applications, mainly for biometric trait representation [6,7]. In a graph-based representation, graphs are used to describe the topological structure of a biometric pattern. The characteristics naturally associated with graphs are known as graph spectra or spectral graph properties [8]; according to the reported literature, they have not been much explored in biometric systems. The spectral graph properties of biometric images can be matched for user identification or verification.

In the proposed multimodal biometric system, features of the hand geometry and palmprint traits are represented as spectral graph properties and integrated using feature level fusion. These traits have been selected because of their robustness and ease of capture. An individual's hand geometry does not change significantly after a certain age, as the bone structure remains constant beyond a certain growth period. Palmprint characteristics remain unique and stable, and they can be easily acquired. The characteristics of the palmprint, which include geometrical features, line features, delta points and minutiae points, are easily obtained and can be efficiently represented. In this work, a novel graph theoretic approach to a multimodal biometric system using hand geometry and palmprint traits is presented. A virtual multimodal biometric (i.e. chimeric) database is constructed for 144 users by combining peg-free hand images from the GPDS150 hand database with palmprint images from the PolyU palmprint database. The virtual multimodal biometric database contains 2880 images, obtained by selecting 10 peg-free right hand images and 10 right hand palmprint images for each user. The peg-free hand image and the palmprint image of each user are each represented as a separate weighted undirected graph.

The hand contour is traced to locate 12 nodes on the hand image, which is represented as a weighted, undirected, complete graph. The geometrical information embedded in the hand image is explored using the graph characteristics. Further, in order to represent the palmprint image as a weighted undirected graph, the palmprint image is partitioned into four sub-zones that together cover the principal lines. The centroid of each zone is computed and located as a node. Seven Hu invariant moments are computed from each sub-zone and represented as seven virtual nodes. A palmprint image is thus represented as a weighted undirected graph with 11 nodes, i.e. four centroids and seven virtual nodes representing the Hu invariant moments. This topological characterization of the image moments, represented as virtual nodes of the palmprint image graph, is a novel feature of this work. The weighted adjacency matrix of the graph is formulated by calculating the Euclidean distance between the centroids and by using the Hu invariant moments as the other edge weights. The principal eigenvector features are extracted from the weighted adjacency matrices associated with both the hand image graph and the palmprint image graph. The feature vectors extracted from the hand and palmprint images are combined to construct the multimodal feature vector. Finally, the spectral characteristics of the graphs are used to train a multiclass support vector machine classifier for person identification.

The experimental results show that feature level fusion of the spectral characteristics of the hand and palmprint graphs achieves an identification rate of 99.19% for 144 users. Further, for the sake of comparison, experiments on unimodal biometric systems are also conducted separately for the graph representations of the hand and palmprint traits. The identification rate achieved by the unimodal system is 93.23% for the hand geometry trait and 98.34% for the palmprint trait. This shows that fusing the graph spectral characteristics of the hand and palmprint at the feature level provides a better recognition rate. The performance comparison of the proposed method with other similar methods brings out its robustness.

The rest of the paper is organized into four sections. Section II reviews the developments in fusion strategies and multimodal biometric technology. The proposed model of the multimodal biometric system using hand geometry and palmprint is described in Section III. The experimental results and analysis are given in Section IV. Section V concludes the work and lists future directions.


II. Review of Related Work

Researchers have examined various fusion rules in multimodal biometric systems and have reported that fusion increases accuracy. Feature level fusion is accomplished by concatenating two or more compatible feature sets. Multimodal biometric systems that employ feature level fusion of multiple biometric traits have provided promising results in verification and identification. In feature level fusion, the information embedded in the different biometric traits is extracted and combined, in either concatenation (serial) or parallel mode [9]. A few state-of-the-art approaches proposed in the literature for multimodal biometrics with feature level fusion are summarized here. Curvelet-PCA features from fingerprint, face and off-line signature traits are combined for person identification in [10]; for 40 users, a genuine acceptance rate (GAR) of 97.15% is obtained using an SVM classifier. Local statistical features from the DCT coefficients obtained from predefined blocks of the face, palmprint and palm vein modalities are fused, and a GAR of 100% is achieved for 100 users [11]. Fingerprint and iris codes are combined using a fuzzy vault classifier, and it is shown that uncorrelated features, when combined, give the best possible equal error rate (EER) [12]. Further, directional features from the face and fingerprint modalities are extracted using a Gabor filter bank with two scales and eight orientations, and a recognition accuracy of up to 99.25% is achieved [13]. Fingerprint and palmprint image features are extracted using a modified Gabor filter, and an accuracy of 80% is obtained using the Euclidean distance metric for 40 users [14]. In [15], hand geometry is characterized by 23 features that measure the length, width and shape of the hand (solidity, eccentricity and extent), the palmprint is characterized by 144 DCT coefficients, and the 75 most important characteristics are fused. The finger width and length features of hand geometry and the Gabor features of the palmprint are fused, and an accuracy of 94.70% is obtained for 50 users using the Hamming distance metric [16]. The perimeter and chain code features of hand geometry are combined with the chain code features of the palmprint, and the user is recognized by means of the dynamic time warping method [17]. Features from accelerated segment test (FAST) of the hand geometry and region properties of the palmprint are integrated in [18]. A new feature fusion method adopting the idea of canonical correlation analysis (CCA) is proposed in [19]. A summary of the multimodal biometric systems developed with feature level fusion [10-18] is given in Table 1.

Table 1. Multimodal Biometrics Systems Using Feature Level Fusion

| Traits Used | Description of Features | Classifier | Accuracy and No. of Users | Reference |
|---|---|---|---|---|
| Fingerprint, Face and Off-line signature | Curvelet-PCA features | SVM | 97.15% (40 users) | Karki Maya et al., [10], 2013. |
| Face, Palmprint and Palm vein | Local statistical features from the DCT coefficients | Euclidean distance metric | 100% (100 users) | Gupta Aditya et al., [11], 2014. |
| Fingerprint and Iris | Fingerprint code and iris code | Fuzzy vault | 98.20% (160 users) | Nandakumar K. et al., [12], 2008. |
| Face and Fingerprint | Directional features | Hellinger and Canberra distance metrics | 99.25% (40 users) | Deshmukh A. et al., [13], 2013. |
| Fingerprint and Palmprint | Modified Gabor features | Euclidean distance metric | 80% (40 users) | Mhaske et al., [14], 2013. |
| Hand geometry and Palmprint | Geometrical features of hand geometry and DCT coefficients from palmprint | Bayes, k-NN, SVM and ANN | 98% (100 users) | Ajay Kumar et al., [15], 2006. |
| Hand geometry and Palmprint | Geometrical features of hand geometry and Gabor features from palmprint | Hamming distance metric | 94.70% (50 users) | Han C., [16], 2004. |
| Hand geometry and Palmprint | Perimeter and chain code features from hand geometry and chain code features of palmprint | Dynamic time warping method | 89% (50 users) | Dewi Yanti Liliana et al., [17], 2012. |
| Hand geometry and Palmprint | Features from accelerated segment test (FAST) of hand geometry and region properties of the palmprint | Distance metrics | 93.75% (7 users) | Swapnali G. et al., [18], 2014. |

In the literature, researchers report several challenges in developing unimodal biometric systems. Some of these challenges are non-universality, noisy sensor data, large intra-user variations and susceptibility to spoof attacks, all of which reduce the accuracy of the system [20]. Multiple biometric features have been used to increase the performance of authentication systems. Hence, there is scope to explore new methods that minimize the limitations of unimodal biometric systems by combining the characteristics of different biometric modalities, in order to provide better security and authentication and also to reduce the feature acquisition cost. Since the hand geometry and palmprint traits are robust and can be easily acquired using a single scanner, they are employed in the proposed multimodal biometric system. The proposed methodology is described in the next section.


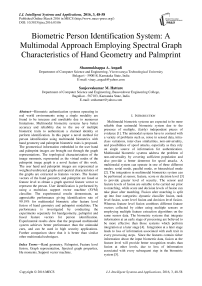

III. User Identification System using Feature Level Fusion of Hand-Geometry and Palmprint Traits

The steps involved in the proposed multimodal biometric system using peg-free hand geometry and palmprint traits are shown in Fig. 1. The features are extracted independently from the two traits. The hand geometry features are extracted after image preprocessing, hand segmentation and graph representation of the hand images. The palmprint images are passed through the image preprocessing, zoning, Hu moment extraction and graph representation modules before feature extraction. The extracted features, namely the spectral features of the graph representations of the hand geometry and palmprint images, are fused to construct a single multimodal biometric template. Finally, identification is performed with a multiclass support vector machine classifier.

In the proposed methodology for user identification, right hand images from the GPDS150 hand database and right hand palmprint images from the PolyU palmprint database are used to construct an artificial database. The hand and palmprint images are preprocessed to remove noise. The noise-free gray scale hand and palmprint images are converted into binary images. Further, both the hand and the palmprint are segmented from the background of the binary image to derive single-pixel-wide contours using Canny edge detection. By tracing the hand contour, 12 nodes are located on the hand image; these form the nodes of the graph representation for hand geometry. The graph is represented as an adjacency matrix, which is further processed to extract the eigenvalues and eigenvectors that provide the spectral characteristics of the graph.

The palmprint contour is traced to establish the new coordinate values for locating the region of interest (ROI). Only the principal lines are extracted from the ROI of the palmprint image, using morphological operations. Further, the ROI containing the principal lines is divided into four zones that together cover the principal lines. The seven Hu invariant moments are computed for every zone to indicate the characteristics of the major lines in that zone. Further, the geometric centroid of each zone and the Euclidean distances between the centroids are computed. A palmprint image is represented as a graph with 11 nodes, i.e. four centroids and seven nodes that virtually represent the Hu invariant moments. The graph is represented as an adjacency matrix and further processed to extract the graph spectral properties. To generate a single multimodal biometric template with feature level fusion, the spectral properties from the weighted adjacency matrix representations of both the hand geometry graph and the palmprint graph are extracted, fused (i.e. concatenated) and stored in the knowledge base. The knowledge base is used to train the multiclass support vector machine classifier. Finally, the user is identified by the SVM classifier, which uses the fused multimodal feature vector. Each module of the proposed multimodal biometric system is described in detail in the following subsections.

Fig.1. Steps involved in Multimodal biometrics using hand geometry and palmprint traits.


A. Database Description

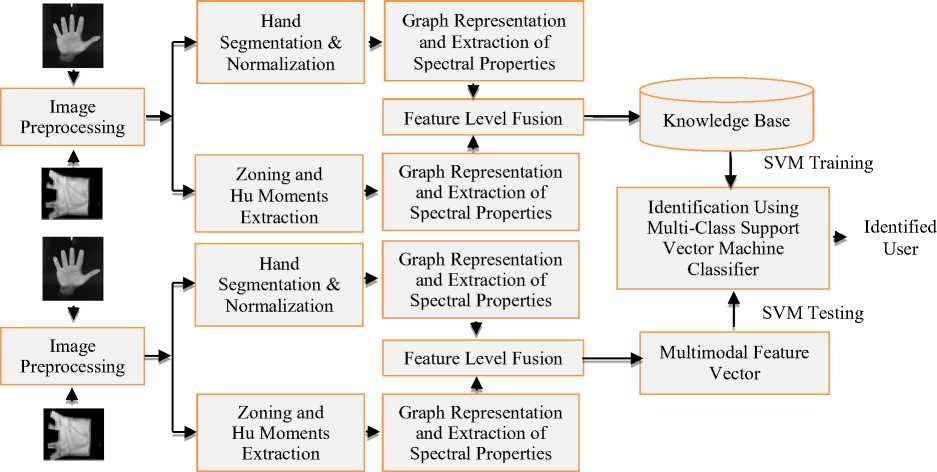

Image acquisition and preparation is the first step in the development of any biometric system. The proposed multimodal biometric system uses a virtual multimodal biometric (i.e. chimeric) database for performance testing. The virtual database contains 2880 images and is constructed for 144 users by selecting 10 peg-free right hand images and 10 right hand palmprint images for each user from the General Primary Data Sources (GPDS150) hand database [21] and the Hong Kong Polytechnic University (PolyU) palmprint database [22] respectively. The hand images of the GPDS150 hand database also contain the palm area, but the principal line information in the palmprint is not properly visible due to the low resolution and the distortions produced by the contact surface. Hence, the palmprint images of the PolyU palmprint database are combined with the GPDS150 hand database to construct the virtual multimodal biometric (i.e. chimeric) database. Sample images from the virtual multimodal hand and palmprint database are shown in Fig. 2. These images are preprocessed separately for further operations, as explained in the next subsection.


B. Image Preprocessing

The hand and palmprint images are preprocessed separately in order to prepare for feature extraction. The detailed description of each preprocessing step is presented in the following subsections.

Fig.2. Sample Images of Virtual Multimodal Hand and Palmprint Database


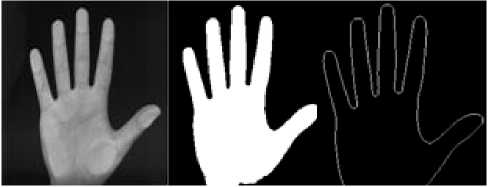

1) Hand Image Preprocessing

First, the additive noise in the hand image is removed using the adaptive Wiener filter, as it preserves edges and other high-frequency components. A binarized hand image is obtained from the preprocessed gray level hand image using Otsu's automatic thresholding method [23]. A binary image produced by thresholding suffers from small gaps and isolated pixels. A morphological filter is employed to fill in the small gaps, to blacken the isolated white pixels and to reduce the blurring of edges. In order to derive a single-pixel-wide contour from the processed binary image, the Canny edge detection technique is used, which localizes pixel intensity transitions. Since only the portion above the wrist is required for the hand geometry analysis, the tip of the middle finger and the wrist are identified from the hand contour in order to segment and normalize the portion above the wrist. Further, the normalized hand images are scaled down by a factor of four for better performance in terms of speed. The hand images that result from the preprocessing steps are shown in Fig. 3.
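The binarization step can be illustrated with a minimal, pure-Python sketch of Otsu's method, which picks the gray level that maximises the between-class variance of the histogram. The function names `otsu_threshold` and `binarize` are hypothetical, and the sketch assumes 8-bit gray levels and a hand that is brighter than the background; it is not the authors' implementation.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximises the between-class variance.

    `pixels` is a flat iterable of integer gray levels in [0, levels).
    Pixels with value <= t form the lower class, the rest the upper class.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # sum of gray levels in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, variance undefined
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarize(image, t):
    """Map a gray image (list of rows) to 0/1; assumes a bright foreground."""
    return [[1 if p > t else 0 for p in row] for row in image]
```

In a full pipeline this step would sit between the noise filter and the morphological clean-up described above.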

(a) (b) (c)

Fig.3. (a) Preprocessed hand image (b) Binarized hand image (c) Normalized hand image


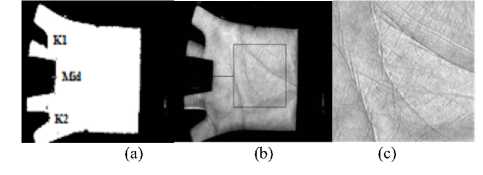

2) Palmprint Image Preprocessing and Zoning

The palmprint images are preprocessed in order to extract the principal line features. The preprocessing steps are additive noise removal, binarization, contour extraction, key point detection and the establishment of new coordinate values for locating the region of interest (ROI). The preprocessing steps employed for the hand image, i.e. additive noise removal, binarization and contour extraction, are also carried out for the palmprint image. Further, a new coordinate system is set up to align the palmprint images and to segment an ROI of 128 x 128 pixels for feature extraction. Two key points are located to establish the coordinate values by tracing the contour image using the eight-connected component technique. The key point K1, with coordinates x1 and y1, is located between the index finger and the middle finger. Similarly, the key point K2, with coordinates x2 and y2, is located between the little finger and the ring finger. The midpoint is then computed as the average of the pixel locations of K1 and K2. The midpoint is used to establish the coordinate values for locating the central part of the palmprint, i.e. the ROI, from which reliable features can be extracted. Finally, the ROI of the palmprint image is cropped to a size of 128 x 128 pixels. The resulting images are shown in Fig. 4.
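The key-point midpoint and the ROI crop described above can be sketched as follows. The text does not state how the 128 x 128 window is positioned relative to the midpoint, so the `offset` parameter and the clamping to the image border are assumptions made purely for illustration; `midpoint` and `crop_roi` are hypothetical names.

```python
def midpoint(k1, k2):
    """Average of the pixel locations of key points K1 = (x1, y1) and K2 = (x2, y2)."""
    (x1, y1), (x2, y2) = k1, k2
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def crop_roi(image, k1, k2, size=128, offset=(0, 0)):
    """Crop a size x size window from `image` (a list of rows).

    The window is centred on the K1/K2 midpoint shifted by `offset` = (dx, dy).
    The shift is an assumed parameter: the paper only says that the midpoint
    is used to locate the central part of the palm.
    """
    height, width = len(image), len(image[0])
    if height < size or width < size:
        raise ValueError("image is smaller than the requested ROI")
    mx, my = midpoint(k1, k2)
    cx, cy = int(mx + offset[0]), int(my + offset[1])
    # Clamp the window so that it stays inside the image.
    left = min(max(cx - size // 2, 0), width - size)
    top = min(max(cy - size // 2, 0), height - size)
    return [row[left:left + size] for row in image[top:top + size]]
```

For example, with K1 = (60, 40), K2 = (140, 40) and an assumed downward shift of 70 pixels, the window is centred at (100, 110).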

Fig.4. (a) Binary Palm Image (b) Locating ROI (c) Cropped palmprint image.

The principal lines are extracted from the ROI image using morphological operations. The most basic morphological operations are dilation and erosion. Mathematically, dilation and erosion are defined in terms of set operations [24].

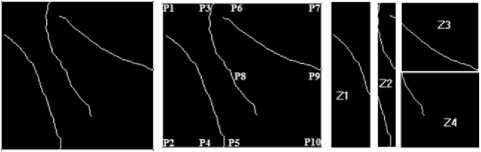

The orientations of the principal lines are mainly distributed in four directions, i.e. 0, 45, 90 and 135 degrees. Hence, the bottom-hat operation is applied separately in each of these directions to the preprocessed palmprint image. The line images obtained from the bottom-hat operations are combined to extract the principal lines. The principal lines extracted from the ROI of the palmprint image are shown in Fig. 5(a). Further, to segment the ROI containing the principal lines, ten landmark points are located as shown in Fig. 5(b), and the ROI is divided into four zones as shown in Fig. 5(c).
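The directional bottom-hat step can be sketched in pure Python as below. Bottom-hat is the morphological closing of the image minus the image itself, which highlights dark, thin structures such as palm lines. The length of the line structuring element (`radius`), the border handling and the pixel-wise maximum used to combine the four responses are assumptions, since the text does not specify them; all function names are hypothetical.

```python
def line_offsets(angle_deg, radius):
    """Offsets (dy, dx) of a flat line structuring element through the origin."""
    step = {0: (0, 1), 45: (-1, 1), 90: (1, 0), 135: (1, 1)}[angle_deg]
    return [(k * step[0], k * step[1]) for k in range(-radius, radius + 1)]


def _morph(img, offsets, pick):
    """Gray-scale dilation (pick=max) or erosion (pick=min), ignoring out-of-image pixels."""
    rows, cols = len(img), len(img[0])
    out = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            vals = [img[y + dy][x + dx] for dy, dx in offsets
                    if 0 <= y + dy < rows and 0 <= x + dx < cols]
            out_row.append(pick(vals))
        out.append(out_row)
    return out


def bottom_hat(img, offsets):
    """Closing (dilation followed by erosion) minus the original image."""
    closed = _morph(_morph(img, offsets, max), offsets, min)
    return [[c - p for c, p in zip(crow, prow)] for crow, prow in zip(closed, img)]


def principal_line_map(img, radius=2):
    """Combine the four directional responses by pixel-wise maximum (assumed rule)."""
    maps = [bottom_hat(img, line_offsets(a, radius)) for a in (0, 45, 90, 135)]
    return [[max(m[y][x] for m in maps) for x in range(len(img[0]))]
            for y in range(len(img))]
```

A line responds only to structuring elements that cross it: a dark vertical line gives a strong response for the 0 degree element and none for the 90 degree element, which is why the four directions are processed separately and then combined.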

(a) (b) (c)

Fig.5. (a) Principal line extraction from ROI (b) Landmarks for segmenting the zones (c) Zoning


C. Graph Representation and Feature Extraction

The next module of the proposed system builds the graph representations of the hand and palmprint images and extracts features from them. The detailed description is presented in the following subsections.

Algorithm 1 describes the location of the landmark points and the zoning of the palmprint images:

Algorithm 1. Zoning:

Input: Palmprint ROI image

Output: Four zones containing the principal lines

\\ begin

Step 1. In the ROI image containing the principal lines, locate the points P1,P2,P7 and P10 at the four corners. i.e. point P1 at the origin (i.e. x= first column, y= first row), point P2 (i.e. x= first column, y= last row), point P7 (i.e. x= last column, y= first row) and point P10 (i.e. x= last column, y=last row).

Step 2. Trace horizontally from the point P1 towards the right till a pixel with value ‘1’ is encountered (i.e. the start of the head line) to locate the point P3.

Step 3. From the point P3 trace vertically downward till the last row to locate the point P4.

Step 4. Segment the area covered between the points P1, P2, P4 and P3 of the ROI image to represent zone1 (Z1).

Step 5. From the point P4 trace horizontally towards right till pixel with value ‘1’ is encountered (i.e. end of the heart line) locate the point P5.

Step 6. From the point P5 trace vertically towards upward-direction till the first row to locate point P6.

Step 7. Segment the area covered between the points P3, P4, P5 and P6 of the ROI image to represent zone2 (Z2).

Step 8. Locate the point P8 by computing the midpoint value of points P5 and P6.

Step 9. Trace horizontally from the point P8 towards right till the last column to locate the point P9.

Step 10. Segment the area covered between the points P6, P7, P9 and P8 of the ROI image to represent zone3 (Z3).

Step 11. Segment the area covered between the points P5, P8, P9 and P10 of the ROI image to represent zone4 (Z4).

\\ end

Note: Refer to Fig. 5(b).
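Algorithm 1 can be sketched in Python as follows, assuming the ROI is a binary image stored as a list of rows with line pixels equal to 1. Two details are assumptions not stated in the algorithm: the search for P5 starts one column to the right of P4, and the zones are returned as non-overlapping half-open column and row ranges so that they partition the ROI. The function name `zone_palmprint` is hypothetical.

```python
def zone_palmprint(roi):
    """Split a binary principal-line ROI (list of rows of 0/1) into zones Z1..Z4."""
    rows, cols = len(roi), len(roi[0])
    last_row = rows - 1

    def first_line_pixel(row, start_col):
        # Trace to the right along `row` until a pixel with value 1 is met.
        for x in range(start_col, cols):
            if roi[row][x] == 1:
                return x
        raise ValueError("no line pixel found on row %d" % row)

    # Step 1: the corners P1, P2, P7 and P10 are implied by the image bounds.
    x3 = first_line_pixel(0, 0)              # Step 2: P3 = (x3, first row)
    # Step 3: P4 = (x3, last row)
    x5 = first_line_pixel(last_row, x3 + 1)  # Step 5: P5 = (x5, last row)
    # Step 6: P6 = (x5, first row)
    y8 = last_row // 2                       # Step 8: P8 midway between P5 and P6
    # Step 9: P9 = (last column, y8)

    def crop(top, bottom, left, right):
        return [row[left:right] for row in roi[top:bottom]]

    return {
        "Z1": crop(0, rows, 0, x3),      # Step 4: P1, P2, P4, P3
        "Z2": crop(0, rows, x3, x5),     # Step 7: P3, P4, P5, P6
        "Z3": crop(0, y8, x5, cols),     # Step 10: P6, P7, P9, P8
        "Z4": crop(y8, rows, x5, cols),  # Step 11: P5 (and P8), P9, P10
    }
```

The sketch raises an error when no line pixel is met on the first or last row; how the original system handles that case is not described.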


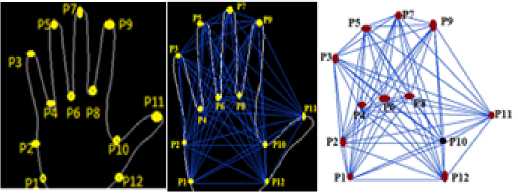

1) Graph Representation of Hand Image

The hand image is represented as a weighted, undirected, complete graph by locating twelve landmark points (nodes) while tracing the hand contour using the eight-connected component technique. First, the five fingertip nodes (i.e. P3, P5, P7, P9 and P11) and the four valley nodes between adjacent fingers (i.e. P4, P6, P8 and P10) are located by tracing the hand contour. Further, two reference nodes (P1 and P12) are located above the wrist; P1 and P12 are the bottom-left and bottom-right points of the palm respectively. Node P12 is located by tracing vertically downward from node P10 till a pixel with value ‘1’ is found in the same column, i.e. the width of the thumb finger. Node P1 is then located by searching for a pixel with value ‘1’ towards the left from node P12. Similarly, node P2 is located by searching for a pixel with value ‘1’ towards the left from node P10. The twelve nodes (vertices) located on the hand contour image are shown in Fig. 6(a). After the N (twelve) landmark nodes are located on the hand contour, every node is connected to all the other (N-1) nodes as shown in Fig. 6(b), and a weight is assigned to each of the N(N-1)/2 edges by measuring its Euclidean length in the image.

Let the weighted undirected complete graph representation of the hand image be G = (V, E) as shown in Fig. 6(c), where V = (P1, P2, ..., PN) denotes the set of vertices of the graph and E denotes its set of edges, which are N(N-1)/2 in number. Each edge is assigned a distance value $d: V \times V \to \mathbb{R}$ satisfying $d(P_i, P_j) = d(P_j, P_i)$ and $d(P_i, P_j) \ge 0$, where d is the straight-line distance between a pair of nodes, calculated as the Euclidean distance

$$d_{ij} = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2} \qquad (1)$$

where $(x_i, y_i)$ and $(x_j, y_j)$ are the spatial coordinates of the two nodes $P_i$ and $P_j$ of the complete graph representation of the hand image [25]. The graph representation of the palmprint image is explained in the following subsection.
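The construction of the weighted adjacency matrix of the complete hand graph from the landmark coordinates can be sketched as follows. The function name `hand_adjacency` is hypothetical, and the landmark coordinates are assumed to be already available as (x, y) pairs.

```python
import math


def hand_adjacency(nodes):
    """Weighted adjacency matrix of the complete graph on `nodes`.

    `nodes` is a list of (x, y) landmark coordinates (P1..PN); entry [i][j]
    holds the Euclidean distance between node i and node j, as in (1).
    """
    n = len(nodes)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = math.hypot(nodes[i][0] - nodes[j][0], nodes[i][1] - nodes[j][1])
            S[i][j] = S[j][i] = d  # undirected graph, so the matrix is symmetric
    return S
```

For N = 12 distinct landmarks, the matrix has N(N-1)/2 = 66 non-zero entries above the diagonal, one per edge.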

(a) (b) (c)

Fig.6. (a) Referencing nodes extraction (b) Interconnection between nodes (c) Graph representation of the hand image


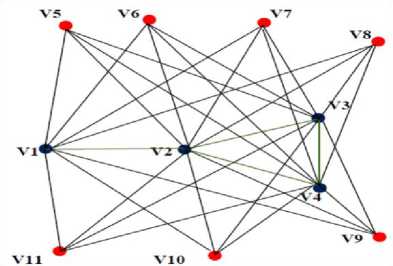

2) Graph Representation of Palmprint Image

To represent the palmprint image as a weighted undirected graph, the seven Hu invariant moments and the centroid of each of the four zones are computed. The four centroids and the seven Hu moments are represented as the nodes of a graph G = (V, E), where V = (V1, V2, ..., V11) is the set of eleven vertices. First, the four centroid nodes corresponding to zone 1, zone 2, zone 3 and zone 4 (i.e. V1, V2, V3 and V4) are located; further, seven virtual nodes (i.e. V5, V6, V7, V8, V9, V10 and V11) are used to represent the seven absolute Hu moments.

The edges E = (E1, E2, ..., E32) are constructed as follows. Four edges connect the centroid nodes: an edge from node V1 to node V2, an edge from node V2 to node V3, an edge from node V2 to node V4 and an edge from node V3 to node V4.

Fig.7. Graph representation of the palmprint image

Seven other edges are constructed from every centroid node (i.e. V1, V2, V3 and V4) to the seven virtual nodes (i.e. V5, V6, V7, V8, V9, V10 and V11), which gives 4 + 4 x 7 = 32 edges in total. Each edge is assigned a weight value $d: V \times V \to \mathbb{R}$ satisfying $d(V_i, V_j) = d(V_j, V_i)$ and $d(V_i, V_j) \ge 0$. The weights of the edges (V1, V2), (V2, V3), (V2, V4) and (V3, V4) are the Euclidean distances between the corresponding centroid nodes. The seven absolute Hu moments computed for each zone of the image are the weights of the edges from the centroid of that zone to the virtual nodes representing the moments. The eleven nodes (vertices) used to represent the palmprint image are shown in Fig. 7.
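The palmprint graph described above can be sketched as an 11 x 11 weighted adjacency matrix. The function name `palm_adjacency` is hypothetical. Taking the absolute value of each moment is an assumption made here so that all weights satisfy the non-negativity condition, since individual Hu moments can be negative.

```python
import math

# Centroid-to-centroid edges as 0-based indices: (V1,V2), (V2,V3), (V2,V4), (V3,V4).
CENTROID_EDGES = [(0, 1), (1, 2), (1, 3), (2, 3)]


def palm_adjacency(centroids, hu_by_zone):
    """Build the 11 x 11 weighted adjacency matrix of the palmprint graph.

    centroids  : four (x, y) zone centroids, giving nodes V1..V4
    hu_by_zone : four lists of seven Hu moments, one list per zone;
                 moment k of zone z weights the edge from V(z+1) to V(5+k)
    """
    T = [[0.0] * 11 for _ in range(11)]
    for i, j in CENTROID_EDGES:
        (xi, yi), (xj, yj) = centroids[i], centroids[j]
        T[i][j] = T[j][i] = math.hypot(xi - xj, yi - yj)
    for z in range(4):
        for k in range(7):
            # abs() is an assumption that keeps every edge weight non-negative.
            T[z][4 + k] = T[4 + k][z] = abs(hu_by_zone[z][k])
    return T
```

Note that the virtual nodes are shared by all four zones, so each virtual node has exactly four incident edges, one per centroid.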

Moment invariants have been extensively applied to image pattern recognition, image registration and image reconstruction. Hu derived six absolute orthogonal invariants and one skew orthogonal invariant based upon algebraic invariants, which are independent of position, size and orientation [26]. The central moments up to order three are used for the computation of the Hu invariant moments. Scale invariance is obtained by normalization [27].

The normalized central moments are computed using (2):

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\,r}}, \quad \text{where } r = \frac{p + q}{2} + 1 \qquad (2)$$

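The seven moment invariants have the useful property of being unchanged under image scaling, translation and rotation and are given in (3):

$$
\begin{aligned}
\phi_1 &= \eta_{20} + \eta_{02} \\
\phi_2 &= (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2 \\
\phi_3 &= (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \\
\phi_4 &= (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \\
\phi_5 &= (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] \\
&\quad + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \\
\phi_6 &= (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \\
\phi_7 &= (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] \\
&\quad - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right]
\end{aligned}
\qquad (3)
$$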

These moment invariants are computed for all the zones of the image. In this work the moment invariants are used as edge weights of the edges from the centroids of the corresponding zone to the virtual node representing the moment. This novel representation gives a topological characterization to the image moments. Further these characteristics are abstracted through the spectral properties of the graph representation giving a more abstract representation of the features. The feature extraction from the graph representation of hand and palmprint to construct the multimodal feature vector is described in the following sub-section.
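The computation of the normalized central moments and the seven Hu invariants for one zone can be sketched in pure Python as follows. The function name `hu_moments` is hypothetical; the zone is assumed to be a list of rows of non-negative pixel values (for example a 0/1 line image), and the formulas used are the standard Hu definitions.

```python
def hu_moments(img):
    """Return [phi1, ..., phi7] for an image given as a list of rows."""
    m00 = m10 = m01 = 0.0
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            m00 += v
            m10 += x * v
            m01 += y * v
    if m00 == 0:
        raise ValueError("empty image: moments are undefined")
    xc, yc = m10 / m00, m01 / m00  # centroid of the zone

    def eta(p, q):
        # Central moment mu_pq, normalised by mu_00 ** ((p + q) / 2 + 1).
        mu = sum(((x - xc) ** p) * ((y - yc) ** q) * v
                 for y, row in enumerate(img) for x, v in enumerate(row))
        return mu / m00 ** ((p + q) / 2.0 + 1.0)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03  # sums that recur in phi4..phi7

    phi1 = n20 + n02
    phi2 = (n20 - n02) ** 2 + 4 * n11 ** 2
    phi3 = (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2
    phi4 = a ** 2 + b ** 2
    phi5 = ((n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2)
            + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2))
    phi6 = (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b
    phi7 = ((3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2)
            - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2))
    return [phi1, phi2, phi3, phi4, phi5, phi6, phi7]
```

Rotating a zone by 90 degrees on the pixel grid leaves all seven values unchanged up to floating-point rounding, which is a convenient sanity check for an implementation.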


3) Feature Extraction from Graph Representation of Hand and Palmprint Image

In the proposed work, the multimodal biometric feature vector is formed by concatenating the spectral properties extracted from the undirected graph representation of both hand and palmprint images. In order to calculate the spectral properties (eigenvalues and eigenvectors), the weighted adjacency matrix representation of the undirected graph is used.

Let S and T be the weighted adjacency matrices that hold the weights of the edges between pairs of vertices of the hand image graph and the palmprint image graph respectively. For a connected undirected graph with non-negative edge weights, the adjacency matrix is non-negative, symmetric and irreducible. In general terms the matrices can be written as

$$
S(P_i, P_j),\; T(P_i, P_j) =
\begin{cases}
d_{ij} & \text{if } (P_i, P_j) \in E,\ 1 \le i, j \le N \\
0 & \text{otherwise}
\end{cases}
\qquad (4)
$$

where N is the number of nodes in the respective graph. The spectral properties of the weighted adjacency matrix provide useful information about the structure of the graph. The relationship between the weighted adjacency matrices S, T and their spectral properties is given by

(S - X1I)u 1 = 0 and (T - X2I)u 2 = 0 (5)

where u1 and u2 are the column vectors and λ 1 and λ 2 (lambda) are scalars. For non-zero vectors u1 and u2 the scalars λ 1 and λ 2 are called eigenvalues of the graph's weighted adjacency matrices S and T respectively, the vectors u1 and u2 are called eigenvectors corresponding to λ 1 and λ 2 . Each eigenvector is associated with a specific eigenvalue. One eigenvalue can be associated with several number of eigenvectors. Among the eigenvalues of the weighted adjacency matrices, the largest eigenvalues and the smallest eigenvalues (i.e. spectral radius) are referred to as the principal eigenvectors. The feature vector for each hand image FHG of size 24 X 1 is constructed by selecting two principal eigenvectors (i.e. EVHG1 and EVHG2 ) of the weighted adjacency matrix S. The eigenvector EVHG1 is associated with largest eigenvalue and the eigenvector EVHG2 is associated with smallest eigenvalue;

$$F_{HG} = [\, EV_{HG1} \mid EV_{HG2} \,] \tag{6}$$
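The construction in equations (4) to (6) can be illustrated with a minimal sketch. It is not the authors' implementation: the edge weight d_ij is assumed here to be the Euclidean distance between node coordinates, the eigen-solver is a plain shifted power iteration chosen only to keep the example dependency-free, and all function names are hypothetical. Eigenvectors are defined only up to sign, so a real system would need a fixed sign convention.

```python
import math

def weighted_adjacency(points, edges):
    """Symmetric matrix of equation (4): entry (i, j) holds d_ij when (i, j)
    is an edge and 0 otherwise. d_ij is ASSUMED to be the Euclidean distance."""
    n = len(points)
    S = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        d = math.hypot(points[i][0] - points[j][0], points[i][1] - points[j][1])
        S[i][j] = S[j][i] = d
    return S

def _matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def _power_iteration(A, iters=10000, tol=1e-13):
    """Dominant eigenpair of a symmetric positive semidefinite matrix."""
    n = len(A)
    v = [float(i + 1) for i in range(n)]  # non-uniform start vector
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = _matvec(A, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        w = [x / norm for x in w]
        change = max(abs(a - b) for a, b in zip(w, v))
        v = w
        if change < tol:
            break
    eigenvalue = sum(x * y for x, y in zip(v, _matvec(A, v)))  # Rayleigh quotient
    return eigenvalue, v

def principal_eigenpairs(S):
    """Eigenpairs for the largest and the smallest eigenvalue of S.
    With c = largest absolute row sum (a Gershgorin bound), both S + cI and
    cI - S are positive semidefinite, so power iteration applies to each."""
    n = len(S)
    c = max(sum(abs(x) for x in row) for row in S)
    up = [[S[i][j] + (c if i == j else 0.0) for j in range(n)] for i in range(n)]
    down = [[(c if i == j else 0.0) - S[i][j] for j in range(n)] for i in range(n)]
    mu_up, ev_largest = _power_iteration(up)
    mu_down, ev_smallest = _power_iteration(down)
    return (mu_up - c, ev_largest), (c - mu_down, ev_smallest)

def spectral_feature_vector(S):
    """Concatenate the two principal eigenvectors, as in equation (6)."""
    (_, ev1), (_, ev2) = principal_eigenpairs(S)
    return ev1 + ev2

# Toy three-node path graph (not real hand data).
points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
edges = [(0, 1), (1, 2)]
S = weighted_adjacency(points, edges)
(lam_max, _), (lam_min, _) = principal_eigenpairs(S)
feature = spectral_feature_vector(S)
```

For this toy graph the largest and smallest eigenvalues are √2 and -√2, and the feature vector has 2N = 6 entries. By the same reasoning, a 24 × 1 hand feature vector would correspond to a graph with 12 nodes, assuming the full eigenvectors are kept.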

Similarly, the feature vector for each palmprint image, FPP, of size 22 × 1, is constructed by concatenating the two principal eigenvectors EVPP1 and EVPP2 of the weighted adjacency matrix T, where EVPP1 is associated with the largest eigenvalue and EVPP2 with the smallest eigenvalue:

$$F_{PP} = [\, EV_{PP1} \mid EV_{PP2} \,] \tag{7}$$

To enhance the performance of the biometric system, the feature vectors extracted from the two biometric traits are fused (i.e. concatenated) to produce a single multimodal biometric template, FMB, of size 46 × 1. As the feature vectors extracted from both traits are compatible, normalization of the multimodal biometric template is not required. Hence the multimodal biometric template with feature level fusion is represented as

$$F_{MB} = [\, F_{HG} \mid F_{PP} \,] \tag{8}$$
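Feature level fusion as in equation (8) amounts to a serial concatenation of the two vectors. The sketch below only illustrates the sizes stated in the text (24 + 22 = 46); the function name and the placeholder values are hypothetical.

```python
HAND_DIM = 24   # size of F_HG stated in the text
PALM_DIM = 22   # size of F_PP stated in the text

def fuse_features(f_hg, f_pp):
    """Concatenate hand geometry and palmprint features into F_MB."""
    if len(f_hg) != HAND_DIM or len(f_pp) != PALM_DIM:
        raise ValueError("expected 24 hand values and 22 palmprint values")
    return list(f_hg) + list(f_pp)

# Placeholder values, not real biometric features.
f_hg = [0.1] * HAND_DIM
f_pp = [0.2] * PALM_DIM
f_mb = fuse_features(f_hg, f_pp)
```

The hand geometry values occupy the first 24 positions of the fused template and the palmprint values the remaining 22, so the same ordering has to be used for enrolment and for identification.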

The feature level fusion is expected to be the best type of fusion as the feature vectors constitute the richest source of information [25]. The knowledge base is constructed using the templates of 144 users.

In the proposed work, a multiclass support vector machine (SVM) classifier is used for user identification. The SVM is a supervised statistical learning method, used for classification and regression analysis, that is based on the structural risk minimization principle and works by minimizing the Vapnik-Chervonenkis (VC) dimension [26]. A classification task usually involves training and testing data, each consisting of a number of data instances. Each instance in the training set contains one “target value” (class label) and several “attributes” (features). The performance of an SVM depends largely on the kernel (i.e. discriminant) function. The kernel functions commonly used in SVM classifiers are linear, polynomial, Gaussian radial basis function (RBF) and sigmoid (hyperbolic tangent). In this work the Gaussian RBF kernel is employed as it selects smooth solutions; it is given by (9).

$$K(x_i, x_j) = \exp\!\left(-\frac{\lVert x_i - x_j \rVert^{2}}{2\sigma^{2}}\right) \tag{9}$$

where σ is the width of the Gaussian kernel.
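As a small illustration of the Gaussian RBF kernel, the sketch below evaluates it for two feature vectors in its standard form exp(-||x - y||² / (2σ²)). The function name and the default value of sigma are assumptions made for the example; the text does not state the kernel width used in the experiments.

```python
import math

def rbf_kernel(x, y, sigma=1.0):
    """Gaussian RBF kernel: exp(-||x - y||^2 / (2 * sigma^2))."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))

same = rbf_kernel([0.3, 0.7], [0.3, 0.7])       # identical vectors
apart = rbf_kernel([0.0, 0.0], [1.0, 1.0])      # squared distance 2, sigma 1
```

Identical vectors give a kernel value of 1, and the value decays towards 0 as the vectors move apart; a larger sigma makes the decay slower.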

An SVM classifier assigns input data to one of two distinct classes by finding the maximum-margin hyperplane between them. However, SVMs can be used as multiclass classifiers by treating a K-class classification problem as K two-class problems, which is known as one-against-all classification.

Identification is a one-to-many matching, and is ‘closed-set’ if the user is assumed to exist in the database. In closed-set identification, the SVM classifies the individual by producing the output +1 if the user sample belongs to the class xj; otherwise the output is -1. The output is obtained using (10):

$$\delta_j = \sum_{i=1}^{N} \alpha_i\, y_i\, K(x_i, x_j) + b \tag{10}$$

where N is the number of training samples, i.e. N = 864 (6 × 144) feature vectors, yi is the class label with 1 ≤ yi ≤ 144, αi is the Lagrangian multiplier, the elements xi for which αi > 0 are the support vectors, b is the bias and K(xi, xj) is the kernel function. The experimentation on user identification with feature level fusion of hand geometry and palmprint, using the techniques described in this paper, is presented in the following section.
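The one-against-all decision built on the kernel expansion of (10) can be sketched as follows. This is an illustration under stated assumptions, not the trained classifier of the paper: each user is given one binary machine whose training labels are recoded to +1 (that user) and -1 (all others), the multipliers and bias are hand-picked toy values rather than the result of training, and the probe is assigned to the user whose machine returns the largest decision value. All names are hypothetical.

```python
import math

def rbf_kernel(x, y, sigma=1.0):
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))

def decision_value(x, support_vectors, alphas, labels, b, sigma=1.0):
    """Kernel expansion: sum_i alpha_i * y_i * K(x_i, x) + b."""
    total = sum(a * y * rbf_kernel(sv, x, sigma)
                for sv, a, y in zip(support_vectors, alphas, labels))
    return total + b

def identify(x, machines, sigma=1.0):
    """One-against-all: evaluate every binary machine, return the best user."""
    scores = {uid: decision_value(x, *m, sigma=sigma) for uid, m in machines.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Toy machines for two users; values are illustrative, not learned.
support = [(0.0, 0.0), (3.0, 3.0)]
machines = {
    "A": (support, [1.0, 1.0], [+1, -1], 0.0),  # +1 for user A's sample
    "B": (support, [1.0, 1.0], [-1, +1], 0.0),  # +1 for user B's sample
}
user_near_a, scores_near_a = identify((0.2, 0.1), machines)
user_near_b, _ = identify((2.9, 3.1), machines)
```

In this toy setup a probe close to user A's support vector gets a positive decision value from machine A and a negative one from machine B, which matches the +1 / -1 outputs described above once the sign of each value is taken.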


IV. Experimentation

The experimentation analyzes the performance of the proposed multimodal biometric system with feature level fusion of hand geometry and palmprint traits. The system performance is evaluated on a virtual multimodal biometric database, constructed for 144 users by combining the images of the GPDS150 hand database and the PolyU palmprint database. As discussed in Section III, a multimodal feature vector of size 46 × 1 is constructed for each user by concatenating the graph spectral features (i.e. two principal eigenvectors per trait) extracted from hand geometry (24 × 1 values) and palmprint (22 × 1 values). For every user, six feature vectors are used to train the multiclass SVM, and the remaining images of the virtual multimodal biometric database are employed for testing. Hence, for 144 users, a knowledge base of 864 feature vectors is constructed and used for training the RBF kernel based multiclass support vector machine.

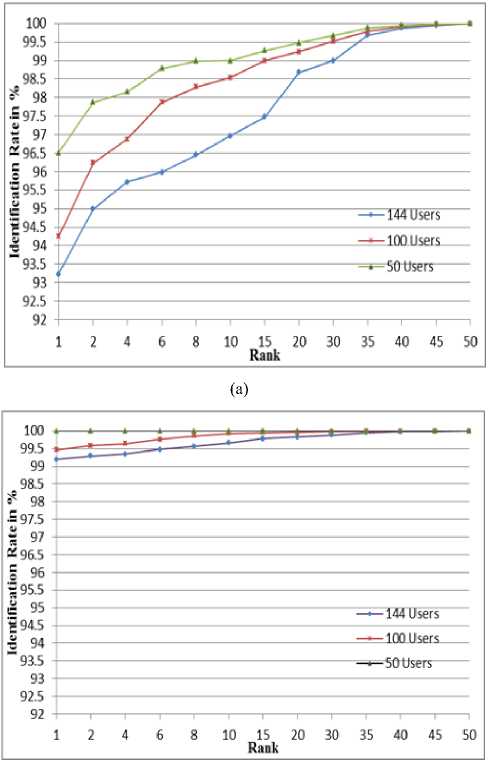

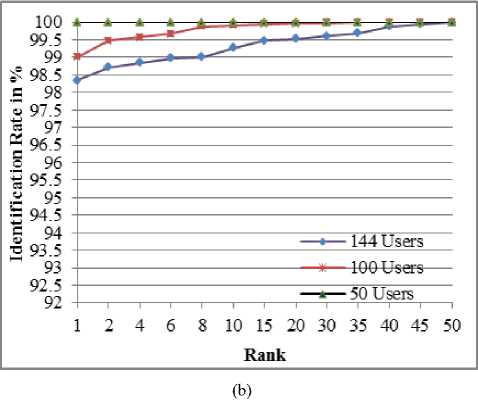

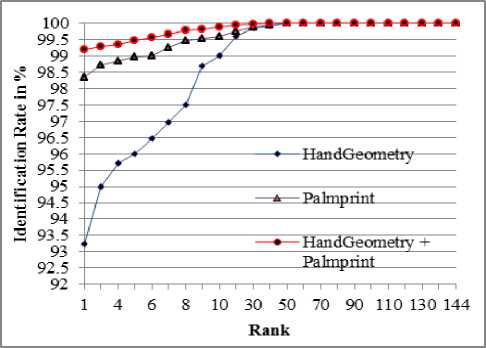

During identification, the multimodal feature vector of a user is generated and identified using the trained multiclass support vector machine. The experimentation is conducted for different numbers of users, viz. 50, 100 and 144, to test the robustness of the proposed multimodal biometric system with the multiclass SVM classifier. To analyze the performance, the proposed multimodal biometric system is compared with unimodal biometric systems that use only the hand geometry or only the palmprint trait. The system performance is tabulated in Table 2.

Identification rates of 93.23% for hand geometry, 98.34% for palmprint and 99.19% for the multimodal biometric after feature level fusion of the hand geometry and palmprint modalities are achieved for 144 users at rank k = 1.

Table 2. Recognition Performance

| Number of Users | Modality | GAR in % | FAR in % | FRR in % |
|---|---|---|---|---|
| 50 | Hand Geometry | 96.50 | 1.50 | 3.50 |
| | Palmprint | 100.00 | 0.00 | 0.00 |
| | Proposed Method | 100.00 | 0.00 | 0.00 |
| 100 | Hand Geometry | 94.25 | 1.50 | 5.75 |
| | Palmprint | 99.00 | 0.50 | 1.00 |
| | Proposed Method | 99.46 | 0.30 | 0.54 |
| 144 | Hand Geometry | 93.23 | 0.68 | 6.76 |
| | Palmprint | 98.34 | 0.37 | 1.66 |
| | Proposed Method | 99.19 | 0.17 | 0.81 |
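The GAR and FRR columns of Table 2 can be cross-checked with a short calculation. The sketch assumes the usual convention that the genuine acceptance rate and the false rejection rate sum to 100%, which the text does not state explicitly; the variable and function names are hypothetical, and the numbers are copied from Table 2.

```python
# (users, modality, GAR %, FAR %, FRR %) as reported in Table 2.
TABLE_2 = [
    (50, "Hand Geometry", 96.50, 1.50, 3.50),
    (50, "Palmprint", 100.00, 0.00, 0.00),
    (50, "Proposed Method", 100.00, 0.00, 0.00),
    (100, "Hand Geometry", 94.25, 1.50, 5.75),
    (100, "Palmprint", 99.00, 0.50, 1.00),
    (100, "Proposed Method", 99.46, 0.30, 0.54),
    (144, "Hand Geometry", 93.23, 0.68, 6.76),
    (144, "Palmprint", 98.34, 0.37, 1.66),
    (144, "Proposed Method", 99.19, 0.17, 0.81),
]

def gar_frr_gap(gar, frr):
    """Difference between 100% and GAR + FRR, rounded to two decimals."""
    return round(100.0 - (gar + frr), 2)

gaps = [gar_frr_gap(gar, frr) for _, _, gar, _, frr in TABLE_2]
```

Under that assumption eight of the nine rows sum to exactly 100%, and the hand geometry row for 144 users differs by 0.01, which is within the rounding of two-decimal figures.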


The results show the robustness of the proposed methodology using multimodal biometric traits. However, as the number of users increases the performance degrades: the GAR of the proposed method falls from 100.00% for 50 users to 99.19% for 144 users, as shown in Table 2.

Fig. 8. Cumulative Match Characteristics Curve (a) Hand geometry biometric trait (b) Palmprint biometric trait (c) Proposed multimodal biometric system (d) Comparison of hand geometry, palmprint and multimodal biometric system for 144 users
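A cumulative match characteristic (CMC) curve such as the one in Fig. 8 reports, for each rank k, the percentage of probes whose true identity appears among the k best-scoring enrolled users. The sketch below shows one common way to compute it for closed-set identification; the score values are invented toy data and the function name is hypothetical.

```python
def cmc_curve(score_rows, true_ids, max_rank):
    """Rank-k identification rates (in %) for k = 1 .. max_rank.
    score_rows[i] maps each enrolled user id to the match score of probe i;
    closed-set identification is assumed, so the true id is always enrolled."""
    hits = [0] * max_rank
    for scores, truth in zip(score_rows, true_ids):
        ranked = sorted(scores, key=scores.get, reverse=True)
        rank = ranked.index(truth) + 1          # 1-based rank of the true user
        for k in range(rank, max_rank + 1):     # a hit at rank r counts for all k >= r
            hits[k - 1] += 1
    return [100.0 * h / len(true_ids) for h in hits]

# Four toy probes scored against three enrolled users.
score_rows = [
    {"A": 0.9, "B": 0.1, "C": 0.0},   # true user A, rank 1
    {"A": 0.5, "B": 0.4, "C": 0.1},   # true user B, rank 2
    {"A": 0.2, "B": 0.3, "C": 0.5},   # true user C, rank 1
    {"A": 0.6, "B": 0.3, "C": 0.1},   # true user C, rank 3
]
true_ids = ["A", "B", "C", "C"]
curve = cmc_curve(score_rows, true_ids, max_rank=3)
```

For the toy data the curve is 50%, 75% and 100% at ranks 1, 2 and 3. The rank-1 value of such a curve corresponds to the identification rate quoted in the text at k = 1.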

Table 3. Comparison of The Proposed Work With Previous Works

References

- Kuncheva L. I, Whitaker C. J, Shipp C. A and Duin R. P. W, 2000, “Is independence good for combining classifiers?,” Proceedings of International Conference on Pattern Recognition (ICPR), Vol. 2, (Barcelona, Spain), pp. 168–171, 2000.

- Hong L and Jain A. K, 1998, “Integrating faces and fingerprints for personal identification,” IEEE Transactions on PAMI, vol. 20, pp. 1295–1307, Dec 1998.

- Donatello Conte, Pasquale Foggia, Carlo Sansone, Mario Vento, 2007, “How and Why Pattern Recognition and Computer Vision Applications Use Graphs,” Applied Graph Theory in Computer Vision and Pattern Recognition, Vol. 52, pp. 85-135, 2007.

- A. Ross, A. K. Jain, 2004, “Multimodal Biometrics: an Overview”, Proceedings of 12th European Signal Processing Conference, Vienna, Austria, pp. 1221-1224, 2004.

- Dakshina Ranjan Kisku, Phalguni Gupta, Jamuna Kanta Sing, " Multibiometrics Feature Level Fusion by Graph Clustering", International Journal of Security and Its Applications, Vol. 5, No. 2, April 2011.

- Rashmi Singhal, Narender Singh, Payal Jain, 2012, “Towards an Integrated Biometric Technique,” International Journal of Computer Applications, Vol. 42, No.13, pp. 20-23, March 2012.

- Yufei Han, Tieniu Tan, Zhenan Sun, "Palmprint Recognition Based on Directional Features and Graph Matching", Book section in Advances in Biometrics, Ed by Seong-Whan Lee and Stan Li, pp. 1164-1173, 2007.

- Wang H. and Hancock E.R, 2004, “A Kernel View of Spectral Point Pattern Matching,” Proceedings of International Workshops on Advances in Structural and Syntactic Pattern Recognition and Statistical Techniques in Pattern Recognition, pp. 361-369, 2004.

- Yang Jian, Yang Jing-yu, Zhang David and Lu Jian-feng, 2003, “Feature fusion: parallel strategy vs. serial strategy,” Pattern Recognition, Vol. 36, No. 6, pp. 1961-1971, 2003.

- Karki Maya, Sethu Selvi S, 2013, “Multimodal Biometrics at Feature Level Fusion using Texture Features,” International Journal of Biometrics and Bioinformatics (IJBB), Vol.7, Issue 1, pp. 58-73, 2013.

- Gupta Aditya, Walia Ekjok, Vaidya Mahesh, 2014, “Feature level fusion of face, palm vein and palm print modalities using Discrete Cosine Transform” IEEE Proceedings on International Conference on Advances in Engineering and Technology Research (ICAETR), pp. 1-5, 2014.

- Nandakumar K. and Jain A. K., 2008, "Multibiometric Template Security using Fuzzy Vault," In Proceedings of IEEE Second International Conference on Biometrics: Theory, Applications and Systems, 2008.

- Deshmukh A, Pawar S and Joshi M, 2013, “Feature Level Fusion of Face And Fingerprint Modalities Using Gabor Filter Bank,” IEEE International Conference on Signal Processing, Computing and Control (ISPCC), pp. 1-5, 2013.

- Mhaske, V.D., Patankar, A.J., 2013, “Multimodal biometrics by integrating fingerprint and palmprint for security,” IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), pp. 1-5, 2013.

- Ajay Kumar, David Zhang, 2006, “Personal Recognition Using Hand Shape and Texture,” IEEE Transactions on Image Processing, Vol. 15, No. 8, pp. 2454-2461, 2006.

- Han C.C, 2004, “A hand-based personal authentication using a coarse-to fine Strategy”, Image and Vision Computing, Vol. 22, Issue. 11, pp. 909–918, 2004.

- Dewi Yanti Liliana, Eries Tri Utaminingsih, 2012, “The combination of palm print and hand geometry for biometrics palm recognition,” International Journal of Video & Image Processing and Network Security, Vol. 12, No.1, pp.1-5, 2012.

- Swapnali G. Garud, K.V. Kale, 2014, “Palmprint and Handgeometry Recognition using FAST features and Region properties,” International Journal of Science and Engineering Applications, Vol. 3, Issue 4, pp. 75-82, 2014.

- Quan-Sen Sun, Sheng-Gen Zeng, Pheng-Ann Heng and De-Sen Xia, 2005, “The Theory of Canonical Correlation Analysis and Its Application to Feature Fusion (in Chinese),” Chinese Journal of Computers, Vol. 28, No. 9, pp. 1524-1533, 2005.

- Nandakumar K, 2008, "Multibiometric Systems: Fusion Strategies and Template Security," Ph.D. Thesis, Michigan State University, USA, 2008.

- Miguel A. Ferrer, Aythami Morales, Carlos M. Travieso and Jesús B. Alonso, 2007, “Low Cost Multimodal Biometric identification System Based on Hand Geometry, Palm and Finger Textures,” 41st Annual IEEE International Carnahan Conference on Security Technologies, ISBN: 1-4244-1129-7, pp. 52-58, 2007. Available from: (http://www.gpds.ulpgc.es).

- Zhang D., 2006, PolyU Palmprint Database, Biometric Research Centre, Hong Kong Polytechnic University, (Online) Available from: (http://www.comp.polyu.edu.hk/?biometrics/), 2006.

- Otsu N., 1978, “A threshold selection method from gray-scale histogram,” IEEE Transaction on SMC, 8, pp. 62-66, 1978.

- Gonzalez R.C. and Woods R.E., 2002, “Digital Image Processing using MATLAB”, Prentice-Hall, New Jersey, 2002.

- Shanmukhappa A. Angadi, Sanjeevakumar M. Hatture, 2011, “A Novel Spectral Graph Theoretic Approach To User Identification Using Hand Geometry,” International Journal of Machine Intelligence, Vol.3, No.4, pp. 282-288, 2011.

- Zhihu Huang, Jinsong Leng, 2010, “Analysis of Hu's Moment Invariants on Image Scaling and Rotation”, Proceedings of Second International Conference on Computer Engineering and Technology, pp. 476-480, 2010.

- Jin Soo Noh and Kang Hyeon Rhee, 2005, “Palmprint Identification Algorithm using Hu Invariant Moments and Otsu Binarization,” Proceedings of the Fourth Annual ACIS International Conference on Computer and Information Science, pp. 94-99, 2005.

- Arun Ross and Rohin Govindarajan, 2005, "Feature Level Fusion Using Hand and Face Biometrics," Proceedings of SPIE conference on Biometric Technology for Human Identification II, Vol. 5779, pp. 196-204, 2005.

- Chih-Wei Hsu, Chih-Jen Lin, 2002, “A Comparison of Methods for Multiclass Support Vector Machines,” IEEE Transactions on Neural Networks, Vol. 13, No. 2, pp. 415-425, 2002.

- Ajay Kumar, David C. M. Wong, Helen C. Shen, Anil K. Jain, 2004, "Personal verification using Palmprint and Hand Geometry Biometric," Department of Computer Science, Hong Kong University of Science and Technology, Clear Water Bay, Hong Kong 2004.