Biorthogonal Wavelet Transform Using Bilateral Filter and Adaptive Histogram Equalization

Authors: Savroop Kaur, Hartej S. Dadhwal

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Issue: Vol. 7, No. 3, 2015

Open access

Image fusion is the process of combining data from multiple sources to obtain refined or improved information for decision making, and it has many applications. When images with similar acquisition times are used, the expected result is a fused image that retains the spatial resolution of the panchromatic image and the color content of the multispectral image. Many fusion methods have been developed in recent years, in both the spatial domain and the wavelet domain. Of the two, wavelet domain methods are more suitable because they can handle the spatial distortion produced by spatial domain methods. In this paper, the proposed method is compared with principal component analysis, the discrete cosine transform, and the biorthogonal wavelet transform without the bilateral filter and adaptive histogram equalization. The comparison is made on the basis of several parameters. The biorthogonal wavelet transform preserves edge information and hence reduces distortion in the fused image. It has two important properties, wavelet symmetry and linear phase, which are not available in spatial domain methods. The performance of the proposed method has been tested on several pairs of multi-focus and multimodal images. Experimental results show that the proposed method improves fusion quality by reducing the loss of significant information available in the individual images.

Fusion, Preprocessing, Adaptive histogram, Resampling, Wavelets

Short URL: https://sciup.org/15010666

IDR: 15010666

Full text of the article: Biorthogonal Wavelet Transform Using Bilateral Filter and Adaptive Histogram Equalization

Published Online February 2015 in MECS

Image fusion is a mechanism used to combine two or more images of the same scene that have different focus points [1]. The resulting image should not contain any artifacts introduced by the fusion. Image fusion techniques have been developed to fuse the complementary information of multisource input images in order to create a new image that is more suitable for human visual or machine perception. The way the sources combine their information depends on the nature of the images and the way they were taken. Images can be fused in the spatial domain [2, 3] or in the frequency domain [4, 5]. Some popular spatial domain methods are arithmetic averaging, principal component analysis (PCA) [6], sharpness criteria, and intensity-hue-saturation based fusion schemes [7]. However, these methods are complex and time consuming, which makes them hard to use in real-time applications. They also produce edge distortions in the fused image.

Improvements to the existing techniques are proposed from time to time. The most recent is wavelet transform based image fusion [12]. Image fusion is used in application areas such as remote sensing, astronomy, intelligent robots, military applications, medical imaging, forensic science and surveillance.

Assessment of the quality of the fused images is an important issue. The most basic requirements for image fusion are that the fused image should contain all the relevant information contained in the source images and that it should not contain any artifacts introduced by the fusion [8]. Image fusion is performed at three different levels: pixel level, feature (region) level and decision level. Pixel level fusion deals with the information associated with each pixel: each pixel value in the fused image is determined from the corresponding pixel values of the source images. Pixel level fusion is easy to implement. In feature level fusion, the source images are segmented into regions and features, and these features are used for fusion. Decision level fusion is a high level fusion which uses decisions coming from the various sensors being fused [9]. In the wavelet transform domain, there are several methods for fusing a number of images. The two main steps are the decomposition of the source images and the selection of coefficients from the decomposed images; the latter is also called the fusion rule. The decomposition of an image produces coefficients in the transform domain, and the fusion rule merges these coefficients without losing the original information in the individual images and without introducing any artifacts or inconsistencies.

The traditional wavelet transform is based on the Mallat algorithm. This algorithm is complex because it uses convolution to process large amounts of image data, and it therefore requires more memory for read and write operations. This becomes costly for real-time imaging applications. Also, the orthogonal filters used in the wavelet transform do not have linear phase, so phase distortion leads to distortion of the image edges [10, 11] and hence to loss of important image content. The biorthogonal wavelet transform (BWT) overcomes these shortcomings.

The paper is organized as follows. Section 2 describes the preprocessing of images before fusion. Section 3 describes adaptive histogram equalization. Section 4 covers the different fusion techniques. Section 5 gives an overview of the proposed method, and the last section gives the qualitative and quantitative results of the methods.


II. Preprocessing of Image Fusion

Two images of a scene taken from different angles sometimes show distortion: most objects are the same but have slightly different shapes. Before fusing the images, we have to make sure that each pixel in one image corresponds to the same scene point in the other; image registration does this. Two images of the same scene can be registered using software that matches several control points. After registration, resampling is done to adjust each image that is about to be fused so that the images have the same size, and the fused image then has the same dimensions. Several approaches can be used to resample the images; resampling is needed because most of the fusion approaches we use operate pixel by pixel.

Images of the same size are easier to fuse. After resampling, the fusion algorithm is applied. Depending on the algorithm, the images may or may not have to be transformed into a different domain. If the images have been transformed into another domain, an inverse transform is necessary.

Images → Image Registration → Image Resampling → Image Fusion → Fused Image

Fig. 1. Preprocessing of Image Fusion


III. Adaptive Histogram Equalization

Adaptive histogram equalization is an image processing technique used to improve contrast in images. It is an effective contrast enhancement method for natural images, medical images and other initially non-visual images. It differs from ordinary histogram equalization in that the adaptive method computes several histograms, each corresponding to a distinct section of the image, and uses them to redistribute the lightness values of the image. The image fusion process may degrade the sharpness and brightness of the fused image, so adaptive histogram equalization is used to enhance the results further and to preserve the brightness of the fused image.
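The idea of one histogram per image section can be illustrated with a minimal sketch. It is a simplification and not the implementation used in the paper: each tile is equalized independently, with no interpolation between tiles and no clip limit, both of which practical implementations normally add to avoid visible block borders. The function names and the `tile_size` parameter are assumptions made for this illustration, and images are plain lists of rows of integer grey levels.

```python
def equalize_tile(tile, levels=256):
    """Ordinary histogram equalization of one tile (rows of ints in [0, levels-1])."""
    pixels = [p for row in tile for p in row]
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution of grey levels inside this tile only.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:  # constant tile: nothing to stretch
        return [row[:] for row in tile]
    scale = (levels - 1) / (n - cdf_min)
    return [[round((cdf[p] - cdf_min) * scale) for p in row] for row in tile]


def adaptive_equalize(image, tile_size):
    """Equalize every tile_size x tile_size section with its own histogram."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            tile = [row[left:left + tile_size] for row in image[top:top + tile_size]]
            for dy, eq_row in enumerate(equalize_tile(tile)):
                out[top + dy][left:left + len(eq_row)] = eq_row
    return out


# Two low-contrast regions, each stretched to the full range by its own histogram.
image = [[100, 101, 200, 201],
         [102, 103, 202, 203],
         [10, 11, 50, 51],
         [12, 13, 52, 53]]
enhanced = adaptive_equalize(image, tile_size=2)
```

Because every tile uses only its own histogram, a dark region and a bright region are both stretched to the full grey-level range, which a single global histogram would not achieve.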


IV. Fusion Techniques


A. Biorthogonal Wavelet Transform

In filtering applications, none of the orthogonal filters except the Haar filter provides linear phase. Biorthogonal filters are designed to provide symmetry and exact reconstruction by using two wavelet filters instead of one. The biorthogonal wavelet transform is used for image denoising because it has the linear phase property. The orthogonal filters of the wavelet transform do not have linear phase; therefore phase distortion leads to distortion of the image edges. This problem is reduced by using biorthogonal wavelets, which include spline wavelets [14]. Biorthogonal wavelets allow perfect reconstruction of the image with symmetric finite impulse response (FIR) filters, a combination that orthogonal filters other than Haar do not offer.

Wavelet transform fusion is more formally defined by considering the wavelet transform $w$ of the two registered input images $I_1(x, y)$ and $I_2(x, y)$ together with the fusion rule $\mu$. The inverse wavelet transform $w^{-1}$ is then computed, and the fused image $I(x, y)$ is reconstructed:

$$I(x, y) = w^{-1}\big(\mu\big(w(I_1(x, y)),\; w(I_2(x, y))\big)\big) \qquad (1)$$
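Equation (1) can be made concrete with a small one-dimensional sketch. It uses a single level of the LeGall 5/3 wavelet, a well-known biorthogonal wavelet with symmetric filters, implemented by lifting, and takes the coefficient with the larger absolute value as the fusion rule. The choice of this particular wavelet, the one-dimensional setting, the border handling and all function names are assumptions made for illustration; the paper does not state which biorthogonal filter pair it uses.

```python
def forward_53(signal):
    """One level of the LeGall 5/3 biorthogonal wavelet via lifting.

    Assumes an even-length list of numbers; returns (approx, detail).
    """
    even, odd = signal[0::2], signal[1::2]
    n = len(odd)
    # Predict: detail = odd sample minus the mean of its two even neighbours
    # (the last even sample is repeated at the right border).
    detail = [odd[i] - 0.5 * (even[i] + even[min(i + 1, n - 1)]) for i in range(n)]
    # Update: approx = even sample plus a quarter of its two neighbouring details
    # (the first detail is repeated at the left border).
    approx = [even[i] + 0.25 * (detail[max(i - 1, 0)] + detail[i]) for i in range(n)]
    return approx, detail


def inverse_53(approx, detail):
    """Undo forward_53 by running the lifting steps backwards."""
    n = len(approx)
    even = [approx[i] - 0.25 * (detail[max(i - 1, 0)] + detail[i]) for i in range(n)]
    odd = [detail[i] + 0.5 * (even[i] + even[min(i + 1, n - 1)]) for i in range(n)]
    out = []
    for e, o in zip(even, odd):
        out.extend([e, o])
    return out


def fuse_abs_max(c1, c2):
    """Fusion rule mu: keep the coefficient with the larger absolute value."""
    return [a if abs(a) >= abs(b) else b for a, b in zip(c1, c2)]


def fuse_signals(s1, s2):
    """I = w^-1( mu( w(I1), w(I2) ) ) for two equally long 1-D signals."""
    a1, d1 = forward_53(s1)
    a2, d2 = forward_53(s2)
    return inverse_53(fuse_abs_max(a1, a2), fuse_abs_max(d1, d2))


# Two hypothetical scan lines of the same scene, each sharp in a different half.
line_a = [10, 12, 50, 52, 9, 7, 30, 31]
line_b = [11, 11, 51, 51, 8, 8, 30, 30]
fused_line = fuse_signals(line_a, line_b)
```

For images, the same transform would be applied along rows and then along columns, and usually over several decomposition levels; the structure of transform, fusion rule and inverse transform stays the same.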

Finally, once the fused image is obtained, it is passed through a bilateral filter, which is a non-linear, edge-preserving smoothing filter, and then through adaptive histogram equalization, which enhances the contrast of the image.

A good-quality image is obtained using these two stages. The final fused image is thus the output of the last block in this chain.
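The edge-preserving behaviour of a bilateral filter comes from weighting each neighbour by both its spatial distance and its intensity difference from the centre pixel. The sketch below is a direct, unoptimized version on a grey-level image stored as a list of rows. The parameter names `radius`, `sigma_space` and `sigma_range` and their default values are assumptions chosen for this illustration, not settings taken from the paper.

```python
import math


def bilateral_filter(image, radius=1, sigma_space=1.0, sigma_range=25.0):
    """Smooth a grey-level image (list of rows) while preserving edges."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            centre = image[y][x]
            weighted_sum = 0.0
            weight_total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        neighbour = image[ny][nx]
                        # Spatial weight: nearby pixels count more.
                        spatial = math.exp(-(dx * dx + dy * dy) / (2 * sigma_space ** 2))
                        # Range weight: pixels with similar intensity count more,
                        # so pixels across an edge contribute almost nothing.
                        tonal = math.exp(-((neighbour - centre) ** 2) / (2 * sigma_range ** 2))
                        weight = spatial * tonal
                        weighted_sum += weight * neighbour
                        weight_total += weight
            out[y][x] = weighted_sum / weight_total
    return out


# A step edge between two flat regions is left almost untouched.
step = [[10, 10, 200, 200] for _ in range(4)]
smoothed = bilateral_filter(step)
```

Small intensity fluctuations inside a region are averaged out, while the large jump at the edge gets a range weight close to zero and is therefore kept.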


B. Principal component analysis

Principal component analysis (PCA) is used to reduce the dimensionality of the image data. PCA is a vector space transform. It helps to identify and show patterns in data, highlighting their similarities and differences, and hence reduces the dimension without much loss of information [13].

$$I_f(x, y) = P_1 I_1(x, y) + P_2 I_2(x, y) \qquad (2)$$
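The text does not define the weights in equation (2). A common choice in PCA-based fusion, assumed here, is to take the principal eigenvector of the 2 x 2 covariance matrix of the two images and normalize its components so that they sum to one. The sketch below follows that assumption; the function names are hypothetical, and the normalization is only meaningful when the two images are positively correlated, as registered images of the same scene normally are.

```python
import math


def pca_weights(img1, img2):
    """Weights (P1, P2) from the principal eigenvector of the 2x2 covariance matrix."""
    x = [p for row in img1 for p in row]
    y = [p for row in img2 for p in row]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((u - mean_x) ** 2 for u in x) / (n - 1)
    var_y = sum((v - mean_y) ** 2 for v in y) / (n - 1)
    cov = sum((u - mean_x) * (v - mean_y) for u, v in zip(x, y)) / (n - 1)
    # Largest eigenvalue of [[var_x, cov], [cov, var_y]] in closed form.
    lam = (var_x + var_y) / 2 + math.sqrt(((var_x - var_y) / 2) ** 2 + cov ** 2)
    if cov != 0:
        v1, v2 = cov, lam - var_x  # eigenvector belonging to lam
    elif var_x >= var_y:
        v1, v2 = 1.0, 0.0
    else:
        v1, v2 = 0.0, 1.0
    total = v1 + v2  # assumes positively correlated images, so total > 0
    return v1 / total, v2 / total


def pca_fuse(img1, img2):
    """Pixel-wise weighted sum I_f = P1 * I1 + P2 * I2."""
    p1, p2 = pca_weights(img1, img2)
    return [[p1 * a + p2 * b for a, b in zip(r1, r2)] for r1, r2 in zip(img1, img2)]
```

With this choice, the image with the larger variance receives the larger weight; two identical images receive equal weights of 0.5.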


C. Discrete Cosine Transform

The discrete cosine transform (DCT) is an important transform in image processing. Most of the energy of the DCT coefficients is concentrated in the low-frequency region, so the DCT is known for its good energy compaction, while edges contribute high-frequency coefficients [15].
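Energy compaction can be checked numerically on a small example. The sketch below computes an orthonormal one-dimensional DCT-II directly from its definition and measures the share of the signal energy held by the first few coefficients. The function names and the sample signal are assumptions made for this illustration; image fusion would use the two-dimensional transform on blocks.

```python
import math


def dct2(signal):
    """Orthonormal 1-D DCT-II computed directly from the definition."""
    n = len(signal)
    coeffs = []
    for k in range(n):
        s = sum(signal[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        coeffs.append(scale * s)
    return coeffs


def low_frequency_energy_fraction(signal, keep):
    """Share of the total energy carried by the first `keep` DCT coefficients."""
    coeffs = dct2(signal)
    total = sum(c * c for c in coeffs)
    return sum(c * c for c in coeffs[:keep]) / total


# A smooth ramp, standing in for a slowly varying row of pixels.
ramp = [10, 11, 12, 13, 14, 15, 16, 17]
share = low_frequency_energy_fraction(ramp, keep=2)  # more than 99 % of the energy
```

A smooth signal keeps nearly all of its energy in the lowest coefficients, whereas a signal with a sharp edge spreads energy into the higher ones.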

Fig. 2. General Biorthogonal wavelet based image fusion scheme


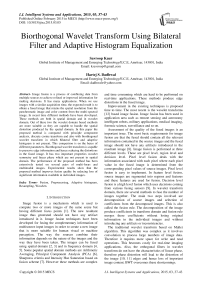

V. Proposed Method

The proposed method of image fusion integrates the biorthogonal wavelet transform with a bilateral filter and adaptive histogram equalization. A step-by-step methodology is used to achieve the objectives of this work.

The proposed algorithm includes:

1. Two partially blurred images of the same scene are acquired in digital form.

2. Each image is divided into three channels: red, green and blue.

3. Fusion is done for each channel separately using BWT-based fusion.

4. The fused red, green and blue channels are combined to form the fused image.

5. A bilateral filter is applied to reduce the fusion artifacts, giving a higher-quality image.

6. Finally, adaptive histogram equalization is applied to produce the final fused image.
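The order of the steps above can be sketched as a small pipeline with pluggable stages. This is only a structural outline under stated assumptions: images are lists of rows of `(r, g, b)` tuples, the per-channel fusion and the post-processing stages are passed in as functions, and the pixel-wise maximum used in the example is a stand-in for BWT-based fusion, not the method of the paper. All names are hypothetical.

```python
def split_channels(image):
    """Step 2: turn rows of (r, g, b) tuples into three single-channel images."""
    return [[[pixel[c] for pixel in row] for row in image] for c in range(3)]


def merge_channels(red, green, blue):
    """Step 4: recombine three single-channel images into rows of (r, g, b) tuples."""
    return [list(zip(r, g, b)) for r, g, b in zip(red, green, blue)]


def fuse_rgb(image1, image2, fuse_channel, post_steps=()):
    """Steps 2 to 6 with the fusion rule and post-processing supplied by the caller."""
    # Step 3: fuse each colour channel separately.
    fused = [fuse_channel(c1, c2)
             for c1, c2 in zip(split_channels(image1), split_channels(image2))]
    result = merge_channels(*fused)
    # Steps 5 and 6: e.g. a bilateral filter followed by adaptive histogram equalization.
    for step in post_steps:
        result = step(result)
    return result


def pixelwise_max(ch1, ch2):
    """Stand-in fusion rule for the example only."""
    return [[max(a, b) for a, b in zip(r1, r2)] for r1, r2 in zip(ch1, ch2)]


left = [[(1, 2, 3), (4, 5, 6)]]
right = [[(3, 2, 1), (6, 5, 4)]]
fused = fuse_rgb(left, right, pixelwise_max)
```

Keeping the fusion rule and the post-processing stages as parameters makes it easy to compare variants, for example the same fusion with and without the two post-processing stages.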

The existing methods (PCA, DCT and BWT) and the new proposed method were designed and implemented in MATLAB using the Image Processing Toolbox. Table 1 lists the images used for the experiments in this work. The images are in .jpg format; other formats such as .jpeg, .png and .gif can also be used. The size of each image is given in the table. The two images of each pair are almost the same size.

Table 1. Input image

| Image name | Format | Size in KB (left) | Size in KB (right) |
|---|---|---|---|
| 1 | .jpg | 19.5 | 19.3 |
| 2 | .jpg | 23.7 | 23.1 |
| 3 | .jpg | 25.2 | 23.8 |
| 4 | .jpg | 19.2 | 21.0 |
| 5 | .jpg | 24.4 | 25.4 |
| 6 | .jpg | 25.4 | 25.8 |
| 7 | .jpg | 57.4 | 56.5 |
| 8 | .jpg | 67.6 | 74.0 |
| 9 | .jpg | 26.0 | 27.8 |
| 10 | .jpg | 99.1 | 109.0 |

For the experimental analysis, a pair of input images is taken. Figure 3 is the left-blurred input image and Figure 4 is the right-blurred input image. The overall objective is to combine relevant information from multiple images into a single image that is more informative and more suitable for both visual perception and further computer processing.

Fig. 3. First Input Image

Fig. 4. Second Input Image

Fig. 5. Output Image existing technique

Figure 5 shows the output image of the fusion step alone, as in the existing technique. The output image preserves the brightness of the original blurred images being fused. Figure 6 is obtained by applying the bilateral filter, and Figure 7 is obtained after adding adaptive histogram equalization to that result. Figure 7 is the image produced by the proposed method; it is clearer and closer to the original image. The previous methods do not use a bilateral filter. The bilateral filter is an edge-preserving, non-linear, noise-reducing smoothing filter, and it improves the performance of the proposed method.

Fig. 6. Bilateral output

Fig. 7. Proposed Image


VII. Performance Analysis

This section presents a comparative analysis of the previous methods and the proposed image fusion method. Several image fusion metrics are considered to show that the proposed algorithm performs better than the available algorithms.
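The paper does not give formulas for its metrics. The sketch below uses the definitions commonly found in the image quality literature for mean square error (MSE), peak signal-to-noise ratio (PSNR), normalized cross correlation (NCC) and structural content (SC). These definitions, the 8-bit peak value of 255 and the function names are assumptions; the paper's own implementation may differ, so the values it reports need not be reproducible with this code.

```python
import math


def _flatten(image):
    return [p for row in image for p in row]


def mse(reference, fused):
    """Mean of the squared pixel differences."""
    r, f = _flatten(reference), _flatten(fused)
    return sum((a - b) ** 2 for a, b in zip(r, f)) / len(r)


def psnr(reference, fused, peak=255.0):
    """10 * log10(peak^2 / MSE); infinite for identical images."""
    error = mse(reference, fused)
    return math.inf if error == 0 else 10 * math.log10(peak ** 2 / error)


def ncc(reference, fused):
    """Sum of pixel products divided by the reference energy; 1.0 for identical images."""
    r, f = _flatten(reference), _flatten(fused)
    return sum(a * b for a, b in zip(r, f)) / sum(a * a for a in r)


def structural_content(reference, fused):
    """Reference energy divided by fused-image energy; 1.0 for identical images."""
    r, f = _flatten(reference), _flatten(fused)
    return sum(a * a for a in r) / sum(b * b for b in f)


reference = [[100, 110], [120, 130]]
candidate = [[101, 109], [121, 129]]
scores = (mse(reference, candidate), psnr(reference, candidate),
          ncc(reference, candidate), structural_content(reference, candidate))
```

Under these definitions, a lower MSE and a higher PSNR indicate a closer match to the reference, while NCC and SC are closest to 1 when the two images agree.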

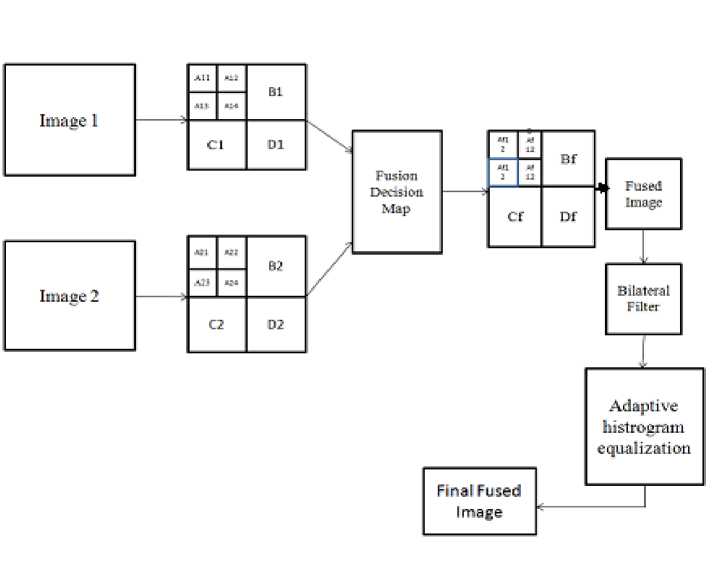

Table 2 and Figure 8 give the comparative analysis of the peak signal-to-noise ratio (PSNR) for the existing method and the proposed method. The proposed method gives a higher PSNR for all ten image pairs.

Table 2. Peak signal to noise ratio

| Image no. | Existing method | Proposed method |
|---|---|---|
| 1 | 51.5596 | 69.2834 |
| 2 | 47.1594 | 80.7360 |
| 3 | 45.5491 | 83.1163 |
| 4 | 49.2258 | 83.2246 |
| 5 | 45.9568 | 80.2615 |
| 6 | 50.6048 | 69.6400 |
| 7 | 53.6933 | 85.0611 |
| 8 | 49.6993 | 81.4048 |
| 9 | 48.0665 | 81.3426 |
| 10 | 45.7702 | 80.0989 |

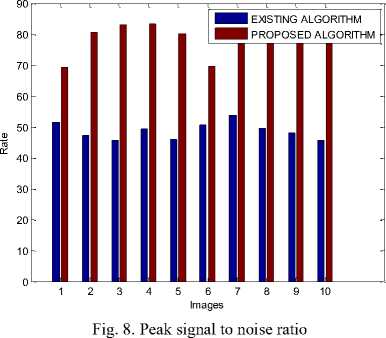

Table 3 and Figure 9 give the comparative analysis of the mean square error (MSE) for the existing method and the proposed method. The proposed method gives a lower MSE for all ten image pairs.

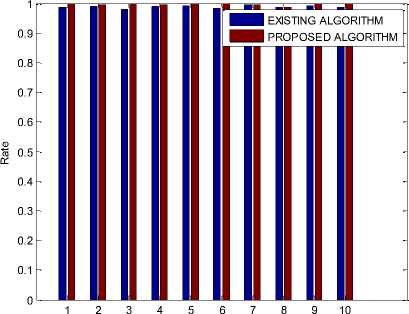

Table 4 and Figure 10 give the comparative analysis of the normalized cross correlation (NCC) for the existing method and the proposed method. The proposed method gives a higher NCC for all ten image pairs.

Table 3. Mean Square Error

| Image no. | Existing method | Proposed method |
|---|---|---|
| 1 | 171 | 22 |
| 2 | 285 | 5 |
| 3 | 343 | 4 |
| 4 | 224 | 4 |
| 5 | 327 | 6 |
| 6 | 191 | 21 |
| 7 | 134 | 3 |
| 8 | 212 | 5 |
| 9 | 256 | 5 |
| 10 | 334 | 6 |

Fig. 10. Normalized Cross Correlation

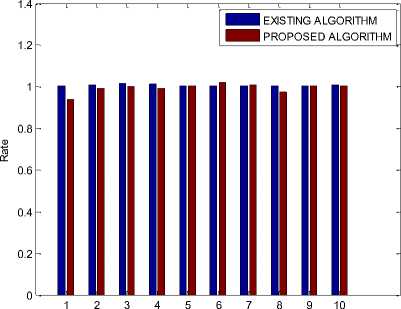

Table 5 and Figure 11 show the comparative analysis of the structural content (SC) for the existing method and the proposed method. The results indicate that the proposed method performs better than the available techniques.

Table 4. Normalized cross correlation

| Image no. | Existing method | Proposed method |
|---|---|---|
| 1 | 0.9872 | 0.9998 |
| 2 | 0.9902 | 0.9958 |
| 3 | 0.9814 | 0.9998 |
| 4 | 0.9908 | 0.9960 |
| 5 | 0.9937 | 0.9982 |
| 6 | 0.9848 | 0.9983 |
| 7 | 0.9948 | 0.9962 |
| 8 | 0.9875 | 0.9878 |
| 9 | 0.9935 | 0.9975 |
| 10 | 0.9878 | 0.9976 |

Table 5. Structural content

| Image no. | Existing method | Proposed method |
|---|---|---|
| 1 | 1.0070 | 0.9411 |
| 2 | 1.0078 | 0.9915 |
| 3 | 1.0161 | 0.9993 |
| 4 | 1.0123 | 0.9920 |
| 5 | 1.0035 | 1.0034 |
| 6 | 1.0054 | 1.0233 |
| 7 | 1.0042 | 1.0074 |
| 8 | 1.0067 | 0.9759 |
| 9 | 1.0057 | 1.0048 |
| 10 | 1.0075 | 1.0044 |

Fig. 11. Structure Content

The usefulness of the proposed image fusion method is tested on multifocus and multimodal images. For this, we have presented two pairs of images and their fusion results. Different sets of images with varying noise are fused using the absolute maximum selection fusion rule. On the basis of these experiments and of the comparison with existing traditional image fusion methods, we observe that the proposed method performs better in most cases. The performance is measured using both qualitative and quantitative criteria. According to the fusion results, the biorthogonal wavelet transform with adaptive histogram equalization produces better results and is more widely applicable, because it retains information from the individual images, such as edges, lines, curves and boundaries, more faithfully in the fused image. This is due to the linear phase and symmetry properties of the filters used in the biorthogonal wavelet transform. The experimental results also show that multiscale wavelet decomposition is effective for image fusion, and therefore the image fusion method presented in this paper is effective for both multimodal and multifocus image fusion.

References

1. G. Liu, Z. Jing, S. Sun, "Image fusion based on an expectation maximization algorithm", Shanghai Jiaotong University, Information and Electrical Engineering, Shanghai, 2003.

2. P. Shah, S. N. Merchant and U. B. Desai, "An efficient spatial domain fusion scheme for multifocus images using statistical properties of neighbourhood", Multimedia and Expo (ICME), pp. 1-6, 2011.

3. H. Chen, "A multiresolution Image Fusion based on Principle Component Analysis", Fourth International Conference on Image and Graphics, pp. 737-741, 2007.

4. R. Singh, R. Srivastava, O. Prakash and A. Khare, "DTCWT based multimodal medical image fusion", in Proc. of International Conference on Signal, Image and Video Processing, IIT Patna, pp. 403-407, January 2012.

5. H. H. Wang, "A new multiwavelet based approach to Image Fusion", Journal of Mathematical Imaging and Vision, Vol. 21, pp. 177-192.

6. G. Piella, "A general framework for multiresolution image fusion: from pixels to regions", Information Fusion, Vol. 4, No. 4, pp. 259-280, 2003.

7. V. P. S. Naidu and J. R. Raol, "Pixel-level image fusion using wavelets and Principal Component Analysis", Defence Science Journal, Vol. 58, No. 3, pp. 338-352, 2008.

8. S. Daneshvar, H. Ghassemian, "MRI and PET Image Fusion by combining IHS and Retina-Inspired models", Information Fusion, Vol. 11, No. 2, pp. 114-123, 2010.

9. Anjali Maviya, S. G. Bhirud, "Objective criterion for performance evaluation of Image Fusion Techniques", International Journal of Computer Applications (0975 - 8887), Vol. 1, No. 25, 2010.

10. J. J. Lewis, R. J. O'Callaghan, S. G. Nikolov, D. R. Bull and C. N. Canagarajah, "Region-based image fusion using Complex Wavelets", 7th International Conference on Information Fusion (Fusion 2004), International Society of Information Fusion (ISIF), Stockholm, pp. 555-562, 2004.

11. W. Sweldens, "The Lifting Scheme: A Construction of Second Generation Wavelets", SIAM J. Math. Anal., 1997.

12. W. Sweldens, "The Lifting Scheme: A Custom Design construction of Biorthogonal Wavelets", Appl. Computer. Harmon. Anal., Vol. 3, 1996.

13. Ujwal Patil, Uma Mudengudi, "Image Fusion using hierarchal PCA", International Conference on Image Information Processing (ICIIP 2011), 2011.

14. Om Prakash, Richa Srivastava, Ashish Khare, "Biorthogonal wavelet transform based image fusion using absolute maximum fusion rule", Proceedings of IEEE Conference on Information and Communication Technologies (ICT 2013), 2013.

15. V. P. S. Naidu, "Discrete Cosine Transform based Image Fusion Techniques", Journal of Communication, Navigation and Signal Processing, Vol. 1, No. 1, pp. 35-45, January 2012.