Color Local Binary Patterns for Image Indexing and Retrieval

Authors: K. N. Prakash, K. Satya Prasad

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Issue: No. 9, Vol. 6, 2014.

Free access

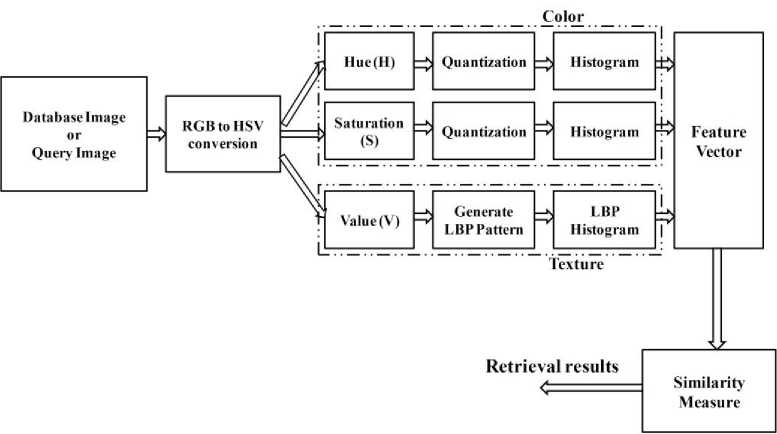

A new algorithm for content-based image retrieval (CBIR) is presented in this paper. First, the RGB (red, green, and blue) image is converted into an HSV (hue, saturation, and value) image. The H and S images are then quantized into Q levels and used for histogram calculation, while the local region of the V (value) image is represented by local binary patterns (LBP), which are evaluated from the local differences between the center pixel and its neighbors. LBP extracts information based on the distribution of edges in an image. Two experiments have been carried out to evaluate the algorithm, one on the Corel 1000 database (DB1) and one on the MIT VisTex database (DB2). The results show a significant improvement in the evaluation measures compared with LBP applied to the RGB spaces separately and with other existing techniques.

Keywords: Color, Texture, Feature Extraction, Local Binary Patterns, Image Retrieval

Short URL: https://sciup.org/15010606

IDR: 15010606

Text of the research article: Color Local Binary Patterns for Image Indexing and Retrieval

Published Online August 2014 in MECS

I. Introduction

A. Motivation

The need for an efficient system that helps users search and organize image collections in a large-scale database is crucial. However, developing such systems is quite difficult, because an image is an ill-defined entity consisting of complex and highly variable structures. Hence there is a need for an automatic search technique, called content-based image retrieval (CBIR). Feature extraction is a very important step, and the effectiveness of a CBIR system typically depends on the method used to extract features from raw images. CBIR uses the visual contents of an image, such as color, texture, shape, faces, and spatial layout, to represent and index the image. The visual features can be further classified as general features (color, texture, and shape) and domain-specific features such as human faces and fingerprints. There is no single best representation of an image for all perceptual subjectivity, because users may take photographs under different conditions (view angle, illumination changes, etc.). Comprehensive literature surveys on CBIR are presented in [1]–[4].

Swain et al. proposed the concept of the color histogram in 1991 and also introduced the histogram intersection distance metric to measure the distance between the histograms of images [5]. Stricker et al. used the first three central moments (mean, standard deviation, and skewness) of each color for image retrieval [6]. Pass et al. introduced the color coherence vector (CCV) [7]. CCV partitions the pixels of each histogram bin into two types: coherent, if a pixel belongs to a large uniformly colored region, and incoherent, if it does not. Huang et al. used a new color feature called the color correlogram [8], which characterizes not only the color distribution of pixels but also the spatial correlation of pairs of colors. Lu et al. proposed a color feature based on vector quantized (VQ) index histograms in the discrete cosine transform (DCT) domain. They computed 12 histograms, four for each color component, from 12 DCT-VQ index sequences [9].

Texture is another salient and indispensable feature for CBIR. Smith et al. used the mean and variance of the wavelet coefficients as texture features for CBIR [10]. Moghaddam et al. proposed the Gabor wavelet correlogram (GWC) for CBIR [11, 12]. Ahmadian et al. used the wavelet transform for texture classification [13]. Moghaddam et al. introduced a new algorithm called the wavelet correlogram (WC) [14]. Saadatmand et al. [15, 16] improved the performance of the WC algorithm by optimizing the quantization thresholds using a genetic algorithm (GA). Birgale et al. [17] and Subrahmanyam et al. [18] combined color (color histogram) and texture (wavelet transform) features for CBIR. Subrahmanyam et al. proposed a correlogram algorithm for image retrieval using wavelets and rotated wavelets (WC+RWC) [19].


B. Related Work

Ojala et al. proposed local binary patterns (LBP) for texture description [20], and these LBPs were later made rotation invariant for texture classification [21]. Pietikainen et al. proposed rotation-invariant texture classification using feature distributions [22]. Ahonen et al. [23] and Zhao et al. [24] used the LBP operator for facial expression analysis and recognition. Heikkila et al. proposed background modeling and detection using LBP [25]. Huang et al. proposed the extended LBP for shape localization [26]. Heikkila et al. used LBP for interest region description [27]. Li et al. used a combination of Gabor filters and LBP for texture segmentation [28]. Zhang et al. proposed the local derivative pattern for face recognition [29]. They considered LBP to be a nondirectional first-order local pattern, which is the binary result of the first-order derivative in images.

Abdullah et al. [30] proposed fixed partitioning and salient points schemes for dividing an image into patches, in combination with low-level MPEG-7 visual descriptors to represent the patches with particular patterns. A clustering technique is applied to construct a compact representation by grouping similar patterns into a cluster codebook. The codebook is then used to encode the patterns into visual keywords. To obtain high-level information about the relational context of an image, a correlogram is constructed from the spatial relations between visual keyword indices in the image. For classifying images, the k-nearest neighbors (k-NN) algorithm and a support vector machine (SVM) are used and compared.

C. H. Lin et al. [31] combined a color feature, the k-means color histogram (CHKM), with two texture features, the motif co-occurrence matrix (MCM) and the difference between the pixels of a scan pattern (DBPSP). MCM is the conventional pattern co-occurrence matrix, which calculates the probability of occurrence of the same motif between each motif and its adjacent ones in each motif-transformed image; this probability is considered an attribute of the image. Following the sequence of motifs of the scan patterns, DBPSP calculates the difference between pixels and converts it into a probability of occurrence over the entire image. For the CHKM feature, each pixel color in an image is replaced by the most similar color in a common color palette, so that all pixels in the image are classified into k clusters. Vadivel et al. [32] proposed the integrated color and intensity co-occurrence matrix (ICICM) for image retrieval applications. They first analyzed the properties of the HSV color space and then suggested suitable weight functions for estimating the relative contribution of the color and gray levels of an image pixel. The suggested weight values for a pixel and its neighbor are used to construct the ICICM.


C. Main Contribution

To improve retrieval accuracy, in this paper we combine color features (color histograms on the H and S spaces) and texture features (local binary patterns on the V space) computed on the HSV image. Two experiments have been carried out, on the Corel database and the MIT VisTex database, to evaluate the algorithm. The results show a significant improvement in the evaluation measures compared with LBP applied to the RGB spaces separately and with other existing techniques.

The paper is organized as follows. Section I gives a brief review of image retrieval and related work. Section II presents a concise review of local binary patterns. Section III presents the proposed system framework and the similarity measure. Experimental results and discussions are given in Section IV. Conclusions based on this work are drawn in Section V.


II. Local Binary Patterns

The LBP was originally proposed by Ojala et al. [20] as a grayscale invariant measure for characterizing local structure in a 3×3 pixel neighborhood. Later, a more general formulation was proposed that further allowed for multi-resolution analysis and rotation invariance [21].

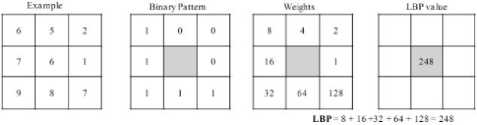

For a given center pixel in an image, an LBP value is computed by comparing its gray value with those of its neighbors, as shown in Fig. 1:

$$LBP_{P,R} = \sum_{i=0}^{P-1} 2^{i} \times f(g_i - g_c) \tag{1}$$

$$f(x) = \begin{cases} 1, & x \ge 0 \\ 0, & \text{else} \end{cases} \tag{2}$$

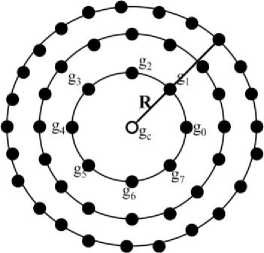

where $g_c$ is the gray value of the center pixel, $g_i$ is the gray value of its neighbors, $P$ is the number of neighbors, and $R$ is the radius of the neighborhood. Fig. 2 shows examples of circular neighbor sets for different configurations of $(P, R)$.
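As an illustration of the LBP computation for the 3×3 case ($P = 8$, $R = 1$), a minimal sketch in plain Python follows. The neighbor ordering, the function names, and the sample patch are assumptions made for this example and are not taken from the paper; the threshold follows the usual convention of Ojala et al., in which a difference of zero or more maps to 1.

```python
# Offsets (row, col) of the 8 neighbours in the order i = 0..7.
# The starting point and direction are an assumption of this sketch.
NEIGHBOUR_OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1),
                     (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_code(image, row, col):
    """LBP code (P = 8, R = 1) of the pixel at (row, col).

    `image` is a list of rows of gray values; (row, col) must not lie
    on the image border.
    """
    center = image[row][col]
    code = 0
    for i, (dr, dc) in enumerate(NEIGHBOUR_OFFSETS):
        neighbour = image[row + dr][col + dc]
        if neighbour - center >= 0:   # f(g_i - g_c) = 1
            code += 2 ** i            # weight 2^i
    return code


def lbp_map(image):
    """LBP codes of all interior pixels (border pixels are skipped)."""
    rows, cols = len(image), len(image[0])
    return [[lbp_code(image, r, c) for c in range(1, cols - 1)]
            for r in range(1, rows - 1)]


# Hypothetical 3x3 patch with center value 6.
patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
print(lbp_code(patch, 1, 1))  # 248
```

With a different starting neighbor or direction the numeric code changes, but the set of distinguishable patterns stays the same.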

Fig. 1. LBP calculation for 3×3 pattern

Fig. 2. Circular neighborhood sets for different (P,R)

Fig. 3. Illustration of LBP.

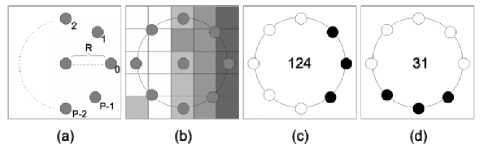

(a) The LBP filter is defined by two parameters; the circle radius R and the number of samples P on the circle.

(b) Local structure is measured w.r.t. a given pixel by placing the center of the circle in the position of that pixel.

(c) Samples on the circle are binarized by thresholding with the intensity in the center pixel as threshold value. Black is zero and white is one. The example image shown in (b) has an LBP code of 124.

(d) Rotating the example image in (b) 90° clockwise reduces the LBP code to 31, which is the smallest possible code for this binary pattern. This principle is used to achieve rotation invariance.
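The rotation-invariance principle described in (d) can be sketched as taking the minimum of a code over all circular bit shifts. The sketch below assumes $P = 8$ and uses hypothetical function names; for $P = 8$ a 90° rotation of the image corresponds to a shift by two bit positions.

```python
def rotate_right(code, bits=8):
    """Circularly shift a `bits`-bit code one position to the right."""
    mask = (1 << bits) - 1
    return ((code >> 1) | ((code & 1) << (bits - 1))) & mask


def rotation_invariant_code(code, bits=8):
    """Smallest value obtainable by circularly rotating the code."""
    best = code
    current = code
    for _ in range(bits - 1):
        current = rotate_right(current, bits)
        best = min(best, current)
    return best


print(rotation_invariant_code(124))  # 31, as in the example of Fig. 3
```

Here 124 is `01111100` in binary, and two right shifts give `00011111`, which is 31.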

The LBP measure the local structure by assigning unique identifiers, the binary number, to various microstructures in the image. Thus, LBP capture many structures in one unified framework. In the example in

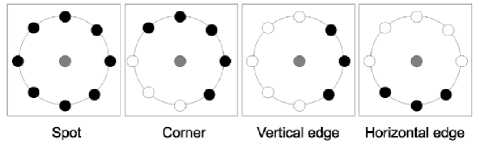

Fig. 3(b), the local structure is a vertical edge with a leftward intensity gradient. Other microstructures are assigned different LBP codes, e.g., corners and spots, as illustrated in Fig. 4. By varying the radius R and the number of samples P, the structures are measured at different scales, and LBP allows for measuring large scale structures without smoothing effects, as is, e.g., the case for Gaussian-based filters.

Fig. 4. Various microstructures measured by LBP. The gray circle indicates the center pixel. Black and white circles are binarized samples; black is zero and white is one.

After identifying the LBP pattern of each pixel $(j, k)$, the whole image is represented by building a histogram:

$$H_{LBP}(i) = \sum_{j=1}^{N_1} \sum_{k=1}^{N_2} f\big(LBP(j, k),\, i\big); \quad i \in [0,\, 2^{P} - 1] \tag{3}$$

$$f(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{else} \end{cases} \tag{4}$$

where the size of the input image is $N_1 \times N_2$.

III. Proposed System Framework

In this paper, we propose a new technique that uses color and texture features for image retrieval. First, the RGB (red, green, and blue) image is converted into an HSV (hue, saturation, and value) image. The H and S images are then quantized into Q levels and used for histogram calculation, and the local region of the V (value) image is represented by local binary patterns (LBP), which are evaluated from the local differences between the center pixel and its neighbors. Finally, the feature vector is constructed by concatenating the features collected on the HSV spaces, and it is used for image retrieval. The flowchart of the proposed system is shown in Fig. 5, and the algorithm is given below.

Algorithm:

Input: Image; Output: Retrieval results.

1. Load the input image.
2. Convert the RGB image into an HSV image.
3. Perform the quantization on the H and S spaces.
4. Calculate the histograms.
5. Calculate the LBP features on the V space.
6. Form the feature vector by concatenating the HSV features.
7. Calculate the best matches using (5).
8. Retrieve the top matches.

A. Similarity Measurement

In the presented work, the $d_1$ similarity distance metric is used, as shown below:

$$D(Q, I_1) = \sum_{i=1}^{Lg} \left| \frac{f_{I_1,i} - f_{Q,i}}{1 + f_{I_1,i} + f_{Q,i}} \right| \tag{5}$$

where $Q$ is the query image, $Lg$ is the feature vector length, $I_1$ is an image in the database, $f_{I_1,i}$ is the $i$th feature of image $I_1$ in the database, and $f_{Q,i}$ is the $i$th feature of the query image $Q$.

Fig. 5: Flowchart of proposed system
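Steps 2 to 6 of the algorithm can be sketched as follows. This is only an illustration under stated assumptions: the image is a list of rows of 8-bit `(r, g, b)` tuples, `q_levels = 8` is a hypothetical choice for Q (the paper does not state its value here), the LBP neighbor ordering is arbitrary, histograms are raw counts, and all function names are made up for this example.

```python
import colorsys


def quantized_histogram(values, levels):
    """Histogram of values in [0, 1] after quantization into `levels` bins."""
    hist = [0] * levels
    for v in values:
        # A value of exactly 1.0 is assigned to the last bin.
        hist[min(int(v * levels), levels - 1)] += 1
    return hist


def lbp_histogram(v_plane):
    """256-bin histogram of LBP codes (P = 8, R = 1) over interior pixels."""
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1),
               (0, -1), (1, -1), (1, 0), (1, 1)]  # assumed ordering
    hist = [0] * 256
    rows, cols = len(v_plane), len(v_plane[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            code = sum(2 ** i for i, (dr, dc) in enumerate(offsets)
                       if v_plane[r + dr][c + dc] >= v_plane[r][c])
            hist[code] += 1
    return hist


def clbp_features(rgb_image, q_levels=8):
    """Concatenate the H histogram, the S histogram and the LBP histogram of V."""
    hsv = [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row]
           for row in rgb_image]
    h_values = [px[0] for row in hsv for px in row]
    s_values = [px[1] for row in hsv for px in row]
    v_plane = [[px[2] for px in row] for row in hsv]
    return (quantized_histogram(h_values, q_levels)
            + quantized_histogram(s_values, q_levels)
            + lbp_histogram(v_plane))


# Hypothetical 4x4 image filled with pure red.
image = [[(255, 0, 0)] * 4 for _ in range(4)]
features = clbp_features(image)
print(len(features))  # 8 + 8 + 256 = 272
```

The resulting vector has 2Q + 256 entries for $P = 8$; a real implementation would typically use an array library rather than nested lists.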


IV. Experimental Results and Discussions

For the work reported in this paper, retrieval tests are conducted on two different databases (Corel 1000, and MIT VisTex) and results are presented separately.


A. Database DB1

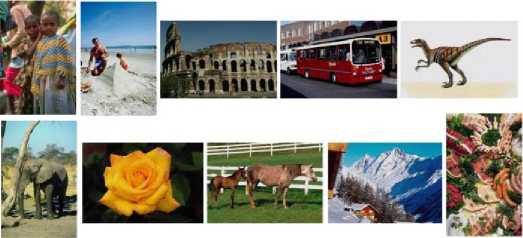

The Corel database [33] contains a large number of images of various contents, ranging from animals and outdoor sports to natural images. These images are pre-classified by domain professionals into different categories of 100 images each.

Some researchers think that the Corel database meets all the requirements for evaluating an image retrieval system because of its large size and heterogeneous content. In this paper, the database DB1 contains 1000 images of 10 different categories (groups $G$): Africans, beaches, buildings, buses, dinosaurs, elephants, flowers, horses, mountains, and food. Each category has 100 images ($N_G = 100$), and the images are of size either 256 × 384 or 384 × 256. Fig. 6 depicts sample images from the Corel 1000 image database (one image from each category).

The performance of the proposed method is measured in terms of average precision and average recall by (6) and (7) respectively.

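$$\text{Precision} = \frac{\text{No. of relevant images retrieved}}{\text{Total no. of images retrieved}} \times 100 \tag{6}$$

$$\text{Recall} = \frac{\text{No. of relevant images retrieved}}{\text{Total no. of relevant images in database}} \times 100 \tag{7}$$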
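A small worked example of the two measures, using the standard definitions of precision and recall, is sketched below. The function name, the category labels, and the counts are hypothetical and serve only to show the arithmetic.

```python
def precision_recall(retrieved_labels, query_label, n_relevant_in_db):
    """Precision and recall (both in %) for one query.

    retrieved_labels: category labels of the retrieved images.
    n_relevant_in_db: number of database images in the query's category.
    """
    relevant_retrieved = sum(1 for label in retrieved_labels
                             if label == query_label)
    precision = 100.0 * relevant_retrieved / len(retrieved_labels)
    recall = 100.0 * relevant_retrieved / n_relevant_in_db
    return precision, recall


# Hypothetical query from the "buses" category: 10 images retrieved,
# 7 of them buses, with 100 bus images in the database.
retrieved = ["buses"] * 7 + ["horses"] * 3
print(precision_recall(retrieved, "buses", 100))  # (70.0, 7.0)
```

Averaging these values over all queries of a category gives per-category figures of the kind reported in the tables.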

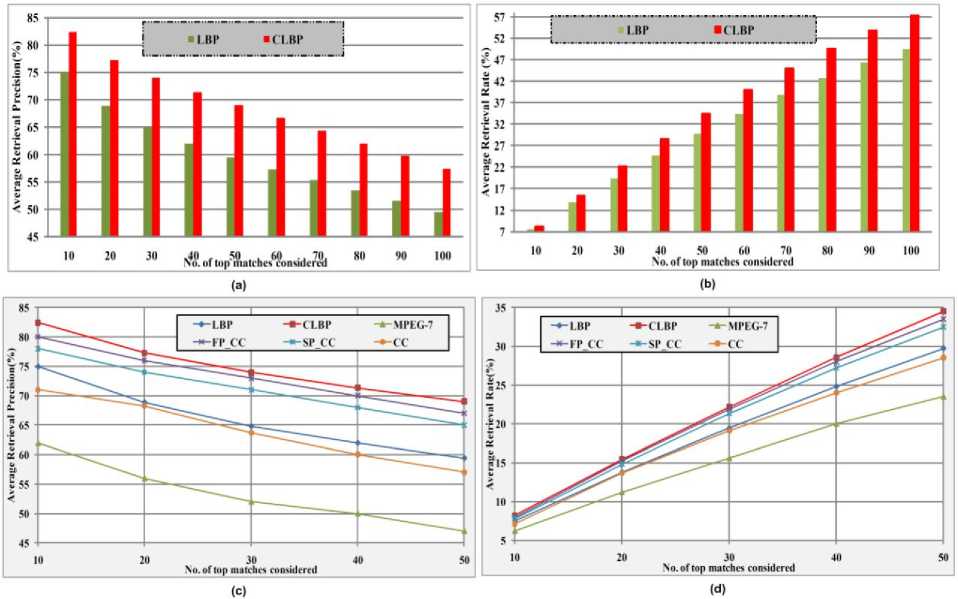

Tables 1 and 2 summarize the retrieval results of the proposed method (CLBP), LBP, and other previously available methods (Jhanwar et al. [34], C. H. Lin et al. [31], and the color correlogram (CC)) in terms of average retrieval precision and recall, respectively. From Table 1, Table 2, and Fig. 7, it is clear that the proposed method performs better than LBP and the other previously available methods in terms of average retrieval precision and recall. Fig. 8 illustrates the query retrieval results of the proposed method on the DB1 database.

Fig. 6. Sample images from Corel 1000 (one image per category)

Table 1. Results of All Techniques In terms of Precision (%) on DB1 Database

Table 2. Results of All Techniques In terms of Recall on DB1 Database

| Category | Recall (n=100): CC | Recall (n=100): LBP | Recall (n=100): CLBP |
|---|---|---|---|
| Africans | 46.29 | 39.18 | 53.75 |
| Beaches | 25.29 | 38.19 | 37.28 |
| Buildings | 35.01 | 34.46 | 47.95 |
| Buses | 60.97 | 72.72 | 76.81 |
| Dinosaurs | 89.59 | 91.08 | 95.23 |
| Elephants | 34.14 | 28.91 | 37.15 |
| Flowers | 77.69 | 72.99 | 71.83 |
| Horses | 36.13 | 44.98 | 66.12 |
| Mountains | 21.02 | 27.51 | 30.47 |

Table 3. Results of Proposed Method using Different Distance Measures on DB2 Database

| Distance | ARR (%) |
|---|---|
| City Block (L1) | 88.36 |
| Euclidean (L2) | 74.43 |
| Canberra | 90.68 |
| d1 | 91.06 |

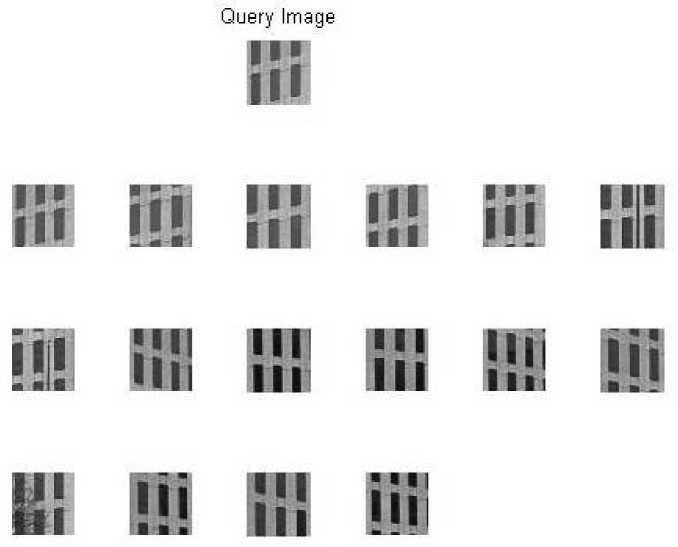

Fig. 8. Query results of the proposed method on DB1 database.

Fig. 9. Comparison of proposed method (CLBP) with LBP in terms of: (a) average retrieval precision, (b) average retrieval rate

Fig. 10. Query results of the proposed method on DB2 database.


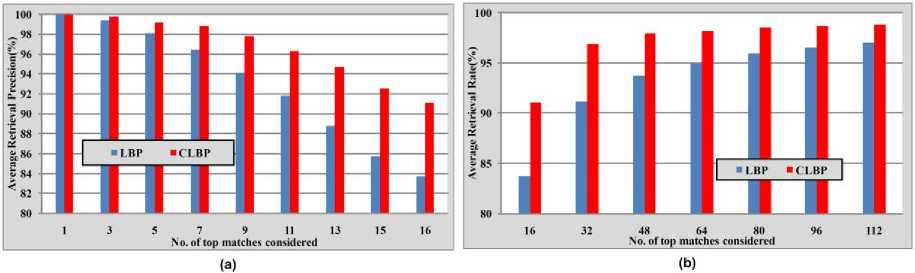

B. Database DB2

The database DB2 used in our experiment consists of 40 different textures [35]. The size of each texture is 512 × 512. Each 512 × 512 image is divided into sixteen 128 × 128 non-overlapping sub-images, thus creating a database of 640 (40 × 16) images. The performance of the proposed method is measured in terms of the average retrieval rate (ARR), which is given by (8).

$$ARR = \frac{1}{|DB|} \sum_{i=1}^{|DB|} R(I_i, n) \Big|_{n = 16} \tag{8}$$
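The construction of DB2 and the ARR computation can be sketched as below. The sketch assumes that an image is a list of rows, that $R(I_i, n)$ is the recall of query $I_i$ among its top $n$ matches, and that each texture class has 16 relevant sub-images; the function names and the sample retrieval lists are hypothetical.

```python
def split_into_tiles(image, tile=128):
    """Split a square image (list of rows) into non-overlapping tiles."""
    size = len(image)
    return [[row[c:c + tile] for row in image[r:r + tile]]
            for r in range(0, size, tile)
            for c in range(0, size, tile)]


def average_retrieval_rate(retrieved_per_query, query_labels, per_class=16):
    """Mean recall (in %) over all queries.

    retrieved_per_query[i] holds the class labels of the images
    retrieved for query i; per_class is the number of relevant images.
    """
    rates = []
    for retrieved, label in zip(retrieved_per_query, query_labels):
        hits = sum(1 for item in retrieved if item == label)
        rates.append(100.0 * hits / per_class)
    return sum(rates) / len(rates)


# A blank 512x512 image yields sixteen 128x128 sub-images.
texture = [[0] * 512 for _ in range(512)]
tiles = split_into_tiles(texture)
print(len(tiles), len(tiles[0]), len(tiles[0][0]))  # 16 128 128

# Two hypothetical queries with n = 16 retrieved images each.
results = [["bark"] * 16, ["brick"] * 8 + ["bark"] * 8]
print(average_retrieval_rate(results, ["bark", "brick"]))  # 75.0
```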

The database DB2 is used to compare the performance of the proposed method (CLBP) with LBP applied to the RGB spaces separately. Fig. 9 compares the two in terms of average retrieval precision and average retrieval rate. From Fig. 9, it is evident that the proposed method outperforms LBP. Fig. 10 illustrates the query retrieval results of the proposed method on the DB2 database.

The results of the proposed method are also compared across different distance measures, as shown in Table 3. From Table 3, it is found that the d1 distance (91.03%) outperforms the L1 distance (88.36%), the L2 distance (74.43%), and the Canberra distance (90.60%).
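For reference, the four distance measures compared here can be sketched in a few lines, together with the ranking of steps 7 and 8 of the algorithm. The d1 form used below, a sum of absolute differences each divided by one plus the sum of the two features, is the commonly used definition and is an assumption as far as this paper's exact formula is concerned; the function names and sample vectors are hypothetical, and features are assumed to be non-negative.

```python
import math


def city_block(fq, fi):
    """L1 distance: sum of absolute differences."""
    return sum(abs(a - b) for a, b in zip(fq, fi))


def euclidean(fq, fi):
    """L2 distance: square root of the sum of squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(fq, fi)))


def canberra(fq, fi):
    """Canberra distance; terms where both features are zero are skipped."""
    return sum(abs(a - b) / (abs(a) + abs(b))
               for a, b in zip(fq, fi) if a or b)


def d1(fq, fi):
    """d1 distance (assumed form, for non-negative features)."""
    return sum(abs((b - a) / (1 + b + a)) for a, b in zip(fq, fi))


def rank(query, database, distance=d1, top_n=3):
    """Indices of the top_n database vectors closest to the query."""
    order = sorted(range(len(database)),
                   key=lambda k: distance(query, database[k]))
    return order[:top_n]


query = [1, 2, 3]
database = [[10, 0, 0], [2, 2, 3], [1, 2, 3]]
print(rank(query, database, top_n=2))  # [2, 1]
```

Unlike L1 and L2, both Canberra and d1 normalize each term by the magnitude of the features, so large histogram bins do not dominate the sum.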


V. Conclusions

A new image indexing and retrieval algorithm is proposed in this paper by combining color histogram features on the H and S spaces with local binary patterns on the V space. Two experiments have been carried out, on the Corel database and the MIT VisTex database, to evaluate the algorithm. The results show a significant improvement in the evaluation measures compared with LBP applied to the RGB spaces separately and with other existing techniques.

References

- Y. Rui and T. S. Huang, Image retrieval: Current techniques, promising directions and open issues, J. Vis. Commun. Image Represent., 10 (1999) 39–62.

- A. W.M. Smeulders, M. Worring, S. Santini, A. Gupta, and R. Jain, Content-based image retrieval at the end of the early years, IEEE Trans. Pattern Anal. Mach. Intell., 22 (12) 1349–1380, 2000.

- M. Kokare, B. N. Chatterji, P. K. Biswas, A survey on current content based image retrieval methods, IETE J. Res., 48 (3&4) 261–271, 2002.

- Ying Liu, Dengsheng Zhang, Guojun Lu, Wei-Ying Ma, A survey of content-based image retrieval with high-level semantics, Elsevier J. Pattern Recognition, 40, 262-282, 2007.

- M. J. Swain and D. H. Ballard, Indexing via color histograms, Proc. 3rd Int. Conf. Computer Vision, Rochester Univ., NY, (1991) 11–32.

- M. Stricker and M. Orengo, Similarity of color images, Proc. SPIE, Storage and Retrieval for Image and Video Databases, (1995) 381–392.

- G. Pass, R. Zabih, and J. Miller, Comparing images using color coherence vectors, Proc. 4th ACM Multimedia Conf., Boston, Massachusetts, US, (1997) 65–73.

- J. Huang, S. R. Kumar, and M. Mitra, Combining supervised learning with color correlograms for content-based image retrieval, Proc. 5th ACM Multimedia Conf., (1997) 325–334.

- Z. M. Lu and H. Burkhardt, Colour image retrieval based on DCT domain vector quantization index histograms, J. Electron. Lett., 41 (17) (2005) 29–30.

- J. R. Smith and S. F. Chang, Automated binary texture feature sets for image retrieval, Proc. IEEE Int. Conf. Acoustics, Speech and Signal Processing, Columbia Univ., New York, (1996) 2239–2242.

- H. A. Moghaddam, T. T. Khajoie, A. H Rouhi and M. Saadatmand T., Wavelet Correlogram: A new approach for image indexing and retrieval, Elsevier J. Pattern Recognition, 38 (2005) 2506-2518.

- H. A. Moghaddam and M. Saadatmand T., Gabor wavelet Correlogram Algorithm for Image Indexing and Retrieval, 18th Int. Conf. Pattern Recognition, K.N. Toosi Univ. of Technol., Tehran, Iran, (2006) 925-928.

- A. Ahmadian, A. Mostafa, An Efficient Texture Classification Algorithm using Gabor wavelet, 25th Annual international conf. of the IEEE EMBS, Cancun, Mexico, (2003) 930-933.

- H. A. Moghaddam, T. T. Khajoie and A. H. Rouhi, A New Algorithm for Image Indexing and Retrieval Using Wavelet Correlogram, Int. Conf. Image Processing, K.N. Toosi Univ. of Technol., Tehran, Iran, 2 (2003) 497-500.

- M. Saadatmand T. and H. A. Moghaddam, Enhanced Wavelet Correlogram Methods for Image Indexing and Retrieval, IEEE Int. Conf. Image Processing, K.N. Toosi Univ. of Technol., Tehran, Iran, (2005) 541-544.

- M. Saadatmand T. and H. A. Moghaddam, A Novel Evolutionary Approach for Optimizing Content Based Image Retrieval, IEEE Trans. Systems, Man, and Cybernetics, 37 (1) (2007) 139-153.

- L. Birgale, M. Kokare, D. Doye, Color and Texture Features for Content Based Image Retrieval, International Conf. Computer Graphics, Image and Visualisation, Washington, DC, USA, (2006) 146–149.

- M. Subrahmanyam, A. B. Gonde and R. P. Maheshwari, Color and Texture Features for Image Indexing and Retrieval, IEEE Int. Advance Computing Conf., Patiala, India, (2009) 1411-1416.

- Subrahmanyam Murala, R. P. Maheshwari, R. Balasubramanian, A Correlogram Algorithm for Image Indexing and Retrieval Using Wavelet and Rotated Wavelet Filters, Int. J. Signal and Imaging Systems Engineering.

- T. Ojala, M. Pietikainen, D. Harwood, A comparative study of texture measures with classification based on feature distributions, Elsevier J. Pattern Recognition, 29 (1): 51-59, 1996.

- T. Ojala, M. Pietikainen, T. Maenpaa, Multiresolution gray-scale and rotation invariant texture classification with local binary patterns, IEEE Trans. Pattern Anal. Mach. Intell., 24 (7): 971-987, 2002.

- M. Pietikainen, T. Ojala, Z. Xu, Rotation-invariant texture classification using feature distributions, Elsevier J. Pattern Recognition, 33 (1): 43-52, 2000.

- T. Ahonen, A. Hadid, M. Pietikainen, Face description with local binary patterns: Applications to face recognition, IEEE Trans. Pattern Anal. Mach. Intell., 28 (12): 2037-2041, 2006.

- G. Zhao, M. Pietikainen, Dynamic texture recognition using local binary patterns with an application to facial expressions, IEEE Trans. Pattern Anal. Mach. Intell., 29 (6): 915-928, 2007.

- M. Heikkila, M. Pietikainen, A texture based method for modeling the background and detecting moving objects, IEEE Trans. Pattern Anal. Mach. Intell., 28 (4): 657-662, 2006.

- X. Huang, S. Z. Li, Y. Wang, Shape localization based on statistical method using extended local binary patterns, Proc. Inter. Conf. Image and Graphics, 184-187, 2004.

- M. Heikkila, M. Pietikainen, C. Schmid, Description of interest regions with local binary patterns, Elsevier J. Pattern Recognition, 42: 425-436, 2009.

- M. Li, R. C. Staunton, Optimum Gabor filter design and local binary patterns for texture segmentation, Elsevier J. Pattern Recognition, 29: 664-672, 2008.

- B. Zhang, Y. Gao, S. Zhao, J. Liu, Local derivative pattern versus local binary pattern: Face recognition with higher-order local pattern descriptor, IEEE Trans. Image Proc., 19 (2): 533-544, 2010.

- A. Abdullah, R. C. Veltkamp and M. A. Wiering, Fixed Partitioning and salient points with MPEG-7 cluster correlogram for image categorization, Pattern Recognition, 43, (2010) 650-662.

- C. H. Lin, R. T. Chen, Y. K. Chan, A smart content-based image retrieval system based on color and texture feature, Image and Vision Computing 27 (2009) 658-665.

- A. Vadivel, Shamik Sural, and A.K. Majumdar, An Integrated Color and Intensity Co-occurrence Matrix, Pattern Recognition Letters 28 (2007) 974–983.

- Corel 1000 and Corel 10000 image database. [Online]. Available: http://wang.ist.psu.edu/docs/related.shtml.

- N. Jhanwar, S. Chaudhuri, G. Seetharaman, and B. Zavidovique, Content based image retrieval using motif co-occurrence matrix, Image and Vision Computing 22, (2004) 1211–1220.

- MIT Vision and Modeling Group, Vision Texture. [Online]. Available: http://vismod.media.mit.edu/pub/.