Controlling Citizen’s Cyber Viewing Using Enhanced Internet Content Filters

Authors: Shafi’í Muhammad ABDULHAMID, Fasilat Folagbayo IBRAHIM

Journal: International Journal of Information Technology and Computer Science(IJITCS) @ijitcs

Published in issue: Vol. 5, No. 12, 2013.

Free access

Information passing through the internet is generally unrestricted and uncontrollable, and a good web content filter acts very much like a sieve. This paper looks at how citizens’ internet viewing can be controlled using content filters to prevent access to illegal sites and malicious content in Nigeria. Primary data were obtained by administering 100 questionnaires. The data were analyzed using the Statistical Package for Social Sciences (SPSS). The results of the study show that 66.4% of the respondents agreed that the internet is being abused and that the abuse can be controlled by the use of content filters. PHP, MySQL and Apache were used to design a content filter program. It was recommended that a lot still needs to be done by public organizations, academic institutions, and government and its agencies, especially the Economic and Financial Crimes Commission (EFCC) in Nigeria, to control internet abuse by the underaged and by criminals.

Keywords: Web Content, Content Filter, Firewalls, Servers, Web 2.0, Hacker

Short URL: https://sciup.org/15012005

IDR: 15012005

Text of the scientific article Controlling Citizen’s Cyber Viewing Using Enhanced Internet Content Filters

Published Online November 2013 in MECS DOI: 10.5815/ijitcs.2013.12.07

I. Introduction

The internet provides cyber surfers with unrestricted information and opportunities to connect and share information across the world. Data passing through cyberspace are generally open and uncontrollable. Internet users can access and share images, audio and videos of violence, pornography, material about prohibited drugs, computer viruses and malware through cyberspace. There are several websites and applications hosting and sharing adult-oriented resources. According to the figures reported in [1], 230 million pornographic web page accesses out of thirty-nine billion are being filtered out by their content filters. The issues become even more complex when the cyberspace atrocities involve anonymity (a state of not being known or identified by name) or publications considered bellicose or a menace to security. In traditional media such as television programs and motion pictures, legislation can lessen the problems. On global and domestic networks, however, a person who is not bound by ethical principles can simply host their resources in a nation with less restrictive bylaws and policies. Consequently, uncoordinated regulations cannot function effectively at the national level. To protect children, and to keep adults from retrieving prohibited materials from cyberspace while at work in the office, content grading schemes and blocking applications have been designed by software houses and companies [2].

It appears that neither those who argue for strict filtering nor those who support uncontrolled and complete liberty of access to cyber content are entirely correct. Without doubt, access to cyberspace should be moderated through filtering and regulation. However, cyber content filters should be used sensibly, so that young people have an opportunity to experience the information age while being shielded from cyber content that is not intended for children, for example material depicting violence, explicit sex or drugs [3].

Putting this notion into practice is not as easy as it might appear at first glance. The censorship of cyber content has not lost its relevance, and the reasons for it persist. The supporters of cyber filters claim that citizens deserve the best possible opportunities and, at the same time, should be protected from viewing illegal content. Yet the societal implications and the effectiveness of filtering and internet blocking programs have become the issues of the day. With the rapid growth of the internet, it becomes more and more difficult to determine which internet sites should legitimately be blocked.

A Web filter is a program that can screen an incoming Web page to determine whether some or all of it should not be displayed to the user. The filter checks the origin or content of a Web page against a set of rules provided by the company or person who has installed the Web filter [4]. With the advent of Web 2.0 technologies, websites are now mash-ups of content aggregated from many other sites. This adds complexity to filtering websites based on domain names alone, and it also opens up new avenues of attack for hackers and virus writers, who are becoming increasingly successful at compromising syndicated feeds. If just one data feed is compromised, all the websites that pull in that feed will deliver malicious code to their trusting users. An effective content filtering solution judges incoming web data based on its content and not on its source alone. Malicious content that is smuggled into trusted sites will then still be detected and filtered out, thereby protecting the internal network.
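The rule check described above, which looks at a page's origin and also at its content, can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the domain `blocked.example`, the term `malicious-payload`, and the function name `should_block` are hypothetical and do not come from the system developed in this paper.

```python
from urllib.parse import urlparse

# Hypothetical rule set; a real filter would load these from its administrator.
BLOCKED_DOMAINS = {"blocked.example"}
BLOCKED_TERMS = {"malicious-payload"}


def should_block(url: str, page_text: str) -> bool:
    """Return True if the page fails the origin rule or the content rule."""
    host = (urlparse(url).hostname or "").lower()

    # Origin rule: the host is a blocked domain or one of its subdomains.
    for domain in BLOCKED_DOMAINS:
        if host == domain or host.endswith("." + domain):
            return True

    # Content rule: applied even when the origin is trusted, so that
    # compromised content pulled into a trusted site is still caught.
    lowered = page_text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)
```

Because the content rule runs regardless of the origin, a trusted site that aggregates a compromised feed is still filtered, which is the point made about Web 2.0 mash-ups.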

If a user needs access to a specific internet site that is currently blacklisted, they can apply to the authorities to get access to the website. The opponents of internet filters predominantly claim that blocking and filtering the content of websites violates human rights; their basic argument is that the use of internet filters is ineffective, unnecessary and discriminatory. This research work seeks to check and control unauthorized and fraudulent sites using content filters.

The aim of this paper is to show how the cyber access of citizens can be controlled using an enhanced internet content filter. Section II discusses related works. Section III explains the different types of content filters. Section IV explains the materials and method used, and Section V presents the conclusions and recommendations.

II. Related Works

Barbara et al. [6] argued that the disruption of traditional societies and the shift to metropolitan life, with its attendant loss of rich cultural values, has eroded adolescents’ ability to handle their newly awakened sexual impulses. These authors noticed gross sexual misconduct among different age groups in our nation, which they attributed to urbanization, modernization, spurious sexual expressions in junk magazines and, of course, pornography and internet dating. The consequences are not far-fetched; they include child pregnancy, abortion, sexually transmitted diseases and a possible increase in the incidence of Acquired Immune Deficiency Syndrome (AIDS) due to unguided sexual escapades.

Across all the tribes represented in Nigeria, forefathers gave young ones an informal, traditional education to prepare them for life. In these traditional education systems, from the North to the South and the East to the West, young men and women were taught to acquire a healthy attitude towards sex as part of their preparation for adult life. Expressions of sexuality were delayed until the youngsters were mature enough to face the challenges of adulthood [7].

Research shows that in Nigeria, over 40% of Internet usage is related to browsing sex sites. The remaining 60% is distributed among searches for information on academics, entertainment, migration, sports, etc. [8]. Continuing that research, [9] reviews the impact of Internet pornography on web users in Nigeria and advocates the use of web filtering programs as a robust measure against unwanted Internet content.

The opponents of internet filters predominantly claim that blocking and filtering the content of websites violates human rights. The basic argument is that the use of internet filters is ineffective, unnecessary and discriminatory; it demeans the status of teachers as well as students, and violates constitutional rights [10]. According to them, when one person or group of people (e.g. a Board of Education, a superintendent, a school director, etc.) decides what other people should think and read, they are exceeding their proper role and probably violating the First Amendment of the U.S. Constitution [11].

According to the research study conducted by Phillips [12], the internet filter Bess (developed by the N2H2 and Surf Control companies) erroneously blocks the vast majority of quite legitimate educational websites simply because its keyword blacklist contains words that are relatively admissible. In another research paper, the regulatory option of internet filtering measures was put into the broader perspective of the legal framework regulating the liability (or exemption from liability) of Internet Service Providers (ISPs) for user-generated content. In addition, the paper suggests proposals on which regulatory decisions can better ensure the respect of freedoms and the protection of rights. The paper introduces several significant cases of blocking online copyright-infringing materials. Copyright-related blocking techniques have been devised, for business reasons, by copyright holders’ associations. It must be recalled, however, that these blocking actions cannot be enforced without the states’ intervention [13].
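The overblocking attributed to keyword blacklists earlier in this section can be illustrated with a small sketch. The blacklist contents and function names below are hypothetical and are not taken from Bess or any other product; the sketch only shows why matching on admissible words tends to catch legitimate pages.

```python
import re

# Hypothetical keyword blacklist, for illustration only.
BLACKLIST = {"sex", "drug"}


def blocks_by_substring(text: str) -> bool:
    """Naive rule: block if any blacklisted word occurs anywhere in the text."""
    lowered = text.lower()
    return any(word in lowered for word in BLACKLIST)


def blocks_by_whole_word(text: str) -> bool:
    """Stricter rule: block only if a blacklisted word occurs as a whole word."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return not BLACKLIST.isdisjoint(tokens)


library_page = "Essex County Public Library opening hours"
school_page = "Sex education curriculum for secondary schools"

# The substring rule blocks the library page because "Essex" contains "sex".
# Whole-word matching avoids that case, yet both rules still block the
# educational page, since the word itself is on the list.
```

Whole-word matching removes one class of false positives, but a keyword list alone still cannot tell an educational page from a prohibited one.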

In another research paper, an interface was developed to help parents, teachers and kids search the web securely and make interaction with the web a pleasurable experience. The algorithm discussed keeps the kid focused not by blocking or filtering websites but by redirecting the kid's interest towards the educational side of that interest. The interface designed using the algorithm does not block any website; instead it redirects the query to the educational part of the kid's interest [20, 21, 22].

III. Types of Content Filters

Content filters can be classified into the following five broad categories:

3.1 Browser based Filters:

A browser-based content filtering solution is the most lightweight way to filter content, because there is no need to install native software or libraries on the home computer. For browsers such as Google Chromium and Firefox, most add-ons/extensions are written in JavaScript and must be reviewed and tested by a review editor team before being released to internet users. The major advantage of the browser-based content filtering solution is that an add-on/extension is green, clean and easy to install and uninstall.

3.2 Client – Side Filters:

Client-side filters are installed directly on computers. This type of filter uses a password, and only those with the password can change the filter's settings. Users can customize client-side filters to meet their specific needs; they are best used in homes or businesses that need to filter only certain computers or sets of computers.

3.3 Content-Limited ISPs:

Content-limited ISPs provide filters created by the Internet Service Provider; these apply not to specific users or computers but to everyone who uses the service. In addition to filtering or blocking certain sites, many content-limited ISPs also monitor e-mail and chat traffic, blocking inappropriate users or sites as needed and preventing any chat or e-mail message from anyone except other users of the ISP.

3.4 Search-Engine Filters:

Search-engine filters help users filter out inappropriate material at the search-engine level, for example on Google or Yahoo. Users can turn on the filter; it does not prevent a user from visiting a website with inappropriate content, but it does not display such sites in search results. It is useful when trying to avoid certain viruses or pornography that may use a misleading description to entice you to visit.

3.5 Server-Side Filters:

Server-side filters affect every user on the network. Businesses or institutions benefit from these filters since they can set one filter for all computers and users. The filter is installed on a central server computer that is connected to the other computers on the same network.


IV. Materials and Method

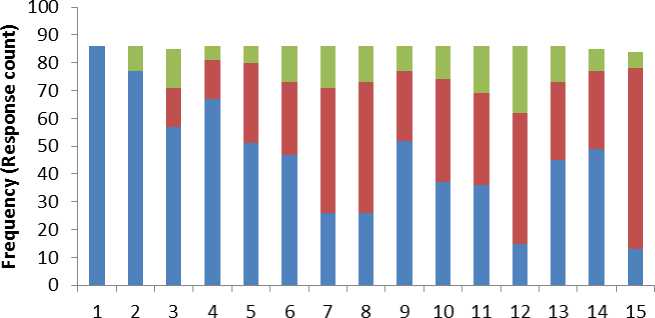

The survey method employed was the use of a questionnaire, which solicits information from respondents selected for the research. The questionnaire, titled “Internet Content Filters and Internet Abuse”, was administered to respondents in different locations in Nigeria. A total of 100 questionnaires were administered over a one-month period, of which only 86 were returned. In order to get a more reliable data set, confidentiality of the personal information provided by the respondents was guaranteed, and some internet terms were explained to assist the respondents in understanding each question. The primary data generated were analyzed statistically using the Statistical Package for Social Sciences (SPSS) and Microsoft Excel. Fig. 1 below shows the summary of responses of the raw data obtained from the questionnaire.

Fig. 1: A component bar chart illustrating a summary of responses (Questions 1 – 15 as indicated in Table 1). Horizontal axis: Questions; legend: YES, NO, SOMETIMES.

4.1 Data Analysis

The results of the Chi-Square (χ²) analyses are presented in this section in order to provide a richer understanding of the target audience’s perception of this study. Table 1 summarizes the frequencies and corresponding percentages for the general public's perceptions. It reveals that 100.0% of the respondents know what the internet is, but only 89.5% of these respondents use the internet, and about 10.5% of the total respondents sometimes use the internet.

Table 1: Summary of the frequencies and corresponding percentages of respondents’ perceptions of using content filter

| Question | Frequency: Yes | Frequency: No | Frequency: Sometimes | Yes (%) | No (%) | Sometimes (%) | Mean | Standard deviation |
|---|---|---|---|---|---|---|---|---|
| 1 | 86 | 0 | 0 | 100 | 0 | 0 | 28.6667 | 49.65212 |
| 2 | 77 | 0 | 9 | 89.5 | 0 | 10.5 | 28.6667 | 42.09909 |
| 3 | 57 | 14 | 14 | 66.4 | 16.3 | 16.3 | 28.3333 | 24.82606 |
| 4 | 67 | 14 | 5 | 77.9 | 16.3 | 5.8 | 28.6667 | 33.50124 |
| 5 | 51 | 29 | 6 | 59.3 | 33.7 | 7.0 | 28.6667 | 22.50185 |
| 6 | 47 | 26 | 13 | 54.7 | 30.2 | 15.1 | 28.6667 | 17.15615 |
| 7 | 26 | 45 | 15 | 30.2 | 52.3 | 17.4 | 28.6667 | 15.17674 |
| 8 | 26 | 47 | 13 | 30.2 | 54.7 | 15.1 | 28.6667 | 17.15615 |
| 9 | 52 | 25 | 9 | 60.5 | 29.1 | 10.5 | 28.6667 | 21.73323 |
| 10 | 37 | 37 | 12 | 43.0 | 43 | 14.0 | 28.6667 | 14.43376 |
| 11 | 36 | 33 | 17 | 41.8 | 38.4 | 19.8 | 28.6667 | 10.21437 |
| 12 | 15 | 47 | 24 | 17.4 | 54.7 | 27.9 | 28.6667 | 16.50253 |
| 13 | 45 | 28 | 13 | 52.3 | 32.6 | 15.1 | 28.6667 | 16.01041 |
| 14 | 49 | 28 | 8 | 57.1 | 32.6 | 9.3 | 28.3333 | 20.50203 |
| 15 | 13 | 65 | 6 | 15.4 | 75.6 | 7.0 | 28.0000 | 32.23352 |

Table 1 also shows that about 66.4% of the respondents considered in this study agree that the internet is abused, while those of the opinion that the internet is not abused, or is sometimes abused, have the same response count of 14, representing 16.3% of the total respondents each. Over 65% believe that the internet is abused and that this abuse can be controlled. Respondents tend to be a bit more neutral on whether a specific age range abuses the internet most and on whether the freedom of information law and the cybercrime bill in Nigeria have a connection with internet viewing control. Also, over 50% agree that the benefits of using web filters outweigh the risks, thereby increasing the chances of controlling the abuse of the internet, while indicating strongly that the EFCC has not done enough in combating internet abuse.
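The derived columns of Table 1 appear to be computed from the three response counts of each question: the percentages are shares of the total, and the mean and standard deviation are taken over the three counts themselves. The Python sketch below reproduces these values for one question; it is not the SPSS procedure used in the study. The chi-square statistic at the end assumes a null hypothesis of equally likely responses, which is an assumption made here for illustration because the paper does not state the null hypothesis it tested. The function name `summarize` is hypothetical.

```python
from statistics import mean, stdev


def summarize(yes: int, no: int, sometimes: int) -> dict:
    """Summary statistics for one questionnaire item (three response counts)."""
    counts = [yes, no, sometimes]
    total = sum(counts)

    # Share of each response among all returned questionnaires, in percent.
    percentages = [round(100 * c / total, 1) for c in counts]

    # Goodness-of-fit statistic against equal expected counts.
    # ASSUMPTION: uniform null hypothesis, not stated in the paper.
    expected = total / len(counts)
    chi_square = sum((c - expected) ** 2 / expected for c in counts)

    return {
        "percentages": percentages,
        "mean": mean(counts),    # mean of the three counts
        "stdev": stdev(counts),  # sample standard deviation of the counts
        "chi_square": chi_square,
    }


# Question 2 of Table 1: 77 "yes", 0 "no", 9 "sometimes".
q2 = summarize(77, 0, 9)
```

For Question 2 this gives the percentages, mean and standard deviation listed in Table 1 up to rounding, which supports the reading that the last two columns summarize the spread of the three counts rather than of individual answers.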

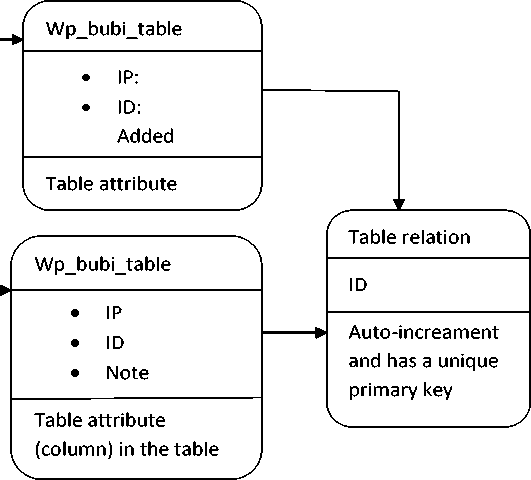

The semantics of the content filter was designed using Unified Modelling Language (UML) specifically the class diagram which is a type of static structure diagram that describes the structure of a system by showing the system’s classes, their attributes, operations or methods and the relationships among the classes. The class diagram shown in Fig. 2 illustrates the server acting as the interrelation for the browser and the database check. While Fig. 3 and Fig. 4 shows the program interfaces for testing IP address banned by the Content Filter and for IP address is been removed by the Content Filter from the Database.

Fig. 2: Proposed System Class Diagram. Labels recoverable from the diagram: WampServer; list of databases in the local server (Word); Databasename; Block; tables in the database where the tables are stored: Wp_bubi_table, Wp_comment_table, Wp_comment, Wp_links, Wp_option, Wp_postmeta, Wp_posts, Wp_term, Wp_term_relationship, Wp_term_taxonomy, Wp_user_meta, Wp_users.

Fig. 3: Testing IP address banned by the Content Filter. The screenshot shows the "Ban User By IP" page with the notice "WARNING! Do not enter your IP address or you will be banned. (You can recover by deleting the plugin via FTP or by altering *prefix*_bubi_table)", a form for adding a new IP address and an optional note to the ban list, and a ban list in which 127.0.0.1 appears with a delete action.

Fig. 4: IP address being removed by the Content Filter from the database. The screenshot shows the table wp_bubi_table in the database word on the server localhost, with the fields id (mediumint(9), value 4), ip (varchar(20)) and note (varchar(20)).

Content filter software was developed to ban users from accessing illegal content or fraudulent websites. This approach enables the administrator to have access to the client server, and any illegal site viewed can be deleted, so that internet viewing by citizens can now be controlled.
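The ban list behind Fig. 3 and Fig. 4 can be sketched as a small table of IP addresses with add, remove and lookup operations. The system in this paper was built with PHP, MySQL and Apache; the sketch below is a stand-in that uses Python with an in-memory SQLite database so it can be run without a server. The table and column names (wp_bubi_table, id, ip, note) follow Fig. 4, while the function names and the example address 203.0.113.7 are hypothetical.

```python
import ipaddress
import sqlite3


def open_ban_list() -> sqlite3.Connection:
    """Create an in-memory ban table shaped like the one in Fig. 4."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE wp_bubi_table ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "ip TEXT NOT NULL UNIQUE, "
        "note TEXT)"
    )
    return conn


def ban_ip(conn: sqlite3.Connection, ip: str, note: str = "") -> None:
    """Add an address to the ban list; raises ValueError if it is malformed."""
    normalized = str(ipaddress.ip_address(ip))
    conn.execute(
        "INSERT OR IGNORE INTO wp_bubi_table (ip, note) VALUES (?, ?)",
        (normalized, note),
    )


def unban_ip(conn: sqlite3.Connection, ip: str) -> None:
    """Remove an address from the ban list (the operation shown in Fig. 4)."""
    normalized = str(ipaddress.ip_address(ip))
    conn.execute("DELETE FROM wp_bubi_table WHERE ip = ?", (normalized,))


def is_banned(conn: sqlite3.Connection, ip: str) -> bool:
    """Check a requested address against the ban list before serving it."""
    normalized = str(ipaddress.ip_address(ip))
    row = conn.execute(
        "SELECT 1 FROM wp_bubi_table WHERE ip = ? LIMIT 1", (normalized,)
    ).fetchone()
    return row is not None


# Usage: ban an address, check it, then remove it again.
conn = open_ban_list()
ban_ip(conn, "203.0.113.7", "test entry")
banned_before = is_banned(conn, "203.0.113.7")
unban_ip(conn, "203.0.113.7")
banned_after = is_banned(conn, "203.0.113.7")
```

Parameterized queries are used throughout so that the address typed by the administrator is never interpolated into the SQL text.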

V. Conclusion and Recommendations

The results of this study reveal that almost all the respondents considered in the survey know about the internet and that the vast majority of them use it. It was also shown that the internet has actually been abused, and that this abuse can be controlled with the government and its agencies, such as the Economic and Financial Crimes Commission (EFCC), taking the lead. It was suggested that children's access to content on the internet should be restricted while that of adults should be relaxed. It was also found that the freedom of information law and the cybercrime bill in Nigeria have a connection with internet viewing control. The results also indicated that the EFCC has not done enough in combating internet abuse and that more still needs to be done.

This study also succeeded in designing a program that is capable of filtering illegal websites. Since each website has a unique IP address, any illegal website can be banned from being accessed by entering the IP address of that particular site. This approach enables the administrator to have access to the client server, and any illegal site viewed can be deleted, so that citizens' viewing of such sites can be controlled, if not completely eradicated, using content filters. For instance, if a client requests a fraudulent or illegal website whose IP address is already in the database, that website will be blocked for the given client.

Though the objectives of this project have been achieved, more can be done to improve the use of content filters to tackle the menace of citizens viewing illegal websites (internet abuse). Based on the results of this study, the following recommendations are made:

i. Government should provide more policies that support the use of web filtering in public organizations.

ii. Government agencies such as the EFCC should strengthen their efforts in controlling internet abuse.

iii. Public organizations such as business outfits, academic communities, etc. should also encourage the use of web content filtering to help fight internet abuse.

iv. University authorities in the various Nigerian institutions should affiliate with other online resource centres worldwide so as to enable their students to access relevant materials.

References

[1] Contentwatch.com. http://www.contentwatch.com/blog (Accessed on 20 August, 2012).

[2] Haruna C., Abdulhamid M. S., Abdulsalam Y. G., Ali M. U., Timothy U. M. Academic Community Cyber cafes - A Perpetration Point for Cyber Crimes in Nigeria, International Journal of Information Science and Computer Engineering (IJISCE), 2011, 2(2): 6-13.

[3] Abdulhamid M. S., Chiroma, H. and Abubakar, A. Cybercrimes and the Nigerian academic institutions networks, the IUP Journal of Information Technology, 2011, VII(1): 47-57.

[4] Satterfield, B. "Understanding Content Filtering: An FAQ for Nonprofits". 2007. Techsoup.org.

[5] Katherine H. M. and Piercy, F. P. Therapists' Assessment And Treatment Of Internet Infidelity. Journal of Marital and Family Therapy. 2006, 34(4): 481-497.

[6] Barbara, S. M., Daniel, B., Wesley H. C. & Binka, P. The Changing Nature of Adolescence in the Kassena-Nankana District of Northern Ghana. Studies in Family Planning. 1999, 30(2): 95-111.

[7] Fafunwa, A. B. History of Education in Nigeria. London: George Allen and Unwin Ltd. 1974.

[8] Longe, F. A. (2004). The Design of An Information-Society Based Model For the Analysis of Risks and Stresses Associated Risks Associated with Information Technology Applications. Unpublished M. Tech. Thesis, FUT, Akure, Nigeria.

[9] Longe O. B. and Longe F. A., “The Nigerian Web Content: Combating Pornography using Content Filters”, Journal of Information Technology Impact. 2005. 5(2), 59-64.

[10] Patrick C. "In the Shadows of the Net" (ISBN 978-1-59285-478-3) Hazelden.

[11] McKenzie, J. Big Brother Comes to School: Telling Teachers What to Read and What to Believe. 2005. Retrieved March 22, 2013, from http://www.fno.org/feb2005/censoring.html.

[12] Phillips, L. Do Internet Filters Abridge Free Speech? 2007. http://www.legalzoom.com/us-freedom-speech/do-internet-filters-abridge-free (Accessed on 20 August, 2012).

[13] Parti, K. and L. Marin, Ensuring Freedoms and Protecting Rights in the Governance of the Internet: A Comparative Analysis on Blocking Measures and Internet Providers’ Removal of Illegal Internet Content. Journal of Contemporary European Research, 2013. 9(1): 138-159.

[14] Warf, B., Global Internet Censorship, in Global Geographies of the Internet. 2013, Springer. 45-75.

[15] Kizza, J.M., Virus and Content Filtering, in Guide to Computer Network Security. 2013, Springer. 323-341.

[16] Iranmanesh, S.A., H. Sengar, and H. Wang, A Voice Spam Filter to Clean Subscribers’ Mailbox, in Security and Privacy in Communication Networks. 2013, Springer. p. 349-367.

[17] Biswas, Soumak, Sripati Jha, and Ramayan Singh. "A Fuzzy Preference Relation Based Method for Face Recognition by Gabor Filters." International Journal of Information Technology and Computer Science (IJITCS). 2012. 4(6): 18-23.

[18] Wurtenberger, A.M., C.S. Hyde, and C.D. Halferty, Restricting mature content, 2013, US Patent 8,359,642.

[19] Santos, I., et al. Adult Content Filtering through Compression-Based Text Classification. in International Joint Conference CISIS’12-ICEUTE’12-SOCO’12 Special Sessions. 2013. Springer.

[20] Gupta, N. and S. Hilal, Algorithm to Filter & Redirect the Web Content for Kids’. International Journal of Engineering and Technology, 2013. 5.

[21] Victor, O. W., Okongwu, N. O., Audu, I., Olawale, S. A, Abdulhamid, M. S., Cyber Crimes Analysis Based-On Open Source Digital Forensics Tools. International Journal of Computer Science and Information Security (IJCSIS), 2013. 10(1): p. 30-43.

[22] Abdulhamid, M. S., et al., Privacy and National Security Issues in Social Networks: The Challenges. International journal of the computer, the internet and management, 2011. 19(3): p. 14-20.