Development of a Real-time Driver’s Drowsiness Detection System Using MediaPipe Face Mesh

Authors: Saikat Baul, Md. Ratan Rana, Nusrat Jahan Trisna, Farzana Bente Alam

Journal: International Journal of Engineering and Manufacturing (IJEM)

Issue: vol. 15, no. 5, 2025.


Accidents caused by drowsy driving have recently emerged as a significant concern for society, often with severe consequences for victims, including fatalities. Human lives are the most valuable asset in the world and deserve greater protection on the road. Given this urgency, it is essential to develop an effective drowsiness detection system that can identify drowsiness in drivers and alert them before an accident occurs. Approaches based on Dlib and MediaPipe Face Mesh have shown promising results. However, most previous studies have relied solely on blinking patterns to detect drowsiness, while some have combined blinking with yawning patterns. The proposed research develops a straightforward drowsy driver detection system in Python using OpenCV and MediaPipe Face Mesh. The facial landmark detector provided by MediaPipe Face Mesh locates key facial coordinates. From these, the driver's eye aspect ratio, mouth aspect ratio, and head tilt angle are calculated from video input. The system's performance was evaluated on standardized public datasets and on real-time video footage, and in both scenarios it achieved high recognition accuracy. A performance comparison with similar methods demonstrates the effectiveness of the proposed approach. When integrated with vehicles' supplementary safety features and automation technology, the proposed system has the potential to enhance travel safety and efficiency.

Keywords: Drowsiness Detection, Computer Vision, MediaPipe Face Mesh, OpenCV, EAR, MAR, Head Tilt Angle

Short URL: https://sciup.org/15019956

IDR: 15019956 | DOI: 10.5815/ijem.2025.05.04

1. Introduction

During long-distance travel or night driving, drivers often lose focus because of drowsiness [1]. This is frequently caused by insufficient rest between long stretches of driving, which leads to exhaustion. Vehicles are among the most crucial elements of modern society, and ensuring road safety has become a significant challenge. In densely populated countries such as Bangladesh, the number of accidents resulting from driver drowsiness is rising, making it a serious concern.

Driver drowsiness can have many causes. Talukder et al. (2013) identified 14 distinct causes of driver drowsiness [2]. They found that the following factors caused the most fatigue:

- inadequate nutrition due to poor eating habits;
- pressure from business owners and executives;
- long driving distances;
- failure to take breaks during long drives;
- overlapping shifts;
- financial worries.

In 2023, a bus accident in Bangladesh claimed the lives of 19 passengers. Reports indicated that the driver had not taken any breaks [3]. He had operated the bus continuously for 33 hours, leading to severe fatigue, and consequently lost control of the bus and crashed into a bridge pillar [3]. Accidents caused by fatigued drivers are therefore particularly dangerous and often fatal.

The National Safety Council (NSC) states that drowsy driving causes over 100,000 crashes each year, injuring 71,000 people and killing 1,550 [4]. Research by the AAA Foundation for Traffic Safety estimated that approximately 328,000 crashes are caused by drowsy driving [4]. This is more than three times the number reported by the police. The research indicated that 109,000 of these crashes led to injuries, and around 6,400 were fatal. According to the Centers for Disease Control and Prevention, roughly 4% of adult drivers report having fallen asleep while driving due to fatigue [5].

The Road Safety Foundation (RSF) notes that from 2019 to June 2024 there were 32,733 traffic accidents in Bangladesh, resulting in 35,384 deaths and 53,196 injuries [6]. Of these accidents, 37.17% were due to a loss of control. Fatigue and drowsiness while driving are primary contributors to this loss of vehicle control. The RSF reports that in 2024, 7,294 people died in traffic accidents in Bangladesh [7]. On average, at least three students are killed daily, so students account for 15% of road fatalities, a significant loss to the nation. Adults accounted for 84% of those who died in traffic incidents. Most of those killed or severely injured were the principal or sole earners of their households. Each year, approximately Tk 210 billion in human capital is lost due to road accidents [7].

Several gestures and facial expressions indicate that a person is sleepy:

- slowly closing the eyelids;
- gradually opening the mouth (yawning);
- relaxing the jaw;
- tilting the head [5].

Because this is a significant issue for modern society, researchers in many countries have sought effective solutions. Many studies apply computer vision techniques, machine learning algorithms, and feature analysis methods to detect drowsiness and fatigue using eye-based cues alone. Several others combine eye and mouth movements. Many models struggle in low-light conditions, and some fail to detect the eyes consistently, particularly when drivers wear glasses. Head tilting is an important predictor of drowsiness that most studies have not examined thoroughly, and some have highlighted it as an area for future exploration [8]. The proposed technique therefore integrates the head tilt angle to address this gap and improve the effectiveness of drowsiness detection.

The main goal of this study is to investigate how eye tracking, mouth tracking, and head tilt angle analysis can be combined to determine whether a driver is drowsy. For real-time use, a camera can be mounted on the vehicle's dashboard to record the driver's face, including any obstructions such as eyeglasses or sunglasses. Combining these three cues to detect sleepy drivers could significantly enhance road safety. Monitoring such physiological and behavioral signals allows signs of drowsiness to be identified early. This enables proactive measures to prevent accidents caused by fatigued drivers.

The proposed drowsiness detection method is suitable because of its emphasis on:

- driving safety;
- non-intrusiveness;
- real-time and continuous monitoring;
- cost-effectiveness;
- integration possibilities;
- customizability;
- distinctiveness.

This study presents and examines a driver drowsiness detection system built on the OpenCV and MediaPipe Face Mesh libraries. These libraries process still images and live video streams to locate facial features such as the eyes and mouth.

Microkernel operating systems are important in several domains, including real-time systems [9]. Deploying the system on a microkernel-based operating system could therefore be advantageous.

Comparing models is a common way to judge whether a proposed approach is superior. For example, [10, 11] used such comparisons to select the best model for detecting cancerous cells. Likewise, this study compares its results with those of other systems to determine whether the proposed approach detects driver drowsiness better. The proposed system demonstrated superior performance compared to the alternatives.

The subsequent sections of the paper are organized as follows: Section 2 reviews the relevant literature; Section 3 outlines the methods employed in the system; Section 4 presents the experimental results and comparisons; and, finally, Section 5 offers the study's conclusion.

2. Related Work

In recent years, extensive research has produced numerous articles proposing approaches for detecting fatigue and drowsiness in drivers. This section summarizes recent advances in real-time fatigue detection systems.

Dalve et al. (2023) use deep learning with MediaPipe as a feature extractor to detect driver drowsiness [12]. The authors use the MediaPipe Face Mesh framework to capture facial features and a binary classification neural network to detect drowsy states. The method uses deep learning to derive numerical features from images, which are then fed into a fuzzy logic system.

Rasyid et al. (2023) proposed a machine learning-based system for detecting eye blinks in videos captured in low-light environments [13]. The technique was built on MediaPipe Face Mesh landmarks and evaluated with a confusion matrix. The results show that it detected blinks in low-light videos with remarkable accuracy.

Akrout and Fakhfakh (2023) presented a methodology based on transfer learning and a deep long short-term memory (LSTM) network [14] that distinguishes five levels of driver weariness. Their approach begins with a pre-processing phase that identifies areas of interest using Google's MediaPipe Face Mesh and standardizes the required features. The researchers used the Adam optimization method to determine the ideal hyperparameters for the three datasets in their analysis.

Florez et al. (2023) developed a MediaPipe-based technique for identifying fatigue [15]. The procedure processes the area surrounding the eyes to obtain the region of interest (ROI). Three convolutional neural networks (CNNs) serve as base models: InceptionV3, VGG16, and ResNet50V2. The configuration of the fully connected network used for classification is also modified. The authors used the Grad-CAM visualization method to analyze how each CNN behaved. They found that the ResNet50V2-based CNN focuses primarily on the eye area, making it more effective at detecting signs of fatigue.

Jewel et al. (2022) proposed a system that detects driver fatigue by monitoring the angular position of the driver's head during operation and then alerts the driver [16]. It uses FaceMesh to evaluate the driver's eyes and facial expressions, examining multiple facial points to determine whether drowsiness is present.

Abbas et al. (2023) used the OpenCV and MediaPipe frameworks to build an effective drowsy driving detector. They investigated driver drowsiness detection under various settings using convolutional neural networks (CNNs) [17]. The MediaPipe Face Mesh model delineates facial features, emphasizing the eyes and mouth, from which the eye and mouth aspect ratios are computed. The system is designed to notify drivers of their behavior in real time.

Jose et al. (2022) presented SleepyWheels, which combines a lightweight neural network with facial landmark recognition to detect driver weariness in real time [18]. SleepyWheels uses EfficientNetV2, a deep learning model, together with a face landmark detector. It remains effective across various test scenarios, including:

- concealed facial features such as the eyes or mouth;
- drivers of varying skin tones;
- unusual camera placements;
- changing viewing angles.

Safarov et al. (2023) proposed an approach that integrates several algorithms to identify indicators of drowsiness [19]. The first phase acquired facial landmark coordinates from the MediaPipe model using a specifically prepared dataset. This data was gathered under three conditions: alert with open eyes, drowsy with closed eyelids, and yawning. Drowsiness was assessed solely from the landmark coordinates. Eye landmarks were used to measure blink frequency. The EAR equation, with a specified threshold, classified eye states as Open or Closed.

Sofi and Mehfuz (2023) proposed a model that uses facial landmarks to locate and track the movement of the mouth and eyes to predict driver behavior [20]. By issuing warnings whenever a driver appears drowsy, the model could save many lives and prevent accidents. Its hybrid parameters enhance the system's reliability. The authors describe the approach as cost-effective and feasible to add to vehicles at minimal cost.

Mohanty et al. (2019) examined the eyes and mouth to identify indicators of exhaustion and classify drivers as weary [8]. For real-time use, input footage was captured by a camera on the car's dashboard with a clear view of the driver's face. The footage was then processed with Dlib, whose pre-trained model provides 68 facial landmarks. Faces were detected with the HOG method. The EAR is used to monitor the driver's blinking patterns, and the MAR is used to detect yawning across consecutive video frames.

Jose et al. (2021) proposed a methodology using Python, Dlib, and OpenCV to create a real-time system with an automated camera that monitors the driver's eye movements and yawning [21]. The camera tracks the driver's blinks and yawns and triggers an alert. The system assesses the level of fatigue and issues a warning when a significant relationship exists between eye closures and yawns over a specific duration.

Deng and Wu (2019) introduced DriCare, a system that evaluates drivers' fatigue levels by analyzing video footage [22]. It identifies multiple signs of exhaustion, including yawning, blinking, and the duration of eye closure, without requiring drivers to wear any additional devices. To address the limitations of previous methods, the authors introduced a new face-tracking algorithm to improve tracking accuracy. They also developed an approach for recognizing facial regions based on 68 key points. These facial regions are used to assess drivers' cognitive and emotional states. By integrating these visual cues, DriCare alerts the driver to fatigue through a warning mechanism.

Baul et al. (2024) proposed a real-time, lightweight system using OpenCV and Dlib to detect drowsiness [5]. It uses the eye aspect ratio, mouth aspect ratio, and head tilt angle to determine whether a driver is drowsy. A camera captures the driver's face to identify signs of drowsiness such as closed eyes, yawning, and head tilt (left or right). The system then triggers an alarm and displays appropriate messages for different conditions.

Several factors limit the accuracy of drowsiness detection systems and require improvement:

- Changes in lighting, background, and facial alignment make it difficult to determine when someone is drowsy.
- Estimating the head tilt angle, which is important for detecting drowsiness, needs improvement.
- Alerting features need enhancement so that drivers are notified when they are becoming sleepy.
- Imprecision and computational complexity further complicate accurate recognition of tiredness.
- Determining the exact position and size of the eyes is difficult, which affects drowsiness detection.
- Eyeglasses or sunglasses worn by the driver can hinder effective fatigue recognition.

3. Methodology

Drowsiness while driving is a significant cause of serious car accidents. To address this issue, many models have been developed to reduce the risks associated with sleepy drivers.

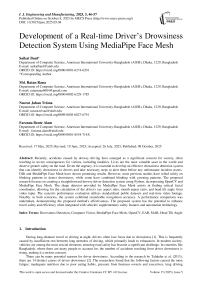

Fig. 1. Proposed driver drowsiness detection system based on MediaPipe Face Mesh.

Fig. 1 illustrates the flowchart of the MediaPipe-based driver drowsiness detection system. The system works as follows:

1. Each frame of the input video, whether pre-recorded or live, is resized and converted to Red, Green, and Blue (RGB) format.
2. MediaPipe Face Mesh locates facial features in the frame, returning 468 landmarks with (x, y) coordinates for various facial parts. The eye and mouth landmarks are selected from these.
3. The head tilt angle is computed from the coordinates of the left corner of the left eye and the right corner of the right eye.
4. The eye coordinates are used to compute the eye aspect ratio for each eye, and the mouth coordinates to compute the mouth aspect ratio, based on Euclidean distances.
5. The system triggers an alarm and displays one of three messages to the driver, depending on which of three scenarios occurs.
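The landmark-selection step can be sketched as follows. In MediaPipe's legacy Python solution, `mediapipe.solutions.face_mesh.FaceMesh(...).process(rgb_frame)` returns landmarks whose `x` and `y` fields are normalized by the frame width and height. They should be scaled to pixels before Euclidean distances are taken; otherwise a non-square frame distorts horizontal distances relative to vertical ones. To keep the sketch self-contained, it takes plain `(x, y)` tuples instead of MediaPipe objects. The helper names are hypothetical and are not the authors' code.

```python
import math
from typing import Dict, Iterable, Sequence, Tuple

Point = Tuple[float, float]


def to_pixel(norm_xy: Point, width: int, height: int) -> Point:
    """Scale a normalized (x, y) landmark in [0, 1] to pixel coordinates."""
    x, y = norm_xy
    return (x * width, y * height)


def select_landmarks(
    landmarks: Sequence[Point], indices: Iterable[int], width: int, height: int
) -> Dict[int, Point]:
    """Return only the requested landmark indices, converted to pixels."""
    return {i: to_pixel(landmarks[i], width, height) for i in indices}


def distance(p: Point, q: Point) -> float:
    """Euclidean distance between two points (used by EAR, MAR, and tilt)."""
    return math.dist(p, q)


if __name__ == "__main__":
    # On a 640x480 frame, equal normalized offsets become unequal pixel offsets.
    a = to_pixel((0.0, 0.0), 640, 480)
    b = to_pixel((0.1, 0.1), 640, 480)
    print(distance(a, b))  # 80.0 (a 64 x 48 pixel offset)
```

In a real pipeline, `width` and `height` would come from the OpenCV frame's `shape`, which is ordered `(height, width, channels)`.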


3.1. Facial Landmark Detection

-

3.2. Eye Aspect Ratio

The drowsiness detector is implemented using OpenCV and Python. The proposed system utilizes Google's MediaPipe library to recognize and localize facial landmarks through Face Mesh.

The MediaPipe library is pre-trained. The Face Mesh system employs a two-step neural network pipeline to determine the precise three-dimensional coordinates of 468 points on a person's face. This process derives threedimensional positioning from a two-dimensional image. The first network, BlazeFace, detects faces by analyzing the entire image [18]. The second network operates within a specific region to identify the locations of landmarks. The entire pipeline is designed for maximum speed, with tests indicating it can perform in under ten milliseconds on high-performance devices [18]. The MediaPipe framework has enhanced its pipeline, allowing the landmark generator to utilize previous facial crops for tracking landmarks, even when the face remains within the crop's boundaries. This approach minimizes the need for an additional detector, conserving power, memory, and CPU usage [12]. MediaPipe's Face Mesh displays the 468 facial markers in Fig. 2.

Fig. 2. Visualization of the 468 facial landmark coordinates as shown in [13].

The MediaPipe Face Mesh is a technique that detects face landmarks and provides a continuous stream of estimated coordinates for important facial features in real time. This method can determine the three-dimensional surfaces of a person's face without needing a depth sensor. The Face Mesh module is built on BlazeFace, which has been optimized for mobile GPU inference using MobilenetV2 and an SSD detector as its core frameworks [14]. Google's application programming interfaces (APIs) and software development kits (SDKs) simplify the addition of MediaPipe Face Mesh landmarks to larger programs or systems. The model was developed on the TensorFlow framework, enabling users to retrain or modify it to suit their needs [13]. This approach is effective because, when paired with certain landmark locations, the frequency of video data changes more significantly than that of the accelerometer data.

Using predetermined landmarks makes it easier to infer a person's shape by highlighting specific features of their face, such as their eyebrows, eyes, lips, nose, and jawline. Variations in the proportions of these key areas result in different ways of conveying what defines an individual.

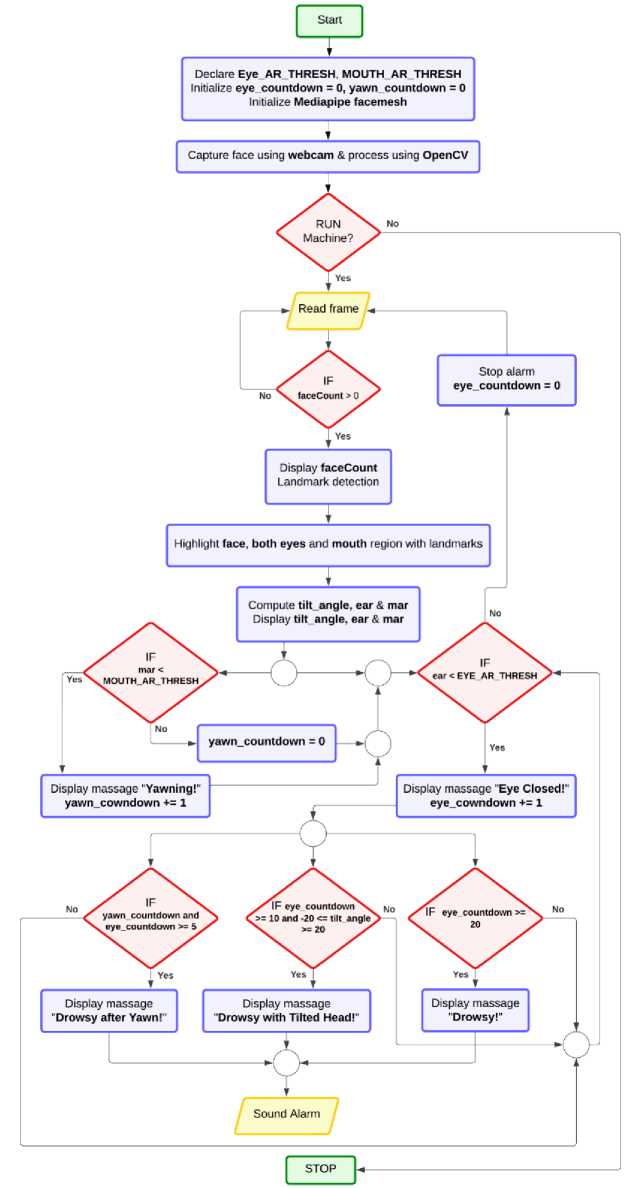

The eye aspect ratio (EAR) measure was used to identify eye blinking. This measurement is calculated by dividing the vertical distance between the upper and lower eyelids by the horizontal width of the eye. The physiological process of blinking decreases the perpendicular distance between the upper and lower eyelids. When the eye is open, the eyelids tend to widen the gap between them.

Fig. 3. Coordinates to calculate EAR for MediaPipe.

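$$\mathrm{EAR} = \frac{d_{\text{eye}}}{|AB|} \qquad (1)$$

where $d_{\text{eye}}$ is the vertical distance between the upper- and lower-eyelid landmarks and $|AB|$ is the horizontal width of the eye.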

Equation (1) defines the EAR [8], and Fig. 3 shows the coordinates used to calculate it with MediaPipe. The EAR is computed for the left and right eyes, and the two values are averaged.

The cutoff value was set to 0.22, taking into account factors such as face shape (male or female), camera angle, and distance from the subject. Male faces typically have larger, more angular eyes, while female faces tend to have smaller, rounder eyes. The threshold may therefore need to be calibrated slightly differently for males and females. The camera angle and distance from the driver can also significantly influence the EAR. If the camera is too close or positioned at an unusual angle, the ratio may be distorted, affecting detection accuracy. To mitigate these issues, the system dynamically adjusts the threshold based on the camera setup, keeping the EAR consistent and accurate across individuals and environmental conditions.

When the average EAR falls below the cutoff, the system increments the blink (eye) count and displays the message "Eye Closed!". The count resets to zero when the average EAR exceeds the cutoff.
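The EAR computation and the closed-eye counter described above can be sketched in Python. The sketch follows Equation (1) with a single vertical eyelid distance, averages the two eyes, and applies the 0.22 cutoff. It makes several assumptions:

- The caller supplies pixel-space eyelid and corner points; which Face Mesh indices provide them (Fig. 3) is not assumed.
- The dynamic threshold adjustment is not modelled.
- An average exactly equal to the cutoff is treated as "open".
- Function and class names are illustrative.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

EAR_THRESHOLD = 0.22  # cutoff reported in the text


def eye_aspect_ratio(upper: Point, lower: Point, corner_a: Point, corner_b: Point) -> float:
    """Eq. (1): vertical eyelid distance divided by horizontal eye width |AB|."""
    width = math.dist(corner_a, corner_b)
    if width == 0:
        raise ValueError("eye corners coincide; cannot compute EAR")
    return math.dist(upper, lower) / width


class EyeClosureCounter:
    """Counts consecutive frames whose average EAR is below the cutoff."""

    def __init__(self, threshold: float = EAR_THRESHOLD) -> None:
        self.threshold = threshold
        self.count = 0

    def update(self, left_ear: float, right_ear: float) -> bool:
        """Feed one frame; return True if the eyes are considered closed."""
        avg = (left_ear + right_ear) / 2.0
        if avg < self.threshold:
            self.count += 1  # eyes closed in this frame
            return True
        self.count = 0  # eyes open again: reset the run of closed frames
        return False


if __name__ == "__main__":
    counter = EyeClosureCounter()
    open_ear = eye_aspect_ratio((0, -3), (0, 3), (-10, 0), (10, 0))    # 6 / 20
    closed_ear = eye_aspect_ratio((0, -1), (0, 1), (-10, 0), (10, 0))  # 2 / 20
    for ear in (closed_ear, closed_ear, open_ear):
        counter.update(ear, ear)
    print(counter.count)  # 0: the open-eye frame reset the count
```

Because the counter tracks consecutive closed frames, the alarm rules in Section 3.5 effectively measure how long the eyes have stayed closed rather than how many separate blinks occurred.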

-

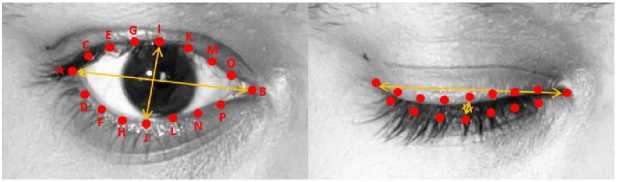

3.3. Mouth Aspect Ratio

The mouth aspect ratio (MAR) was used to identify yawns. It is calculated by dividing the vertical distance between the upper and lower lips by the horizontal width of the mouth. Yawning is characterized by a significant increase in the vertical gap between the lips, while the gap is small when the mouth is closed.

Fig. 4. Coordinates to calculate MAR for MediaPipe.

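$$\mathrm{MAR} = \frac{d_{\text{lip}}}{|AB|} \qquad (2)$$

where $d_{\text{lip}}$ is the vertical distance between the upper- and lower-lip landmarks and $|AB|$ is the horizontal width of the mouth.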

Equation (2) defines the MAR [23], and Fig. 4 shows the coordinates used to calculate it with MediaPipe. The MAR is computed for both the inner and outer lips, and the two values are averaged. The cutoff value was set to 0.65.

The accuracy of the MAR, however, depends on camera angle and distance. If the camera is too close to the subject, the mouth may appear overly large, leading to an inaccurate reading. If the camera is too far away, the mouth may appear smaller, so the MAR can fall below the threshold even when the driver is yawning. Differences in face shape between males and females also affect the MAR, since males typically have broader jawlines, which may alter mouth measurements. To ensure reliable yawn detection, the system therefore adjusts the MAR threshold dynamically based on the camera's position and the individual's facial features, so that results remain accurate across camera setups and face shapes.

When the average MAR exceeds the cutoff, the system increments the yawn count and displays the message "Yawning!". When the average MAR falls below the cutoff, the yawn count is reset to zero.
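The MAR logic mirrors the EAR logic, with the comparison reversed (yawning raises the ratio). The sketch below applies Equation (2) to the inner and outer lips separately, averages them, and applies the 0.65 cutoff. It makes several assumptions:

- The caller supplies pixel-space lip and mouth-corner points; specific Face Mesh indices (Fig. 4) are not assumed.
- The dynamic threshold adjustment is omitted.
- An average exactly at the cutoff resets the count.
- Names are illustrative.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

MAR_THRESHOLD = 0.65  # cutoff reported in the text


def mouth_aspect_ratio(upper_lip: Point, lower_lip: Point,
                       corner_a: Point, corner_b: Point) -> float:
    """Eq. (2): vertical lip distance divided by horizontal mouth width |AB|."""
    width = math.dist(corner_a, corner_b)
    if width == 0:
        raise ValueError("mouth corners coincide; cannot compute MAR")
    return math.dist(upper_lip, lower_lip) / width


class YawnCounter:
    """Counts consecutive frames whose averaged inner/outer MAR exceeds the cutoff."""

    def __init__(self, threshold: float = MAR_THRESHOLD) -> None:
        self.threshold = threshold
        self.count = 0

    def update(self, inner_mar: float, outer_mar: float) -> bool:
        """Feed one frame; return True if the mouth is considered yawning."""
        avg = (inner_mar + outer_mar) / 2.0
        if avg > self.threshold:
            self.count += 1
            return True
        self.count = 0
        return False


if __name__ == "__main__":
    wide = mouth_aspect_ratio((0, -15), (0, 15), (-20, 0), (20, 0))   # 30 / 40
    shut = mouth_aspect_ratio((0, -2), (0, 2), (-20, 0), (20, 0))     # 4 / 40
    counter = YawnCounter()
    print(counter.update(wide, wide), counter.count)  # True 1
    print(counter.update(shut, shut), counter.count)  # False 0
```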


3.4. Head Tilt Angle Detection

-

3.5. Alarm Activation

Detecting head tilt angle is crucial for improving the accuracy and reliability of real-time drowsiness detection systems. While eye and mouth tracking are common methods used to detect driver fatigue, they can sometimes fail to provide consistent results, particularly in certain conditions like poor lighting or when drivers wear glasses [26]. Head tilt angle serves as an additional, often more reliable indicator of drowsiness. The primary reason for including head tilt in our system is that it provides early signs of fatigue that may not be immediately visible through eye or mouth tracking [27]. A driver’s head begins to tilt as they lose focus, often before the more overt signs like eye closure or yawning occur. This makes head tilt detection an essential feature for real-time applications where early intervention is key to preventing accidents.

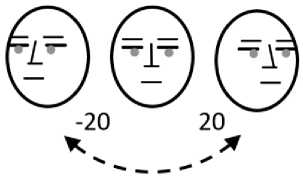

To find the head tilt angle, we need to determine how much the person's head is tilted concerning a specific point or axis. Using recognized landmarks, we measured the angle at which the face was tilted. In Face Mesh's 468-point facial landmark detection model, the landmarks at indices 133 and 363 correspond to the leftmost corner of the left eye and the rightmost corner of the right eye, respectively. Fig. 5 illustrates examples of head tilt angles.

Fig. 5. Head tilt angle visual representation.

Incorporating this feature allows us to capture drowsiness symptoms that might otherwise go undetected. For instance, head tilt is a more consistent indicator across various lighting and facial conditions compared to blinking or yawning.[26] When a driver’s head tilts significantly, it is a strong signal that they may be drowsy, and integrating this with eye and mouth tracking enhances the system’s overall ability to detect fatigue on time. By monitoring head tilt alongside eye and mouth movements, our system provides a more robust and comprehensive drowsiness detection solution [26].

In this proposed system, an alert will activate to notify the driver if the eye count, yawn count, and head tilt angle exceed established thresholds for a specified number of consecutive frames. This will be interpreted as evidence that the driver may have fallen asleep. The alert will trigger on three separate occasions, which are:


❖ yawn count >= 3 AND eye count >= 5


❖ eye count >= 5 AND (tilt angle >= 20 OR tilt angle <= -20)


❖ eye count >= 10

When the first condition is met, the screen displays the warning "Drowsy after Yawn!". When the second is met, it displays "Drowsy with Tilted Head!", and when the third is met, it displays "Drowsy!". Fig. 6 and Fig. 7 illustrate these situations and the resulting output.
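The head tilt measurement from Section 3.4 and the three alarm conditions above can be combined in a short sketch. The tilt is taken as the angle of the line through the two eye-corner landmarks relative to the horizontal image axis, computed with `atan2`. The sketch makes several assumptions:

- Image y coordinates grow downward, so which sign means a left tilt depends on the camera and on whether the frame is mirrored.
- The text does not say which message takes priority when several conditions hold at once, so the sketch checks them in the listed order.
- Names are illustrative.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]

TILT_LIMIT_DEG = 20.0  # |angle| >= 20 degrees counts as a tilted head


def head_tilt_degrees(left_corner: Point, right_corner: Point) -> float:
    """Angle of the eye-corner line relative to the image's horizontal axis."""
    dx = right_corner[0] - left_corner[0]
    dy = right_corner[1] - left_corner[1]
    return math.degrees(math.atan2(dy, dx))


def drowsiness_message(eye_count: int, yawn_count: int, tilt_deg: float) -> Optional[str]:
    """Return the on-screen warning for the current frame, or None if alert."""
    if yawn_count >= 3 and eye_count >= 5:
        return "Drowsy after Yawn!"
    if eye_count >= 5 and (tilt_deg >= TILT_LIMIT_DEG or tilt_deg <= -TILT_LIMIT_DEG):
        return "Drowsy with Tilted Head!"
    if eye_count >= 10:
        return "Drowsy!"
    return None


if __name__ == "__main__":
    tilt = head_tilt_degrees((100.0, 200.0), (160.0, 230.0))
    print(round(tilt, 1))                  # 26.6
    print(drowsiness_message(6, 0, tilt))  # Drowsy with Tilted Head!
```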

Fig. 6. Four separate cases of drowsiness detection on a dataset video.

Panels: Eye Open, Mouth Closed; Eye Closed, Mouth Closed; Eye Open, Mouth Open; Eye Closed, Mouth Open; Eye Closed, Left Tilted; Eye Closed, Right Tilted.

Fig. 7. Six separate cases of drowsiness detection on a real-time video.

4. Results and Discussion

This section describes the data used for testing and analysis. The YawDD dataset [24] was chosen as the primary data source for assessing the effectiveness of our approach. This research used a subset of YawDD consisting of 28 videos. From each video, 10 images were extracted under each of four settings, for a total of 28 × 4 × 10 = 1120 images, enabling a thorough analysis. The four settings are Yawn, No Yawn, Open Mouth, and Closed Mouth.

Real-time results were computed from recordings of eleven participants, nine males and two females, each recorded at a separate location. The sample included participants both with and without glasses, and the reported averages cover the entire sample. In each experiment, the video frames capture two distinct conditions: drowsy and non-drowsy.

Table 1. Obtained results.

| Features | Dataset accuracy | Real-time accuracy | Average accuracy |
|---|---|---|---|
| Blink Detection | 99.28% | 96.19% | 97.74% |
| Yawn Detection | 99.29% | 97.03% | 98.16% |
| Head Tilt Detection | - | 100% | - |

Table 1 reports the recognition accuracy of three detection tasks: blink (eye closure) detection, yawn detection, and head tilt detection. Blink and yawn detection were evaluated on both the standard dataset and real-time videos, whereas head tilt was evaluated only in real time.
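The Average column of Table 1 is consistent with the arithmetic mean of the Dataset and Real-time columns, rounded half-up to two decimal places. For blink detection, (99.28 + 96.19) / 2 = 97.735, which rounds to 97.74. The sketch below reproduces that arithmetic with Python's `decimal` module, chosen to avoid binary floating-point rounding surprises. It also shows how a percentage accuracy would be computed from counts of correct and total samples. The `accuracy_pct` helper is illustrative only, since the paper does not report the underlying counts.

```python
from decimal import Decimal, ROUND_HALF_UP


def accuracy_pct(correct: int, total: int) -> Decimal:
    """Percentage of correctly classified samples (hypothetical helper)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return Decimal(correct) * 100 / Decimal(total)


def mean_pct(dataset_pct: str, realtime_pct: str) -> Decimal:
    """Mean of two percentages, rounded half-up to two decimal places."""
    avg = (Decimal(dataset_pct) + Decimal(realtime_pct)) / 2
    return avg.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(mean_pct("99.28", "96.19"))  # 97.74 (blink detection)
    print(mean_pct("99.29", "97.03"))  # 98.16 (yawn detection)
```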

Table 2 compares the reported accuracy of the proposed method with similar techniques based on eye detection and mouth detection. Some of these studies used the Dlib library and others the MediaPipe library, but none used both. Some relied exclusively on eye features, while others combined eye and mouth features to identify blinking and yawning as indicators of driver drowsiness. Only one prior article [5] detects blinking, yawning, and head tilt together to assess driver sleepiness. Many of these publications evaluated only real-time videos, whereas the proposed method is evaluated on both real-time videos and a dataset. The comparison in Table 2 indicates that the proposed MediaPipe-based system outperforms the other methods listed, particularly in real time.

Table 2. Comparison of the proposed method with similar techniques.

| Article | Year | Facial Features | Model | Results |
|---|---|---|---|---|
| [12] | 2023 | Eye | MediaPipe | Eye detection accuracy of 91% on training data and 92% on test data. |
| [13] | 2023 | Eye | MediaPipe | Blink detection in low-light footage with 100% accuracy. |
| [14] | 2023 | Eye | MediaPipe | The fatigue detection system is 98.4% accurate. |
| [15] | 2023 | Eye | MediaPipe | Eye detection has the best accuracy, with an average rate of 99.71% on a dataset. |
| [16] | 2022 | Eye | MediaPipe | Eye detection achieved an accuracy of 97.5%. |
| [17] | 2023 | Eye and Mouth | MediaPipe | Accuracy of 98.3%, maintained at 94.7% under varying light conditions. |
| [18] | 2022 | Eye and Mouth | MediaPipe | Fatigue detection is accurate up to 97% of the time. |
| [19] | 2023 | Eye and Mouth | MediaPipe | Accuracy of 97% for drowsy-eye detection and 84% for yawning detection. |
| [20] | 2023 | Eye and Mouth | MediaPipe | 98.16% accuracy in detecting fatigue. |
| [8] | 2019 | Eye and Mouth | Dlib | Average blink detection rate (dataset and real time) of 87.68%; average yawn detection rate (dataset and real time) of 91.13%. |
| [21] | 2021 | Eye and Mouth | Dlib | Fatigue detection accuracy of 97% in real-time situations. |
| [22] | 2019 | Eye and Mouth | Dlib | The proposed application (DriCare) attained approximately 92% accuracy. |
| [23] | 2023 | Eye and Mouth | Dlib | Eye and mouth detection accuracy between 85% and 95%. |
| [25] | 2018 | Eye and Mouth | Dlib | Fatigue detection accuracy of up to 97.93%. |
| [5] | 2024 | Eye, Mouth, and Head Pose | Dlib | Average blink detection rate (dataset and real time) of 93.94%; average yawn detection rate (dataset and real time) of 94.97%. |
| Proposed System | 2025 | Eye, Mouth, and Head Pose | MediaPipe | Average blink detection rate (dataset and real time) of 97.74%; average yawn detection rate (dataset and real time) of 98.16%. |

Integrating the proposed drowsiness detection system with existing vehicle technologies could significantly enhance road safety. Possible integrations include:

- Automatic braking: when signs of drowsiness such as eye closure, excessive yawning, or head tilt are detected and the driver fails to respond to the warnings, the system could engage the brakes to slow or stop the vehicle.
- Lane-keeping assist: the vehicle could steer itself back into the lane if the driver begins to drift due to fatigue.
- In-vehicle alerts: auditory, visual, or haptic warnings, such as steering-wheel vibrations, could notify the driver in real time and prompt them to pull over or take a break.
- Other Advanced Driver-Assistance Systems (ADAS), such as adaptive cruise control: the vehicle could adjust its speed and maintain a safe distance from the car ahead, further reducing the risk of collisions.
- Vehicle telematics: emergency services could be notified if the driver remains unresponsive, ensuring prompt assistance in critical situations.

These integrations would enable a more proactive, real-time safety response, reducing the likelihood of accidents caused by drowsy driving.

Fig. 8. When the mouth was too broad (left: the Dlib-based system failed to detect yawning; right: the MediaPipe-based system successfully detects yawning).

The authors of [5] tried several ways to address the failure shown on the left side of Fig. 8, but none worked. First, they used the facial points of the left and right eyebrows together with the jawline to compute a face aspect ratio; the problem with this method is that the threshold values differ between men and women [5]. They then tried measuring the distance between the jawline and the upper lip; the problem with this approach is that different camera distances produce different threshold values [5]. The proposed MediaPipe-based drowsiness detection system does not encounter this issue: it still detects the mouth, and therefore the yawn, even when the mouth is opened very wide.
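The camera-distance problem with an absolute jawline-to-upper-lip distance can be illustrated numerically. In the sketch below, moving the driver farther from the camera is approximated by uniformly scaling hypothetical landmark coordinates: the absolute distance shrinks with the scale, while a ratio of two distances (here a simple mouth aspect ratio, lip opening divided by mouth width) stays the same. The coordinates, the `scale` helper, and this MAR definition are assumptions for illustration, not the paper's landmark indices or formula.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two (x, y) pixel coordinates."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def mouth_aspect_ratio(upper: Point, lower: Point, left: Point, right: Point) -> float:
    """Vertical lip opening divided by mouth width (one simple MAR variant, assumed)."""
    return dist(upper, lower) / dist(left, right)


def scale(points: Dict[str, Point], s: float) -> Dict[str, Point]:
    """Approximate a change in camera distance by uniformly scaling all coordinates."""
    return {name: (x * s, y * s) for name, (x, y) in points.items()}


# Hypothetical pixel coordinates of a wide-open mouth (not taken from the paper).
mouth = {
    "upper": (100.0, 80.0),
    "lower": (100.0, 140.0),
    "left": (70.0, 110.0),
    "right": (130.0, 110.0),
    "jaw": (100.0, 170.0),
}

for s in (1.0, 0.5):  # 0.5 roughly corresponds to the face appearing half as large
    m = scale(mouth, s)
    mar = mouth_aspect_ratio(m["upper"], m["lower"], m["left"], m["right"])
    jaw_gap = dist(m["jaw"], m["upper"])
    print(f"scale={s}: MAR={mar:.2f}, jaw-to-upper-lip={jaw_gap:.1f} px")
# scale=1.0: MAR=1.00, jaw-to-upper-lip=90.0 px
# scale=0.5: MAR=1.00, jaw-to-upper-lip=45.0 px
```

A fixed pixel threshold on the jaw gap would therefore need retuning for every seating position, whereas a ratio threshold would not.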

5. Conclusion

This work uses the pre-trained 468-point facial landmark detector from MediaPipe Face Mesh to build a system that identifies drowsiness in real time and alerts drivers, with the aim of reducing the growing number of accidents caused by fatigued drivers. The proposed method monitors the opening and closing patterns of the eyes using the eye aspect ratio (EAR) derived from the coordinates of the left and right eyes. The system also tracks the opening and closing patterns of the mouth by calculating the mouth aspect ratio (MAR) from the coordinates of the upper and lower lips. Finally, it evaluates the head tilt angle to determine whether the driver's head is upright or tilted to the left or right. Three criteria were defined from these three metrics, and depending on which criterion is met, the driver receives one of three distinct messages accompanied by a loud alarm.
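The three metrics and the three alert criteria summarized above can be sketched as follows. Several assumptions are made: the EAR uses the common six-point formulation (two vertical eyelid distances over twice the horizontal eye width); the head tilt is taken as the roll angle of the line joining the two eye centers; landmarks are passed as plain (x, y) pixel tuples rather than MediaPipe result objects; and the thresholds (0.25, 0.60, 15°) and message texts are hypothetical placeholders, not the paper's values. The check evaluates a single frame and omits any consecutive-frame logic the actual system may use.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical thresholds; the paper's actual values may differ.
EAR_THRESHOLD = 0.25
MAR_THRESHOLD = 0.60
TILT_THRESHOLD_DEG = 15.0


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two (x, y) pixel coordinates."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Six-point EAR: p1/p4 are the eye corners, p2/p3 upper lid, p6/p5 lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def head_tilt_deg(image_left_eye: Point, image_right_eye: Point) -> float:
    """Signed roll of the inter-eye line against the image horizontal (y axis points down)."""
    dx = image_right_eye[0] - image_left_eye[0]
    dy = image_right_eye[1] - image_left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def check_frame(ear: float, mar: float, tilt: float) -> List[str]:
    """Return the (hypothetical) warning messages triggered by one frame's metrics."""
    messages = []
    if ear < EAR_THRESHOLD:
        messages.append("Eyes closed: wake up!")
    if mar > MAR_THRESHOLD:
        messages.append("Yawning detected: take a break!")
    if abs(tilt) > TILT_THRESHOLD_DEG:
        # Positive tilt: the eye on the image's right sits lower, i.e. the head leans image-right.
        side = "right" if tilt > 0 else "left"
        messages.append(f"Head tilted {side}: sit upright!")
    return messages


if __name__ == "__main__":
    open_eye = [(0, 0), (1, -1), (2, -1), (3, 0), (2, 1), (1, 1)]
    ear = eye_aspect_ratio(open_eye)
    tilt = head_tilt_deg((100, 100), (160, 130))
    print(round(ear, 3), round(tilt, 1), check_frame(ear, 0.3, tilt))
```

In the actual system each alert would also sound the alarm; here the messages are only returned so the decision logic can be tested in isolation.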

The experimental results show that, on real-time videos, the MediaPipe-based system achieved average test accuracies of 96.19% for eye detection and 97.03% for yawn detection. On dataset images, the same approach reached average test accuracies of 99.28% and 99.29% for eye and yawn detection, respectively. Head tilt detection, which was evaluated exclusively on real-time video samples, achieved 100% accuracy. The real-time detection rate is lower than the dataset rate, most likely because of the varying lighting conditions encountered throughout the testing phase, whereas the dataset photographs were taken under ideal lighting; this difference may explain the higher detection rate on the dataset. Even so, the real-time detection rate was satisfactory, and the model's performance is considered satisfactory under optimal to near-optimal and moderate lighting conditions. In conclusion, compared with related studies, the proposed system achieved better results in detecting fatigue.
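The average rates listed for the proposed system in the comparison table (97.74% for blinks and 98.16% for yawns) appear to be the unweighted means of the real-time and dataset accuracies reported here. The short check below assumes equal weighting of the two settings and uses exact decimal arithmetic with half-up rounding so that binary floating-point error cannot affect the second decimal place; `mean_accuracy` is a hypothetical helper name.

```python
from decimal import Decimal, ROUND_HALF_UP


def mean_accuracy(real_time: str, dataset: str) -> Decimal:
    """Unweighted mean of two percentages, rounded half-up to two decimals."""
    mean = (Decimal(real_time) + Decimal(dataset)) / 2
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Accuracies (%) reported for the proposed system: (real-time videos, dataset images).
reported = {
    "eye (blink) detection": ("96.19", "99.28"),
    "yawn detection": ("97.03", "99.29"),
}

for task, (real_time, dataset) in reported.items():
    print(f"{task}: {mean_accuracy(real_time, dataset)}%")
# eye (blink) detection: 97.74%
# yawn detection: 98.16%
```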

Future research will focus primarily on developing our model with a convolutional neural network (CNN). The goal is an optimal algorithm for building an IoT device with advanced software capabilities that provides essential services to users and enhances road safety.