Enhanced Face Recognition using Data Fusion

Author: Alaa Eleyan

Journal: International Journal of Intelligent Systems and Applications (IJISA) @ijisa

Article in issue: 1 vol.5, 2012.

Free access

In this paper we examine the influence of fusion on face recognition performance. In pattern recognition tasks, exploiting different uncorrelated observations and performing fusion at the feature and/or decision level improves overall performance. In the feature fusion approach, we fuse (concatenate) the feature vectors obtained using different feature extractors for the same image. Classification is then performed using different similarity measures. In the decision fusion approach, the decisions from different algorithms are fused using majority voting. The proposed method was tested using face images with different facial expressions and conditions obtained from the ORL and FRAV2D databases. Simulation results show that both the feature and decision fusion approaches significantly outperform the individual algorithms being fused.

Data Fusion, Principal Component Analysis, Discrete Cosine Transform, Local Binary Patterns

Short URL: https://sciup.org/15010358

IDR: 15010358


Published Online December 2012 in MECS

In the last two decades, face recognition has emerged as a significant research area with many possible applications that can help safeguard our everyday lives in many respects [1]. Generally, face representation falls into two categories. The first category is the global or appearance-based approach, which uses holistic texture features and is applied to the whole face or a specific region of it. The second category is the feature-based or component-based approach, which uses the geometric relationships among facial features such as the mouth, nose, and eyes. Many attempts to use data fusion for improving face recognition performance have been introduced in recent years [2][3][4][5]. In [6], the authors tried to overcome the small sample size problem by combining multiclassifier fusion with the RBPCA MaxLike approach, which uses block-based principal component analysis (BPCA). Kisku et al. [7] applied Dempster-Shafer decision theory to fuse the global and local matching scores they obtained using SIFT [8] features.

The idea behind this paper is to use data fusion to improve the performance of a face recognition system. Two fusion approaches are used. In the feature fusion approach, we concatenate the three feature vectors generated using the principal component analysis, discrete cosine transform and local binary patterns algorithms. The new feature vector is then passed to a similarity measure classifier. In the decision fusion approach, the feature vectors generated by the three algorithms are fed to classifiers separately and the decisions are fused using majority voting. Experiments with different scenarios are carried out on two databases, namely the ORL database [9] and the FRAV2D database [10].

The paper is organized as follows: Section II lists the feature extraction algorithms used. Section III discusses the data fusion approaches, while Section IV presents the structure and discussion of the experiments. Section V concludes the paper.


II. Feature Extraction

Feature extraction is a crucial stage of data preparation for later processing such as detection, estimation and recognition. It is one of the main factors determining the robustness and performance of the system that will use those features. It is important to choose the feature extractors carefully depending on the desired application. As a pattern often contains redundant information, mapping it to a feature vector can remove this redundancy while preserving most of the intrinsic information content of the pattern. The extracted features play a major role in distinguishing input patterns.

In this work, we employ features derived from principal component analysis (PCA) [11][12], the discrete cosine transform (DCT) [13][14][15] and local binary patterns (LBP) [16][17]. The idea is to use diverse algorithms so that the most salient features are extracted from the face images. For the mathematical models of these three algorithms, readers should refer to the corresponding references cited above.


III. Data Fusion


3.1 Feature Fusion

The feature vectors extracted using the various algorithms are fused to achieve possibly higher recognition rates. In this work, we investigated two schemes: fusing the PCA, DCT and LBP feature vectors extracted from the face images, and fusing the classification decisions obtained separately from the three feature extractors.

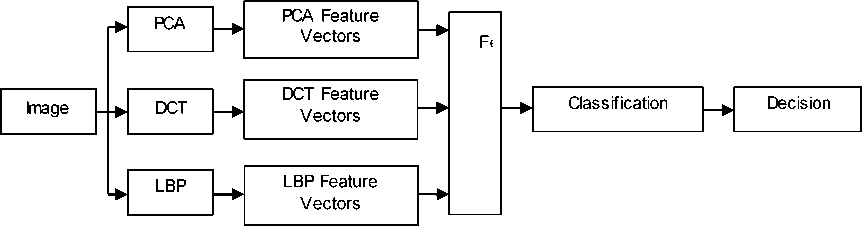

Fig. 1: Block diagram for feature fusion

In the feature fusion scheme, feature extraction is performed using the PCA, DCT and LBP algorithms. The feature vectors extracted by these algorithms are concatenated to construct a new feature vector to be used for classification, as shown in Figure 1.
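The concatenation step can be sketched in a few lines of Python. This is a minimal illustration and not the author's implementation: it assumes each feature vector is scaled to unit Euclidean norm before concatenation and that the concatenated vector is scaled once more, and the function names `l2_normalize` and `fuse_features` as well as the toy numbers are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean (L2) norm; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0.0:
        return list(vec)
    return [v / norm for v in vec]


def fuse_features(f_pca, f_dct, f_lbp):
    """Concatenate three per-image feature vectors after normalizing each one."""
    # Normalizing first keeps an extractor with large raw values from dominating.
    fused = l2_normalize(f_pca) + l2_normalize(f_dct) + l2_normalize(f_lbp)
    # Assumed extra step: rescale the concatenated vector to unit norm as well.
    return l2_normalize(fused)


# Toy vectors with very different value ranges (hypothetical numbers).
f_pca = [3.0, 4.0]
f_dct = [100.0, 0.0, 0.0]
f_lbp = [0.0, 0.5]
fused = fuse_features(f_pca, f_dct, f_lbp)
```

After normalization each of the three parts contributes the same energy to the fused vector, regardless of the raw scale of the extractor that produced it.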

The feature vectors are extracted using three feature extraction algorithms, namely, PCA, DCT and LBP. In feature fusion scheme, a feature vector of face image is formed by concatenating the extracted feature vectors using the previously mentioned algorithms. Assuming F 1 , F 2 and F 3 are the feature vectors generated using PCA, DCT and LBP algorithms, respectively. The feature vectors are defined as follows:

= [i#(1)

Vdct = [Гм],

- = [й],

-

V- . = [VpСЛ V DCT V LBP]

-

3.2 Decision Fusion

||[l/pCA DeTT VLBP]H’ where ||.|| is the second norm. Since the ranges of the values ın the feature vectors extracted from the three different algorithms are not same, the feature vectors F1, F2 and F3 are normalized as in (1)-(4), respectively, to make sure that the influence of the three different algorithms to the feature vectors are similarly weighted. FFusion is the final feature vector generated by concatenating the three feature vectors obtained using the three feature extraction algorithms.

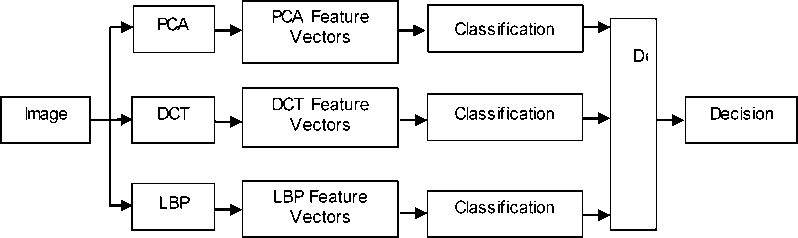

In decision fusion, each of the previously mentioned feature extraction algorithms is run separately for face classification. Decisions coming from the three algorithms are compared and majority voting is applied to obtain the final decision. Block diagram of the system is shown in Figure 2.

-

IV. Experimental Results and Analysis4.1 Datasets

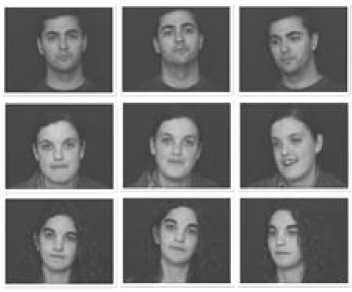

We evaluated our proposed system by carrying out extensive experiments using two face databases. ORL face database is the first database we experimented with. It consists of 40 people with 10 images acquired for each one with different facial expressions and illumination variations.

FRAV2D database is the second database that is used. It comprises 109 people, with 32 images each. It includes frontal images with different head orientations, facial expressions and occlusions. In our experiments, we used a subset of the database consisting of 60 people with 10 images each with no overlap between the training and test face images. Face images in Figure 3 are examples from both databases.

Fig. 2: Block diagram for decision fusion

(a)

(b)

Fig. 3: Face examples from: (a) ORL database (b) FRAV2D database

4.2 Similarity Measures

The similarity measures used in our experiments to evaluate the efficiency of the different representation and recognition methods are the L1 distance measure, δL1, the L2 distance measure, δL2, and the cosine similarity measure, δcos, which are defined as follows [18]:

$$\delta_{L_1}(\mathbf{x}, \mathbf{y}) = \sum_{n} |x_n - y_n|, \tag{5}$$

$$\delta_{L_2}(\mathbf{x}, \mathbf{y}) = (\mathbf{x} - \mathbf{y})^{T} (\mathbf{x} - \mathbf{y}), \tag{6}$$

$$\delta_{\cos}(\mathbf{x}, \mathbf{y}) = \frac{\mathbf{x}^{T} \mathbf{y}}{\|\mathbf{x}\| \, \|\mathbf{y}\|}. \tag{7}$$

In the face recognition experiments, three similarity measures are used, namely the Manhattan (L1) distance, the Euclidean (L2) distance, and the cosine (Cos) distance. Several experiments are carried out, as shown in the following sections. First, we implemented the face recognition system using the three feature extraction algorithms separately, without fusion, on both the ORL and FRAV2D databases. These results are used as a baseline for comparison with the results obtained in the other scenarios, where feature and decision fusion are used.

4.4 Experiments using Separated Algorithms

In these experiments we implemented the PCA, DCT, and LBP algorithms for face recognition separately. Three similarity measures are used as classifiers. The number of training images was varied from 1 to 9 out of 10 images per person. Table 1 and Table 2 show the results obtained using the ORL and FRAV2D databases, respectively.

4.5 Experiments using Feature Fusion

In this section, we implemented the feature fusion approach by concatenating the three feature vectors obtained from the PCA, DCT, and LBP algorithms to form a new feature vector. The new feature vector is then passed to the classifier, which in this case is one of the similarity measures. Again, the number of training images was varied from 1 to 9 out of 10 images per person. Table 3 shows the results using the ORL database, while Table 4 shows the results using the FRAV2D database.
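Using a similarity measure as a classifier can be sketched as a nearest-neighbour search over the training (gallery) vectors. The sketch below is an illustration under assumptions rather than the author's code: it assumes the test image receives the label of the single closest gallery vector, that the L2 measure is the squared Euclidean distance, and that the cosine measure is a similarity to be maximized. The function names and the toy gallery are hypothetical.

```python
import math


def l1_distance(x, y):
    """Manhattan distance: sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(x, y))


def l2_distance(x, y):
    """Squared Euclidean distance, (x - y)^T (x - y)."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def cos_similarity(x, y):
    """Cosine of the angle between x and y; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0


def classify(probe, gallery, measure="L1"):
    """Return the label of the gallery vector that best matches the probe.

    gallery is a list of (label, feature_vector) pairs.
    """
    if measure == "L1":
        return min(gallery, key=lambda item: l1_distance(probe, item[1]))[0]
    if measure == "L2":
        return min(gallery, key=lambda item: l2_distance(probe, item[1]))[0]
    if measure == "Cos":
        # Cosine is a similarity, so the best match has the largest value.
        return max(gallery, key=lambda item: cos_similarity(probe, item[1]))[0]
    raise ValueError("measure must be 'L1', 'L2' or 'Cos'")


# Toy gallery with one training vector per subject (hypothetical numbers).
gallery = [("s1", [1.0, 0.0]), ("s2", [0.0, 1.0])]
probe = [0.9, 0.2]
labels = {m: classify(probe, gallery, m) for m in ("L1", "L2", "Cos")}
```

In this toy case all three measures assign the probe to subject `s1`; on real feature vectors they can disagree, which is why the tables report each measure separately.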

Table 1: Experimental results on ORL database using PCA, DCT and LBP separately with different similarity measures

Number of training images per person (columns 1 to 9); recognition rate in %.

| Algorithm | Similarity Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| PCA | L1 | 62.58 | 78.06 | 83.50 | 87.17 | 88.35 | 90.13 | 91.75 | 92.13 | 91.25 |
| PCA | L2 | 63.94 | 79.06 | 85.04 | 89.46 | 92.10 | 93.69 | 96.25 | 95.63 | 95.75 |
| PCA | Cos | 64.94 | 80.19 | 86.32 | 91.04 | 93.50 | 94.88 | 96.75 | 95.38 | 95.50 |
| DCT | L1 | 71.94 | 82.19 | 85.36 | 89.58 | 90.50 | 96.25 | 95.00 | 96.25 | 92.50 |
| DCT | L2 | 71.94 | 82.81 | 85.71 | 88.33 | 90.50 | 96.25 | 95.83 | 96.25 | 95.00 |
| DCT | Cos | 71.94 | 82.81 | 85.71 | 88.33 | 90.50 | 96.25 | 95.83 | 96.25 | 95.00 |
| LBP | L1 | 57.50 | 68.13 | 72.50 | 75.42 | 84.00 | 84.38 | 88.33 | 91.25 | 97.50 |
| LBP | L2 | 55.28 | 64.38 | 65.71 | 70.83 | 78.00 | 83.13 | 85.00 | 85.00 | 95.00 |
| LBP | Cos | 55.28 | 64.38 | 65.71 | 70.83 | 78.00 | 83.13 | 85.00 | 85.00 | 95.00 |

Table 2: Experimental results on FRAV2D database using PCA, DCT and LBP separately with different similarity measures

Number of training images per person (columns 1 to 9); recognition rate in %.

| Algorithm | Similarity Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| PCA | L1 | 63.07 | 78.77 | 84.59 | 87.33 | 89.99 | 91.23 | 92.20 | 94.08 | 91.69 |
| PCA | L2 | 64.75 | 80.70 | 85.63 | 91.32 | 93.69 | 94.94 | 96.59 | 96.50 | 95.98 |
| PCA | Cos | 65.14 | 80.22 | 87.81 | 92.59 | 94.79 | 96.05 | 97.21 | 95.60 | 96.09 |
| DCT | L1 | 72.21 | 82.27 | 85.74 | 90.56 | 91.26 | 96.67 | 95.87 | 96.77 | 93.14 |
| DCT | L2 | 73.86 | 83.15 | 87.09 | 89.21 | 92.12 | 96.85 | 96.46 | 97.07 | 95.85 |
| DCT | Cos | 73.86 | 84.11 | 86.08 | 89.23 | 91.57 | 97.19 | 97.68 | 97.44 | 96.01 |
| LBP | L1 | 58.65 | 69.59 | 73.24 | 76.03 | 84.70 | 84.84 | 89.19 | 91.77 | 97.67 |
| LBP | L2 | 55.40 | 65.67 | 66.97 | 71.85 | 79.88 | 84.81 | 85.37 | 86.21 | 95.53 |
| LBP | Cos | 55.75 | 65.28 | 67.27 | 71.85 | 79.75 | 83.51 | 86.81 | 86.42 | 96.60 |

Table 3: Experimental results on ORL database using feature fusion among PCA, DCT and LBP with different similarity measures

Number of training images per person (columns 1 to 9); recognition rate in %.

| Similarity Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| L1 | 81.75 | 91.59 | 93.18 | 94.04 | 96.70 | 96.88 | 97.83 | 97.38 | 98.50 |
| L2 | 79.86 | 88.47 | 90.54 | 91.67 | 94.50 | 96.13 | 97.17 | 96.63 | 97.25 |
| Cos | 80.03 | 88.59 | 90.57 | 91.75 | 94.60 | 96.38 | 97.25 | 96.88 | 97.50 |

Table 4: Experimental results on FRAV2D database using feature fusion among PCA, DCT and LBP with different similarity measures

Number of training images per person (columns 1 to 9); recognition rate in %.

| Similarity Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| L1 | 84.52 | 92.17 | 94.83 | 94.73 | 98.51 | 97.40 | 98.68 | 97.73 | 99.70 |
| L2 | 83.03 | 89.70 | 92.50 | 92.83 | 96.26 | 97.31 | 97.79 | 97.47 | 98.19 |
| Cos | 83.53 | 89.12 | 92.03 | 91.97 | 96.24 | 96.42 | 97.57 | 97.06 | 98.89 |

Table 5: Experimental results on ORL database using decision fusion among PCA, DCT and LBP with different similarity measures

Number of training images per person (columns 1 to 9); recognition rate in %.

| Similarity Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| L1 | 73.00 | 86.72 | 90.68 | 93.79 | 96.20 | 97.88 | 98.00 | 98.13 | 98.25 |
| L2 | 73.42 | 86.31 | 89.68 | 93.54 | 96.25 | 98.00 | 98.83 | 98.38 | 99.00 |
| Cos | 73.86 | 86.72 | 90.04 | 93.92 | 96.75 | 98.13 | 98.92 | 98.00 | 99.00 |


4.6 Experiments using Decision Fusion

Unlike in the previous section, here we implemented the decision fusion approach by applying the PCA, DCT, and LBP algorithms separately. The three resulting feature vectors are passed to the classifiers. After classification, the decisions from the different classifiers are fused using majority voting. As in the previous scenarios, the number of training images was varied from 1 to 9 out of 10 images per person. Table 5 and Table 6 show the results using the ORL and FRAV2D databases, respectively.
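The voting step can be sketched as follows. This is a minimal illustration, not the author's implementation. With three classifiers, either at least two agree or all three disagree; the text does not say how the all-disagree case is resolved, so the sketch assumes a fallback to one designated classifier. The name `majority_vote` and the `fallback_index` parameter are hypothetical.

```python
from collections import Counter


def majority_vote(decisions, fallback_index=0):
    """Fuse per-classifier labels by strict majority.

    decisions is a list of predicted labels, one per classifier
    (for example from PCA, DCT and LBP, in that order).
    """
    label, votes = Counter(decisions).most_common(1)[0]
    if votes > len(decisions) / 2:
        return label
    # Assumed tie-break: no strict majority, so trust one chosen classifier.
    return decisions[fallback_index]


# Two of three classifiers agree on subject "s3".
agreed = majority_vote(["s3", "s7", "s3"])
# All three disagree, so the assumed fallback picks the first classifier's label.
split = majority_vote(["s1", "s2", "s3"])
```

In practice the fallback classifier could be the one with the best individual recognition rate; that choice is a design decision left open here.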

From the comparison in Table 7, it is clear that fusion improved the overall performance of the face recognition system. Implementing the three algorithms separately gave 93.5% as the highest result, using PCA with the Cos distance, whereas feature fusion increased the performance to 96.7% using the L1 distance.

Table 6: Experimental results on FRAV2D database using decision fusion among PCA, DCT and LBP with different similarity measures

Number of training images per person (columns 1 to 9); recognition rate in %.

| Similarity Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| L1 | 78.64 | 87.39 | 91.37 | 94.02 | 96.54 | 97.88 | 98.92 | 98.55 | 99.78 |
| L2 | 79.37 | 86.60 | 89.75 | 94.21 | 97.03 | 98.60 | 98.83 | 98.84 | 100 |
| Cos | 79.10 | 87.39 | 90.29 | 94.76 | 97.43 | 98.51 | 99.38 | 98.77 | 100 |

Table 7: Comparison among the different approaches using ORL database with 5 training / 5 testing images per person

| Algorithms | L1 | L2 | Cos |
|---|---|---|---|
| PCA | 88.35 | 92.10 | 93.50 |
| DCT | 90.50 | 90.50 | 90.50 |
| LBP | 84.00 | 78.00 | 78.00 |
| Feature Fusion | 96.70 | 94.50 | 94.60 |
| Decision Fusion | 96.20 | 96.25 | 96.75 |

Decision fusion raised the best result to 96.75% using the Cos distance. The results in Table 7 were obtained using the ORL database with 5 training and 5 testing images per person.


V. Conclusion

In this paper we introduced the use of data fusion for improving face recognition performance. Two fusion techniques were applied, namely feature fusion and decision fusion. Experimental results show the benefit of using such techniques in the face recognition problem. Both techniques show promising results, but more sophisticated experiments may lead us to find out which technique is better suited to the face recognition problem. Also, the effect of using different classifiers on system performance can be investigated in future work.

References

[1] W. Zhao, R. Chellappa, A. Rosenfeld and P.J. Phillips, “Face Recognition: A Literature Survey”. ACM Computing Surveys, pp. 399-458, 2003.

[2] F. Al-Osaimi, M. Bennamoun and A. Mian, “Spatially Optimized Data Level Fusion of Texture and Shape for Face Recognition”. To appear in IEEE Trans. on Image Processing, 2011.

[3] M. Vatsa, R. Singh, A. Noore, and A. Ross, “On the Dynamic Selection of Biometric Fusion Algorithms”. IEEE Transactions on Information Forensics and Security, vol. 5, no. 3, pp. 470-479, 2010.

[4] A. Ross and A. K. Jain, “Fusion Techniques in Multibiometric Systems”, in Face Biometrics for Personal Identification. R. I. Hammoud, B. R. Abidi and M. A. Abidi (Eds.), Springer, 2007, pp. 185-212.

[5] A. Ross and A. K. Jain, “Multimodal Human Recognition Systems”, in Multi-Sensor Image Fusion and Its Applications. R.S. Blum and Z. Liu (Eds.), CRC Taylor and Francis, 2006, pp. 289-301.

[6] D.H.P. Salvadeo, N.D.A. Mascarenhas, J. Moreira, A.L.M. Levada, D.C. Corrêa, “Improving Face Recognition Performance Using RBPCA MaxLike and Information Fusion”. Computing in Science & Engineering, vol. 13, no. 5, pp. 14-21, 2011.

[7] D.R. Kisku, M. Tistarelli, J.K. Sing, and P. Gupta, “Face Recognition by Fusion of Local and Global Matching Scores using DS Theory: An Evaluation with Uni-Classifier and Multi-Classifier Paradigm”. Computer Vision and Pattern Recognition Workshop, 2009, pp. 60-65.

[8] D. G. Lowe, “Distinctive Image Features From Scale-Invariant Keypoints”. International Journal of Computer Vision, vol. 60, no. 2, pp. 91-110, 2004.

[9] The Olivetti Database; http://www.cam-orl.co.uk/facedatabase.html.

[10] Á. Serrano, I. M. de Diego, C. Conde, E. Cabello, L. Shen, and L. Bai, “Influence of Wavelet Frequency and Orientation in an SVM Based Parallel Gabor PCA Face Verification System”, H. Yin et al. (Eds.): IDEAL Conference 2007, Springer-Verlag LNCS 4881, pp. 219-228, 2007.

[11] M. Turk, A. Pentland, “Eigenfaces for Recognition”. Journal of Cognitive Neuroscience, vol. 3, pp. 71-86, 1991.

[12] M. Kirby and L. Sirovich, “Application of the Karhunen-Loeve Procedure for the Characterization of Human Faces”. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 12, no. 1, pp. 103-108, 1990.

[13] Fabrizia M. de S. Matos, Leonardo V. Batista, and JanKees v. d. Poel, “Face Recognition Using DCT Coefficients Selection”. In Proceedings of the 2008 ACM Symposium on Applied Computing, 2008, pp. 1753-1757.

[14] C. Podilchuk and X. Zhang, “Face Recognition using DCT-Based Feature Vectors”, IEEE International Conference on Acoustics, Speech, and Signal Processing, 1996, vol. 4, pp. 2144-2147.

[15] Z. M. Hafed and M. D. Levine, “Face Recognition Using the Discrete Cosine Transform”. International Journal of Computer Vision, vol. 43, no. 3, 2001.

[16] T. Ahonen, A. Hadid, and M. Pietikainen, “Face Recognition with Local Binary Patterns”. In European Conference on Computer Vision, 2004, pp. 469-481.

[17] T. Ojala, M. Pietikainen, T. Maenpaa, “Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns”. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 7, pp. 971-987, 2002.

[18] V. Perlibakas, “Distance Measures for PCA-Based Face Recognition”. Pattern Recognition Letters, vol. 25, no. 6, pp. 711-724, 2004.