Enterprise architecture measurement: an extended systematic mapping study

Authors: Ammar Abdallah, Alain Abran

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: Vol. 11, No. 9, 2019.


A systematic mapping study (SMS) of proposed enterprise architecture (EA) measurement solutions was undertaken to provide an in-depth understanding of the claimed achievements and limitations of evidence-based EA research. This SMS reports on 22 primary studies on EA measurement solutions published up to the end of 2018. The primary studies were analyzed thematically and classified according to ten (10) mapping questions. These include, but are not limited to, the positioning of EA measurement solutions within EA schools of thought, the consistency or inconsistency of the terms used by authors in EA measurement research, and references to the ISO 15939 measurement information model. Key findings reveal three points. Current research on EA measurement solutions focuses on the “enterprise IT architecting” school of thought. It does not use the rigorous terminology found in science and engineering. It also shows limited adoption of knowledge from other disciplines. The paper concludes with new perspectives for future research avenues in EA measurement.

Keywords: Enterprise architecture, EA measurement terminology, EA schools of thought, EA measurement solutions, EA concepts, EA project life cycle


DOI: 10.5815/ijitcs.2019.09.02


Published Online September 2019 in MECS DOI: 10.5815/ijitcs.2019.09.02


I. Introduction

Today's organizations operate in fast-paced business environments shaped by emerging technologies and changing business needs [1]. These challenges increase the pressure on organizations to survive, adapt, and integrate with change [1,2]. Enterprise architecture (EA) was introduced in 1987 to manage and align technology with business needs, improve enterprise integration, and reduce the gap between business and information technology (IT) [2]. The early years of EA research focused on understanding EA, including its claimed benefits [3]. This research created the expectation that EA would help improve decision-making, reduce IT costs, improve business processes, and enhance the re-use of resources [4-8].

However, EA comes at a price [9,10], and organizations planning to invest in it must be able to identify and quantify the expected benefits. Evaluating EA is therefore necessary to ensure that organizations are reaping the expected benefits. For example, [66] proposed an EA value measurement framework to measure the expected EA value. Others have suggested a “balanced scorecard”, a multi-perspective framework (financial, customer, internal, and learning perspectives), for justifying investments in EA [11,12].

Given its considerable expected benefits, interest in EA has increased in both academia and industry. The systematic literature review (SLR) in [3] reports on growth between 1987 and 2015. Over that period, the number of peer-reviewed publications grew by 21% per year on average, while the total number of publications grew by 3%.

Furthermore, in an attempt to facilitate the realization of EA benefits and value for organizations, other studies have proposed measurement solutions for EA from various angles including, but not limited to, EA quality [7], EA complexity [8], EA ROI [9], and EA risk [10]. However, what is the quality of these EA measurement solutions in terms of measurement and metrology criteria?

When the number of publications in a research field grows, it is useful and necessary to characterize the existing body of knowledge in order to identify the state of the art, including gaps and biases. For example, medical research has built a solid body of work that provides such summaries and classification schemes through evidence-based research, namely systematic mapping studies (SMS) and systematic literature reviews (SLR) [11].

The study reported in this paper had three objectives: to explore the research on EA measurement, to classify related studies based on measurement criteria, and to identify gaps and limitations of EA measurement research to date. The motivation is to extend the body of knowledge on the state of the art in EA measurement research and to help shape future research avenues.

The rest of this paper is structured as follows. Section II presents the related work. Section III presents the research methodology of this systematic mapping study (SMS). Section IV details the answers to the mapping questions selected for this study. Section V presents a discussion of findings and Section VI, the conclusions and future research avenues.


II. Related Work

The SLR in [12] distinguishes between expected EA values that are supported by empirical evidence and those that are not, which it refers to as “myths”. It synthesizes the evidence-supported EA values and five myths, and points out a number of weaknesses in EA research.

The EA concept itself is often characterized ambiguously, as highlighted in [13], which details the challenges arising from a lack of common understanding of EA definitions and related factors. The SLR in [14] focuses on EA in the government sector as a means to improve e-government service delivery. It reports on EA readiness measurement mechanisms and factors that could assist government agencies in measuring their EA readiness.

Apart from evidence-based research on understanding and conceptualization of EA, [15] focused on EA and visual analytics. Due to the dynamic changes in businesses and IT, enterprises need to adapt quickly, and EA analysis is one of the methods that stakeholders use in enterprise transformation. Since EA elements have diverse relations, EA analysis is highly complex. Therefore, [15] conducted an SLR in order to investigate the state-of-the-art on visual analytics in enterprise architecture management (EAM).

The SMS in [2] identified the role of EA in enterprise integration and reported on the state of the art on the subject, including gaps and limitations.

Of all the SLR-SMS on EA, only three explicitly addressed EA measurement and EA evaluation [16-18]. Furthermore, the existing EA evaluation methods focused on business and IT alignment or on architecture maturity while ignoring all other parts of EA implementation [17]. All other SLR-SMS focused on proposing EA measurement solutions without an analysis of the corresponding limitations and gaps.

In this paper, we report on an extended version of our initial SMS study in [18] that goes beyond the limitations of the related work on EA measurement research. In addition, we investigate and analyze a number of additional research questions, including:

• The positioning of proposed EA measurement solutions within the EA project life cycle.

• A classification of the research type (e.g., evaluation research) of the proposed EA measurement solutions.

• A classification of EA measurement techniques used or adopted from other disciplines to support the design of EA measurement solutions.

• An extended analysis of the consistency or inconsistency of the terms used by authors in EA measurement research (e.g., measurement, evaluation, assessment, analysis).

• An analysis of the proposed measurement solutions based on the ISO 15939 measurement information model.

III. Research Methodology

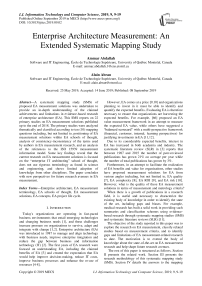

While an SLR explores and evaluates primary studies based on best practices [11], an SMS classifies the primary studies into specific categories according to the selected mapping questions in order to identify research themes and explain the structure of the literature. In this SMS, the research objective was to provide classification schemes grounded in evidence-based research. We followed the SMS guidelines of [19-21] on mapping questions, search strategy, study selection and data extraction – see Fig.1. The outcome was a set of 22 relevant primary studies, which were used to answer the mapping questions.

Fig.1. Search methodology to select primary studies


A. Mapping questions

The mapping questions (MQ) selected for this SMS together with their related objectives are given in Table 1.


B. Search & selection processes

Selecting the relevant electronic databases is one of the main steps toward answering the mapping questions. Our SMS searched six databases (AIS, Compendex, IEEE, Inspec, Scopus, and SpringerLink) using nine search strings built from combinations of keywords – see Table 2.

The search and selection processes were divided into the following phases:

Phase 1: The nine search strings were first applied with filters that limited the search to the titles and abstracts of journal papers. The search was limited to journals because researchers commonly present preliminary results at conferences and later publish the detailed and complete analysis in journals. Exploring the databases with the search strings in Table 2 identified 771 candidate primary studies.

Table 1. Mapping questions and objectives. Revised and extended from [18]

| ID | Mapping Question | Objective |
|---|---|---|
| MQ1 | What are the sources of publication on EA measurement? | To discover where EA measurement research is published. |
| MQ2 | How has publication on EA measurement changed over time? | To discover the timeline of EA measurement publications. |
| MQ3 | Which "EA schools of thought" have addressed research in EA measurement? | To help identify which EA schools of thought have addressed research in EA measurement, and which have not, thereby leaving aspects of EA unexamined. |
| MQ4 | Where are EA measurement solutions helpful in the EA project life cycle? | To discover where the proposed EA measurement solution can be used in the EA project life cycle. This will help researchers design EA measurement solutions capable of assisting organizations to measure EA throughout the EA project life cycle. |
| MQ5 | What were the research intentions of EA measurement research? | To discover the intentions and motivations behind conducting research in EA measurement. This will provide the research community with insights on current research directions. |
| MQ6 | What are the most popular research types in EA measurement literature? | To discover which research types have been most frequently used, and to determine gaps and candidate avenues for future research. |
| MQ7 | What are the most frequently adopted foundations from other fields (disciplines), including the EA field, in EA measurement research? | To provide a classification scheme of the techniques used to date to design or propose EA measurement solutions from other fields, including the EA field. This will provide an understanding of how EA measurement solutions have been designed and allow determination of gaps and candidate avenues for future research. |
| MQ8 | What are the EA measurement solutions described in the EA literature, and what is the terminology most used? | To identify the EA concepts or attributes targeted for measurement and the terminology most used to describe EA measurement solutions. |
| MQ9 | What measurement terms are most frequently used in EA measurement research? Are measurement, evaluation, assessment and analysis used interchangeably? | To recognize the most frequently used measurement-related semantics in EA measurement literature. In addition, to identify consistent or inconsistent usage of measurement-related semantics (interchangeability). It is expected that there is no clear definition of each term in the primary studies, nor a differentiation between the distinct terms. |
| MQ10 | Is EA measurement research referencing the ISO 15939 standard on software process measurement? | To identify the presence of ISO 15939 within the text and references of the primary studies as an indicator of the authors' awareness of the measurement terms consensually used in science and engineering. |

Phase 2: The 771 candidate studies could contain duplicates among the six databases, both for each search string and overall. Pivot tables were therefore used to remove duplicates, which reduced the set to 233 unique candidate studies.
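The paper used pivot tables for this step and does not describe the matching rule. As an illustration only, the same deduplication can be sketched in Python under two assumptions of ours. First, each search hit is a record with hypothetical `database`, `search_string`, and `title` fields. Second, two hits count as duplicates when their titles match after lowercasing and collapsing punctuation. The records below are placeholders, not actual search results.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse runs of punctuation/whitespace to one space."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(hits: list[dict]) -> list[dict]:
    """Keep the first hit for each normalized title (hypothetical matching rule)."""
    unique: dict[str, dict] = {}
    for hit in hits:
        unique.setdefault(normalize_title(hit["title"]), hit)
    return list(unique.values())


# Placeholder hits: one paper returned by two databases and by two search strings.
hits = [
    {"database": "Scopus", "search_string": "String 1", "title": "Measuring EA Value"},
    {"database": "Inspec", "search_string": "String 1", "title": "Measuring EA value."},
    {"database": "Scopus", "search_string": "String 3", "title": "Measuring  EA value"},
    {"database": "IEEE", "search_string": "String 5", "title": "EA Quality Attributes"},
]
print(len(hits), "->", len(deduplicate(hits)))  # 4 -> 2
```

Matching on normalized titles is the simplest possible rule. Where a DOI is available, it would be a more reliable key.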

Table 2. Search strings - revised from [18]

| String ID | Search String |
|---|---|
| String 1 | "Enterprise architecture" AND (measure OR evaluate OR assess) |
| String 2 | "Enterprise Architecture" AND Scorecard |
| String 3 | "Enterprise Architecture" AND (benefit OR value OR impact) |
| String 4 | "Enterprise architecture" AND (success OR effectiveness) |
| String 5 | "Enterprise architecture" AND Quality |
| String 6 | "Enterprise architecture" AND Maturity |
| String 7 | "Enterprise architecture" AND Realization |
| String 8 | "Enterprise architecture" AND "Quantitative analysis" |
| String 9 | "Enterprise architecture" AND Performance |

Phase 3: To select the most relevant primary studies from the 233 candidates, the following inclusion and exclusion criteria were defined.

Inclusion: primary studies meeting the following criteria were selected:

• The exact keyword “enterprise architecture” is present in the title of the primary study;

• The exact keyword “measurement” (or “evaluation”, “assessment”, or “analysis”) is present in the title and/or the full text of the primary study;

• Only the most recent publication is retained for a study reported more than once;

• The primary study discusses an EA measurement solution; this can be, but is not limited to, a method, theory, framework, or tool.

Exclusion: primary studies not meeting the inclusion criteria above were removed.
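The screening rules can be sketched in Python. This is a sketch under stated assumptions, not the authors' procedure. Each candidate is assumed to be a hypothetical record with `study_id`, `year`, `title`, `full_text`, and `discusses_solution` fields. Keyword matching is assumed to be plain case-insensitive substring search. The `discusses_solution` flag stands in for the reviewers' manual judgement on the last criterion.

```python
MEASUREMENT_TERMS = ("measurement", "evaluation", "assessment", "analysis")


def meets_keyword_criteria(paper: dict) -> bool:
    """First two inclusion criteria, checked by case-insensitive substring search."""
    title = paper["title"].lower()
    full_text = paper["full_text"].lower()
    has_ea_in_title = "enterprise architecture" in title
    has_measurement_term = any(t in title or t in full_text for t in MEASUREMENT_TERMS)
    return has_ea_in_title and has_measurement_term


def screen(papers: list[dict]) -> list[dict]:
    """Keep papers meeting all inclusion criteria; everything else is excluded."""
    latest: dict[str, dict] = {}
    for paper in papers:
        # 'discusses_solution' stands in for the reviewers' manual judgement.
        if not (meets_keyword_criteria(paper) and paper["discusses_solution"]):
            continue
        # Keep only the most recent publication of a study reported more than once.
        kept = latest.get(paper["study_id"])
        if kept is None or paper["year"] > kept["year"]:
            latest[paper["study_id"]] = paper
    return list(latest.values())


# Hypothetical candidates: S1 was published twice, S2 lacks EA in its title.
papers = [
    {"study_id": "S1", "year": 2012, "title": "Enterprise architecture evaluation",
     "full_text": "...", "discusses_solution": True},
    {"study_id": "S1", "year": 2015, "title": "Enterprise Architecture evaluation revisited",
     "full_text": "...", "discusses_solution": True},
    {"study_id": "S2", "year": 2014, "title": "IT governance measurement",
     "full_text": "...", "discusses_solution": True},
]
print([(p["study_id"], p["year"]) for p in screen(papers)])  # [('S1', 2015)]
```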

Phase 4: An additional scan of the databases then added two (2) conference papers. The selection criteria (high-quality criteria) for these additional papers were as follows:

• The paper provides answers to all MQs.

• The paper mentions ISO 15939 or proposes measurement units with a mathematical basis.

C. Data extraction through coding

Data extraction is a key step in an SMS. Here, the type of mapping questions and the objectives we developed led us to analyze documents rich in textual content. These documents are the raw data to be interpreted during data extraction and analysis. Thematic analysis, which plays a significant role in data reduction, was therefore selected as the analysis tool [20]. Thematic analysis starts with reading the primary studies (raw data) and then assigning codes (labels) to the text. These codes can be inductive (data-driven) and/or deductive (from a pre-defined list). Assigning codes is an iterative process in which the researcher can create additional codes and/or merge existing ones. Ultimately, the codes allow themes to be created [21].

A theme is a coherent integration that captures something important about the data with respect to the research questions. It reduces a large amount of text to smaller units, which allows the researcher to build a level of abstraction. A theme is thus an expression of the latent content of the text [22].
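The code-to-theme step described above can be illustrated with a small Python sketch. It uses a hypothetical data structure, not the coders' actual codebook. `merges` records codes folded into other codes during iterative coding (one level deep only). `code_to_theme` assigns each surviving code to a theme. The study IDs and code labels are invented for the example.

```python
from collections import defaultdict


def build_themes(coded_segments, merges, code_to_theme):
    """Group study IDs by theme.

    coded_segments: (study_id, code) pairs produced while reading the studies.
    merges: maps a code merged away to the code that absorbed it (single level).
    code_to_theme: maps each surviving code to the theme it supports.
    """
    themes = defaultdict(set)
    for study_id, code in coded_segments:
        code = merges.get(code, code)  # apply merges from later coding rounds
        theme = code_to_theme.get(code)
        if theme is not None:  # codes not yet assigned to a theme are skipped
            themes[theme].add(study_id)
    return {theme: sorted(ids) for theme, ids in themes.items()}


segments = [
    ("P1", "explains EA value"),
    ("P2", "shows how EA adds value"),
    ("P3", "ranks EA initiatives"),
]
merges = {"shows how EA adds value": "explains EA value"}
code_to_theme = {
    "explains EA value": "organizational understanding of EA",
    "ranks EA initiatives": "decision-making on EA initiatives",
}
print(build_themes(segments, merges, code_to_theme))
# {'organizational understanding of EA': ['P1', 'P2'], 'decision-making on EA initiatives': ['P3']}
```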

Data extraction for MQ1 and MQ2 was based on a predefined list of codes extracted directly from the databases: article title, year of publication, and source of publication. For MQ1 and MQ2 no effort was made to read the latent meanings behind the text.

Data extraction for MQ3 was based on pre-defined codes to determine the type of EA, using the taxonomy of the three EA schools of thought in [23]:

• Enterprise IT architecting: “EA is about aligning enterprise IT assets (through strategy, design, and management) to effectively execute the business strategy and various operations using proper IT capabilities”.

• Enterprise integrating: “EA is about designing all facets of the enterprise. The goal is to execute the enterprise strategy by maximizing overall coherency between all of its facets—including IT.”


• Enterprise ecological adaption: “EA is about fostering organizational learning by designing all facets of the enterprise -including its relationship to its environment - to enable innovation and system-in-environment adaption.”

Data extraction for MQ4 was based on a pre-defined list of codes to determine where the EA measurement solution can be used within an EA project life cycle. For this SMS, we adopted the EA project life cycle of [6] where the phases provide traceability of the EA project, and provide information about where in the cycle EA value may be created:

• Development: In the development phase, EA is developed and maintained. This phase corresponds to the Architecture Development Method (ADM) phases of architecture vision, business architecture, information systems architectures, and technology architecture.

• Realization (implementation): In the realization phase, projects are defined and carried out to implement the changes defined in the EA. This phase corresponds to the ADM phases of opportunities and solutions, migration planning, and implementation governance.

• Use: After implementation, the changes are in place in the organization and the promised benefits should materialize. This corresponds to the architecture change management phase of the TOGAF 9.1 ADM.

Data extraction for MQ5 was based on data-driven codes. The aim was to investigate the intentions behind EA measurement research and to gain insights into its relevance to stakeholders. This information is valuable for outlining research directions. The codes for this question were therefore extracted by identifying phrases in the primary studies that indicate research intentions. Examples include, but are not limited to, “the key contribution is...” and “this investigation helps organizations to...”.

Data extraction for MQ6 was based on a pre-defined list of codes. The objective of MQ6 was to provide the EA community with a classification scheme of the most popular research types in the EA measurement literature. The research-type classification and criteria of [11] were adopted:

• Validation research: A primary study where the measurement solution is not yet implemented in practice with an industry partner. The study nevertheless uses statistics, hypothesis testing, or regression analysis to test a model or to validate a research hypothesis related to the EA measurement solution.

• Evaluation research: A primary study that has an industry partner and implements the EA measurement solution in practice with that partner.

• Solution proposal: A primary study that has no industry partner and explains the potential benefits of the EA measurement solution. It uses no statistics, hypothesis testing, or regression analysis, and the solution is not yet implemented in practice.

• Philosophical research: A primary study that provides a taxonomy of EA measurement research, structures the EA measurement research field, and offers a new way of looking at and understanding the EA measurement literature.

Data extraction for MQ7 was based on data-driven codes. The aim was to classify the foundations used to design the EA measurement solutions, whether taken from other fields (disciplines) of engineering or business or from the EA field itself.

Data extraction for MQ8 was based on data-driven codes. The aim was to identify the terminology most frequently used to describe EA measurement solutions and the EA concepts or attributes targeted by each solution. Two levels were investigated:

1. The abstraction level, to find the main concept (EA concept) of the EA measurement solution.

2. The detailed level, to investigate the decomposed EA concept (sub-concept) of the EA measurement solution.

Data extraction for MQ9 was based on a pre-defined list of codes in which measurement, evaluation, assessment, and analysis do not share the same meaning. The following definitions were used:

• Evaluation [24]: The process of determining merit, value, or worth. The six basic components of evaluation are: target, criteria, yardstick (the ideal target against which the real target is compared), data-gathering techniques, synthesis techniques, and the evaluation process.

• Assessment [25]: A procedure that includes initiating the assessment, planning the assessment, briefing, data acquisition (normally through interviews and a review of documents), process rating (the outcome of the assessment), and reporting results.

• Measurement [26]: In the measurement context model and the ISO 15939 measurement information model, measurement can be any of the following: a method of assigning a numerical value to an object, the action of measuring, the result of measurement, or the use of measurement results.
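MQ9 concerns which of these terms each study uses, so a first-pass screen that flags studies mixing several of them can be sketched in Python. This is an assumption-laden illustration, not the authors' procedure. Matching on word stems (e.g., `measur`, `evaluat`) is our choice, and the sample sentence is invented. A flag only marks a study for the coders to check against the definitions above. It does not by itself show that the terms are used interchangeably.

```python
import re

# Stems for the four terms examined in MQ9 (stem matching is our assumption).
TERM_PATTERNS = {
    "measurement": re.compile(r"\bmeasur\w*", re.IGNORECASE),
    "evaluation": re.compile(r"\bevaluat\w*", re.IGNORECASE),
    "assessment": re.compile(r"\bassess\w*", re.IGNORECASE),
    "analysis": re.compile(r"\banaly[sz]\w*", re.IGNORECASE),
}


def term_profile(text: str) -> dict[str, int]:
    """Count occurrences of each term family in a study's text."""
    return {term: len(pattern.findall(text)) for term, pattern in TERM_PATTERNS.items()}


def uses_several_terms(text: str) -> bool:
    """True when two or more term families occur; a cue for manual review only."""
    return sum(1 for count in term_profile(text).values() if count > 0) >= 2


sample = "We assess EA quality. The evaluation shows that measuring EA is hard."
print(term_profile(sample))
# {'measurement': 1, 'evaluation': 1, 'assessment': 1, 'analysis': 0}
print(uses_several_terms(sample))  # True
```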

Data extraction for MQ10 was also based on a pre-defined list of codes. Until recently, the maturity of EA measurement solutions with respect to metrology had not been identified as a qualitative issue in EA measurement research. In particular, it was not clear whether the proposed EA measurement solutions incorporated measurement standards. Through MQ10, we therefore investigated whether the literature on EA measurement referred to the ISO 15939 measurement information model and terminology.

To extract data for MQ10, we used a search tool to look for the words ISO 15939 and/or International Vocabulary of Metrology (VIM) in the list of references of each primary study. The primary studies were classified into two groups:

• Primary studies not using ISO 15939: none of the words (ISO, ISO 15939, and/or VIM) was found in the references.

• Primary studies likely to use ISO 15939: at least one of the words (ISO, ISO 15939, and/or VIM) was present in the list of references. The presence of these words in the reference list does not guarantee that the primary study follows ISO 15939. Rather, it indicates potential alignment with ISO 15939 that requires further investigation and analysis.
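The classification rule above can be sketched in Python. The paper mentions only "a search tool", so the regular expressions, the function name `classify_references`, and the sample reference strings are our assumptions. As the text cautions, a match only marks a study for further analysis. This applies especially to a match on the bare word ISO, which also catches other ISO standards.

```python
import re

# Patterns for the words listed in the text: "ISO", "ISO 15939" and "VIM".
PATTERNS = {
    "ISO": re.compile(r"\bISO\b"),
    "ISO 15939": re.compile(r"\bISO(?:/IEC)?\s*15939\b"),
    "VIM": re.compile(r"\bVIM\b|[Ii]nternational [Vv]ocabulary of [Mm]etrology"),
}


def classify_references(references: str) -> tuple[str, list[str]]:
    """Return the MQ10 class of a reference list and the words that matched."""
    found = [word for word, pattern in PATTERNS.items() if pattern.search(references)]
    label = "likely to use ISO 15939" if found else "not using ISO 15939"
    return label, found


print(classify_references("[12] ISO/IEC 15939:2007, Systems and software engineering, Measurement process."))
# ('likely to use ISO 15939', ['ISO', 'ISO 15939'])
print(classify_references("[3] Kaplan R., Norton D. The balanced scorecard."))
# ('not using ISO 15939', [])
```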


D. Intercoder reliability

We followed the planning guide of [28] for best practices in codebook development and in coding instructions for each mapping question. The goal of the codebook was to make the research results reproducible with a certain level of agreement between coders. In this SMS, two coders were given instructions to code the primary studies and derive codes and themes for the mapping questions. They then coded independently.

To quantify intercoder reliability (i.e., agreement between coders), we used Krippendorff's alpha, following the best practices for calculating alpha described in [27].

To confirm the external validity of this SMS, the classification was validated by recoding a sample of 53 primary studies.

Intercoder reliability is only calculated for the mapping questions involving coder interpretations: MQ3, MQ4, MQ5, MQ6, MQ7, MQ8, and MQ9.

The two coders produced similar coding for MQ3, MQ4, MQ7, MQ8, and MQ9, but their coding differed in places for MQ5 and MQ6. Krippendorff's alpha was therefore calculated to measure the level of agreement on MQ5 and MQ6. Its value of 0.87 indicates high agreement between the coders, the maximum being 1.0.
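For readers unfamiliar with the coefficient, the nominal-data form of Krippendorff's alpha for two coders with no missing codes can be sketched as follows. This is our illustration, not the tool or script the authors used (the paper does not name one). It builds the coincidence matrix o_ck and computes α = 1 − (n − 1)·Σ_{c≠k} o_ck / Σ_{c≠k} n_c·n_k. The research-type labels in the usage example are hypothetical codes for four imaginary studies, not the study's data.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(coder_a: list[str], coder_b: list[str]) -> float:
    """Krippendorff's alpha for nominal codes from two coders, no missing values."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same units")

    # Coincidence matrix: each unit holds two values, so each ordered pair of
    # values from different coders adds 1 / (m_u - 1) = 1 to o[(c, k)].
    o: Counter = Counter()
    for a, b in zip(coder_a, coder_b):
        o[(a, b)] += 1
        o[(b, a)] += 1

    # Marginal totals n_c and grand total n (= 2 x number of units).
    n_c: Counter = Counter()
    for (c, _k), count in o.items():
        n_c[c] += count
    n = sum(n_c.values())

    observed = sum(count for (c, k), count in o.items() if c != k)
    expected = sum(n_c[c] * n_c[k] for c, k in permutations(n_c, 2))
    if expected == 0:
        # Only one category was ever used: alpha is undefined; this sketch returns 1.0.
        return 1.0
    return 1.0 - (n - 1) * observed / expected


# Hypothetical MQ6 research-type codes for four imaginary studies.
coder_1 = ["evaluation", "evaluation", "validation", "validation"]
coder_2 = ["evaluation", "validation", "validation", "validation"]
print(round(krippendorff_alpha_nominal(coder_1, coder_2), 3))  # 0.533
```

One disagreement in four units already pulls alpha well below 1.0 here, because the expected-disagreement term is computed from only eight pairable values. This is why alpha is normally reported over the full set of coded studies.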


IV. Results: Answers to the Mapping Questions

This section presents the coding results (i.e., the answers to the mapping questions) for the 22 primary studies. These answers provide an understanding of the structure of this research area and insights into its limitations and gaps.


A. Answers to MQ1 & MQ2

MQ1: The 22 primary studies have been published in 21 distinct publication sources: two in “Information Systems and e-Business Management” and the rest in 20 separate journals – see APPENDIX A.

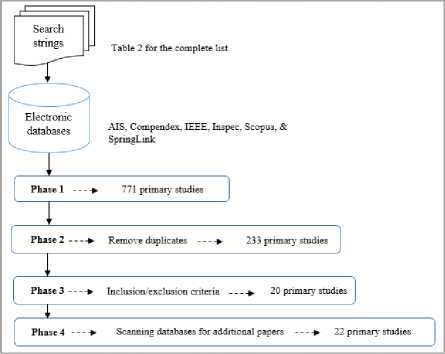

MQ2: Fig.2 shows one to two publications from 2004 to 2010, two to three from 2011 to 2016, and one to three from 2015 to the end of 2018. The trend line shows a slowly increasing publication pattern. Even so, the number of publications on EA measurement remains fairly low over a period of almost 15 years.

Fig.2. Number of publications over time (answers to MQ2)


B. Answers to MQ3

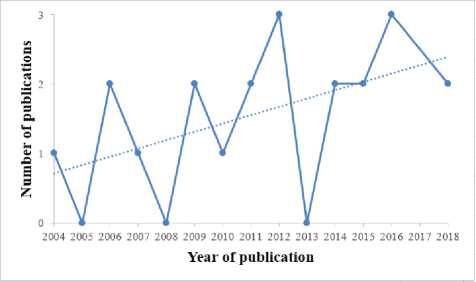

The objective of MQ3 was to investigate and discover which EA schools of thought had addressed EA measurement-related issues. Fig.3 shows that almost 80% of EA measurement research was addressed within the “enterprise IT architecting” school of thought, with much less within the other two EA schools of thought.

Fig.3. EA schools of thought (answers to MQ3)

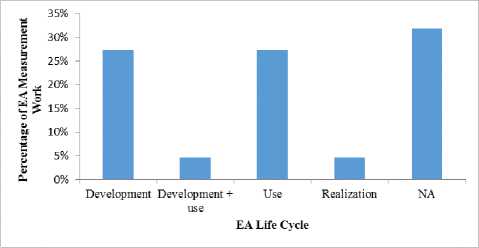

Fig.4. Targeted use of EA measurement solutions within the EA project life cycle (answers to MQ4)


B. Answers to MQ4

The objective of MQ4 was to investigate where within the EA project life cycle a proposed EA measurement solution can be used. Fig.4 shows that:

• 27% were used during the development phase, e.g., before costs and resources are spent on EA.

• 27% support practitioners after EA has been implemented, e.g., during EA use.

• 9% support EA in two phases: development and use.

• 5% support the implementation phase.

• 32% of the primary studies (NA) did not take the EA life cycle into account when proposing an EA measurement solution.
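As a consistency check on these figures (our arithmetic, not counts reported by the authors), the MQ4 percentages can be reproduced from whole-number counts. This works if each of the 22 primary studies falls into exactly one category: 6, 6, 2, 1, and 7 studies give 27%, 27%, 9%, 5%, and 32%. The counts in the sketch below are back-calculated under that assumption.

```python
def to_percentages(counts: dict[str, int]) -> dict[str, int]:
    """Express each category count as a whole-number percentage of the total."""
    total = sum(counts.values())
    return {category: round(100 * n / total) for category, n in counts.items()}


# Back-calculated from the reported MQ4 percentages (assumption, not source data).
mq4_counts = {"development": 6, "use": 6, "development and use": 2, "implementation": 1, "NA": 7}
assert sum(mq4_counts.values()) == 22  # the 22 primary studies
print(to_percentages(mq4_counts))
# {'development': 27, 'use': 27, 'development and use': 9, 'implementation': 5, 'NA': 32}
```

Python's `round` uses round-half-to-even. That does not matter here, because none of these shares (27.3, 9.1, 4.5, 31.8) falls exactly on a half.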

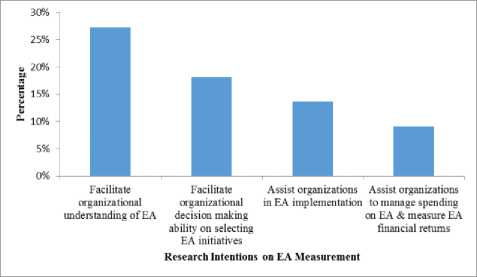

C. Answers to MQ5

The objective of MQ5 was to investigate the research intentions that motivated researchers to propose EA measurement solutions. Fig.5 shows the distribution of the research intentions in the primary studies:

• 27% to facilitate organizational understanding of EA.

• 18% to facilitate organizational decision-making ability in selecting EA initiatives.

• 15% to assist organizations in EA implementation.

• 10% to assist organizations in managing EA spending and measuring EA financial returns.

Fig.5. Intentions of EA measurement research (answers to MQ5)


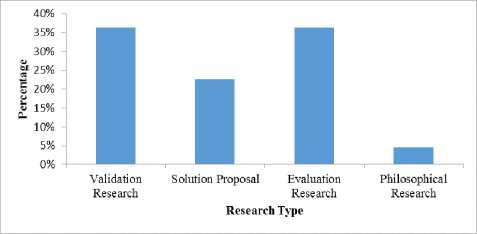

D. Answers to MQ6

The objective of MQ6 was to provide a classification scheme of the literature on EA measurement by identifying the research types used in EA measurement research.

Fig.6 shows the distribution of the research types in these primary studies:

• 36% evaluation research,

• 35% validation research,

• 23% solution proposal, and

• 5% philosophical research.

Fig.6. Research type distribution in the EA measurement literature (answers to MQ6)


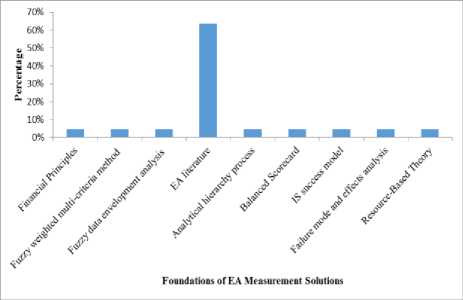

E. Answers to MQ7

The objective of MQ7 was to explore the techniques used to design or propose EA measurement solutions from other fields, including the EA field. Fig.7 shows that in these primary studies:

• The majority (64%) refer to the EA literature to design and structure the EA measurement solutions.

• 36% refer to foundations from various fields, including the Analytic Hierarchy Process (AHP) method (a widely accepted decision-making technique), balanced scorecards, the IS success model, and financial principles.

Fig.7. Foundations of EA measurement solutions (answers to MQ7)


F. Answers to MQ8

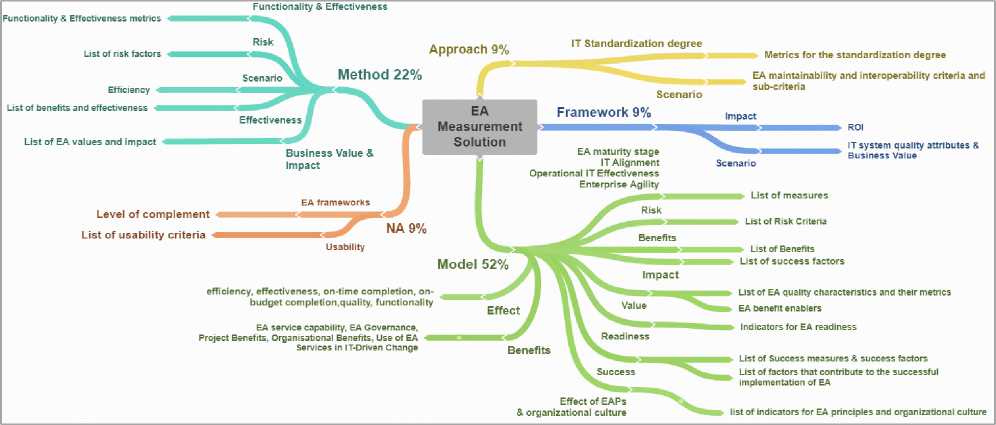

The first objective of MQ8 was to investigate the terminology used to describe EA measurement solutions. Fig.8 indicates that:

• 50% of the primary studies used “model” to describe their measurement solution.

• 23% used “method”.

• 9% used “framework”.

• 9% used “approach”.

• 9% did not use any terminology to describe their measurement solutions.

Having identified the terminology used to describe the EA measurement solutions, the second objective of MQ8 was to identify which concepts were being measured, evaluated, analyzed, or assessed. To measure an attribute, the concept to be measured must first be defined and characterized [26].

Characterization is accomplished by identifying how the sub-concepts contribute to the concept to be measured (e.g., the size of the software code). The EA measurement solutions presented in Fig.8 were therefore analyzed and coded to find the EA concepts and sub-concepts of each solution, including the terminology used by the researchers themselves. For example, in the center right-hand side of Fig.8, the proposed “framework” solution attempts to measure the impact of EA. The concept to be measured was therefore identified as “impact”, and its sub-concept as “ROI” (return on investment).


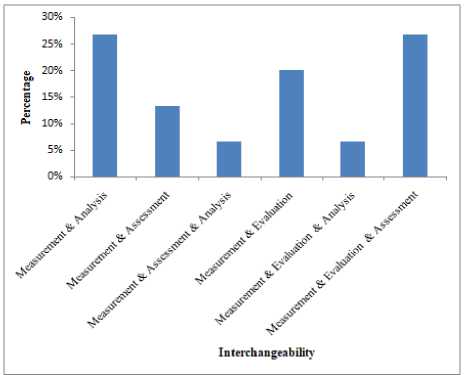

G. Answers to MQ9

The objective of MQ9 was to recognize the most frequently used measurement-related semantics in the EA measurement literature. Fig.9 shows that the primary studies used combinations of these terms interchangeably, without a clear definition of each term or a differentiation between the distinct terms. For example:

• 27% of the primary studies interchangeably

• 27% of the primary studies interchangeably

Fig.9. Measurement-related semantics and terms (answers to MQ9)


H. Answers to MQ10

The objective of MQ10 was to explore the presence of ISO 15939 within the text and references of the primary studies. The findings indicate that:

• ISO 15939 is not present in 95% of the primary studies. Consequently, these primary studies are classified as not utilizing ISO 15939 in their EA measurement design.

• Only one primary study [A13] mentioned ISO 15939 within the text. However, this mention of ISO 15939 in the text or references was not detailed enough to classify the primary study as utilizing ISO 15939 in its proposed EA measurement design.

V. Discussion

This section discusses the key findings from this SMS that may guide future improvements in EA measurement research.

The limited number of primary studies published in journals (answers to MQ1 and MQ2) is evidence that research on EA measurement is still emerging. This could indicate either a lack of interest or a major research challenge in tackling EA measurement issues.

From the answers to MQ3, the “Enterprise IT Architecting” EA school of thought accounts for by far the largest share of EA measurement solutions. According to [23], each EA school of thought has a different belief system (i.e., definitions, concerns, and assumptions) and a different vision. This vision affects what the school of thought maintains it can deliver to the organization.

Therefore, a focus on “Enterprise IT Architecting” implies that the measurement solutions were limited to the design of technological solutions, with an emphasis on assuring high-quality models that include planning scenarios.

Furthermore, research on EA measurement has mostly been limited to providing information on how EA can help align enterprise IT assets with business strategy execution. Because EA measurement contributions have mostly been limited to the IT aspects of EA, the state of the art lacks an all-inclusive perspective on EA measurement solutions. In fact, there is a scarcity of information on EA-related organizational efficiency and on EA-related organizational innovation and sustainability. Researchers therefore need to design solutions for measuring enterprise ecological adaption and enterprise integrating, the other two major EA schools of thought.

The answers to MQ4 show that most EA measurement solutions focused on the development and post-implementation phases of an EA project. EA comes at a large cost and requires considerable human and financial resources, so EA measurement should support management throughout the EA project life cycle. Future research is therefore needed to design innovative EA measurement solutions for all the distinct phases of the EA life cycle.

From the answers to MQ5, we observed that research intentions were diverse, each primary study individually proposing an EA measurement solution to support the organization from a distinct perspective and standpoint.

For instance, some primary studies attempted to explain how EA adds value to the organization, while others discussed how to facilitate organizational decision-making in selecting the most valuable EA initiative. Furthermore, none of these research intentions aimed to improve the design of EA measurement solutions, or to design measurement solutions based on recognized measurement theories and best practices.

From the answers to MQ6, the majority of the primary studies fell under evaluation research and validation research. In evaluation research, researchers evaluate the proposed EA measurement solutions and determine their impact and outcomes on organizations. These outcomes can give readers insight into whether the research intentions and the design of the EA measurement solution meet the intended objectives and benefits, including benefits to the organization. This contrasts with the primary studies that fell under validation research: their measurement solutions had not yet been implemented in practice with an industry partner, so readers of these studies cannot gain insight into the benefits of these EA measurement solutions for the organization. Researchers are therefore encouraged to conduct more evaluation research on EA measurement.

From the answers to MQ7, the majority of the primary studies (64%, Fig. 7) did not adopt knowledge from disciplines other than the emerging EA literature itself when proposing an EA measurement solution. In other words, most of them reviewed the EA measurement literature and proposed an EA measurement solution within that limited scope. For instance, primary study [A19] proposed an EA measurement model based on such concepts as EA maturity stage, IT alignment, and operational IT effectiveness. By contrast, 36% of the primary studies adopted concepts and practices from other disciplines. For instance, primary study [A9] proposed an approach to measure EA scenarios based on the analytic hierarchy process (AHP), a widely accepted decision-making technique. Given that EA measurement research is still emerging and growing only slowly (see the MQ2 findings of this study), adopting measurement best practices and guidelines from other disciplines, such as science and engineering, is a key direction for developing EA measurement solutions that are not only innovative but also sound. In addition, addressing the limitations of the EA measurement solutions proposed to date is another key priority.
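To illustrate the kind of external technique referred to above, the following is a minimal sketch of how AHP turns pairwise judgments into priorities. It is not the procedure of [A9]: it assumes a single criterion, three hypothetical EA scenarios (S1 to S3) and illustrative judgments on Saaty's 1 to 9 scale, approximates the principal eigenvector with row geometric means, and checks judgment consistency with Saaty's consistency ratio CR = CI / RI, where CI = (λmax − n) / (n − 1).

```python
import math
from typing import List, Tuple

# Saaty's random consistency index (RI) for matrix sizes 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_priorities(matrix: List[List[float]]) -> Tuple[List[float], float]:
    """Return (priority weights, consistency ratio) for a reciprocal pairwise matrix."""
    n = len(matrix)
    if n == 0 or any(len(row) != n for row in matrix):
        raise ValueError("pairwise matrix must be square and non-empty")
    if n not in RANDOM_INDEX:
        raise ValueError("the RI table used here only covers n <= 10")
    # Row geometric means approximate the principal eigenvector.
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    weights = [g / total for g in geo_means]
    if n <= 2:
        # Any 1x1 or 2x2 reciprocal matrix is perfectly consistent.
        return weights, 0.0
    # Estimate lambda_max as the mean of (A w)_i / w_i.
    aw = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)  # consistency index
    return weights, ci / RANDOM_INDEX[n]  # consistency ratio


# Hypothetical judgments: S1 vs S2 = 3, S1 vs S3 = 5, S2 vs S3 = 2 (reciprocals below the diagonal).
PAIRWISE = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]

if __name__ == "__main__":
    weights, cr = ahp_priorities(PAIRWISE)
    for name, w in zip(["S1", "S2", "S3"], weights):
        print(f"{name}: {w:.3f}")
    print(f"CR = {cr:.4f}")  # CR below 0.1 is conventionally treated as acceptable
```

With these illustrative judgments the weights come out at roughly 0.65, 0.23 and 0.12, with a CR well below the conventional 0.1 threshold. A full AHP application would repeat this per criterion and aggregate across a criteria hierarchy.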

From the findings for MQ9, research on EA measurement has used distinct measurement terms and semantics inconsistently. For example, approximately 68% of the primary studies used terms such as measurement, evaluation, assessment, and analysis interchangeably. Since these terms refer to distinct concepts in measurement, the EA measurement literature lacks the terminological rigor found in science and engineering disciplines. EA measurement researchers should therefore adopt the measurement terminology used in mature fields.

From the answers to MQ10, ISO 15939 appears in the reference lists of almost none of the primary studies. This indicates that the primary studies may not be considering measurement best practices in their design of EA measurement solutions. Another research avenue is therefore to improve the design of EA measurement solutions based on the broad consensus on metrology terms and best practices.
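ISO/IEC 15939 structures measurement as a chain: a measurement method quantifies an attribute into a base measure, a measurement function combines base measures into a derived measure, and an analysis model with decision criteria turns derived measures into an indicator that addresses an information need. The following is a minimal sketch of that chain, not a design taken from any of the primary studies; the attribute (conformance of applications to EA standards, loosely inspired by the standardization theme of [A21]), the counts and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """A base or derived measure: a named value with a unit of measurement."""
    name: str
    unit: str
    value: float


def conformance_ratio(conforming: Measure, total: Measure) -> Measure:
    # Measurement function: combine two base measures into a derived measure.
    if total.value <= 0:
        raise ValueError("total must be positive")
    return Measure("standards conformance ratio", "ratio", conforming.value / total.value)


def indicator(ratio: Measure, target: float = 0.8, floor: float = 0.5) -> str:
    # Analysis model with hypothetical decision criteria (target and floor thresholds).
    if ratio.value >= target:
        return "on target"
    if ratio.value >= floor:
        return "needs attention"
    return "critical"


if __name__ == "__main__":
    # Base measures (hypothetical counts obtained by a counting measurement method).
    conforming = Measure("applications conforming to EA standards", "applications", 42.0)
    total = Measure("applications in the IT landscape", "applications", 60.0)
    ratio = conformance_ratio(conforming, total)
    # Information product: the indicator value together with its interpretation.
    print(f"{ratio.name} = {ratio.value:.2f} -> {indicator(ratio)}")
```

Keeping base measures, the measurement function and the analysis model as separate steps makes each definition explicit and reviewable, which is the kind of terminological and design discipline the MQ9 and MQ10 findings point to.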

The literature on enterprise architecture (EA) posits that EA is of considerable value to organizations because of its expected benefits in helping them achieve their business and effectiveness goals by aligning IT initiatives with business objectives. However, while the EA literature documents a number of proposals for EA measurement solutions, there is little evidence-based research on the achievements and limitations of EA measurement research. In other words, few researchers have performed systematic reviews on enterprise architecture measurement topics.

This paper has reported on a systematic mapping study (SMS) of proposed EA measurement solutions. The study identified 22 relevant primary studies published from 2004 to the end of 2018, which were read and analyzed according to the objectives of ten mapping questions (MQ1-MQ10). The 22 studies were explored from various perspectives including, but not limited to, the positioning of the EA measurement solution within an EA project life cycle, an analysis of the consistency-inconsistency of the terms used by authors in EA measurement research, and an analysis of references to the ISO 15939 measurement information model.

The SMS also classified the research area of the primary studies, revealing significant gaps and limitations. For instance, the findings indicate a limited adoption of knowledge from other disciplines in proposing EA measurement solutions, and show that current EA research lacks the terminological rigor found in science and engineering.

This SMS represents a first step in investigating and presenting new perspectives for future EA measurement research, including:

• Designing EA measurement solutions that can contribute to the different EA schools of thought.

• Designing EA measurement solutions that can support the full EA project life cycle.

• Resolving the consistency-inconsistency issues in the use of distinct terms such as “measurement,” “evaluation,” “analysis,” and “assessment.”

• Resolving the overlap of various measured concepts and sub-concepts to ensure widely accepted EA measurement solutions.

• Adopting knowledge from mature disciplines that provide guidelines and best practices on measurement and metrology.

To ensure high-quality primary studies, this SMS focused on primary studies published in journals. The analyzed studies may therefore represent a relatively small portion of all publications on EA measurement research, which may limit the generalizability of the results obtained from the 22 primary studies.

However, since the majority of the primary studies selected for this SMS are published in journals, we believe that the quality and credibility of these studies are relatively high and representative. In future work, this SMS could be extended to include conference papers and books as well.

Appendix A. Selected Primary Studies

[A1] D. F. Rico, “A framework for measuring ROI of enterprise architecture,” J. Organ. End User Comput., vol. 18, no. 2, pp. 1–12, 2006.

[A2] K. M., “Evaluation of ARIS and Zachman frameworks as enterprise architectures,” J. Inf. Organ. Sci., vol. 30, no. 1, pp. 115–136, 2006.

[A3] F. Zandi and M. Tavana, “A fuzzy group multi-criteria enterprise architecture framework selection model,” Expert Syst. Appl., vol. 39, no. 1, pp. 1165–1173, 2012.

[A4] T. Tamm, P. Seddon, G. Shanks, and P. Reynolds, “How Does Enterprise Architecture Add Value to Organisations?,” Commun. Assoc. Inf. Syst., vol. 28, no. 10, pp. 141–168, 2011.

[A5] F. M., A. M.S., T. A. R., and R. J., “A novel credibility-based group decision making method for Enterprise Architecture scenario analysis using Data Envelopment Analysis,” Appl. Soft Comput. J., vol. 32, pp. 347–368, 2015.

[A6] B. Jahani, S. R. S. Javadein, and H. A. Jafari, “Measurement of enterprise architecture readiness within organizations,” Bus. Strateg. Ser., vol. 11, no. 3, 2010.

[A7] R. Foorthuis, M. van Steenbergen, S. Brinkkemper, and W. A. G. Bruls, “A theory building study of enterprise architecture practices and benefits,” Inf. Syst. Front., vol. 18, no. 3, pp. 541–564, 2016.

[A8] S. Aier, “The role of organizational culture for grounding, management, guidance and effectiveness of enterprise architecture principles,” Inf. Syst. E-bus. Manag., vol. 12, no. 1, pp. 43–70, 2014.

[A9] M. Razavi, F. S. Aliee, and K. Badie, “An AHP-based approach toward enterprise architecture analysis based on enterprise architecture quality attributes,” Knowl. Inf. Syst., vol. 28, no. 2, pp. 449–472, 2011.

[A10] B. A. and A. R., “Usability elements as benchmarking criteria for enterprise architecture methodologies,” J. Teknol. (Sciences Eng.), vol. 68, no. 2, pp. 45–48, 2014.

[A11] M. Gammelgård, M. Simonsson, and Å. Lindström, “An IT management assessment framework: Evaluating enterprise architecture scenarios,” Inf. Syst. E-bus. Manag., vol. 5, no. 4, pp. 415–435, 2007.

[A12] F. Nikpay, R. Ahmad, and C. Yin Kia, “A hybrid method for evaluating enterprise architecture implementation,” Eval. Program Plann., vol. 60, pp. 1–16, 2017.

[A13] M. Meyer and M. Helfert, “Design Science Evaluation for Enterprise Architecture Business Value Assessments,” Design Science Research in Information Systems. Advances in Theory and Practice, vol. 7286, pp. 108–121, 2013.

[A14] M. Lange, J. Mendling, and J. Recker, “An empirical analysis of the factors and measures of Enterprise Architecture Management success,” Eur. J. Inf. Syst., vol. 25, no. 5, pp. 411–431, 2016.

[A15] J. M. Morganwalp and A. P. Sage, “Enterprise architecture measures of effectiveness,” Int. J. Technol. Policy Manag., vol. 4, no. 1, pp. 81–94, 2004.

[A16] H. Safari, Z. Faraji, and S. Majidian, “Identifying and evaluating enterprise architecture risks using FMEA and fuzzy VIKOR,” J. Intell. Manuf., vol. 27, no. 2, pp. 475–486, 2016.

[A17] I. Velitchkov, “Enterprise architecture metrics in the balanced scorecard for IT,” Inf. Syst. Control J., vol. 3, pp. 1–6, 2009.

[A18] S. Lee, S. W. Oh, and K. Nam, “Transformational and transactional factors for the successful implementation of enterprise architecture in public sector,” Sustain., vol. 8, no. 5, pp. 1–15, 2016.

[A19] R. V. Bradley, R. M. E. Pratt, T. A. Byrd, C. N. Outlay, and D. E. Wynn Jr., “Enterprise architecture, IT effectiveness and the mediating role of IT alignment in US hospitals,” Inf. Syst. J., vol. 22, no. 2, pp. 97–127, 2011.

[A20] Y. I. Alzoubi, A. Q. Gill, and B. Moulton, “A measurement model to analyze the effect of agile enterprise architecture on geographically distributed agile development,” J. Softw. Eng. Res. Dev., vol. 6, no. 1, 2018.

[A21] M. Brückmann, K.-M. Schöne, S. Junginger, and D. Boudinova, “Evaluating enterprise architecture management initiatives - How to measure and control the degree of standardization of an IT landscape,” Enterp. Model. Inf. Syst. Archit., pp. 155–168, 2009.

[A22] G. Shanks, M. Gloet, I. Asadi Someh, K. Frampton, and T. Tamm, “Achieving benefits with enterprise architecture,” J. Strateg. Inf. Syst., vol. 27, no. 2, pp. 139–156, 2018.

References

[1] J. Nurmi, “Examining Enterprise Architecture: Definitions and Theoretical Perspectives,” Master thesis, University of Jyväskylä, pp. 1–68, 2018.

[2] N. Banaeianjahromi and K. Smolander, “The Role of Enterprise Architecture in Enterprise Integration – a Systematic Mapping,” in European, Mediterranean & Middle Eastern Conference on Information Systems, Doha (Qatar), pp. 1–22, 2014.

[3] F. Gampfer, A. Jürgens, M. Müller, and R. Buchkremer, “Past, current and future trends in enterprise architecture—A view beyond the horizon,” Comput. Ind., vol. 100, pp. 70–84, 2018.

[4] M. J. A. Bonnet, “Measuring the Effectiveness of Enterprise Architecture Implementation,” Master thesis, Delft University of Technology, pp. 1–90, 2009.

[5] S. H. Kaisler, F. Armour, and M. Valivullah, “Enterprise Architecting: Critical Problems,” in 38th Annual Hawaii International Conference on System Sciences, Big Island (Hawaii), pp. 1–10, 2005.

[6] H. Plessius, R. Slot, and L. Pruijt, “On the categorization and measurability of enterprise architecture benefits with the enterprise architecture value framework,” in Trends in Enterprise Architecture Research and Practice-Driven Research on Enterprise Transformation, Berlin (Germany), pp. 79–92, 2012.

[7] M. Razavi, F. S. Aliee, and K. Badie, “An AHP-based approach toward enterprise architecture analysis based on enterprise architecture quality attributes,” Knowl. Inf. Syst., vol. 28, no. 2, pp. 449–472, 2011.

[8] O. González-Rojas, A. López, and D. Correal, “Multilevel complexity measurement in enterprise architecture models,” Int. J. Comput. Integr. Manuf., vol. 30, no. 12, pp. 1280–1300, 2017.

[9] D. F. Rico, “A framework for measuring ROI of enterprise architecture,” J. Organ. End User Comput., vol. 18, no. 2, pp. 1–12, 2006.

[10] H. Safari, Z. Faraji, and S. Majidian, “Identifying and evaluating enterprise architecture risks using FMEA and fuzzy VIKOR,” J. Intell. Manuf., vol. 27, no. 2, pp. 475–486, 2016.

[11] K. Petersen, R. Feldt, S. Mujtaba, and M. Mattsson, “Systematic Mapping Studies in Software Engineering,” in 12th International Conference on Evaluation and Assessment in Software Engineering, Bari, vol. 17, no. 1, pp. 68–77, 2008.

[12] Y. Gong and M. Janssen, “The value of and myths about enterprise architecture,” Int. J. Inf. Manage., vol. 46, pp. 1–9, 2019.

[13] P. Saint-Louis and J. Lapalme, “Investigation of the Lack of Common Understanding in the Discipline of Enterprise Architecture: A Systematic Mapping Study,” in 20th IEEE International Enterprise Distributed Object Computing Workshop, Vienna (Austria), pp. 1–9, 2016.

[14] S. S. Hussein et al., “Towards designing an EA readiness instrument: A systematic review,” in 4th IEEE International Colloquium on Information Science and Technology (CiSt), Tangier (Morocco), pp. 158–163, 2016.

[15] D. Jugel, K. Sandkuhl, and A. Zimmermann, “Visual Analytics in Enterprise Architecture Management: A Systematic Literature Review,” in International Conference on Business Information Systems, Poznań (Poland), pp. 99–110, 2017.

[16] P. Andersen and A. Carugati, “Enterprise Architecture Evaluation: A Systematic Literature Review,” in 8th Mediterranean Conference on Information Systems, Verona (Italy), pp. 1–41, 2014.

[17] F. Nikpay, R. Ahmad, B. D. Rouhani, and S. Shamshirband, “A systematic review on post-implementation evaluation models of enterprise architecture artefacts,” Inf. Softw. Technol., vol. 62, pp. 1–20, 2015.

[18] A. Abdallah, J. Lapalme, and A. Abran, “Enterprise Architecture Measurement: A Systematic Mapping Study,” in 4th International Conference on Enterprise Systems: Advances in Enterprise Systems, Melbourne (Australia), pp. 13–20, 2016.

[19] B. Kitchenham and S. Charters, “Guidelines for performing Systematic Literature reviews in Software Engineering Version 2.3,” UK, 2007.

[20] E. Namey, G. Guest, L. Thairu, and L. Johnson, “Data Reduction Techniques for Large Qualitative Data Sets,” in Handbook for Team-based Qualitative Research, AltaMira Press, pp. 137–162, 2008.

[21] D. S. Cruzes and T. Dyba, “Recommended Steps for Thematic Synthesis in Software Engineering,” in International Symposium on Empirical Software Engineering and Measurement, Banff (Canada), pp. 275–284, 2011.

[22] M. Vaismoradi, H. Turunen, and T. Bondas, “Content analysis and thematic analysis: Implications for conducting a qualitative descriptive study,” Nurs. Heal. Sci., vol. 15, no. 3, pp. 398–405, 2013.

[23] J. Lapalme, “Three schools of thought on enterprise architecture,” IT Prof., vol. 14, no. 6, pp. 37–43, 2012.

[24] M. Zarour, “Methods to Evaluate Lightweight Software Process Assessment Methods Based On Evaluation Theory and Engineering Design Principles,” PhD thesis, École de technologie supérieure, Montréal (Canada), p. 240, 2009.

[25] H. van Loon, Process Assessment and Improvement: A Practical Guide for Managers, Quality Professionals and Assessors, 1st ed. Berlin, Heidelberg: Springer-Verlag, 2004.

[26] A. Abran, Software Metrics and Software Metrology. John Wiley & Sons Interscience and IEEE-CS Press, New York, 2010.

[27] K. Krippendorff, Content Analysis: An Introduction to Its Methodology, 4th ed. Sage Publications, Los Angeles, 2018.

[28] K. Krippendorff and M. A. Bock, The Content Analysis Reader. Sage Publications, Los Angeles, 2009.