Impact of Peer Feedback in a Web Programming Course on Students’ Achievement

Authors: Jelena Matejić, Aleksandar Milenković

Journal: International Journal of Cognitive Research in Science, Engineering and Education @ijcrsee

Section: Original research

Published in issue: No. 1, Vol. 13, 2025.

Open access

In addition to learning technical aspects of website creation, students must connect different knowledge, think critically, seek more information, and approach their work from various perspectives. These requirements, or specified competencies, can be developed through providing and receiving peer feedback, which is confirmed as an efficient learning strategy in various empirical studies on different subjects. The aim of this research is to investigate the impact of peer feedback on students’ achievements in the Web Programming Course. We conducted a quantitative study with two groups of students. Students from the experimental group participated in online giving and receiving peer feedback regarding students’ assignments, while students from the control group did not have this opportunity. For evaluating students’ work, we used rubrics. The results indicate that students, through analyzing their peers’ work, providing qualitative assessments, reflecting on their work in line with received comments, and making necessary corrections, create content that surpasses the quality of websites created by their peers who did not have the opportunity to participate in peer feedback activities. Since students extensively analyzed their work after receiving comments and suggestions, made specific corrections, and consequently improved their projects, organizing peer feedback activities in programming courses, especially web programming, should be given more attention.

Peer feedback, web programming, students’ achievements, higher education

Short URL: https://sciup.org/170209043

IDR: 170209043 | UDC: 378.147:004, 159.9.072-057.875 | DOI: 10.23947/2334-8496-2025-13-1-33-49

Full text of the research article: Impact of Peer Feedback in a Web Programming Course on Students’ Achievement

Traditionally, the main aim of assessment is to determine to what degree students have achieved the expected learning objectives by the end of the teaching period. Recent assessment approaches have moved away from the explicit focus on end-of-the-course (i.e., summative) testing to in-course (i.e., formative) assessment ( Rotsaert, 2017 ; Sudakova et al., 2022 ). This shift from a testing culture to an assessment culture ( Birenbaum, 2003 ), which makes assessment an integral aspect of learning, is better known as the ‘assessment for learning’ position ( Black and Wiliam, 1998 ).

Formative assessment has a rather student-centered character. Instead of contributing to final grades, formative assessment aims to provide support and feedback, allowing students to monitor their learning progress and identify their strengths and weaknesses ( Ashenafi, 2015 ). While the importance of formative assessment is stressed by several authors (e.g., Falchikov and Goldfinch, 2000 ; Carless, 2007 ), traditional assessment systems still seem to dominate the higher education scene ( Cañadas, 2020 ).

Peer assessments can be formative, summative, or a combination of both ( Dunn and Mulvenon, 2010 ; Topping, 2010a ). Based on the work of Topping (1998) , Strijbos and Sluijsmans (2010, p. 266) define peer assessment as “an educational arrangement where students judge peer’s performance quantitatively, by providing the peer with scores or grades, and/or qualitatively, by providing the peer with written or oral feedback.” In this paper, we explore the use of peer assessment, which Strijbos and Sluijsmans (2010) describe as a progressive and formative form of assessment, in an Introduction to Web Programming course.

Several benefits of peer assessment can be found in the literature. In line with society’s growing demand for more responsible, critical, and thoughtful professionals in the labor market, an activity in which students are challenged to assess fellow students’ work would help them to learn, among other things, how to criticize constructively and strengthen important cognitive dimensions such as critical thinking (Wang et al., 2012). Peer evaluation is therefore very important for strengthening students’ critical thinking. It allows students to learn the process of evaluating their own work and to strengthen the skill of giving comments and suggestions assertively. This skill is also necessary for teamwork, so it is very useful to practice it during higher education.

Research reports and case studies that examine peer review in the context of teaching programming reach, in most cases, positive conclusions. Peer reviewing enables students to critically analyze the work of their peers (colleagues). The opportunity to see and analyze different solutions can bring new ideas to students and help them view their own work more thoughtfully. This offers potential for improving programming courses in higher education, where the quality of the solutions is often measured (Loll and Pinkwart, 2009; Reily, Finnerty, and Terveen, 2009). In programming courses, students can be exposed to various coding styles and asked to evaluate their colleagues’ work, which allows them to improve their proficiency as programmers and deepen their understanding.

With this paper, we aim to contribute to the existing scientific literature that explores the significance and effects of peer feedback, specifically its application in an Introduction to Web Programming course with computer science students. Additionally, we seek to provide a practical contribution to the teaching of programming-related courses, to improve the teaching process and enhance the knowledge and competencies of students, who are future professionals in this field.

The paper is organized as follows: The second section presents findings from the literature, specifically on peer review, peer assessment, and peer feedback. The third section outlines the research question and hypothesis. The fourth section describes the research methodology, including the sample, the characteristics of the Introduction to Web Programming course, and the research procedure. The fifth section presents the results of the study, comparing the achievements of a group of students who engaged in peer feedback as part of their course projects with those of a group who did not have this opportunity. In the sixth section, the findings are discussed in the context of previous related research. Finally, the seventh section provides conclusions and pedagogical implications.

Theoretical background

The concepts of peer review, peer assessment, and peer feedback are closely interconnected and complement each other to a significant extent. In this section, we will describe their characteristics from the perspective of researchers who have explored these topics.

Peer review

The instructional aim of peer review with a feedback learning strategy is to enhance higher-level thinking by mutually exchanging critiques among peers (students). In the traditional process, students receive comments from the teacher, and in the peer review process, review comments are received from peers. Therefore, peer pressure may encourage students to perform higher-level cognitive functions ( Topping, 1998 ). During the peer review process, students expressed higher-level thinking such as critical thinking, planning, monitoring, and self-regulation ( Liu, Chiu, and Yuan, 2001 ). In the peer-reviewing process, beneficiaries are simultaneously the students who learn from others’ solutions and receive feedback and the teachers who can get assistance for the evaluation. In this manner, students may find peers’ solutions interesting because it can give them new ideas and a chance to learn analytical abilities from varying styles of solving problems ( Diefes-Dux and Verleger, 2009 ).

Peer Assessment

The term assessment is often interpreted as referring to marking, grading, measuring, or ranking. Consequently, peer assessment is usually regarded as students giving marks or grades to each other. However, peer assessment is primarily about getting and giving feedback, not about giving grades. It is the process whereby groups of individuals rate their peers. To overcome social relationship problems, feedback is best given online rather than in class because students tend to be more forthcoming and constructive in an online environment (Liu and Carless, 2006).

Advocates for peer assessment argue that regular feedback on learning contributes not only to skill development but also to motivation for learning (Topping, 2009; Topping, 2010a; Wiliam, 2011). For peer assessment to be considered effective, its goals, practices, and outcomes must align. Student involvement in assessment appears to have been increasing in recent years. This increase appears across the spectrum of discipline areas, including science and engineering, arts and humanities, mathematics, computer science and education, social sciences, and business. Peer assessment is grounded in philosophies of active learning (e.g., Piaget, 1971) and may also be seen as a manifestation of social constructionism (e.g., Vygotsky, 1962), as it often involves the joint construction of knowledge through discourse. An important educational function of peer assessment is the provision of detailed peer feedback (Falchikov, 1994, 1995, 2001).

The benefits of peer assessment may only be realized after a serious effort is made to incorporate it into everyday teaching practices in a way that is positive, non-threatening, and attractive to students ( Sluijsmans, Brand-Gruwel, and van Merrienboer, 2002 ).

According to Cizek (2010), formative assessment represents “the collaborative processes engaged in by educators and students to understand the students’ learning and conceptual organization, identification of strengths, diagnosis of weaknesses, areas of improvement, and as a source of information teachers can use in instructional planning and students can use in deepening their understanding and improving their achievement.” In defining ‘assessment for learning’, Black, Harrison, Lee, Marshall, and Wiliam (2004) include any action that provides information that teachers and their students can use as feedback in assessing themselves and one another and in modifying the teaching and learning activities in which they engage. In discussing formative peer assessment, Falchikov (1995) stated that peer assessments can focus on either the assessment of a product, such as writing, or the performance of a particular skill. This exercise may or may not entail previous discussion or agreement over criteria. It may involve the use of rating instruments or checklists, which may have been designed by others before the peer assessment exercise or by the user group to meet their needs. For Topping (2009), peer assessment is an arrangement for learners to consider and specify the level, value, or quality of a product or performance of other equal-status learners (peers). Peer assessments appear to enhance the intrinsic motivation of students, and this form of assessment offers opportunities for students to learn outside the conventional pattern of student-teacher interaction (Falchikov, 2003).

Peer feedback

Peer feedback refers to a collaborative activity between at least two peers (Kollar and Fischer, 2010). It is a reflective engagement that impacts the work performed by both the giver and the receiver (Falchikov, 2003). When peers are engaged as the agents for feedback, students benefit from the process, as the opportunity to observe and compare peers’ work could lead to work improvement (Chang, Tseng, and Lou, 2012). Some studies have sought to understand how to deliver feedback in the best way possible, especially constructive feedback that provides direction for improvement (Fong et al., 2021). Feedback is a consequence of the expertise and performance of a student, and it aims to reduce the discrepancy between the current and desired level of performance or understanding. Furthermore, different agents provide different forms of feedback, and perceptions of the usefulness of feedback depend both on the content of the feedback and on the provider of that feedback (Evans, 2013). Feedback needs to provide information specifically relating to the task or process of learning that fills a gap between what is understood and what is intended to be understood. Winne and Butler (1994) provided an excellent summary in their claim that “feedback is information with which a learner can confirm, add to, overwrite, tune, or restructure information in memory, whether that information is domain knowledge, meta-cognitive knowledge, beliefs about themselves and tasks, or cognitive tactics and strategies.”

There are two approaches to peer feedback: asynchronous and synchronous. In an asynchronous approach, the focus is on exclusively giving peer feedback without the need to act upon the feedback; in a synchronous approach, it involves iterative dialogues among students that could be induced by the given feedback (Adachi, Tai, and Dawson, 2018). Traditional face-to-face feedback occurs in a classroom setting, while online feedback could be text messages sent by the giver to the recipient via a technology platform (Liu, Du, Zhou, and Huang, 2021). Formative peer feedback can be given quantitatively (using a number to grade), qualitatively (verbal or text-based), or both (Topping, 2017). Specific feedback is more effective than unspecific or generalized feedback (Fong et al., 2021; Park, Johnson, Moon, and Lee, 2019). Being specific in the feedback comments implies elaborations in correction, confirmation justification, questioning, or suggestions that follow simple verifications (Alqassab, Strijbos, and Ufer, 2018).

Peer feedback thus provides the student with a broader scope of skills, but it also has some other advantages. Research on peer feedback suggests that involving students in the process of giving feedback improves the effectiveness of formative assessment and supports the learning process and outcomes ( Lu and Bol, 2007 ). To provide good-quality feedback, that is, feedback that the receiver can use to effectively enhance their learning, a minimum level of domain and content knowledge ( Geithner and Pollastro, 2016 ) and problem-solving skills are required.

Researchers suggest that anonymity within the peer feedback process may encourage student participation and reduce insecurity when giving feedback by reducing peer pressure ( Raes, Vanderhoven, and Schellens, 2015 ). For example, Raes, Vanderhoven, and Schellens (2015) investigated the effects of increasing anonymity using emerging technology. These researchers showed that anonymous peer feedback through a digital feedback system combines the positive feelings of safety by being anonymous with the perceived added value of giving peer feedback. In that manner, the anonymity of the feedback thus seems to influence the way the message is received and processed. Interaction between learners is a critical part of learning in many domains, including computer programming ( Warren, Rixner, Greiner, and Wong, 2014 ).

Feedback by itself may not have the power to initiate further action. Effective feedback must answer three major questions asked by a teacher and/or by a student: What are the goals? What progress is being made toward the goal? What activities need to be undertaken to make better progress? These questions correspond to notions of feed-up, feedback, and feed-forward ( Hattie and Timperley, 2007 ).

For the provider, giving peer feedback helps to improve higher-level learning skills (Davies and Berrow, 1998), critical thinking (Ertmer et al., 2007; Lin, Liu, and Yuan, 2001), and the ability to create new concepts and connect them to what students already know (Nicol, 2009). Van Popta, Kral, Camp, Martens, and Simons (2017) and Ion et al. (2019) suggested that providing feedback triggers several cognitive processes, such as comparing and questioning ideas, evaluating, suggesting modifications and reflection, planning and regulating one’s thinking, thinking critically, connecting to new knowledge, explaining, and taking different perspectives. Regarding the reliability of the students’ peer feedback, Falchikov and Goldfinch (2000) carried out a meta-analysis of 48 quantitative peer assessment studies that compared peer and teacher marks, demonstrating that students are generally able to make reasonably reliable judgments.

When it comes to programming assignments, students found the use of peer-reviewing systems for programming projects useful (Hämäläinen, Hyyrynen, Ikonen, and Porras, 2011). The authors report that, according to student feedback, reviewing others’ code and receiving comments is helpful, but that the numerical feedback given by the students and teachers was clearly on different scales, which suggests that evaluation criteria must be defined more clearly and that evaluations should also be rated. More attention must also be paid to providing written feedback, which the students consider the most valuable part (Hämäläinen, Hyyrynen, Ikonen, and Porras, 2011). Peer feedback, in which peer learners reflectively criticize each other’s performance according to pre-defined criteria electronically or face-to-face, is used in peer assessment, but mainly for formative use, and it enhances the quality of the learning process (Pavlou and Kyza, 2013).

Research question

In our research, the main question is: Does the application of the web-based peer feedback process contribute to better student achievement in creating websites on a given topic?

We expect that after thoroughly analyzing the work of their peers, gaining new ideas, and approaching problem-solving from different angles, students will critically review their own work and think about how they could improve their product for the project assignment, i.e., their website.

Thus, the hypothesis for the research question is: The use of the web-based peer feedback process contributes to better student achievement in creating websites compared with the achievement of students who did not have the opportunity to analyze their peers’ work and critically review their own work based on the comments and suggestions written by their peers.

Materials and Methods

Participants

The research was conducted on 95 students in their first year of bachelor studies at the Department of Computer Science at the Faculty of Science and Mathematics in Niš, Serbia. The study took place over the academic years 2021/2022 (n=43) and 2022/2023 (n=52), and all participating students attended the “Introduction to Web Programming” course for the first time.

Course description

The “Introduction to Web Programming” course is designed so that students first complete the pre-exam requirements, worth 65 points, and then take the oral exam, worth 35 points. The project assignment is an obligatory part of the pre-exam requirements. It is based on practical work, where each student individually creates a website (in the following text, we use the terms website and project assignment interchangeably). The maximum value of the project is 20 points, which represents 20% of the total number of points in the course. That is slightly more than 30% of the points (20 of 65) that a student can achieve on the pre-exam requirements of this course. The knowledge needed to create a project is largely acquired in lectures and exercises in the “Introduction to Web Programming” course. However, to produce the highest quality project, students need to research independently, use adequate literature, and, in particular, use Internet resources properly, as they were trained to do during the course.

Students were randomly assigned one of thirty different project topics, and their assignment was to create a website on the given topic to simulate a real-life situation. In real life, an employee receives a client who comes with a request and an already predetermined topic. The list of project topics is very diverse, and some of the topics are travel agencies, flower shops, airline companies, IT companies, pet shops, marketing agencies, law offices, school/college websites, dental offices, hair salons, event planner, taxi association, cinema, car showroom, etc.

The research procedure

In the first year of the study (2022), 43 students participated in the research. At the beginning of the course, all the practical information and course expectations were communicated, including the assessment criteria for the website. Because students continuously gained knowledge during the course, they could upgrade their projects every week, and the deadline for the final submission of the project was 7 days after the end of the course. In 2022, no peer assessment activity was linked to the creation of the website, so this group of students serves as the control group in this study.

During 2023, 52 students participated in the course and the research. We refer to these 52 students and their projects as the experimental group. As in the first year of research, students in the second year received a clear plan and programme for the distribution of points. After the first month of the course, each student was randomly assigned a project topic. The deadline for submitting the final project remained the same, 7 days after the end of the course. After the students had submitted their projects, the teaching assistant divided the students into groups of four. The moment of submission determined the group to which a student belonged; the first four assignments were placed in the first group, and so on. As 52 projects were submitted in the second year of research, the students were divided into 13 groups of 4 members. Each group was given an appointment at which each student had the opportunity to present their project to colleagues from their group and to the teaching assistant. After the presentation session, each member of the group had the task of assessing the work of the three other colleagues in the group by writing feedback to peers. The assessment was done anonymously by filling out a form consisting of a series of questions (see Appendix 2). For each of the questions, the student was asked to write a comment and suggestion to their peer, and the peer could then respond to the comment and suggestion they received. Suggestions and responses to suggestions were open-ended, and there was no limit on the number of characters in these fields.
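The grouping rule described above (order of submission decides group membership, four students per group) can be illustrated with a minimal Python sketch. The function name, the `(student_id, submitted_at)` data structure, and the sample timestamps are hypothetical and are not taken from the authors’ application; they only show the idea.

```python
from datetime import datetime


def group_by_submission_order(submissions, group_size=4):
    """Split students into consecutive groups ordered by submission time.

    `submissions` is a list of (student_id, submitted_at) pairs. This
    structure is an assumption made for illustration only.
    """
    ordered = sorted(submissions, key=lambda item: item[1])
    students = [student_id for student_id, _ in ordered]
    # Consecutive slices: the first four submitters form group 1, and so on.
    return [students[i:i + group_size] for i in range(0, len(students), group_size)]


# Hypothetical data: 52 students submitting one minute apart.
submissions = [(f"student_{i:02d}", datetime(2023, 1, 1, 12, i)) for i in range(52)]
groups = group_by_submission_order(submissions)
print(len(groups), len(groups[0]))  # 13 4
```

With 52 submissions and a group size of 4, the sketch yields the 13 groups of 4 members reported in the study.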

Students’ projects were evaluated using rubrics in both years (for both the control and experimental groups), and the feedback form was aligned with the elements being evaluated. When creating feedback questions, special care was taken to ensure that students saw what the most important elements were when creating a project.

After the presentation session, students were given three days to complete a feedback form for each member of their group. During those three days, the students could view the work of colleagues from their group in more detail, so that they could adequately answer the questions in the feedback form and give comments and suggestions to their peers. As previously stated, the peer feedback process was anonymous for the students: they did not know which feedback came from which colleague. For the teaching assistant, however, the process was not anonymous. The teaching assistant could monitor whether answers were given regularly and determine whether any student had failed to respond to their colleagues. In this way, irregularities could be prevented, and, if necessary, the teaching assistant could write to a specific student. To carry out the described procedure, the authors created a web application. After receiving the comments, each student had 5 days to make any corrections to the project, fix errors noticed by a peer, and improve the program code. After this time had expired, the teaching assistant evaluated the last received version of the project, which was considered the final version. The final versions of the websites were evaluated according to the rubrics. We emphasize again that the evaluation criteria were the same in the first and second years of research (for the control group and the experimental group of students).

Assessment of the students’ project assignment by the teacher

The teaching assistant checks all the students’ projects (projects created by the students from the control group and those from the experimental group) and evaluates them according to the table of criteria (see Appendix 1 ). For both groups, students received a universal table of rubrics, requirements, and criteria, according to which their projects were evaluated.

As can be seen from Table 1 ( Appendix 1 ), each of the sections is marked with a different color. Colors range from lighter to darker, where lighter colors represent simpler project criteria while darker colors represent more advanced project criteria. For each of the categories, we have defined three levels at which the project meets the given criteria: below average, average, and above average. Each of these levels, for each of the criteria, is explained descriptively, along with the number of points that students can achieve for a certain category and a certain level of success in that category. As can be seen, there is a difference of 0.5 between each of the two values on the point scale. In this way, we achieve a more detailed and transparent evaluation process.

Web application for peer review process

To implement peer review, we needed a tool that would divide students into groups and allow them to give specific feedback on each other’s work. Although there are applications that enable the division of students into groups and their collaboration, we decided to create our own web application. The application is completely free and can be found at the link: The main reason for creating an application was a combination of specific requirements for the research. We will start with registration in the application. In the application, it is possible to register as a teacher or as a student. There are different opportunities depending on the chosen role. Naturally, the teacher has a much wider range of powers than the student.

Teacher’s role in peer review application

As previously mentioned, registering in the application as a teacher offers a wider range of possibilities and control. If you register as a teacher in the application, your main advantage is that you can create student profiles, divide students into groups, create a survey, add questions, and assign surveys to students who are in the system. Given that the teacher can create student profiles, it significantly facilitates the job of dividing students into groups even without their prior knowledge of the application.

Before the project presentation day, the teacher can very easily create all student profiles (by specifying the students’ email addresses), divide them into groups, and thus make sure that the necessary prerequisites for peer feedback are in place.

Another important aspect is creating a questionnaire that each student will fill out for each of the colleagues in their group. Creating a questionnaire and adding questions is very simple, as with surveys in other similar systems. After the questionnaire is created, it can be assigned to the desired groups of students, who need to complete it for each of the members within their group. All students’ responses are recorded in the database and can be viewed by the teacher in the user interface of the application. In this manner, the teacher can follow the interactions and responses of the students, as well as analyze the content of those responses. This type of monitoring is very useful: it gives teachers a broad picture of the whole process, but it can also be narrowed down to the interactions of one specific student or the answers of all students to a specific question.

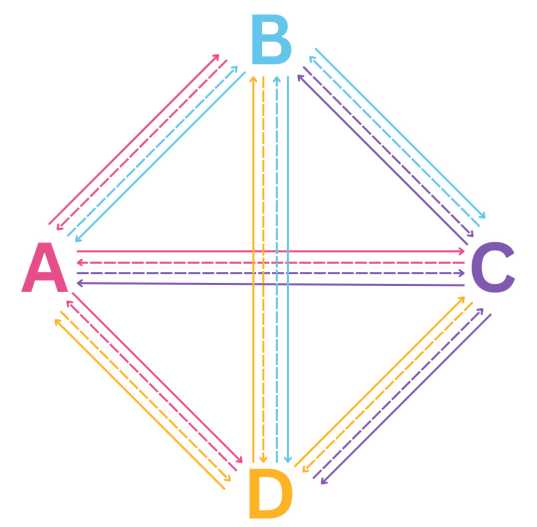

Observed formative assessment is also the most complex part of the process for the teacher. The teacher must monitor all student interactions and consider the comments and suggestions that the students have received, as well as the reaction of the evaluated students to the comments. Using the example of a four-member group, we will graphically present all interactions that the teacher should monitor. Solid arrows represent giving comments or suggestions on colleagues’ work, while dashed arrows represent a feedback response to a comment or suggestion received.

Figure 1. Peer feedback scheme

With a simple calculation, we conclude that in one four-member group, we have 24 interactions. In the concrete example of 13 groups, as many as there were in the experimental group, the teacher needs to check 312 interactions, where each interaction represents the textual feedback or the textual response to the feedback that the student got earlier. This is a very complex process, requiring a lot of energy and time for analysis.
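The count above can be reproduced by enumerating the interactions directly. The following is a minimal Python sketch; the function name and the tuple layout are illustrative assumptions. Each ordered pair (reviewer, author) contributes one comment (a solid arrow in Figure 1) and one response to it (a dashed arrow).

```python
from itertools import permutations


def feedback_interactions(members):
    """List every interaction the teacher would have to read for one group.

    Each ordered pair (reviewer, author) yields one comment from the reviewer
    and one response from the author back to the reviewer.
    """
    interactions = []
    for reviewer, author in permutations(members, 2):
        interactions.append(("comment", reviewer, author))
        interactions.append(("response", author, reviewer))
    return interactions


group = ["A", "B", "C", "D"]
per_group = len(feedback_interactions(group))  # 4 * 3 comments + 4 * 3 responses
total = 13 * per_group                         # 13 groups in the experimental year
print(per_group, total)  # 24 312
```

In general, a group of n members produces 2·n·(n−1) interactions, which is why the monitoring workload grows quickly with group size.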

Students’ role in peer review application

When a student registers on the web platform, the student uses an email address. After logging in, the student can see all the questionnaires that the teacher has assigned to that email address. As the questionnaires are related to peer feedback and each student is in a group with other colleagues, the logged-in student will see several questionnaires to complete. More precisely, the student should write an opinion on the projects of all colleagues from the team and therefore has as many questionnaires as there are other members in the group. In our specific case, there were 4 students in each group; therefore, each student got 3 projects to evaluate. Questionnaires were coded so that the student knew exactly which project was being evaluated. For example, let there be students A, B, C, and D in one group.

Student A should write feedback for the projects of students B, C, and D. Student A knows exactly which questionnaire is dedicated to student B, which one to student C, and which one to student D. After student A gives feedback to colleague B, colleague B receives the feedback in the given form and from that moment on can start considering it. For each piece of feedback received, student B can write a response, express an opinion about the feedback, and send it back to the colleague who wrote it. It can be noticed once again that the teacher who created the questionnaire supervises the entire process of peer feedback, while this process is anonymous for students (students know which three other students write feedback for them because they wrote feedback to those same students, but they do not know which piece of feedback was written by which student).

Results

As already mentioned earlier, rubrics were used for the evaluation of the project by teachers, where five criteria were considered, and for each criterion, three levels were defined depending on the extent to which the students satisfied the given criterion (below average, average, and above average, see Table 1). Since not all criteria were equally important for the quality of the entire project, a scale of the number of points was created for each level within each criterion. Based on all those five criteria, the total number of points for the student project was calculated. Bearing in mind that the number of points, as well as the total number of points scored by the students for the five criteria mentioned, did not have a normal distribution, the non-parametric Mann-Whitney test was used to compare the results of the control and experimental groups.
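As an illustration of the test named above, the following Python sketch computes the Mann-Whitney statistic with average ranks for ties and a tie-corrected normal approximation. It is not the authors’ analysis script: the function names and the two small score lists are hypothetical, and no continuity correction is applied, which is an assumption about how Z values of this kind are typically reported. The only figures taken from the paper are the two rank sums for the “clean code” criterion (2745.50 and 1814.50) and the group sizes (52 and 43), which are used for a consistency check.

```python
import math
from collections import Counter


def u_from_rank_sum(rank_sum, n):
    """Convert a group's rank sum into its Mann-Whitney U value."""
    return rank_sum - n * (n + 1) / 2


def mann_whitney_z(sample_a, sample_b):
    """Mann-Whitney test via a tie-corrected normal approximation.

    Returns (rank_sum_a, rank_sum_b, u, z, two_sided_p). Assumes the pooled
    data are not all identical (otherwise the variance would be zero).
    """
    n_a, n_b = len(sample_a), len(sample_b)
    n = n_a + n_b
    counts = Counter(sample_a + sample_b)

    # Tied values share the mean of the ranks they occupy.
    rank_of, position = {}, 1
    for value, count in sorted(counts.items()):
        rank_of[value] = position + (count - 1) / 2
        position += count

    rank_sum_a = sum(rank_of[v] for v in sample_a)
    rank_sum_b = sum(rank_of[v] for v in sample_b)
    u_a = u_from_rank_sum(rank_sum_a, n_a)
    u = min(u_a, n_a * n_b - u_a)  # smaller U, so z is never positive

    tie_term = sum(c**3 - c for c in counts.values())
    variance = n_a * n_b / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u - n_a * n_b / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return rank_sum_a, rank_sum_b, u, z, p


# Hypothetical scores on a 0.5-point scale (NOT data from the study).
group_a = [1.5, 1.5, 1.0, 1.5, 0.5, 1.5]
group_b = [1.0, 0.5, 1.5, 1.0, 0.5, 1.0]
rank_sum_a, rank_sum_b, u, z, p = mann_whitney_z(group_a, group_b)
print(rank_sum_a, rank_sum_b, u)  # 47.5 30.5 9.5

# Consistency check on the reported "clean code" rank sums.
u_exp = u_from_rank_sum(2745.50, 52)
u_ctl = u_from_rank_sum(1814.50, 43)
print(u_exp, u_ctl, u_exp + u_ctl == 52 * 43)  # 1367.5 868.5 True
```

The consistency check relies on the identity that the two U values always sum to the product of the group sizes, so reported rank sums can be verified without access to the raw scores.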

Table 1. Students’ results for every criterion in project assignment

| Criterion | Group | Number of students | Medians | Mean rank | Sum of ranks | Mann-Whitney U test: Z | p (2-tailed) |
|---|---|---|---|---|---|---|---|
| Clean code | Experimental | 52 | 1.50 | 52.80 | 2745.50 | -2.280 | 0.023 |
| | Control | 43 | 1.50 | 42.20 | 1814.50 | | |
| Header/footer, navigation | Experimental | 52 | 3.00 | 48.82 | 2538.50 | -0.465 | 0.642 |
| | Control | 43 | 3.00 | 47.01 | 2021.50 | | |
| Tables, forms, pictures | Experimental | 52 | 3.00 | 48.80 | 2537.50 | -0.391 | 0.696 |
| | Control | 43 | 3.00 | 47.03 | 2022.50 | | |
| Responsive web design | Experimental | 52 | 4.50 | 53.01 | 2756.50 | -2.032 | 0.042 |
| | Control | 43 | 3.00 | 41.94 | 1803.50 | | |
| JavaScript functional part of the web site | Experimental | 52 | 6.50 | 53.37 | 2775 | -2.114 | 0.034 |
| | Control | 43 | 5.00 | 41.51 | 1785 | | |

The first aspect of the project that was evaluated was “clean code.” The maximum number of points that students could score for this criterion was 1.5. Since the medians for both groups in Table 1 equal 1.5, at least half of the students in each group achieved the maximum number of points. Nevertheless, the distributions of points for “clean code” differ statistically significantly between the two groups in favor of the experimental group (Z=-2.280, p=0.023), which indicates that a larger share of students in the experimental group reached the maximum number of points in this category.

Another aspect that was evaluated by the teacher (and about which the students were informed) was the quality of the students’ responses to the requirements regarding the header, footer, and navigation of the created web page. The statistical analysis showed no statistically significant differences between the control and experimental groups in the quality of this aspect of the websites (Z=-0.465, p=0.642). There are also no significant differences in the students’ results regarding the third criterion, which refers to the choice of tables, forms, and images for the website and the way in which these elements were implemented in the project assignment (Z=-0.391, p=0.696). As the maximum number of points for each of these two criteria is 3 and the median for both criteria equals 3 in both groups, we can conclude that the students generally met these requirements very well, regardless of the group they belonged to.

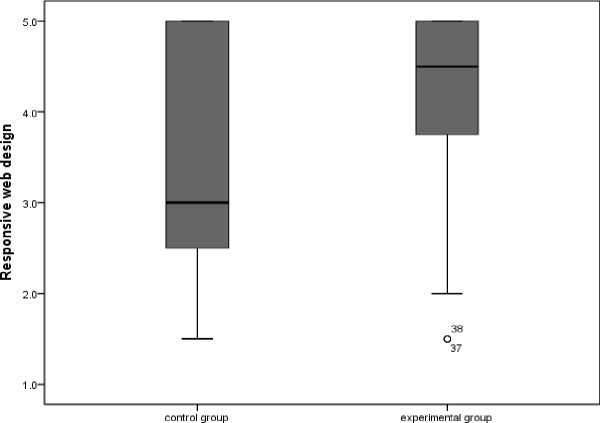

The next criterion considered during the evaluation of the project assignment by the teacher was related to the responsiveness of the website, which represents one of the two most important segments of the student project assignment.

Figure 2. Distribution of points earned by students for web page responsiveness.

The Mann-Whitney test shows that the distributions of points in the experimental group and in the control group differ statistically significantly (Z=-2.032, p=0.042). Together with the results presented in Fig. 2 and Table 1, this implies that the students of the experimental group met the requirements of this extremely important aspect of website creation, i.e., web programming, to a greater extent.

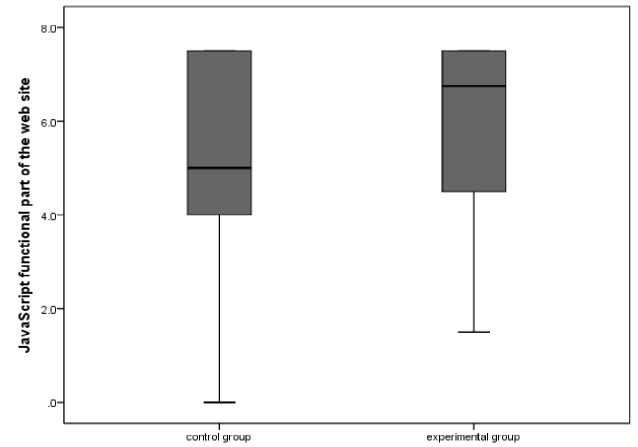

Figure 3. Distribution of points earned by students for the JavaScript functional component of their webpages

The last criterion, and at the same time the most important one for the successful creation of a website, is the use of JavaScript code. Out of a total of 20 points provided for the evaluation of the project, students could earn 7.5 points for JavaScript functionality. The medians for this criterion (6.5 points in the experimental group and 5 points in the control group) point to an effect of peer feedback on students’ success (see Fig. 3 and Table 1). The Mann-Whitney test confirmed that the difference in the functionality of the students’ projects is statistically significant (Z=-2.114, p=0.034) in favor of the experimental group.
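The point values mentioned in this section can be collected into a small scoring sketch. The maxima for clean code (1.5), header/footer and navigation (3), tables, forms, and pictures (3), and JavaScript (7.5), as well as the total of 20, come from the text. The maximum for responsive web design is not stated; the value below is inferred as the remainder, under the assumption that the five criteria add up exactly to 20 points. The student scores are hypothetical.

```python
TOTAL_MAX = 20.0

# Maxima stated in the text.
MAX_POINTS = {
    "clean_code": 1.5,
    "header_footer_navigation": 3.0,
    "tables_forms_pictures": 3.0,
    "javascript": 7.5,
}
# Assumption: the five criteria sum to TOTAL_MAX, so responsiveness gets the rest.
MAX_POINTS["responsive_design"] = TOTAL_MAX - sum(MAX_POINTS.values())


def total_score(scores):
    """Validate per-criterion points against the rubric maxima and sum them."""
    if set(scores) != set(MAX_POINTS):
        raise ValueError("scores must cover exactly the five rubric criteria")
    for criterion, points in scores.items():
        if not 0 <= points <= MAX_POINTS[criterion]:
            raise ValueError(f"{criterion}: {points} is outside the allowed range")
    return sum(scores.values())


# Hypothetical student, not taken from the study data.
student = {
    "clean_code": 1.5,
    "header_footer_navigation": 3.0,
    "tables_forms_pictures": 3.0,
    "responsive_design": 4.5,
    "javascript": 6.0,
}
print(MAX_POINTS["responsive_design"], total_score(student))
```

Under this assumption, responsiveness and JavaScript together account for 12.5 of the 20 points, which is consistent with the text describing them as the two most important segments of the assignment.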

Table 2. Results of the Mann-Whitney test for total number of points for the project assignment

| Group | Number of students | Medians | Mean rank | Sum of ranks | Test of difference between arithmetic means: Z | p (2-tailed) |
|---|---|---|---|---|---|---|
| Experimental | 52 | 18.00 | 54.30 | 2823.50 | -2.460 | 0.014 |
| Control | 43 | 15.00 | 40.38 | 1736.40 | | |

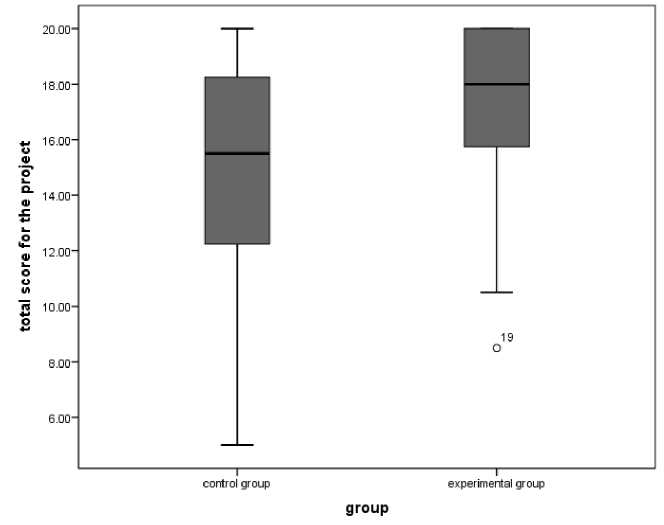

All the differences in the considered criteria were reflected in the overall result of the project assignment, i.e., the website created by the students on the given topic. Table 2 shows that students who made formative assessments of their colleagues’ work, and who had the opportunity to review and correct their own work after receiving their colleagues’ comments and suggestions (whenever they judged that acting on those comments could improve their website), achieved better results: the median of the total number of points is 18 in the experimental group and 15 in the control group. The Mann-Whitney test confirmed this difference (Z=-2.460, p=0.014), so we can claim that the peer feedback process contributed to the statistically significantly better achievements of the students of the experimental group. This is one of the most significant results of this research.

Figure 4. Distribution of total points earned by students for their project assignment
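The rank sums and Z values reported in Tables 1 and 2 are linked by a simple relation, which the sketch below works through for the experimental group. It is a reader's consistency check under stated assumptions, not part of the authors' analysis: Z is computed here without a tie correction, so its magnitude should come out slightly smaller than the reported (presumably tie-corrected) values. The function name `u_and_z_from_rank_sum` is hypothetical.

```python
import math

N_EXP, N_CTRL = 52, 43  # group sizes from Tables 1 and 2


def u_and_z_from_rank_sum(rank_sum_exp, n1=N_EXP, n2=N_CTRL):
    """Recover U and an uncorrected Z from the experimental group's rank sum."""
    u_exp = rank_sum_exp - n1 * (n1 + 1) / 2
    u_ctrl = n1 * n2 - u_exp
    u = min(u_exp, u_ctrl)
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)  # no tie correction
    z = (u - n1 * n2 / 2) / sigma
    return u, z


# (experimental rank sum, reported Z) as listed in Tables 1 and 2.
reported = {
    "Clean code": (2745.50, -2.280),
    "Header/footer, navigation": (2538.50, -0.465),
    "Tables, forms, pictures": (2537.50, -0.391),
    "Responsive web design": (2756.50, -2.032),
    "JavaScript functional part": (2775.00, -2.114),
    "Total points": (2823.50, -2.460),
}

for name, (rank_sum, z_reported) in reported.items():
    u, z = u_and_z_from_rank_sum(rank_sum)
    print(f"{name}: U={u:.1f}, uncorrected Z={z:.3f}, reported Z={z_reported:.3f}")
```

For every row, the uncorrected Z has the same sign as the reported value and a somewhat smaller magnitude, as expected when many students share the same number of points.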

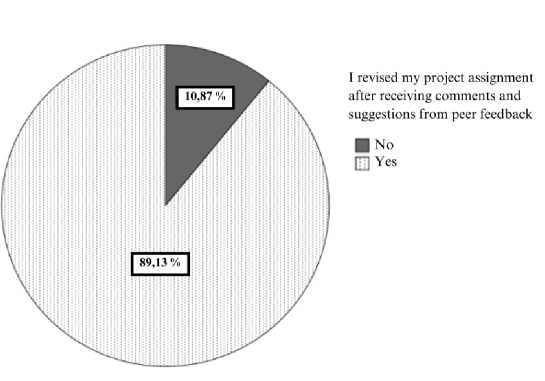

In addition to the analysis of the students’ results presented above, we were particularly interested in whether the students revised their work based on the comments they received from their colleagues. Based on the graph presented in Figure 5, we can conclude that out of 46 surveyed students, only 5 did not revise their work, i.e., 89.13% of students additionally analyzed their project assignment, which indicates that students took the comments they received from their colleagues seriously. This is also a significant result of this research because it supports the view that comments and suggestions from peer feedback contribute to higher cognitive levels of learning as well as to the development of critical thinking among students, which is one of the goals of higher education in general.

Figure 5. Number of students that revised their project assignment after receiving comments and suggestions from peer feedback

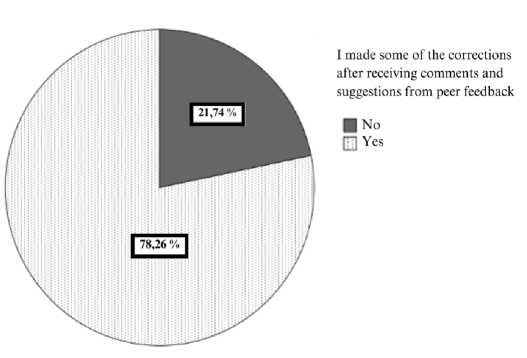

With the second question in the survey, we wanted to determine what percentage of students made changes to their work based on the comments and suggestions they received from their colleagues. As many as 36 out of 46 (78.26%) surveyed students made at least some of the corrections that their colleagues advised and suggested. This indicates that students, in general, seriously evaluated their projects, which represents another significant result of this methodological approach in the web programming course.

Figure 6. Number of students that made some of the corrections after receiving comments and suggestions from peer feedback.

With the last item in the questionnaire, we wanted to determine what kind of improvements students made (if they made any) after reading and thinking about the peer feedback they received. Therefore, the questionnaire included an open-ended question asking students to describe the changes they made. After analyzing their responses, we categorized them according to the criteria given in the feedback form. We must emphasize that some students made changes regarding more than one criterion, and that some students stated that they did not agree with some of the comments and suggestions they received, explaining that they did not believe the proposed changes would improve their project assignment.

Table 3. Number of changes that students made according to the comments and suggestions they received from their peer colleagues

| Criterion | Number of changes |
|---|---|
| Changes concerning header and/or footer corrections, images, menus, personal presentation pages, tables, forms, events, and functions | 24 |
| Changes concerning the responsiveness of the site | 12 |
| Changes concerning the content of the page | 9 |
| Changes concerning aesthetic components (choice of colors and text) | 18 |
| Changes regarding the visibility and intuitiveness of the site | 7 |
| Changes in JavaScript code | 19 |

Table 3 shows that students mostly made the changes that they believed would improve their project assignment. Two-thirds of the students who made changes (24 of 36) adjusted the header and/or footer, images, menus, the personal presentation page, tables, forms, functions, or events. Nineteen students improved the functionality of their website by improving their JavaScript code, which is more than half of the students who made some adjustments. Fifty percent of the students who made some adjustments (18) made their website aesthetically better, and one-third (12) tried to improve the responsiveness of the website. Changes that refer to the visibility and intuitiveness of the website (7) and its content (9) were less frequent.
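The shares quoted in this part of the results can be reproduced with a few lines of arithmetic. The counts are taken from the text and Table 3; the shortened category names and the choice of the 36 students who made changes as the base for the per-category shares are assumptions made for this sketch.

```python
SURVEYED = 46
DID_NOT_REVISE = 5
MADE_CHANGES = 36

# Counts from Table 3 (category names shortened for readability).
changes = {
    "header/footer, images, menus, tables, forms, events, functions": 24,
    "responsiveness": 12,
    "content": 9,
    "aesthetics": 18,
    "visibility and intuitiveness": 7,
    "JavaScript code": 19,
}


def share(count, base):
    """Percentage of `base` represented by `count`, rounded to two decimals."""
    return round(100 * count / base, 2)


revised_share = share(SURVEYED - DID_NOT_REVISE, SURVEYED)
changed_share = share(MADE_CHANGES, SURVEYED)
category_shares = {name: share(n, MADE_CHANGES) for name, n in changes.items()}

print(revised_share, changed_share)
for name, pct in category_shares.items():
    print(f"{name}: {pct}%")
```

The counts in Table 3 add up to more than 36 because, as noted above, some students made changes regarding more than one criterion.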

Discussion

During the design of the research, we opted for a synchronous approach, establishing a two-way peer-to-peer dialogue that involved exchanging comments and suggestions as well as responding to received feedback (Adachi, Tai, and Dawson, 2018). The students’ analysis of their peers’ work, along with a reflective review of their own work in line with the received comments and advice for improvement, influenced their ability to provide peers with constructive criticism and to develop essential cognitive functions such as critical thinking, as reported in previous research (Wang et al., 2012). All students fulfilled their obligations to provide feedback and respond to it, made efforts to be objective, and no comments were interpreted personally. Given this, the significant number of students who genuinely considered their peers’ advice and suggestions for improving their work indicates that participating in these activities in an online environment contributes to a well-accepted and constructive dialogue (Liu and Carless, 2006). This result aligns with the findings of Falchikov and Goldfinch (2000), who concluded in their meta-analysis that students predominantly provide reasonable and objective comments.

Our results suggest that students thrived in acquiring knowledge and skills related to website creation through the given activities, in which they had the opportunity to analyze their peers’ work and compare their own approaches to solving specific problems with those of their peers (Chang, Tseng and Lou, 2012). The research results indicate that participating in peer assessment through formative evaluation of peers’ work (Topping, 2017) supports the learning process and leads to better achievements (Lu and Bol, 2007).

Limitations and recommendations for future research

In this study, we analyzed the peer feedback that students provided for their fellow students, with the aim of helping students achieve better results and critically reflect on their work. In this process, we did not take into account how students were distributed into groups with respect to their achievements, learning styles, and other characteristics. Considering these aspects could be a direction for future related research.

Conclusions

Based on all the results obtained, we can conclude that integrating the peer feedback process into the project assignment, which consisted of creating a website on a given topic, gives good results. We analyzed five criteria to examine the influence of this approach in more detail. Statistically significant differences were found for three of them: clean code; responsiveness of the website, which is a very important part of the task given that websites are accessed from different devices in everyday situations; and JavaScript functionality, a very important part of web programming and website creation. For the remaining technical aspects (headers and footers, navigation, tables, forms, and pictures), the differences between the students who did not have the opportunity to write feedback and read the feedback written for their assignments and the students who did have that opportunity were not statistically significant. Nevertheless, the differences in clean code, responsiveness of the website, and JavaScript functionality were reflected in the students’ total scores. This confirms our hypothesis that the web-based peer feedback process contributes to better students’ achievements in creating a website compared with the achievements of the students who did not have that opportunity. Additionally, many of the students reviewed their work, and in many cases, they also made changes that they believed would improve their projects. This illustrates that students were aware of the advantages of the web-based peer feedback process for their project assignment, as it gave them the possibility of improving it. Having all the results in mind, we can conclude that the use of the web-based peer feedback process contributes to better students’ achievements while creating their project assignments in a web programming course.

When specific solutions for given problems or requirements from various sectors of society are created through programming, the way an individual (student) perceives the problem and its solution may vary to some extent from person to person. Obtaining qualitative peer feedback can show the student that the given problem can be solved in different ways and that various principles and ideas can be considered. Therefore, the possibility for computer science students to write and receive peer feedback should be taken more seriously, and these activities should be practiced more frequently with students during their academic studies.

Conflict of interests

The authors declare no conflict of interest.

Acknowledgments

This work was supported by the Erasmus+ Programme of the European Union under the project Master Degree in Integrating Innovative STEM Strategies in Higher Education (Project Reference: 2024-1-BG01-KA220-HED-000253761).

Additional support was provided by the Ministry of Science, Technological Development and Innovation of the Republic of Serbia, under the research program for 2025 (Agreements No. 451-03-137/2025-03/200124 and No. 451-03-137/2025-03/200122).

Author Contributions

Conceptualization, J.M. and A.M.; methodology, J.M. and A.M.; software, J.M. and A.M.; formal analysis, J.M. and A.M.; writing (original draft preparation), J.M. and A.M.; writing (review and editing), J.M. and A.M. All authors have read and agreed to the published version of the manuscript.