Implementation of Computer Vision Based Industrial Fire Safety Automation by Using Neuro-Fuzzy Algorithms

Authors: Manjunatha K.C., Mohana H.S., P.A. Vijaya

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: Vol. 7, No. 4, 2015

Open access

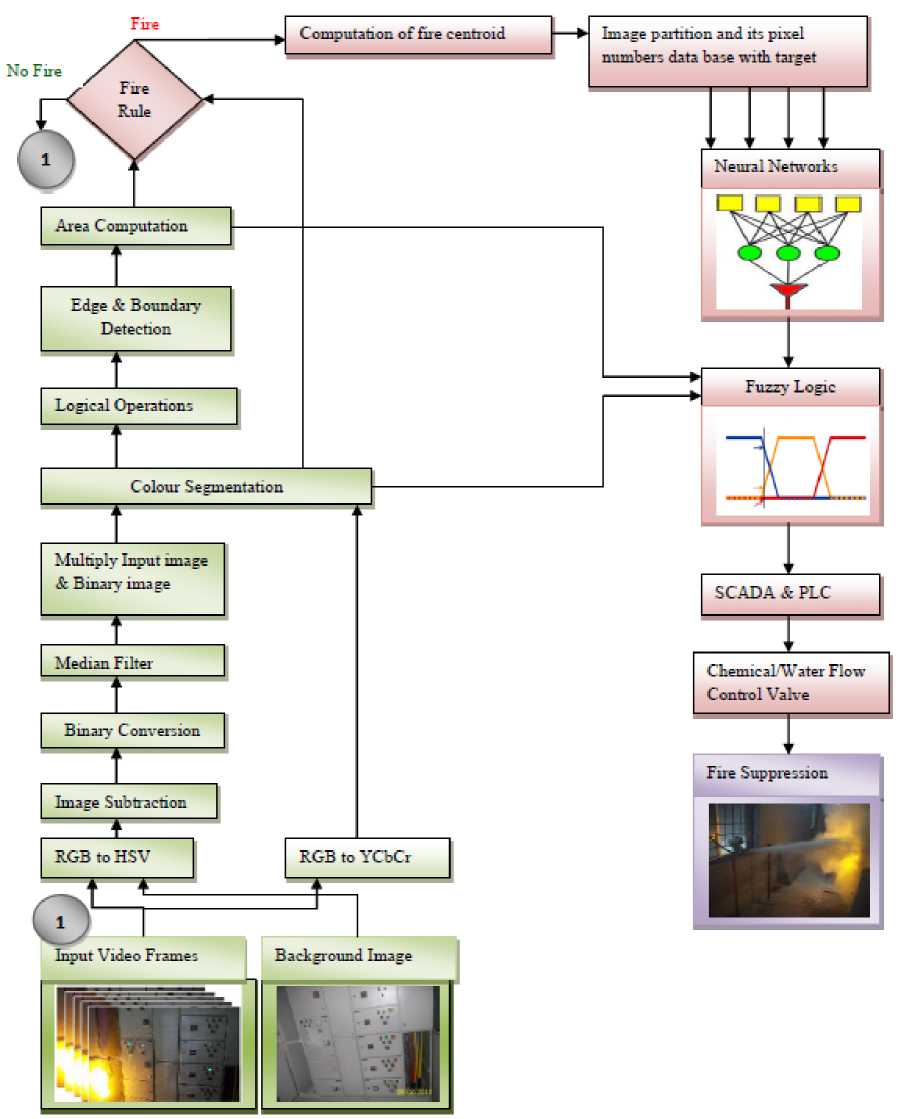

This paper presents a computer vision-based automated fire detection and suppression system for manufacturing industries. An automated fire suppression system plays a significant role in an Onsite Emergency System (OES), as it can prevent accidents and losses to the industry. A rule-based generic collective model for fire pixel classification is proposed for a single camera with multiple fire suppression chemical control valves. A neuro-fuzzy algorithm is used to identify the exact location of fire pixels in the image frame. Fuzzy logic is then used to identify the valve to be controlled, based on the area of the fire and the intensity values of the fire pixels. The fuzzy output is passed to a supervisory control and data acquisition (SCADA) system, which generates suitable analog values for control valve operation based on the fire characteristics. Results for both the fire identification and the suppression systems are presented. The proposed method achieves up to 99% accuracy in fire detection and automated suppression.

Onsite Emergency System, SCADA, PLC, Weighted Centroid, Fire Pixel Number, Neuro-Fuzzy Algorithm

Short URL: https://sciup.org/15012268

IDR: 15012268

Published Online March 2015 in MECS

Vision-based fire detection and automated suppression (VFDAS) systems are among the most important safety mechanisms in manufacturing and process industries. They are even more critical in industries that use oil, gas and petrochemicals as fuels. A fast automated detection system has to be ready in order to prevent fire accidents and avoid loss of life and property. VFDAS is a newly developed technique based on computer vision, image processing and neuro-fuzzy algorithms. Vision-based fire detection (VFD) has many advantages over traditional methods, such as fast response, non-contact operation and no installation limitations. Currently, most fire detection systems use sensors that detect smoke, a rise in temperature and so on. They need considerable time to respond, because the products of fire (e.g., smoke or heat) must first reach the sensors. The sensors also have to be carefully placed in selected locations. Such a sensor-based fire detection system is unsuitable for large industries with open spaces, where the products of combustion may never reach the sensors, which reduces the likelihood of detection.

A vision-based fire detection and automated suppression system offers several advantages. First, the cost is lower, as the system is based on cameras and industries are mostly already equipped with CCTV for surveillance. SCADA and PLC systems may also be present where there is process automation. Second, the response is faster, as the system does not have to wait for any product of combustion. The fire suppression chemical control valve becomes operational at the very start of the fire, reducing the scope for the fire to spread. Finally, in case of a false alarm, confirmation can be done from a control room without rushing to the location.

As it is important to have a fast fire detection and suppression system, a computer vision-based technique is proposed in this paper. The paper first focuses on video and image processing for flame pixel detection. Once the fire is confirmed, the focus moves to computing the location and intensity of the fire using neuro-fuzzy algorithms. Finally, attention turns to operating the fire suppression chemical control valve through SCADA, PLC and I/O configuration.

In industries, critical areas that are prone to fire accidents are identified by a survey. CCD cameras are installed in these areas with proper scene planning, along with a number of fire suppression chemical control valves connected to a centralized chemical pumping station. A series of image frames is acquired from the continuous video stream. These frames are processed against a stored image taken when the situation is normal, which is considered the background image. Successive frames (i-th and (i+1)-th) of the video stream and the normal background image (j) are processed at a time. In the first stage, fire rules are applied to identify the fire pixels. In the second stage, the particular location where the fire has occurred is identified using a neural network. Finally, the fire suppression chemical control valve at that location is operated with the help of fuzzy logic. Control system communication is done through PROFIBUS and I/O signal conditioning circuits.

The paper is structured as follows. Section II reviews literature on recent developments in computer vision and their applications. Section III defines the problem based on a fundamental study and practical experience, and Section IV examines the characteristics of fire in depth for the implementation. Sections V and VI frame the methodologies for the automated fire suppression system and describe its sequential implementation. Sections VII and VIII present the results of each stage and discuss them in terms of the performance of the implemented system.


II. Related Works

Several approaches have been suggested in the literature for identifying fire using a variety of image processing techniques, but very few systems have attempted automated fire suppression. Tao Chen, Hongyong Yuan, Guofeng Su and Weicheng Fan (2004) experimented with an automatic fire searching and suppression system with remote-controlled fire monitors for large spaces. Their fire searching method is based on computer vision using one CCD camera fixed at the end of a fire monitor chamber. A sensor-based automated firefighting system with smoke and temperature detection was attempted by Mohammad Jane Alam Khan, Muhammed Rifat Imam, Jashim Uddin and M. A. Rashid Sarkar (2012). An autonomous fire extinguishing system that detects, targets, and extinguishes a fire within a working space using heat sensors, a flow control system, servo motors, and a water extinguishing gun was implemented by A. Rehman, N. Masood, S. Arif and U. Shahbaz (2012). Andrey N. Pavlov and Evgeniy S. Povemov (2009) conducted an experiment to test an automatic fire gas explosion suppression system. Changwoo Ha, Ung Hwang, Gwanggil Jeon, Joongwhee Cho and Jechang Jeong (2012) proposed a vision-based fire detection algorithm using optical flow. Tian Qiu, Yong Yan and Gang Lu (2012) addressed the determination of flame or fire edges. A rule-based generic color model for flame pixel classification was proposed by Turgay Çelik and Hasan Demirel (2008). Bo-Ho Cho, Jong-Wook Bae and Sung-Hwan Jung (2008) studied an automatic fire detection system without heuristic fixed threshold values and presented an automatic method using a statistical color model and a binary background mask. A multisensor fire detection algorithm was suggested by Kuo L. Su (2006). Hideaki Yamagishi and Jun'ichi Yamaguchi (1999) developed a method in which a fire flame is detected by calculating space-time fluctuation data on the contour of the flame area extracted by color information.

A hybrid clustering algorithm for fire detection was proposed by Ishita Chakraborty and Tanoy Kr. Paul (2010) and analyzed against a color-based thresholding method. Dengyi Zhang, Shizhong Han and Jianhui Zhao (2009) proposed a real-time forest fire detection algorithm using artificial neural networks based on the dynamic characteristics of fire regions segmented from video images. The fire region is obtained from the image with the help of threshold values in the HSV color space. A fire detection model based on a fuzzy neural network was studied by Quanmin Guo, Junjie Dai and Jian Wang (2010), and simulation experiments were carried out for smoldering fire SH1 and flaming fire SH3 of the China national standard test fires. The simulation study shows that the model combines the advantages of fuzzy systems and neural networks, improves the intelligence of fire detection, and adapts better to the environment. Guo Jian, Zhu Jie and Zhao Mingru (2009) applied a self-adaptive neural fuzzy network to the early detection of conveyor belt fire. The network has four inputs: temperature, rate of temperature change, carbon monoxide density and rate of change of CO density. In 2004, the fire detection method proposed by Chen et al. adopted an RGB color-based chromatic model and used disorder measurement. They used the intensity and saturation of the red component and segmentation by image differencing. In 2007, Lee and Han also used RGB color input video for real-time fire detection in a tunnel environment, with many predetermined threshold values.


III. Problem Definition

Fire is a major threat for large and medium scale industries involved in production or processing. Fire accidents result in loss of life and property. They damage goodwill, severely affect the environment, and may affect neighboring industries. A survey conducted by the Federation of Indian Chambers of Commerce and Industry (FICCI) in 2010-11 reported as many as 22,187 fire-related calls resulting in 447 deaths and 2,613 injuries across India. The risk of fire has been rated among the top six risks in India. A survey conducted by Allianz Global Corporate & Specialty (AGCS) in January 2013 found that fire and explosion had replaced "economic risk" as the third most important forward-looking risk for the year ahead. Fig. 1 shows fire explosions in different industrial areas along with the current suppression system. A fire at the motor control center of a steel plant due to a short circuit is shown in Figs. 1(a) and 1(b). Fig. 1(c) shows a fire caused by improper closing of the manhole door of a steel making furnace. A fire on an electrical cable tray due to overheating is shown in Fig. 1(d). A fire explosion at a power plant transformer station is shown in Figs. 1(e) and 1(f). Figs. 1(g) and 1(h) show fire explosions in the natural gas and oil refinery industries. Currently available manual fire suppression systems are shown in Figs. 1(i) and 1(j).

Fig. 1. (a) & (b) Fire at the motor control center of a steel plant due to a short circuit, (c) Improper closing of steel furnace door caused fire leakage, (d) Fire in electrical cable tray, (e) & (f) Fire at power plant substation, (g) Fire explosion at natural gas plant, (h) Fire explosion at oil refinery industry, (i) & (j) Currently available manual fire suppression system.

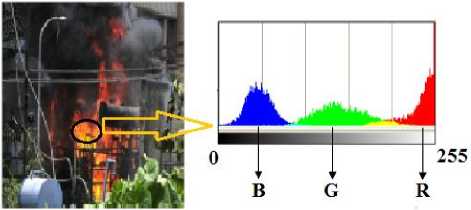

Fig. 2. Average RGB values of fire region.

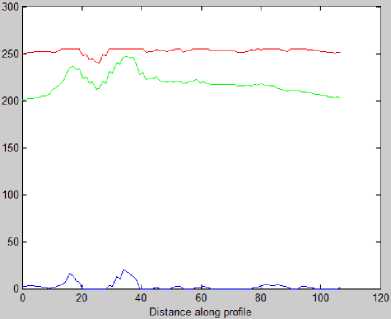

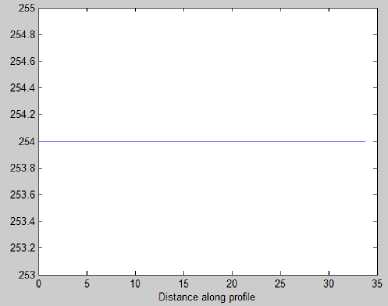

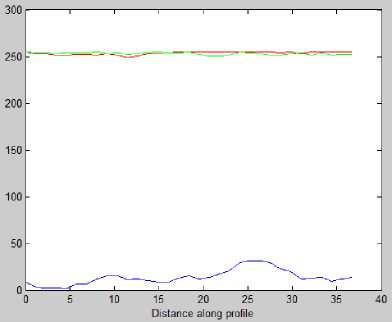

Fig. 3. (b) RGB intensity values of circle-marked pixels in the fire-like sodium bulb image (a). (d) RGB intensity values of circle-marked pixels in fire image (c). (f) RGB intensity values of circle-marked pixels in fire image (e).

IV. Characteristics of Fire

It is well known that fire has unique visual signatures. The colour, geometry, and motion of the fire region are features for efficient classification. The shape of the fire region keeps changing and exhibits a stochastic motion, which depends on surrounding environmental factors such as the type of burning material and wind. The major useful features for detecting fire are colour, randomness of fire area size, fire boundary roughness, surface coarseness, skewness and spatial distribution. Fire has very distinct colour characteristics, and although empirical, colour is the most powerful single feature for finding fire in video sequences.

Based on tests with several images at different resolutions and in different scenarios, it is reasonable to assume that the colour of flames generally belongs to the red-yellow range. Laboratory experiments by C. E. Baukal, Jr. (2001) show that this is the case for hydrocarbon flames, which are the most common type of flame seen in nature. For this type of flame, it is observed that for a given fire pixel the value of the red channel is greater than that of the green channel, and the value of the green channel is greater than that of the blue channel, as illustrated in Fig. 2.

The RGB intensity values of specifically marked pixels of the images are plotted in Fig. 3. A fire-like image and its pixel intensity values are shown in Figs. 3(a) and 3(b). Fire images and the pixel values of their various regions are shown in Figs. 3(c), 3(d), 3(e) and 3(f).

V. Methodologies

A. RGB to HSV Conversion

In this work, RGB data obtained from a color camera is transformed into HSV data. In the HSV color space, gray-scale and color information are in separate channels. If a pixel's color maps into the flame color region of the HSV space, the pixel is regarded as belonging to a flame-colored area of the input image. The color information is used for quick attention. This method does not suffer from the contrast distortion issues seen in RGB-based combination.

RGB to HSV color space conversion can be done using the following set of equations:

$$V = \max(R, G, B) \tag{1}$$

$$S = \begin{cases} \dfrac{V - \min(R, G, B)}{V}, & V \neq 0 \\ 0, & V = 0 \end{cases} \tag{2}$$

If S = 0, then H = 0. If R = V, then

$$H = \begin{cases} \dfrac{60\,(G - B)}{V - \min(R, G, B)}, & G \geq B \\ 360 + \dfrac{60\,(G - B)}{V - \min(R, G, B)}, & G < B \end{cases} \tag{3}$$

If G = V, then

$$H = 120 + \dfrac{60\,(B - R)}{V - \min(R, G, B)} \tag{4}$$

If B = V, then

$$H = 240 + \dfrac{60\,(R - G)}{V - \min(R, G, B)} \tag{5}$$

B. Image Subtraction

The subtraction of two images is performed in a single pass. The output pixel values are given by:

$$Q(i, j) = P_1(i, j) - P_2(i, j) \tag{6}$$

where $P_1$ and $P_2$ are the input images, $Q$ is the output image, and $(i, j)$ indexes the pixel column and row.

C. Binary Conversion

Binary images are obtained from gray-scale images by thresholding operations. A thresholding operation chooses some of the pixels in the image as foreground pixels, which make up the objects of interest, and the rest as background pixels. Binary images are the simplest to process, and the outline of an object is easily obtainable from them.

• Binary transformation map $b : C \to \{0, 1\}$

• Thresholded image $b \circ I : X \to \{0, 1\}$

• For each $x \in X$,

$$b(x) = \begin{cases} 1, & \text{if } x \text{ belongs to an object of interest} \\ 0, & \text{otherwise} \end{cases}$$
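Subsections A to C can be sketched together in a few lines of plain Python. This is only an illustrative sketch, not the authors' implementation: the function names, the tiny test images and the threshold of 50 are assumptions, and the subtraction uses an absolute difference (rather than the plain difference of the subtraction equation) so that the result stays non-negative before thresholding.

```python
def rgb_to_hsv(r, g, b):
    """Convert one 8-bit RGB pixel to (H in degrees, S in [0, 1], V in [0, 255])."""
    v = max(r, g, b)
    delta = v - min(r, g, b)
    s = delta / v if v != 0 else 0.0
    if s == 0:
        return 0.0, 0.0, v              # gray pixel: hue is set to 0
    if r == v:
        h = 60.0 * (g - b) / delta
        if g < b:
            h += 360.0
    elif g == v:
        h = 120.0 + 60.0 * (b - r) / delta
    else:                               # b == v
        h = 240.0 + 60.0 * (r - g) / delta
    return h, s, v


def subtract(frame, background):
    """Pixel-wise absolute difference of two equally sized gray images (assumption)."""
    return [[abs(p1 - p2) for p1, p2 in zip(row1, row2)]
            for row1, row2 in zip(frame, background)]


def to_binary(gray, threshold):
    """1 for foreground pixels (value above threshold), 0 for background."""
    return [[1 if p > threshold else 0 for p in row] for row in gray]


# Hypothetical 2x3 gray images: a stored background and a frame with bright pixels.
background = [[10, 10, 10], [10, 10, 10]]
frame = [[12, 200, 220], [10, 9, 180]]
mask = to_binary(subtract(frame, background), threshold=50)
```

With these inputs, `mask` marks only the three pixels that differ strongly from the background, and `rgb_to_hsv(255, 255, 0)` gives a hue of 60°, the yellow end of the flame range discussed in this paper.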

D. Impulse Noise and Median Filtering

The PDF of impulse noise is given by

$$p(z) = \begin{cases} P_a, & z = a \\ P_b, & z = b \\ 0, & \text{otherwise} \end{cases}$$

Noise impulses can be negative or positive. Negative impulses appear as black (pepper) points and positive impulses appear as white (salt) points.

Median filtering: The best-known filter for noise reduction is the median filter, which replaces the value of a pixel by the median of the gray levels in the neighborhood of that pixel:

$$\hat{f}(x, y) = \underset{(s, t) \in S_{xy}}{\operatorname{median}} \{ g(s, t) \} \tag{9}$$

where $S_{xy}$ denotes the neighborhood of the pixel at $(x, y)$. The original value of the pixel is included in the computation of the median.
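A minimal sketch of the median filter of Eq. (9) is given below, written in plain Python on a list-of-lists image. The 3x3 window, the clipping of windows at the image border, and the use of the upper median for even-sized windows are assumptions made for this illustration.

```python
def median_filter(img, size=3):
    """Median-filter a 2-D list image; windows are clipped at the image border."""
    rows, cols = len(img), len(img[0])
    half = size // 2
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            # Neighborhood S_xy, including the pixel (x, y) itself.
            window = sorted(
                img[t][s]
                for t in range(max(0, y - half), min(rows, y + half + 1))
                for s in range(max(0, x - half), min(cols, x + half + 1))
            )
            out[y][x] = window[len(window) // 2]
    return out


noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],   # salt impulse
    [10, 10, 0, 10],     # pepper impulse
    [10, 10, 10, 10],
]
clean = median_filter(noisy)
```

In this toy image both the salt and the pepper impulse are replaced by the surrounding gray level, so every pixel of `clean` equals 10.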

E. Decision Rules for fire pixels

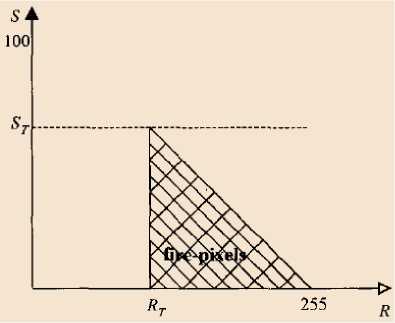

The first phase of the proposed fire-detection algorithm is based on the RGB color model. The hue value of fire pixels is in the range of 0° to 60°, and the corresponding RGB values satisfy the conditions R > G and G > B, i.e., the color range from red to yellow. Although fire flames can have various colors, the initial flame frequently displays a red-to-yellow color. Thus, the condition for detecting fire color in the proposed method is R > G > B. In addition, the value of the R component should be over a threshold $R_T$. To avoid the effect of background illumination, the saturation value of the fire flame must also be over some threshold.

Three rules are used for identifying fire pixels in an image:

Rule 1: $R > R_T$

Rule 2: $R > G > B$

Rule 3: $S > (255 - R) \cdot S_T / R_T$ (10)

FIRE = ((Rule 1 AND Rule 2) OR Rule 3) (11)

Fig. 4. Relation between R component and saturation.

In Rule 3, $S_T$ denotes the value of saturation when the value of the R component is $R_T$ for the same pixel. Based on the concept that the saturation degrades as the R component increases, we obtain the expression $(255 - R) \cdot S_T / R_T$. The relation between the R component and saturation for the extracted fire pixels is shown in Fig. 4.
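The three rules can be sketched as a small per-pixel test. The threshold values `R_T = 125` and `S_T = 0.25` (saturation on a 0 to 1 scale) are hypothetical and are not taken from the paper. The default branch combines the rules exactly as printed in Eq. (11); the `strict` flag is an assumption added for this sketch and requires all three rules to hold together.

```python
R_T = 125     # assumed red threshold (hypothetical value)
S_T = 0.25    # assumed saturation at R = R_T, 0-1 scale (hypothetical value)


def saturation(r, g, b):
    """HSV saturation in [0, 1]."""
    v = max(r, g, b)
    return (v - min(r, g, b)) / v if v else 0.0


def is_fire_pixel(r, g, b, r_t=R_T, s_t=S_T, strict=False):
    """Apply Rules 1-3 to one 8-bit RGB pixel."""
    rule1 = r > r_t
    rule2 = r > g > b
    rule3 = saturation(r, g, b) > (255 - r) * s_t / r_t
    if strict:
        return rule1 and rule2 and rule3      # assumed stricter variant
    return (rule1 and rule2) or rule3         # Eq. (11) as printed


print(is_fire_pixel(230, 140, 40))   # orange flame-like pixel
print(is_fire_pixel(60, 60, 60))     # gray background pixel
```

One consequence of the OR in Eq. (11) is that any strongly saturated pixel passes Rule 3 on its own, whatever its hue; for example `is_fire_pixel(40, 80, 200)` is true for a saturated blue pixel, while the `strict` variant rejects it.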

In the second phase, fire pixel classification is done in the YCbCr colour space, which is better suited to the task. The RGB colour space has the disadvantage of illumination dependence; furthermore, it is not possible to separate an RGB pixel's value into intensity and chrominance. Chrominance, rather than intensity, can be used to model the colour of fire.

The conversion from RGB to YCbCr colour space is formulated as follows:

$$\begin{bmatrix} Y \\ Cb \\ Cr \end{bmatrix} = \begin{bmatrix} 0.2568 & 0.5041 & 0.0979 \\ -0.1482 & -0.2910 & 0.4392 \\ 0.4392 & -0.3678 & -0.0714 \end{bmatrix} \begin{bmatrix} R \\ G \\ B \end{bmatrix} + \begin{bmatrix} 16 \\ 128 \\ 128 \end{bmatrix} \tag{12}$$

where Y is luminance, and Cb and Cr are the chrominance-blue and chrominance-red components, respectively. The range of Y is [16, 235], and the range of Cb and Cr is [16, 240].

For a given image, the mean values of the three components in the YCbCr colour space are defined as

$$Y_{mean} = \frac{1}{K} \sum_{i=1}^{K} Y(x_i, y_i) \tag{13}$$

$$Cb_{mean} = \frac{1}{K} \sum_{i=1}^{K} Cb(x_i, y_i) \tag{14}$$

$$Cr_{mean} = \frac{1}{K} \sum_{i=1}^{K} Cr(x_i, y_i) \tag{15}$$

where $(x_i, y_i)$ is the spatial location of the pixel, $Y_{mean}$, $Cb_{mean}$ and $Cr_{mean}$ are the mean values of the luminance, chrominance-blue and chrominance-red channels, and $K$ is the total number of pixels in the image.

The rules defined for the RGB colour space, i.e. R > G > B and R > R_mean, can be translated into the YCbCr space as

Y(x, y) > Cb(x, y) (16)

Cr(x, y) > Cb(x, y) (17)

where Y(x, y), Cb(x, y) and Cr(x, y) are the luminance, chrominance-blue and chrominance-red values at the spatial location (x, y). Equations (16) and (17) imply, respectively, that flame luminance should be greater than chrominance-blue and that chrominance-red should be greater than chrominance-blue. They can be interpreted as a consequence of the fact that the flame is saturated in the red colour channel (R). For fire pixels, the Y value is greater than the Cb value and the Cr value is greater than the Cb value [Turgay Çelik, Hasan Demirel (2008)].

Besides these two rules, since the flame region is generally the brightest region in the observed scene, the mean values of the three channels over the whole image ($Y_{mean}$, $Cb_{mean}$ and $Cr_{mean}$) contain valuable information. For the flame region, the value of the Y component is larger than the mean Y component of the overall image, while the value of the Cb component is in general smaller than the mean Cb value of the overall image. Furthermore, the Cr component of the flame region is larger than the mean Cr component. These observations have been verified in a large number of experiments with images containing fire regions and are formulated as the following rule:

$$F(x, y) = \begin{cases} 1, & \text{if } Y(x, y) > Y_{mean},\ Cb(x, y) < Cb_{mean},\ Cr(x, y) > Cr_{mean} \\ 0, & \text{otherwise} \end{cases} \tag{18}$$

Any pixel F(x, y) which satisfies the condition in Equation (18) is labelled as a fire pixel.
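The YCbCr rules can be illustrated with a short plain-Python sketch that converts each pixel with the matrix given above, computes the three channel means, and applies Eqs. (16) to (18). The function names and the 2x2 test image are assumptions; the Cb condition follows the text (Cb below its image mean).

```python
def rgb_to_ycbcr(r, g, b):
    """RGB to YCbCr conversion using the coefficients given in the paper."""
    y = 0.2568 * r + 0.5041 * g + 0.0979 * b + 16
    cb = -0.1482 * r - 0.2910 * g + 0.4392 * b + 128
    cr = 0.4392 * r - 0.3678 * g - 0.0714 * b + 128
    return y, cb, cr


def fire_mask(image):
    """image: 2-D list of (R, G, B) tuples; returns a 2-D list of 0/1 labels."""
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    k = sum(len(row) for row in ycc)                      # total pixel count K
    y_mean = sum(p[0] for row in ycc for p in row) / k
    cb_mean = sum(p[1] for row in ycc for p in row) / k
    cr_mean = sum(p[2] for row in ycc for p in row) / k
    return [
        [
            1 if (y > cb and cr > cb                      # Eqs. (16), (17)
                  and y > y_mean and cb < cb_mean and cr > cr_mean)  # Eq. (18)
            else 0
            for (y, cb, cr) in row
        ]
        for row in ycc
    ]


# Hypothetical image: three dark gray pixels and one bright orange pixel.
image = [[(30, 30, 30), (30, 30, 30)],
         [(30, 30, 30), (250, 160, 40)]]
mask = fire_mask(image)
```

Only the orange pixel is labelled as fire here: it is brighter than the image mean, its Cb value lies below the mean Cb, and its Cr value lies above the mean Cr.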


F. Edge Detection

Edge detection is done using the procedure by Canny. The purpose is to detect edges while suppressing noise at the same time.

• Smooth the image with a Gaussian filter to reduce noise and unwanted details and textures:

$$g(m, n) = G_\sigma(m, n) * f(m, n) \tag{19}$$

where

$$G_\sigma = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left[-\frac{m^2 + n^2}{2\sigma^2}\right] \tag{20}$$

• Compute the gradient of $g(m, n)$ using any of the gradient operators (Roberts, Sobel, Prewitt, etc.) to get:

$$M(m, n) = \sqrt{g_m^2(m, n) + g_n^2(m, n)} \tag{21}$$

and

$$\theta(m, n) = \tan^{-1}\left[g_n(m, n) / g_m(m, n)\right] \tag{22}$$

• Threshold $M$:

$$M_T(m, n) = \begin{cases} M(m, n), & \text{if } M(m, n) > T \\ 0, & \text{otherwise} \end{cases} \tag{23}$$

where $T$ is chosen so that all edge elements are kept while most of the noise is suppressed.

• Suppress non-maxima pixels in the edges in $M_T$ obtained above to thin the edge ridges (as the edges might have been broadened in the smoothing step). To do so, check whether each non-zero $M_T(m, n)$ is greater than its two neighbors along the gradient direction $\theta(m, n)$. If so, keep $M_T(m, n)$ unchanged; otherwise, set it to 0.

• Threshold the previous result by two different values $t_1$ and $t_2$ (where $t_1 < t_2$) to obtain two binary images $T_1$ and $T_2$. Compared to $T_1$, $T_2$ has less noise and fewer false edges but larger gaps between edge segments.

• Link the edge segments in $T_2$ to form continuous edges. To do so, trace each segment in $T_2$ to its end and then search its neighbors in $T_1$ to find any edge segment in $T_1$ that bridges the gap, until another edge segment in $T_2$ is reached.
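The gradient and thresholding steps of the Canny procedure (magnitude, direction, and the single threshold T) can be sketched as follows. This is a partial illustration only: Gaussian smoothing, non-maxima suppression and hysteresis linking are omitted, the Sobel operator is one of the operators the text allows, `atan2` is used in place of the plain arctangent to avoid division by zero, and the test image and threshold are assumptions.

```python
import math

SOBEL_M = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # derivative along rows (m)
SOBEL_N = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # derivative along columns (n)


def gradient(g):
    """Return gradient magnitude M and direction theta (radians); borders stay 0."""
    rows, cols = len(g), len(g[0])
    mag = [[0.0] * cols for _ in range(rows)]
    theta = [[0.0] * cols for _ in range(rows)]
    for m in range(1, rows - 1):
        for n in range(1, cols - 1):
            gm = sum(SOBEL_M[i][j] * g[m - 1 + i][n - 1 + j]
                     for i in range(3) for j in range(3))
            gn = sum(SOBEL_N[i][j] * g[m - 1 + i][n - 1 + j]
                     for i in range(3) for j in range(3))
            mag[m][n] = math.hypot(gm, gn)
            theta[m][n] = math.atan2(gn, gm)
    return mag, theta


def threshold(mag, t):
    """Keep magnitudes above t, set the rest to 0."""
    return [[v if v > t else 0.0 for v in row] for row in mag]


# Hypothetical 4x4 image with a vertical step edge between columns 1 and 2.
image = [[0, 0, 100, 100] for _ in range(4)]
mag, theta = gradient(image)
edges = threshold(mag, t=50)
```

For this step edge the interior pixels next to the step have a large magnitude and a gradient direction along the column axis, so they survive the threshold, while the border pixels, which this sketch leaves at zero, are suppressed.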

G. Boundary Detection and Fire Area Calculations.

The Moore-Neighbor tracing algorithm, modified by Jacob's stopping criterion, has been explored for boundary detection. Using Jacob's stopping criterion greatly improves the performance of Moore-Neighbor tracing, making it the best algorithm for extracting the contour of any pattern irrespective of its connectivity. This is largely because the algorithm checks the whole Moore neighborhood of a boundary pixel in order to find the next boundary pixel. Unlike the Square Tracing algorithm, which makes only left or right turns and misses "diagonal" pixels, Moore-Neighbor tracing is always able to extract the outer boundary of any connected component. The reason is that, for any 8-connected pattern, the next boundary pixel lies within the Moore neighborhood of the current boundary pixel. Since Moore-Neighbor tracing checks every pixel in the Moore neighborhood of the current boundary pixel, it is bound to detect the next boundary pixel. The MATLAB shape measurement property 'Area' has been applied to two consecutive frames of the input video to calculate the change in area. This is a scalar parameter equal to the actual number of pixels in the region.
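The area measurement described above can be sketched in plain Python: the area is the number of foreground pixels in a binary fire mask, and the change in area is compared across two consecutive frames. The function names, the two small masks and the `MIN_CHANGE` threshold are assumptions for illustration; boundary tracing itself is not shown.

```python
MIN_CHANGE = 0.05   # assumed minimum relative change for a "live" flame (hypothetical)


def region_area(mask):
    """Area = number of foreground (1) pixels in a binary mask."""
    return sum(sum(row) for row in mask)


def area_change(mask_prev, mask_next):
    """Relative change in fire area between two consecutive frames."""
    a0, a1 = region_area(mask_prev), region_area(mask_next)
    if a0 == 0:
        return float("inf") if a1 else 0.0
    return (a1 - a0) / a0


frame_i = [[0, 1, 1, 0],
           [0, 1, 1, 0]]
frame_i1 = [[0, 1, 1, 1],
            [0, 1, 1, 1]]
change = area_change(frame_i, frame_i1)
area_is_changing = abs(change) > MIN_CHANGE
```

A static fire-coloured object such as a lamp would give a change close to zero between frames, whereas the growing region in this example gives a relative change of 0.5.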


H. Neuro – Fuzzy algorithms

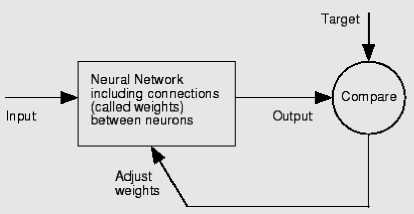

Neural networks are composed of simple elements operating in parallel. These elements are inspired by biological nervous systems. As in nature, the connections between elements largely determine the network function. You can train a neural network to perform a particular function by adjusting the values of the connections (weights) between elements. Typically, neural networks are adjusted, or trained, so that a particular input leads to a specific target output. Fig (5) illustrates such a situation. There, the network is adjusted, based on a comparison of the output and the target, until the network output matches the target. Typically, many such input/target pairs are needed to train a network.

Neural networks have been trained to perform complex functions in various fields, including pattern recognition, identification, classification, speech, vision, and control systems.
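The idea of adjusting weights by comparing the network output with a target can be illustrated with a single neuron trained by the perceptron rule. This is a deliberately small sketch and not the network used in this paper: the step activation, the learning rate, and the toy OR data set are all assumptions.

```python
def step(s):
    """Step activation: 1 when the weighted sum is positive, else 0."""
    return 1 if s > 0 else 0


def predict(w, b, x):
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(samples, lr=1, max_epochs=100):
    """Adjust weights until every input produces its target output."""
    w, b = [0] * len(samples[0][0]), 0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            err = target - predict(w, b, x)     # compare output with target
            if err:
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:                         # output matches all targets
            break
    return w, b


# Toy input/target pairs (logical OR), standing in for real training data.
samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train(samples)
outputs = [predict(w, b, x) for x, _ in samples]
```

After a few passes over the four input/target pairs the outputs match the targets, which is the stopping condition described in the paragraph above.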

Fig. 5. Neural network system.

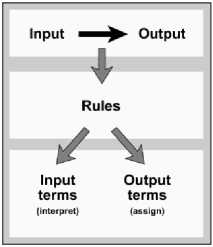

Fig. 6. General Fuzzy system.

Fig. 6 provides a roadmap for the fuzzy inference process and shows the general structure of a fuzzy system.
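A fuzzy inference step of this kind can be sketched with two inputs, fire area and average brightness, and one output, the valve opening as a fraction between 0 and 1. Everything specific in this sketch is an assumption made for illustration: the linear membership functions, their breakpoints, the four rules with their output levels, and the weighted-average defuzzification. The paper does not list its membership functions or rule base.

```python
def ramp_up(x, lo, hi):
    """Membership rising linearly from 0 at lo to 1 at hi (clamped)."""
    return min(max((x - lo) / (hi - lo), 0.0), 1.0)


def valve_opening(area_px, brightness):
    """Fuzzy estimate of the valve opening in [0, 1] (hypothetical rule base)."""
    # Fuzzification: degree to which each input is "large"/"small", "bright"/"dim".
    large = ramp_up(area_px, 1000, 5000)
    small = 1.0 - large
    bright = ramp_up(brightness, 150, 255)
    dim = 1.0 - bright
    # Rules: (firing strength using min as AND, assumed output level).
    rules = [
        (min(small, dim), 0.2),
        (min(small, bright), 0.5),
        (min(large, dim), 0.6),
        (min(large, bright), 1.0),
    ]
    # Defuzzification by weighted average of the rule outputs.
    total = sum(weight for weight, _ in rules)
    return sum(weight * level for weight, level in rules) / total


print(valve_opening(3000, 202.5))
```

Because each input always belongs to at least one of its two sets with degree 0.5 or more, the sum of firing strengths is never zero and the division is safe.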

VI. Implementation

• CCD cameras are installed in the critical areas of the industry with proper scene planning. At the level of a single scene or single camera, multiple fire suppression chemical control valves are installed and connected to a centralized chemical pumping station, as shown in Fig. 8.

• The background image is stored in the reference database and continuous video is acquired using a frame grabber.

• A median filter is applied to reduce salt-and-pepper noise.

• The input RGB image and the binary image are multiplied.

• Color segmentation is done.

• Fire area estimation is done.

• Fire pixel edge detection and fire boundary estimation are done.

• Fire area calculation is done using region properties.

• Fire rules based on the RGB, HSV and YCbCr color spaces and on the change in the fire region area are applied.

• The fire centroid pixel is identified.

• If the existence of fire is confirmed, the image is partitioned into four quadrants and the neural network is trained with the fire pixel numbers of each quadrant as inputs.

• The fire region is computed using the neural network.

Fig. 7. Block Diagram.

Automation by Using Neuro-Fuzzy Algorithms

Fig. 8.(a)

VII. Algorithms & Results

Three color spaces are used to detect fire, to ensure high reliability. Change in fire area in two consecutive input video images is estimated in order to avoid false detection. The detailed system algorithm is as follows.

> Read input image and background image - Fig 9.

Fig. 9.(a)

Fig. 9.(b)

Fig. 8. (b)

Fig. 9. (a) input img, (b) background img

Fig. 8. (c)

Fig. 8. (a) Camera installed, (b) Fire suppression control valves, (c) Centralized chemical/water pumping station.

-

> Convert the RGB image into HSV equivalent and YCbCr equivalent. Also convert background RGB image into HSV image – Fig 10.

-

> Continuously subtract the background image from input video frames - Fig 11.

-

> Convert the remainder image to binary with fixed threshold and apply median filter - Fig 12.

Fig. 10.(a)

-

• Opening and closing parameters (values between 0 and 1) of fire control valve are calculated using fuzzy logic based on the fire region area and degree of brightness of the fire.

-

• Valve control parameters are communicated via PROFIBUS to SCADA and PLC systems.

-

• Fire suppression control valve supply (Current/ Voltage) is calculated in accordance with the fuzzy output using SCADA & PLC.

Fig. 10.(b)

Fig. 10.(c)

Fig. 11. Result of image subtraction

Fig. 12.(a)

Fig. 12.(b)

-

> Multiply the input image and the binary image. Fig 13.

Fig. 13. Image multiplication output

-

> Perform color segmentation and add all segmented images – Fig. 14.

Fig. 14. (a)

Fig. 14. (b)

Fig. 14. (c)

Fig. 14. (d)

Fig. 14. (a) Red, (b) orange, (c) Yellow, (d) addition of all color segments.

-

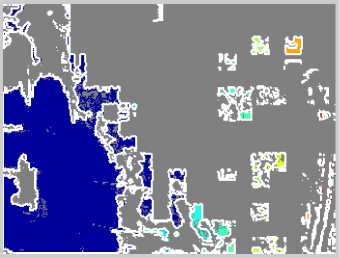

> Extract RGB, HSV and YCbCr color spaces for applying of fire rules - Fig 15.

Fig. 15.(a)

Fig. 15.(b)

Fig. 15.(c)

Fig. 15. (a) converted RGB image, (b) converted HSV image, (c) converted YCbCr.

-

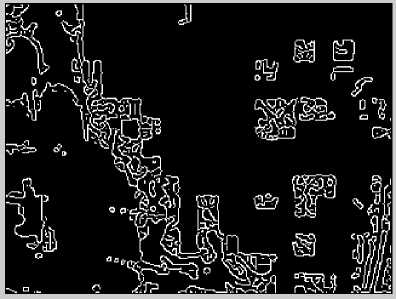

> Perform edge detection and boundary extraction -Fig 16.

Fig. 16.(b)

Fig. 16. Edge and boundary of the image

-

> Apply fire rules for detection of fire

-

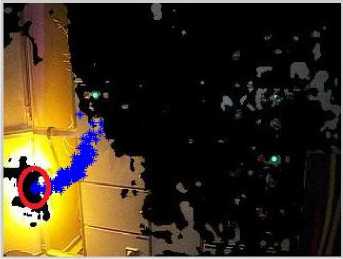

> Identify the fire region centre pixel number by using weighted centroid tool - Fig 17.

Fig. 17. Blue dotted centroid pixels in fire centre

-

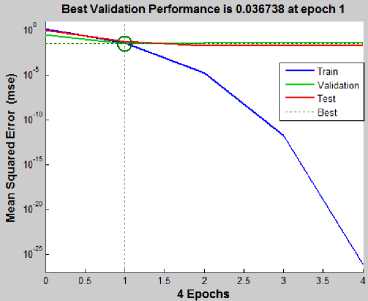

> Partition the image with equal quantity of pixels in all regions and train the neural network – Fig 18.

Fig. 16.(a)

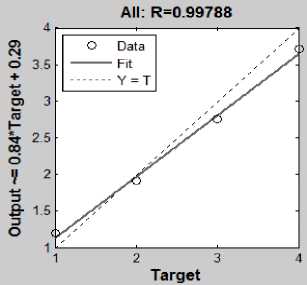

Fig. 18. (b): NN training performance

Fig. 18. (a): Four equal regions of image.

Fig. 18. (c): NN training regression

-

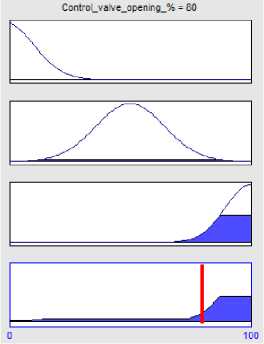

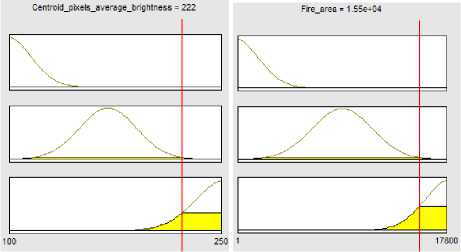

> Apply Fuzzy logic algorithm for opening and closing of fire suppression control valve - Fig 19.

-

> Convert 0 to 100% fuzzy output into 4-20 milliamps to drive the control valve by using PLC & SCADA.

Fig. 19. (b): output based on input membership functions.

-

> Finally establish communication via PROFIBUS -Fig 20.

Fig. 19. (a): Input parameter values

Fig. 20. fire suppression control valve with 80% opening.
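Three of the later algorithm steps (the weighted centroid, the four-quadrant fire pixel counts, and the 4-20 mA scaling) lend themselves to a compact sketch. The code below is illustrative only: the function names are hypothetical, the quadrant order (top-left, top-right, bottom-left, bottom-right) is an assumption that need not match the region numbers used in this paper, and the current scaling is assumed to be linear.

```python
def weighted_centroid(gray, mask):
    """Intensity-weighted centroid (row, col) of the masked fire region."""
    total = sum_r = sum_c = 0.0
    for r, (gray_row, mask_row) in enumerate(zip(gray, mask)):
        for c, (g, m) in enumerate(zip(gray_row, mask_row)):
            if m:
                total += g
                sum_r += r * g
                sum_c += c * g
    if total == 0:
        return None                     # no fire pixels (or zero intensity)
    return sum_r / total, sum_c / total


def quadrant_counts(mask):
    """Fire-pixel counts in four equal quadrants (assumed order: TL, TR, BL, BR)."""
    half_r, half_c = len(mask) // 2, len(mask[0]) // 2
    counts = [0, 0, 0, 0]
    for r, row in enumerate(mask):
        for c, m in enumerate(row):
            if m:
                counts[2 * (r >= half_r) + (c >= half_c)] += 1
    return counts


def opening_to_milliamps(opening_percent):
    """Map a 0-100 % valve opening linearly onto a 4-20 mA signal."""
    pct = min(max(opening_percent, 0.0), 100.0)
    return 4.0 + 16.0 * pct / 100.0


# Hypothetical 4x4 frame with a small fire region in the bottom-right quadrant.
mask = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
gray = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 100, 100], [0, 0, 0, 200]]
centroid = weighted_centroid(gray, mask)
counts = quadrant_counts(mask)
current = opening_to_milliamps(50)
```

The quadrant with the largest count indicates where the fire lies, and under the linear scaling assumed here a 50% opening corresponds to 12 mA.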

Table 1. Results of automated fire suppression system.

| Cases | Input image | Output image | Fire rules | N-F parameter | Automated action |
|---|---|---|---|---|---|
| Fire at steel making furnace service door | (not reproduced) | (not reproduced) | RGB √, YCbCr √, CA √ | RN 2, ABV 252, FAV 4300, CVOP 60% | (not reproduced) |
| Fire on electrical cable trays | (not reproduced) | (not reproduced) | RGB √, YCbCr √, CA √ | RN 3, ABV 195, FAV 2800, CVOP 50% | (not reproduced) |
| Fire at steel furnace backflow discharge point | (not reproduced) | (not reproduced) | RGB √, YCbCr √, CA √ | RN 4, ABV 200, FAV 1700, CVOP 50% | (not reproduced) |
| Fire-like lamp | (not reproduced) | (not reproduced) | NO FIRE | NO FIRE | (not reproduced) |

CA: Change in area, RN: Region number, ABV: Average brightness value of region (0-255), FAV: Fire area value in number of pixels, CVOP: Control valve opening percentage.

VIII. Discussion on Results

Performance analysis has been carried out using five sets of videos, which include a variety of fire image frames and fire-like image frames. The proposed system shows a very high rate of fire detection and automated fire suppression. Determining the change in fire area differentiates most fire-like regions from real fire regions. The YCbCr color space is able to separate luminance from chrominance information. Since chrominance represents color information without being affected by luminance, the chrominance-based rules and the color model defined in the chrominance plane are more reliable for fire detection. The basic challenge was the implementation of neuro-fuzzy algorithms for the automated fire suppression system. The trained neural network gives a consolidated output, based on its targets, that identifies the correct fire region in an image frame. Table 1 describes different sets of results obtained from critical industrial areas. The first case considers the service door of a steel making furnace, where fire leakages occur due to improper sealing of the furnace doors. Another critical area is the electrical cable system, which is explored in the second case. The third case describes the overflow point of a steel furnace, where the fire suppression agent is water. In the last case, a fire-like region was input to the system, which correctly identified that it is not fire. For the first three cases, the neural network is trained with as many as 19200 pixels per region, and the fuzzy algorithm is implemented with suitable membership functions. All automated fire suppression actions are shown in the last column of the table.

-

IX. Conclusions

Since fire can cause much damage and have devastating consequences, great efforts are put into the development of systems for its early detection and automated suppression. Video-based fire detection systems have great advantages and can be used to overcome the shortcomings of sensor-based systems. In recent years, various fire detection systems using image processing have been proposed, but many of them aim only at fire detection. This paper has focused on automated fire suppression based on fire video images.

References

- Tao Chen, Hongyong Yuan. An automatic fire searching and suppression system for large spaces. Elsevier Fire Safety Journal, 297-307, doi:10.1016/j.firesaf.2003.11.007, 2004.

- MEI Zhibin, YU Chunyu. Machine Vision Based Fire Flame Detection Using Multi-Features. 24th Chinese Control and Decision Conference (CCDC), 2844-2848, 2012.

- A. Rehman, N. Masood. Autonomous Fire Extinguishing System. IEEE Transactions, 218-222, 2012.

- Hideaki Yamagishi. Fire Flame Detection Algorithm Using a Color Camera. IEEE international symposium on MHS,255-259, 1999.

- Paulo Vinicius Koerich Borges. A Probabilistic Approach for Vision-Based Fire Detection in Videos. IEEE transactions on circuits and systems for video technology, vol. 20, no. 5, 721-731, 2010.

- Bo-Ho Cho , Jong-Wook Bae. Image Processing-based Fire Detection System using Statistic Color Model. International Conference on Advanced Language Processing and Web Information Technology, 245-250, 2008.

- Mohammad Jane Alam Khan, Muhammed Rifat Imam. Automated Fire Fighting System with Smoke and Temperature Detection. IEEE International Conference on Electrical and Computer Engineering. 232-235, 2012.

- Suzilawati Mohd Razmi, Nordin Saad. Vision-Based Flame Detection: Motion Detection & Fire Analysis. IEEE Student Conference on Research and Development, 187-191, 2010.

- Andrey N. Pavlov, Evgeniy S. Povemov. Experimental Installation for Test of Automatic Fire Gas Explosion Suppression System. 10th IEEE International Conference and Seminar EDM, 332-334, 2009.

- Turgay Celik, and Kai-Kuang Ma. Computer Vision Based Fire Detection in Color Images. IEEE Conference on Soft Computing in Industrial Applications, 258-263, 2008.

- Jie Hou, Jiaru Qian, Zuozhou Zhao. Fire Detection Algorithms in Video Images for High and Large-span Space Structures. IEEE Transactions, 2009.

- KuoL. Su. Automatic Fire Detection System Using Adaptive Fusion Algorithm for Fire Fighting Robot. IEEE International Conference on Systems Cybernetics, 966-971, 2006.

- Changwoo Ha, Ung Hwang. Vision-Based Fire Detection Algorithm Using Optical Flow. IEEE Sixth International Conference on Complex, Intelligent, and Software Intensive Systems, 526-530, 2012.

- Tian Qiu, Yong Yan. An Autoadaptive Edge-Detection Algorithm for Flame and Fire Image Processing. IEEE Transactions on Instrumentation and Measurement, 1486-1493, 2012.

- Chen Jun, Du Yang, Wang Dong. An Early Fire Image Detection and Identification Algorithm Based on DFBIR Model. IEEE World Congress on Computer Science and Information Engineering, 229-232, 2009.

- André Neubauer. Genetic Algorithms in Automatic Fire Detection Technology. Genetic Algorithms in Engineering Systems: Innovations and Applications, 180-185, 1997.

- T. Chen, P. Wu and Y. Chiou. An Early Fire-Detection Method Based on Image Processing. Proc. of IEEE ICIP ’04, 1707–1710, 2004.

- Lee and Dongil Han. Real-Time Fire Detection Using Camera Sequence Image in Tunnel Environment. Proceedings of ICIC, vol. 4681, 1209-1220, 2007.

- Ishita Chakraborty, Tanoy Kr. Paul. A Hybrid Clustering Algorithm for Fire Detection in Video and Analysis with Color based Thresholding Method. IEEE International Conference on Advances in Computer Engineering, 277-280, 2010.

- Dengyi Zhang, Shizhong Han. Image Based Forest Fire Detection Using Dynamic Characteristics With Artificial Neural Networks. IEEE International Joint Conference on Artificial Intelligence, 290-293, 2009.

- Quanmin GUO Junjie DAI. Study on Fire Detection Model Based on Fuzzy Neural Network. IEEE Transactions, 1-4, 2010.

- GUO Jian, ZHU Jie. Application of Self-Adaptive Neural Fuzzy Network in Early Detection of Conveyor Belt Fire. IEEE Transactions, 978-983, 2009.

- Turgay Çelik, Hüseyin Özkaramanlı. Fire Pixel Classification Using Fuzzy Logic and Statistical Color Model. IEEE Transactions, 1205-1208, 2007.

- Ana Del Amo. Fuzzy Logic Applications to Fire Control Systems. IEEE International Conference on Fuzzy Systems, 1298-1304, 2006.

- Xuan Truong, Tung. Fire flame detection in video sequences using multi-stage pattern recognition techniques. Engineering Applications of Artificial Intelligence 1365-1372, 2010.

- Wirth, M., Zaremba, R. Flame region detection based on histogram backprojection. CRV 7th Canadian Conference on Computer and Robot Vision, 167-174, 2010.

- Xitao Zheng, Yongwei Zhang, Yehua Yu, Recognition of marrow cell images based in fuzzy clustering, International Journal of Information Technology and Computer Science (IJITCS), volume 1, Pages 40, 2012.

- Hadi A. Alnabriss, Ibrahim S. I. Abuhaiba, Improved Image Retrieval with Color and Angle Representation, International Journal of Information Technology and Computer Science (IJITCS), Pp 68-81, 2014.

- Amanpreet Singh, Preet Inder Singh, Prabhpreet Kaur, Digital Image Enhancement with Fuzzy Interface System, International Journal of Information Technology and Computer Science (IJITCS), Pages 51, 2012.