Information Technology for Sound Analysis and Recognition in the Metropolis based on Machine Learning Methods

Автор: Lyubomyr Chyrun, Victoria Vysotska, Stepan Tchynetskyi, Yuriy Ushenko, Dmytro Uhryn

Журнал: International Journal of Intelligent Systems and Applications @ijisa

Статья в выпуске: 6 vol.16, 2024 года.

Бесплатный доступ

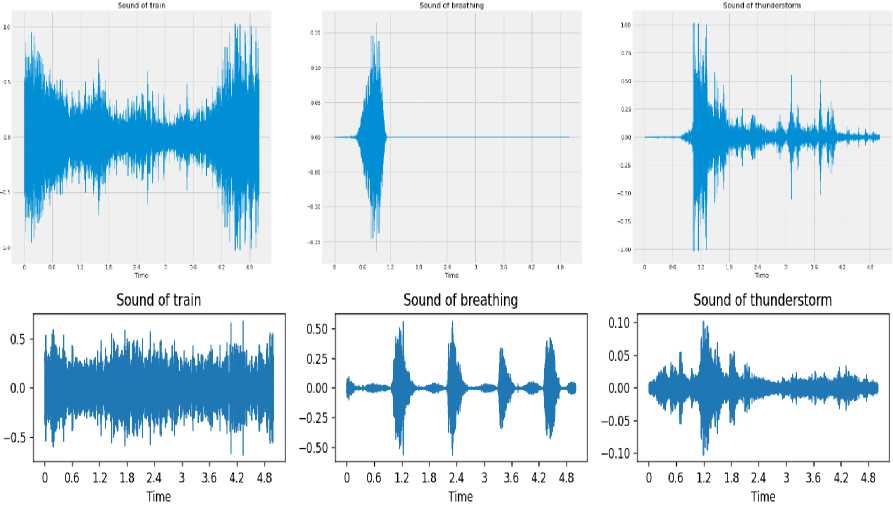

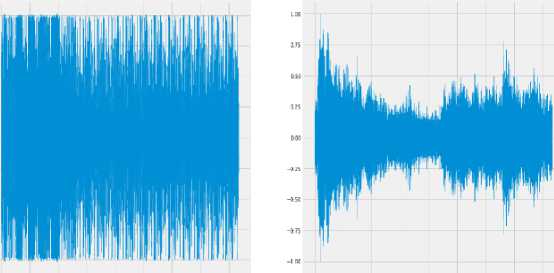

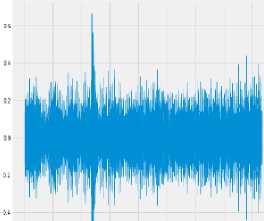

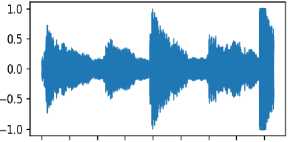

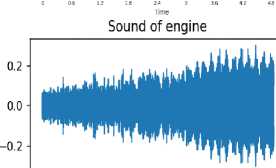

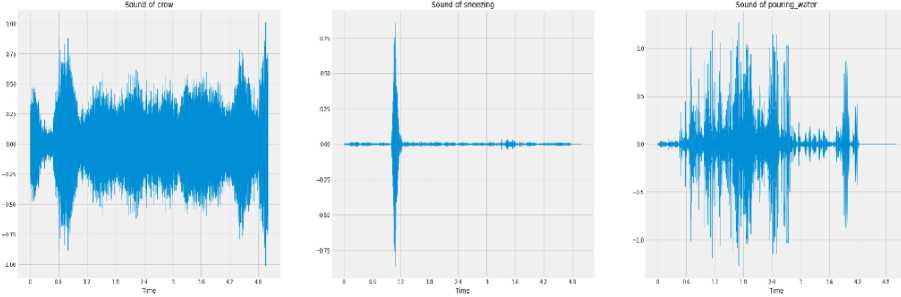

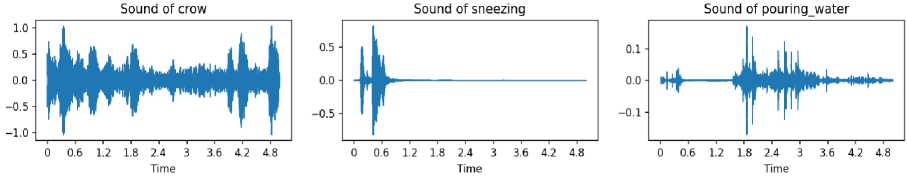

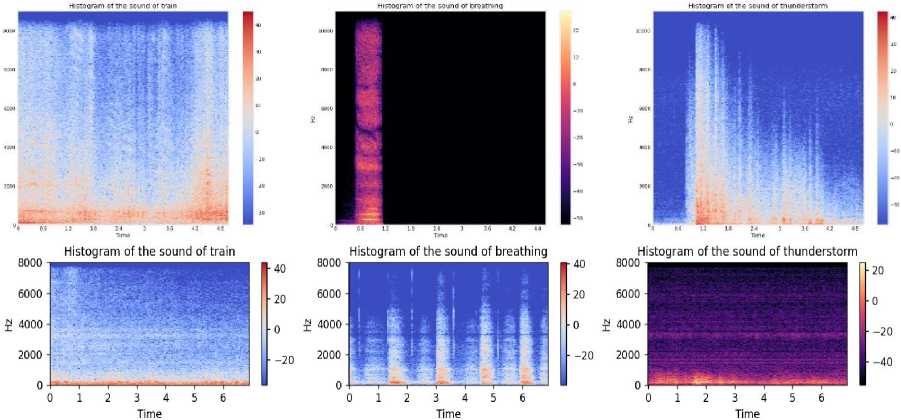

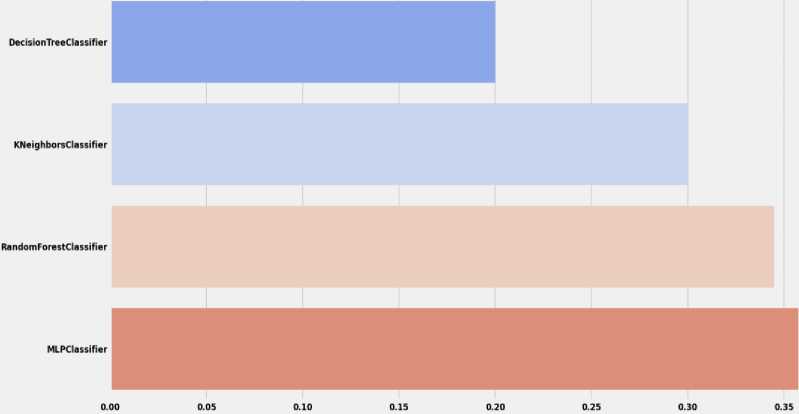

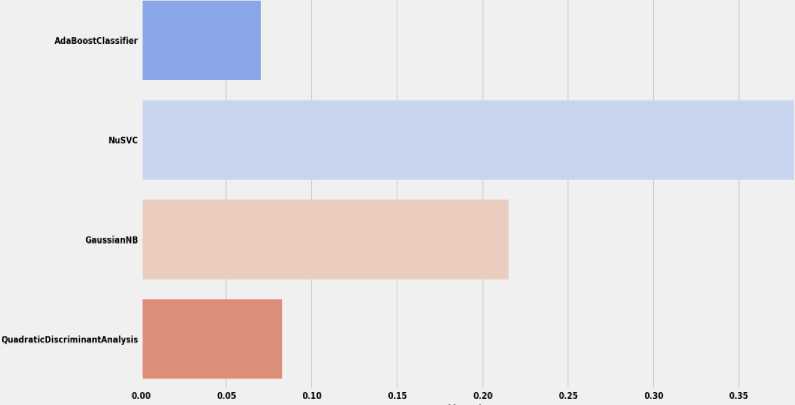

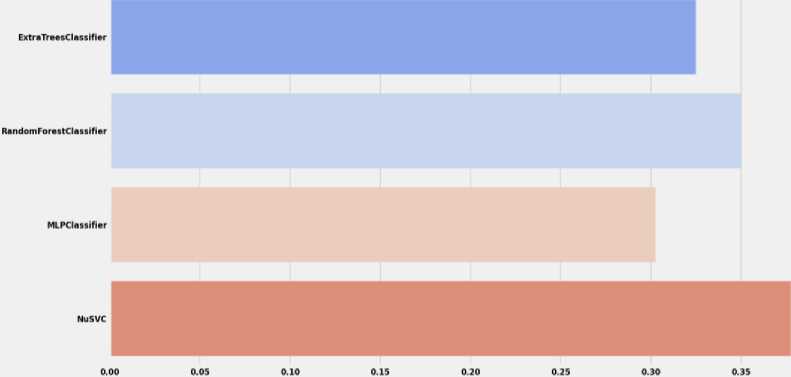

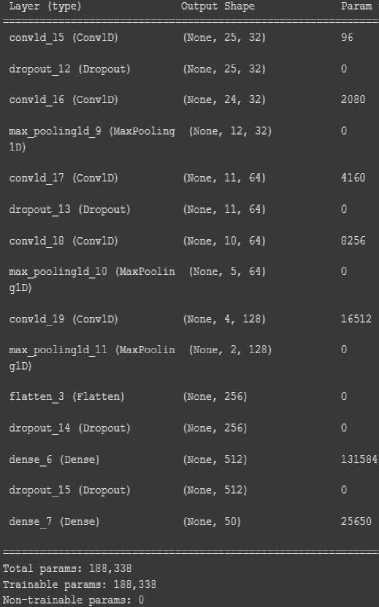

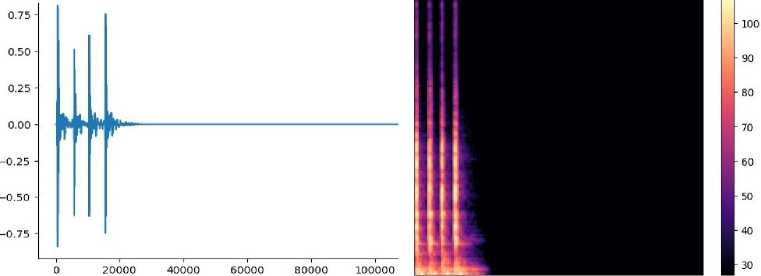

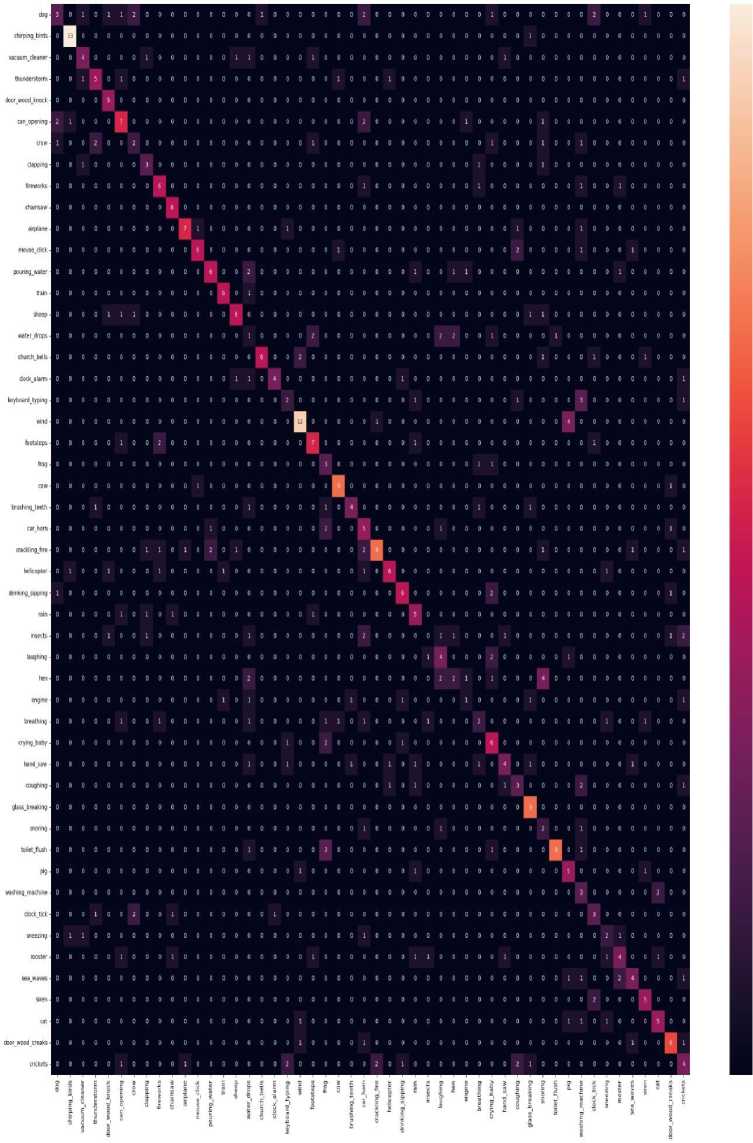

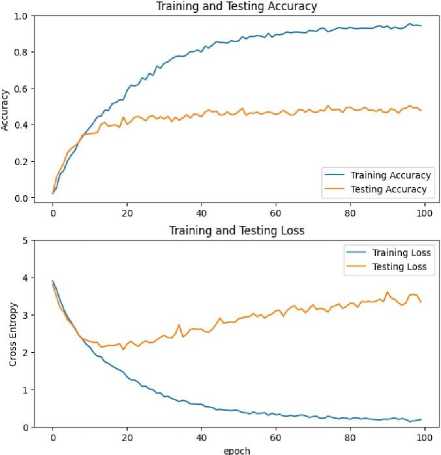

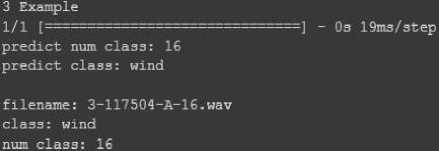

The goal of designing and implementing an intelligent information system for the recognition and classification of sound signals is to create an effective solution at the software level, which would allow analysis, recognition, classification and forecasting of sound signals in megacities and smart cities using machine learning methods. This system can help people in various fields to simplify their lives, for example, it can help farmers protect their crops from animals, in the military it can help with the identification of weapons and the search for flying objects, such as drones or missiles, in the future there is a possibility for recognizing the distance to sound, also, in cities can help with security, so a preventive response system can be built, which can check if everything is in order based on sounds. Also, it can make life easier for people with impaired hearing to detect danger in everyday life. In the part of the comparison of analogues of the developed product, 4 analogues were found: Shazam, sound recognition from Apple, Vocapia, and SoundHound. A table of comparisons was made for these analogues and the product under development. Also, after comparing analogues, a table for evaluating the effects of the development was built. During the system analysis section, a variety of audio research materials were developed to indicate the characteristics that can be used for this design: period, amplitude, and frequency, and, as an example, an article on real-world audio applications is shown. A precedent scenario is described using the RUP methodology and UML diagrams are constructed: Diagram of use cases; Class diagram; Activity chart; Sequence diagram; Diagram of components; and Deployment diagram. Also, sound data analysis was performed, sound data was visualized as spectrograms and sound waves, which clearly show that the data are different, so it is possible to classify them using machine learning methods. An experimental selection of the machine learning method as staandart clasificers for building a sound recognition model was made. The best method turned out to be SVC, the accuracy of which reflects more than 30 per cent. A neural network was also implemented to improve the obtained results. The result of training a model based on a neural network during 100 epochs achieved a result of 97.7% accuracy for training data and 47.8% accuracy when checking performance on test data. This result should be higher, so it is necessary to consider improving recognition algorithms, increasing the amount of data, and changing the recognition method. Testing of the project was carried out, showing its operation and pointing out shortcomings that need to be corrected in the future.

Data Augmentation, Intelligent System, Application, Sound Waves, Sound Spectrum, SkLearn, Feature Extraction, Sound Analysis, Machine Learning Methods

Короткий адрес: https://sciup.org/15019593

IDR: 15019593 | DOI: 10.5815/ijisa.2024.06.03

Текст научной статьи Information Technology for Sound Analysis and Recognition in the Metropolis based on Machine Learning Methods

The powerful leap of information technologies in recent years stimulates the development of new areas of their application, designed to automate routine operations and increase the convenience and efficiency of production processes and service provision. Today, it is possible to observe the large-scale implementation of methods and means of artificial intelligence, as well as IoT technologies in the everyday lives of people. Now you won't surprise anyone with voice control of household appliances, cars and other convenient gadgets. All this unites a new technology called "smart home", or even more broadly, "smart technologies". Important achievements have also been achieved in the areas of pattern recognition, processing of natural language and several others, which allows to improve and increase the quality of life of the population. However, the quality and timeliness of the provision of services by emergency services in the event of emergencies, in particular, by ambulances, firefighters, police, etc., is a determining factor in human life.

The sounds reproduce the noise, bustle, and bustle of the metropolis, which in turn affects the psycho-emotional state and health of the residents. At the same time, the analysis, recognition and classification of sounds will improve the quality of service in megapolises and smart cities. In particular, taking into account the frantic pace of development of road traffic associated with the increase of motor vehicles and the slow steps towards the expansion of infrastructure in large cities, there are problems with car traffic jams. The presence of traffic jams in cities does not allow emergency services to work effectively, since the accumulation of cars at traffic lights and imperfect infrastructure do not allow them to quickly arrive at the scene of an emergency. Therefore, an urgent task is the development of a computer system for the automatic recognition and classification of sound signals generated by sirens of special-purpose vehicles for the formation of green corridors when passing through intersections with traffic light regulations.

The computer system for the recognition and classification of sound signals is designed to automate the process of determining and establishing the identity of the signals generated by the sound source, based on which certain decisions are made. The designed system should provide the ability to collect sound signals and convert them into a digital format. In addition, received signals with appropriate characteristics based on models and machine learning algorithms should be automatically classified into appropriate classes with appropriate labels.

Computer systems for the recognition and classification of sound signals have a wide range of applications, ranging from autonomous independent systems to subsystems of more complex IT solutions. Autonomously, such systems can be used in the formation of music albums by genres, artists or other categories. As subsystems of more complex solutions, a computer system for recognizing and classifying sound signals can be used in the organization of security and voice control of a "smart home". In addition, taking into account the number of cars and their constant growth with unchanged infrastructure, the creation of systems for analysing the sound signals of cars is urgent. This will allow emergency services to get to the places of dangerous situations more quickly and efficiently, by forming green corridors. The computer system must allow for the accumulation of data on car horns and the automatic identification of horn labels. At the hardware level, the system should be responsive to events, portable and able to coexist with other compatible systems.

The work will consider the main aspects of the sound environment in the metropolis, the methods and technologies of sound recognition, and their application in various areas, from measuring the noise level to detecting emergencies and monitoring sound pollution. An analysis of the possible effects of the sound environment on human health and life will also be conducted, as ways of optimization to create a more comfortable and safer urban environment will be considered. The work is aimed at highlighting the importance of the problem of sound recognition in the metropolis, as at the search for innovative solutions and the new approaches development to solving this urgent problem.

The goal of designing and implementing an intelligent information system for the recognition and classification of sound signals is to create an effective solution at the software level, which would allow analysis, recognition, classification and forecasting of sound signals in megacities and smart cities using machine learning methods. The task of the work is to develop software for recognizing sounds using machine learning methods, which could identify sounds in real-time and from recordings. List of main tasks for solving the problem:

-

• Analyse the sound recognition market;

-

• Analyse possible solutions to this problem and choose the optimal one;

-

• Conduct a system analysis for the future recognition system;

-

• Develop MVP of this system.

The object of research is the process of sound recognition. The subject of the research is the system of sound analysis and recognition in the metropolis. A scientific novelty is the analysis of the possibilities of machine learning methods in working with sound with the help of various activation functions and at different. The practical value of this work is the developed system product that will be able to recognize sound using machine learning methods.

This application can be used in various areas:

-

• Simple user – can use to control buildings, in case of absence;

-

• As a radio nanny;

-

• As an aid to people with hearing impairments;

-

• To help automation, for example, farmers to scare birds;

-

• To determine the type of drone (it is possible to determine the location if the sound of the drone is set);

-

• To determine the type of ammunition used.

This topic is relevant because it can help ensure order, help people with various activities and capabilities, and help in military affairs. Information technology use helps detect animals in agriculture and automate animal deterrent measures, as 35% of annual crops are lost by farmers due to the birds’ impact [1]. In military applications, this system can detect the flight of missiles at low altitudes or drones, such as the Shahed 136. The Shahed-136 is a modern combat drone developed by the Iranian industry, designed to neutralize ground targets at a distance. This aircraft bypasses antiaircraft defences and attacks ground targets, launching from a launch pad. His work is characterized by a distinctive sound similar to a moped. This drone was discovered thanks to published footage in December 2021 [2]. It can also help in the detection and classification of ground targets, such as air defence systems or artillery installations.

In the current digital era, the importance of sound recognition systems has increased in many fields, including speech recognition, music classification, acoustic monitoring, etc. These systems attempt to automatically decode, interpret, and extract relevant data from audio signals. Neural networks have become effective tools for audio recognition tasks thanks to recent developments in artificial intelligence and machine learning.

Traditional audio recognition methods often rely on hand-crafted features and rule-based algorithms, which can be time-consuming and have limitations when processing complex audio data. Neural networks, in turn, have the ability to automatically detect and extract relevant features from raw audio signals, increasing the accuracy and reliability of sound recognition tasks.

The demand for efficient and accurate sound recognition systems in many fields has motivated the development of this research. For example, in speech recognition, accurate transcription and understanding of spoken language is essential for voice assistants, transcription services, and speech processing applications. Similar to visual surveillance, acoustic surveillance can enhance security systems and monitoring procedures by being able to automatically detect and classify certain sounds. Therefore, in our work, we strive to overcome the shortcomings of conventional approaches and develop the field of sound recognition, using the capabilities of machine learning technology.

The focus of this research is on audio recognition tasks such as speech recognition, environmental sound classification, and audio event detection. The research will build and deploy machine learning models, collect or use relevant datasets, pre-process audio data, train and evaluate the models, and analyze the results.

The importance of voice recognition systems lies in their potential to improve automation, user interaction, security, enable intelligent decision-making, and create new applications in various fields.

Thus, this research is relevant and aimed at creating systems that will simplify processes such as voice commands, speaker identification, emotion detection, music genre classification, environmental monitoring, and abnormal sound detection through accurate sound identification and classification.

2. Literature Review 2.1. Analytical Review of Developments and Research in the Field of Sound Recognition

The life that surrounds us is permeated with various sounds, which can range from pleasant melodies to loud noises. These audio elements, being an inseparable part of our existence, have a significant impact on our emotional state and shape our perception of the world around us [3-4]. Even when we unconsciously react to sounds, our brain constantly analyses them, creating a comprehensive picture of our environment, which can provide us with important information about the environment [5-7]. There are deep connections between the acoustic environment and our emotional state [9-10]. For example, pleasant music can lift the mood and create an atmosphere of joy, while unpleasant noises can cause stress and irritation. Sound impressions can also affect our decisions and concentration. Thus, the analysis of sounds not only expands our understanding of the world around us but also opens up new opportunities for enriching our emotional experience and improving the quality of our lives. Thanks to modern technologies that allow deeper analysis of sound signals, we can get much more information and understand their impact on us [11-12]. Sound classification in audio deep learning is not only a powerful tool for distinguishing sound signals but also a key element in the development of modern technologies aimed at understanding the sound environment. This approach involves studying whole aspects of sounds to provide accurate classification and prediction of their category. One of the main advantages of sound classification in deep learning is its versatility. This method can be successfully applied to various scenarios, which extends its practicality. For example, in the classification of music videos, it can be used to automatically determine the genre of music, which facilitates the search and selection of music content. Also, the classification of short utterances based on a set of speakers allows one to identify a specific speaker by voice, which can be used in recognition and personal identification systems. This approach is becoming an important element in the development of modern audio technologies, which opens wide prospects for automating and improving various aspects of our interaction with sound signals. Accordingly, further improvement of sound classification methods in deep learning will open up new opportunities for expanding our abilities to understand and use the sound environment in various aspects of life [13-14].

Audio analysis is a complex and dynamic process that involves the transformation, examination and interpretation of audio signals captured by digital devices. This process is used to uncover the depths of sound data and identify important characteristics and regularities in them [15-21]. The most popular types of audio analysis are environmental sound recognition; Music recognition; Voice recognition; and Language recognition. Audio data is analogue sounds converted to digital format while preserving the key characteristics of the original. This technological achievement opens up opportunities for more convenient and efficient analysis and processing of sound signals. According to the basics of physics, sound is a wave of vibrations that propagates through the medium and reaches our ears. The basic characteristics of sound, such as period, amplitude and frequency, determine its basic properties and perception. The period indicates the interval between oscillations, and the amplitude determines the loudness. The frequency indicates the number of oscillations per unit of time and affects the pitch of the sound. These parameters not only help in recognizing the nature of the sound but also allow its reproduction in digital format. The importance of these characteristics becomes apparent when analysing audio data, as they determine many aspects of audio signals. A high frequency can indicate light tones or noise, a significant amplitude - a loud sound and a change in the period can indicate a variety of sound events [20].

To test the effectiveness of sound recognition in the real world, you can refer to the work of Avijeet Kumar and Roop Pahuja, who used sound recognition to identify bird species in their research [21]. They developed a special tool with an efficient graphical user interface that processes audio recordings and generates a statistically evaluated characteristic matrix based on a spectrogram obtained by Fourier transformation. This matrix is used to determine the vocalization patterns of different bird species. This technology has wide applications in ornithology for studying migratory routes of birds and can be useful in agriculture.

-

2.2. Features of Sound Analysis

In our daily life, sound is a crucial component. It allows us to communicate, enjoy music and perceive our environment. But what exactly is sound? Sound is a type of energy that travels through a medium in the form of waves, such as air, water, or solids. The particles of the medium contract and expand as these waves travel through it. Being mechanical waves, sound waves require a physical medium to travel through. As a result, sound cannot be heard in space because it cannot travel in a vacuum. Sound waves occur when an object vibrates because it causes a disturbance in the environment in which it is located. Frequency, amplitude, and phase are just some of the characteristics that define sound waves. Frequency, which is measured in hertz (Hz), is the number of complete oscillations or cycles per second. The range of frequencies that the human ear can hear is from 20 to 20,000 Hz. Conversely, amplitude, which is expressed as the loudness or intensity of a sound, is measured in decibels (dB). The position of a sound wave in a wave cycle is determined by its phase.

Sound waves are analog in nature and must be converted to a digital format in order to be stored, transmitted, or processed. Sampling and quantization are the two most important steps of the transformation. Sampling involves taking repeated measurements or snapshots of a sound wave at predetermined time intervals. Each instantaneous amplitude of the sound wave is recorded in samples. The rate at which these samples are taken is called the sampling rate, and is usually expressed in oscillations per second or hertz (Hz). 44.01 kHz (CD), 48 kHz (common for video and audio), and 96 kHz (for high-resolution audio) are typical sampling rates used in digital audio systems. Assigning numerical values to discretized amplitudes is known as quantization. In other words, it involves converting a continuous range of analog amplitudes into discrete values that can be represented digitally. For this, the amplitude range is divided into an arbitrary number of levels, each of which is assigned a numerical value. The number of levels determines the bit depth or resolution of the digital audio representation. 16-bit and 24-bit are the two most commonly used bit depths in digital audio. A 24-bit signal can represent 16,777,216 discrete levels compared to the 65,536 discrete levels of a 16-bit signal. More levels mean that the digital representation can more accurately capture the subtleties of the original analog sound wave.

Analog-to-digital conversion, or ADC for short, is the process of converting an analog sound wave into a digital representation. The steps that make up this conversion process:

-

• Sampling: A continuous analog sound wave is sampled regularly. How many samples are taken every second depends on the sample rate. For example, a sample rate of 44.1 kHz means that 44,100 samples are taken every second.

-

• Quantization: After sampling, the values are quantized, giving each sample a numerical value. The analog value is rounded to the nearest discrete level during the quantization process depending on the selected bit depth. Quantization error adds some distortion or noise to the digital representation.

-

• Encoding: Binary representation is used to encode quantized values. The binary code called pulse-code modulation (PCM) is most often used. Each sample is represented by a binary number, with the number of bits used varying depending on the bit depth selected.

-

• An analog sound wave can be accurately modeled in the digital domain by following these steps. Each discrete sample in the resulting digital audio file has a corresponding numerical value that represents the amplitude of the output sound wave at that precise moment in time.

Digital audio can be stored in a variety of formats, each with its own characteristics and compression algorithms. Among the common audio formats:

-

• WAV (Waveform Audio File Format): WAV is an uncompressed audio format that preserves the full fidelity of the original sound wave. It is widely supported, but can take up a lot of storage space.

-

• MP3 (MPEG Audio Layer-3): MP3 is a popular audio format that uses lossy compression to reduce file size. It achieves compression by removing irrelevant audio data. The trade-off is a slight loss of sound quality.

-

• AAC (Advanced Audio Coding): AAC is another popular audio format that offers better compression efficiency compared to MP3. It provides improved audio quality at lower bitrates, making it suitable for streaming and portable devices.

-

• FLAC (Free Lossless Audio Codec): FLAC is a lossless audio format that compresses audio data without compromising quality. It offers smaller file sizes compared to WAV while maintaining the original audio fidelity.

These are just a few examples of digital audio formats, and each has its own strengths and uses. The choice of format depends on factors such as memory capacity, desired audio quality, and intended use.

Once the audio is converted to a digital format, it becomes suitable for various digital signal processing (DSP) techniques. Digital audio processing enables a wide range of applications, including audio editing, effects processing, equalization and noise reduction. Here are some key concepts and techniques used in digital signal processing for audio:

-

• Fast Fourier Transform (FFT): FFT is a mathematical algorithm that transforms a time-domain signal, such as an audio signal, into its frequency-domain representation. It decomposes the signal into its frequency components, which allows analysis and manipulation in the frequency domain. FFT is widely used in sound spectrum analysis, filtering and effects processing.

-

• Filtering: Filtering techniques are used to change the frequency content of an audio signal. Low-pass filters pass frequencies below a certain cutoff limit while attenuating higher frequencies. High-pass filters do the opposite, passing higher frequencies and attenuating lower frequencies. Bandpass filters allow you to pass a certain range of frequencies while rejecting others. Filtering is often used to remove unwanted noise, shape the sound spectrum, or create certain effects.

-

• Echo and Reverb: Echo and reverb effects are commonly used in audio production and sound design. Echo creates repetitions of the original sound with reduced intensity, simulating reflections from distant surfaces. Reverberation simulates the complex acoustic environment of a room or space. Both effects are achieved using delay lines and feedback loops, where the delayed sound is combined with the original signal to create the desired effect.

-

• Equalization: Equalization is the process of adjusting the relative amplitudes of the various frequency components of an audio signal. This allows you to tonally shape and correct by emphasizing or reducing certain frequency ranges. Graphic EQs provide adjustable gain bands to control different frequency ranges, while parametric EQs offer more precise control with adjustable center frequencies, bandwidth and gain.

For storage and transmission purposes, audio compression is critical to reducing the size of digital audio files. While maintaining a decent level of sound quality, compression algorithms reduce the file size. Lossless compression and lossy compression are the two main types of compression techniques. Lossless compression: Lossless compression algorithms minimize file size without degrading audio quality. By taking advantage of statistical redundancy in audio data, they can compress the data. Lossless audio compression formats include Apple Lossless (ALAC) and FLAC. If it is important to maintain audio quality, for example during professional audio creation or archiving, lossless compression is suitable. Lossy Compression: Lossy compression algorithms reduce audio data that is considered less important to perception by permanently removing it. Audio quality is slightly degraded as a result of the final deletion of data. Lossy audio compression formats such as MP3 and AAC are common in many applications. When it comes to music streaming, mobile devices, and web distribution, lossy compression is often used because smaller file sizes are critical.

By simulating sound localization and spatial cues, spatial audio technologies aim to create immersive and realistic sound. These developments give sound reproduction a new perspective, improving the perception of depth, directionality and movement. Applications such as virtual reality (VR), augmented reality (AR) and gaming benefit greatly from spatial audio. Multichannel audio systems, binaural recording and playback, ambisonics, and object-oriented audio are all used in spatial audio techniques. Multi-channel audio systems, such as 5.1 or 7.1 surround sound configurations, use multiple speakers to play sound from different angles. Using specialized microphone technology and headphones, binaural recording and playback aims to mimic the way human ears naturally perceive sound. Ambisonics is a technique that captures and reproduces the full spectrum of sound using a spherical array of microphones and speakers. Object-based audio enables dynamic audio reproduction where audio objects can be positioned and moved in 3D space. Entertainment, communications and other industries are being revolutionized by the development of spatial audio technologies that offer more realistic and immersive sound.

Sounds and audio signals have numerous applications in various fields. Here are a few key areas where sound plays a crucial role:

-

• Entertainment: Sound is an integral part of entertainment media, including music, movies, television and games. This heightens the emotional impact, creates an immersive experience, and adds depth to the narrative. Audio engineers and sound designers work tirelessly to create and manipulate soundscapes, musical compositions and special effects that capture and manipulate audiences.

-

• Communication: Sound plays a vital role in human communication. From everyday conversations to public address systems, sound waves carry spoken words and transmit emotions. Telecommunications systems, including telephones, voice over IP (VoIP), and video conferencing, rely on audio signals to facilitate remote communication.

-

• Broadcasting: Radio and television broadcasting rely heavily on audio signals to transmit news, music and other content. Sound engineers and broadcasters work together to capture and deliver high-quality audio that engages and informs audiences.

-

• Speech recognition and synthesis: Audio signals are used in speech recognition systems that convert spoken words into text. These systems have applications in voice assistants, transcription services, and accessibility tools. In contrast, speech synthesis technologies convert text into spoken words, enabling applications such as text-to-speech systems and voice assistants.

-

• Medical Applications: Sound waves are used in various medical imaging techniques such as ultrasound and sonography. Ultrasound imaging uses high-frequency sound waves to create real-time images of internal body structures, aiding in diagnosis and monitoring. In addition, auditory cues and sound therapy are used in audiology and rehabilitation facilities.

-

• • Automotive Systems: Audio and sound play an important role in automotive systems. Car audio systems

-

2.3. Comparison of Analogues of the Product under Development

provide entertainment and enhance the driving experience. In addition, warning signals such as horn and engine sounds help with communication and road safety.

These are just a few examples of the various applications of beeps and beeps. Sound technology continues to evolve, opening up new possibilities in areas such as virtual reality, artificial intelligence, machine/deep learning methods, and human-computer interaction.

In this subsection, a comparison of analogues with the product under development is made, which will help identify the shortcomings and advantages of the development. As the analysis result, analogues of sound recognition programs with completely different fields of application were found (Fig. 1): Shazam; Sound recognition from Apple; Vocapia; and SoundHound.

$

VOCAPIA

Speech-to-text

Fig.1. The shazam logo, using apple voice recognition and the vocapia logo

Shazam is a mobile app and service dedicated to music recognition. Users can use an app to capture a short audio clip from the surrounding sound, and Shazam identifies a famous piece of music, providing artist, song and album information. The service also allows users to purchase music or go to platforms to listen to a full track. Shazam also supports Mac OS and Windows operating systems [22]. This product impressed with intelligence and wide popularity. To use it, simply hold your Shazam-enabled phone up to an unknown music piece and it will automatically send you a text message with the song title and the artist, as a link to purchase the track. The software effectively filters out unrelated background noise, allowing users to identify music tracks used in movies or TV shows. In addition, it can recognize music played in animated pubs or clubs [23]. Currently, in the 2020s, this service has information on more than 11,000,000 music tracks in its repertoire [22]. Shazam's annual revenue is $92.0 million. Shazam is one of the most popular and widely used music recognition apps available today. Shazam has a huge user base thanks to its userfriendly interface and powerful recognition capabilities. Shazam can quickly identify a track and provide users with details such as song title, artist, album and even lyrics, simply by playing a short audio clip of the song. Users can discover new music, create playlists and enjoy seamless listening by integrating Shazam's extensive music database with popular streaming services.

Google Voice Search gives users an easy way to recognize songs playing around them and is integrated into a suite of Google services, including Google Assistant and Google Now. Google Voice Search uses audio fingerprint technology to identify a song and provide relevant information when the app is activated and allowed to listen to the audio. Users can learn more about a specific song, listen to it on different platforms and find related music based on their preferences.

Sound recognition from Apple. Apple's new smartphones are equipped with sound recognition features that can detect a variety of common audio signals, such as smoke alarms, animal voices including cats and dogs, fire alarms, doorbells, running water, and babies crying. This feature is based on the fact that even if you can't hear a certain sound, your iPhone or iPad can recognize it. If a sound is detected (like a cat barking), your device vibrates, plays the sound, and sends you a message. During the tests, it can be noted that the sound recognition function works quite effectively. It should be taken into account that the device must be close enough to detect the sound. In addition, when the voice recognition function is activated, it is possible to call Siri. However, it's important to note that this approach isn't perfect, as the feature may not recognize some of the sounds it was originally configured to recognize. It should be remembered that sound recognition should be considered as an auxiliary tool in cases where you cannot listen to sounds personally. It is cautioned against relying on this feature in emergency or risky situations [24]. When the device detects a certain sound, such as the doorbell, you receive a notification on the screen. In addition, it is possible to delay the recognition of this sound for 5, 20 minutes or 2 hours. If the device is in sleep mode, there is also the possibility to receive messages on the lock screen [24].

Vocapia is a software suite that uses advanced speech analysis technologies, including speech recognition, speech detection, speaker recording, and speech-to-text alignment. This tool can convert audio and video documents, such as broadcasts and parliamentary hearings, into text format. It works with different languages and provides web services through a REST API to convert speech to text. It is also possible to use services for analysing telephone speech and creating subtitles for videos [25]. Vocapia Research specializes in the creation of advanced multilingual speech processing technologies that use artificial intelligence techniques, in particular, machine learning. These technologies provide continuous large-vocabulary speech recognition, automatic audio segmentation, speech recognition, speaker recording, and audio-to-text synchronization. The Vocapia VoxSigma™ speech-to-text software suite guarantees high performance for different languages when processing various types of audio data, such as broadcasts, parliamentary hearings, and conversational data conversion [26].

SoundHound is an innovative language platform that uses artificial intelligence and is developed based on the company's unique technology. It provides a unique voice interface with individual customization and full transparency in data usage. The popular sound recognition app SoundHound has a wide range of uses. With additional features such as lyrics display and voice search, it goes beyond music identification. SoundHound's music recognition capabilities cover a variety of input methods, such as humming, singing, or typing. The app gives users access to detailed song information, including artist bios, music videos, song previews, and the ability to share discoveries with friends and on social networking sites. The main advantages of SoundHound are:

-

• Performs both steps of the speech-to-text process and vice versa in one step, eliminating the need for a conventional two-step approach. This allows you to get results faster and more accurately;

-

• The voice assistant can effectively answer several questions at the same time and refine the results, taking into account the user's intentions to respond to complex questions;

-

• The multimodal interface provides customers with the opportunity to receive immediate interaction in real-time, thanks to instant audiovisual feedback, and also allows changing or updating requests using voice commands and a touch screen [27].

When it comes to voice recognition and intelligent assistance on iPhone, iPad and other Apple devices, Siri, Apple's virtual assistant, has become known as the industry standard. Users can interact with their devices using natural language commands and voice requests thanks to Siri's voice recognition capabilities. Siri uses speech recognition technology to understand and respond to user voice input, making everyday tasks more convenient and hands-free, whether users are setting reminders, sending messages, making calls, or getting information.

Google Assistant, which uses voice recognition technology, is a well-known virtual assistant that can be found on Android devices and other platforms. Google Assistant interprets voice commands, provides answers to requests, performs tasks and controls compatible smart devices thanks to its sophisticated speech recognition capabilities. Through integration with various Google services, users can access personalized information, receive personalized recommendations and have a seamless voice experience across devices.

These modern apps and sound recognition apps have completely changed the way we interact with technology and discover music. They have become essential tools for music enthusiasts, offering personalized and immersive sound thanks to their precise recognition algorithms and user-friendly interfaces. The addition of voice recognition technology to virtual assistants like Siri, Google Assistant, and Cortana has also changed the way we interact with our devices, making tasks more convenient and accessible through voice commands. In summary, sound and audio signals play a fundamental role in our lives, providing communication, entertainment and perception of the world around us. Understanding the nature of sound waves and their representation in the digital realm is essential to working effectively with sound and audio signals. As technology advances, sound and audio signal processing will continue to evolve, providing new opportunities for creative expression, immersive experiences, and innovative applications in a variety of fields.

We will conduct a comparison of analogues with the product under development, for this we will develop a comparison table of development and analogues. However, first, you need to define a grading system. Scores will be integers from 1 to 10, where 1 is very bad (not developed), and 10 is perfect, if it is impossible to answer the question with a score, then Boolean values yes/no can be used (Table 1).

Table 1. Comparative table of the developed product and analogues

|

Characteristics |

Products |

||||

|

Development |

Shazam |

Sound recognition from Apple |

Vocapia |

SoundHound |

|

|

WEB |

7 |

8 |

9 |

5 |

9 |

|

Mobile |

7 |

6 |

7 |

6 |

7 |

|

Functionality |

8 |

8 |

8 |

2 |

8 |

|

Reliability |

8 |

9 |

10 |

7 |

8 |

|

Productivity |

8 |

7 |

7 |

7 |

9 |

|

Maintainability |

8 |

6 |

8 |

4 |

9 |

|

Programming language |

10 |

7 |

8 |

7 |

7 |

|

Convenience |

9 |

8 |

9 |

7 |

10 |

|

Intelligibility |

8 |

8 |

9 |

5 |

8 |

|

Security |

9 |

7 |

8 |

7 |

8 |

|

Suitability for use |

9 |

6 |

7 |

7 |

9 |

We will define innovations and evaluate the effect they will have on development. In advance, for this, you need to develop a rating system: Numerical rating (from 0 to 10); and Boolean evaluation (yes or no). Based on a developed evaluation system and goals, we will build a table for evaluating the effects of the developed product (Table 2):

Table 2. Estimates of development effects

|

Target |

Effect |

Units of measurement |

Value of assessment |

|

Development of the project is financially beneficial in the field of technical application |

financial |

Numerical |

7 |

|

Development of functional methods |

economic |

Numerical |

8 |

|

Development of optimization methods |

temporal |

Numerical |

7 |

|

Develop a guide to using the methods |

educational |

Numerical |

3 |

|

Develop an advertising campaign |

economic |

Numerical |

no |

|

Develop a multi-cloud platform |

technical |

Numerical |

4 |

|

Develop a 24/7 online customer support system |

economic |

Numerical |

3 |

|

Placing social ads on the platform |

social |

Boolean |

no |

After conducting the work in this section, we can say with confidence that the chosen research topic has a great demand and a wide range of applications, which makes the developed product universal for future use.

3. Material and Methods 3.1. Methods of Sound Signal Processing

Audio signal processing techniques allow you to manipulate, enhance, and analyse audio signals. Here are some common audio signal processing techniques:

-

• Audio effects: Audio effects change the characteristics of audio signals to achieve certain artistic or technical goals. Examples of sound effects include reverb, delay, chorus, flanger, and distortion. These effects can be applied to individual tracks or mixed audio to create desired textures, atmospheres or stylistic elements.

-

• Dynamic Range Compression: Dynamic Range Compression aims to reduce the difference between the loudest and quietest parts of an audio signal. It is commonly used in audio mastering and broadcasting to ensure a consistent perceived volume level. Compression methods include ratio, threshold, attack time, release time, and compensation gain control.

-

• Pitch Shifting and Time Stretching: Pitch shifting changes the perceived pitch of an audio signal without affecting its duration, while time stretching changes the duration without changing the pitch. These techniques are used in music production, sound design, and audio post-production to correct pitch, create harmony, or manipulate the tempo of audio recordings.

-

• Noise Reduction: Noise reduction techniques aim to minimize unwanted background noise or artifacts in audio signals. A variety of algorithms and filters, such as spectral subtraction, Wiener filtering, and noise gating, are used to reduce noise while preserving the desired audio content.

-

• Audio Equalization: Audio Equalization adjusts the frequency response of an audio signal to boost or cut certain frequency ranges. It is used to correct tonal imbalance, shape sound or compensate room acoustics. Graphic equalizers, parametric equalizers, and shelving filters are commonly used in an audio equalizer.

-

• Spatial audio processing. Spatial audio processing techniques manipulate audio signals to create an immersive sound field with precise localization and spatial cues. These techniques include techniques such as panning, spatial filtering, and binaural reproduction to create a realistic auditory environment.

-

3.2. Problems and Considerations in Sound and audio Signal Processing

These techniques, among many others, form the basis of audio signal processing, allowing artists, engineers and researchers to shape and transform audio signals to suit their creative and technical requirements.

Although sound and audio signal processing offer many opportunities, there are also a number of challenges and factors that must be taken into account. Below are some of the main difficulties.

-

• The time that elapses between the input of an audio signal and its processed output is called delay. Low latency is essential to maintain synchronization and responsiveness in real-time applications such as live performances, games, and communication systems.

-

• Computational complexity involved: A number of complex audios processing techniques, including convolution, reverberation and spatial rendering of audio, can be computationally intensive.

-

• Such algorithms must be processed in real-time, subject to hardware or platform limitations. This requires effective implementation strategies.

-

• Audio signal processing constantly strives to provide high quality sound processing, reducing artifacts and distortion. It can be difficult to find the right balance between artistic or technical goals and maintaining sound fidelity.

-

• Human perception of audio is complex and individual. Factors such as psychoacoustics, individual hearing differences, and cultural preferences should be considered when designing sound processing algorithms and systems.

-

• Due to the wide range of audio formats, devices and platforms available, ensuring compatibility and interoperability between different systems and formats can be difficult. The Audio Engineering Society (AES) and Audio Video Interleave (AVI) are standards and protocols that help solve these problems.

-

• In addition, ethical issues such as privacy, security, and accessibility must be taken into account when designing and implementing audio signal processing systems.

-

3.3. Traditional Methods of Sound Recognition

Traditional sound recognition techniques encompass a number of approaches, including rule-based systems and statistical methods. These methods use signal processing techniques and machine learning algorithms to analyze audio signals and classify them into different sound categories. Here is a brief overview of these approaches.

Rule-based systems rely on predefined rules and heuristics to recognize sounds. These rules are usually developed by experts in the field based on their knowledge of the characteristics and features of specific classes of sound. The rules are designed to capture the discriminative properties of different sounds and use signal processing techniques to extract relevant features from the audio data. For example, in speech recognition, rule-based systems can use techniques such as phonetic analysis, language modeling, and grammar rules to identify words or phrases. Similarly, in environmental sound recognition, rule-based systems can use features such as spectral characteristics, temporal patterns, and amplitude variations to distinguish between different classes of sounds. Although rule-based systems provide interpretability and explicit control over the recognition process, they may be limited in their ability to handle complex or ambiguous audio scenarios. Creating rules manually can be time-consuming, and performance can depend heavily on the experience of the rule developer.

Statistical methods use mathematical models and algorithms to analyze sound signals and make decisions based on statistical properties. These methods often involve feature extraction, where relevant acoustic features are extracted from the audio data, followed by a classification step.

Signal processing techniques are used to extract discriminative features from audio signals, which are then used for classification. Commonly used features include:

-

• Mel-Frequency Cepstral Coefficients (MFCC): MFCCs capture the spectral characteristics of sound by

analyzing frequency bands and their amplitudes.

-

• spectral centroid: This function represents the center of mass of the frequency distribution and provides

information about the perceived brightness or darkness of the sound.

-

• zero crossing rate: This function counts the number of times the audio signal crosses the zero amplitude line and indicates the time characteristics of the sound.

-

• short-time Fourier transform (STFT): STFT decomposes an audio signal into frequency components over time, allowing analysis of spectral content and changes.

After feature extraction, statistical models such as Hidden Markov Models (HMMs), Gaussian Mixture Models (GMMs) or Support Vector Machines (SVMs) are trained to classify the extracted features into different sound classes.

Machine/deep learning algorithms are used for learning:

-

• patterns and relationships from audio data without explicit rules. These algorithms are trained on labeled audio samples to create models that can automatically classify new, unseen audio data.

-

• supervised learning: In supervised learning, a labeled data set is used to train a classification model of audio samples. Popular algorithms include decision trees, random forests, naive Bayes algorithms, and various neural network architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

-

• unsupervised learning: unsupervised learning techniques aim to detect patterns or groupings in audio data without predefined labels. Clustering algorithms such as K-means or Gaussian Mixture Models (GMM) can be used to identify similar patterns or sound clusters.

-

• deep learning: Deep learning models, such as deep neural networks, have gained considerable popularity in audio recognition tasks. Deep learning architectures can automatically learn hierarchical representations of audio data, resulting in improved classification accuracy. Convolutional neural networks (CNNs) are often used to analyze spectrograms or other image-like representations of audio, while recurrent neural networks (RNNs) and variants such as long-short-term memory (LSTM) networks are suitable for sequential audio like speech or music.

-

3.4. System Functioning Purpose Analysis

These statistical and machine/deep learning methods have the advantage of being able to process complex audio data and adapt to different sound environments. They can study large volumes of labeled data and automatically detect discriminative features, providing more reliable and accurate sound recognition.

However, these methods also require large labeled datasets for training and can be sensitive to changes in acoustic conditions and recording parameters. In addition, the effectiveness of these methods largely depends on the quality and representativeness of the extracted features and the availability of a variety of training data.

Before starting work in this section, let's define what a system analysis is and what it is used for. System analysis is aimed at studying the system or its constituent parts to determine its goals. This method of problem-solving is aimed at improving the system and ensuring that all its components work effectively to achieve the objectives. System analysis determines what functions the system should perform [28]. The purpose of this work is software for the analysis and recognition of sounds in the metropolis using machine learning methods, which will help people in everyday life, in farming, in military affairs and public order. As a final result of the project, the user should be able to use a mobile phone or a website to recognize any sound with a microphone connected to the device, in real-time or using prerecorded audio. To achieve the main goal, we will highlight goals that will help implement the project:

-

• Receive data with different sounds, process the data and analyse the received data. This goal must be fulfilled in the first version of the developed product;

-

• Determine the machine learning method that is most suitable for solving the given problem: sound recognition. This must be done in the first version of the product under development;

-

• Implement the best machine learning method obtained after completing the previous goal. It must be done in the first version of the product under development;

-

• Develop an API for user usability. Not critical for the first version of the developed product;

-

• Develop the user interface. Not critical for the first version of the product under development.

-

3.5. Modelling System Requirements and Risks

User requirements are documents that specify in detail how a system, tool, or process must function to meet user requirements and expectations. The user can be both a person and a machine that actively interacts with the process or system [29]. To determine the requirements, we will build a table of the order of formulating the requirements for the system (Table 3):

Table 3. Procedure for formulating system requirements

|

Type of requirements |

Business requirements |

User requirements |

Functional requirements |

Non-functional requirements |

|

Appointment |

|

|

|

|

|

Example of the content of the requirements |

|

|

|

|

Description of the RUP standard case scenario deployment:

-

• Stakeholders of the precedent and their requirements:

-

- User – wants to recognize the sound.

-

- Data analyst – wants to find data and build machine learning models to recognize sounds.

-

• The user of the product is the main actor of this precedent:

It is generally the user who will choose the work methods he needs for sound recognition.

-

• Preconditions of the precedent (preconditions):

-

- The product under development must be functional;

-

- Developers need to find data to train machine learning methods;

-

- Developers should find a way to receive data from users and microphones;

-

- Developers should find an opportunity to process user data;

-

- The data must be correct;

-

- The payment system must work properly;

-

• The main successful scenario:

-

- The user logs into the system, registers/authorized;

-

- The user is authenticated;

-

- The user pays for the subscription;

-

- The user allows access to audio and microphone;

-

- The user selects the operating mode;

-

- The user provides audio;

-

- The user receives recognition results;

-

• Expansion of the main script or alternative streams:

The user chooses the type of recognition:

-

- Danger recognition mode;

-

- "Protection" recognition mode;

-

- Audio recording recognition mode;

-

- Real-time recognition mode.

-

• Post-conditions:

-

- The user received the results;

-

- Audio saved;

-

• Special JI:

-

- Ensure the reliability of data transmission;

-

- Provide a convenient interface;

-

- Provide round-the-clock support;

-

- To ensure fast processing of the request.

-

• List of necessary technologies and additional devices:

-

- The product under development must be a web platform or a mobile application;

-

- Device for visual display of results.

-

- Microphone.

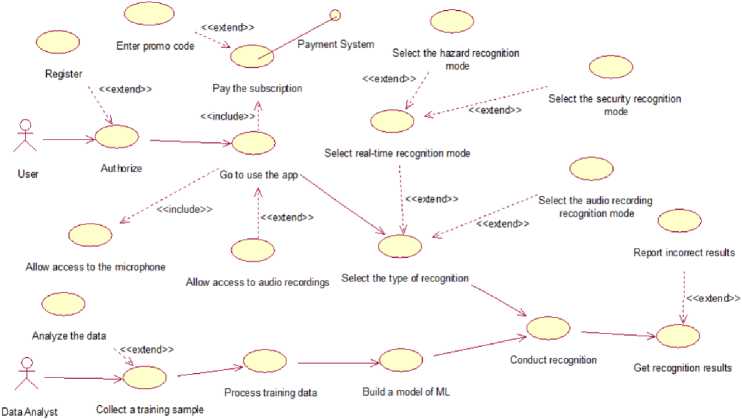

Fig.2. Use case diagram

Let's build a use case diagram to illustrate the functional content of our data analysis system (Fig. 2). A use case diagram (sometimes known as a use case diagram) is a general representation of the functional purpose of a system that answers the key modelling question: What does the system do in the outside world? Fig. 2 shows a diagram of use cases. The diagram shows two actors: The user and the Data Analyst. For successful work, a data analyst must:

-

• Collect a study sample;

-

• Analyse data;

-

• Process training data;

-

• Build a machine learning model.

To work in the system, the user needs:

-

• Log in to the system, if the user does not enter the system for the first time, then it is necessary to register, the user can also use a promotional code;

-

• The user must proceed to use the application, at the same time give the system access to audio recordings and the microphone and pay for the subscription, if this has not already been done;

-

• The user must select the recognition type by pointing to the microphone or audio recording. The user can choose 1 of the operating modes: danger recognition mode, "Protection" recognition mode; audio recording recognition mode, or real-time recognition mode;

-

• The user goes to the recognition step;

-

• The user receives a result and can leave feedback on incorrect recognition.

-

3.6. Modelling of Subject Area Objects

Table 4. Description of object classes of the system of sound analysis and recognition in the metropolis

|

Object classes |

Class attributes |

Class methods |

|||

|

Class name |

Class assignments |

Attribute name |

Attribute content |

Method name |

Method content |

|

Management |

A class that implements system management |

Path to Audio |

A record that contains the audio path |

Download Model |

Loads the ML model for recognition |

|

Path to ML |

A record that contains the path to the MN model |

Select Audio |

A method that selects audio from the user's library |

||

|

Recognition type |

The record that contains the selected recognition work type |

Select Recognition Type |

A method that sets the type of recognition selected by the user |

||

|

System management |

A class that follows Control, a user session in the system |

||||

|

Result |

Implements work with results |

ResultRecognition |

The sound class that was recognized |

Save Recognition |

Stores recognition in the system |

|

DeleteResult |

Deletes the result from the system |

||||

|

Subscription |

Implements the possibility of user subscription for access to the system |

NameSubscriptions |

Subscription display name |

Pay |

Subscription payment (wrapper for third-party payment system) |

|

Description Subscriptions |

Contains a detailed description of subscription options |

||||

|

Cost of subscriptions |

Subscription price |

CheckPayment |

Checks the validity of a user's subscription |

||

|

Eligibility term |

User subscription expiration date |

||||

|

Authentication |

Implements user authorization and authentication |

ID |

User ID in the system |

Register |

Registers a user in the system |

|

Login |

Unique username feed |

||||

|

Password |

User's secret feed |

Sign in |

Performs user login |

||

|

Electronic Mail |

User email |

||||

|

Name |

Display Name |

Delete Account |

Deletes the user's account permanently |

||

|

Audio |

Implements audio display in the system |

File name |

File display and ID in the system |

RecordAudio |

Record audio from the user's microphone |

|

PathToFile |

Audio placement path |

DownloadAudio |

Downloading user audio |

||

|

DeleteAudio |

Removes the user's audio from the system |

||||

|

ProcessAudio |

Processing of user audio for further recognition |

||||

|

ML |

Implements the ML model class |

Study sample |

Data for model training |

Train ML |

Trains a machine learning model |

|

Test data |

Data for testing the trained model |

Protest ML |

Tests a machine learning model |

||

|

Save ML |

Saves the model in the system |

||||

|

RecognizeAudio |

Recognizes the sound specified by the user |

||||

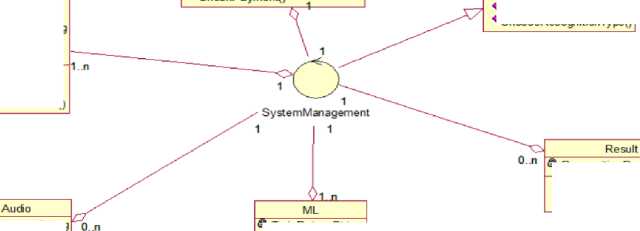

Let's start by defining the classes, their methods and attributes that implement the data analysis system. A class diagram is used to display the static structure of a system model, using the terminology of classes in object-oriented programming. This diagram is a form of a graph, where the vertices are elements of the "classifier" type, which are connected by various types of structural relations. It is important to note that a class diagram can also include relationships, packages, interfaces, and even individual instances such as relationships and objects. In general, this diagram represents a static structural model of the system, which is considered a graphical representation of such structural relationships of the logical model of the system that remain constant concerning time. Let's build a table of system class descriptions (Table 4):

Let's build a table to determine the relationship between classes in Table 5 and draw a class diagram in Fig. 3.

Table 5. Description of relations between classes of the system of analysis and recognition of sounds in the metropolis

|

Relationship name |

Classes between which a relationship is defined |

Type of relation |

Dimensionality |

|

|

Relationship 1 |

System management |

Audio |

Aggregation |

1-0..n |

|

Relationship 2 |

System management |

Subscription |

Aggregation |

1-1 |

|

Relationship 3 |

System management |

ML |

Aggregation |

1-1..n |

|

Relationship 4 |

System management |

Authentication |

Aggregation |

1-1..n |

|

Relationship 5 |

System management |

Management |

Follows |

- |

|

Relationship 6 |

System management |

Result |

Aggregation |

1-0..n |

Subscription ^SubscriptionName : String ^•SubscriptionDescn ption i String ^•SubscriptionPrice . Double ^ExpirationDate : Date

Authentication

^>ID : String ^?Login : String ^•Password: Sting ^?Email : String ^lame : String

♦Рай)

*CheckPayment()

*SignUp()

♦Sig^nO

*RemweAccount(}

Management ^>AudoPath : String SbMLPath : String ^>Recognition~Iype : String

*LoadModel() ^ChoseAudio()

*C h oo s e Rec ogn iti onTy pe ()

^>FileName : String

^>FilePath : String

^RecordAudioQ

*UploadAudio() ^RemcweAudioQ *ProcessAudio()

^RecognitionResult : String

^SaveRecognition()

*DeleteResult()

a>T rai nD ata Stri ng ^•TestData : String

♦TramMLO

*TestML()

♦SaveML()

^Reco g niz eAu di o()

Fig.3. Class diagram

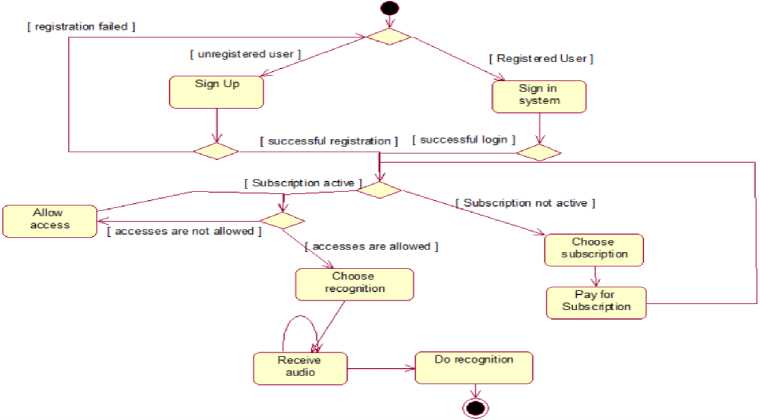

Fig.4. Activity diagram

-

3.7. Modelling of System Processes

An activity diagram (Fig. 4) is an alternative to a state diagram, and it is based on a difference in approach: activities are the main component in an activity diagram, while static state is important in a state diagram. In the context of an activity diagram, the key element is the "action state", which specifies the expression of a specific action that must be unique within the given diagram. An action state is a specific state initiated by certain input actions and has at least one output. The visualization of an activity diagram is similar to a graph of a finite automaton, where vertices correspond to specific actions and transitions occur after actions are completed. An action acts as the basic unit of behaviour definition in a specification, taking a set of inputs and converting them into outputs. Let's draw an activity diagram for our system:

This diagram shows the activities that occur during program execution:

-

• If the user is not registered, he is registered in the system;

-

• If the user is already registered, he enters the system;

-

• If registration or login is unsuccessful, the user returns to the beginning;

-

• If the user does not have a paid subscription, then he must choose a subscription and pay for it;

-

• If the user has an active subscription and has allowed all settings, he enters the recognition stage, otherwise, he

must allow access and return to the previous stage;

-

• Next, the User selects the recognition mode and provides audio;

-

• After which the recognition is done and the user receives the result.

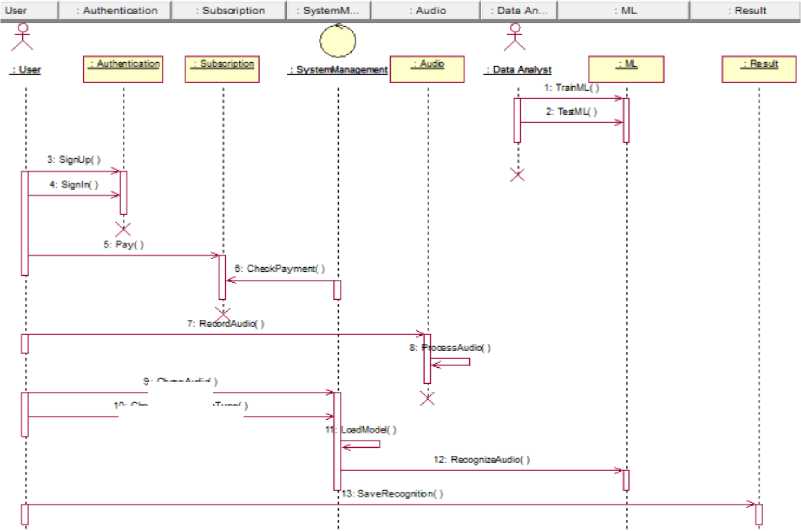

Let's build a sequence diagram to determine the sequence of interaction of objects in chronological order (Fig. 5). The diagram provides information about the order of events and messages between objects during a certain period. This simplifies the perception of the sequence of various actions and their interaction. The diagram also indicates how objects interact in a particular scenario, emphasizes parallel execution of actions, and can indicate synchronization between objects. Chronological sequence of system actions:

-

• A data analyst trains a machine learning model;

-

• The data analyst tests the machine learning model;

-

• The user registers in the system;

-

• The user logs into the system;

-

• The user pays for the subscription;

-

• Management of the system checks the payment;

-

• The user records audio;

-

• The Audio class processes audio;

-

• The user selects audio;

-

• The user chooses the type of recognition;

-

• The system loads the portable model;

-

• System management starts recognition;

-

• The user can save recognition.

TrarVLi

Fig.5. Sequence diagram

3: Ch

IQ: ChooseRecognitwnTypef)

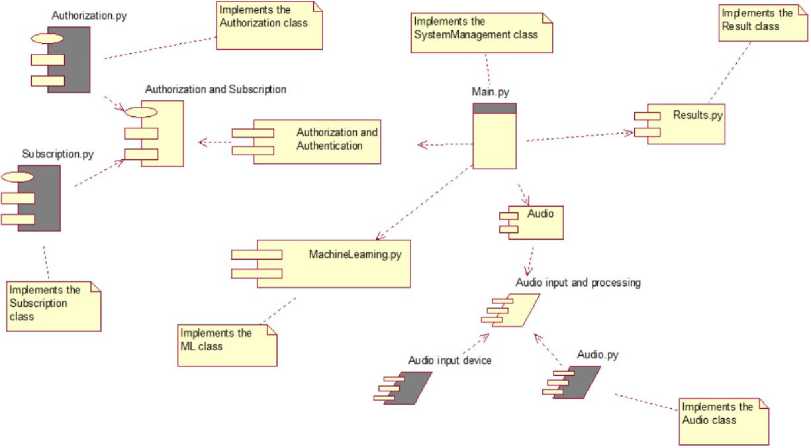

Let's build a diagram of components to visualize the architecture of the system structure in Fig. 6.

Fig.6. Component diagram

Fig. 6 is shown diagram of the components, which shows the structural interaction between the components:

-

• Main.py – implements the System Management class, this class operates the entire system;

-

• Results.py – implements the Result class;

-

• MachineLearning.py – implements the ML class;

-

• The authorization and authentication component contains the package specification, which is implemented using: Subscription.py – which implements the Subscription class and Authorization.py implements the Authorization class;

-

• The audio component contains the task specification - audio input and processing, which is implemented by the audio input device and Audio.py, which implements the Audio class.

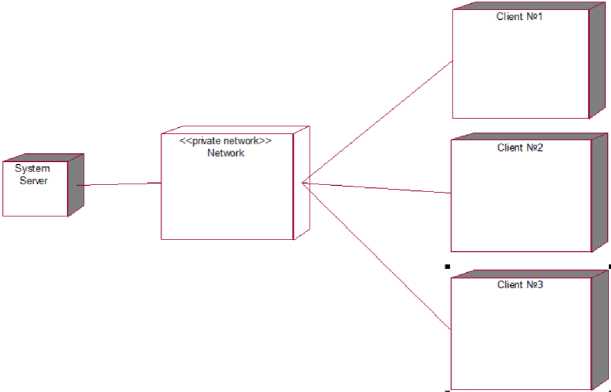

Let's build a deployment diagram to see how users interact with the system in Fig. 7.

Fig.7. Deployment diagram

The deployment diagram shows 3 processors and 1 device. System processors:

-

• User #1 – system user, can be connected both from the phone and the web application. Has a network connection with the device.

-

• User #2 – system user, can be connected both from the phone and the web application. Has a network

connection with the device.

-

• User #3 – system user, can be connected both from the phone and the web application. Has a network

connection with the device.

-

• The system server is the place where all the activities of the program are performed, from receiving data from the user to returning the results to the user. Has a network connection with the device.

Devices:

• Network – a closed network that performs the function of communicating between the user and the system. It has a connection with the processors: User #1, User #2, User #3 and with the System Server.

4. Experiments

4.1. Formulation and Justification of the Problem

The above experiments will significantly help and simplify the development of the project because the main concepts and principles of the system have already been described.

The task of this work is to create software for the analysis and recognition of sounds in megacities using machine learning methods. A working machine learning model that can recognize certain types of sounds can be considered a good performance of this work.

This system can be used by users of various industries. Nowadays, loud sounds have become a constant stress, so even at home, this system will be able to help distinguish a real explosion from falling materials at a construction site. In farming, this drone can be used to automate the driving away of animals from crops. In military affairs, the data of this system can help with the detection of extraneous sounds, for example, drones, and in the future, the recognition of the distance of the sound echo, which can help in easier detection of enemy equipment and missiles. In cities, it can help with security, so a preventive response system can be built, which can check whether everything is in order based on sounds. Also, it can make life easier for people with impaired hearing to detect danger in everyday life.

The system is very easy to use, a user of any age and profession will be able to use it easily. All you need is a smartphone and a microphone. The effects obtained from the implementation of the project:

-

• Economical – investment in installing cheaper microphones instead of video surveillance cameras.

-

• Temporary - the period of people's reaction to a certain loud sound will decrease.

-

• Social - help to people with impaired hearing.

-

• Educational – can inspire people to study sound and machine learning techniques even more carefully.

-

4.2. Building a Model for Problem-solving

It is necessary to build a model of the machine learning method. For this, we need:

Get data → Analyse data → Visualize data → Choose the best method → Build a model

To begin with, consider the possible methods of machine learning [30-42]:

Support vector classification (SVC) in machine learning uses support vectors to efficiently classify objects. The support vectors are the points closest to the class separation boundary, and they define the hyperplane that separates the classes in the feature space. The method also uses functions to solve nonlinear classification problems. The regularization parameter controls the trade-off between the accuracy of the training data and the generality of the model. It is used in various fields such as pattern recognition, bioinformatics and financial analytics [30].

ExtraTreesClassifier is a classification method that uses a combination of decision trees and is known for its extreme randomness. When using it to build a model, features and thresholds are randomly selected for each tree node. All this is intended to reduce the risk of overtraining and ensure greater stability of the model. ExtraTreesClassifier allows solving classification tasks where it is necessary to determine whether objects belong to certain categories based on their features [31].

AdaBoost is a machine learning method that uses a sequential approach to training classifiers. At each step, the algorithm trains a weak classifier and assigns a weight to it based on how effectively it classified the data. By combining these classifiers, AdaBoost tries to focus on those areas of the data where previous classifiers made mistakes. The main idea is to train new classifiers in such a way that they focus on objects that were incorrectly classified by previous classifiers. The importance of each classifier is governed by its accuracy. Thanks to this approach, AdaBoost can create a strong classifier that can effectively solve classification problems, even if weak classifiers are included [32].

MLPClassifier is a classifier that uses an artificial neural network to solve the classification problem in machine learning. It belongs to the scikit-learn library and is used to model complex relationships in input data. A neural network has different layers, such as input, hidden, and output, and learns the relationships between them during training. MLPClassifier is used to solve classification problems, where the model tries to understand the relationship between the input data and the target class. Various parameters of this classifier, such as the number of layers, the number of neurons in each layer, and the learning rate, can be adjusted to achieve optimal results on specific data [33].

-

4.3. Selection and Justification of Problem-solving Methods

The best methodology in our case is the use of Agile methodologies. Agile software development has several advantages that justify its use:

-

• Flexibility and adaptability: Agile provide the ability to respond quickly to changing requirements, even at late stages of development. This is especially useful in a changing business environment and user requirements.

-

• Iterative approach: Agile involves developing a product in small iterations (sprints), which allows the user to obtain the functionality of the product at each stage of development and make adjustments.

-

• Involvement of the user and teamwork: The methodology promotes active interaction between the user and the development team. This helps to better understand the requirements and resolve possible misunderstandings.

-

• Better product quality: More frequent integration testing and small, frequent releases allow bugs to be discovered and fixed more quickly, resulting in a higher-quality software product.

-

• User satisfaction: Agile allows the user to see the results of the work at early stages and actively influence the development process, which contributes to the user's satisfaction with the product.

-

• Containment of costs and risks: Agile allows you to control costs and reduce risks, as it allows you to quickly respond to changes in requirements or market conditions.

-

• Promotes team self-organization: Agile puts the focus on developing team self-organization, which can positively impact team productivity and creativity.

-

4.4. Development of Problem-solving Algorithms

-

4.5. Selection and Justification of Development Tools

Therefore, development according to the Agile methodology contributes to the improvement of product quality, provides flexibility and speed of response to changes, and also improves interaction between the user and the development team. Therefore, since our product does not yet have a single target audience, it is better to develop flexibly to be able to change the product according to needs.

To solve the given task, you need:

Analyse data → Find the best method for machine learning → Build a model → Test the developed model

To analyse the data, you need:

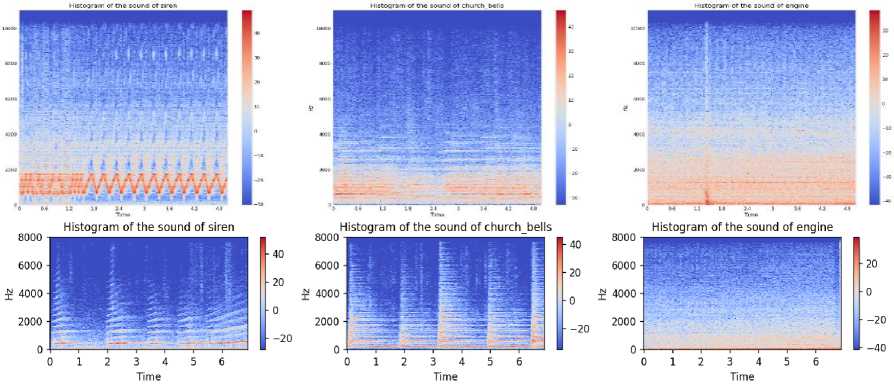

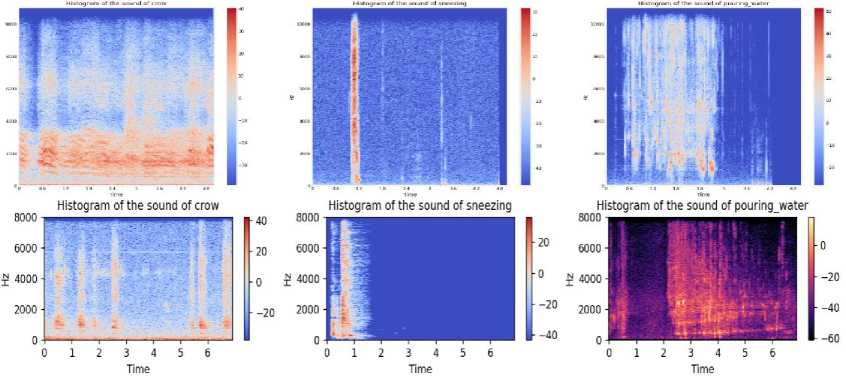

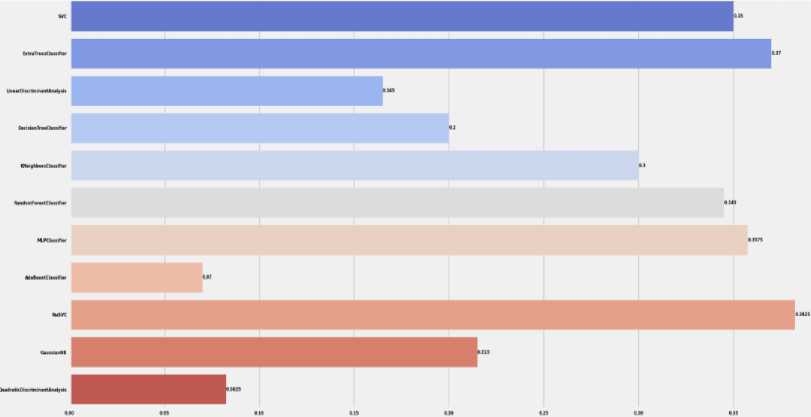

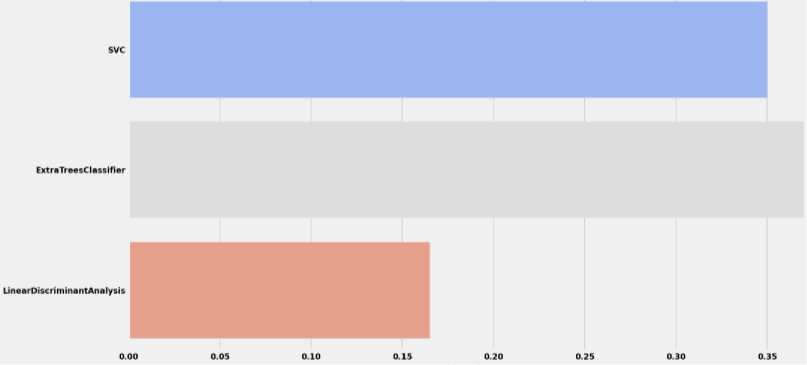

Download data → Analyse the downloaded data → Visualize sound waves → Visualize spectrograms

To find the best method, it is necessary to conduct experiments with various models and compare them. To build a model, you need to find out how you can implement the model. Test the model - you need to load the data from the test dataset and compare it with the expected ones.

To begin with, we need a laptop on which this data analysis system will be created, we have a Lenovo Legion and a cloud provider for raising our data analysis system. AWS - cloud development from Amazon, Google Cloud Platform - development from Google and Microsoft Azure - development from Microsoft can be identified as leaders among cloud providers. In our case, we will choose AWS as the services in it are perfectly described, and their development is very easy. From software resources, we need an OS. Popular OSes include Linux, Mac, and Windows. In our case, we will choose Windows as it is very convenient. We need text editors, in the case of Python, it is best to use Visual Studio Code, as it contains very convenient plugins for work. Also, we need a message broker among the most popular Kafka and RabbitMQ, since we will perform asynchronous tasks, it is better to use Kafka.

To implement this project, the following tools are used:

-

• Lenovo Legion laptop with Intel i5 gen 7 processor, 16 GB RAM and 1T hard disk;

-

• IBM Rational Rose for building UML diagrams;

-

• Visual Studio Code text editor;

-

• Apache Kafka – for sending asynchronous messages;

-

• Windows 10;

-

• AWS EC2 for system deployment.

Apache Kafka is a powerful data streaming system that is often used as a message broker between microservices. She has a number of strengths that make her an attractive choice for the role. It is designed to process huge streams of data in real time. Its high throughput means it can process hundreds of thousands or even millions of messages per second. This makes Kafka ideal for large systems that generate large amounts of data. In addition, Kafka is extremely reliable. It has built-in failover mechanisms that provide fault tolerance and ensure that messages are not lost if one or more nodes fail. Apache Kafka is also known for its flexibility. It supports various models of data processing, including the implementation of both queues and topics (publish-subscribe model). This means that Kafka can be used to support a wide range of usage scenarios, from simple message forwarding to complex data processing flows. Finally, Kafka scales well, allowing you to increase the amount of data processing as the system grows. It supports a distributed architecture that allows you to add nodes to a Kafka cluster to process even more data.

To support service independence and rapid development, the following 4 types of services were selected:

-

• Main (SSO) Service: performs tasks of user authorization, storage of their projects, general data, etc.

-

• Audio Meta Service: deals only with data processing. Since the processing of audio files is a long, blocking

and synchronous process, we moved it to a separate service and added operation queues using BullMQ.

-

• Render Service: an orchestrator of render machines, which, in cooperation with Kafka, operates render machines, creates render tasks and controls the process of their passage. It also has an API for managing project templates.

-

• Render Worker: a render engine that in turn reads a render task from a Kafka topic and starts rendering, downloading all required assets and files from S3

The AWS EC2 service was used as an environment that provides the opportunity to conduct a dialogue between users executing the server part.