Intelligent Application for Predicting Diabetes Spread Risk in the World Based on Machine Learning

Автор: Dmytro Uhryn, Victoria Vysotska, Daryna Zadorozhna, Mariia Spodaryk, Kateryna Hazdiuk, Zhengbing Hu

Журнал: International Journal of Intelligent Systems and Applications @ijisa

Статья в выпуске: 3 vol.17, 2025 года.

Бесплатный доступ

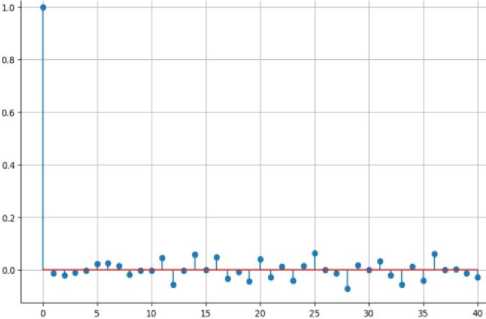

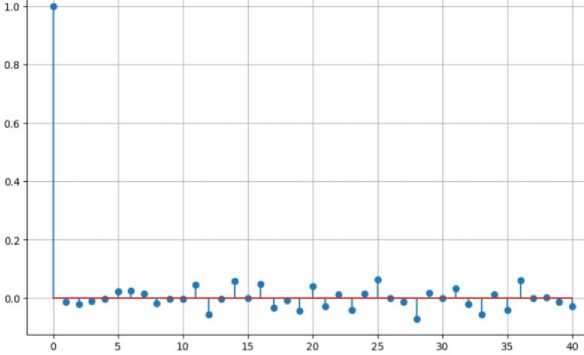

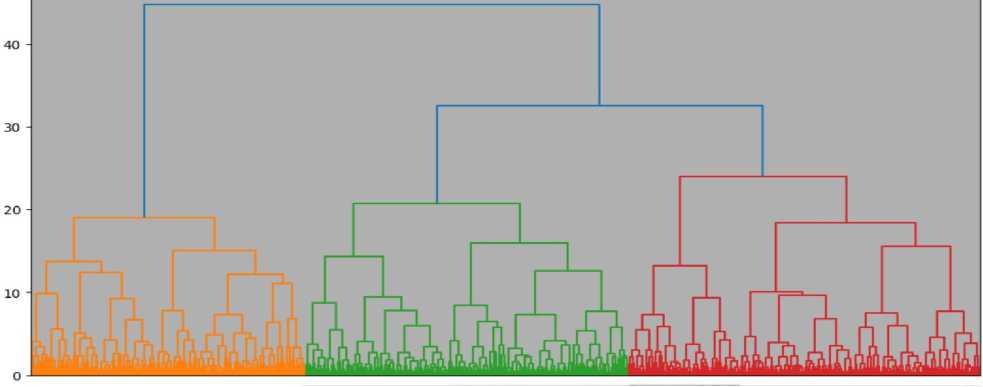

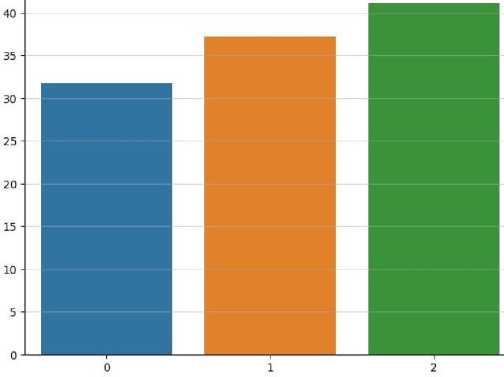

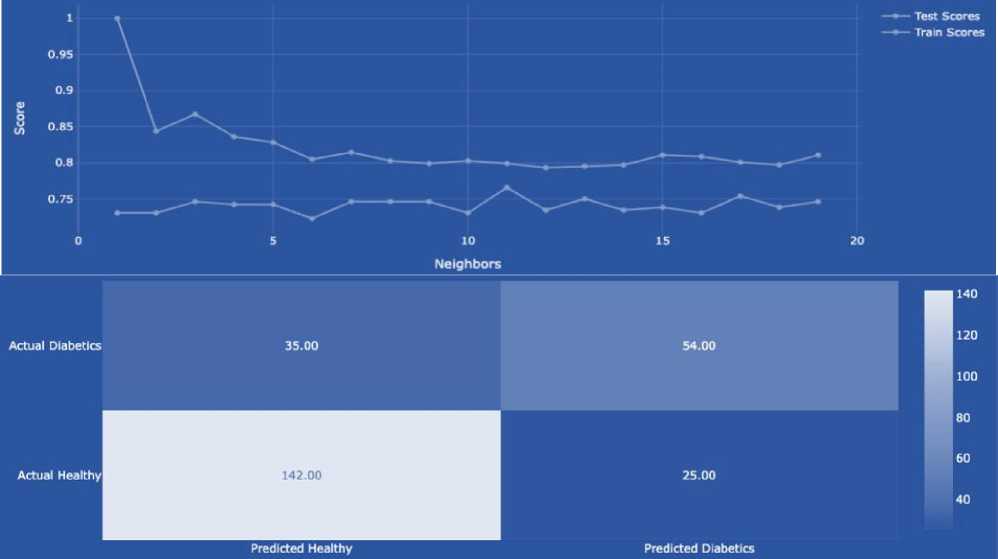

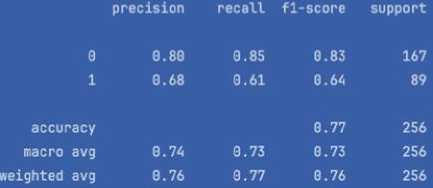

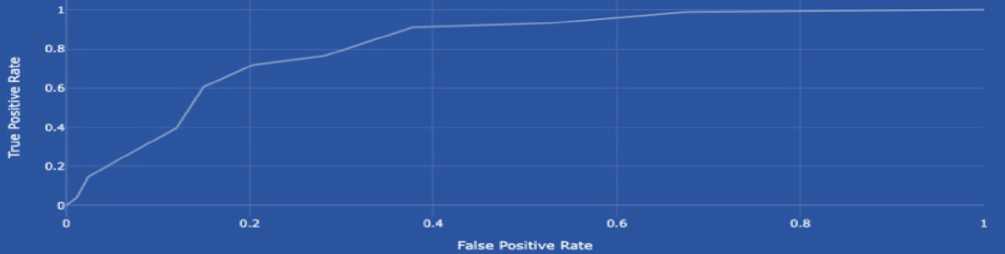

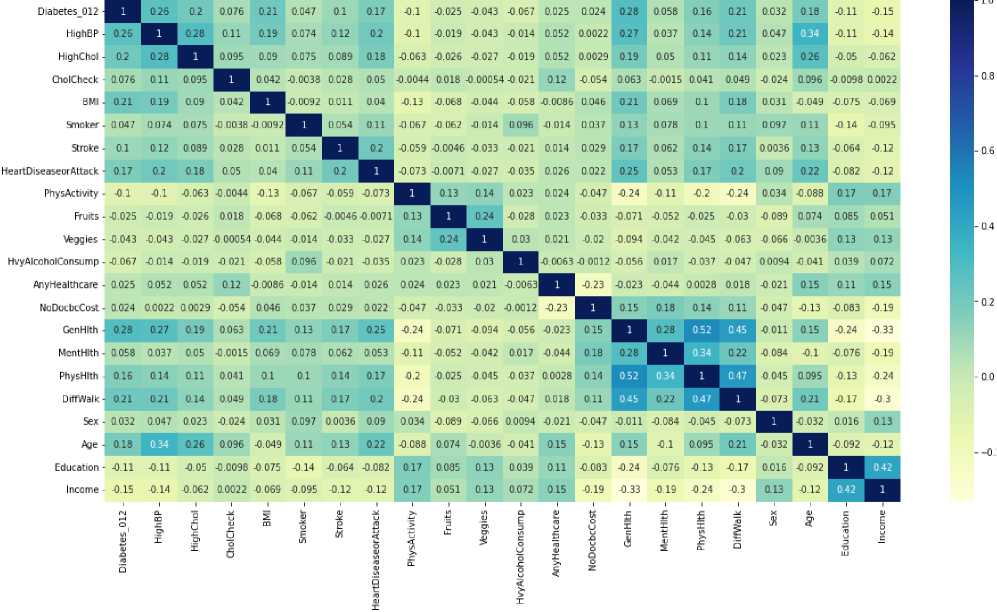

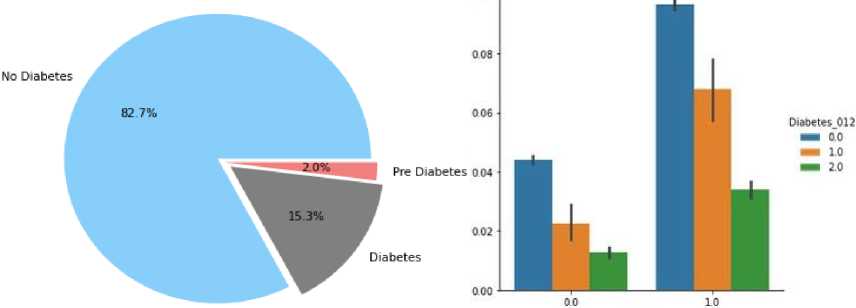

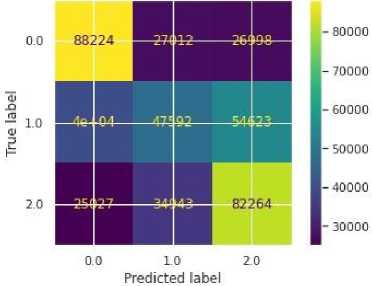

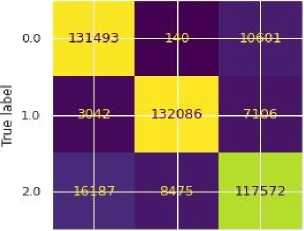

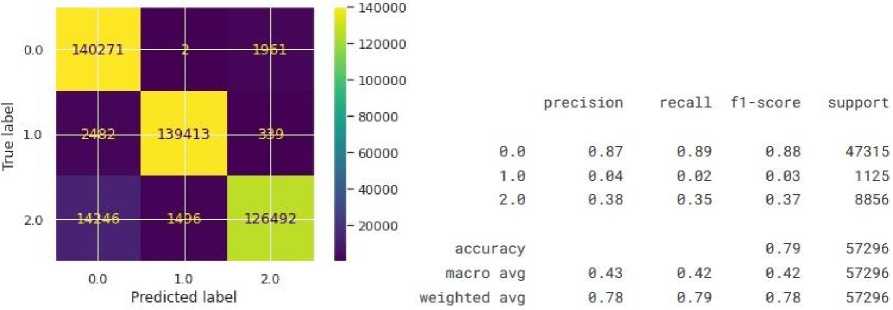

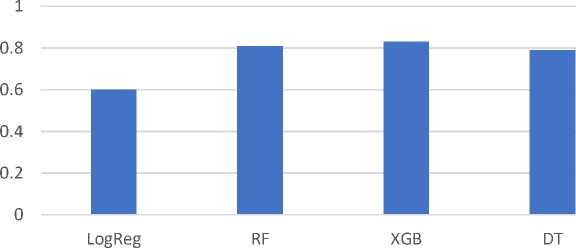

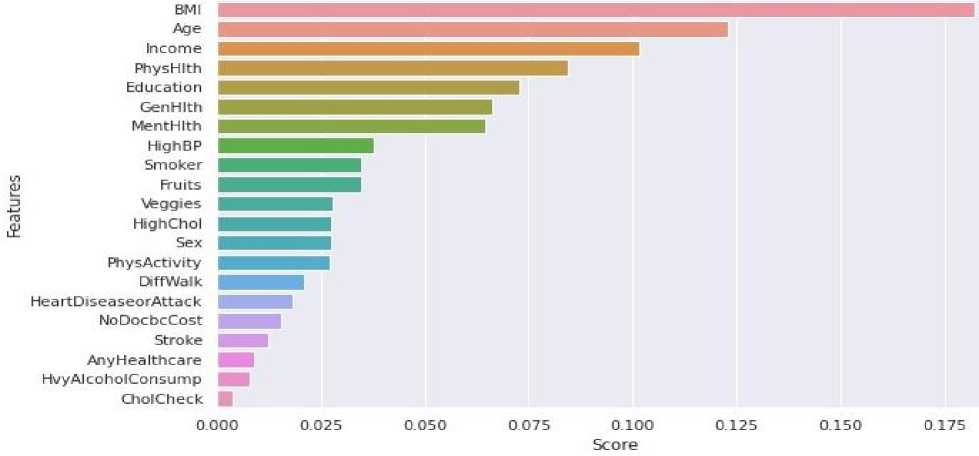

This paper presents the development and implementation of an intelligent system for predicting the risk of diabetes spread using machine learning techniques. The core of the system relies on the analysis of the Pima Indians Diabetes dataset through k-nearest neighbours (k-NN), Random Forest, Logistic Regression, Decision Trees and XGBoost algorithms. After pre-processing the data, including normalization and handling missing values, the k-NN model achieved an accuracy of 77.2%, precision of 80.0%, recall of 85.0%, F1-score of 83.0% and ROC of 81.9%. The Random Forest model achieved an accuracy of 81.0%, precision of 87.0%, recall of 91.0%, F1-score of 89.0% and ROC of 90.0%. The Logistic Regression model achieved an accuracy of 60.0%, precision of 93.0%, recall of 61.0%, F1-score of 74.0% and ROC of 69.0%. The Decision Trees model achieved an accuracy of 79.0%, precision of 87.0%, recall of 89.0%, F1-score of 88.0% and ROC of 83.0%. In comparison, the XGBoost model outperformed with an accuracy of 83.0%, precision of 85.0%, recall of 96.0%, F1-score of 90.0% and ROC of 91.0%, indicating strong prediction capabilities. The proposed system integrates both hardware (continuous glucose monitors) and software (AI-based classifiers) components, ensuring real-time blood glucose level tracking and early-stage diabetes risk prediction. The novelty lies in the proposed architecture of a distributed intelligent monitoring system and the use of ensemble learning for risk assessment. The results demonstrate the system's potential for proactive healthcare delivery and patient-centred diabetes management.

Diabetes Prediction, Machine Learning, XGBoost, K-NN Algorithm, Blood Glucose Monitoring, Intelligent System, Healthcare AI, Ensemble Methods, Risk Assessment, Pima Dataset

Короткий адрес: https://sciup.org/15019783

IDR: 15019783 | DOI: 10.5815/ijisa.2025.03.06

Текст научной статьи Intelligent Application for Predicting Diabetes Spread Risk in the World Based on Machine Learning

Published Online on June 8, 2025 by MECS Press

Information technologies today are the engine of development and evolution in many areas of human activity, from general-purpose systems to the sphere of critical technologies. The efficiency of the processes that they allow to automate depends on the quality and reliability of the use of software and hardware complexes. Systems of general-purpose information technologies include mass-operated systems, in particular, software systems that can be freely downloaded from marketplaces and installed locally on both mobile devices and users' computers. Critical systems include systems that have a direct impact on the life and health of people, the environment, etc. The implementation of information technologies in the field of medicine is especially relevant. They make it possible to analyse, monitor and predict the development of various diseases, improve the quality of life of patients, and also act as decision-making support systems for doctors when establishing a diagnosis. The current stage of human development is accompanied by the spread of diseases that tend to increase. Diabetes mellitus, cardiovascular diseases, strokes and heart attacks, Alzheimer's disease and multiple sclerosis, and cancers, which are mainly caused by genetics and lifestyle characteristics, geographical location of people, etc., are becoming widespread. Trends are observed regarding the increase in the number of patients to the scale of a pandemic analysing the spread of diabetes mellitus. Thus, according to forecasts of international organizations, there is a seven-fold increase in morbidity in 2045 compared to 2000, which is expressed in absolute terms as 700 million people compared to 145 million respectively. Given such disappointing indicators, the current task today is to build a comprehensive system for determining and predicting the development of diabetes mellitus.

Modern technologies and artificial intelligence (AI) are opening up new horizons in the field of medical research and the treatment of chronic diseases such as diabetes. Diabetes is a severe disease characterized by metabolic disorders and high blood glucose levels, which can lead to various complications, including heart, kidney, nervous system and vision damage. Therefore, timely detection and prediction of the development of this disease is crucial to prevent its complications. The development of artificial intelligence methods, in particular machine learning and deep learning, opens up prospects for creating practical tools for predicting the development of diabetes. These methods allow the analysis of large amounts of medical data, including laboratory tests, clinical indicators, lifestyle information and genetic factors, to identify individuals at high risk of developing diabetes at an early stage. Monitoring blood glucose levels is a defining part of life for people with diabetes, so it is essential, first of all, to ensure the convenience and accuracy of monitoring glucose levels, automated data transfer to a central repository, and prediction of its development.

Diabetes is generally categorized into three primary types: type 1, type 2, and gestational diabetes (which occurs during pregnancy). Type 1 diabetes is believed to result from an autoimmune response, where the body's immune system mistakenly attacks insulin-producing cells, leading to little or no insulin production. This type accounts for approximately 5–10% of all diabetes cases and typically appears rapidly, most often in children and young adults. Individuals with type 1 diabetes must take daily insulin to survive, and currently, there are no known prevention methods. Type 2 diabetes, the most common form – affecting 90–95% of people with diabetes – occurs when the body becomes resistant to insulin or fails to use it effectively. It develops gradually over time and is usually diagnosed in adults. Since early symptoms may be mild or absent, regular screening is essential, especially for those at risk. Type 2 diabetes can often be prevented or delayed by maintaining a healthy weight, following a balanced diet, and engaging in regular physical activity. Gestational diabetes develops during pregnancy in women who did not previously have diabetes. Although it typically resolves after childbirth, it increases the mother's long-term risk of developing type 2 diabetes.

Additionally, children born to mothers with gestational diabetes have a higher likelihood of obesity and diabetes later in life. Risk factors for type 1 diabetes are less well defined but include a family history of the disease and younger age at onset, typically during childhood, adolescence, or early adulthood. Type 2 diabetes risk factors include being overweight, aged 45 or older, having a family history of the condition, being physically inactive, having prediabetes, or having a history of gestational diabetes. Fortunately, type 2 diabetes can often be avoided or postponed through proven lifestyle adjustments.

Given the increasing prevalence and risks associated with all types of diabetes, there is a pressing need to develop a comprehensive system that combines both hardware and software solutions. Such a system should facilitate continuous data collection, management, and predictive analysis to monitor and support diabetes prevention and care.

The research purpose is to study the methods, software, and hardware used to process data in blood sugar monitoring systems. The object of the research is the processes of data collection and accumulation, as well as forecasting the development of diabetes mellitus. The subject of the study is the methods and means of data accumulation and forecasting the development of diabetes mellitus. The following tasks are set in the master's qualification work to achieve this goal:

-

• analyse scientific publications on factors influencing the development of diabetes mellitus;

-

• investigate existing software and hardware and other solutions for detecting and regulating the level of glucose

in human blood, accumulating and processing such data;

-

• propose an architecture and possible ways to implement a software and hardware system for collecting, accumulating and predicting blood glucose levels;

-

• create models and algorithms for predicting the development of diabetes mellitus based on existing open data;

-

• implement software to predict the development of diabetes mellitus.

When solving the tasks of the qualification work, the following methods and tools were used: analysis and generalization - when analysing statistical data on the incidence of diabetes mellitus and choosing ways to implement a blood sugar monitoring system; set theory and machine learning methods – when formalizing and building a conceptual model of the distributed architecture of the monitoring system and when predicting the development of diabetes mellitus; design and programming – when implementing a software model for predicting the development of diabetes mellitus; experiment and measurement – when assessing the accuracy of the prediction results and identifying factors with the most significant impact on the development of diabetes mellitus. This work focuses on the use of machine learning methods to predict the risk of developing type 2 diabetes mellitus based on the Pima Indians Diabetes dataset. The object of the work is the Pima Indians Diabetes dataset. The goal of the work is to develop and train a k-nearest neighbour (k-NN) model that is able to classify individuals accurately by the level of risk of developing diabetes. It is necessary to perform to achieve this goal:

-

• comprehensive data cleaning, including missing value filling and data normalization, to ensure the reliability of the results;

-

• optimize the model hyperparameters to find the best value for the number of neighbours for k-NN that maximizes the prediction accuracy;

-

• determine and analyse the leading performance indicators of the model, such as the error matrix, precision, completeness, F1 score, ROC-curve, and AUC score. It will allow us to evaluate the model’s ability to correctly classify cases with high and low risk of developing diabetes.

The scientific novelty of the obtained research results lies in the following:

• For the first time, algorithms for the functioning of a glucometer and a global information system for managing medical data were proposed, which together constitute a system for 24-hour monitoring and management of the patient's blood glucose level, which makes it possible to ensure the collection, processing and prediction of the appearance or development of diabetes mellitus.;

• For the first time, a conceptual model of a distributed architecture of a data collection and processing system for monitoring blood sugar levels has been constructed and mathematically presented, which includes a set of local and central control nodes and allows for the exchange of messages and the prediction of the development of the disease.

2. Related Works

2.1. Basic Principles of Diabetes

The implementation of the proposed solution for the use of software and hardware in the implementation of blood sugar monitoring systems allows for 24-hour monitoring and prediction of the potential occurrence and development of diabetes. The relevance of the work lies in the need to optimize medical care, improve the quality of life of patients, and reduce the economic burden associated with the treatment and complications of diabetes. The use of AI for diabetes prediction can significantly improve the processes of diagnosis, monitoring, and treatment, contributing to the transition from reactive treatment to a proactive and personalized approach in medicine. This work is aimed at studying data and developing an artificial intelligence model that will allow the prediction of the development of diabetes with high accuracy based on the analysis of various patient data. The results can be used to develop new clinical recommendations and strategies for managing the risk of developing diabetes.

Diabetes mellitus (DM) is one of the most commonly diagnosed diseases in the world. According to the International Diabetes Federation, more than 439 million people will be diagnosed with diabetes by 2030. Approximately 2–5 million patients die from DM each year. Diabetes mellitus is one of the most significant global health challenges. According to the World Health Organization, the number of people with diabetes has increased rapidly from 108 million in 1980 to more than 422 million in 2014 [1]. This number is projected to continue to increase, especially in developing countries.

Diabetes mellitus significantly increases the risk of developing serious complications, reduces quality of life and increases mortality. The main factors contributing to the increase in morbidity include an ageing population, increasing obesity, and lifestyle changes such as insufficient physical activity and an unbalanced diet [2]. An essential part of fighting the diabetes epidemic is raising awareness about the risk factors and the importance of early detection. Despite the high prevalence of the disease, no practical method has yet been proposed to reduce its incidence, although various methods are currently used to treat and control the disease. Almost all the foods we eat are broken down into sugar (called glucose) and released into the bloodstream. When blood glucose levels rise, this signals the pancreas to secrete insulin. It acts as a key for the sugar in the blood to enter the cells of your body to be used as energy. If you have diabetes, it means that your body does not produce enough insulin or does not use it properly. When there is not enough insulin or the cells stop responding to insulin, too much blood sugar remains in the blood. People with diabetes are at high risk of developing diseases such as heart disease, kidney disease, stroke, eye problems, nerve damage, and more. It is reportedly the fourth leading cause of death in most human societies.

Diabetes mellitus is divided into three main types: type 1 diabetes, type 2 diabetes, and gestational diabetes [3]. Type 1 diabetes occurs when the body’s immune system destroys the insulin-producing cells in the pancreas. This type of diabetes is most commonly diagnosed in children and young adults but can develop at any age. Type 2 diabetes, which accounts for approximately 90–95% of all cases, usually develops in adults and is associated with insulin resistance and insufficient insulin production. Gestational diabetes can occur in women during pregnancy and usually resolves after delivery, but increases the risk of developing type 2 diabetes later in life. These types of diabetes have different causes and treatments, emphasizing the need for accurate diagnosis and an individualized approach to each patient.

Type 2 diabetes is the most common type of diabetes. It has multiple risk factors that can influence its development. Genetics plays a significant role. However, genetic factors interact with a number of environmental factors, including lifestyle. Physical inactivity and being overweight significantly increase the risk of developing the disease. Other factors include age and ethnicity. Diet is also an essential factor: high-calorie foods rich in simple carbohydrates and saturated fats can contribute to insulin resistance [4]. Understanding these factors allows us to more effectively identify individuals at high risk of developing diabetes and to take preventive measures:

-

• Genetics, such as having a first-degree relative with diabetes, significantly increases the risk of developing the disease. Heredity plays a key role in the predisposition to type 1 and type 2 diabetes. Genetic mutations can affect the body's ability to produce or use insulin;

-

• Lifestyles, such as physical inactivity and unhealthy eating habits, are important risk factors. Regular exercise and a healthy diet can significantly reduce the risk of developing type 2 diabetes. On the other hand, high levels of sugar and fat intake increase the likelihood of developing diabetes and its subsequent complications;

-

• Obesity, as excess weight, especially abdominal fat, causes insulin resistance, which is a significant factor in the development of type 2 diabetes. Weight management can significantly reduce the risk of developing diabetes;

-

• Age, in particular, the risk of developing diabetes increases with age, especially after the age of 45. It is due to decreased physical activity, decreased muscle mass, and changes in metabolic patterns;

-

• Ethnicity, for example, some ethnic groups, such as African Americans, Hispanics, and South Asians, are at higher risk of developing diabetes. It may be due to genetic factors, as well as cultural factors and access to healthcare.

If you have any of the following symptoms of diabetes, see your doctor about testing your blood sugar: passing a lot of urine, often at night; feeling very thirsty; losing weight without trying; feeling very hungry all the time; having blurred vision; having numbness or tingling in your hands or feet; feeling very tired; having dehydrated skin; having sores that heal slowly; – having more infections than usual. There is no cure for diabetes yet, but losing weight, eating a healthy diet, being active, and getting your diabetes diagnosed early can really help. Diabetes can lead to many serious complications that not only affect the quality of life of patients but can also be life-threatening. The importance of early detection is to prevent or minimize these complications:

-

• Cardiovascular diseases, in particular diabetes, significantly increase the risk of developing cardiovascular diseases, such as coronary artery disease, myocardial infarction, and stroke. High blood glucose levels can damage the inner lining of blood vessels, contributing to atherosclerosis. These conditions complicate blood circulation and can ultimately lead to serious cardiac events that require immediate medical attention;

-

• Diabetic neuropathy is damage to nerve fibres throughout the body, which most often affects the lower extremities. Symptoms include pain, tingling, and loss of sensation, which significantly reduces quality of life and increases the risk of injury due to loss of sensation. In severe cases, neuropathy can lead to foot deformity, requiring orthopaedic intervention;

-

• Diabetic retinopathy is one of the leading causes of vision loss among people of working age. High blood sugar levels damage the small blood vessels in the retina, which can lead to bleeding, scarring, and, ultimately, retinal detachment. Regular eye exams and timely treatment with laser or other methods can help prevent vision loss;

-

• Kidney failure, particularly diabetes, is one of the leading causes of chronic kidney failure. High glucose levels gradually damage the kidneys, particularly the filtering structures, which can eventually lead to the need for dialysis. Early detection of changes in kidney function and aggressive management of blood sugar and blood

pressure can prevent or delay progression to end-stage kidney disease;

-

• Diabetic foot is a serious complication that involves nerve damage and poor circulation in the feet, which can lead to infections, ulcers, and even amputations. Diabetic patients should regularly check their feet for injuries, cracks, or ulcers and use special footwear to prevent injuries. Early treatment of infections and timely surgical intervention for ulcers can prevent more serious consequences.

-

2.2. The Role of Artificial Intelligence in Medicine

AI in medicine has deep roots, dating back to the mid-20th century when the concepts and algorithms that gave rise to this field were first developed. Early research focused on creating systems that could mimic the clinical thinking of doctors. One of the first known systems was the MYCIN program [6], developed in the 1970s at Stanford University. MYCIN used rules to diagnose infectious diseases and recommend antibiotic treatment, although it was never used in clinical practice due to the limitations of the technology at the time. With the advent of more powerful computers and the development of machine learning, AI began to be integrated into medicine with new force. In the 1980s and 1990s, numerous diagnostic and navigation systems were created based on artificial intelligence, helping medical professionals make decisions based on large amounts of data. These systems used knowledge bases filled with data about symptoms, treatments, and patient feedback [7]. The current stage of AI in medicine is characterized by the use of sophisticated deeplearning algorithms that can analyse medical images, interpret medical records, and even predict potential medical conditions based on genetic information. For example, deep learning systems such as those developed by Google Health and DeepMind have demonstrated the ability to detect diabetic retinopathy and other eye diseases at the same or even better than trained professionals [7]. The value of AI in medicine continues to grow due to its ability to process and analyse large amounts of data faster and more accurately than humans can. It not only increases the efficiency of medical research and diagnostic procedures but also contributes to the development of personalized approaches to treatment, opening up new opportunities for the prevention and treatment of diseases at the individual level.

Regular monitoring of blood glucose levels, adherence to diet, physical activity and proper medication are key aspects of treatment. In addition, regular consultations with doctors and other health professionals allow you to detect any changes in your health in time and adjust therapy. It is also essential to pay attention to your psycho-emotional state, as stress and depression can negatively affect the course of the disease.

Adequate control of diabetes requires an integrated approach that includes medication, lifestyle changes, regular self-monitoring, and patient education. Medication is the mainstay of treatment for type 1 diabetes, where patients require insulin because their bodies cannot produce it. Insulin is administered by injection or with insulin pumps, allowing for precise control of blood sugar levels. For type 2 diabetes, different classes of blood glucose-lowering drugs are used, such as metformin, sulfonylureas, insulin, and others [5]. It is crucial to tailor treatment to each patient, taking into account their overall health, age, lifestyle, and other medical conditions. Lifestyle changes include recommendations for a healthy diet and physical activity. A healthy diet for people with diabetes includes limiting simple carbohydrates, increasing fibre, and balancing protein and fat intake. Physical exercise helps maintain a healthy weight, improve insulin resistance, and improve overall health. At least 150 minutes of moderate aerobic exercise per week is recommended. Patients with diabetes should regularly monitor their blood glucose levels using glucometers. It allows patients to track the effects of diet, physical activity, medications, and stress on glucose levels.

Regular self-monitoring is critical to avoiding hypo- and hyperglycaemic states, which can be dangerous [5]. It is also essential that patients are well-educated about all aspects of their condition, including how to manage their diabetes, how to recognize and treat hypo- and hyperglycaemia, and how to prevent complications. Effective diabetes education programs can include individualized education, group classes, and seminars, as well as educational materials and online resources. Psychological support is an important aspect. A diagnosis of diabetes can cause emotional distress. Mental health support is an essential component of comprehensive care for people with diabetes. It may include access to psychological counselling, support groups, and other resources to help manage stress and emotions.

AI is revolutionizing medicine, particularly through the introduction of advanced technologies and tools that improve the diagnosis, treatment, and monitoring of diseases. The application of AI in medicine encompasses a wide range of technologies, each with its characteristics and areas of application. Machine learning is one of the main components of AI in medicine. It includes algorithms that can learn from experience without being explicitly programmed for each task. In medicine, machine learning is used to analyse large amounts of data, from laboratory test results to medical records. These algorithms are able to identify patterns and abnormalities that may not be obvious to the human eye. Deep learning, a subcategory of machine learning, involves models that mimic the structure and functioning of the human brain using artificial neural networks. It is beneficial for processing and analysing medical images, such as X-rays, MRIs, or ultrasounds. Deep learning can detect subtle pathological changes in images that may indicate early stages of diseases such as cancer or heart disease. Natural language processing (NLP) is used to analyse medical records, transforming unstructured medical information into a structured form that can be easily interpreted and used to support clinical decisions. NLP can help identify trends in symptoms, treatments, and outcomes and standardize records for further analysis. Computer vision is another crucial AI tool used to identify, classify, and quantify images. In medicine, it can be used to automatically detect abnormalities in medical images, enabling faster and more accurate diagnoses. Robotic surgery, while not a purely software aspect of AI, uses machine learning algorithms to control surgical instruments, allowing for more precise surgeries with smaller incisions. It helps patients recover faster and reduces complications.

Overall, these technologies and tools are creating the basis for new approaches in medicine, increasing the efficiency of diagnostics and treatment, and providing more personalized healthcare. Artificial intelligence has the potential to radically change medical practice, making it more accurate, efficient and accessible. Disease prediction and diagnosis using AI opens up new possibilities for medical science. AI can significantly improve the accuracy of diagnostic procedures and the ability to predict the future development of patient health based on the analysis of vast data [8]:

-

• diagnostic accuracy, for example, AI uses machine learning algorithms to analyse medical images such as X-rays, MRIs, and ultrasound scans. Deep learning systems, especially convolutional neural networks, are effective at recognizing pathological changes in medical images that are often missed by the human eye. AI algorithms can detect minimal deviations from the norm, which allows for the diagnosis of diseases at an early stage;

-

• disease risk prediction, for example, artificial intelligence models use a patient’s medical history, genetic information, and lifestyle habits to predict the likelihood of developing diseases such as diabetes, heart disease, and cancer. The use of biomarkers, such as biochemical indicators in the blood, contributes to the accurate determination of risk. Predictive models allow the identification of individuals at high risk of developing a disease before symptoms appear, which can increase the effectiveness of preventive measures;

-

• early detection and intervention, for example, through the analysis of medical data, AI allows the detection of minimal changes in health that may indicate the onset of a disease. Early intervention based on AI data can include recommendations for changes in diet, exercise, or medication. Predicting serious complications in chronic patients (for example, predicting hypoglycaemic states in patients with diabetes) can help avoid lifethreatening situations;

-

• Optimization of health care, in particular, AI contributes to a more efficient allocation of medical resources, such as determining the need for specialized examinations or interventions. Automation of routine diagnostic procedures reduces the burden on medical staff and shortens waiting times for patients. With the help of AI, it is possible to analyse the effectiveness of different treatment methods and choose the most effective ones based on large volumes of clinical data.

It opens up new possibilities for medicine, making the processes of prediction and diagnosis not only faster but also more accurate, leading to improved overall quality of healthcare, reduced costs, and better outcomes for patients. Personalized medicine, often referred to as data-driven medicine or precision medicine, is seen as the future of healthcare. This approach uses detailed analysis of each person’s genetic information, biomarkers, and other characteristics to develop individualized treatment and prevention plans [9]. Here are some more details about the prospects and future of personalized medicine:

-

• ndividualized treatment plans, in particular, the use of artificial intelligence in personalized medicine, allows doctors to better understand how different factors, such as a patient’s genetic profile, can affect a disease and its treatment. It leads to the development of more effective treatment plans that can reduce the risk of side effects and improve treatment outcomes;

-

• Genomics and pharmacogenetics, for example, AI helps analyse vast amounts of genomic data to identify mutations that affect the risk of developing diseases. Such analysis can also indicate how a patient will respond to certain drugs, thereby allowing for the selection of the most effective drugs without unnecessary trial and error;

-

• Predicting treatment responses based on the use of machine learning to predict treatment responses, which changes approaches to patient management. AI models can predict how likely patients are to respond to treatment with certain drugs, allowing for individual sensitivity to drugs or the likelihood of resistance;

-

• Bioinformatics and data integration, in particular, personalized medicine, requires the integration of diverse data, including genetic, biomedical, epidemiological and clinical data. Bioinformatics plays a key role in combining these data into holistic models that allow for deeper analysis of the relationships between different types of information and increase the accuracy of medical predictions;

-

• Ethical and legal challenges based on the implementation of personalized medicine also face ethical and legal challenges, in particular, issues of confidentiality and access to genetic information. It requires the development of new regulatory frameworks that would protect patients' rights and, at the same time, promote scientific research;

-

• The future and innovation, in particular, the prospects for personalized medicine look promising given the rapid development of biotechnology and artificial intelligence. In the future, it is possible to create even more accurate tools for monitoring and treating diseases at the individual level, which will change medical practice;

-

• Interaction with patients and healthcare professionals, such as the increasing adoption of personalized medicine, requires a new level of interaction between patients and doctors. Healthcare professionals need new skills to interpret complex data and to communicate with patients about their health based on genetic information and individual risks.

-

2.3. Artificial Intelligence and Diabetes

These aspects demonstrate that personalized medicine has the potential to radically change medical practice, making treatment more targeted and effective while creating new challenges and opportunities for medical science and practice.

Using AI to identify diabetes risk could revolutionize prevention and early diagnosis. It is possible thanks to advanced machine learning algorithms that analyse large datasets and identify potential risks before symptoms appear [9]. AI algorithms are used to analyse genetic data to identify markers associated with an increased risk of diabetes. These genetic markers can include mutations or gene variants that have been linked to diabetes in scientific studies. Risk factor screening: Algorithms can analyse a wide range of risk factors, such as age, weight, family history, physical activity levels, eating habits, and pre-existing medical conditions such as hypertension or metabolic disorders. Previous medical outcomes: Using AI to analyse a patient’s historical medical data, including blood glucose and haemoglobin A1c test results, which may indicate an earlier risk of developing diabetes. Lifestyle analysis: AI can process lifestyle data collected through mobile apps and other sources to determine how daily habits may affect the risk of developing diabetes. For example, low physical activity and a high-calorie diet are known risk factors. Risk modelling – modern AI technologies can model different scenarios based on the data provided to help predict future health outcomes based on current trends and changes in patient behaviour. The availability of AI tools in medicine, particularly in the diagnosis and treatment of diabetes, is a critical issue affecting global health. Despite the significant potential of AI to improve outcomes and reduce costs, there are a number of challenges that limit the widespread adoption of these technologies in clinical practice, especially in resource-limited settings [10]:

-

• the cost of technology, in particular, one of the main barriers is the high cost of developing and implementing AI systems. Developing practical algorithms requires significant investments that not all healthcare institutions can afford;

-

• the need for skilled professionals, in particular, the use and management of AI requires the availability of skilled professionals such as data engineers, analysts and health informatics, which are often in short supply, especially in developing countries;

-

• data infrastructure, for example, the effective use of AI requires a reliable IT infrastructure to collect, store and process large amounts of data. In many regions, the necessary IT infrastructure is lacking, which limits the possibilities of using advanced analytical tools;

-

• legal and ethical issues, in particular, the legislation governing the use of medical data and AI varies between countries, which can complicate international cooperation and technology exchange. In addition, there are issues of confidentiality and data misuse;

-

• Educational barriers, such as healthcare professionals needing additional education and training to effectively use AI. The need for continuous education and retraining can be burdensome and require time and resources;

-

• implementation in clinical practice, in particular, the integration of AI into clinical practice requires clear evidence of effectiveness and safety. Clinical trials to validate AI tools can be lengthy and expensive;

-

• acceptance of technologies, as there is scepticism from both patients and healthcare professionals about the use of AI, which can affect the acceptance and implementation of new technologies. Fears about the loss of personal contact between doctor and patient may also play a role;

-

• adaptation of technologies, i.e. the need to adapt existing AI systems to local conditions and needs, can be complex. Cultural, linguistic and demographic differences require individualized approaches;

-

• technical limitations, for example, AI tools may have limitations due to insufficient accuracy, problems with data collection, or insufficient ability for general adaptation.

Overcoming these challenges requires a concerted effort by governments, education, healthcare and the technology industry to create accessible, effective and safe AI tools that can improve the treatment and diagnosis of diabetes globally. Data collection and analysis are critical steps in the process of using AI to predict the development of diabetes. It is a process that requires high precision and care because the effectiveness of further analysis and the accuracy of predictions depends on the quality of the data collected [10, 11]. In the beginning, it is necessary to determine which data sources will be most relevant for the study. These can be medical records, laboratory test results, patient lifestyle data, as well as information from wearable devices that monitor health:

• data collection after data sources have been identified should be systematic and standardized to ensure consistency and reproducibility of results;

• data quality verification, in particular, is critical to ensure that the data used is accurate, complete, and up-to-date. Incomplete or inaccurate data can lead to errors in prediction and analysis;

• Data normalization, as different sources may provide data in different formats, is necessary for their integration. It includes unifying measurement scales, converting data to a standard format, and resolving data incompatibility issues;

• handling missing values, as missing data is a common problem in health data. It is vital to identify techniques for handling these gaps, such as imputation based on existing data;

• identifying and handling outliers that may distort the results of analysis and prediction. It is essential to identify and adequately handle outliers so that they do not affect the overall conclusions;

• modelling based on the use of machine learning algorithms to develop predictive models. This process includes training, testing, and validating models on collected data;

• evaluating the model using metrics such as accuracy, sensitivity, specificity, and area under the ROC curve;

• iterating and optimizing to fine-tune parameters and select the best algorithms for specific data.

3. Material and Methods

3.1. Analysis of Statistics and Factors Influencing the Development of Diabetes Mellitus

Integrating AI into healthcare is a complex process that requires careful consideration of regulatory, technical, educational, and ethical aspects. Regulatory regulation plays a critical role in ensuring the safety and effectiveness of AI-based medical products. It includes certifying new technologies to ensure that they meet quality and safety standards. It is also necessary to ensure that AI-based systems meet all privacy and data confidentiality requirements established in the healthcare industry [12]. Technical integration requires compatibility of AI with existing IT systems in hospitals, which can be a challenge due to the heterogeneity and obsolescence of some systems. It includes integration with electronic medical records, laboratory systems, and portable medical devices. It is also essential to ensure a high level of data protection to prevent unauthorized access and other data integrity risks. Professional training of healthcare professionals is a necessity for practical work with new technologies. Doctors and nurses need to be provided with appropriate training to ensure they understand the capabilities of AI, as well as the skills to interpret and use the results that these systems offer. Healthcare professionals must be able to integrate this data into the clinical context and make informed decisions based on it [12]. In addition to the technical and educational aspects, it is also essential to consider the ethical aspects associated with the use of AI in clinical practice. These include issues of confidentiality, informed consent of patients, and the potential impact of algorithmic errors on the health and well-being of patients. Taking these aspects into account is key to building trust and acceptance of AI in medicine.

Healthcare is always a big issue for any nation, and it is always a challenging task. The best indicator of the health of a country is the condition of the residents living there. Improving the healthcare system can directly lead to economic growth as a healthy person can prove to be a great asset to the nation and can effectively function in the workforce compared to an unhealthy person. Healthcare is the unification and integration of all the measures that can be taken to improve the healthcare system. Healthcare is about prevention, diagnosis and treatment. Improving healthcare should be a top priority. Using technology to improve healthcare has proven to be very beneficial. In hospitals today, diagnosing diabetes involves conducting a range of medical tests to gather essential information, which then guides the selection of appropriate treatment. Big Data Analytics has become increasingly important in the healthcare sector, where vast amounts of patient data are generated and stored. By applying big data techniques, healthcare professionals can analyze extensive datasets to uncover valuable insights, detect hidden patterns, and extract meaningful knowledge that supports accurate predictions and informed decision-making. Machine learning, in particular, offers powerful tools for the prevention, early detection, and treatment of a wide range of diseases.

Machine learning and data processing technologies are the best sources for improving the healthcare system. Manual detection or diagnosis done by doctors is time-consuming and inaccurate. Machines that are built to learn to detect diseases through machine learning and data mining can diagnose the problem better, and that too with high accuracy. Machine learning can not only prove helpful in analysing and predicting the disease. Still, it can also be useful in personalized treatment and behaviour modification, drug development and discovery of new patterns leading to new drugs and treatments, and clinical trial research. With the help of machine learning, we can do all these in a better way. Machine learning is considered one of the most critical functions of artificial intelligence that supports the development of computer systems that can learn from past experiences without the need to be programmed for each case. Machine learning is considered to be a pressing need in today’s situation, with the aim of eliminating human efforts by supporting automation with minimal flaws. The existing method of detecting diabetes is the use of laboratory tests such as fasting blood glucose and oral glucose tolerance. However, this method is laborious. We live in an era where data is generated exponentially, leading to the accumulation of huge data. Especially in the healthcare industry, the availability of data is large, but the need to extract knowledge from it is also significant; otherwise, the collection of big healthcare data will be useless if it is not used. Data mining and machine learning help to find helpful information that can be further widely used. In the healthcare industry, data mining and machine learning can be used to improve patient care, best practices, effective patient treatment, fraud detection, and more accessible healthcare services. Data mining can also be used to detect a plague outbreak earlier (prediction) by observing the trends in the symptoms/complaints of patients. Different prediction models have been developed and implemented by other researchers using variants of data mining methods, machine learning algorithms or also a combination of these methods [13]. In a study [14], a system using Hadoop and Map Reduce methods was implemented to analyse diabetic data. This system predicts the type of diabetes as well as the associated risks. The system is Hadoop-based and is cost-effective for any healthcare organization. In a study [15], the author used a classification technique to learn hidden patterns in a diabetes dataset. This model used naive Bayes and decision trees. The performance of both algorithms was compared, and their effectiveness was demonstrated.

In a study [16], a classification technique was used. The authors used the C4.5 decision tree algorithm to find hidden patterns from the dataset for efficient classification. In the study [17], an artificial neural network (ANN) was used in combination with fuzzy logic to predict diabetes. In [18], a hybrid prediction model was proposed, which includes a simple K-means clustering algorithm followed by a classification algorithm based on the result obtained from the clustering algorithm. The C4.5 decision tree algorithm is used to build the classifiers. In [19], a model using the Random Forest Classifier was proposed to predict the behaviour of diabetes. In [20], the C4.5 decision tree algorithm, neural network, K-means clustering algorithm, and visualization were used to indicate diabetes.

We propose to consider predicting the probability of diagnosing diabetes in the early stages using an ensemble machine learning method – XGBoost implemented in the Python programming language.

Diabetes mellitus is a chronic metabolic disease characterized by elevated blood glucose levels due to absolute or relative insulin deficiency [21]. According to the International Diabetes Federation, as of 2022, 537 million adults aged 20–79 years are currently living with diabetes worldwide. It is expected that by 2030, their number will increase to 643 million [22]. The global nature of the problem of increased incidence of diabetes mellitus has formed a complex medical and social situation, which is acquiring the characteristics and nature of a pandemic. Given the global trend towards the detection and spread of diabetes mellitus, this trend is also observed in Ukraine. In particular, over the past decade and a half, we have observed an increase in the prevalence of this disease by more than fifty per cent, and the number of cases has increased by more than 80%. Diabetes treatment aims to help people with this disease achieve near-normal glycemic levels to reduce the risk of long-term (e.g. vascular) complications while avoiding acute metabolic risks and maintaining the best quality of life. There are different types and stages of the disease. For example, type 1 diabetes is caused by an autoimmune reaction in which the human body damages the cells that are responsible for insulin. It affects its insufficiency and disruption of the body's functions. The probable cause of the development of diabetes is a specific structure at the gene level. At the same time, environmental factors, viral infections and immune system disorders are triggers that stimulate the development of diabetes [23]. A key factor in achieving reasonable glycemic control is self-management of the condition. Individuals with diabetes should [24-27]:

-

• control carbohydrate intake through food choices, an adaptation of eating behaviour to glycemic load;

-

• adhere to the principles of healthy eating;

-

• manage blood glucose levels using glucose-lowering drugs;

-

• monitor sugar levels using traditional blood tests or computer systems with appropriate sensors;

-

• provide physical activity to optimize glycemia and control body weight;

-

• organize activities in accordance with current glycemia levels and treatment requirements recommended by doctors.

-

3.2. Analysis of Existing Types of Devices for Measuring Blood Sugar Levels

If rapid-acting insulin is used (to cover elevated glucose levels after meals), assessing carbohydrate load, adjusting insulin dose, and correcting elevated glucose levels are additional necessary practices of daily diabetes self-monitoring.

Ongoing or repeated episodes of high blood glucose (hyperglycemia) significantly increase the likelihood of developing serious long-term complications related to diabetes, such as diabetic retinopathy, neuropathy, and nephropathy, often accompanied by diabetic foot syndrome. Poor glycemic control is also linked to a heightened risk of acute metabolic events, including severe hypoglycemia and extreme hyperglycemia, which may lead to conditions like ketoacidosis or hyperosmolar coma [27–28]. Therefore, consistently engaging in effective self-management behaviours aimed at achieving stable blood glucose levels is essential for preserving health and minimizing the risk of complications and disease progression [29–30]. Nevertheless, research indicates that many individuals with diabetes have room for improvement in their self-care practices. It is especially relevant for patients who also experience mental health challenges, such as depression or diabetes-related emotional distress, which can further hinder effective self-management [31]. Given that self-care plays a central role in influencing diabetes outcomes, monitoring individual behaviours to identify gaps and offering targeted support may be a valuable addition to everyday clinical care. Evaluating self-management in individuals with diabetes is especially important when glycemic control remains consistently poor, as it helps to identify underlying issues and potential risks. Such assessments may also be necessary in research settings to explore factors that support improved diabetes care – such as psychosocial influences [32] – or to measure the effectiveness of specific interventions, such as diabetes self-management education programs. A key element in supporting self-management is the use of accessible and reliable tools for monitoring blood glucose levels. A systematic review of existing instruments for assessing diabetes self-care revealed a wide variety of tools developed for this purpose. However, many of these tools have been applied in only a small number of studies, and their psychometric properties have not been thoroughly tested. As a result, only a few available scales meet the rigorous standards recommended by experts. These issues limit the applicability of existing measurement tools. In 2013, the Diabetes Self-Management Questionnaire was introduced to provide a multifactorial assessment of diabetes behaviour, which plays a vital role in glycemic monitoring in the significant types of diabetes.

In direct comparisons, the DSMQ explained significantly more variation in glycemic control than the established standard self-management scale [33]. It has since been translated into multiple languages and used in many studies, confirming its potential value for research and practice. A recent systematic review identified the DSMQ as one of three diabetes self-management scales that meet the COSMIN guidelines for instruments that can be recommended for use and that produce results that are reliable [34-35]. However, technological innovations such as continuous glucose monitoring and automated insulin delivery have changed the timing and pathways of diabetes care [36-44]. In addition, an instrument that meets the requirements set by the organization [27] should better capture some specific aspects of self-management.

According to [32], in Ukraine, there is currently no possibility of building a comprehensive system for analysing and forecasting the trend of diabetes incidence since there are no organizational mechanisms and technical means for forming and processing statistics on the development of diabetes and mortality from this disease.

Regularly checking your blood glucose (sugar) levels is the only reliable method to determine whether your levels are within a healthy range. Most people cannot accurately sense their blood sugar levels based on physical symptoms alone, so proper testing is essential. It is achieved through various specialized devices.

-

• A compact device Glucometer that requires a small drop of blood, typically taken from the fingertip and placed on a test strip. The device then calculates the glucose concentration in the blood.

-

• The Flash Glucose Monitoring (FGM) method utilizes a sensor worn on the back of the upper arm. It continuously collects glucose data and transmits it to a dedicated reader or smartphone app, eliminating the need for finger pricks. It provides 24/7 monitoring, helping users track trends throughout the day and night.

-

• A continuous Glucose Monitoring (CGM) sensor is inserted under the skin to measure glucose levels continuously. CGM is particularly beneficial for individuals with unstable blood sugar control. The average annual cost, including sensors and maintenance, is approximately $5,000. For example, in Australia, the National Diabetes Services Scheme (NDSS) subsidizes CGM and FGM devices, as well as related diabetes care supplies like syringes, test strips, and insulin pump components.

-

• Ketone Testing is primarily recommended for individuals on insulin therapy. Ketone testing helps detect the presence of ketones, which can indicate severe metabolic conditions:

o Urine ketone strips change colour based on the ketone concentration in the urine.

o Blood ketone meters function similarly to glucometers, offering more precise measurements.

Glucose meters may malfunction or produce inaccurate results due to Device ageing, Exposure to moisture, heat, or dirt, Low or depleted batteries, Expired test strips, Incorrect meter calibration codes, Incompatible or improperly inserted test strips, Insufficient blood samples, Contaminants like sugar on fingertips before testing. To ensure reliable results, always follow the manufacturer’s instructions. Hands should be washed thoroughly with soap and water and then dried before testing to prevent contamination. Additional Considerations:

-

• CGM sensors must be replaced weekly and inserted in a new location on the body. Periodic cross-checks with traditional fingerstick methods are recommended to verify CGM accuracy.

-

• Flash glucose monitors should only be applied to clean, dry skin to ensure proper adhesion and performance.

-

• Replacement batteries for diabetes-related devices are typically available at electronics stores, but users should always confirm the correct battery type and installation method.

-

3.3. Program Operation Algorithm

Diabetes can be diagnosed if the fasting blood glucose level is 126 mg/dL or higher. A typical fasting glucose test result is below 100 mg/dL. One of the main goals of diabetes treatment is to maintain blood glucose levels within a given target range. More than 400 million people worldwide live with diabetes, and they still suffer from the inconvenience of pricking their fingers several times a day to check their blood glucose levels. Various methods alternative to the finger prick method have been widely studied for determining blood glucose levels, including enzymatic or optical glucose sensors. However, they still have problems in terms of durability, portability, and accuracy. In a study [34], the research team introduced semi-continuous and continuous blood sugar monitoring with low maintenance costs without the pain of blood sampling, allowing patients to maintain quality of life through proper diabetes treatment and control. It is expected that the use of CGMS will increase, which currently stands at only 5%. The research team also conducted both an intravenous glucose tolerance test (IVGTT) and an oral glucose tolerance test (OGTT) with the sensor implanted in pigs in a controlled environment. According to the research team [34], the results of the initial in vivo proof-of-concept experiment showed a promising correlation between blood sugar levels and the frequency response of the sensor. The sensor demonstrates the ability to track blood sugar trends, and for actual implantation of the sensor, biocompatible packaging and foreign body reactions for long-term use need to be considered. In addition, an improved sensor interface system is under development. It should be noted that devices designed for continuous monitoring of blood sugar levels are currently practically not used in Ukraine, and only 5% of this type of equipment is used in the world. This is due to their cost and convenience for the patient. However, two types of glucometers are prevalent: invasive and non-invasive.

A characteristic feature of non-invasive glucose measurement devices is that they do not damage the skin, and measurements can be performed more often than with traditional glucometers. However, the disadvantage of such devices is that the accuracy of their measurement can cause significant errors in the event of impaired blood supply, the presence of coarse skin or calluses, and the monitoring itself, which must be carried out up to seven times a day. The essence of the functioning of non-invasive glucose meters is the analysis of the state of blood vessels. That is, indirect measurements are not provided. In addition, several functional models calculate glucose concentration in the blood based on the analysis of the state of the skin. It is enough to ensure the device's contact with a part of the human body to do this. Optical methods use different properties of light to interact with glucose depending on its concentration. The transdermal method involves measuring glucose levels through the skin using electrical pulses or ultrasound. Finally, thermal methods aim to measure glucose levels by detecting physiological parameters related to metabolic heat generation. Transdermal methods are affected by environmental changes, such as temperature and sweat [33], while the main limitation of optical technologies is that they depend on the properties of the matter being tested, such as skin colour [34]. MIR light only extends a few micrometres and can be used to analyse a blood sample.

On the other hand, NIR light penetrates the biological environment deeper, up to several millimetres. NIR has the potential to be used for non-invasive or minimally invasive blood analysis, even if the glucose absorption is not as high as in the MIR region. The most common non-invasive methods are listed below. When using near-infrared spectroscopy, glucose gives one of the weakest absorption signals in this infrared range per unit concentration of the main component in the body. Measuring glucose levels using near-infrared spectroscopy allows for the investigation of tissue depths in the range of 1 to 100 millimetres, with a general decrease in penetration depth with increasing wavelength. Near-infrared radiation penetrates the earlobe, the web, and the cuticle of the fingers or is reflected from the skin.

Another technology for noninvasive blood glucose monitoring is a spectroscope, which measures the absorption of far-infrared (FIR) radiation. It is part of the natural thermal spectrum and, with the appropriate device, allows the measurement of FIR absorption, which is present in natural thermal radiation or body heat. FIR spectroscopy is the only type of radiation technology that does not require an external power source. Raman spectroscopy measures scattered light, which is affected by the oscillations and rotations of the scattered light. Various Raman methods have been tested for blood, water, serum, and plasma solutions. Analytical problems include instability of the laser wavelength and intensity, errors due to other chemicals in the tissue sample, and long spectral acquisition times. [23] Photoacoustic spectroscopy uses an optical beam to rapidly heat the sample and create an acoustic pressure wave that can be measured with a microphone. The methods are also subject to the chemical effects of biological molecules, as well as the physical effects of changes in temperature and pressure. Glucose in the blood is responsible for providing the body with energy. For spectrophotometric experiments, Lambert's law of absorption notation is used and developed to express the absorption of light as a function of the concentration of glucose in the blood.

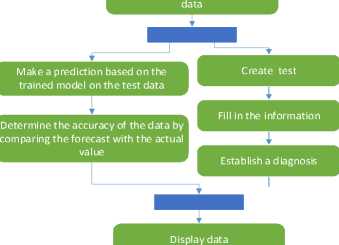

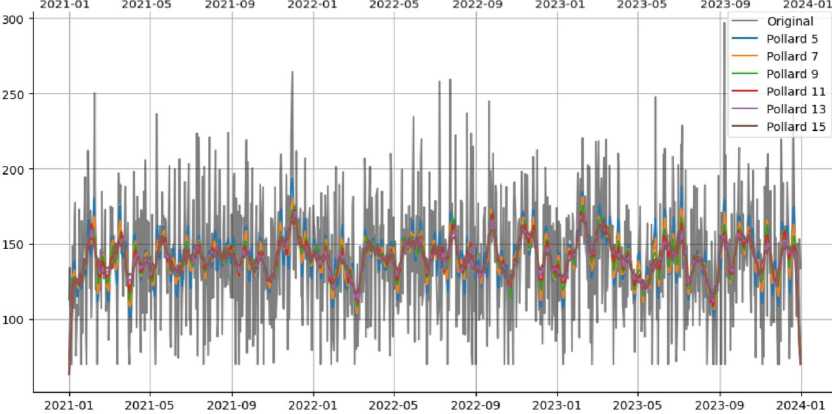

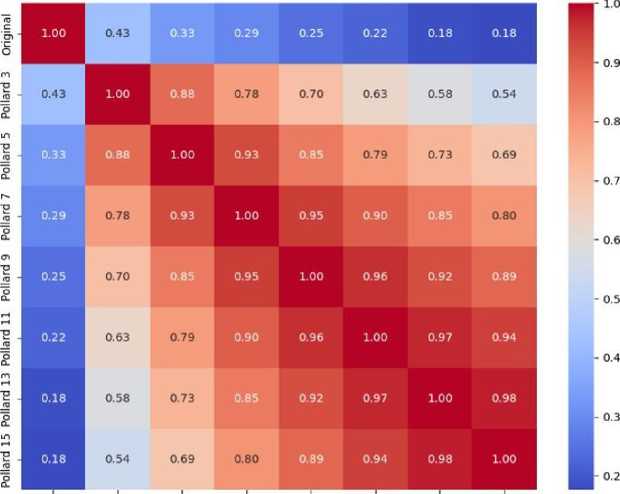

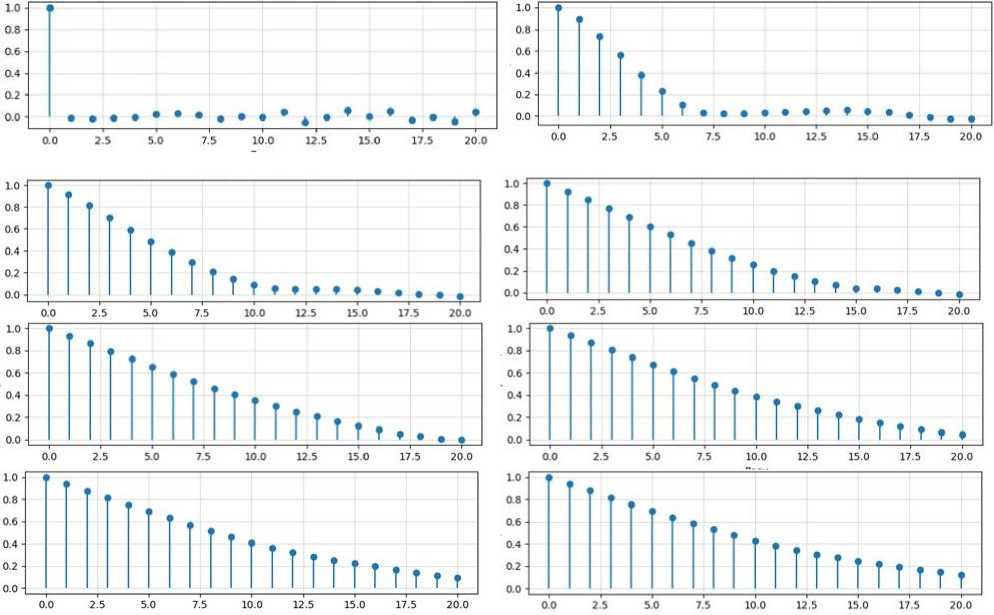

An algorithm is a set of instructions designed to perform a specific task. Therefore, the algorithm of the program operation is drawn up in the notation of an activity diagram (Fig. 1-2). According to this method, in order to conduct a preliminary diagnosis of diabetes in a person in the early stages, we need to run the software. After that, please work with the program itself, and the process of loading information from the database begins. The program analyses the information in the database, separating the columns into input (X - attributes) and output (Y - diagnosis) parameters. Next comes the process of dividing the data into training and test samples. Then, we create the XGBClassifier model and train it using training data. Now, we are ready to use the trained model to make a forecast using our test data. In order to determine the accuracy of the data, we compare our forecast with real values. In parallel with this process, we conduct testing.

Load information from the database

Separate columns into input and output parameters

Split data

Create an XGBClassifer model

Train the model using training

Fig.1. Activity diagram of the program's operation algorithm

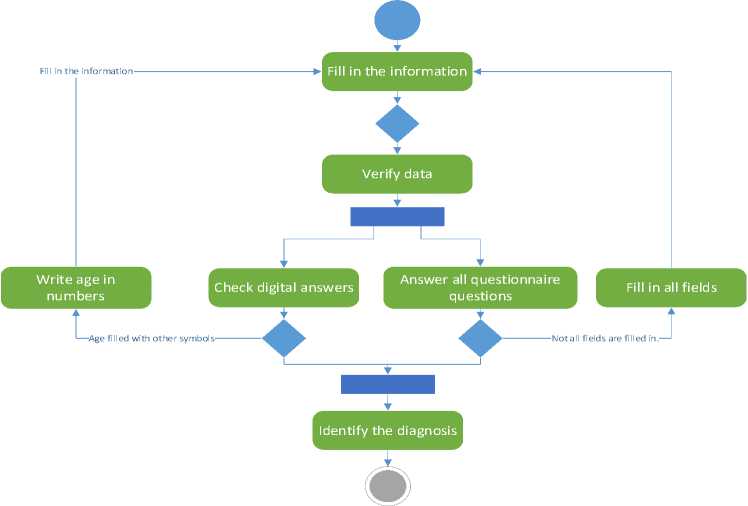

After opening the test, we fill in all the information necessary for making a diagnosis. This action can be seen in more detail in Fig. 2. After filling in the information, the data verification process begins. We check whether there are answers to all the questionnaire questions and whether the numerical answers are entered correctly. If not all the fields are filled in, then we fill in all the fields or the age is filled in with other symbols (age is the only numerical field in our program), then we write the age in numbers and return to the data verification process. If the data verification is successful, that is, we fill in all the fields and the age is filled in with numbers, our trained program makes a diagnosis. At the end, the diagnosis and the accuracy of our program data are displayed on the screen. It completes the method.

Fig.2. Activity diagram decomposition

Real-world pilot scenarios should be explored to evaluate the practical applicability of the proposed system in clinical settings. These may include integration with electronic medical records for continuous monitoring, deployment in family medicine clinics for primary screening, and patient-oriented mobile applications for self-assessment. Case studies in endocrinology clinics involving real patients can help validate the system's generalizability beyond the Pima Indian dataset and assess its behaviour with heterogeneous medical profiles.

Table 1. Real-world application scenarios and case studies for testing the system in a clinical setting

|

Name |

Script |

Purpose |

|

Primary screening in family medicine |

Family doctors use the system as a tool for pre-screening patients during annual check-ups. The patient enters basic clinical indicators (age, glucose level, BMI, blood pressure, etc.), and the system provides a probability of developing diabetes. |

Identification of high-risk individuals. Referral of such patients for in-depth diagnostics (HbA1c analysis, glucose tolerance test). |

|

Integration with electronic health records (EMRs) |

Integration of the model with medical information systems in hospitals or clinics. The algorithm automatically analyses the patient's data updates and signals to the doctor about the increased risk. |

Continuous monitoring of patients with prediabetic indicators. Warning the doctor about the need for preventive intervention. |

|

Mobile application for patients at risk |

Patients with obesity or a family history of diabetes install the app on their smartphone. They enter data on their own or through Bluetooth glucose meters/fitness trackers. The algorithm assesses the risk and provides recommendations. |

Raising awareness of one's condition. Self-monitoring without regular visits to a doctor. |

|

Case Study: Endocrinology Clinic |

To attract 100 new patients with suspected diabetes to the clinic. Compare the decisions made by the system with the diagnoses of doctors. Measure accuracy, recall, false negatives, and user trust. |

Discover how the model copes with different patient profiles. Determine how well it generalises outside of the Pima dataset. |

The data from the Pima dataset is limited to one demographic group (women of the Pima tribe). Therefore, testing in real conditions with diverse populations will help assess the generalisation of the model. An assessment of the system's immunity to noisy or incomplete data is required, which often happens in real medical practice. Integration into clinical processes is an essential step towards real implementation.

We will describe the possibility of integrating glucose monitors with an AI-based diabetes prediction system in terms of technical details about how hardware and software interact, data synchronisation methods, and challenges associated with real-time data collection.

-

• Types of glucose monitors are traditional devices (with manual reading) and CGM systems (continuous glucose monitoring). Conventional devices are plugged into a USB or Bluetooth data transmission. CGM systems are devices that automatically record glucose levels every 5-15 minutes (for example, FreeStyle Libre, Dexcom).

-

• The ways to transmit data to the AI system are Bluetooth Low Energy (BLE), Wi-Fi / GSM modules, USB or NFC readers. BLE is the most common protocol for transmission to mobile applications. Wi-Fi / GSM modules are used in more advanced devices for direct synchronisation with cloud databases. USB or NFC reading is used for periodic manual data transfer to a computer

-

• Application interfaces are REST APIs from manufacturers (for example, Dexcom API), SDKs, or drivers for local data exchange (for example, via a COM port or Bluetooth socket). Also, program-by-program interfaces include the use of an intermediate data collection module, which receives and processes incoming streams and then transmits them to the AI module.

Data synchronisation methods include timestamp alignment, buffering, transmission confirmation mechanisms, flushing, and notification queues. Timestamp alignment — each data record contains an exact measurement time; The model synchronises records over time. Buffering - if data arrives intermittently, it is temporarily stored in a buffer until the whole block is processed. Acknowledgement mechanisms are data delivery confirmations that allow retransmission in case of loss. Logging and message queues — for example, via MQTT, RabbitMQ, or Kafka for reliable delivery and real-time processing.

Table 2. Potential problems with real-time data collection

|

Problem |

Description |

Potential consequence |

|

Connection loss |

Disappearing Bluetooth/Wi-Fi connection |

Skipping measurements, incomplete data |

|

Latency |

Data arrives with a delay |

Untimely decision-making |

|

Anomalies/Outliers |

Incorrect or noisy glucose values |

False classification of the condition |

|

Dependence on the device's battery |

Turning off the device without warning |

Monitoring interruption |

|

Format incompatibility |

Data from different devices has a different structure |

The need for unification or conversion |

|

Privacy and security |

Transfer of medical data without encryption |

Risk of personal information leakage |

In the future, the system can be integrated with hardware glucose monitors, which will allow you to receive data in real time. Such integration involves the use of data transfer protocols (for example, Bluetooth LE or REST API), as well as intermediate modules for buffering and synchronising information with the AI software classifier. However, when working in real time, you should take into account potential risks such as connection loss, transfer delays, data outages, or privacy threats. For the stable operation of the system, it is necessary to implement mechanisms for retransmission, anomaly filtering, and ensuring the protection of medical data during transmission and storage.

Let us consider the financial aspects of the implementation of the diabetes prediction system, although this is an essential factor in the real implementation in medical practice. Below is a detailed analysis of the cost and resource requirements that should be considered when implementing such a system in a clinical setting.

Analysis of the cost of implementing a diabetes prediction system

-

• Hardware is continuous glucose monitors (CGMs), in particular, devices:

— FreeStyle Libre 2 / 3 (Abbott) — ≈ 80–150 USD / sensor (for 14 days);

- Dexcom G6 / G7 - ≈ 300-400 USD / starter kit, then 150-300 USD / month;

— Scanners/receivers — additional 100-200 USD (or using smartphones with NFC/Bluetooth).

The problem is the high regular cost for clinics with a large number of patients. Not all patients can afford the constant use of CGM at home. It is also necessary to certify the devices according to medical requirements.

-

• Computational resources for an AI model are based on model training. XGBoost, as a rule, does not require high computing power, but when working with larger sets (for example, >100 thousand records), there may be a need for GPU or cloud resources. The cost of using a GPU (e.g., on Google Cloud or AWS) is ≈ $0.50–$1.00/hour (GPU) and ≈ $0.10–$0.30/hour (CPU nodes). It can be implemented even on a regular server or a powerful laptop. Still, when processing data in real time (for example, from CGM), it is desirable to have a remote server or cloud infrastructure.

-

• Software infrastructure and support include:

— Cloud environment (AWS, Azure, GCP): ≈ 10-50 USD/month for the basic configuration;

- Patient databases (secure storage): ≈ 0.01–0.05 USD/GB/month;

— Integration with EMR (electronic medical records): requires separate APIs and legal compliance (HIPAA, GDPR).

-

• Additional costs are incurred for technical support, staff training and licensing/certification. In particular, for technical support, a system administrator or an IT specialist on the clinic staff is required. After training, doctors must understand how to interpret the results of the model. If the system will be used for clinical diagnostics, medical certification (CE, FDA, etc.) is required.

-

3.4. Overview of Selected Datasets

Table 3. Potential barriers to implementation in medical institutions

|

Barrier |

Explanation |

|

High cost of CGM |

Especially for chronic monitoring or mass adoption |

|

Lack of infrastructure |

Not all establishments have servers or stable Internet |

|

Misunderstanding of AI Approaches |

Distrust or misunderstanding on the part of doctors |

|

Data privacy |

The need to comply with medical data protection standards |

One of the critical aspects that has not been addressed in this study is the cost of the practical implementation of the proposed system. Continuous glucose monitors (CGMs), which could provide real-world inputs to the model, have a relatively high cost for both patients and healthcare facilities. In addition, the implementation of the software part — including real-time data processing, storage of results, and interpretation of model conclusions — requires the availability of cloud infrastructure, secure databases, and technical personnel. These factors can become a serious obstacle to scaling the system in real clinical practice, especially in public institutions or countries with limited healthcare funding.

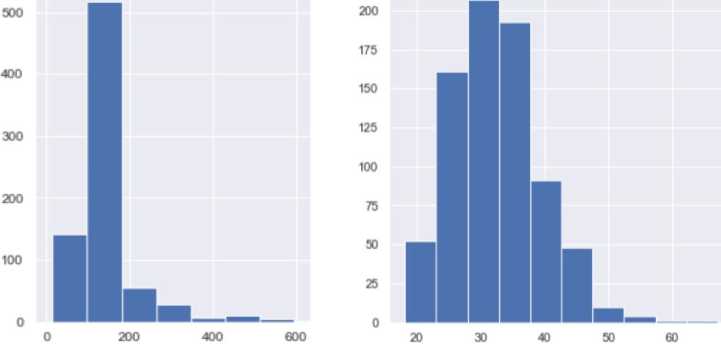

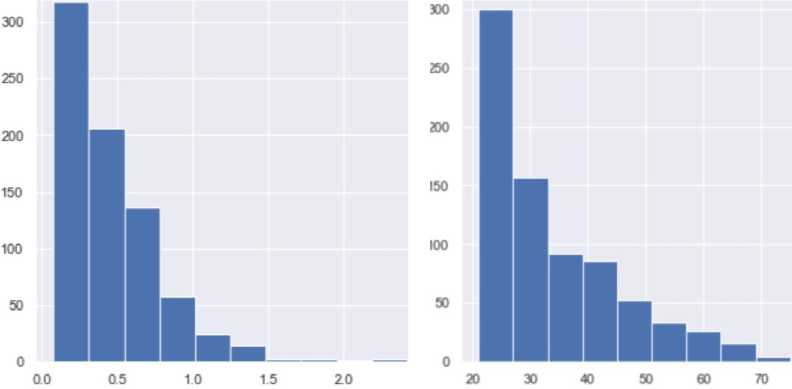

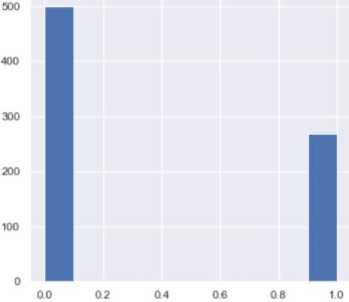

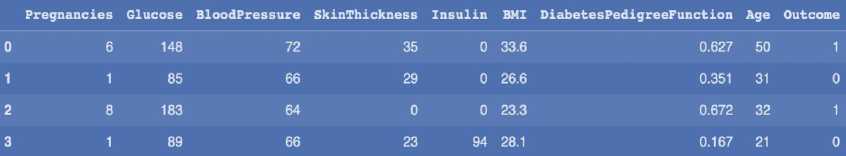

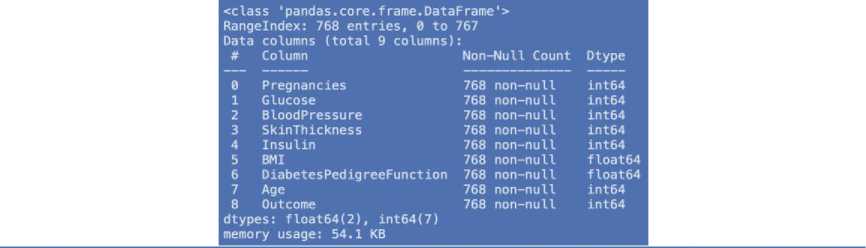

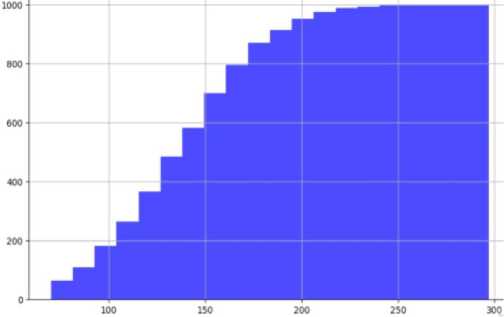

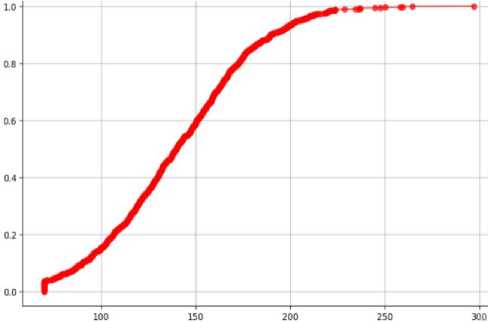

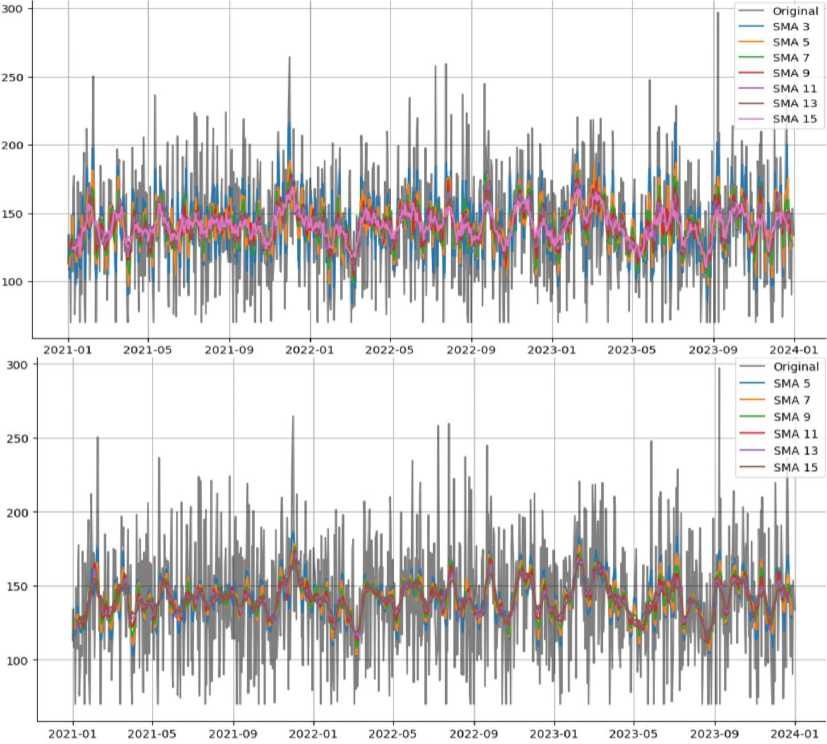

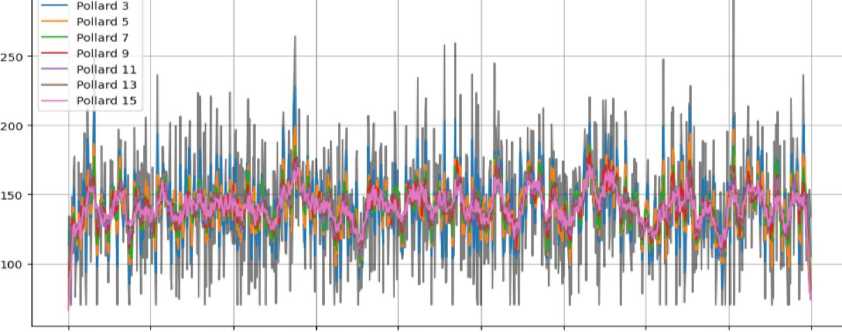

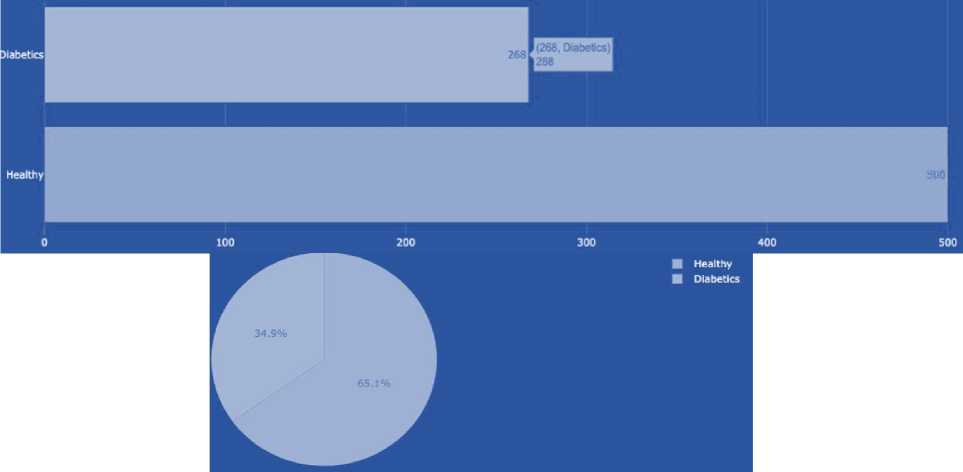

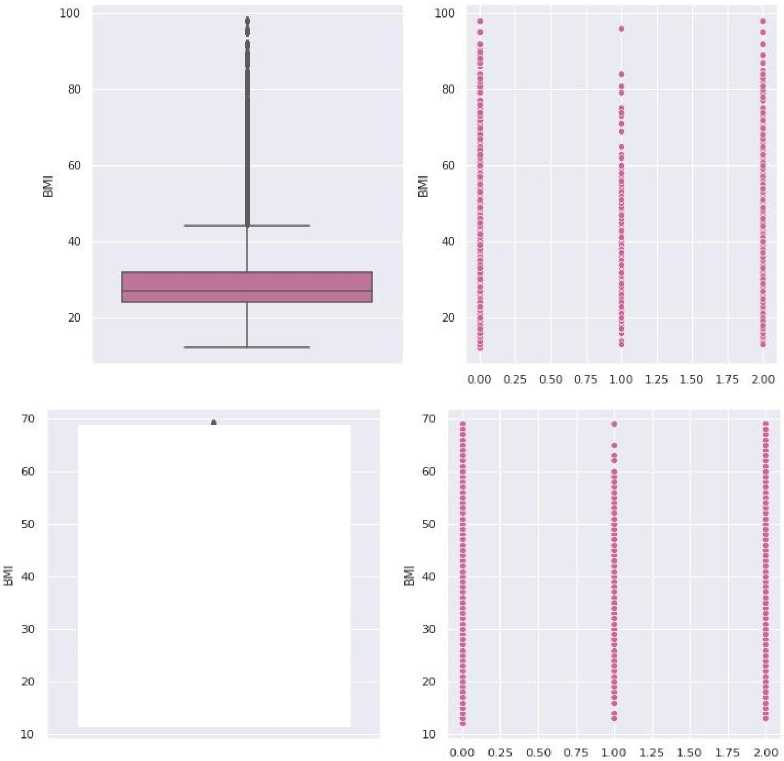

This section describes the key aspects of the dataset used to train and evaluate the model. The choice of dataset is determined by its representativeness, size, and relevance to the task at hand. Today, you can find a variety of datasets on the Internet that can be used to train machine learning models. The best resource is Kaggle, which not only allows you to access a number of tools and resources for research, study, and practice in the areas of data analysis and machine learning model development but also provides an excellent opportunity to participate in data science competitions, where participants from all over the world compete in solving real-world data collection and analysis problems. We have selected two datasets that we believe are best suited for training the model.

Table 4. Dataset characteristics

|

Data set |

Number of characteristics |

Number of records |

|

1 |

9 |

768 |

|

2 |

9 |

100,000 |

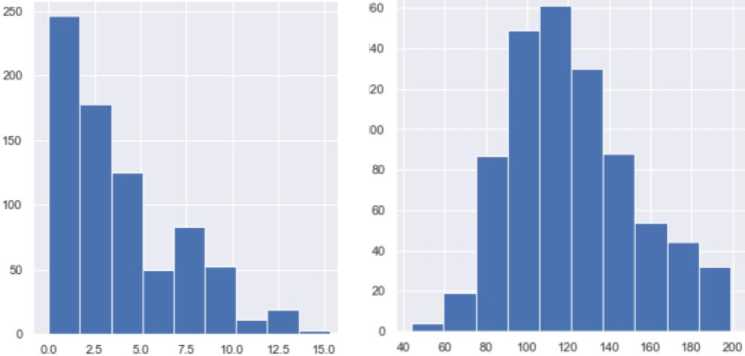

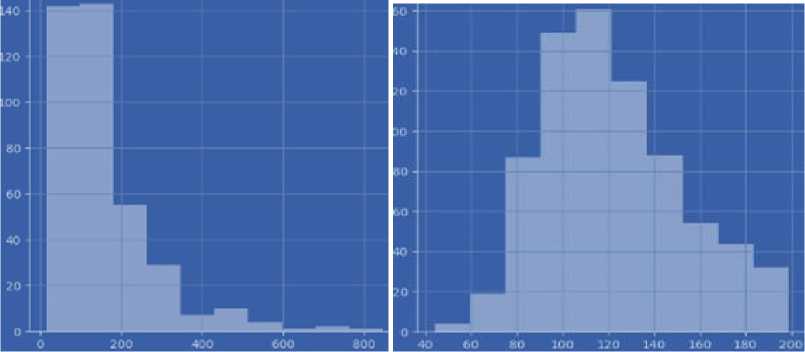

The dataset contains attributes related to women's health. It includes the following attributes:

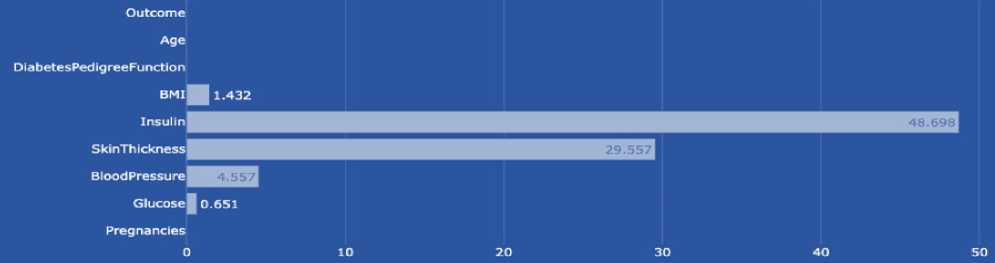

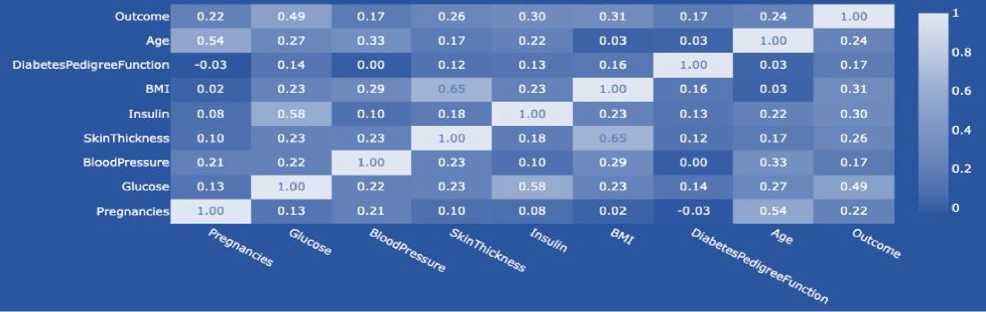

Pregnancies (number of pregnancies) affect the risk of developing diabetes, particularly gestational diabetes. Gestational diabetes is caused by insufficient insulin production or ineffective use of insulin by the body during pregnancy. This condition usually develops in the second trimester of pregnancy and may improve after delivery, but it can also increase the risk of developing type 2 diabetes later in life. The data type is integer. Thus, the frequency of pregnancies may be a risk factor through several mechanisms:

-

• Increased workload on the pancreas, particularly each new pregnancy, creates additional workload on the pancreas, which secretes insulin. The increased insulin load leads to exhaustion of the pancreatic beta cells.

-

• Metabolic changes, such as hormonal and metabolic changes, occur with every pregnancy. These changes affect the sensitivity of cells to insulin and may determine the development of insulin resistance.

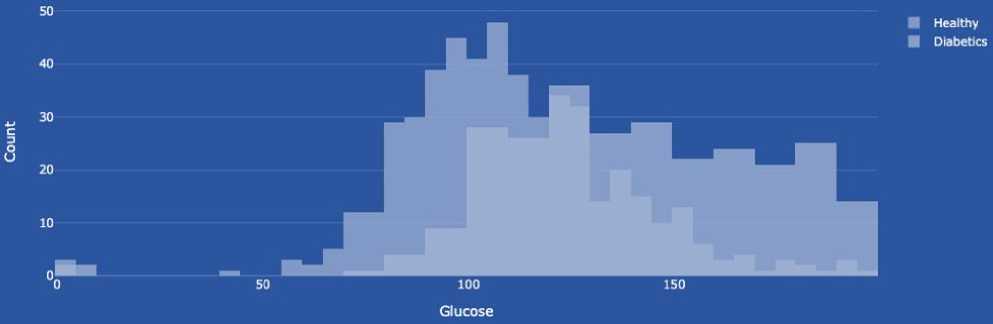

Glucose (blood glucose level) shows the amount of sugar (glucose) in the blood at a given time. Glucose is the primary source of energy for the body's cells, and its regulation in the blood is essential for the body to function correctly. Blood glucose levels are measured in milligrams per deciliter (mg/dL) or millimoles per litre (mmol/L). Normal levels can vary depending on a number of factors, including the time of day and the time since you last ate. Changes in blood glucose levels can indicate a number of conditions, including diabetes, prediabetes, hyperglycemia (high glucose levels), and hypoglycemia (low glucose levels). Healthcare professionals can determine how high and low blood glucose levels are affecting your health and develop a strategy to treat or control these conditions. The data type is integer.

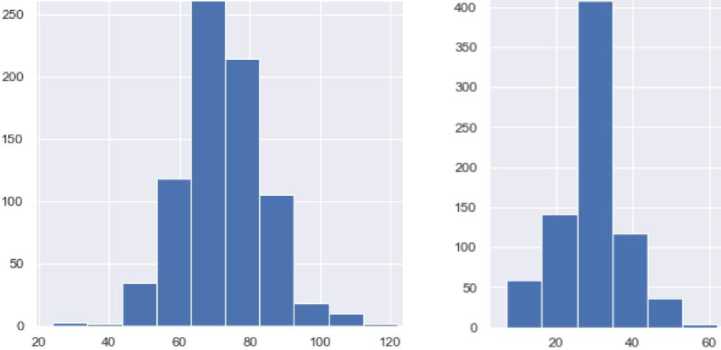

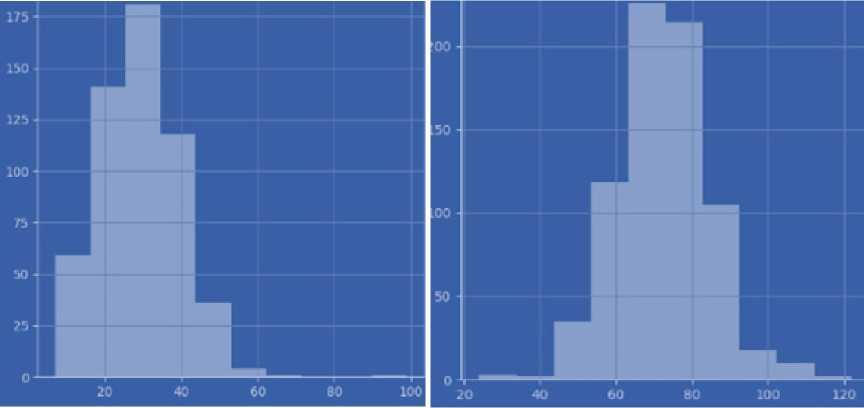

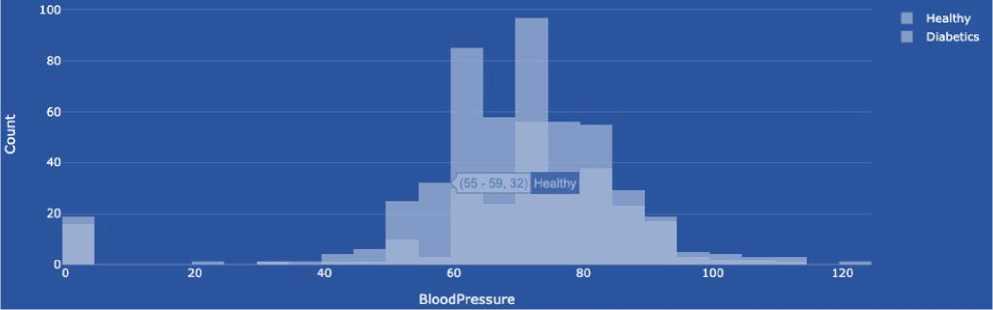

There are two types of Blood pressure. In our case, we use diastolic pressure (the lower number). Blood pressure is an essential indicator of the functioning of the circulatory system. It indicates the pressure exerted on the walls of the arteries by the blood circulating in the vessels. This pressure is determined by two numbers measured in millimetres of mercury (mm Hg). Systolic blood pressure (the higher number): reflects the maximum blood pressure in the arteries during the contraction of the heart, when blood is ejected into the vessels. Diastolic blood pressure (the lower number) reflects the lowest pressure in the arteries when the heart relaxes between heart contractions. For example, "120/80 mm Hg" means that the systolic pressure is 120 mm Hg and the diastolic pressure is 80 mm Hg. It is believed that normal blood pressure in adults is around 120/80 mm Hg. Changes in these values can indicate a number of conditions, including high blood pressure (hypertension), low blood pressure (hypotension), or other blood pressure problems that affect the cardiovascular system and overall health. Blood pressure assessment is an integral part of heart health and is considered in the diagnosis and treatment of cardiovascular disease. A data type is an integer.

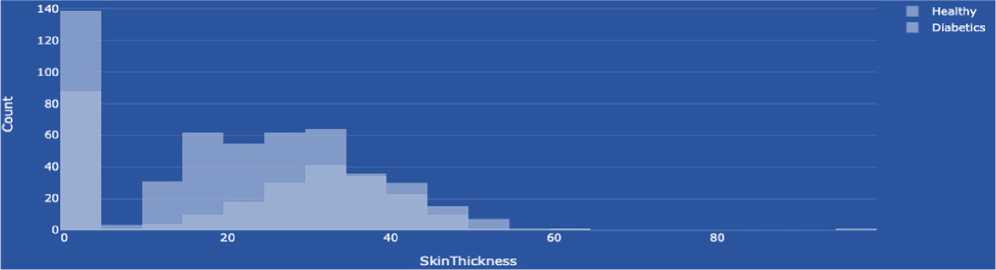

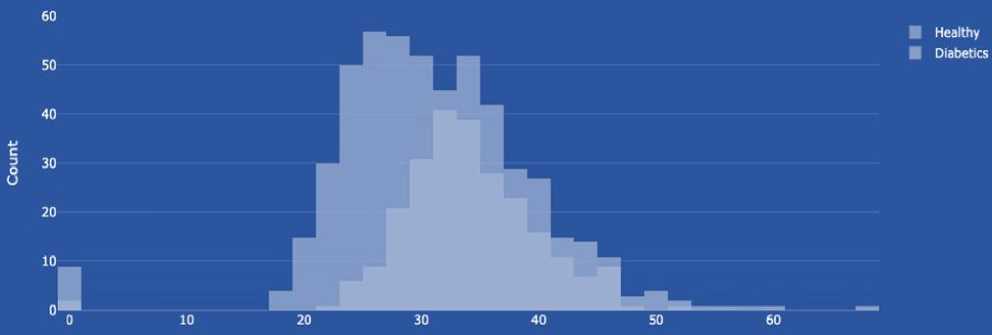

Skin thickness is commonly measured in specific dimensions and can be used as one of many characteristics to determine diabetes risk and prognosis. However, it is not a direct indicator of diabetes in itself. There are several rationales for using skin thickness in the context of diabetes research. For example, the thickness of subcutaneous fat may be associated with insulin resistance, an essential factor in the development of type 2 diabetes. Insulin resistance means that the body's cells are less sensitive to insulin, which leads to increased blood glucose levels. However, skin thickness alone is not an accurate and reliable indicator of diabetes, and its use may be limited. The diagnosis of diabetes is usually made using specific tests, such as blood glucose levels and other biomarkers. Therefore, although skin thickness can be included in risk analysis and studies of the characteristics of people at high risk of diabetes, it cannot be the sole criterion for detecting diabetes. The data type is integer.

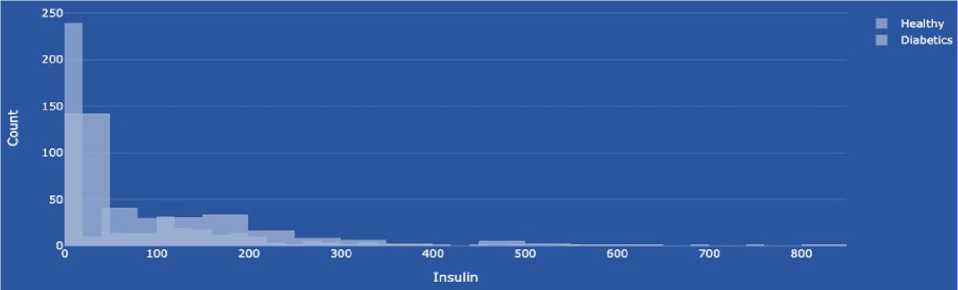

Insulin (insulin blood level) is a hormone secreted by the beta cells of the pancreas, an organ located behind the stomach, and is essential for regulating blood sugar (glucose) levels. The primary role of insulin is to regulate metabolism, especially that of carbohydrates. The data type is integer. The main functions of insulin in the body are:

-

• Lowering blood glucose levels, i.e. insulin, helps cells absorb glucose from the blood and reduces the concentration of glucose in the blood.

-

• Storing glucose in the form of glycogen, i.e. insulin, promotes the formation of glycogen - a form of glucose storage in the liver and muscles.

-

• Stimulating protein synthesis, i.e. insulin promotes the release of intracellular amino acids and facilitates protein synthesis.

-

• Accumulating fat, in particular, hormones, promotes the formation and accumulation of fat in cells.

-

• Inhibiting the breakdown of glycogen and fats, i.e. insulin maintains stable energy levels by inhibiting the breakdown of glycogen and fats.

-

• Beta-cell dysfunction and cellular insulin resistance can lead to metabolic disorders, including the development of type 2 diabetes, in which the body cannot use insulin effectively or cannot secrete enough insulin.

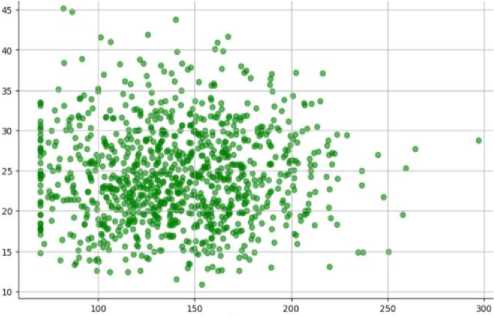

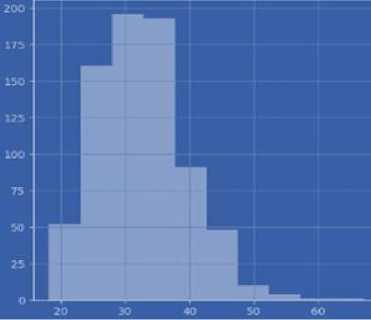

BMI (body mass index or weight-for-height ratio). Individuals with the highest BMI (mean 34.5 kg/m²) had an 11fold increased risk of developing diabetes compared to participants with the lowest BMI (mean 21.7 kg/m²). The group with the highest BMI had a higher probability of developing diabetes compared to all other BMI groups, regardless of genetic risk. Data type: floating point number.