Investigating into Automated Test Patterns in Erratic Tests by Considering Complex Objects

Authors: Akram Hedayati, Maryam Ebrahimzadeh, Amir Abbaszadeh Sori

Journal: International Journal of Information Technology and Computer Science (IJITCS) @ijitcs

Issue: Vol. 7, No. 3, 2015.

Open access

Software testing is an important activity in the software development life cycle. Testing involves running a program on a set of test cases and comparing the observed results with the expected results. Automated testing encompasses all automation efforts across the software testing life cycle, with a focus on automating system and integration testing. Automated testing brings many benefits; its three main advantages are faster test runs, a more accurate testing process, and lower costs in different parts of the system. Maintaining and developing test automation tools is not as easy as traditional testing, because of unexplored issues that need further examination. Automated test patterns have been proposed to mitigate some of the problems caused by automated testing and to improve efficiency. This paper investigates automated testing and automated test patterns, and demonstrates the behaviour of applying an automated test pattern to a complex object. The results show that, when choosing an automated test pattern, we should consider the test structure, especially the complexity of the test object; otherwise inconsistency may occur.

Test, Erratic Test, Fixture, Fixture Fresh Pattern, Automated Testing, Automated Test Pattern

Short URL: https://sciup.org/15012263

IDR: 15012263

Text of the scientific article: Investigating into Automated Test Patterns in Erratic Tests by Considering Complex Objects

Published Online February 2015 in MECS


E. Slow Test

Slow tests are tests that take a long time to run. This explains why test developers do not execute the tests every time they change the SUT. Slow tests reduce productivity and impose many explicit and implicit costs on the system and the project [17]. The cause of a slow test may lie either in the way the SUT is built or in the way the tests are coded. The main root cause is that many tests interact with a database, writing to it or reading from it in order to set up a fixture or verify results. Tests that run against such slow components take about 50 times longer than the same tests run against in-memory data structures. A possible solution is to replace the slow components with a test double [17].

Another factor that makes tests slow is a general fixture. When a fresh fixture is built each time, every test constructs a large general fixture that includes many more objects than it needs, so construction takes longer than usual. To reduce this time and avoid rebuilding the fixture for each test, we can use the general fixture as a shared fixture. Unless we make this shared fixture immutable, however, it is likely to lead to erratic tests, and so it should be avoided. Another way is to reduce the amount of fixture set up by each test.

Tests may also need long delays to ensure consistency and synchronization between the underlying processes or threads of the system as they launch, run and are verified. At large scale, these waiting times in each test significantly slow down overall execution. To address this problem, we may have to avoid asynchronicity in tests by testing the logic synchronously.

Slow tests also arise when there are simply too many tests to run, regardless of how fast each one executes, and many of them may overlap. To handle this case we can, if possible, break the system into a number of fairly independent subsystems or components, each with a subset suite containing a suitable cross-section of tests, run on a logical schedule [17].
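As a rough illustration of the test double remedy described above, the following sketch swaps a slow, database-like store for an in-memory one with the same interface. All class and function names are hypothetical, and the slow store only simulates latency with a sleep; it is not a measurement of any real database and does not reproduce the 50x figure.

```python
import time


class SlowDatabaseProcessStore:
    """Hypothetical stand-in for a database-backed component."""

    def __init__(self, latency_seconds=0.05):
        self.latency_seconds = latency_seconds
        self._rows = {}

    def save(self, name, state):
        time.sleep(self.latency_seconds)  # simulated round trip to the database
        self._rows[name] = state

    def load(self, name):
        time.sleep(self.latency_seconds)  # simulated round trip to the database
        return self._rows[name]


class InMemoryProcessStore:
    """Test double: same interface, backed by a plain dict."""

    def __init__(self):
        self._rows = {}

    def save(self, name, state):
        self._rows[name] = state

    def load(self, name):
        return self._rows[name]


def start_process(store, name):
    """System under test: depends only on the store interface."""
    store.save(name, "running")
    return store.load(name)


def timed(store):
    """Run the SUT once against the given store and report the elapsed time."""
    begin = time.perf_counter()
    outcome = start_process(store, "process1")
    return outcome, time.perf_counter() - begin


slow_outcome, slow_seconds = timed(SlowDatabaseProcessStore())
fast_outcome, fast_seconds = timed(InMemoryProcessStore())
```

Both runs verify the same logic, but only the first pays the simulated database latency on every call, which is the cost a test double is meant to remove.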


F. Erratic Test

The results of some tests depend on factors such as who runs them or when they are run, so the same test may pass on one run and fail on another. Such tests, which give different results when run several times, behave erratically. Their results are affected by external factors, including the environment, who is running them and when they are run. It may seem logical to remove the failing tests from the test suite, but this leads to lost tests. On the other hand, keeping the erratic tests may obscure other problems that the same tests are expected to detect, make errors harder to resolve, or even cause more failures than expected [17]. Erratic tests have many possible causes, which makes them hard to troubleshoot. Interacting tests, unrepeatable tests, test run wars, nondeterministic tests and resource optimism are some of the causes of erratic tests.

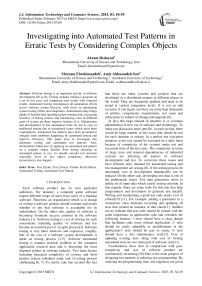

Interaction among tests may cause erratic tests. If many tests run in sequence and use the same objects, the failure of even one test can produce consecutive failures, or cascading errors, in other tests for no evident reason, because those tests depend on the side effects of other tests [17]. As Fig. 1 shows, in the execution of a sequence of tests, the failure of test 2 may leave processobject1 in a state that causes test n to fail.

An unrepeatable test is a test whose result on the first run differs from its results on subsequent runs. The runs affect each other's results to some extent [17].
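The two causes described so far can be reproduced in a few lines. The sketch below is only illustrative: `ProcessObject` and the two check functions are hypothetical names, and the checks are plain functions rather than tests from any particular framework.

```python
class ProcessObject:
    """Hypothetical object shared by several tests."""

    def __init__(self):
        self.subsystems = []


def check_add_subsystem(obj):
    """Passes only if it is the first check to add a subsystem."""
    obj.subsystems.append("subsystem-a")
    return len(obj.subsystems) == 1


def check_starts_empty(obj):
    """Passes only if no earlier check left data behind."""
    return obj.subsystems == []


def run_in_order(checks):
    shared = ProcessObject()  # one object reused by the whole sequence
    return [check(shared) for check in checks]


# Interacting tests: the same two checks, run in two different orders.
empty_first = run_in_order([check_starts_empty, check_add_subsystem])
add_first = run_in_order([check_add_subsystem, check_starts_empty])

# Unrepeatable test: the same check run twice against the same object.
leftover = ProcessObject()
first_run = check_add_subsystem(leftover)
second_run = check_add_subsystem(leftover)
```

With the empty check first, both checks pass; with the order reversed, the empty check fails because of the other check's side effect. Running `check_add_subsystem` a second time on the leftover object fails for the same reason.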

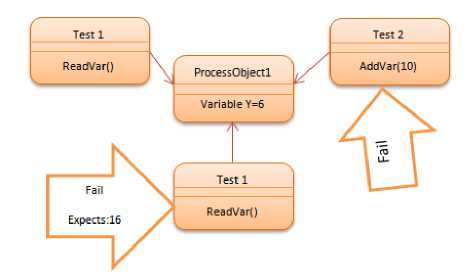

The next cause is the test run war. If many test runners use a shared external resource such as a database, an object or a file, random results may occur; we call this a test run war [17]. As Fig. 2 shows, while many parallel test runners are using processobject1, test 1 may fail whereas test 2 passes at the same time.
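A test run war is hard to reproduce on demand because it depends on timing. The sketch below therefore does not use real threads; it assumes a simplified model in which each test run is a generator whose steps are interleaved by an explicit schedule. The names `runner` and `run_schedule` and the list standing in for a shared table are all hypothetical.

```python
def runner(table, name):
    """One test run, written as a generator so its steps can be interleaved."""
    table.clear()            # fixture setup: start from an empty table
    yield
    table.append(name)       # exercise: insert one row
    yield
    yield len(table) == 1    # verify: expects to see exactly its own row


def run_schedule(schedule):
    """Advance runners 'A' and 'B' one step at a time in the given order."""
    table = []  # the shared external resource
    runners = {"A": runner(table, "A"), "B": runner(table, "B")}
    results = {}
    for who in schedule:
        value = next(runners[who])
        if value is not None:  # only the final step yields a verdict
            results[who] = value
    return results


sequential = run_schedule("AAABBB")   # runs do not overlap
interleaved = run_schedule("AABABB")  # B's setup wipes A's row before A verifies
```

When the runs do not overlap, both pass. In the interleaved schedule, runner A fails while runner B passes, although neither test changed.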

Nondeterministic tests are tests that depend on nondeterministic inputs. They pass at some times but fail when run at other times. This is due to a lack of control over date and time [17].
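One common way to regain control over date and time is to pass the clock in as a parameter instead of reading it inside the test. The sketch below assumes a hypothetical `greeting` function as the code under test; the fixed timestamp is arbitrary.

```python
from datetime import datetime


def greeting(now):
    """Code under test: the result depends on the time of day."""
    return "Good morning" if now.hour < 12 else "Good afternoon"


def nondeterministic_check():
    # Reads the wall clock, so it passes only when run before noon.
    return greeting(datetime.now()) == "Good morning"


def deterministic_check(clock=lambda: datetime(2015, 2, 1, 9, 0)):
    # The clock is injected, so the input is the same on every run.
    return greeting(clock()) == "Good morning"


morning_ok = deterministic_check()
afternoon_text = greeting(datetime(2015, 2, 1, 15, 0))
```

The first check can pass or fail depending on when it is run, while the second gives the same result on every run.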

Resource optimism describes tests whose results depend on when or where they are run, so their results are nondeterministic. Such tests pass or fail depending on external resources that are not available everywhere [17].

Fig. 1. Interacting tests: cascading errors

Fig. 2. Test run war: run-time behaviour of executing multiple tests at the same time


IV. Related Works

G. Meszaros is an expert in agile software development, specifically in automated test patterns and test code design. He has devised effective mechanisms, in the form of a test automation framework and automated test patterns, that make tests easier to write and run and so help achieve the benefits of automated testing [17] [11]. The shared test fixture pattern and the fresh fixture pattern are two automated test patterns used to solve the stated problems of slow tests and erratic tests respectively [17]. We describe these patterns below.


A. Shared Test Fixture

To run an automated test, we need a test fixture that is completely deterministic and well understood. As explained before, setting up a fresh fixture may take longer than usual, especially when we are dealing with complex system state stored in a test database.

In this pattern a standard fixture is created. A shared fixture is a fixture that is created by one test and reused by other tests. It can also be a prebuilt fixture that is reused by one or several tests over many test runs. The fixture therefore outlives a single testcase object. In this way, many tests reuse the same standard test fixture without tearing it down and recreating it. By reducing fixture setup overhead, the shared test fixture pattern helps to improve the run times of slow tests [17]. On the other hand, if the results of a test depend on the outcomes of other tests, a shared test fixture brings about interaction among tests that may cause erratic tests. We should also consider that a shared fixture, in order to serve all tests, is bound to be more complicated than the minimal fixture needed for a single test. This greater complexity causes another type of test problem, the fragile fixture. Other disadvantages of this pattern are elaborated in [17].
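A minimal sketch of a shared fixture, written with Python's `unittest` as one member of the xUnit family, might look as follows. `StandardSystem` and the test names are hypothetical; the test case is defined inside a function only so that the example controls when the tests run.

```python
import unittest


class StandardSystem:
    """Hypothetical stand-in for an expensive standard fixture."""

    builds = 0  # counts how many times the fixture was constructed

    def __init__(self):
        StandardSystem.builds += 1
        self.processes = ["process1", "process2"]


def make_shared_fixture_tests():
    class SharedFixtureTests(unittest.TestCase):
        @classmethod
        def setUpClass(cls):
            cls.system = StandardSystem()  # built once, reused by every test

        def test_1_lists_two_processes(self):
            self.assertEqual(len(self.system.processes), 2)

        def test_2_adds_a_process(self):
            self.system.processes.append("process3")  # mutates the shared fixture
            self.assertEqual(len(self.system.processes), 3)

        def test_3_lists_two_processes(self):
            # Same check as test_1, but it now sees test_2's side effect.
            self.assertEqual(len(self.system.processes), 2)

    return SharedFixtureTests


shared_suite = unittest.defaultTestLoader.loadTestsFromTestCase(
    make_shared_fixture_tests()
)
shared_result = unittest.TestResult()
shared_suite.run(shared_result)
```

The fixture is built once for three tests, which is the time saving the pattern aims for. The price is that the third test fails although it is identical to the first, because the second test changed the shared fixture.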


B. Fixture Fresh Pattern

The fixture fresh pattern is used to avoid erratic tests. Every test needs a test fixture, which defines the state of the test runtime environment before the test runs. Deciding whether to use a prebuilt fixture or create a new one is one of the key test automation decisions. In this approach, a fixture is used by only a single run of a single test and is torn down when the test finishes. Hence, tests are completely independent: fixtures left over by other tests or test runs are never reused. According to [17], the fixture fresh pattern is the right choice whenever we want to avoid interdependencies among tests. In the next section, however, we describe a condition under which the pattern cannot be used to advantage.
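The per-test lifecycle described above can be sketched with `setUp` and `tearDown` in Python's `unittest`. As before, `StandardSystem` and the test names are hypothetical, and the test case is wrapped in a function only so that the example decides when it runs.

```python
import unittest


class StandardSystem:
    """Hypothetical stand-in for the fixture each test needs."""

    builds = 0  # counts how many times the fixture was constructed

    def __init__(self):
        StandardSystem.builds += 1
        self.processes = ["process1", "process2"]


def make_fresh_fixture_tests():
    class FreshFixtureTests(unittest.TestCase):
        def setUp(self):
            self.system = StandardSystem()  # a new fixture for every test

        def tearDown(self):
            self.system = None  # the fixture is discarded after every test

        def test_1_lists_two_processes(self):
            self.assertEqual(len(self.system.processes), 2)

        def test_2_adds_a_process(self):
            self.system.processes.append("process3")
            self.assertEqual(len(self.system.processes), 3)

        def test_3_lists_two_processes(self):
            # Unaffected by test_2, which changed only its own fixture.
            self.assertEqual(len(self.system.processes), 2)

    return FreshFixtureTests


fresh_suite = unittest.defaultTestLoader.loadTestsFromTestCase(
    make_fresh_fixture_tests()
)
fresh_result = unittest.TestResult()
fresh_suite.run(fresh_result)
```

All three tests pass in any order, but the fixture is constructed three times, once per test. That repeated setup cost is what becomes significant when the fixture is expensive to build.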


V. Research Methodology

In the previous sections we went through different kinds of tests, such as slow tests and erratic tests, and explained how automated test strategy patterns mitigate their effects. Previous studies state that we may use the fresh fixture pattern whenever we want to avoid interdependencies between tests, and mention no other limiting condition. In this section we add a significant criterion for deciding whether to use the fresh fixture pattern. According to our findings, if the slow and complex tests among the erratic tests cannot be modified to use simple objects or smaller code, applying the fresh fixture pattern may result in interacting tests, test run wars or the other issues we encountered with erratic tests, and consequently the tests fail. Based on these observations, we claim that the structure of the test code is a determining factor in choosing a pattern.

Here we establish the validity of our claim using reductio ad absurdum, a proof method in mathematical logic. The proof starts from a set of true statements, or premises, built upon axioms or theories; we denote the premises by s and the proposition under consideration by p.

$$\text{If } s \cup \{p\} \vdash f \text{ then } s \vdash \neg p \tag{1}$$

$$\text{If } s \cup \{\neg p\} \vdash f \text{ then } s \vdash p \tag{2}$$

Following rule (1) or rule (2), we add p, or the negation of p, to s. If, on further examination, the resulting set leads to a logical contradiction f, then we can conclude that the statements in s entail the negation of p, or p itself, respectively.

Accordingly, our premise states that the erratic test has one or more complex objects, and we want to prove that the fresh fixture pattern cannot be used for this test. For the proof, we negate the claim and assume that the fresh fixture pattern is acceptable for this object. As described earlier, in the fresh fixture pattern each test creates its own fixture and tears it down after a single run, so the fixture is rebuilt many times. Since the fixture is initialized on every run, this implies that fixture setup is not time-consuming: if it were, the tests would take a long time to run, which would contradict one of the main benefits of automated testing introduced earlier, namely reduced run time and increased speed. If fixture setup does not take a long time, then the test that includes the fixture is not slow, which means the test does not include any complex database or code. This contradicts the first proposition, because an object cannot be both complex and simple at the same time. It follows that the assumption that the fresh fixture pattern is usable for complex tests must be false, which proves the claim.
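One way to lay out this argument in the notation of rule (1) is sketched below. The symbols c, u and t are introduced here only for illustration and do not appear in the original paper; the two implications are the steps the argument relies on, taken as premises.

```latex
\begin{align*}
c &: \text{the test object is complex} \\
u &: \text{the fresh fixture pattern is usable for the test} \\
t &: \text{fixture setup is time-consuming} \\[4pt]
s &= \{\, c,\; u \rightarrow \neg t,\; \neg t \rightarrow \neg c \,\} \\[4pt]
s \cup \{u\} &\vdash \neg t && \text{from } u \text{ and } u \rightarrow \neg t \\
s \cup \{u\} &\vdash \neg c && \text{from } \neg t \text{ and } \neg t \rightarrow \neg c \\
s \cup \{u\} &\vdash f && \text{since } c \in s \text{ and } \neg c \text{ both hold} \\
s &\vdash \neg u && \text{by rule (1) with } p = u
\end{align*}
```

Read this way, the conclusion is only as strong as the two implications in s, in particular the step from "setup is not time-consuming" to "the object is not complex".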

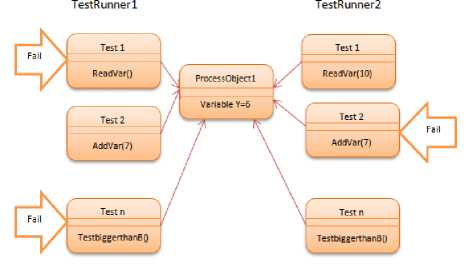

An example of a complex object is shown in Fig. 3. As seen, a number of tests are supposed to run. Nested loops and database access within the object make it complex. While test 1 is inserting a value into the database, test 2 is updating the same database. Both try to access the same resource simultaneously, and this collision makes test 2 fail. According to the fixture fresh pattern, each test uses its own fixture for a single run. If every test incurs a fixture setup time and setup takes longer than expected, the automated testing process slows down significantly and further problems may arise. In other words, speed falls and run time rises.

Fig. 3. An example of a complex object using the fixture fresh pattern

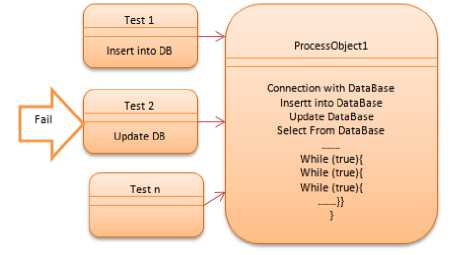

ProcessObject1

    public void TestGetSubsystem() {
        SetUpStandardSystemAndSubsystem()
        ListSubsystemProcesses()
    }

    SetUpStandardSystemAndSubsystem() {
        CreateConnection()
        UpdateDBSystemProcessTable()
        UpdateDBSystemSubsystemProcessTable()
        SelectDBSystemProcessTable()
        SelectDBSystemSubsystemProcessTable()
    }

Fig. 4. Pseudo code of a complex object

The explanation above is illustrated by the piece of pseudo code in Fig. 4. Suppose that plenty of tests are scheduled to run, that they interact with each other so that their results affect other tests' results, and that, since a fixture must be built for every test, each fixture setup takes a lot of time. This condition negates the time savings of automated testing, and it means that the fixture fresh pattern cannot be applied to tests with complex objects.
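The time argument can be made concrete with a simple cost model. The sketch below assumes that every test has the same setup and run time and that a shared fixture is built exactly once; the function names and all numbers are illustrative and are not measurements from this study.

```python
def fresh_fixture_total_ms(tests, setup_ms, run_ms):
    """Every test builds its own fixture before it runs."""
    return tests * (setup_ms + run_ms)


def shared_fixture_total_ms(tests, setup_ms, run_ms):
    """The fixture is built once and reused by every test."""
    return setup_ms + tests * run_ms


TESTS = 200
RUN_MS = 50

# Simple object: setup is cheap, so rebuilding it per test costs little.
simple_fresh = fresh_fixture_total_ms(TESTS, setup_ms=10, run_ms=RUN_MS)
simple_shared = shared_fixture_total_ms(TESTS, setup_ms=10, run_ms=RUN_MS)

# Complex object: setup dominates, so rebuilding it per test dominates the run.
complex_fresh = fresh_fixture_total_ms(TESTS, setup_ms=2000, run_ms=RUN_MS)
complex_shared = shared_fixture_total_ms(TESTS, setup_ms=2000, run_ms=RUN_MS)
```

Under these assumed numbers the simple object costs 12000 ms with fresh fixtures against 10010 ms with a shared one, while the complex object costs 410000 ms against 12000 ms. The gap grows with the setup time, which is why the complexity of the test object matters when choosing a pattern.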


VI. Conclusions

When we introduce automation, we create more software that must be coded, debugged and maintained. Despite the benefits of automated testing, automation activities add time to the test schedule, and if they are not managed carefully we will see a negative return on the automation investment. Automated test patterns have been developed as solutions that mitigate potential problems and reduce implementation costs. Based on our examination, we conclude that not every automated test pattern is applicable to every kind of test. Choosing an appropriate pattern requires an exhaustive investigation that covers all properties of the test, such as its level of complexity, the objects involved, the number of times each single test is run, and the interactions among tests.

References

[1] L. J. Osterweil, et al., "Strategic directions in software quality," presented at the ACM Computing Surveys, 1996.

[2] F. Lanubile and T. Mallardo, "Inspecting Automated Test Code: A Preliminary Study," Springer, pp. 115-122, 2007.

[3] D. Alberts, "The economics of software quality assurance," presented at the AFIPS Joint Computer Conferences, 1976.

[4] G. J. Myers, Art of Software Testing: Wiley, 1979.

[5] M. J. Harrold, "Testing: A roadmap," presented at the International Conference on Software Engineering, 2000.

[6] S. Jinhui, et al., "Research progress in software testing," presented at the Acta Scientiarum Naturalium Universitatis Pekinensis, 2005.

[7] T. L. Graves, et al., "An empirical study of regression test selection techniques," ACM Transactions on Software Engineering and Methodology (TOSEM), vol. 10, pp. 184-208, 2001.

[8] S. Eldh, et al., "Towards Fully Automated Test Management for Large Complex Systems," presented at the International Conference on Software Testing, Verification and Validation, 2010.

[9] B. Korel, "Automated Software Test Data Generation," IEEE Transactions on Software Engineering, vol. 16, pp. 870-879, 1990.

[10] T. Kataoka, et al., "Test Automation Support Tool for Automobile Software," SEI Technical Review, pp. 79-83, Oct. 2013.

[11] G. Meszaros, et al., "The Test Automation Manifesto," in Extreme Programming and Agile Methods, XP/Agile Universe 2003, vol. 2753, ed: Springer, 2003, pp. 73-81.

[12] M. Rybalov, "Design Patterns for Customer Testing," presented at the Pacific Northwest Software Quality Conference, 2004.

[13] L. G. Hayes, The Automated Testing Handbook: Software Testing Institute, 2004.

[14] A. Bertolino, "Software Testing Research: Achievements, Challenges, Dreams," presented at the IEEE Computer Society, Washington, DC, USA, 2007.

[15] K. Karhu, et al., "Empirical Observations on Software Testing Automation," presented at the International Conference on Software Testing Verification and Validation, Denver, CO, 2009.

[16] S. A. Adnan, "Continuous Integration and Test Automation for Symbian Device Software," Bachelor, Helsinki Metropolia University of Applied Sciences, Helsinki, 2010.

[17] G. Meszaros, xUnit Test Patterns: Refactoring Test Code, 2007.

[18] J. D. McCaffrey, .NET Test Automation Recipes: A Problem-Solution Approach. NY, 2006.

[19] E. Dustin, Effective Software Testing: 50 Specific Ways to Improve Your Testing: Addison-Wesley Longman Publishing, 2002.