Paradigms of application of artificial intelligence from the aspect of ethics in improving quality

Authors: Denić N., Bartulović Ž., Đorđević S., Bulut Bogdanović I.

Journal: Ekonomski signali @esignali

Published in issue: No. 1, Vol. 20, 2025.

Open access

This paper presents, on the basis of a systematic review of the relevant scientific literature, research results on the use of artificial intelligence in companies from an ethical perspective. It investigates the issues arising from the need to establish ethical guidelines for the effective use of artificial intelligence methods, techniques and tools. Various ethical aspects of artificial intelligence are examined, and the key challenges that appear in the business processes of companies and business systems are analyzed. The fundamental goal is to investigate how to act ethically in this dynamic environment while achieving competitive advantage and sustainable business development; that is, to identify the ethical dilemmas of using artificial intelligence in the working environment of individuals and to formulate recommendations to companies and organizations for its ethical use and implementation. The results point to the necessity of including ethical principles as early as the planning and development phase of artificial intelligence systems. They also show that companies and business systems need to recognize values such as fairness, transparency and accountability and to integrate them into their business practices. A quantitative methodological approach based on a survey questionnaire was used. The results on the application of artificial intelligence in practice indicate that employees rate all organizational practices that would help reduce the occurrence of ethical dilemmas as extremely important and useful. The biggest ethical dilemma identified is the decline in work ability and skills caused by reliance on artificial intelligence systems.

Artificial intelligence, business, ethics, quality management

Short URL: https://sciup.org/170209524

IDR: 170209524 | UDC: 005.6:[004.89:174 | DOI: 10.5937/ekonsig2501095D


gence represents one of the five goals of the Strategy for the Development of Artificial Intelligence in the Republic of Serbia for the period 2020-2025. Some authors point out that business ethics is a key component of successful and sustainable business in today's globalized world (Chladek, 2019).

Artificial intelligence

The literature notes that, if great power indeed brings great responsibility, this applies to artificial intelligence: it has become increasingly powerful, and we are only beginning to understand its potential applications (Krogue, 2017). The European Union has adopted the Artificial Intelligence Act (AI Act), which entered into force in August 2024, with its first provisions applying from 2025. The law classifies artificial intelligence systems into four levels of risk, from minimal to unacceptable, and prescribes penalties. Some authors state that the unique properties of artificial intelligence, such as its complexity, data mining and intelligence, require these systems to be handled carefully (Munoko et al., 2020). In this sense, human-machine interaction is dynamic, because artificial intelligence systems learn from human behavior and adjust their performance (Cebulla et al., 2023). The inclusion of artificial intelligence in work environments brings many benefits, but at the same time raises significant ethical dilemmas and concerns (Saihi, 2022). With the rapid advancement of artificial intelligence, many ethical issues arise that need to be addressed (Esposito, 2019).

Ethics and artificial intelligence

Research methodology

This research is based on many years of scientific work and on theoretical knowledge acquired during studies and through the study of domestic and, above all, foreign professional literature, as well as on practical experience in studying and monitoring the ethical aspects of implementing artificial intelligence in business. The principal method is a critical analysis of the literature. The research methods comprise description, critical analysis and observation, the compilation method, and the presentation and assessment of the theoretical and practical ethical aspects of applying artificial intelligence in practice from the point of view of business improvement. In essence, the work combines qualitative and quantitative methods of scientific research. Synthesizing the results of the critical literature analysis allows us to formulate findings, recommendations and suggestions for further research, and contributes to the further development of this field.

Research results

ethical dilemma of responsibility in the use of artificial intelligence is related to taking responsibility, because the development and operation of artificial intelligence systems involve the roles and resources of different individuals, organizations, hardware components, software algorithms and users, often in complex and dynamic environments (Stahl et al., 2023). Efforts to develop and implement artificial intelligence must be in accordance with ethical standards to ensure that the technology serves a good purpose, while companies and states must invest in the development of the technology in order to exploit its potential and at the same time minimize possible risks (Nosova et al., 2023). Developers of artificial intelligence systems are responsible for ensuring the ethical and responsible development and application of their technologies, which means they should help reduce bias, ensure transparency, and provide adequate docu mentation (Salvi del Pero et al., 2022). In this sense, com panies and business systems must ensure trans-

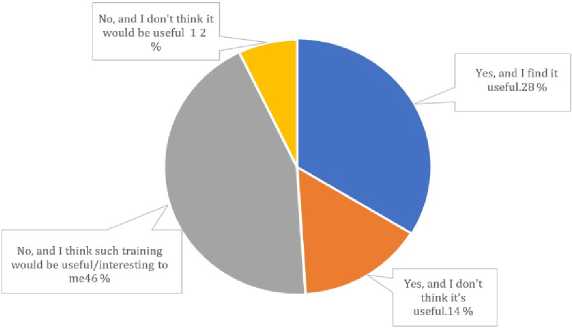

Figure 1: Training on the use of artificial intelligence and its ethics (u %)

parency in handling data and enable consumers to control their personal data (Naz and Kashif, 2024). Research in practice suggests that although the employer often bears primary responsibility for the consequences of decisions made by artificial intelligence in the workplace, it remains a complex and intricate issue with shared responsibility among stakeholders involved in the development, implementation and use of artificial intelligence (Salvi del Pero et al., 2022). The latest research showed that respondents in the sample (N=126) have a divided opinion on the use of artificial intelligence, the results also show that the more educated, those employed in the IT sector, and respondents who have more knowledge about artificial intelligence have more positive attitudes.

Research shows concerns about the disappearance of professions due to advances in artificial intelligence, as well as concerns about potential discrimination by artificial intelligence based on gender, race, sexual orientation and financial status. It is important for employers to provide employees with transparency regarding these systems, as this approach can reduce employee uncertainty and anxiety and give employees more information to assess whether the algorithm is working properly, thus avoiding potential errors or detecting them more quickly (Todolí-Signes, 2019). It is also important to ensure the wide availability of artificial intelligence and to prevent the concentration of power in the hands of a few (Rosenstrauch et al., 2023). Automation of work processes through artificial intelligence inevitably leads to changes in the labor market (Kopalle et al., 2024). According to the same authors, the ethical challenge is not the immediate loss of jobs, but the consequences at the level of the macroeconomy and the labor market (Kopalle et al., 2024). In this sense, industry must actively participate in designing workplaces that combine human skills and artificial intelligence, enabling sustainable growth and social inclusion (Kopalle et al., 2024). On the other hand, many ethical dilemmas arise, such as the loss or displacement of jobs due to automation, which can be reflected at the macroeconomic level as high unemployment rates (D'Cruz et al., 2022).

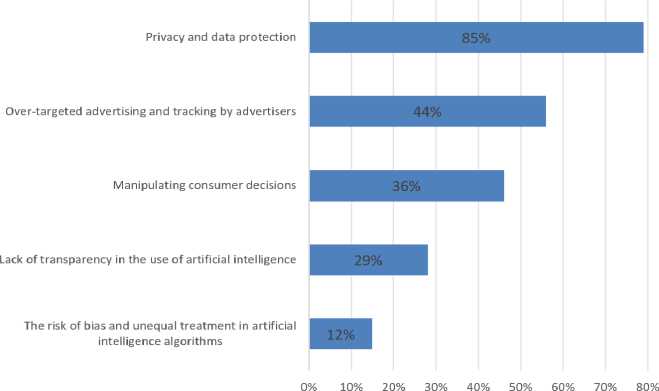

Figure 2: Ethical aspects of the use of AI

Of the 64 respondents who took part in the survey, 85% expressed concern about this aspect. This was followed by over-targeting and ad harassment, which was a concern for 44% of respondents. Manipulation of consumer decisions was a concern for 36% of participants. A less prominent, but still significant, concern was the lack of transparency in the use of artificial intelligence, highlighted by 29% of participants, while 12% pointed to the risk of bias and unequal treatment. The ethics of using artificial intelligence in a business environment is of fundamental importance, as the technology becomes more and more present in decision-making processes, automation and interactions with customers.

Discussion of results

According to the literature, artificial intelligence will eliminate some jobs and at the same time create new ones, especially in fields or industries that require high skills (Gerlich, 2024). In the evaluation of ethical aspects, it is necessary to take into account both transparency and fairness (Simion and Popescu, 2023). The Law on Artificial Intelligence in the Republic of Serbia, which is in preparation, classifies systems into four categories according to the level of risk: unacceptable risk, high risk, limited risk and minimal risk. The literature states that ethical responses to the dilemma of job insecurity include ensuring a just transition and retraining opportunities for individuals (Kandasamy, 2024). Although it is known that AI can improve user experience and efficiency, challenges such as data quality, privacy issues and integration costs remain. Some authors in this context state that it is crucial that companies, as part of their social responsibility, actively support opportunities for retraining and development of competencies that enable employees to acquire the knowledge and skills necessary to work in an artificial intelligence environment (D'Cruz et al., 2022). Businesses and organizations must clearly explain how AI systems work and inform customers about the collection and use of their data (Naz and Kashif, 2024). In addition, it is necessary to develop strategies that will reduce the negative social and economic consequences of automation and ensure that all stakeholders benefit from technological progress (Kandasamy, 2024).

In our environment, the focus of the recently implemented ethics and artificial intelligence project was, among other things, on two of the five special goals of the Strategy for the Development of Artificial Intelligence in the Republic of Serbia, namely (1) the development of education aimed at the needs of modern society and the economy conditioned by the progress of artificial intelligence, and (5) the ethical and safe application of artificial intelligence. Some authors state that current discussions examine the best approaches that will minimize potential disruption, ensure that AI achievements are widely shared, and encourage competition and innovation rather than suppressing them (Ford, 2015). Research results show that the use of artificial intelligence leads to a number of ethical problems, including issues related to bias, transparency, accountability and possible negative impact on employment and society. In the ethical context of the development and use of robotics and artificial intelligence, it is crucial to ensure that the technology benefits people and does not harm them (Doncieux et al., 2022). Naz and Kashif (2024) emphasize that while privacy issues in AI-based business are well researched, ethical issues such as consumer manipulation and algorithmic bias need to be better understood. Efforts to develop and implement artificial intelligence must comply with ethical standards to ensure that the technology serves a good purpose, while companies and states must invest in the development of the technology in order to exploit its potential and at the same time minimize possible risks (Nosova et al., 2023). Research results in practice show that artificial intelligence has large energy needs, which contribute to carbon emissions and environmental degradation and undermine sustainable development. Some of the possible solutions to the ethical dilemma of environmental protection and sustainable development are investing in green technologies, optimizing algorithms for energy efficiency and using renewable energy sources in data centers and artificial intelligence operations (Kandasamy, 2024). In this sense, the development of digital literacy skills, engineering knowledge and critical thinking is crucial in order to overcome these difficulties (Walter, 2024). Artificial intelligence also brings ethical challenges, such as data privacy and the replacement of human jobs with automated systems (Zawacki-Richter et al., 2019). The literature suggests that sustainable practices, such as using renewable energy sources and optimizing computing efficiency, can help mitigate these impacts (Kumar and Suthar, 2024).

Conclusion

Research results show that the accelerated development of new artificial intelligence technologies, methods, techniques and tools has the potential to help society, but also to cause harm. Based on the research, it can be concluded that ethics plays a key role in the correct and beneficial use of artificial intelligence in the business world. In this context, it can be said that trust in artificial intelligence, as well as trust in those who manage it, is an ethical challenge. This research comprises a theoretical and an empirical part, through which it contributes to a better understanding of the complexity of the ethical dilemmas brought about by the introduction of artificial intelligence into the working environment of individuals, and enables the creation of guidelines for its ethical and sustainable use. The research results indicate that solutions should be found for ethical challenges, especially those related to privacy protection and transparency in the use of artificial intelligence for marketing purposes. It is clear that unacceptable or unethical use of artificial intelligence carries potential negative consequences, from discrimination and privacy violations to loss of trust in the technology. In this sense, understanding ethical standards, transparency, privacy protection, maintaining integrity, economic and social responsibility, continuous improvement and making ethical decisions are key steps that IT professionals can take to enable their solutions to work and serve a better and fairer society. Further research into the ethical application of artificial intelligence in business could focus, for example, on the psychological effects of artificial intelligence in other areas, considered in relation to cultural and geographical contexts.