Perceived Gender Classification from Face Images

Author: Hlaing Htake Khaung Tin

Journal: International Journal of Modern Education and Computer Science (IJMECS)

Issue: Vol. 4, No. 1, 2012

Open access

Perceiving human faces and modeling the distinctive features that contribute most to face recognition are among the challenges faced by computer vision and psychophysics researchers. Many methods have been proposed in the literature for facial feature extraction and gender classification. However, all of them still have disadvantages, such as an incomplete reflection of face structure and face texture. The feature set is applied to three different applications: face recognition, facial expression recognition, and gender classification, and it produced reasonable results on all databases. This paper describes two phases: a feature extraction phase and a classification phase. The proposed system produced very promising recognition rates for our applications with the same set of features and classifiers. The system is also automatic and capable of real-time operation.

Face Recognition, Facial Expression, Gender Classification, Feature Extraction, Eigen faces

Short URL: https://sciup.org/15010373

IDR: 15010373

Full text of the article: Perceived Gender Classification from Face Images

Published Online February 2012 in MECS

In the last several years, various feature extraction and pattern classification methods have been developed for gender classification. Emerging applications of computer vision and pattern recognition in mobile devices and networked computing require the development of resource limited algorithms. Perceived gender classification is a research topic with a high application potential in areas such as surveillance, face recognition, video indexing, and dynamic marketing surveys.

Moghaddam and Yang [1] introduced the best gender recognition algorithm in terms of reported classification rate. They adopted an appearance-based approach with a classifier based on a Support Vector Machine with Radial Basis Function Kernel (SVM+RBF)[1]. They reported a 96.6 percent recognition rate for classifying 1,775 images from the FERET database using automatically aligned and cropped images and a fivefold cross validation.

Previous simulations by Fleming and Cottrell [2], using masking of bottom and top face areas, with a more computationally demanding nonlinear approach, were not strikingly accurate for sex classification under either masking condition, but showed better performance on the top portion of the face. When the top of the face was masked the model was 29% correct, and when the bottom of the face was masked the model was 55% correct. However, this relatively low performance could be attributed, at least partially, to the great variation of training stimuli, which included non-face images. One possible reason for the difference found between top and bottom conditions is that the Fleming and Cottrell stimuli included the hair and thus provided more variation in the lower portion of the image, particularly for female faces.

Previous studies of the contribution of facial areas to sex classification by human subjects from photographic images have used several approaches: presenting features (or a combination of features) in isolation [3,4], masking features [5,4], and replacing features within a full image [3,6]. In some cases, the studies have used individual photographic images, and in other cases, male and female prototypes have been created using various averaging techniques. These studies have produced varying results. Differences obtained between tests of features in isolation and substitution of features have been attributed to the role of configuration in facial tasks [3,4]. For example, although the nose alone provides little information, masking it diminishes the total amount of configural information perceived. In general, these studies indicate that the isolated areas contributing the most to sex classification are the eye region (particularly the eyebrows) and the face outline (particularly the jaw).

Human facial image processing has been an active and interesting research area for years. Since human faces provide a lot of information, many topics have drawn considerable attention and have been studied intensively. The most prominent of these is face recognition [7]. Other research topics include predicting future faces [8] and reconstructing faces from some prescribed features [9].

Gender classification is an important visual task for human beings, since many social interactions critically depend on correct gender perception. As visual surveillance and human-computer interaction technologies evolve, computer vision systems for gender classification will play an increasingly important role in our lives [10].

Gender classification is arguably one of the more important visual tasks for an extremely social animal like us humans: many social interactions critically depend on the correct gender perception of the parties involved. Arguably, visual information from human faces provides one of the more important sources of information for gender classification. Not surprisingly, a very large number of psychophysical studies have investigated gender classification from face perception in humans [11].

The usual assumptions (behind inductive learning) may not hold for many applications. For example, if the input values of the test samples are known (given), then an appropriate goal of learning may be to predict outputs only at these points. This leads to transduction formulation [12].

This paper is organized into several sections. Section 2 presents the related work. Section 3 describes the algorithm for feature extraction, and Section 4 describes the method for gender classification of human faces. Experimental results are shown in Section 5. Finally, we give concluding remarks in Section 6.


II. Related Work

The detection of faces and facial features has been receiving researchers’ attention for the past few decades. The ultimate goal has been to develop algorithms equal in performance to the human vision system. In addition, automatic analysis of human face images is required in many fields, including surveillance and security, human-computer interaction (HCI), object-based video coding, virtual and augmented reality, and automatic 3-D face modeling.

Face detection is the first important step in many face image processing applications. Although a lot of work has been done on detecting frontal faces, much less effort has been put into detecting faces with large image-plane or depth rotations. Most templates used in face detection are whole-face templates. However, such templates are ineffective for faces significantly rotated in depth. Y. Zhu and F. Cutu [22] proposed using half-face templates to detect faces with large depth rotations. Their experimental results show that half-face templates significantly outperform whole-face templates in detecting faces with large out-of-plane rotations and perform as well as whole-face templates in detecting frontal faces.

In facial feature extraction, local features of the face such as the nose and the eyes are extracted and then used as input data. This has been the central step for several applications. Various approaches have been proposed in the literature to extract these facial points from images or video sequences of faces. The basic approaches are as follows [13]:


A. Geometry-based

Generally, geometry-based approaches extract features using geometric information such as the relative positions and sizes of the face components. The technique proposed by Kanade [14] localizes the eyes, the mouth, and the nose using a vertical edge map. Nevertheless, these techniques require a threshold, which, given the prevailing sensitivity, may adversely affect the achieved performance.


B. Template-based

This approach matches facial components to previously designed templates using an appropriate energy functional. The best match of a template in the facial image yields the minimum energy. Proposed by Yuille et al. [15], these algorithms require a priori template modeling, in addition to their computational costs, which clearly affect their performance. Genetic algorithms can be used to make the search in template matching more efficient.


C. Color segmentation techniques

This approach makes use of skin color to isolate the face. Any non-skin-color region within the face is viewed as a candidate for the eyes and/or the mouth. The performance of such techniques on facial image databases is rather limited, due to the diversity of ethnic backgrounds [16].


D. Appearance-based approaches

The concept of “feature” in these approaches differs from simple facial features such as the eyes and the mouth. Any characteristic extracted from the image is referred to as a feature. Methods such as principal component analysis (PCA), independent component analysis (ICA), and Gabor wavelets [17] are used to extract the feature vector. These approaches are commonly used for face recognition rather than person identification.

Table I lists these techniques for finding facial features and compares them by the number of features extracted. As can be seen there, most of the techniques, except the hybrid ones, which are not included here, use still images as input. Since the user’s images are frontal, we do not use the template-based approach. As mentioned in the introduction, the appearance-based approaches are time consuming because their training stage takes a long time.

We also could not use the color-based approaches, because they work only when the eyes are visible, which means they do not give good results under different expressions. T. Okabe and Y. Sato [23] proposed an appearance-based method for object recognition under varying illumination conditions. It is known that images of an object under varying illumination conditions lie in a convex cone formed in the image space. In addition, variations due to changes in light intensity can be canceled by normalizing the images. Based on these observations, their proposed method combines binary classifications using discriminant hyperplanes in the normalized image space. To obtain these hyperplanes, they compared the Support Vector Machine (SVM), which has been used successfully for object recognition under varying poses, and Fisher’s linear discriminant (FLD). They conducted a number of experiments using the Yale Face Database B and confirmed that SVMs are also effective for object recognition under varying illumination conditions.
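The remark that intensity changes can be canceled by normalization can be illustrated with a small sketch. It assumes, as a simplification not stated in the source, that a change in light intensity multiplies every pixel by the same positive factor; dividing the image vector by its Euclidean norm then removes that factor. The function name `normalize_image` is hypothetical and is not taken from [23].

```python
import numpy as np

def normalize_image(image):
    """Flatten an image and scale it to unit Euclidean norm."""
    vec = np.asarray(image, dtype=np.float64).ravel()
    norm = np.linalg.norm(vec)
    if norm == 0.0:
        return vec  # an all-black image carries no direction to preserve
    return vec / norm

# Synthetic stand-in for a face image, and the same image under brighter light.
rng = np.random.default_rng(0)
face = rng.random((8, 8))
brighter = 2.5 * face  # assumed model: uniform positive scaling of all pixels

# After normalization the two images map to the same point in image space.
same_after_normalization = np.allclose(normalize_image(face),
                                       normalize_image(brighter))
```

Under this scaling model the normalized vectors coincide, so a classifier trained in the normalized space does not need to learn the intensity variation separately.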

TABLE I. Techniques of facial feature

| Author | Technique | No. of features | Video / still, frontal / rotated |
|---|---|---|---|
| T. Kanade, 1997 | Geometry-based | Eyes, the mouth and the nose | Still, frontal |
| A. Yuille, D. Cohen, and P. Hallinan, 1998 | Template-based | Eyes, the mouth, the nose and eyebrow | Still, frontal |
| T. C. Chang, T. S. Huang, and C. Novak, 1994 | Color-based | Eyes and/or mouth | Still and video, frontal (initially in a near-frontal position, so both eyes are visible) |
| Y. Tian, T. Kanade, and J. F. Cohn, 2002 | Appearance-based approaches | Eyes and mouth | Still, frontal and near-frontal with different expressions |


III. Feature Extraction

As mentioned previously, the eyes, nose, and mouth are the most significant facial features on a human face. In order to detect facial feature candidates properly, unnecessary information in a face image must be removed in advance. The first stage of preprocessing is cropping the face area as soon as the picture is taken from the webcam; the second stage is resizing the cropped image.

Built-in MATLAB functions are used to adjust the contrast and brightness of the image in order to remove noise. The image is then converted to gray scale, because the corner detector can only be applied to gray-level images.
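The preprocessing stages described above (crop, resize, contrast adjustment, gray-scale conversion) can be sketched as follows. The paper uses built-in MATLAB functions that it does not name; this NumPy version is only an illustrative stand-in. The helper names, the nearest-neighbor resize, the min-max contrast stretch, the gray-scale weights, and the 64 x 64 output size are all assumptions rather than details from the source.

```python
import numpy as np

def crop(image, top, left, height, width):
    """Cut the face region out of the captured frame."""
    return image[top:top + height, left:left + width]

def resize_nearest(image, out_h, out_w):
    """Resize by nearest-neighbor sampling (assumed; the paper does not say which method)."""
    in_h, in_w = image.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols]

def stretch_contrast(image):
    """Linearly rescale intensities to the range [0, 1]."""
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image, dtype=np.float64)
    return (image - lo) / (hi - lo)

def to_grayscale(rgb):
    """Convert an H x W x 3 RGB array to gray level using common luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return np.asarray(rgb, dtype=np.float64) @ weights

def preprocess(frame, box, size=(64, 64)):
    """Crop, resize, adjust contrast, then convert to gray scale."""
    top, left, height, width = box
    face = crop(frame, top, left, height, width)
    face = resize_nearest(face, *size)
    face = stretch_contrast(face)
    return to_grayscale(face)

# Synthetic frame standing in for a webcam capture.
frame = np.random.default_rng(0).integers(0, 256, size=(120, 160, 3))
face = preprocess(frame, box=(10, 40, 80, 80))
```

The result is a single-channel array of fixed size, which is the form expected by a gray-level corner detector or by PCA on flattened images.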

Principal Component Analysis (PCA) can be used for prediction, redundancy removal, feature extraction, data compression, and so on. Because PCA is a classical linear technique, it suits applications that have linear models. PCA is a suitable strategy for feature extraction because it identifies variability between human faces that may not be immediately obvious. PCA does not attempt to categorize faces using familiar geometrical differences, such as nose length or eyebrow width. Instead, a set of human faces is analyzed using PCA to determine which ‘variables’ account for the variance of faces. In facial feature extraction, these variables are called eigenfaces because, when plotted, they display a ghostly resemblance to human faces.

Although PCA is used extensively in statistical analysis, the pattern recognition community started to use PCA for classification only relatively recently. PCA is concerned with explaining the variance-covariance structure through a few linear combinations of the original variables. Perhaps PCA’s greatest strengths are its ability for data reduction and interpretation. Let us consider the PCA procedure for a training set of M face images.

Let a face image be represented as a two-dimensional $N$ by $N$ array of intensity values, or a vector of dimension $N^2$. PCA then finds an $M'$-dimensional subspace whose basis vectors correspond to the maximum variance directions in the original image space. This new subspace is normally lower dimensional ($M' \ll M \ll N^2$) [18].

New basis vectors define a subspace of face images called face space. All images of known faces are projected onto the face space to find sets of weights that describe the contribution of each vector. By comparing a set of weights for the unknown face to sets of weights of known faces, the face can be identified. PCA basis vectors are defined as eigenvectors of the scatter matrix S defined as:

$$S = \sum_{i=1}^{M} (x_i - \mu)(x_i - \mu)^T$$

where $\mu$ is the mean of all images in the training set and $x_i$ is the $i$th face image represented as a vector. The eigenvector associated with the largest eigenvalue is the one that reflects the greatest variance in the image. Conversely, the smallest eigenvalue is associated with the eigenvector that captures the least variance.

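A facial image $x$ can be projected onto $M'$ ($\ll M$) dimensions by computing the weights

$$\omega_k = u_k^T (x - \mu), \quad k = 1, \dots, M'$$

where $u_k$ is the eigenvector of $S$ with the $k$th largest eigenvalue and $\mu$ is the mean of the training images. The vectors $u_k$ are also images, so-called eigenimages or eigenfaces. They can be viewed as images and indeed look like faces. Face space forms a cluster in image space, and PCA gives a suitable representation.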
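The eigenface computation described in this section can be sketched in NumPy as follows. This is a minimal illustration, not the author's implementation. It obtains the eigenvectors of the scatter matrix from a singular value decomposition of the centered data, which yields the same basis without forming the full matrix explicitly. The function names `fit_eigenfaces` and `project` and the synthetic data are assumptions.

```python
import numpy as np

def fit_eigenfaces(faces, num_components):
    """Compute the mean face and leading eigenfaces.

    faces: array of shape (M, D), one flattened face image per row.
    Returns (mean, eigenfaces, eigenvalues); eigenfaces has shape
    (num_components, D) and its rows are orthonormal.
    """
    X = np.asarray(faces, dtype=np.float64)
    mean = X.mean(axis=0)
    centered = X - mean
    # Rows of vt are eigenvectors of the scatter matrix centered.T @ centered,
    # ordered by decreasing eigenvalue (the squared singular values).
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    eigenfaces = vt[:num_components]
    eigenvalues = singular_values[:num_components] ** 2
    return mean, eigenfaces, eigenvalues

def project(face, mean, eigenfaces):
    """Project one flattened face onto the face space, giving its weight vector."""
    centered = np.asarray(face, dtype=np.float64).ravel() - mean
    return eigenfaces @ centered

# Synthetic stand-in for M = 10 training faces of 8 x 8 pixels each.
rng = np.random.default_rng(0)
training = rng.random((10, 64))
mean, eigenfaces, eigenvalues = fit_eigenfaces(training, num_components=4)
weights = project(training[0], mean, eigenfaces)
```

Each row of `eigenfaces` can be reshaped to the original image size and displayed, which is how the face-like appearance mentioned above can be inspected.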

These operations can also be performed occasionally to update or recalculate the eigenfaces as new faces are encountered. Having initialized the system, the following steps are then used to classify new face images:

1. Calculate a set of weights based on the input image and the M eigenfaces by projecting the input image onto each of the eigenfaces.

2. Classify the weight pattern to determine the gender.

3. (Optional) Update the eigenfaces and/or weight patterns.

In the gender classification task, the gender of the subject is predicted based on the minimum Euclidean distance between the projection of the input face onto the face space and each face class.
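The minimum-distance decision described above can be sketched as follows. The sketch assumes that each face class is represented by the mean of its training weight vectors; the source does not say how a face class is summarized, so this is an assumption. The function names and the two-dimensional toy weights are hypothetical.

```python
import numpy as np

def class_means(weights, labels):
    """Average the face-space weight vectors of each class."""
    weights = np.asarray(weights, dtype=np.float64)
    labels = np.asarray(labels)
    return {label: weights[labels == label].mean(axis=0)
            for label in sorted(set(labels.tolist()))}

def classify_min_distance(weight_vector, means):
    """Return the class whose mean is nearest in Euclidean distance."""
    w = np.asarray(weight_vector, dtype=np.float64)
    return min(means, key=lambda label: np.linalg.norm(w - means[label]))

# Toy weight vectors standing in for projections onto two eigenfaces.
train_weights = [[0.0, 0.1], [0.2, -0.1], [3.0, 3.1], [2.8, 2.9]]
train_labels = ["female", "female", "male", "male"]

means = class_means(train_weights, train_labels)
predicted = classify_min_distance([2.5, 2.7], means)
```

In a full pipeline, the weight vectors would come from projecting preprocessed face images onto the eigenfaces rather than from hand-written numbers.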


IV. Gender Classification of Human Faces

The gender classification procedure is described in this section. Feature extraction deals with extracting features that are basic for differentiating one class of object from another. First, a fast and accurate facial feature extraction algorithm is developed. The training positions of the specific face region are applied. The extracted features of each face in the database can be expressed as a column matrix, as shown in Figure 1.

The first step in gender classification is image acquisition, that is, acquiring the input face image. After the face image has been obtained, the next step deals with preprocessing that image. The key function of preprocessing is to improve the image in ways that increase the chances of success of the other processes.

In general, it involves removing noise and isolating regions whose texture indicates a likelihood of alphanumeric information.

Input Face Image → Preprocessing → Feature Extraction → Gender Classification

Figure 1. Fundamental Steps in Gender Classification


A. Acquisition of images

The quality of the image varies because illumination conditions change depending on the place where the system is installed or on the passage of time. Estimation results become unstable because of this variation. Therefore, to cope with changing illumination conditions, the following parameter adjustments are required [19].


B. Camera parameters

An adjustment dialog of the camera driver mounted in the system can adjust parameters inside the camera. With this function, parameters such as white balance, brightness, and color tone can be adjusted to obtain stable image quality. Unnecessary shadows and saturation in the face region of the captured image can be avoided using this function [19].


C. Nearest neighbor classification

One of the most popular non-parametric techniques is Nearest Neighbor classification (NNC). The asymptotic (infinite sample size) error of NNC is less than twice the Bayes error [20]. NNC gives a trade-off between the distribution of the training data and the a priori probabilities of the classes involved [21].

The k-nearest neighbor (KNN) classifier is easy to compute and very efficient. KNN is very compatible and requires little memory storage, and it has good discriminative power. KNN is also very robust to image distortions (e.g., rotation, illumination). This paper therefore combines PCA and KNN to produce good results.

Euclidean distance determines whether the input face is near a known face. The problem of automatic face recognition is a composite task that involves detection and location of faces in a cluttered background, normalization, recognition, and verification.
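A k-nearest neighbor vote over Euclidean distances can be sketched as follows. This is a minimal illustration under assumptions: the value of k, the tie-breaking rule (ties go to the label of the closer neighbor), and the toy feature vectors are not specified in the source, and the function name `knn_predict` is hypothetical.

```python
import numpy as np
from collections import Counter

def knn_predict(train_features, train_labels, query, k=3):
    """Predict a label by majority vote among the k nearest training samples."""
    X = np.asarray(train_features, dtype=np.float64)
    q = np.asarray(query, dtype=np.float64)
    if not 1 <= k <= len(X):
        raise ValueError("k must be between 1 and the number of training samples")
    # Euclidean distance from the query to every training sample.
    distances = np.linalg.norm(X - q, axis=1)
    # Indices of the k closest samples, closest first.
    nearest = np.argsort(distances, kind="stable")[:k]
    votes = Counter(train_labels[i] for i in nearest)
    # most_common keeps first-seen order among equal counts,
    # so a tie goes to the label of the closer neighbor.
    return votes.most_common(1)[0][0]

# Toy feature vectors standing in for PCA weight vectors.
features = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
labels = ["female", "female", "female", "male", "male", "male"]

prediction = knn_predict(features, labels, [0.2, 0.3], k=3)
```

With k = 1 this reduces to the plain nearest neighbor rule; larger k trades sensitivity to individual training samples for smoother decisions.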


D. Pattern matching

Pattern matching is used to select the correct feature points from the feature point candidates [154]. The correct pupil candidates among those detected by the blob detector were identified by fitting the entire frame model using energy minimization. However, the edge presence was considered as a prerequisite, and this technique is by no means robust to illumination deviations and face direction variations. Other drawbacks include the high cost of convergence computations and the dependence of detection on the initial parameters. Conversely, we have used pattern matching based on the subspace method to identify correct feature points. In pattern matching, it is not necessary to extract edges. It is also robust to noise, since full pattern information is used.


V. Experimental condition

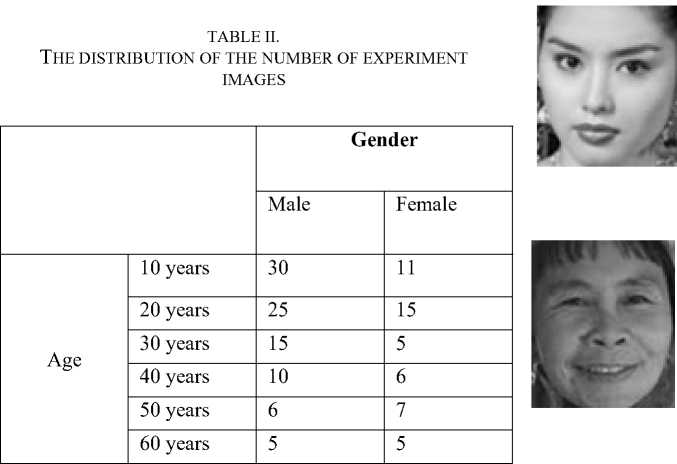

In order to evaluate the performance of the proposed algorithms, about 140 photos of different people of different races (European and Asian) were used. The quality of the images varies because illumination conditions change depending on the place where the system was installed or on the passage of time. The hit ratio of gender classification for the 140 persons was 96.3%. The algorithms have shown good robustness and reasonable accuracy for the photos from our test set, as shown in Table II.

The proposed system has low complexity and is suitable for real-time implementations, such as real-time facial animation. The processing time may be significantly reduced by optimizing the algorithms and their implementation, which has not yet been done.

| Gender Prediction | Age Prediction | Actual Age |
|---|---|---|
| Female | Between 20-25 years | 23 years |
| Female | Between 40-45 years | 43 years |
| Female | Under 18 years | 4 years |
| Female | Over 60 years | 65 years |

Figure 2. Some Female Group from Face Databases

| Gender Prediction | Age Prediction | Actual Age |
|---|---|---|
| Male | Between 20-25 years | 21 years |
| Male | Between 40-45 years | 44 years |
| Male | Over 60 years | 70 years |

Figure 3. Some Male Group from Face Databases

Figure 4. Result of Gender Classification


VI. Conclusion

A novel and practical facial feature extraction system has been described, which combines good accuracy of feature extraction and gender classification with robustness to images of different quality and picture taking conditions. In this paper, the process of the system is composed of two phases: feature extraction and gender classification. The proposed system has a low complexity and is suitable for real time implementations, such as real time facial animation.

Acknowledgment

I thank Dr. Mint Myint Sein, University of Computer Studies, Yangon, for supervising my research, for guiding me throughout it, and for her very informative lectures on Image Processing.

References

[1] B. Moghaddam and M. H. Yang, “Learning Gender with Support Faces”, IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 24, no. 5, pp. 707-711, May 2002.

[2] M. K. Fleming and G. W. Cottrell, “Categorization of faces using unsupervised feature extraction”, Proceedings of the International Joint Conference on Neural Networks, vol. II, San Diego, CA, pp. 65-70, June 1990.

[3] E. Brown and D. I. Perrett, “What gives a face its gender?”, Perception 22, pp. 829-840, 1993.

[4] T. Roberts and V. Bruce, “Feature saliency in judging the sex and familiarity of faces”, Perception 17, pp. 475-481, 1998.

[5] V. Bruce, A. M. Burton, N. Dench, E. Hanna, P. Healey, O. Mason, A. Coombes, R. Fright, and A. Linney, “Sex discrimination: How do we tell the difference between male and female faces?”, Perception 22, pp. 131-152, 1993.

[6] M. K. Yamaguchi, T. Hirukawa, and S. Kanazawa, “Judgment of gender through facial parts”, Perception 24, pp. 563-575, 1995.

[7] R. Chellappa, C. L. Wilson, and S. Sirohey, “Human and machine recognition of faces: A Survey”, Proc. of IEEE, vol. 83, pp. 705-740, 1995.

[8] C. Choi, “Age change for predicting future faces”, Proc. IEEE Int. Conf. on Fuzzy Systems, vol. 3, pp. 1603-1608, 1999.

[9] S. Gutta and H. Wechsler, “Gender and ethnic classification of human faces using hybrid classifiers”, Proc. Int. Joint Conference on Neural Networks, vol. 6, pp. 4084-4089, 1999.

[10] Changqin Huang, Wei Pan, and Shu Lin, “Gender Recognition with Face images Based on PARCONE Mode”, Proc. of the Second Symposium International Computer Science and Computational Technology (ISCSCT), pp. 222-226, 2009.

[11] A. J. O’Toole, K. A. Deffenbacher, D. Valentin, K. McKee, D. Huff, and H. Abdi, “The Perception of Face Gender: the Role of Stimulus Structure in Recognition and Classification”, Memory and Cognition, 26(1), 1998.

[12] V. N. Vapnik, Statistical Learning Theory, Wiley, New York, 1998.

[13] Elham Bagherian, Rahmita Wirza Rahmat, and Nur Izura Udzir, “Extract of Facial Feature Point”, International Journal of Computer Science and Network Security, vol. 9, no. 1, January 2009.

[14] T. Kanade, Computer Recognition of Human Faces, Basel and Stuttgart: Birkhauser, 1997.

[15] A. Yuille, D. Cohen, and P. Hallinan, “Facial feature extraction from faces using deformable templates”, Proc. IEEE Computer Soc. Conf. on Computer Vision and Pattern Recognition, pp. 104-109, 1998.

[16] T. C. Chang, T. S. Huang, and C. Novak, “Facial feature extraction from color images”, Proceedings of the 12th IAPR International Conference on Pattern Recognition, vol. 2, pp. 39-43, Oct 1994.

[17] Y. Tian, T. Kanade, and J. F. Cohn, “Evaluation of Gabor wavelet-based facial action unit recognition in image sequences of increasing complexity”, Proceedings of the Fifth IEEE International Conference on Automatic Face and Gesture Recognition, pp. 218-223, May 2002.

[18] Ji Zheng and Bao-Liang Lu, “A Support vector machine classifier with automatic confidence and its application to gender classification”, International Journal of Neurocomputing 74, pp. 1926-1935, 2011.

[19] Ryotatsu Iga, Kyoko Izumi, Hisanori Hayashi, Gentaro Fukano, and Tetsuya Ohtani, “A Gender and Age Estimation System from Face Images”, SICE Annual Conference in Fukui, August 4-6, 2003.

[20] M. J. Er, W. Chen, and S. Wu, “High Speed Face Recognition based on discrete cosine transform and RBF neural network”, IEEE Trans. on Neural Networks, vol. 16, no. 3, pp. 679-691, 2007.

[21] Y. H. Kwon and N. da Vitoria Lobo, “Locating Facial Features for Age Classification”, Proceedings of SPIE, The International Society for Optical Engineering Conference, pp. 62-72, 1993.

[22] Y. Zhu and F. Cutu, “Face Detection using Half-Face Templates”.

[23] T. Okabe and Y. Sato, “Support Vector Machines for Object Recognition under Varying Illumination Conditions”.

[24] Hlaing Htake Khaung Tin, “Facial Extraction and Lip Tracking Using Facial Points”, International Journal of Computer Science, Engineering and Information Technology (IJCSEIT), vol. 1, no. 1, March 2011.

[25] N. Sebe, Y. Sun, E. Bakker, M. Lew, I. Cohen, and T. Huang, “Towards authentic emotion recognition”, International Conference on Systems, Man and Cybernetics, 2004.

[26] Juan Bekios-Calfa, Jose M. Buenaposada, and Luis Baumela, “Revisiting Linear Discriminant Techniques in Gender Recognition”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 4, April 2011.