Real Time Multiple Hand Gesture Recognition System for Human Computer Interaction

Authors: Siddharth S. Rautaray, Anupam Agrawal

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Issue: Vol. 4, No. 5, 2012.

Open access

With the increasing use of computing devices in day-to-day life, the need for user-friendly interfaces has led to the evolution of different types of interfaces for human computer interaction. Real time vision based hand gesture recognition affords users the ability to interact with computers in more natural and intuitive ways. Direct use of the hands as an input device is an attractive method that can communicate much more information by itself than mice, joysticks etc., allowing a greater number of recognition systems that can be used in a variety of human computer interaction applications. The gesture recognition system consists of three main modules: hand segmentation, hand tracking and gesture recognition from hand features. The designed system is further integrated with different applications, such as an image browser and a virtual game, opening up possibilities for human computer interaction. Computer vision based systems have the potential to provide more natural, non-contact solutions. The present research work focuses on designing and developing a practical framework for real time hand gesture recognition.

Real time, gesture recognition, human computer interaction, tracking

Short URL: https://sciup.org/15010256

IDR: 15010256


Published Online May 2012 in MECS

Since their first appearance, computers have become a key element of our society. Surfing the web, typing a letter, playing a video game or storing and retrieving data are just a few examples of the use of computers. With the constant decrease in the price of personal computers, they will influence our everyday life even more in the near future.

To use them efficiently, most computer applications require more and more interaction. For that reason, human-computer interaction (HCI) has been a lively field of research in recent years. Originally based on punched cards and reserved for experts, interaction has evolved to the graphical interface paradigm, which consists of the direct manipulation of graphic objects such as icons and windows using a pointing device. Even though the invention of the keyboard and mouse was great progress, there are still situations in which these devices can be seen as the dinosaurs of HCI. This is particularly the case for interaction with 3D objects: the 2 degrees of freedom (DOFs) of the mouse cannot properly emulate the 3 dimensions of space. Furthermore, such interfaces are often not intuitive to use. To achieve natural and immersive human-computer interaction, the human hand could be used as an interface device [1]. Hand gestures are a powerful human-to-human communication channel, which forms a major part of information transfer in our everyday life. Hand gestures are an easy-to-use and natural way of interaction, and using the hands as a device can help people communicate with computers in a more intuitive way. When we interact with other people, our hand movements play an important role and the information they convey is very rich in many ways. We use our hands for pointing at a person or at an object, conveying information about space, shape and temporal characteristics. We constantly use our hands to interact with objects: move them, modify them, and transform them. In the same unconscious way, we gesticulate while speaking to communicate ideas (’stop’, ’come closer’, ’no’, etc.). Hand movements are thus a means of non-verbal communication, ranging from simple actions (pointing at objects, for example) to more complex ones (such as expressing feelings or communicating with others). In this sense, gestures are not only an ornament of spoken language, but are essential components of the language generation process itself.


II. Related Work

To improve interaction in qualitative terms in a dynamic environment, the means of interaction should be as ordinary and natural as possible. Gestures, especially those expressed by the hands, have become a popular means of human computer interface nowadays [3]. Human hand gestures may be defined as a set of permutations generated by actions of the hand and arm [4]. These movements range from the simple action of pointing with a finger to more complex ones used for communication among people. Thus the adoption of the hand, particularly the palm and fingers, as an input device sufficiently lowers the technological barrier between disinterested users and the computer in the course of human computer interaction [2]. Adopting the hands themselves as input devices is a very natural way of removing technological barriers, but it requires the capability to understand human patterns without contact sensors. The problem is that applications need to rely on external devices that are able to capture the gestures and convert them into input. A video camera can be used to grab the user’s gestures, together with a processing system that captures the useful features and partitions the behavior into appropriate classes.

Various applications designed for gesture recognition require a restricted background, a set of gesture commands and a camera for capturing images. Numerous applications related to gesture recognition have been designed for presenting, pointing, virtual workbenches, VR etc. Gesture input can be divided into different categories depending on various characteristics [5]. One category is the deictic gesture, which refers to reaching for something or pointing at an object. Accepting or refusing an action for an event is termed a mimetic gesture; it is useful for the language representation of gestures. An iconic gesture is a way of defining an object or its features. Chai et al. [6] present a hand gesture application for gallery browsing based on a 3D depth data analysis method, which combines global structure information with local texture variation in the gesture framework. Pavlovic et al. [4] concluded that the gestures performed by users must be logically explainable in order to design a good human computer interface. Current technologies for gesture recognition are not yet able to provide acceptable solutions to the problems stated above. One of the major challenges is that the complexity and robustness associated with the analysis and evaluation of gesture recognition have evolved over time. Different researchers have proposed and implemented different pragmatic techniques for using gestures as input to human computer interfaces. Dias et al. [7] present a free-hand gesture user interface based on finding the trajectories of fiducial color markers attached to the user’s fingers.

The model used for the video presentation is grounded in its decomposition into a sequence of frames, or filmstrip. Liu and Lovell [8] proposed an interesting technique for real time tracking of the hand, capturing gestures through a web camera and an Intel Pentium based personal computer. The technique is implemented without any sophisticated image processing algorithms or hardware. Atia et al. [9] design a tilting interface for remote and quick interactions to control directions in an application in a ubiquitous environment; it uses a coin-sized 3D accelerometer sensor to manipulate the application. Controlling the VLC media player using hand gesture recognition is done in a real time environment using vision based techniques [10]. Xu et al. [11] used contact based devices such as accelerometers and EMG sensors for controlling virtual games. Conci et al. [12] design an interactive virtual blackboard using video processing and a gesture recognition engine for giving commands, writing and manipulating objects on a projected visual interface. Lee et al. [13] developed a Virtual Office Environment System (VOES), in which an avatar is used to navigate and interact with other participants. To control the avatar’s motion, a continuous hand gesture system is designed that uses state automata to segment continuous hand gestures and to remove meaningless motion. Xu et al. [14] present a hand gesture recognition system for controlling a virtual Rubik's Cube game using EMG and a 3D accelerometer to provide user-friendly interaction between humans and computers. In this system, meaningful gestures are segmented from the stream of EMG signal inputs.

There are several studies on hand movements, especially gestures, that model the human body. On the basis of this body of knowledge, it is now possible to approach the problem from a mathematical viewpoint [15]. The major drawback of such techniques is that they are too complex and sophisticated to yield an actionable procedure for building the necessary jigs and tools for typical application scenarios. This problem can be overcome by pattern recognition methods with lower hardware and computational overhead. These aspects are considered in subsequent sections by building a dynamic user interface to validate the concepts, in which a user performs actions that generate executable commands in an intelligent system to implement the user’s requirements in a natural way.


III. Proposed Framework

A framework is a blueprint or prototype of theoretical and practical concepts that is used as a guideline for research and implementation work. Some frameworks describe the theoretical aspects of concept building, while others describe the practical aspects of implementing the theoretical concepts. One of the key contributions of a framework is its capacity to enable relationships to be formed between its individual elements. Each parameter setting of the framework has an effect on the others, and the relationships formed from those settings support a more informed process. The present research effort aims to provide a practical framework for the design and development of a real time gesture recognition system for varied applications in the domain of human computer interaction. The aim is to propose a methodological approach to designing gesture interactions.

The diagram shows the fundamental relationship structure that can be drawn from applying the framework to individual systems for specific applications. The framework supports two levels of relationship building: a specific level (the outer circle), where individual systems and the relationships between the parameter settings can inform design, and a general level (the inner circle), where existing HCI theories and methods can be incorporated into the framework for more general applications to designing gesture systems.


A. Two Handed Interaction

In our everyday life, our activities mostly involve the use of both hands. This is the case when we deal cards, when we play a musical instrument, even when we take notes. In HCI, however, most interfaces only use one-handed gestures. In one such system, the user executes commands by changing hand shape to handle a computer generated object in a virtual reality environment. Wah and Ranganath propose a prototype that permits the user to move and resize windows and objects and to open and close windows using simple hand gestures. Even with common devices, such as the mouse or the graphic tablet, only one hand is used to interact with the computer. The keyboard seems to be the only device that permits the use of both hands at the same time. Yet two-handed input for computer interfaces can be of potential benefit. Many experiments have been conducted to test the validity of two-handed interaction for HCI, and the results are very encouraging. Buxton and Myers ran two experiments to investigate two-handed input. The first experiment involves a compound selection/positioning task: the user is asked to position a graphical object with one hand and scale its size with the other, using a graphic tablet and a slider box. This experiment shows that performing the two subtasks in parallel is natural behavior for users in that particular task. Furthermore, efficiency correlates positively with the degree of parallelism involved in the task. The second experiment involves a compound navigation/selection task in which the user is asked to select particular words in a document. The authors compare one- and two-handed techniques. The conclusions are again favorable to two-handed interaction, as the two-handed method significantly outperforms the commonly used one-handed method.

Furthermore, using the two-handed approach can reduce the gap between expert and novice users. The overall conclusion that Buxton and Myers draw from these two experiments is that performance can be improved by splitting tasks between the two hands. Their experimental task was area sweeping, which consists of drawing a bounding box surrounding a set of objects. Their conclusions support the fact that two-handed techniques outperform the conventional one-handed technique. Bi-manual techniques are faster, and for highly demanding tasks the advantage of two-handed input over one-handed input becomes more obvious.


IV. Architecture Design

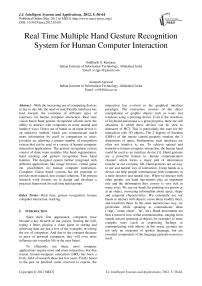

An effective vision based gesture recognition system for human computer interaction must accomplish two main tasks. First, the position and orientation of the hand in 3D space must be determined in each frame. Second, the hand and its pose must be recognized and classified to provide the interface with information on the actions required. That is, the hand must be tracked within the work volume to give positioning information to the interface, and gestures must be recognized to convey the meaning behind the movements. Because of the nature of the hand and its many degrees of freedom, these are not insignificant tasks. Additionally, they must be executed as quickly as possible in order to obtain a system that runs at close to frame rate (30 Hz). The system should also be robust, so that tracking can be reestablished automatically if it is lost or if the hand moves momentarily out of the working area. To accomplish these tasks, the system architecture shown in figure 1 uses an integrated approach to hand gesture recognition. It recognizes static and dynamic hand gestures. The proposed two-hand gesture recognition system uses two cameras, one for each hand.

Figure 1. System architecture


V. Simulation Setup

The system setup is shown in figure 1. It consists of a Core 2 Quad PC with two cameras mounted on top of the display screen. The twin camera system is used to provide stereo images of the user and, more specifically, the user’s hand(s). The coordinate system is a right-handed coordinate system with the cameras on the x axis, the y axis vertical and the z axis pointing out from the cameras into the scene. Video frames are sent over FireWire to the Core 2 Quad computer, where the image processing is carried out in software. Gesture and positional information is passed between the recognition and graphical display systems via a socket based data link. This connects the PC to the SGI and allows position, orientation and event information to be transmitted to the application program.


A. Camera Calibration

To accurately compute the 3D position of points from video images, each camera’s intrinsic and extrinsic parameters are required. Camera calibration is carried out to determine these parameters in terms of a fundamental matrix F, which provides a linear projective mapping between camera pixel coordinates and real world coordinates:

$$\begin{pmatrix} x' \\ y' \\ 1 \end{pmatrix} \simeq F \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix}$$

where x’ and y’ are the pixel coordinates of the 3D physical point (x, y, z), both sides are written in homogeneous coordinates, and $\simeq$ denotes equality up to a scale factor.
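As a minimal sketch of such a projective mapping, the Python snippet below applies a 3x4 matrix to a 3D point in homogeneous coordinates and divides by the last component to obtain pixel coordinates. The matrix shape, the function name `project_point` and the numbers in `F_EXAMPLE` (a pinhole camera with a 500-pixel focal length and principal point (320, 240)) are illustrative assumptions, not calibration values from the paper.

```python
def project_point(F, point_3d):
    """Map a 3D point (x, y, z) to pixel coordinates (x', y') with a 3x4 matrix F."""
    x, y, z = point_3d
    homogeneous = (x, y, z, 1.0)
    # Each output component is one row of F times the homogeneous point.
    u, v, w = (sum(F[r][c] * homogeneous[c] for c in range(4)) for r in range(3))
    if w == 0:
        raise ValueError("point projects to infinity")
    # Dividing by w removes the arbitrary projective scale factor.
    return (u / w, v / w)


# Hypothetical calibration result: focal length 500 px, principal point (320, 240),
# camera at the origin looking along the z axis.
F_EXAMPLE = [
    [500.0, 0.0, 320.0, 0.0],
    [0.0, 500.0, 240.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

print(project_point(F_EXAMPLE, (0.5, -0.25, 2.0)))
```

With two calibrated cameras, the same mapping evaluated for each camera gives the pair of pixel positions from which a 3D point can be triangulated.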

The implemented system architecture starts with background subtraction for the left camera, followed by the right camera. For left hand detection, after image subtraction on the left camera, the system uses an existing dataset stored in an XML file called agest.xml, while for right hand detection on the subtracted right camera image the system uses an initial position value. After background subtraction and detection of the left and right hands, segmentation, tracking and recognition are done separately for each hand. The results for the left and right hands are then merged and interpreted. The interpreted results are the hand gestures defined in the gesture vocabulary: recognized gestures are matched against the vocabulary set, and the commands mapped to them are executed. These commands may be used in various real life applications.
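The final step of this pipeline, merging the per-hand results and mapping them to a command, can be sketched as a simple table lookup. The gesture labels, command names and the `interpret` helper below are hypothetical placeholders chosen for illustration; the paper's actual vocabulary is not listed in the text.

```python
# Hypothetical gesture vocabulary for an image browser:
# (left-hand gesture, right-hand gesture) -> command name.
IMAGE_BROWSER_VOCABULARY = {
    ("open_palm", "open_palm"): "zoom_in",
    ("fist", "fist"): "zoom_out",
    ("idle", "swipe_left"): "previous_image",
    ("idle", "swipe_right"): "next_image",
}

# The same gestures can be remapped to another application's commands.
GAME_VOCABULARY = {
    ("open_palm", "open_palm"): "pause",
    ("idle", "swipe_left"): "steer_left",
    ("idle", "swipe_right"): "steer_right",
}


def interpret(left_gesture, right_gesture, vocabulary):
    """Merge the two per-hand results and return the mapped command, or None."""
    return vocabulary.get((left_gesture, right_gesture))


print(interpret("open_palm", "open_palm", IMAGE_BROWSER_VOCABULARY))
print(interpret("open_palm", "open_palm", GAME_VOCABULARY))
```

Keeping the vocabulary as data rather than code is one way to let the same recognizer drive different applications.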


B. Image Preprocessing

Having an underlying model of the hand in a particular pose assists the feature extraction task. Knowledge of the location of features in the previous frame can be used to define a narrow search window for finding the features in the current frame. This reduces the search area and hence the computation required to find each feature. In a system where all processing for a frame must be completed within 33 ms (in order to maintain a frame rate of 30 Hz), minimizing computation is essential. The proposed method also enhances the robustness of the system: if some features are not tracking well (because items obscure part of the hand, for example), the model allows their positions to be estimated from the locations of the remaining features. This prevents the features from wandering off to track other parts of the image in error.

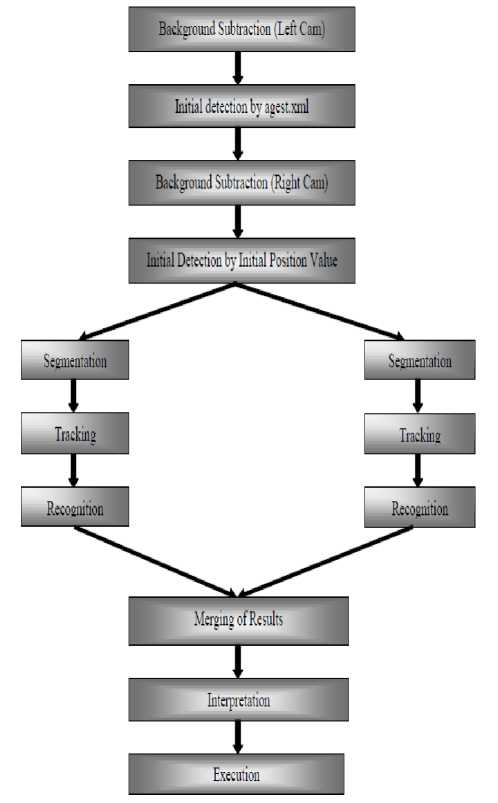

Figure 2. Architecture design for Detection

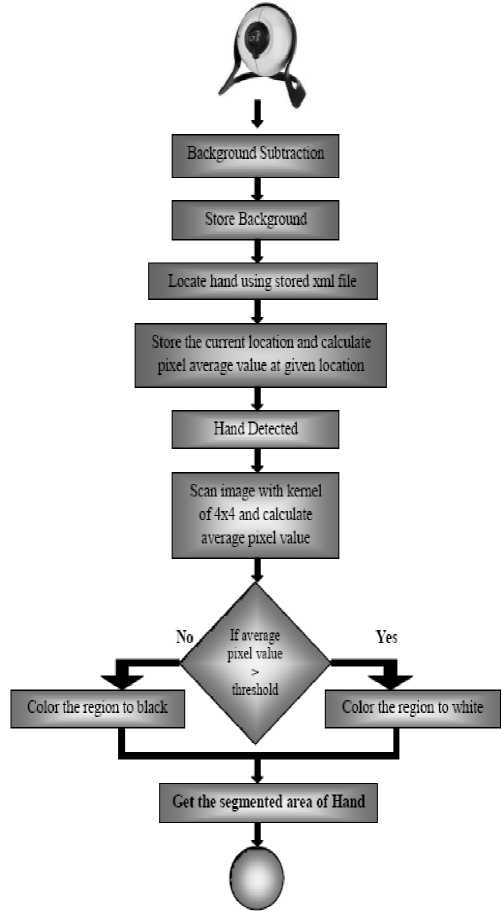

In the current system, during the image acquisition phase the system extracts the static background and sits idle until the user puts a hand in front of the corresponding camera, as shown in figure 2. Once the hand is placed in front of the camera, the system detects it using Haar-like features [16]. A Haar cascade XML file is created for hand detection. Haar-like features are comparatively robust to noise and lighting changes because they compute the gray level difference between the black and the white rectangles. Noise and lighting variations affect the pixel values over the entire feature region, so their effect largely cancels out in the difference.


Figure 2. A set of Haar-like features [16]

Figure 2 shows that each Haar-like feature consists of two or three connected white and black rectangles. The value of a Haar-like feature is computed as the difference between the sums of the pixel values inside the white and black rectangles.

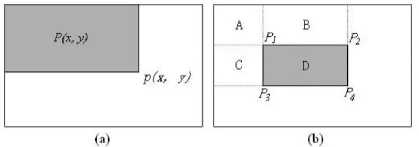

Figure 3. The concept of Integral Image [17]

The “integral image” (Figure 3) at pixel position (x, y) holds the sum of the pixel values above and to the left of that pixel, inclusive:

$$P(x, y) = \sum_{x' \le x,\; y' \le y} i(x', y')$$

where $i(x', y')$ is the pixel value of the original image.

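The sum of the pixel values within a rectangular region can then be computed from the integral image values at its four corners. Assuming, as in the usual construction, that $P_1$ and $P_4$ are the values at the top-left and bottom-right corners and $P_2$ and $P_3$ those at the other two corners (Figure 3), the sum is

$$P_1 + P_4 - P_2 - P_3 \qquad (2)$$

as per the definition of the integral image. To detect the object of interest, the given image is scanned by a sub-window containing a specific Haar-like feature. For each Haar-like feature $f_j$, the corresponding classifier $h_j(x)$ is defined by:

$$h_j(x) = \begin{cases} 1 & \text{if } p_j f_j(x) < p_j \theta_j \\ 0 & \text{otherwise} \end{cases} \qquad (3)$$

where $\theta_j$ is a threshold and $p_j$ is a parity term that sets the direction of the inequality.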
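The integral image, the four-lookup rectangle sum and a thresholded Haar-like feature can be sketched in a few lines of plain Python. This is an illustrative sketch, not the detector used in the paper: the function names, the choice of a horizontal two-rectangle feature and the "white minus black" sign convention are assumptions.

```python
def integral_image(img):
    """Return P where P[y][x] is the sum of img over rows <= y and columns <= x."""
    height, width = len(img), len(img[0])
    P = [[0] * width for _ in range(height)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += img[y][x]
            P[y][x] = row_sum + (P[y - 1][x] if y > 0 else 0)
    return P


def rect_sum(P, top, left, bottom, right):
    """Sum of pixels in the inclusive rectangle, using four integral-image lookups."""
    def at(y, x):
        # Lookups just outside the image contribute nothing.
        return P[y][x] if y >= 0 and x >= 0 else 0
    return (at(bottom, right) + at(top - 1, left - 1)
            - at(top - 1, right) - at(bottom, left - 1))


def two_rect_feature(P, top, left, height, width):
    """Two-rectangle feature: sum of the left (white) half minus the right (black) half."""
    half = width // 2
    white = rect_sum(P, top, left, top + height - 1, left + half - 1)
    black = rect_sum(P, top, left + half, top + height - 1, left + 2 * half - 1)
    return white - black


def weak_classifier(feature_value, theta, parity):
    """Return 1 if parity * f < parity * theta, else 0 (parity is +1 or -1)."""
    return 1 if parity * feature_value < parity * theta else 0


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12]]
P = integral_image(image)
print(rect_sum(P, 1, 1, 2, 2), two_rect_feature(P, 0, 0, 3, 4))
```

Because every rectangle sum costs four lookups regardless of its size, a feature can be evaluated at any scale in constant time, which is what makes scanning many sub-windows per frame affordable.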

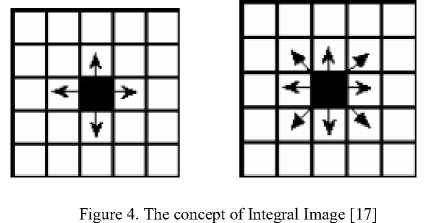

After detecting the corresponding hand, the system converts each frame into a colour-independent two-level gray scale image and segments the frame using a region based image segmentation technique, the flood fill algorithm. Flood fill is a generally recursive algorithm that finds connected regions based on some similarity metric; it is used mostly for filling areas with colour in graphics programs, though it can also be found in implementations of Tetris. Variations exist that seek to reduce the recursion and take a more linear approach, mostly using linked lists. Initially, a compromise between programming complexity and performance is made for this project’s implementation, to be adapted if need be. ‘4-way’ or ‘8-way’ flood fill algorithms are sometimes mentioned, referring to the number of branches from each pixel, as shown in figure 4.
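A non-recursive flood fill of the kind described above can be sketched with an explicit queue. The sketch assumes the simplest similarity metric, exact equality with the seed pixel's value, which suits a two-level image; the function name and parameters are illustrative rather than taken from the paper's implementation.

```python
from collections import deque


def flood_fill(image, seed, new_value, connectivity=4):
    """Fill the region connected to seed that shares its value; return pixels filled.

    image is a list of rows and is modified in place; seed is (row, column).
    """
    if connectivity not in (4, 8):
        raise ValueError("connectivity must be 4 or 8")
    height, width = len(image), len(image[0])
    sy, sx = seed
    old_value = image[sy][sx]
    if old_value == new_value:
        return 0
    steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    if connectivity == 8:
        steps += [(-1, -1), (-1, 1), (1, -1), (1, 1)]
    # An explicit queue replaces recursion, so large regions cannot overflow the stack.
    queue = deque([(sy, sx)])
    image[sy][sx] = new_value
    filled = 1
    while queue:
        y, x = queue.popleft()
        for dy, dx in steps:
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width and image[ny][nx] == old_value:
                image[ny][nx] = new_value
                filled += 1
                queue.append((ny, nx))
    return filled


grid = [[1, 1, 0],
        [0, 1, 0],
        [0, 0, 1]]
print(flood_fill(grid, (0, 0), 2), grid)
```

With 4-way connectivity the diagonal pixel in the bottom-right corner is left out of the region; with 8-way connectivity it is included.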

Each obtained frame is subtracted from the background to isolate any object that has newly entered the scene. The entire frame is then reduced to 2 gray levels using an average filter: the hand is given the highest gray level value, while everything else is given a value near 0.

$$S_o = \frac{\sum_{0 < i,\, j < \mathrm{grid}} P(i, j)}{\mathrm{grid} \times \mathrm{grid}}$$
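One way to read this step is as background subtraction followed by a block-wise average and a threshold. The sketch below follows that reading; the non-overlapping grid x grid blocks, the `threshold` parameter and the output level 255 are assumptions made for illustration and are not specified in the text.

```python
def subtract_background(frame, background):
    """Absolute per-pixel difference between the current frame and the background."""
    return [[abs(f - b) for f, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def block_average_binarize(diff, grid, threshold, high=255):
    """Set each grid x grid block to `high` if its mean exceeds `threshold`, else 0."""
    height, width = len(diff), len(diff[0])
    out = [[0] * width for _ in range(height)]
    for top in range(0, height, grid):
        for left in range(0, width, grid):
            rows = range(top, min(top + grid, height))
            cols = range(left, min(left + grid, width))
            total = sum(diff[y][x] for y in rows for x in cols)
            mean = total / (len(rows) * len(cols))
            if mean > threshold:
                for y in rows:
                    for x in cols:
                        out[y][x] = high
    return out


background = [[10] * 4 for _ in range(4)]
frame = [row[:] for row in background]
for y in range(2):
    for x in range(2):
        frame[y][x] = 200  # a bright object entering the top-left corner

mask = block_average_binarize(subtract_background(frame, background), grid=2, threshold=50)
print(mask)
```

Averaging over a block before thresholding suppresses isolated noisy pixels, at the cost of a coarser hand outline.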


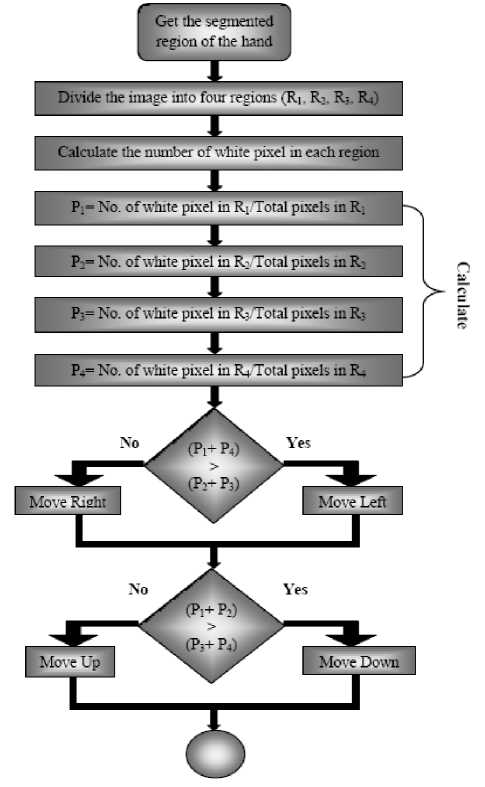

C. Tracking

The segmented hand from the input sequence is tracked in the subsequent phase, using a modified version of the CAMSHIFT algorithm. CAMSHIFT is designed for dynamically changing distributions. This makes the system robust when tracking moving objects in video sequences, where the size and location of the object may change over time, because the search window size is adjusted dynamically. CAMSHIFT is based on color, so it requires a color histogram of the object to be tracked within the video sequence. As mentioned earlier, the color model was built in the HSV domain on the basis of the hue component. First, the size and initial position of the search window are selected. The spatial mean (centroid) of the color distribution inside the window is then computed from the zeroth- and first-order moments in x and y, and the search window is re-centered on it. The process is repeated until it converges. In the implemented tracking process, the image is divided into four regions R1, R2, R3 and R4, and the number of white pixels in each region is calculated relative to the total number of pixels. To find the position of the hand, the position values p1, p2, p3 and p4 of the hand tracked in the corresponding regions are calculated using the equations shown in figure 4. The calculated position values are then added and compared across regions to track the movement of the hand. The hand movement tracked in this way is in turn mapped to commands in the specific application.

Figure 4. Architecture design for Tracking.
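The window re-centering at the heart of this tracker can be sketched as a plain mean shift iteration over a probability (or binary) map, together with a per-quadrant white-pixel measure. This is a simplified illustration, not the paper's modified CAMSHIFT: the window size is kept fixed, the quadrant layout (R1 top-left, R2 top-right, R3 bottom-left, R4 bottom-right) is an assumption, and since the position equations of figure 4 are not reproduced in the text, the simple per-quadrant fraction is used as a stand-in.

```python
def window_centroid(prob, window):
    """Centroid of prob inside window = (left, top, width, height), or None if empty."""
    left, top, width, height = window
    m00 = m10 = m01 = 0.0
    for y in range(max(top, 0), min(top + height, len(prob))):
        for x in range(max(left, 0), min(left + width, len(prob[0]))):
            weight = prob[y][x]
            m00 += weight          # zeroth-order moment
            m10 += x * weight      # first-order moment in x
            m01 += y * weight      # first-order moment in y
    if m00 == 0:
        return None
    return (m10 / m00, m01 / m00)


def mean_shift(prob, window, max_iter=20):
    """Re-center the window on the centroid of its contents until it stops moving."""
    left, top, width, height = window
    for _ in range(max_iter):
        centroid = window_centroid(prob, (left, top, width, height))
        if centroid is None:
            break
        cx, cy = centroid
        new_left = int(round(cx - (width - 1) / 2))
        new_top = int(round(cy - (height - 1) / 2))
        if (new_left, new_top) == (left, top):
            break
        left, top = new_left, new_top
    return (left, top, width, height)


def quadrant_fractions(mask):
    """White pixels in each quadrant as a fraction of all pixels in the image."""
    height, width = len(mask), len(mask[0])
    counts = [0, 0, 0, 0]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                counts[(2 if y >= height // 2 else 0) + (1 if x >= width // 2 else 0)] += 1
    return [count / (height * width) for count in counts]


prob = [[0] * 8 for _ in range(8)]
for y in (4, 5):
    for x in (5, 6):
        prob[y][x] = 1  # a small blob standing in for the hand

print(mean_shift(prob, (2, 2, 4, 4)))
print(quadrant_fractions(prob))
```

A full CAMSHIFT implementation would additionally rescale the window from the zeroth-order moment after each convergence, which is what lets it follow a hand moving toward or away from the camera.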

D. Recognition

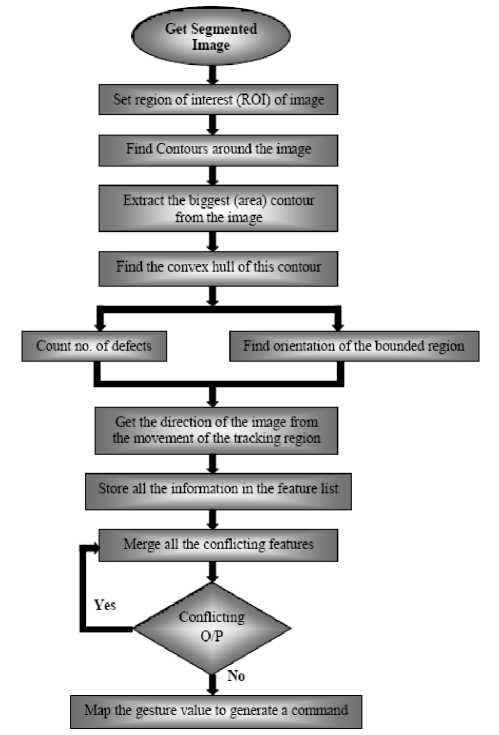

In the recognition phase, shown in figure 5, the system extracts the features of the hand for gesture classification. Gestures are differentiated by extracting features from the contour around the hand and computing the convex hull of that contour. Different gestures can be identified by counting the number of defects in the convex hull and its relative orientation in its bounding rectangle. The features are classified into 8 classes by a widely used recognition algorithm called NCN (nearest conflicting neighbors). The recognition phase of the proposed two hand gesture recognition system takes the segmented image as input. The region of interest in the segmented image is identified and set first. The system then finds the contours in the image and extracts the contour with the largest area in order to compute its convex hull. Once the convex hull is found, the number of defects is calculated and the orientation of the bounding region is determined. Next, the direction of movement is taken from the movement of the tracking region.

Figure 5. Architecture design for Recognition.

All of the collected information is stored in the feature list. Next, conflicting features in the feature list are merged; if conflicting features remain, they are merged again. Once no conflicting features remain in the feature list, the gesture value is mapped to generate the corresponding command.
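The convex hull and defect-counting step can be illustrated with a small pure-Python sketch. It assumes the contour is an ordered list of distinct (x, y) points, builds the hull with Andrew's monotone chain, and counts one defect for every hull edge under which the contour dips at least `min_depth` away from the edge. This definition of a defect and the `min_depth` parameter are assumptions modeled on the common notion of convexity defects, not the paper's exact procedure.

```python
import math


def cross(o, a, b):
    """Z component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices without collinear points."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def count_defects(contour, min_depth):
    """Count hull edges whose enclosed contour points reach at least min_depth."""
    hull = set(convex_hull(contour))
    hull_idx = [i for i, p in enumerate(contour) if p in hull]
    n = len(contour)
    defects = 0
    for k, start in enumerate(hull_idx):
        end = hull_idx[(k + 1) % len(hull_idx)]
        a, b = contour[start], contour[end]
        edge_len = math.hypot(b[0] - a[0], b[1] - a[1])
        if edge_len == 0:
            continue
        depth = 0.0
        i = (start + 1) % n
        while i != end:
            # Perpendicular distance from the contour point to the hull edge a-b.
            depth = max(depth, abs(cross(a, b, contour[i])) / edge_len)
            i = (i + 1) % n
        if depth >= min_depth:
            defects += 1
    return defects


# A crude "two finger" outline: the notch at (3, 2) is the valley between fingers.
contour = [(0, 0), (6, 0), (6, 5), (4, 5), (3, 2), (2, 5), (0, 5)]
print(convex_hull(contour), count_defects(contour, min_depth=2.0))
```

In a hand silhouette the deep valleys between extended fingers show up as defects, so the defect count gives a rough estimate of how many fingers are raised; the depth threshold filters out shallow dents caused by noise.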


VI. Applications

The application domain is an important consideration in the design and analysis of a gesture based user interface and deserves thorough consideration. Some of the main application areas for gesture based recognition systems are summarized in this section. For hand gesture recognition in complex environments, one of the most important application areas is virtual reality. Virtual Reality: Gestures for virtual and augmented reality applications have experienced one of the greatest levels of uptake in computing. Virtual reality interactions use gestures to enable realistic manipulation of virtual objects with one’s hands for 3D display interactions. These interactions may be of three kinds.

Non-immersed interactions: hand gestures are used to arrange virtual objects and to navigate around a 3D information space, such as a graph, using a stereoscopic display. These interactions do not require any representation of the user and use gestures primarily for navigation and manipulation tasks. Semi-immersed interactions and avatars: users interact with their own image projected onto a wall to create the virtual world display. Fully-immersed interactions and object manipulations: fully-immersed interaction requires Data Gloves or other sensing devices that can track movements and replicate them, and the user, within the virtual world.

Augmented Reality: Another interesting area of application is augmented reality. These applications often use markers, consisting of patterns printed on physical objects, which can be tracked more easily using computer vision and which are used for displaying virtual objects in augmented reality displays. Robotics and Telepresence: These applications are typically situated within the domain of space exploration and military-based research projects. The gestures used to interact with and control robots are similar to fully-immersed virtual reality interactions; however, the worlds are often real, presenting the operator with a video feed from cameras located on the robot. Desktop and Tablet PC Applications: In desktop computing applications, gestures can provide an alternative to the mouse and keyboard.

Graphics and Drawing Applications: One of the first applications for gestures was graphics manipulation, from as early as 1964. The gestures consisted of strokes, lines or circles used for drawing, controlling applications or switching modes. Computer Supported Collaborative Work (CSCW): Gestures are used in CSCW applications to enable multiple users to interact with a shared display, using a variety of computing devices such as desktop or tabletop displays. Ubiquitous Computing and Smart Environments: Early work on gestures demonstrated how distance interactions with displays or devices could be enabled within smart room environments. Tangible Computing: One of the first systems that considered tangible interactions was Bricks. Bricks are physical objects that can be manipulated to control corresponding digital objects.

Pervasive and Mobile Computing: Gestures can enable eyes-free interactions with mobile devices, allowing users to focus their visual attention on their primary task. Wearable Computing: Wearable devices allow users to interact within smart room environments using various devices that are carried or worn. This provides users with persistent access to and flexible control of devices through gestures. Telematics: Computer technology is now ubiquitous within automotive design, but gestures have not yet received a great deal of attention in the research literature. Gesturing can be used with telematics to enable secondary task interactions that reduce distraction from the primary task of driving. Adaptive Technology: Gestures are not the most common technique for adaptive interfaces, since they require movement and this may not be feasible with some physical disabilities, although some technology, such as the Data Glove, has been used to measure and track hand impairment in disabled users.

Communication Applications: Communication interfaces are those that seek to enable a more human-to-human style of human-computer interaction. Gestures for communication interfaces are considered one of the most challenging areas. Gesture Toolkits: Toolkits are an important approach to investigating gesture interactions, and there have been several attempts at developing gesture interaction toolkits for a variety of applications. Games: In computer games, a player’s hand or body position can be used to control the movement and orientation of interactive game objects such as cars, while tracked motion can be used to navigate a game environment. Gestures have also been used to control the movement of avatars in a virtual world. Although the gestures here have been mapped to commands used in image browsing, the same gesture vocabulary could be reused for a different set of commands in other applications, such as controlling games or PowerPoint presentations. This makes the gesture recognition system more general and adaptive for human computer interaction.

Figure 6. Browsing image using two hand gestures for zoom in command.


VII. Conclusion

Gesture based interfaces allow human computer interaction to take place in a natural and intuitive manner. They make the interaction device-free, which is useful in dynamic environments. It is unfortunate, though, that despite ever increasing interaction in dynamic environments and the corresponding input technologies, few applications are yet available that are controlled by hand gestures. The most important advantage of hand gesture based input is that users can interact with the application from a distance, without any physical contact with the keyboard or mouse. This paper implements a hand gesture recognition system that is used for browsing images in an image browser and provides a fruitful solution towards a user friendly interface between human and computer using hand gestures. The proposed work could be used efficiently in a wide range of applications where human computer interaction is a regular requirement. The gesture vocabulary can be further extended to control different applications, such as games. The vocabulary also gives users the flexibility to define gestures for specific commands according to their interest, which makes the gesture recognition system more user friendly. In particular, physically challenged users can define gestures according to their feasibility and ease of use.


VIII. Future Perspective

Although the present system seems feasible and more user friendly than traditional command based or device based input modes, it is somewhat less robust in the recognition phase. The design has favored making the input less dependent on constraints on the user’s hand gestures. The robustness of the application may be increased by applying more resilient algorithms that help reduce noise and motion blur, in order to translate gestures into commands more accurately.

Another important direction for the present research and related development is the design of an independent gesture vocabulary framework. This framework could be independent of the application domain. It may also be useful for controlling different types of games and other applications through user defined gestures.
