Secured biometric identification: hybrid fusion of fingerprint and finger veins

Authors: Youssef Elmir, Naim Khelifi

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: Vol. 11, No. 5, 2019.

Free access

The goal of this work is to improve the performance of a multimodal biometric identification system based on fingerprint and finger vein recognition. The system authenticates a person's identity using features extracted from their fingerprints and finger veins through multimodal fusion. Multimodal fusion, particularly at the feature level and at the score level, has already been shown to improve biometric recognition performance. However, both fusion levels have limits, so to improve overall performance this work proposes a new fusion method that applies feature fusion and score fusion at the same time. The main contribution of this fusion technique is the reduction of the template size after fusion without affecting the overall recognition performance. Experiments were performed on the real multimodal database SDUMLA-HMT. The results show that, as expected, multimodal fusion strategies outperform uni-modal ones, and that feature-level fusion outperforms score-level fusion in recognition rate (100% versus 96.54%) but requires more data for identification. The proposed hybrid fusion strategy overcomes this limit: it preserves the best performance (a 100% recognition rate) while reducing the amount of data needed for identification.

Biometrics, fingerprint, finger vein, identification, verification

Short URL: https://sciup.org/15016357

IDR: 15016357 | DOI: 10.5815/ijitcs.2019.05.04

Full text of the article: Secured biometric identification: hybrid fusion of fingerprint and finger veins

Published Online May 2019 in MECS

I. Introduction

The increasing deployment of biometric systems based on a single modality (uni-modal systems) has revealed three main limitations: in terms of performance, in terms of universality of use, and in terms of fraud detection. The first limitation concerns recognition performance, because biometric traits are physical characteristics that vary both with the acquisition conditions and in their nature. The second limitation is due to the non-universality of certain modalities: some subjects may not possess a given modality, or it may not be informative enough to verify their identity. For example, some people have unusable fingerprints because an accident or prolonged manual labour has damaged the skin of their fingertips. The third limitation concerns the detection of impostors. Fraud and identity theft have always existed, and biometrics can reduce them because it is easier to falsify a password or an identity document than to reproduce a face or fingerprints. However, such impostures still occur, especially with fingerprints, which are nowadays the most widely used biometric trait: because fingerprints leave traces, another person's fingerprints can be imitated and reproduced (using silicone, for example).

All these limitations can be reduced or even eliminated by the combination of several biometric systems forming a multimodal biometric system. Multimodal systems can improve recognition performance. They also make it possible to solve the problem of non-universality of certain biometrics by proposing an alternative to people who cannot use certain biometrics. And finally, they can limit the possibilities of fraud because it is more difficult to obtain and reproduce several modalities than one.

However, biometric fusion also has limits that depend on the fusion strategy used. For example, fusion at the feature level can achieve good performance, because features contain richer information about the input biometric data than the identification score; however, the amount of data required for identification is very large, so it needs more storage space and more computation time. Moreover, all modalities become indispensable for identification (the non-universality problem). Fusion at the score level can overcome this problem by using only the available recognition scores to make a decision, but it cannot match the performance of feature-level fusion because of the information lost at each stage (pre-processing, feature extraction, matching, etc.) before the final identification score is obtained.

Based on the above, this work proposes a multimodal biometric system based on the fusion of two modalities, namely fingerprints and finger veins. Fingerprints have long been the most widely used modality in biometrics, but, as mentioned above, they have some performance limits and are more exposed to fraud. For this reason, a closely related modality, the finger veins, was chosen to reduce or eliminate the limitations of fingerprint-based recognition.

Both modalities come from the same source, the finger, so a system using this strategy benefits from an easy and fast acquisition process.

Moreover, the two modalities can be fused at the feature level, which increases the size of the biometric template, or at the score level, which may decrease recognition performance because of the information lost at each stage of the system (pre-processing, feature extraction, matching, etc.).

The main contribution of this work is the reduction of the template size, achieved by combining feature-level fusion of selected features of homogeneous modalities with score-level fusion of heterogeneous modalities, without degrading the overall recognition performance.

The remainder of this paper is organized as follows: Section 2 presents related works together with some definitions and basic concepts. Section 3 describes the proposed approach in detail. Section 4 presents and discusses the experimental scenarios and the results obtained. Conclusions and future work are given in the final section.


II. Related Works

Many works have been conducted in this area of research; some of them are reviewed below.

Lin You and Ting Wang [1] proposed a novel fuzzy vault scheme based on fingerprint and finger vein feature fusion, which alleviates the limitations of a fuzzy vault built from a single biometric template. It is difficult for attackers to recover each individual biometric template from the fused template. In addition, the fuzzy vault stores the fusion encoding rather than the feature point parameters, and this encoding does not reveal feature point information. Their experimental results show that the scheme also achieves a high genuine acceptance rate and a low false acceptance rate.

Wencheng et al. [2] proposed a cancellable multi-biometric system based on fingerprint and finger vein, which provides template protection and revocability. The system combines a minutia-based fingerprint feature set and an image-based finger vein feature set. They developed a feature-level fusion strategy with three fusion options, and thoroughly evaluated and analyzed the matching performance and security strength of each option. Moreover, thanks to a non-invertible transformation based on the enhanced partial discrete Fourier transform (EP-DFT), the security of the proposed system is strengthened compared with the original partial discrete Fourier transform (P-DFT).

Jinfeng Yang and Xu Zhang [3] proposed a fingerprint-vein based biometric method intended to make the finger a more universal biometric. The fingerprint and finger vein features are first extracted using a unified Gabor filter framework. Then, a novel supervised local-preserving canonical correlation analysis method (SLPCCAM) generates fingerprint-vein feature vectors (FPVFVs) through feature-level fusion. A nearest-neighbour classifier is then applied to the FPVFVs for personal identification. Experimental results show that the approach performs well both for fingerprint-vein based personal recognition and for multimodal feature-level fusion.

Young et al. [4] proposed a new multimodal biometric recognition method based on a touched fingerprint and the finger vein. Their method is novel in four ways. First, the proposed device captures a fingerprint image and a finger vein image at the same time, from the first and second knuckles of the finger, respectively. Second, the device is small enough to be easily integrated into a mobile device. Third, fingerprint recognition is based on the minutia points of the ridge area, while finger vein recognition is based on the local binary pattern (LBP) with appearance information of the finger area. Fourth, the fingerprint and finger vein recognition results are combined by decision-level fusion. Experimental results confirmed the efficiency and usefulness of the method.

Most of these works focused on feature-level fusion and demonstrated its effectiveness in terms of recognition performance (see Table 1), but they did not study the amount of data needed to store the templates or the computation time.

Table 1. Summary of the results reported in related works

| Fusion method | Reported results (%): GAR, FAR, FRR, EER or accuracy | Number of fingerprint images | Number of finger vein images |
|---|---|---|---|
| Feature fusion [1] | 95; 0.4 | 100x8 | 100x8 |
| Feature fusion [2] | 0.04 | FVC2004-DB2, 100x2 | FV-HMTD, 100x2 |
| Feature fusion [3] | 99.687 | 64x10 | 64x10 |
| Score fusion [3] | 98.75 | 64x10 | 64x10 |
| Decision fusion [4] | 0.01; 1.07 | 33x10x10 | 33x10x10 |

To the best of our knowledge, using feature-level and score-level fusion at the same time in a biometric system is a novel proposal that has not been discussed in the literature before.

In principle, this idea can improve, or at least preserve, the performance of feature-fusion-based systems, while offering more flexibility by overcoming the non-universality problem, which is the advantage of score-fusion-based systems.

[Figure: block diagram of the proposed system. Fingerprint and finger vein images each go through Pretreatment and Feature Extraction, then Feature Fusion and Reduction; the fused vector is compared (Comparison) with the stored data (combined templates) to give a Score; the scores are merged by Score Fusion, and the Decision outputs Accepted/ID or Rejected.]

Fig.1. Block diagram of fingerprint and finger vein recognition combined at the feature and score levels.


III. The Proposed Approach

The goal of this work is to develop a biometric recognition system based on the fusion of fingerprints and finger veins. Fig.1 shows the stages of the proposed system, which are analyzed step by step below.


A. Pretreatment

After the image to be processed is acquired, it must be enhanced by removing the noise introduced by the sensor during acquisition.

The image also contains unnecessary data, such as the background, that occupies memory space and degrades system performance. A region containing the relevant information that characterizes the biometric modality is therefore selected; this area is called the ROI (Region of Interest).

At the end of this step, only the representative area of the image, which is used in the next stage, is kept.
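The two pretreatment operations can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the 3×3 mean filter stands in for whatever denoising was actually used, and the hypothetical rule that the finger region is brighter than the background (the `threshold` parameter) is an assumption made only to keep the ROI crop simple.

```python
def mean_filter3(img):
    """Denoise with a 3x3 mean filter; border pixels average their in-bounds neighbours."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [img[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            out[r][c] = sum(window) / len(window)
    return out


def crop_roi(img, threshold):
    """Keep the bounding box of pixels brighter than `threshold` (assumed foreground)."""
    rows = [r for r, row in enumerate(img) if any(p > threshold for p in row)]
    cols = [c for c in range(len(img[0])) if any(row[c] > threshold for row in img)]
    if not rows or not cols:
        return []  # nothing above the threshold: no ROI found
    return [row[cols[0]:cols[-1] + 1] for row in img[rows[0]:rows[-1] + 1]]


# Usage: a 5x5 dark frame containing a bright 2x2 "finger" region
image = [[0, 0, 0, 0, 0],
         [0, 0, 200, 200, 0],
         [0, 0, 200, 200, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]
roi = crop_roi(image, threshold=100)  # [[200, 200], [200, 200]]
```

In practice the denoising would run first and the ROI would be cropped from the filtered image; real fingerprint and vein images usually need a more robust segmentation than a single global threshold.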


B. Feature Extraction

This step is the most crucial in the entire design of a biometric authentication system. It represents the image as a code vector that summarizes the relevant features of the image, and the quality of this code largely determines the accuracy of the system.

The proposed feature extraction approach for both biometric modalities used in this work (fingerprint and finger vein) is a global one: it processes the whole ROI obtained in the previous step. This choice is motivated by the larger number of distinctive features of the subjects that are captured, which generally yields a more efficient recognition system. In practice, Gabor filters are applied to the image to obtain a data vector (the features) representing the image code, which is used in the matching process to authenticate the questioned subject.

Gabor filters are particularly convenient operators for contour extraction and detection. With them, a system can isolate very different components of an image, ranging from large, clearly defined objects to fine details with a particular orientation, simply by changing two parameters: the frequency and the orientation. These two parameters are necessary and sufficient to describe a contour line: its thickness and its direction. It has been shown that the human visual system detects contours in a similar way. Thus, the Gabor filter method cannot be classified as an "old" or a "new" technique; it is better described as a natural one.

The Gabor function [5] is the product of a Gaussian envelope and an oriented sinusoid. In image processing, the working domain is two-dimensional, so the Gabor function can be written as follows:

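$$G(x, y, \theta, f) = \exp\left(-\frac{1}{2}\left(\frac{x_\theta^2}{\sigma_x^2} + \frac{y_\theta^2}{\sigma_y^2}\right)\right)\cos(2\pi f x_\theta), \qquad (1)$$

where $x_\theta = x\cos\theta + y\sin\theta$ and $y_\theta = y\cos\theta - x\sin\theta$.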

θ is the orientation of the sinusoid, f its frequency and σx (respectively σy) is the standard deviation of the Gaussian along the x-axis (respectively y-axis).

Sampling this function on a convolution mask defines a convolution filter, called a Gabor filter.
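Equation (1) and its sampling on a convolution mask can be written directly in Python. The sketch below is illustrative only: the mask half-width `size` and the function names are hypothetical choices, not parameters given in the paper.

```python
import math


def gabor(x, y, theta, f, sigma_x, sigma_y):
    """Real Gabor function of Eq. (1): a Gaussian envelope times an oriented cosine."""
    x_t = x * math.cos(theta) + y * math.sin(theta)   # x_theta
    y_t = y * math.cos(theta) - x * math.sin(theta)   # y_theta
    envelope = math.exp(-0.5 * (x_t ** 2 / sigma_x ** 2 + y_t ** 2 / sigma_y ** 2))
    return envelope * math.cos(2 * math.pi * f * x_t)


def gabor_mask(size, theta, f, sigma_x, sigma_y):
    """Sample Eq. (1) on a (2*size+1) x (2*size+1) grid centred on the origin."""
    return [[gabor(x, y, theta, f, sigma_x, sigma_y) for x in range(-size, size + 1)]
            for y in range(-size, size + 1)]


# A 7x7 mask oriented at 45 degrees; its centre value is exactly 1.0
mask = gabor_mask(size=3, theta=math.pi / 4, f=0.25, sigma_x=2.0, sigma_y=2.0)
```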

Applying a Gabor filter G with mask M to an image I is therefore expressed as:

$$G(I) = J = M * I, \qquad (2)$$

where $*$ denotes two-dimensional convolution.
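Equation (2) is an ordinary 2D convolution. As a hedged, dependency-free sketch (zero padding and a same-size output are assumptions here; the paper does not state its border handling), it can be written as:

```python
def convolve2d_same(image, mask):
    """2D convolution J = M * I (Eq. 2) with zero padding and a same-size output.

    The mask is assumed square with an odd side; it is flipped, as true convolution requires.
    """
    h, w = len(image), len(image[0])
    k = len(mask)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    ii, jj = i + r - a, j + r - b  # flipped mask index
                    if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the image
                        acc += mask[a][b] * image[ii][jj]
            out[i][j] = acc
    return out


impulse = [[0.0] * 5 for _ in range(5)]
impulse[2][2] = 1.0
mask = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
response = convolve2d_same(impulse, mask)
# convolving an impulse reproduces the mask around the impulse position
centre = [row[1:4] for row in response[1:4]]  # [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
```

For Gabor masks, which are symmetric or antisymmetric about the origin, flipping only changes the sign of the odd part, so some implementations use correlation instead; the magnitude responses used later are unaffected.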

As will be seen, a Gabor filter isolates the contours of an image whose orientation is perpendicular to θ and whose thickness depends on f. This is why, to detect all the contours of an image, a set of Gabor filters, called a filter bank, is generally applied to it.

A bank of complex Gabor filters, determined by a set of parameters, is built [6,7]. Such a bank is commonly used to extract Gabor magnitude features (and, more recently, phase features) from images. Typically, a bank of 40 filters (8 orientations and 5 scales) is used for this purpose.

The construction step returns a structure with several members, including the filters themselves, defined in both the spatial and the frequency domains.

The magnitude responses of the image filtered with the bank of complex Gabor filters are then computed. Each magnitude response is downsampled and normalized to zero mean and unit variance, and then appended to the output feature vector, so that all magnitude responses are concatenated into a single vector representing the filtered image.
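The feature extraction chain described above (a bank of complex Gabor filters, magnitude responses, downsampling, zero-mean/unit-variance normalization and concatenation) can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the toolbox used by the authors: the isotropic envelope, the frequency progression `f_max / sqrt(2)**s`, the bandwidth rule `sigma = 0.56 / f`, the 7×7 mask size and the downsampling step are all hypothetical choices.

```python
import math


def gabor_pair(half, theta, f, sigma):
    """Real (cosine) and imaginary (sine) parts of an isotropic complex Gabor mask."""
    re, im = [], []
    for y in range(-half, half + 1):
        re_row, im_row = [], []
        for x in range(-half, half + 1):
            x_t = x * math.cos(theta) + y * math.sin(theta)
            env = math.exp(-0.5 * (x * x + y * y) / sigma ** 2)
            re_row.append(env * math.cos(2 * math.pi * f * x_t))
            im_row.append(env * math.sin(2 * math.pi * f * x_t))
        re.append(re_row)
        im.append(im_row)
    return re, im


def convolve2d_same(image, mask):
    """Zero-padded 2D convolution whose output has the size of the image."""
    h, w, k = len(image), len(image[0]), len(mask)
    r = k // 2
    return [[sum(mask[a][b] * image[i + r - a][j + r - b]
                 for a in range(k) for b in range(k)
                 if 0 <= i + r - a < h and 0 <= j + r - b < w)
             for j in range(w)] for i in range(h)]


def build_bank(n_orient=8, n_scales=5, f_max=0.25, half=3):
    """8 orientations x 5 scales = 40 complex filters (parameter values are assumptions)."""
    bank = []
    for s in range(n_scales):
        f = f_max / math.sqrt(2) ** s
        sigma = 0.56 / f  # roughly one octave of bandwidth
        for o in range(n_orient):
            bank.append(gabor_pair(half, o * math.pi / n_orient, f, sigma))
    return bank


def gabor_features(image, bank, step=2):
    """Concatenate the downsampled, normalized magnitude response of every filter."""
    feats = []
    for re_mask, im_mask in bank:
        re = convolve2d_same(image, re_mask)
        im = convolve2d_same(image, im_mask)
        # magnitude |re + i*im|, keeping every `step`-th row and column
        mag = [math.hypot(re[i][j], im[i][j])
               for i in range(0, len(image), step)
               for j in range(0, len(image[0]), step)]
        mean = sum(mag) / len(mag)
        std = math.sqrt(sum((v - mean) ** 2 for v in mag) / len(mag))
        feats.extend((v - mean) / std if std > 0 else 0.0 for v in mag)
    return feats


image = [[(i * j) % 5 for j in range(8)] for i in range(8)]
features = gabor_features(image, build_bank())  # 40 filters x 16 samples = 640 values
```

The length of the result grows linearly with the number of pixels kept after downsampling, which is why global Gabor features lead to the large templates discussed later in the paper.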


C. Feature Fusion and Reduction

This step fuses the features of the two modalities, fingerprint and finger veins, of the same finger into a single vector that represents the finger code. This code is used either in the finger-based authentication process to identify or verify a given subject, or in a further fusion stage with other fingers, to consolidate the performance and precision of the results. This data fusion increases the amount of data, part of which may be unused or redundant.
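Feature-level fusion of one finger amounts to concatenating the two per-modality vectors, which is why a finger code has size 2n (and the four-finger code of scenario 3 has size 8n). A minimal sketch, assuming both modalities produce n features each; the function names are hypothetical:

```python
def fuse_finger(fingerprint_feats, vein_feats):
    """Feature-level fusion of one finger: the finger code has 2n values."""
    if len(fingerprint_feats) != len(vein_feats):
        raise ValueError("both modalities are assumed to produce n features")
    return list(fingerprint_feats) + list(vein_feats)


def fuse_fingers(fingers):
    """Concatenate the finger codes of several fingers (4 fingers -> 8n values)."""
    code = []
    for fingerprint_feats, vein_feats in fingers:
        code.extend(fuse_finger(fingerprint_feats, vein_feats))
    return code


n = 3
four_fingers = [([0.1] * n, [0.2] * n) for _ in range(4)]
code = fuse_fingers(four_fingers)  # len(code) == 8 * n == 24
```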

To reduce the size of the biometric data in the stored template, a statistical procedure such as PCA (Principal Component Analysis) can be used. The main idea is to express the M training images in a basis of orthogonal vectors, the eigenvectors, each carrying information uncorrelated with that of the others. Data expressed in this basis is better suited to biometric recognition.

In mathematical terms, this amounts to finding the eigenvectors of the covariance matrix formed by the different images of the training set.

PCA requires no prior knowledge about the images and is more effective when coupled with an appropriate distance measure, but its simplicity of implementation comes with a strong sensitivity to changes in illumination and pose.
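The eigen-decomposition of the covariance matrix described above can be illustrated without any external library. The sketch below uses power iteration with deflation, a simple substitute for the dense eigensolvers a real system would use; the function names and the iteration count are hypothetical, and the method only suits small, symmetric positive semi-definite matrices such as covariances.

```python
import math


def covariance(samples):
    """Mean vector and covariance matrix (normalized by the sample count) of row vectors."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    centred = [[s[j] - mean[j] for j in range(d)] for s in samples]
    cov = [[sum(c[a] * c[b] for c in centred) / n for b in range(d)] for a in range(d)]
    return mean, cov


def matvec(m, v):
    return [sum(row[b] * v[b] for b in range(len(v))) for row in m]


def top_eigenpairs(cov, k, iters=500):
    """Leading k (eigenvalue, eigenvector) pairs by power iteration with deflation."""
    d = len(cov)
    m = [row[:] for row in cov]
    pairs = []
    for _ in range(k):
        # asymmetric start vector; it could still be orthogonal to the target in contrived cases
        v = [float(i + 1) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = matvec(m, v)
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                return pairs  # remaining variance is numerically zero
            v = [x / norm for x in w]
        lam = sum(a * b for a, b in zip(v, matvec(m, v)))  # Rayleigh quotient
        pairs.append((lam, v))
        # deflation: remove the found component before looking for the next one
        m = [[m[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    return pairs


def project(sample, mean, pairs):
    """Coordinates of a centred sample in the retained eigenvector basis."""
    return [sum((s - mu) * vi for s, mu, vi in zip(sample, mean, v)) for _, v in pairs]
```

Keeping only the first k pairs is what reduces the template size: each sample is then stored as k projected coordinates instead of its full feature vector.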

Another alternative is LDA (Linear Discriminant Analysis). Unlike PCA, LDA explicitly separates classes. To use it, the training images must first be organized into classes: one class per person, with several images per class. LDA analyses the eigenvectors of the scatter (dispersion) matrices, with the objective of maximizing the variations between images of different individuals (between-class) while minimizing the variations between images of the same individual (within-class).

However, when the number of training images is smaller than the image resolution (the number of pixels), LDA is difficult to apply, because the within-class scatter matrix can be singular (non-invertible).

Since PCA does not take class discrimination into account, LDA was designed to address this problem. To avoid the singularity issue, standard LDA-based methods first apply PCA for dimensionality reduction and then perform the discriminant analysis.
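For intuition about the Fisher criterion, the two-class linear case has a closed form: the projection direction is proportional to Sw^-1 (mu_a - mu_b), where Sw is the within-class scatter matrix and mu_a, mu_b are the class means. The sketch below is plain linear LDA on 2-D points, not the Kernel Fisher Discriminant used in this work, and it raises an error in exactly the singular-scatter situation mentioned above. The function name is hypothetical.

```python
import math


def fisher_direction(class_a, class_b):
    """Unit vector w proportional to Sw^-1 (mu_a - mu_b) for two classes of 2-D points."""
    def mean(points):
        return [sum(p[0] for p in points) / len(points),
                sum(p[1] for p in points) / len(points)]

    mu_a, mu_b = mean(class_a), mean(class_b)
    # within-class scatter: sum over both classes of (x - mu)(x - mu)^T
    sxx = sxy = syy = 0.0
    for points, mu in ((class_a, mu_a), (class_b, mu_b)):
        for x, y in points:
            dx, dy = x - mu[0], y - mu[1]
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("within-class scatter matrix is singular")
    diff_x, diff_y = mu_a[0] - mu_b[0], mu_a[1] - mu_b[1]
    # inverse of [[sxx, sxy], [sxy, syy]] applied to the mean difference
    wx = (syy * diff_x - sxy * diff_y) / det
    wy = (-sxy * diff_x + sxx * diff_y) / det
    norm = math.hypot(wx, wy)
    return wx / norm, wy / norm


a = [(0, 0), (0, 2), (1, 1), (-1, 1)]
b = [(4, 0), (4, 2), (5, 1), (3, 1)]
w = fisher_direction(a, b)  # (-1.0, 0.0): the classes separate along the x-axis
```

Calling it with two classes whose points vary only along one axis, e.g. `[(0, 0), (0, 2)]` and `[(4, 0), (4, 2)]`, makes Sw singular and raises the error, which is the same problem that motivates applying PCA before LDA.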

In this work, a kernel variant of LDA, Kernel Fisher Discriminant (KFD) analysis [6,7], is used.

The projection function accepts either a single image (a single vector), in which case the result 'feat' is a single vector representing the input image, or a set of images (a matrix), in which case 'feat' is also a matrix, corresponding to the test matrix X. Together, the two functions used (training and projection) represent the training matrix as a subspace model of the individuals, using the KFA (kernel Fisher analysis) discriminant technique, and then project the test matrix onto this model to obtain the corresponding representation of the test subjects.

These two models (training and test) are then used to compute distances or similarities between features in order to decide on the authenticity of the questioned subjects.


D. Distance Measures

To compare two feature vectors produced by the feature extraction module of a biometric system, one can use either a similarity measure or a distance (divergence) measure.

The first category consists of Euclidean-type distances, defined from the Minkowski distance of order p in the Euclidean space $\mathbb{R}^N$ (N being the dimension of the space).
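For reference, the Minkowski distance of order p between x and y in R^N is (Σ_i |x_i − y_i|^p)^(1/p); p = 1 gives the city-block (L1) distance and p = 2 the Euclidean (L2) distance. A minimal sketch (the function name is hypothetical):

```python
def minkowski(x, y, p):
    """Minkowski distance of order p >= 1 between two equal-length vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension N")
    if p < 1:
        raise ValueError("p must be >= 1 for the result to be a metric")
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)


city_block = minkowski([0, 0], [3, 4], p=1)  # 7.0
euclidean = minkowski([0, 0], [3, 4], p=2)   # 5.0
```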

Some distance measures are defined in the original image space, and others in the Mahalanobis space.

Before measuring distances in the Mahalanobis space, it is necessary to understand how to move from the image space $Im$ to the Mahalanobis space $E_{mah}$.

At the output of the PCA algorithm, eigenvectors are obtained together with their eigenvalues (the variance along each dimension). These eigenvectors define a rotation to a space in which the covariance between different dimensions is zero. The Mahalanobis space is a space in which the variance along each dimension equals 1; it is obtained from the image space by dividing the coordinate along each eigenvector by the corresponding standard deviation.

Let u and v be two vectors of $Im$ expressed in the eigenvector basis obtained by PCA, and m and n their counterparts in $E_{mah}$. Let $\lambda_i$ be the eigenvalues associated with the eigenvectors and $\sigma_i$ the corresponding standard deviations, so that $\lambda_i = \sigma_i^2$. The vectors u and v are related to m and n as follows:

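$$m_i = \frac{u_i}{\sigma_i} = \frac{u_i}{\sqrt{\lambda_i}} \quad \text{and} \quad n_i = \frac{v_i}{\sigma_i} = \frac{v_i}{\sqrt{\lambda_i}}. \qquad (3)$$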
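Equation (3) is a per-coordinate rescaling, as in the sketch below; `u` is assumed to already be expressed in the PCA eigenvector basis, and the function name is hypothetical.

```python
import math


def to_mahalanobis(u, eigenvalues):
    """Eq. (3): divide each PCA coordinate u_i by sigma_i = sqrt(lambda_i)."""
    if len(u) != len(eigenvalues):
        raise ValueError("one eigenvalue is needed per coordinate")
    if any(lam <= 0 for lam in eigenvalues):
        raise ValueError("eigenvalues must be positive (drop null-variance axes first)")
    return [ui / math.sqrt(lam) for ui, lam in zip(u, eigenvalues)]


m = to_mahalanobis([2.0, 3.0], [4.0, 9.0])  # [1.0, 1.0]
```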

Mahalanobis L1 is the L1 (city-block) distance computed after projecting the vectors into the Mahalanobis space. Thus, for vectors u and v with respective projections m and n in the Mahalanobis space, the Mahalanobis L1 distance is defined by:

$$\mathrm{MahL1}(u, v) = \sum_{i=1}^{N} |m_i - n_i|. \qquad (4)$$

Mahalanobis L2 is identical to the Euclidean distance, except that it is computed in the Mahalanobis space. Thus, for vectors u and v with respective projections m and n in the Mahalanobis space, the Mahalanobis L2 distance is defined by:

$$\mathrm{MahL2}(u, v) = \sqrt{\sum_{i=1}^{N} |m_i - n_i|^2}. \qquad (5)$$
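Equations (4) and (5) then reduce to L1 and L2 distances between the rescaled vectors. A minimal sketch with hypothetical function names, reusing the rescaling of Eq. (3):

```python
import math


def to_mahalanobis(u, eigenvalues):
    """Eq. (3): m_i = u_i / sqrt(lambda_i)."""
    return [ui / math.sqrt(lam) for ui, lam in zip(u, eigenvalues)]


def mah_l1(u, v, eigenvalues):
    """Eq. (4): city-block distance between the Mahalanobis projections."""
    m, n = to_mahalanobis(u, eigenvalues), to_mahalanobis(v, eigenvalues)
    return sum(abs(mi - ni) for mi, ni in zip(m, n))


def mah_l2(u, v, eigenvalues):
    """Eq. (5): Euclidean distance between the Mahalanobis projections."""
    m, n = to_mahalanobis(u, eigenvalues), to_mahalanobis(v, eigenvalues)
    return math.sqrt(sum((mi - ni) ** 2 for mi, ni in zip(m, n)))


eig = [4.0, 9.0]
d1 = mah_l1([2.0, 0.0], [0.0, 3.0], eig)  # 2.0
d2 = mah_l2([2.0, 0.0], [0.0, 3.0], eig)  # sqrt(2)
```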

Mahalanobis cosine (MahCosine) is simply the cosine of the angle between the vectors u and v once they have been projected into the Mahalanobis space, i.e., normalized by the variance estimates.

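So, by definition:

$$S_{MahCosine}(u, v) = \cos\theta_{mn}. \qquad (6)$$

Moreover:

$$\mathbf{m} \cdot \mathbf{n} = \|\mathbf{m}\|\,\|\mathbf{n}\|\cos\theta_{mn}. \qquad (7)$$

Hence the final formula of the MahCosine similarity measure:

$$S_{MahCosine}(u, v) = \frac{\mathbf{m} \cdot \mathbf{n}}{\|\mathbf{m}\|\,\|\mathbf{n}\|}, \qquad (8)$$

$$D_{MahCosine}(u, v) = -S_{MahCosine}(u, v). \qquad (9)$$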

where $D_{MahCosine}(u, v)$ is the equivalent distance measure. Finally, it can be noted that this measure is essentially the covariance between the vectors in the Mahalanobis space.
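The MahCosine similarity of Eq. (6), computed as the normalized dot product of the Mahalanobis projections, and the distance of Eq. (9) can be sketched as follows (hypothetical function name; a zero vector is rejected because its cosine is undefined):

```python
import math


def mah_cosine(u, v, eigenvalues):
    """Return (S_MahCosine, D_MahCosine) for two PCA-space vectors u and v."""
    m = [ui / math.sqrt(lam) for ui, lam in zip(u, eigenvalues)]
    n = [vi / math.sqrt(lam) for vi, lam in zip(v, eigenvalues)]
    dot = sum(mi * ni for mi, ni in zip(m, n))
    norms = math.sqrt(sum(mi * mi for mi in m)) * math.sqrt(sum(ni * ni for ni in n))
    if norms == 0:
        raise ValueError("cosine is undefined for a zero vector")
    similarity = dot / norms
    return similarity, -similarity  # the distance is the negated similarity


s, d = mah_cosine([2.0, 0.0], [4.0, 0.0], [4.0, 9.0])  # (1.0, -1.0)
```

Because D is simply the negated similarity, smaller values still mean a better match, so it can be used wherever a distance is expected.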


E. Scores Fusion

Score-level fusion is the most widely used type of fusion because it can be applied to all types of systems (unlike pre-classification fusion), in a space of limited dimension (a vector of scores whose dimension equals the number of subsystems), with relatively simple and efficient methods, while exploiting more information than decision-level fusion. Score fusion therefore amounts to classifying a vector of real numbers, whose dimension equals the number of subsystems, into YES or NO for the final decision. Score fusion methods combine the information available at the level of the scores produced by the comparison modules.

There are two approaches to combining the scores obtained by different systems: the first treats the problem as a combination problem, while the second treats it as a classification problem. It is important to note that Jain et al. [8] showed that combination approaches perform better than most classification methods.

This work focuses on the first approach, score combination, which can itself be divided into two categories: simple combination methods and combination of scores by fuzzy logic.

Simple score combination methods aim to obtain a final score s from the N available scores $s_i$, $i = 1, \dots, N$, produced by N systems. The most commonly used rules are the sum, the mean, the product, the minimum, the maximum and the median.
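The simple combination rules listed above map directly onto standard-library functions, as in this sketch. It assumes the N scores have already been normalized to a common range and direction (for example, all similarities); the paper does not specify a normalization, and the `RULES` table and function name are hypothetical.

```python
import math
import statistics

# Simple score-combination rules: each maps the N per-system scores s_i to one score s.
RULES = {
    "sum": sum,
    "mean": statistics.fmean,   # Python 3.8+
    "product": math.prod,       # Python 3.8+
    "min": min,
    "max": max,
    "median": statistics.median,
}


def combine_scores(scores, rule="sum"):
    """Fuse the scores s_1..s_N of N subsystems into a final score s."""
    if not scores:
        raise ValueError("at least one score is required")
    return RULES[rule](scores)


scores = [0.2, 0.4, 0.9]  # e.g. one normalized similarity per subsystem
fused = {name: combine_scores(scores, name) for name in RULES}
```

With distances instead of similarities, the roles of `min` and `max` swap, which is one reason a consistent normalization step usually precedes these rules.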


IV. Experiments and Obtained Results


A. Database

The proposed biometric recognition system is validated on a real multimodal biometric database, SDUMLA-HMT [9], collected by the Machine Learning and Applications Group of Shandong University, Jinan, China, in 2010.

A total of 106 subjects (61 male and 45 female, aged between 17 and 31 years) took part in the data collection, in which five biometric traits were collected for each subject: face, finger veins, gait, iris and fingerprints.

SDUMLA-HMT therefore includes five sub-databases: face, finger veins, gait, iris and fingerprints. In all five sub-databases, traits with the same identity label were captured from the same subject. Two sub-databases are used in these experiments: fingerprints and finger veins.


B. Scenarios

The experiments fuse two biometric modalities, fingerprint and finger veins, captured from two chosen fingers of each hand: the index and middle fingers of both the right and left hands. Two modalities of four fingers are therefore processed for each subject, i.e., a total of 2×4 = 8 biometric traits.

The experiments use only the fingerprint data captured with the AES2501 sensor, together with the finger vein data of the SDUMLA-HMT database.

Six samples of each biometric trait were captured for each of the 106 subjects in the database; they are divided into two subsets, three for training and three for testing. Thus, two matrices of size n × 318 (3 × 106) are obtained, where n is the number of fused features extracted from one or more fingers of each subject.

The total number of processed images is 8 × 6 × 106 = 5088, including 2544 fingerprint images and 2544 finger vein images.
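The image and comparison counts of this protocol follow from simple products and can be checked as below. The sketch assumes, as the formulas in the text suggest, that each of the 3 test samples of a subject is compared once against its own class (a client test) and once against each of the 105 other subjects (impostor tests); the function name is hypothetical.

```python
def protocol_counts(subjects=106, samples=6, train=3, modalities=2, fingers=4):
    """Image and comparison counts of the SDUMLA-HMT protocol used in the experiments."""
    test = samples - train
    traits = modalities * fingers                           # 2 x 4 = 8 traits per subject
    return {
        "images": traits * samples * subjects,              # 8 x 6 x 106 = 5088
        "images_per_modality": fingers * samples * subjects,  # 2544
        "train_columns": train * subjects,                  # columns of the n x 318 matrices
        "genuine_tests": test * subjects,                   # 106 x 3 = 318 client tests
        "impostor_tests": (subjects - 1) * subjects * test,  # 105 x 106 x 3 = 33390
    }


counts = protocol_counts()
```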

Performance is evaluated over 106 × 3 = 318 client (genuine) tests and 105 × 106 × 3 = 33,390 impostor tests. This large number of tests makes the experimental results more reliable. Five scenarios are evaluated:

Scenario 1: a uni-modal biometric approach is applied first, based on the recognition of the fingerprint or the veins of the index or middle finger of the right or left hand of each subject (the size of each template is n).

Scenario 2: the first fusion stage fuses the features of the two modalities (fingerprint and finger veins) of each of the four fingers: the left index, left middle, right index or right middle finger (the size of each template is 2n).

Scenario 3: the biometric modalities are fused at the feature level: the extracted feature vectors are concatenated into one single vector grouping the features of both modalities of all fingers, which is used to check the authenticity of the questioned subject (the size of each template is 4×2×n = 8n).

Scenario 4: the second fusion technique applied in this approach is score-level fusion: each modality is processed separately, and the resulting scores are combined into a final score used to accept or reject the questioned subject (the size of each template is n).

Scenario 5: the proposed fusion is performed at this stage. This new, so-called 'hybrid', approach fuses both modalities of the four fingers at the feature level and at the score level at the same time (the size of each template is 2n). The features extracted from the fingerprint and the veins of the same finger are combined into one vector, which gives four feature vectors per subject, representing the right index, right middle, left index and left middle fingers. The comparison is then performed separately for each finger, yielding four scores, which are finally combined into a single score used to make the decision.
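The hybrid scheme of scenario 5 can be sketched end to end: feature fusion by concatenation within each finger, one comparison per finger, then score fusion over the fingers that are available. This is an illustration under explicit assumptions, not the authors' code: Euclidean distance stands in for the distance measures of Section III.D, the mean is used as the score-combination rule, and the finger labels and function names are hypothetical.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hybrid_distance(probe, template):
    """probe/template: {finger: (fingerprint_features, vein_features)}.

    Feature level: concatenate both modalities of a finger (2n values).
    Score level: average the per-finger distances over the fingers present in both.
    """
    scores = []
    for finger, (fp, fv) in probe.items():
        if finger in template:  # score level: only fingers available on both sides
            t_fp, t_fv = template[finger]
            # feature level: one 2n-long code per finger
            scores.append(euclidean(list(fp) + list(fv), list(t_fp) + list(t_fv)))
    if not scores:
        raise ValueError("probe and template share no finger")
    return sum(scores) / len(scores)


def identify(probe, gallery):
    """Return the enrolled identity whose template is closest to the probe."""
    return min(gallery, key=lambda identity: hybrid_distance(probe, gallery[identity]))


gallery = {
    "subject_A": {"left_index": ([0.0, 0.0], [0.0, 0.0]), "right_index": ([1.0, 1.0], [1.0, 1.0])},
    "subject_B": {"left_index": ([5.0, 5.0], [5.0, 5.0]), "right_index": ([6.0, 6.0], [6.0, 6.0])},
}
# a probe with a single available finger can still be identified
probe = {"left_index": ([0.1, 0.0], [0.0, 0.0])}
best = identify(probe, gallery)  # "subject_A"
```

Because the score fusion only iterates over fingers present in both the probe and the template, a missing finger shrinks the stored and compared data (2n per finger) instead of breaking the comparison.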


C. Results

The results obtained are summarized in Tables 2, 3 and 4, whose columns report metrics used to evaluate the performance of the biometric recognition approaches: recognition rate, equal error rate, and verification rate at 1%, 0.1% and 0.01% false acceptance rate.

The results are presented in the order of the scenarios.
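The verification metrics reported in the tables can be computed from lists of genuine and impostor scores. The sketch below assumes similarity scores (higher means more likely genuine), sweeps thresholds over the observed scores, and takes the EER at the threshold where FAR and FRR are closest; the function names are hypothetical and this is only one common way to estimate these quantities.

```python
def far_frr(genuine, impostor, t):
    """Accept when score >= t. FAR: share of impostors accepted; FRR: share of genuine rejected."""
    far = sum(s >= t for s in impostor) / len(impostor)
    frr = sum(s < t for s in genuine) / len(genuine)
    return far, frr


def candidate_thresholds(genuine, impostor):
    # every observed score, plus +inf so that "reject everyone" is also considered
    return sorted(set(genuine) | set(impostor)) + [float("inf")]


def equal_error_rate(genuine, impostor):
    """Approximate EER: mean of FAR and FRR at the threshold where they are closest."""
    _, eer = min((abs(far - frr), (far + frr) / 2)
                 for far, frr in (far_frr(genuine, impostor, t)
                                  for t in candidate_thresholds(genuine, impostor)))
    return eer


def verification_rate_at_far(genuine, impostor, target_far):
    """Best verification rate (1 - FRR) over thresholds whose FAR <= target_far."""
    return max(1 - frr
               for far, frr in (far_frr(genuine, impostor, t)
                                for t in candidate_thresholds(genuine, impostor))
               if far <= target_far)


genuine, impostor = [0.6, 0.8], [0.4, 0.7]
eer = equal_error_rate(genuine, impostor)  # 0.5
```

With the 318 genuine and 33,390 impostor scores of this protocol, a FAR of 0.01% corresponds to about three accepted impostor comparisons, so the lowest operating points are estimated from very few errors.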


D. Discussion

The experiments begin with a uni-modal biometric system based on the recognition of the fingerprints and veins of the index and middle fingers of both hands. As shown in Table 2, finger vein recognition generally performs better than fingerprint recognition (in particular, its equal error rates are lower for all four fingers), which makes this modality a very interesting research area in the biometric field.

The first fusion step is reported in the second scenario, where both modalities (fingerprint and veins) are combined at the feature level for each finger. At this stage, recognition results improve compared with those of the uni-modal approach (Table 3).

Scenarios 3 and 4 give informative results: in the proposed algorithm, fusion at the feature level outperforms fusion at the score level (Table 4).

The fusion principle is taken further in the fourth scenario, which combines the two modalities of the four fingers studied at the score level. The recognition rate of this configuration is considerably improved compared with the other recognition variants seen previously, as are the other evaluation parameters, namely the equal error rate and the verification rates.

Table 2. Results of scenario 1 (the size of each template is n) [10]

| Biometric trait | Recognition Rate (%) | Equal Error Rate (%) | Verification Rate at 1% False Acceptance Rate (%) | Verification Rate at 0.1% False Acceptance Rate (%) | Verification Rate at 0.01% False Acceptance Rate (%) |
|---|---|---|---|---|---|
| Left index fingerprint | 36 | 19 | 44 | 23 | 12 |
| Left middle fingerprint | 30 | 28 | 34 | 20 | 14 |
| Right index fingerprint | 45 | 21 | 47 | 39 | 24 |
| Right middle fingerprint | 26 | 26 | 30 | 14 | 6 |
| Left index finger veins | 68 | 10 | 76 | 59 | 49 |
| Left middle finger veins | 29 | 27 | 31 | 22 | 19 |
| Right index finger veins | 43 | 16 | 56 | 22 | 20 |
| Right middle finger veins | 59 | 12 | 69 | 52 | 44 |

Table 3. Results of scenario 2 (the size of each template is 2n) [10]

| Finger | Recognition Rate (%) | Equal Error Rate (%) | Verification Rate at 1% False Acceptance Rate (%) | Verification Rate at 0.1% False Acceptance Rate (%) | Verification Rate at 0.01% False Acceptance Rate (%) |
|---|---|---|---|---|---|
| Left index | 96 | 1.26 | 98 | 96 | 94 |
| Left middle | 94 | 2.20 | 96 | 92 | 83 |
| Right index | 98 | 0.63 | 99 | 98 | 94 |
| Right middle | 93 | 1.57 | 98 | 94 | 88 |

Table 4. Results of scenarios 3 (the size of each template is 8n), 4 (the size of each template is n) and 5 (the size of each template is 2n) [10]

| Fusion strategy | Recognition Rate (%) | Equal Error Rate (%) | Verification Rate at 1% False Acceptance Rate (%) | Verification Rate at 0.1% False Acceptance Rate (%) | Verification Rate at 0.01% False Acceptance Rate (%) |
|---|---|---|---|---|---|
| Feature-level fusion (scenario 3) | 100 | 0 | 100 | 100 | 100 |
| Score-level fusion (scenario 4) | 96.54 | 1.82 | 98 | 96 | 91 |
| Hybrid (scenario 5) | 100 | 0 | 100 | 100 | 100 |

Nevertheless, although feature-level fusion yields good recognition performance whatever methods are used in the authentication algorithm, it requires all eight biometric traits; if one of them is missing (for example, because a subject has lost one or more fingers), a complex procedure is needed to handle the situation. On the other hand, since score-level fusion compares each biometric modality separately and then combines the scores to make a decision, the authentication process only breaks down if the questioned subject has lost all of their fingers.

To address this, a hybrid approach is proposed (scenario 5) that combines the advantages of both fusion modes, at the feature level and at the score level. The final result is remarkable (recognition and verification rates of 100% and an equal error rate of 0%), and an authentication decision can be made even with a single finger.

The fusion strategy used in this algorithm makes the biometric system highly secure, because spoofing all of these traits at the same time is extremely difficult, if not impossible.

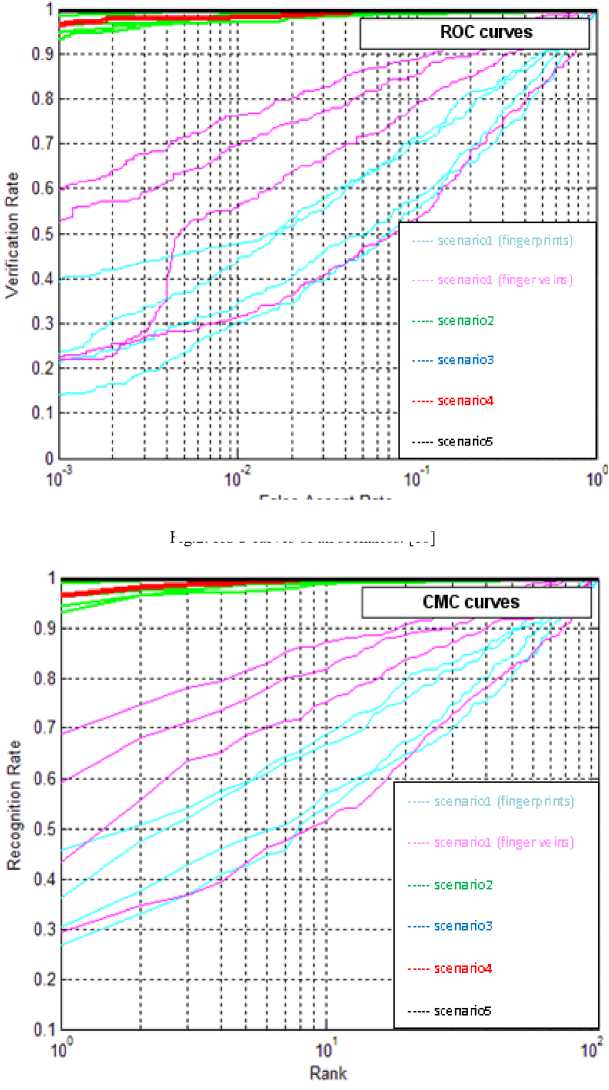

All the results discussed in this section are summarized in two types of curves: ROC (Receiver Operating Characteristic) curves and CMC (Cumulative Match Characteristic) curves.

Fig.2 presents the ROC curves of the scenarios described above; these curves give the verification rate as a function of the false acceptance rate for each scenario.

As the ROC curve of the proposed fusion technique (scenario 5) shows, the verification rate is 100% even at the lowest FAR level of 0.001. In other words, on this test set the technique correctly verified every genuine identity claim while keeping false acceptances at or below this level.

See also Fig.3, which presents the CMC curves of the five scenarios described above. The CMC (cumulative match) curve indicates the rank at which a recognition rate of 100% is reached: for example, if the recognition rate reaches 100% at rank ten, the legitimate subject is always among the top ten candidates. This curve is considered very useful for public services.
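A CMC curve can be computed from a probe-by-gallery similarity matrix by recording, for each probe, the rank at which its true identity appears. In this sketch (hypothetical names; higher similarity is assumed to mean a better match, and each identity is assumed to appear once in the gallery), the first value of the curve is the rank-1 recognition rate.

```python
def cmc_curve(similarity, probe_ids, gallery_ids, max_rank=None):
    """Fraction of probes whose true identity appears within the top-k gallery matches.

    similarity[p][g] is the score between probe p and gallery entry g (higher = better).
    """
    ranks = []
    for row, true_id in zip(similarity, probe_ids):
        order = sorted(range(len(gallery_ids)), key=lambda g: row[g], reverse=True)
        ranked_ids = [gallery_ids[g] for g in order]
        ranks.append(ranked_ids.index(true_id) + 1)  # 1-based rank of the correct identity
    max_rank = max_rank or len(gallery_ids)
    return [sum(r <= k for r in ranks) / len(ranks) for k in range(1, max_rank + 1)]


similarity = [[0.9, 0.1, 0.3],
              [0.2, 0.4, 0.8],
              [0.5, 0.6, 0.7]]
curve = cmc_curve(similarity, ["a", "b", "c"], ["a", "b", "c"])  # [2/3, 1.0, 1.0]
```

A curve that equals 1.0 from the first rank, as reported for scenario 5, means every probe's best match was its own identity.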

The curve representing the results of the proposed fusion approach (scenario 5) starts at a 100% recognition rate from the first rank, which means that every subject is identified at the first attempt; this shows that the new technique improves the performance and accuracy of biometric authentication systems.

Fig.2. ROC curves of all scenarios (x-axis: False Accept Rate). [10]

Fig.3. CMC curves of all scenarios. [10]

This section presented the experimental phase, in which both uni-modal and multimodal configurations were evaluated in several parts.

Multimodal fusion was developed at both the feature level and the score level. The limits of these two levels of fusion were discussed, which led to a new hybrid fusion approach with highly satisfactory performance levels.

In terms of computational complexity, the feature-level and score-level variants of the proposed approach show some limits, because the global feature-extraction mode (Gabor filtering) produces a larger number of features than comparable methods in the literature. Despite this limit, assume that the number of feature points per trait is n. Since the amount of required resources varies with the input, the computational complexity is generally expressed as a function n → f(n). In scenario 3, which achieved the best performance (100% RR and an EER of 0), f(n) is the worst-case complexity, that is, the maximum amount of resources needed over all inputs of size n: a template of size 8n. In scenario 4, this maximum was n, and the obtained performance was 96.54% RR with an EER of 1.82; this system is therefore less complex but performs worse. As expected, the hybrid fusion approach of scenario 5 corresponds to the average-case complexity, that is, the average amount of resources over all inputs of size k × 2n with k ∈ {1, ..., 4}, since the size of the feature template ranges from 2n (one finger) to 8n (four fingers).
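The template-size arithmetic behind these three cases can be made concrete with the sketch below. It is a simplified model read from the text, not a measurement: it assumes n features per trait, that the 8n template of scenario 3 comes from four fingers with two modalities each, and, for the average case of scenario 5, that having 1, 2, 3 or 4 usable fingers is equally likely.

```python
def template_size(scenario, n, fingers=4):
    """Feature values compared per identification attempt, with n features per trait.
    Simplified model read from the text (assumption)."""
    if scenario == 3:  # feature-level fusion: 4 fingers x 2 modalities concatenated
        return 8 * n
    if scenario == 4:  # score-level fusion: each comparison uses one n-sized vector
        return n
    if scenario == 5:  # hybrid: one 2n fingerprint+vein vector per available finger
        if not 1 <= fingers <= 4:
            raise ValueError("the hybrid scheme uses between 1 and 4 fingers")
        return 2 * n * fingers
    raise ValueError(f"unknown scenario {scenario}")


def hybrid_average_size(n):
    """Average case for scenario 5, assuming 1 to 4 available fingers are equally likely."""
    sizes = [template_size(5, n, k) for k in range(1, 5)]
    return sum(sizes) / len(sizes)
```

Under the equal-likelihood assumption, the average hybrid template is (2n + 4n + 6n + 8n) / 4 = 5n, between the 2n minimum and the 8n maximum.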


V. Conclusion

In this work, a biometric recognition system based on the fusion of fingerprints and finger veins was developed. The approach covers all the necessary image-processing stages for the studied modalities, namely pre-processing, feature extraction, comparison, and the different fusion variants examined in this work: fusion at the feature level, fusion at the score level, and the proposed hybrid fusion technique, which combines both modes of fusion in the same algorithm. The results are presented as ROC and CMC curves, which make it possible to evaluate the performance of the biometric system through metrics such as the recognition rate, the equal error rate and the verification rate.
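Of these metrics, the equal error rate (EER) is the operating point where the false acceptance rate equals the false rejection rate. A simple approximation from genuine and impostor similarity scores is sketched below; it assumes higher scores mean more similar and returns the mean of FAR and FRR at the threshold where the two are closest. The paper does not specify how its EER was interpolated, so this is only one common approach.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER: pick the observed threshold where FAR and FRR are closest
    and return their mean (accept when score >= threshold)."""
    best_gap, best_eer = None, None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)
        frr = sum(s < t for s in genuine) / len(genuine)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2
    return best_eer
```

When genuine and impostor scores are perfectly separated, the function returns 0, which corresponds to the "0 as EER" reported for the best scenarios.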

In the first step, uni-modal techniques based on fingerprints or on finger veins were presented; the finger-vein trait yielded a considerably more efficient system than the fingerprint.

Nevertheless, uni-modal recognition shows clear limits in the authentication results, for example in terms of recognition rates, which range from 26% to 45% for fingerprints and from 29% to 68% for finger veins. This motivated a multimodal approach, which significantly increased performance, with recognition rates of up to 100%.

The various fusion techniques were then compared, in particular fusion at the feature level and fusion at the score level, which gave relatively close results. Feature-level fusion was more powerful (with a template dimension of 8n) than score-level fusion, but the latter is more flexible in handling cases of missing data (non-universality) for a subject. The new technique was therefore designed to take advantage of both modes of fusion: on the one hand, the flexibility of the hybrid fusion algorithm, which can authenticate a subject with a single finger using less data (a template dimension of 2n instead of 8n); on the other hand, excellent recognition results (100%).

Despite the very good results obtained with the proposed recognition approach, the training matrices remain fairly large, because the pre-processing phase generates relatively large biometric feature codes as a result of the global processing mode.
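Why a global Gabor representation produces large codes can be seen in the sketch below: every filter in the bank is applied to the whole image, and the sampled response magnitudes of all filters are concatenated, so the feature length is (number of scales) × (number of orientations) × (number of sampled pixels). This is a minimal illustration under assumed settings; the filter-bank parameters, the sampling step and the function names are hypothetical and are not the settings of the toolbox used in the paper.

```python
import math


def gabor_kernel(sigma, theta, wavelength, gamma=0.5):
    """Real (even) part of a 2-D Gabor kernel, truncated at about 3 sigma."""
    half = int(math.ceil(3 * sigma))
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the carrier oscillates along orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / wavelength))
        kernel.append(row)
    return kernel


def filter_same(image, kernel):
    """Zero-padded 'same'-size filtering. The even Gabor kernel is symmetric under
    180-degree rotation, so correlation and convolution coincide here."""
    h, w, half = len(image), len(image[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(-half, half + 1):
                for v in range(-half, half + 1):
                    if 0 <= i + u < h and 0 <= j + v < w:
                        acc += image[i + u][j + v] * kernel[u + half][v + half]
            out[i][j] = acc
    return out


def global_gabor_features(image, scales, orientations, step):
    """Filter the whole image with every (scale, orientation) pair, keep the response
    magnitude on a grid with spacing `step`, and concatenate everything."""
    features = []
    for sigma, wavelength in scales:
        for k in range(orientations):
            theta = k * math.pi / orientations
            response = filter_same(image, gabor_kernel(sigma, theta, wavelength))
            for i in range(0, len(image), step):
                for j in range(0, len(image[0]), step):
                    features.append(abs(response[i][j]))
    return features
```

For an 8 × 8 image with two scales, four orientations and a sampling step of 2, the code already has 2 × 4 × 16 = 128 values; on full-size fingerprint and vein images the same product grows quickly, which is the motivation for the local processing techniques mentioned as future work.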

In future work, local processing techniques will be applied in the pre-processing and feature-extraction phases of the studied biometric traits in order to reduce the size of their representative codes, so that only distinctive features are retained.

Acknowledgment

The authors wish to thank Vitomir Štruc and Nikola Pavešić. This work made use of the PhD (Pretty helpful Development) functions for face recognition, a MATLAB toolbox.

The authors would also like to thank the MLA Lab of Shandong University for the SDUMLA-HMT database.

References

- L. You and T. Wang, “A novel fuzzy vault scheme based on fingerprint and finger vein feature fusion,” Soft Computing, pp. 1-9, 2018.

- W. Yang, S. Wang, J. Hu, G. Zheng and C. Valli, “A Fingerprint and Finger-vein Based Cancelable Multi-biometric System,” Pattern Recognition, 2018.

- J. Yang and X. Zhang, “Feature-level fusion of fingerprint and finger-vein for personal identification,” Pattern Recognition Letters, pp. 623–628, 2012.

- H. P. Young et al., “A multimodal biometric recognition of touched fingerprint and finger-vein,” in International Conference on Multimedia and Signal Processing (CMSP), Guilin, Guangxi, China, 2011.

- G. Arnaud and C. Guillaume, “Vision Par Ordinateur – Filtres de Gabor.”

- V. Štruc and N. Pavešić, “Gabor-based kernel partial-least-squares discrimination features for face recognition,” Informatica, vol. 20, no. 1, pp. 115-138, 2009.

- V. Štruc and N. Pavešić, “The complete Gabor-Fisher classifier for robust face recognition,” EURASIP Journal on Advances in Signal Processing, no. 1, 2010.

- A. K. Jain, A. Ross, and S. Prabhakar, “An introduction to biometric recognition,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 14, no. 1, pp. 4-20, January 2004.

- Y. Yin, L. Liu, and X. Sun, “SDUMLA-HMT: A Multimodal Biometric Database,” in The 6th Chinese Conference on Biometric Recognition (CCBR 2011), LNCS 7098, Beijing, China, 2011, pp. 260-268.

- N. Khelifi, Un Système Biométrique Basé sur la Fusion des Empreintes Digitales et des Veines des Doigts, Tahri Mohammed University, Béchar, 2015.