Shape Classification Based on Normalized Distance and Angle Histograms Using PNN

Автор: Atefeh Goshvarpour, Hossein Ebrahimnezhad, Ateke Goshvarpour

Журнал: International Journal of Information Technology and Computer Science(IJITCS) @ijitcs

Статья в выпуске: 9 Vol. 5, 2013 года.

Бесплатный доступ

This study presents an attempt to develop a reliable computerized algorithm, which could classify images into predetermined classes. For this purpose, the histogram of the normalized distance between each two points of the image (algorithm I) and the histogram of normalized distances between three points and the normalized angle of the image edge points (algorithm II) are analyzed. The probabilistic neural network (PNN) is implemented to do shape classification. Our proposed approach is tested on ten classes of MPEG-7 image database. It has been shown that feature extraction based on the distance histogram (algorithm I and algorithm II) is efficient due to its potential to preserve interclass and intra-class variation. In addition, these algorithms ensur invariance to geometric transformations (e.g. translation, rotation and scaling). The best classification accuracy is achieved by eight classes with the total accuracy of 90% and 92.5% for algorithm I and algorithm II, respectively. The reported experiment reveal that the proposed classification algorithm could be useful in the study of MPEG-7 shapes.

Shape Classification, Distance Histogram, Feature Extraction, MPEG-7 Shapes

Короткий адрес: https://sciup.org/15011965

IDR: 15011965

Текст научной статьи Shape Classification Based on Normalized Distance and Angle Histograms Using PNN

Published Online August 2013 in MECS

In recent years a lot of research has been performed on the image feature extraction based on the shape. Some of the effective shape descriptors are based on the histogram. The most advantage of using the histogram to present the features is that it results in to reduce the feature vector dimension and consequently reduce the computational cost in the classification process [1].

Histogram-based local descriptors (HBLD) are extensively applied in a variety of computer vision tasks such as shape matching [2-5], image retrieval [6] [7], and texture analysis [8].

In one study, Jain and Vailaya [9] proposed shape descriptor algorithm, which describes two features of the shape: local features are extracted by means of histograms of edge directions and global features are obtained by moment invariants. In their study, the strategy which is based on weight is applied to match features of the images. In another more recent work, Wei et al. [10] presented a hybrid approach to trademark image retrieval in which local features are obtained by computing the mean of curvature at each boundary point and mean and standard deviation of the distance between the centroid and each edge point in edge map. In the study of Singh and Pooja [11], it has shown that both local and global features have a significant impact on improving the performance of the image retrieval system. Scott and Nowak [12], had introduced and solved the cyclic order-preserving assignment problem (COPAP), which can be applied to find a cyclic orderpreserving matching between two point sets taken from shapes defined by a single close contour.

Accurate classification of image features has been also the object of much research. Ling and Jacobs [13] applied nearest neighbor for classification of the

MPEG-7 shape database by using inner-distance shape context. Earlier, Super [14] had examined the same classification technique by applying normalized squared distance. Sebastian et al. [15] applied curve edits distance features in their classification problem.

In this study the histogram of distances between shapes based on shape geodesics is examined. Totally, the following features were used:

-

1) Normalized two - point distance histogram (the distances between all points).

-

2) Normalized three - point distance histogram (the normalized sum of distances between two vectors and the normalized angle between them are used as a second feature).

After that, the proposed algorithms are applied to shape classification.

The remainder of this paper is organized as follows. Section II introduced the set of data applied in this study. In addition, the general principles of feature extraction and classification are presented and the details of the proposed method are provided. In Section III, the experimental results are demonstrated. Finally, the study is concluded in Section IV.

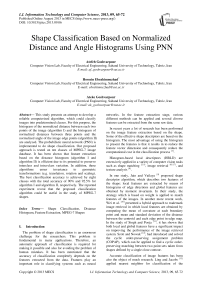

Fig. 1 represents the structure of the proposed algorithm. These steps are discussed in more detail in the following sections.

Fig. 1: The flow chart of proposed algorithm

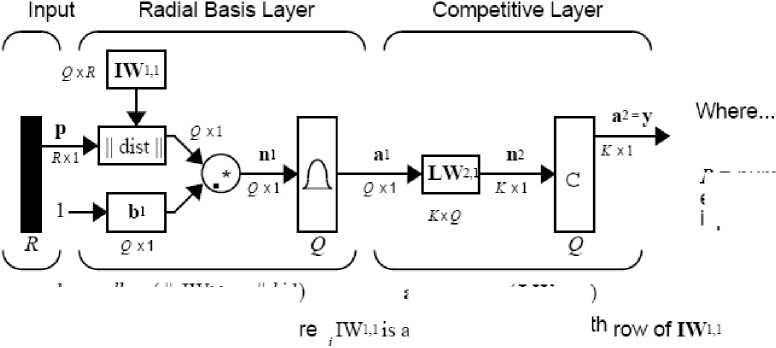

In this data set, each class contains 20 similar objects, usually (heavily) distorted versions of a single base shape. The whole data set therefore consists of 1400 shapes.

The proposed algorithm is tested on 10 selected symbols of the MPEG-7 database. Therefore, in the current study 200 shapes are applied.

-

2.2 Feature Extraction

-

2.2.1 Two-Point Distance Histograms (Algorithm I)

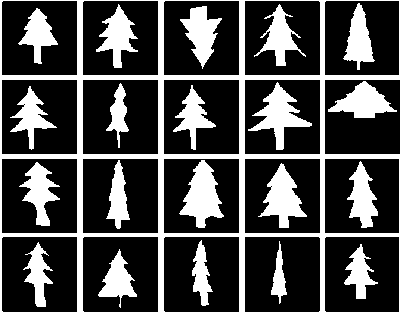

In this paper a boundary-based method is considered because an object’s shape is chiefly discriminated by the boundary [17]. The comparison between shapes is prepared using shape geodesics. Then the proposed measures are applied to shape classification.

The invariant distance histogram identifies and classifies objects in digitized images by distinguishing rigidly in-equivalent finite point sets and is produced by the mutual distances between distinct pairs of such. This distance is calculated by (1):

d = V( x 2 - x 1 ) 2 + ( y 2 - y 1 ) 2

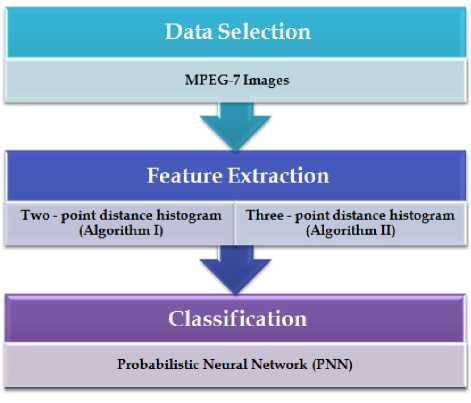

The discretized outlines of objects in digitized images are considered. This process is shown in Fig. 2.

Fig. 2: The process of extracting the outlines of objects in digitized images (apple-1)

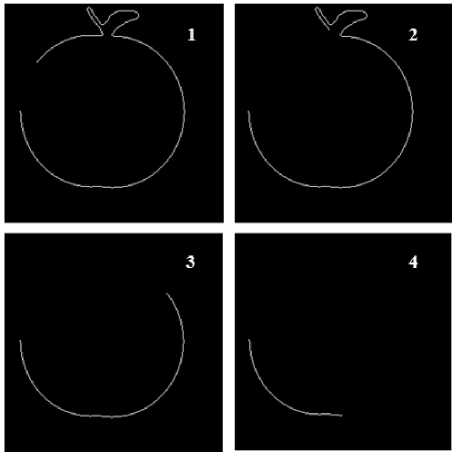

Fig. 3 demonstrated how distances between all points are calculated.

The proportion of points in successive bins of a certain binwidth up to the maximum distance is listed, and a plot of the histogram function with the distances on the y-axis and the corresponding normalized bins on the x-axis is obtained.

-

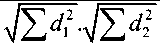

2.2.2 Three-Point Distance Histograms (Algorithm II)

The three point distance histogram function, which takes the mutual distances between three points, is introduced as a more accurate method compared to the two-point distance histogram function.

In this algorithm, one point is fixed (x 1 , y 1 ), leaving the other two points (x 2 , y 2 ) and (x 3 , y 3 ), and the distances are calculated by (2) and (3).

Fig. 3: Algorithm I: Normalized distance between each two points of the image d1 = (Кx2 -x1)2 +(y2 -y1)2

d 2 = V( x3 - x1 ) + ( y 3 - y 1 ) 2

The desired distance is obtained by the sum of these two vectors (d1 and d ).

d = di + d2

Fig. 4 demonstrated the example of how the process is done.

Then, the 3-D histogram of two variables is calculated: the normalized Euclidean distances (d 1 and d2) and the normalized deviation angle ( 9 ) of the image edge points which construct d 1 and d 2 (Fig. 4). The deviation angle ( 9 ) is defined as:

a =

(d (1). d2 (1) + d (2). d2 (2))

0 = cos 1 a

-

2.3 Classification

-

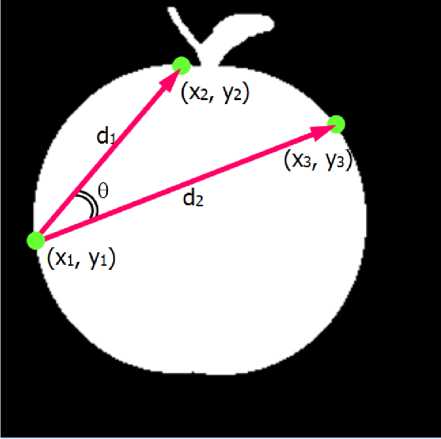

2.3.1 Probabilistic neural network (PNN)

In order to classify the features, probabilistic neural network (PNN) is implemented.

Fig. 4: Algorithm II: This algorithm is based on distance (d=d 1 +d 2 ) and angle ( 9 )

Probabilistic neural networks can be used for classification problems. When an input is presented, the first layer computes distances from the input vector to the training input vectors, and produces a vector whose elements indicate how close the input is to a training input.

The second layer sums these contributions for each class of inputs to produce as its net output a vector of probabilities.

Finally, the competing transfer function of the output of the second layer picks the maximum of these probabilities, and produces a "1" for that class and a "0" for the other classes.

The architecture of probabilistic neural network is shown in Fig. 5.

In this network, it is assumed that there are Q input vector/target vector pairs. Each target vector has K elements. One of these elements is "1" and the rest is "0". Therefore, each input vector is associated with one of K classes.

The first-layer input weights, IW1,1, are set to the transpose of the matrix formed from the Q training pairs, P' . When an input is presented the ||dist|| box produces a vector whose elements indicate how close the input is to the vectors of the training set. These elements are multiplied, element by element, by the bias and sent the radbas transfer function. An input vector close to a training vector is represented by a number close to "1" in the output vector a1 . If an input is close to several training vectors of a single class, it is represented by several elements of a1 that are close to "1" [18].

The second-layer weights, LW1,2, are set to the matrix T of target vectors. Each vector has a "1" only in the row associated with that particular class of input, and

"0"’s elsewhere. The multiplication Ta1 sums the elements of a1 due to each of the K input classes.

a2 = compete LWii ai) 1 vector made of the / t

R = number of elements in input vector

a^vadbas^ ,IWM - p | bil ) al is Mh element of ai wher

Q = number of input/target pairs = number of neurons in layer 1

К = number of classes of input data = number of neurons in layer 2

Fig. 5: Probabilistic Neural Network architecture [18]

Finally, the second-layer transfer function, compete, produces a "1" corresponding to the largest element of n2 , and "0"’s elsewhere. Thus, the network has classified the input vector into a specific one of K classes because that class had the maximum probability of being correct [18].

In the training process of PNN, the determination of the value of the smoothing parameter (spread) is a fundamental step. In this network an optimum spread is derived by trial and error. If the spread is near zero, the network acts as a nearest neighbor classifier. As spread becomes larger, the designed network takes into account several nearby design vectors.

Probabilistic neural networks are a kind of radial basis network suitable for classification problems. The structure of this network is straightforward and does not depend on the training process. A PNN is assured to converge to a Bayesian classifier as long as it is given enough training data. In addition, it has shown that the generalization of this network is good.

Although the PNN has many advantages, it suffers from one major disadvantage. Since this network uses more computation, its function approximation or classification is slower to operate in comparison with other kinds of networks.

-

III. Results

In this study, 10 symbols (classes) of the MPEG-7 database are examined. As mentioned before, each class consists of 20 similar objects, usually (heavily) distorted versions of a single base shape. Therefore, the whole data we used consists of 200 shapes. Fig. 6 depicts one set of data used in this study.

Fig. 6: One set of data from MPEG-7 applied in this study

Normalized Euclidean distances (algorithm I and algorithm II) for each pair of vertices, the distance from one point on the shape edge to the other points are used as features.

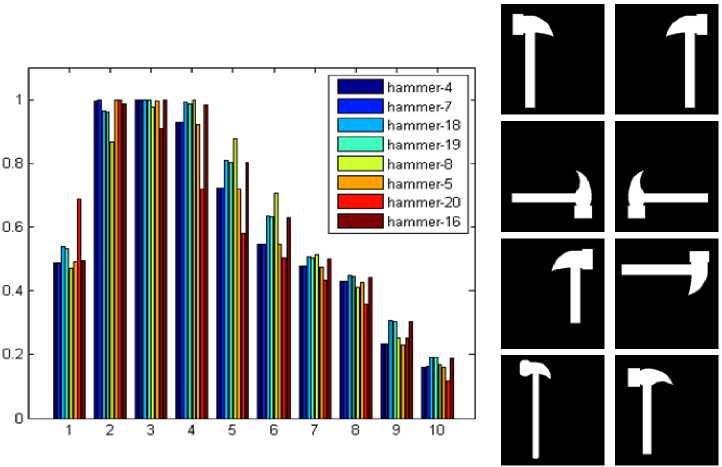

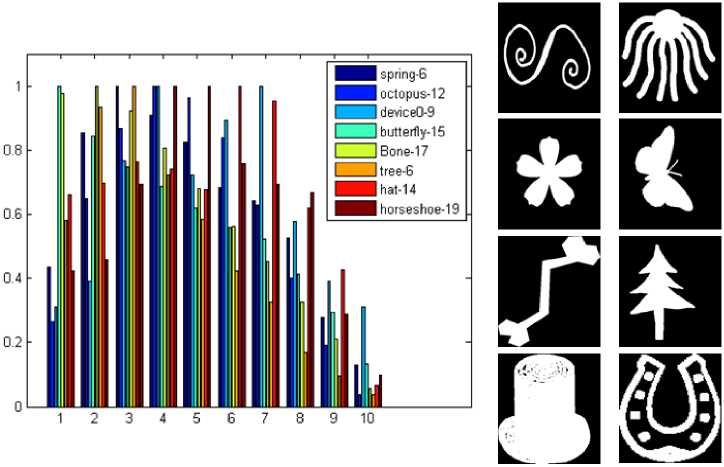

The effectiveness of normalized distance histograms provides small variations in histograms for intra-class and large variations for interclass is demonstrated in Fig. 7 and Fig. 8, respectively.

Normalized distance histograms of eight images of ‘‘hammer’’ class are presented in Fig. 7, which demonstrates minor variation in features extracted from instances of similar class, whereas Fig. 8 depicts a large variation among the distance histograms of the edge images of different classes (‘‘spring’’, ‘‘octopus’’, ‘‘device0’’, ‘‘butterfly’’, ‘‘bone’’, ‘‘tree’’, ‘‘hat’’ and ‘‘horseshoe’’).

Therefore, our feature extraction based on distance histograms is efficient due to its potential to preserve interclass and intra-class variation. In addition, from Fig.

7 it is obvious that this method ensuring invariance to scaling).

geometric transformations (e.g. translation, rotation and

Fig. 7: Normalized distance histograms of eight different images of the similar class ‘‘hammer’’ (‘‘hammer-4’’, ‘‘hammer-7’’, ‘‘hammer-18’’, ‘‘hammer-19’’, ‘‘hammer-8’’, ‘‘hammer-5’’, ‘‘hammer-20’’ and ‘‘hammer-16’’). Small variations in histograms of images of similar class is apparent

Fig. 8: Normalized distance histograms of eight images of different classes: ‘‘spring’’, ‘‘octopus’’, ‘‘device0’’, ‘‘butterfly’’, ‘‘bone’’, ‘‘tree’’, ‘‘hat’’ and ‘‘horseshoe’’. Significant variation in histograms of images of different classes is apparent

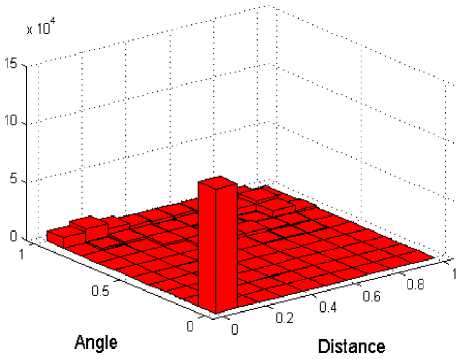

After calculating the normalized distance histograms and normalized angle for all images in 10 classes, 3-D histograms of proposed algorithm based on normalized distance (d=d1+d2) and normalized angle ( θ ) are evaluated. Fig. 9 depicts the 3-D histogram of one image: ‘‘apple-1’’.

In this study, the PNN was applied for classification of the MPEG-7 features. It was implemented by using the MATLAB software package (MATLAB version 7.0 with neural networks toolbox).

The extracted features were randomly divided into two sets: 70% of the data was used for training and the system was tested on the remaining 30%.

Different experiments were performed during the implementation of this classifier and the spread was determined by taking into consideration the classification accuracies for each algorithm (algorithm I and algorithm II).

The best classification accuracy is achieved by spread = 0.05 and spread = 0.001 for algorithm I and algorithm II, respectively. Note that the total classification accuracy is defined as the number of correct decisions/total number of cases.

image segments based on the measured features. Researchers have tried to highlight different image characteristics within various domains and classify the image segments based on the measured features.

The accuracy of the PNN classifier with spread = 0.05 for algorithm I is presented in Table I. The classification results are demonstrated for 5, 8 and 10 classes of MPEG-7 shapes. As one can see from Table I, the best classification accuracy for algorithm I is accomplished by eight classes with the accuracy of 90%.

The classification performances of the PNN classifier applying algorithm II are also examined for 5, 8 and 10 classes of MPEG-7 shapes. The accuracy results of the PNN classifier with spread = 0.001 for algorithm II is reported in Table II.

According to Table II, the best classification accuracy for algorithm II is obtained by eight classes with the accuracy of 92.5%.

|

Table I: The accuracy of PNN classification (spread = 0.05) using algorithm I |

||

|

Train (%) |

Test (%) |

|

|

5 classes |

100 |

88 |

|

8 classes |

100 |

90 |

|

10 classes |

99.33 |

78 |

|

Table II: The accuracy of PNN classification (spread = 0.001) using algorithm II |

||

|

Train (%) |

Test (%) |

|

|

5 classes |

100 |

92 |

|

8 classes |

100 |

92.5 |

|

10 classes |

100 |

86 |

Fig. 9: 3-D histogram of proposed algorithm based on normalized distance and normalized angle for one image: ‘‘apple-1’’

From the classification results presented in Table I and Table II (classification accuracies), one can see that the PNN trained on the second extracted feature vector (algorithm II) produces considerably higher performance than that of the algorithm I.

-

IV. Discussion

MPEG-7 is a silhouette shaped data set containing 70 shape images with varying intra-class separation and large interclass similarity among shapes, which created a challenge for both feature extraction and the classification technique. There are 20 instances of each shape, and variability among them is quite large for some of the members. Our proposed algorithm was tested on 10 selected symbols of the MPEG-7 database, a set of data is shown in Fig. 6.

Researchers have tried to highlight different image characteristics within various domains and classify the

A recurring subject in pattern recognition and classification applications is the separation of data (images, videos, objects, etc.) into homogeneous classes.

This topic has been extensively studied and different approaches and algorithms have been proposed and applied to several problems. Generally, an important step in these problems is the representation of a given image by a vector of features [19]. Although different, the majority of approaches agree that a good classification model should be sensitive to the extracted features [20]. Furthermore, preprocessing and feature extraction methods are important in developing automated classification systems since these stages could change the classification accuracy.

The distance histogram, formed by the mutual distances between all pairs of points on the discretized boundary of a shape, represents a promising method as it uniquely determines the respective point set with a rather small subset of exceptions [21]. Histograms are used in a vast range of image processing algorithms such as in shape representation and classification [22], and image enhancement [23].

Shape analysis from geodesics in shape space has emerged as a powerful tool to develop geometrically invariant shape comparison methods [24]. It has shown that using shape geodesics, one can state the contour matching as a variational non-rigid formulation ensuring a symmetric treatment of curves [17]. The resulting similarity measure is invariant to translation, rotation and scaling independently on constraints or landmarks, but constraints can be added to the approach formulation when available [17].

This study presented an attempt to develop a reliable computerized algorithm, which could classify shapes into predetermined classes. In other words, the purpose of the research was to investigate the accuracy of PNN network trained on the extracted features for classification of MPEG-7 data. The inputs of this network were obtained by the measurement of the histogram of normalized distances between two points in images (algorithm I), and the histogram of normalized distances between three points and the normalized angle of the image edge points (algorithm II).

The results of the studies related to the MPEG-7 shape classification indicated that all of the methods used for feature extraction have different performances and no unique robust feature has been found [13] [14] [17]. Therefore, the MPEG-7 shape classification was considered as a typical problem of classification with diverse features.

The present study dealt with up to ten-class problem (5, 8 and 10 classes), which is the assignment of shapes to one of ten predetermined classes.

The accuracy of the PNN is evaluated employing algorithm I on the MPEG-7 shapes and the best total classification accuracy of that model is achieved by eight classes with the total accuracy of 90%. The best classification accuracy for algorithm II is obtained by eight classes with the accuracy of 92.5% (Table II).

The results of current study related to the MPEG-7 data classification indicated that each feature has different performances. The accuracy rates of the PNN employing the second algorithm for this application (and for the same number of classes) is found to be higher (92.5%) than that of the PNN classifier employing the first algorithm presented in this study. This may be attributed to several factors including the estimation of the network parameters and the nature of the extracted features.

Previously, Mokhtarian et al. [25] applied curvature scale space on MPEG-7 database. The proposed approach only achieved a correct retrieval rate of 75.44%. By using shape context method on the same database, Belongie et al. [26] reported an improvement in term of correct classification rate of about 1.1%. In 2003, the retrieval rate is improved by applying optimized CSS method [27]. Ling and Jacobs [13] described a method for shape retrieval from MPEG-7 database using inner-distance shape context in combination with a nearest neighbor classification. The total retrieval accuracy of their method was 85.4%. The shape tree approach [28] reached an average retrieval rate of 87.7%.

Therefore, the accuracy rate of the PNN with the proposed algorithms in the current study are significantly higher than that of the other approaches [13] [25- 28]. These results are summarized in Table III.

Table III: The recognition accuracy of some methods on the MPEG-7 shape database

|

Study |

Method |

Accuracy (%) |

|

Mokhtarian et al. [25] |

Curvature scale space |

75.44 |

|

Belongie et al. [26] |

Shape context |

76.51 |

|

Mokhtarian & Bober [27] |

Optimized CSS |

81.12 |

|

Ling and Jacobs [13] |

Inner-distance shape context (IDSC) |

85.4 |

|

Felzenszwalb & Schwartz [28] |

Shape tree |

87.7 |

|

Current Study |

Normalized three - point distance histogram |

92.5 |

-

V. Conclusion

The classification results indicated that the PNN trained with the second algorithm had considerable success in discriminating the MPEG-7 shapes.

Further work can be performed for improving the classification accuracies by using different feature extraction methods and other ANN classifiers. In addition, the proposed algorithm for feature extraction and PNN approach can be extended to discrimination of the other shape database.

Список литературы Shape Classification Based on Normalized Distance and Angle Histograms Using PNN

- Ramezani M., Ebrahimnezhad H. 3D Object Categorization Based on Histogram of Distance and Normal Vector Angles on Surface Points [J]. IEEE. Machine Vision and Image Processing (MVIP), 2011.

- Belongie S., Malik J., and Puzicha J. Shape Matching and Object Recognition Using Shape Context [J]. IEEE Trans. on PAMI, 2002, 24(24): 509-522.

- Grauman K., and Darrell T. Fast Contour Matching Using Approximate Earth Mover’s Distance [J]. CVPR. I, 2004, 220-227.

- Thayananthan A., Stenger B., Torr P.H.S., and Cipolla R. Shape Context and Chamfer Matching in Cluttered Scenes [J]. CVPR. I, 2003, 1063-6919.

- Ling H., and Jacobs D.W. Using the Inner-Distance for Classification of Articulated Shapes [J]. CVPR. II, 2005, 719-726.

- Lowe D. Distinctive Image Features from Scale-Invariant Keypoints [J]. IJCV. 2004, 60(2): 91-110.

- Mikolajczyk K., and Schmid C.A. Performance Evaluation of Local Descriptors [J]. IEEE Trans. on PAMI, 2005, 27(10): 1615- 1630.

- Lazebnik S., Schmid C., and Ponce J.A. sparse texture representation using affine-invariant regions [J]. IEEE Trans. PAMI, 2005, 27(8): 1265-1278.

- Jain AK, Vailaya A. Shape-based retrieval: a case study with trademark image database [J]. Pattern Recognit, 1998, 31: 1369–90.

- Wei CH., Li Y., Chau W-Y., Li C-T. Trademark image retrieval using synthetic features for describing global shape and interior structure [J]. Pattern Recognit, 2008, 42: 386–94.

- Singh C., Pooja. Improving image retrieval using combined features of Hough transform and Zernike moments [J]. Optics and Lasers in Engineering, 2011, 49: 1384–1396.

- Scott C., Nowak R. Robust Contour Matching Via the Order-Preserving Assignment Problem [J]. IEEE Transactions on Image Processing, 2006, 15 (7): 1831-1838.

- Ling H., Jacobs D.W. Shape classification using the inner-distance [J]. IEEE Trans. Pattern Anal. Machine Intell, 2007, 29 (2): 286–299.

- Super B. Improving object recognition accuracy and speed through nonuniform sampling [C]. In: SPIE’03: Proceedings of the Society of Photo– Optical Instrumentation Engineers Conference, 2003, 5267: 228–239.

- Sebastian T.B., Klein P.N., Kimia B.B. On aligning curves [J]. IEEE Trans. Pattern Anal. Machine Intell, 2003, 25 (1): 116–125.

- ISO/IEC/JTC1/SC29/WG11: Core Experiment Results for Spatial Intensity Descriptor (CT4), MPEG document M5374, Maui, Dec. 1999.

- Nasreddine K., Benzinou A., Fablet R. Variational shape matching for shape classification and retrieval [J]. Pattern Recognition Letters, 2010, 3: 1650–1657.

- Demuth H., Beale M. Neural Network Toolbox. The MathWorks, Inc, 2000.

- Trunk G.V. A problem of dimensionality: A simple example [J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1979, 1(3): 306–307.

- Bouguila N., Almakadmeh K., Boutemedjet S. A finite mixture model for simultaneous high-dimensional clustering, localized feature selection and outlier rejection [J]. Expert Systems with Applications, 2012, 39: 6641–6656.

- Brinkman D., Olver P.J. Invariant histograms. Amer Math Monthly, 2011, 118: 2-24.

- Boyle R., Havlac V., and Sonka M. Image Processing: Analysis and Machine Vision, (Pacific Grove: Brooks/Cole) 1999.

- Sapiro G. Geometric Partial Differential Equations and Image Analysis, (Cambridge: Cambridge University Press) 2001.

- Younes L. Optimal matching between shapes via elastic deformations [J]. Image Vision Comput, 2000, 17 (5): 381–389.

- Mokhtarian F., Abbasi S., Kittler J. Efficient and robust retrieval by shape content through curvature scale space [J]. Proceedings of International Workshop on Image DataBases and Multimedia Search, 1996, 35–42.

- Belongie S., Malik J., Puzicha J. Shape matching and object recognition using shape contexts [J]. IEEE Trans. Pattern Anal. Machine Intell, 2002, 24 (4): 509–522.

- Mokhtarian F., Bober M. Curvature Scale Space Representation: Theory, Applications, and MPEG-7 Standardization. Kluwer Academic Publishers, Norwell, MA, USA, 2003.

- Felzenszwalb P.F., Schwartz J.D. Hierarchical matching of deformable shapes [C]. CVPR’07: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2007, 1–8.