Single and Multiple Hand Gesture Recognition Systems: A Comparative Analysis

Authors: Siddharth Rautaray, Manjusha Pandey

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Issue: No. 11, Vol. 6, 2014

Free access

With the evolution of higher computing speeds, efficient communication technologies and advanced display techniques, legacy HCI techniques have become obsolete and no longer support the accurate and fast flow of information in present day computing devices. Hence, user friendly interfaces for real time human computer interaction have to be designed and developed to make man machine interaction more intuitive. Vision based hand gesture recognition gives users the ability to interact with computers in more natural and intuitive ways. These gesture recognition systems generally consist of three main modules, namely hand segmentation, hand tracking and gesture recognition from hand features, designed using different image processing techniques and further integrated with different applications. The use of new interfaces based on hand gesture recognition for interacting with computing devices is increasing. This paper provides a comparative analysis of such real time vision based hand gesture recognition systems, which are based on interaction using single and multiple hand gestures. Single hand gesture recognition systems (SHGRS) are less complex to implement but are constrained in the number of distinct gestures, whereas multiple hand gesture recognition systems (MHGRS) offer a much larger gesture set through the permutations and combinations of gestures possible with multiple hands. A thorough comparative analysis has also been carried out on various other vital parameters of the recognition systems.

Real Time, Gesture Recognition, Human Computer Interaction, SHGRS, MHGRS

Short URL: https://sciup.org/15010627

IDR: 15010627

Text of the article: Single and Multiple Hand Gesture Recognition Systems: A Comparative Analysis

Published Online October 2014 in MECS

Since their first appearance, computers have become a key element of our society. Surfing the web, typing a letter, playing a video game, and storing and retrieving data are just a few examples of the use of computers. Owing to the constant decrease in the price of personal computers, they will influence our everyday life even more in the near future.

To use them efficiently, most computer applications require more and more interaction. For that reason, human-computer interaction (HCI) has been a lively field of research in recent years. Interaction was first based on punched cards and reserved for experts; it has since evolved to the graphical interface paradigm, in which the user directly manipulates graphic objects such as icons and windows using a pointing device. Even though the invention of the keyboard and mouse was great progress, there are still situations in which these devices can be seen as the dinosaurs of HCI. This is particularly the case for interaction with 3D objects: the 2 degrees of freedom (DOFs) of the mouse cannot properly emulate the 3 dimensions of space. Furthermore, such interfaces are often not intuitive to use. To achieve natural and immersive human-computer interaction, the human hand could be used as an interface device [1]. Hand gestures are a powerful human-to-human communication channel, which forms a major part of information transfer in our everyday life. Hand gestures are an easy to use and natural way of interaction. Using hands as a device can help people communicate with computers in a more intuitive and natural way. When we interact with other people, our hand movements play an important role and the information they convey is very rich in many ways. We use our hands for pointing at a person or at an object, and for conveying information about space, shape and temporal characteristics. We constantly use our hands to interact with objects: we move them, modify them and transform them. In the same unconscious way, we gesticulate while speaking to communicate ideas ('stop', 'come closer', 'no', etc.). Hand movements are thus a means of non-verbal communication, ranging from simple actions (pointing at objects, for example) to more complex ones (such as expressing feelings or communicating with others).
In this sense, gestures are not only an ornament of spoken language, but are essential components of the language generation process itself.

Current user interfaces are seldom designed with the dexterity required by learning and teaching assistive systems. The evolution of computers from supercomputers to desktops to palmtops has made human computer interaction felt in nearly all aspects of life. The keyboard, mouse and similar devices lack the sensitivity desired in such applications. Researchers in the field of Human Computer Interaction have therefore placed a common emphasis on designing and developing user interfaces capable of fulfilling the performance criteria desired in the environment. Such interfaces can be developed by exploiting the instinctive communication and manipulation skills of humans, taking human computer interaction to a higher level. The use of hand gestures as an interface frees the user to interact with computing devices without any physical contact with them. This characteristic makes it particularly suitable for interaction methods that depend on hand gestures. Learning could also be made easier for students if the complex commands of dynamic computer applications were controlled through simpler single and multiple hand gestures, for example browsing images in an image browser or controlling PowerPoint presentations with hand gestures, from a distance, without having to learn the various commands involved and without any physical contact with the devices. The rest of the paper is organized into four more sections. Section II provides a state of the art survey of the work done in the field of human computer interaction in general and gesture recognition in particular. Section III provides a brief description of the proposed two hand gesture recognition system. Section IV provides the performance parameters of the proposed two hand gesture recognition system.
The paper concludes in Section V with a discussion and analysis of the comparison of the single hand and two hand gesture recognition systems.

II. STATE OF THE ART

To improve the quality of interaction in a dynamic environment, the means of interaction should be as ordinary and natural as possible. Gestures, especially those expressed by the hands, have become a popular means of human computer interaction nowadays. Human hand gestures may be defined as a set of permutations generated by actions of the hand and arm [2]. These movements range from the simple action of pointing with a finger to more complex ones that are used for communication among people. Adopting the hand, particularly the palm and fingers, as the input device therefore lowers the technological barrier between casual users and the computer in a very natural way. This requires the capability to understand human patterns without the use of contact sensors. The problem is that applications need to rely on external devices that are able to capture the gestures and convert them into input. A video camera can be used to capture the user's gesture, together with a processing system that extracts the useful features and partitions the behavior into appropriate classes. Various applications designed for gesture recognition require a restricted background, a set of gesture commands and a camera for capturing images. Numerous gesture recognition applications have been designed for presenting, pointing, virtual workbenches, VR, etc. Gesture input can be divided into different categories depending on various characteristics [3]. One of the categories is the deictic gesture, which refers to reaching for something or pointing at an object. Accepting or refusing an action for an event is termed a mimetic gesture; it is useful for the language representation of gestures. An iconic gesture is a way of defining an object or its features.
Chai et al. [4] present a hand gesture application for gallery browsing based on a 3D depth data analysis method. It combines global structure information with local texture variation in the gesture framework designed. Pavlovic et al. [5] concluded that the gestures performed by users must be logically explainable in order to design a good human computer interface.

The current technologies for gesture recognition are not yet able to provide acceptable solutions to the problems stated above. One of the major challenges is that the complexity and robustness associated with the analysis and evaluation of gesture recognition have evolved over time. Different researchers have proposed and implemented different pragmatic techniques for using gestures as the input for human computer interfaces. Dias et al. [6] present a free-hand gesture user interface which is based on tracking the trajectory of fiduciary color markers attached to the user's fingers. The model used for the video presentation is grounded in its decomposition into a sequence of frames, or filmstrip.

Liu and Lovell [7] proposed an interesting technique for real time hand tracking that captures gestures through a web camera and an Intel Pentium based personal computer. The proposed technique is implemented without any sophisticated image processing algorithms or hardware. Atia et al. [8] design a tilting interface for remote and quick interactions that controls directions in an application in a ubiquitous environment. It uses a coin sized 3D accelerometer sensor for manipulating the application. Controlling the VLC media player using hand gesture recognition is done in a real time environment using vision based techniques [9]. Xu et al. [10] used contact based devices such as accelerometer and EMG sensors for controlling virtual games. Conci et al. [11] design an interactive virtual blackboard that uses video processing and a gesture recognition engine for giving commands, writing and manipulating objects on a projected visual interface. Lee et al. [12] developed a Virtual Office Environment System (VOES), in which an avatar is used to navigate and interact with other participants. To control the avatar motion, a continuous hand gesture system is designed which uses state automata to segment continuous hand gestures and to remove meaningless motion. Xu et al. [13] present a hand gesture recognition system for controlling a virtual Rubik's Cube game that uses EMG and a 3D accelerometer to provide a user-friendly interaction between humans and computers. In this system, the meaningful gestures are segmented from the stream of EMG signal inputs. There are several studies on hand movements, especially gestures, that model the human body.

On the basis of this body of knowledge, it is now possible to approach the problem from a mathematical viewpoint [14]. The major drawback of such techniques is that they are too complex and sophisticated for developing an actionable procedure and the necessary tools for typical application scenarios. This problem can be overcome by pattern recognition methods that have lower hardware and computational overhead. These aspects are considered in the subsequent sections by building a dynamic user interface to validate those concepts, in which a user performs actions that generate executable commands in an intelligent system to implement the user requirements in a natural way.

III. HAND GESTURE RECOGNITION SYSTEM

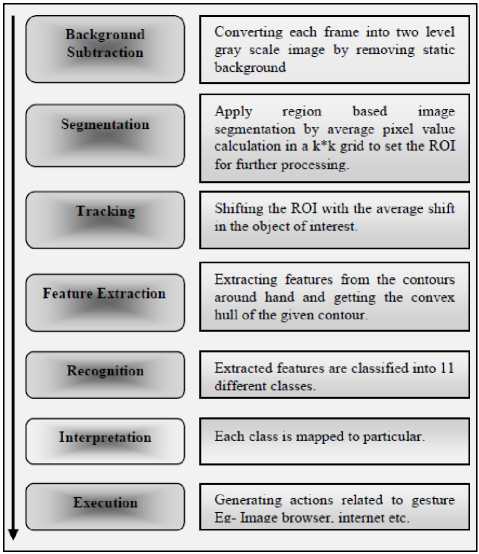

The hand gesture recognition system used for interaction is designed using an integrated approach. It recognizes static and dynamic hand gestures. Fig. 1 depicts the basic flowchart of the techniques used for designing a dynamic user interface.

Fig. 1. Basic building block of a HGRS (hand gesture recognition system)

The gesture recognition system tracks the gestures performed by the hand and counts the number of defects formed which it maps to the appropriate action (numerical value) assigned in the gesture vocabulary.
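The mapping from a defect count to an action can be pictured as a table lookup. The following is a minimal sketch, assuming a hypothetical vocabulary: the command names and the assignment of counts to commands are illustrative and are not taken from the paper's gesture table. Keeping one command table per application illustrates how the same defect counts could drive different applications.

```python
from typing import Dict, Optional

# Hypothetical command tables: defect count -> command name.
# Both tables are illustrative assumptions, not the paper's vocabulary.
IMAGE_BROWSER_COMMANDS: Dict[int, str] = {
    1: "rotate_clockwise",
    2: "rotate_anticlockwise",
    3: "zoom_in",
    4: "zoom_out",
}

PRESENTATION_COMMANDS: Dict[int, str] = {
    1: "next_slide",
    2: "previous_slide",
}


def command_for(defect_count: int, command_table: Dict[int, str]) -> Optional[str]:
    """Return the command assigned to a defect count, or None if unmapped."""
    return command_table.get(defect_count)
```

An unmapped count returns `None`, so the caller can ignore a gesture that is not part of the vocabulary instead of triggering a wrong command.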

Hand gesture recognition systems have been developed for single hand as well as multiple hand gesture recognition and are detailed in the following subsections:

A. Single hand based gesture recognition system (SHGRS)

Research on hands, gestures and movement helps in developing models of the human body. This makes it possible to approach the challenges from a mathematical viewpoint. However, the techniques proposed are excessively complex and sophisticated for typical application scenarios. Generally, pattern recognition methodologies are capable of solving the problem with more modest hardware and computation requirements. In the present research effort, we consider these aspects with reference to a smart interaction environment for image browsing. Here the user can perform different actions that translate into commands in an intelligent system, which then carries out the user requirements as practical actions.

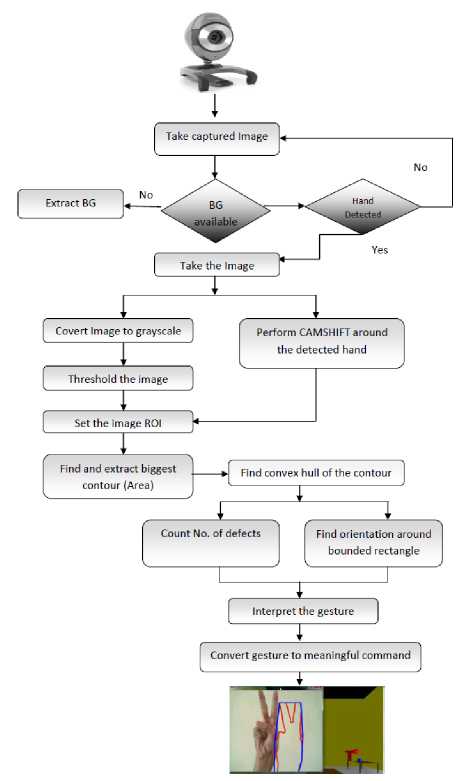

Fig. 2. Architecture design of SHGRS (Single hand gesture recognition system)

Fig. 2 shows the flowchart of SHGRS. Once the image is captured from the vision based device, i.e. the webcam, it is further processed by different image processing techniques. Practical experiments show that the system performs well in environments with little noise (i.e., few objects whose color is similar to human skin) and with balanced lighting conditions.

The SHGRS is integrated with an image browser to test its applicability. Different hand gestures are mapped to the corresponding image browser commands. Fig. 3 shows some hand gestures along with their assigned commands (functions) for browsing the images in the image browser. Although the gestures have been mapped to commands used in image browsing, the same gesture vocabulary could be reused for mapping a different set of commands for other applications such as controlling games, PowerPoint presentations, etc. This makes the gesture recognition system more general and adaptive for human computer interaction. Fig. 4 shows the intermediate experimental results of the hand obtained while designing the gesture recognition system. It depicts the feature extraction of the hand, which extracts the count of defects formed in the convex hull of the object of interest.
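To make the defect count feature concrete, the following is a minimal pure-Python sketch, under stated assumptions, of counting convexity defects on a hand contour. It assumes the contour is an ordered list of distinct (x, y) points of a simple polygon, and it counts one defect per convex hull edge whose deepest enclosed contour point lies farther than a hypothetical `min_depth` threshold from that edge. The function names and the threshold are illustrative; the paper does not specify its implementation.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _cross(o: Point, a: Point, b: Point) -> float:
    """Z component of the cross product of vectors OA and OB."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain; collinear points are dropped from the hull."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def _distance_to_line(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the line through a and b (a != b)."""
    return abs(_cross(a, b, p)) / math.hypot(b[0] - a[0], b[1] - a[1])


def count_convexity_defects(contour: List[Point], min_depth: float) -> int:
    """Count hull edges whose deepest enclosed contour point exceeds min_depth."""
    hull = set(convex_hull(contour))
    hull_idx = [i for i, p in enumerate(contour) if p in hull]
    if len(hull_idx) < 3:
        return 0
    n = len(contour)
    defects = 0
    for k, start in enumerate(hull_idx):
        end = hull_idx[(k + 1) % len(hull_idx)]
        deepest = 0.0
        i = (start + 1) % n
        while i != end:  # contour points strictly between two hull vertices
            deepest = max(deepest, _distance_to_line(contour[i], contour[start], contour[end]))
            i = (i + 1) % n
        if deepest > min_depth:
            defects += 1
    return defects
```

For a toy outline with two valleys between three raised tips, such as `[(0, 0), (12, 0), (12, 10), (9, 4), (6, 11), (3, 4), (0, 10)]`, the sketch reports two defects with `min_depth=2.0`, while a plain square reports none. A real system would obtain the contour from the segmented hand image.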

The hand gestures browse the images in the image browser as depicted in Fig. 5. Fig. 5 depicts the image being rotated clockwise using the corresponding hand gesture. Fig. 6 depicts the image being rotated anticlockwise using the corresponding hand gesture. Fig. 7 depicts the image being zoomed in and zoomed out using the corresponding hand gestures.

Fig. 3. Browsing images using single hand gestures in clockwise and anticlockwise directions


B. Multiple hand based gesture recognition system (MHGRS)

In our everyday life, our activities mostly involve the use of both hands. This is the case when we deal cards, when we play a musical instrument, and even when we take notes. In HCI, most interfaces use only one-handed gestures. In one such interface, the user executes commands by changing the hand shape to handle a computer generated object in a virtual reality environment. Wah and Ranganath propose a prototype which permits the user to move and resize windows and objects and to open and close windows by using simple hand gestures. Even with common devices, such as the mouse or the graphics tablet, only one hand is used to interact with the computer. The keyboard seems to be the only device that permits the use of both hands at the same time. Yet using two-handed input for computer interfaces can be of potential benefit. Many experiments have been conducted to test the validity of two-handed interaction for HCI. Furthermore, the two-handed approach can reduce the gap between expert and novice users.

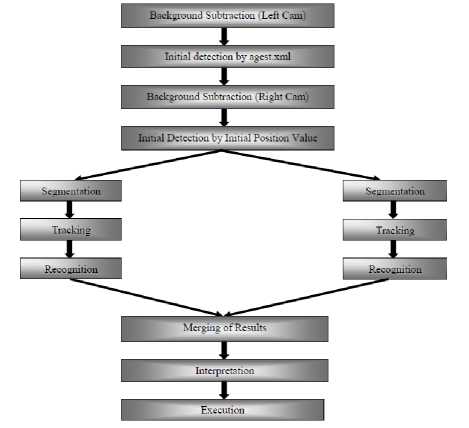

Fig. 4. Architecture design of MHGRS (Two hand gesture recognition system)

An effective vision based gesture recognition system for human computer interaction must accomplish two main tasks. First, the position and orientation of the hand in 3D space must be determined in each frame. Second, the hand and its pose must be recognized and classified to provide the interface with information on the actions required. In other words, the hand must be tracked within the work volume to give positioning information to the interface, and gestures must be recognized to present the meaning behind the movements to the interface. Due to the nature of the hand and its many degrees of freedom, these are not insignificant tasks. Additionally, these tasks must be executed as quickly as possible in order to obtain a system that runs at close to frame rate (30 Hz). The system should also be robust, so that tracking can be re-established automatically if it is lost or if the hand moves momentarily out of the working area. To accomplish these tasks, the system architecture shown in Fig. 4 uses an integrated approach to hand gesture recognition. It recognizes static and dynamic hand gestures. The proposed two hand gesture recognition system uses two cameras, one for each hand.

The implemented system architecture starts with the background subtraction of the left camera image, followed by that of the right camera image. For left hand detection after image subtraction, the system uses an existing dataset stored in an XML file called agest.xml. For right hand detection in the image subtracted by the right camera, the system uses an initial position value. After subtraction of the captured images and detection of the left and right hands, segmentation, tracking and recognition are done separately for each hand. The system then merges the results of the left hand and right hand segmentation, tracking and recognition and goes on to interpret them. The interpreted results are the hand gestures defined in the gesture vocabulary. The recognized hand gestures are matched with the gestures in the vocabulary set, and the commands mapped to them are executed. These commands may be used in various real life applications.
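The merging and interpretation step can be sketched as a lookup keyed by the pair of per-hand results. The code below is a minimal illustration under assumptions: the per-hand labels (`"open"`, `"closed"`), the command names and the vocabulary itself are hypothetical and are not the paper's gesture set.

```python
from typing import Dict, Optional, Tuple

# Hypothetical two-hand vocabulary keyed by (left_label, right_label).
TWO_HAND_VOCABULARY: Dict[Tuple[str, str], str] = {
    ("open", "open"): "zoom_in",
    ("closed", "closed"): "zoom_out",
    ("open", "closed"): "rotate_clockwise",
    ("closed", "open"): "rotate_anticlockwise",
}


def interpret(left: Optional[str], right: Optional[str]) -> Optional[str]:
    """Merge the per-hand recognition results and look up the mapped command.

    Returns None when either hand was not recognized or the pair is unmapped.
    """
    if left is None or right is None:
        return None
    return TWO_HAND_VOCABULARY.get((left, right))


def two_hand_capacity(single_hand_gestures: int) -> int:
    """Number of ordered (left, right) pairs available from k single-hand gestures."""
    return single_hand_gestures ** 2
```

With k distinct single-hand gestures, ordered pairs give k * k possible two-hand gestures, which is one way to read the larger vocabulary that the paper attributes to MHGRS.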

The system setup has a twin camera system mounted at the top of the screen, which provides stereo images of the user and, more specifically, of the user's hand(s). The coordinate system is specified as a right hand coordinate system with the cameras on the x axis, the y axis vertical and the z axis pointing out from the cameras into the scene. Video frames are sent by FireWire to a Core 2 Quad computer system where the image processing is carried out in software. Gesture and positional information is passed between the recognition and graphical display systems via a socket based data channel.
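As an illustration of passing gesture and positional information over a socket, the following minimal sketch sends one newline-delimited JSON message through a connected socket pair. The message layout (a `gesture` name plus an `[x, y, z]` position) and the function names are assumptions made for this example; the paper does not describe its wire format.

```python
import json
import socket
from typing import Tuple


def encode_gesture_message(gesture: str, x: float, y: float, z: float) -> bytes:
    """Serialize a gesture name and a 3D position as one newline-terminated JSON line."""
    return (json.dumps({"gesture": gesture, "position": [x, y, z]}) + "\n").encode("utf-8")


def decode_gesture_message(raw: bytes) -> Tuple[str, Tuple[float, float, float]]:
    """Parse one JSON line back into (gesture, (x, y, z))."""
    msg = json.loads(raw.decode("utf-8"))
    x, y, z = msg["position"]
    return msg["gesture"], (x, y, z)


def demo_round_trip() -> Tuple[str, Tuple[float, float, float]]:
    """Send one message from a 'recognition' end to a 'display' end of a socket pair."""
    recognizer_end, display_end = socket.socketpair()
    try:
        recognizer_end.sendall(encode_gesture_message("zoom_in", 0.1, 0.2, 0.9))
        with display_end.makefile("rb") as reader:
            line = reader.readline()  # reads up to and including the newline
        return decode_gesture_message(line)
    finally:
        recognizer_end.close()
        display_end.close()
```

A deployed system would more likely use a TCP connection between two processes; `socket.socketpair()` is used here only to keep the sketch self-contained.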

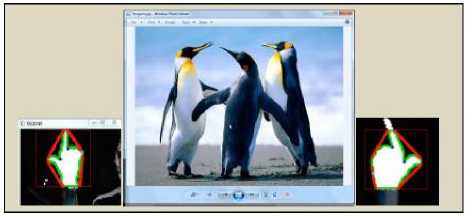

Fig. 5 shows the results of browsing an image using two hand gestures for the zoom in and zoom out commands of MHGRS.

Fig. 5. Browsing image using two hand gestures for zoom in and zoom out command.

IV. HAND GESTURE RECOGNITION PERFORMANCE PARAMETERS

The main challenge of vision-based gesture recognition is to deal with the large variety of gestures. Recognizing gestures involves handling a substantial number of degrees of freedom (DoF), huge variability of the 2D appearance depending on the camera view point (even for the same gesture), different silhouette scales (i.e. spatial resolution) and many resolutions for the temporal dimension (i.e. variability of the gesture speed). Moreover, it also needs to balance the accuracy-performance-usefulness trade-off according to the nature of the application, the cost of the solution and several other criteria.

In order to compare and contrast the performance and viability of the single and multiple hand based gesture recognition systems, the following parameters can be considered for testing and analysis. They are categorized into two subcategories, quantitative and qualitative parameters:

Quantitative Parameters: The quantitative parameters are those that can be quantified as numerical values for the recognition system. They include the gesture recognition rate, the features extracted and the root mean square error of the system.

(a) Recognition Rate: The recognition rate (RR) is defined as the ratio of the number of correctly classified samples to the total number of samples, expressed as a percentage, as shown in (1).

$$RR = \frac{R_c}{R_t} \times 100 \qquad (1)$$

Where: $R_c$ = number of correctly classified samples

$R_t$ = total number of samples
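The recognition rate defined above can be computed directly from the two counts. The following is a minimal sketch; the function name and the input validation are additions for illustration.

```python
def recognition_rate(correct: int, total: int) -> float:
    """Recognition rate in percent: correctly classified samples over all samples."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= correct <= total:
        raise ValueError("correct must lie between 0 and total")
    return 100.0 * correct / total
```

For example, 45 correctly classified samples out of 50 give a recognition rate of 90 percent.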

(b) Features Extracted: Selecting good features to recognize the hand gesture path plays a significant role in system performance. There are three basic features: location, orientation and velocity. Previous research [2, 7] showed that the orientation feature is the best in terms of accuracy. Therefore, we rely upon it as one of the important parameters in our recognition systems. A gesture path is a spatio-temporal pattern that consists of centroid points (xhand, yhand). The orientation is therefore determined between two consecutive points of the hand gesture path.

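$$\phi_t = \arctan\left(\frac{y_{t+1} - y_t}{x_{t+1} - x_t}\right)$$

Where: $t = 1, 2, 3, \ldots, T-1$

$T$ = length of the gesture path

$\phi_t$ = orientation between the consecutive centroid points at $t$ and $t+1$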
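The orientation feature can be sketched as follows. Note one deliberate substitution: the sketch uses `math.atan2` instead of a plain arctangent of the ratio, because `atan2` also handles vertical steps (where the x difference is zero) and distinguishes all four quadrants. Angles are returned in degrees in the range [0, 360); this output convention and the function name are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple


def path_orientations(centroids: Sequence[Tuple[float, float]]) -> List[float]:
    """Orientation in degrees between each pair of consecutive centroid points.

    A path of T points yields T - 1 orientations.
    """
    angles: List[float] = []
    for (x0, y0), (x1, y1) in zip(centroids, centroids[1:]):
        angle = math.degrees(math.atan2(y1 - y0, x1 - x0)) % 360.0
        angles.append(angle)
    return angles
```

For the path `[(0, 0), (1, 1), (1, 2)]` this gives orientations of approximately 45 and 90 degrees. In image coordinates the y axis usually points downward, so the sign convention may need to be flipped for camera data.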

(c) RMSE (Root mean square error): RMSE is the root mean square of the difference between the expected value and the system output value over the iterations of gesture recognition. RMSE can be calculated as:

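$$RMSE = \sqrt{\frac{\sum_{i=1}^{n} (d_i - y_i)^2}{n}}$$

Where: $n$ = the number of iterations

$d_i$ = expected value in iteration $i$

$y_i$ = system output value in iteration $i$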
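The RMSE between expected values and system outputs can be sketched as below. The function name and the input checks are illustrative additions.

```python
import math
from typing import Sequence


def rmse(expected: Sequence[float], output: Sequence[float]) -> float:
    """Root mean square error between expected values and system output values."""
    if len(expected) != len(output):
        raise ValueError("expected and output must have the same length")
    if not expected:
        raise ValueError("at least one iteration is required")
    squared_error = sum((d - y) ** 2 for d, y in zip(expected, output))
    return math.sqrt(squared_error / len(expected))
```

With expected values `[1, 2, 3]` and outputs `[1, 2, 5]`, the squared errors sum to 4 over 3 iterations, so the RMSE is the square root of 4/3, roughly 1.15.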

Qualitative Parameters: The qualitative parameters are those that cannot be quantified as numerical values for the recognition system. They include robustness, scalability, computational efficiency, user's tolerance, real-time performance, user-independence and application independence.

(a) Robustness: In the real world, visual information can be very rich, noisy and incomplete, due to changing illumination, clutter and dynamic backgrounds, occlusion, etc. Vision-based systems should be user independent and robust against all these factors.

(b) Scalability: The vision-based interaction system should be easily adapted to different scales of applications. For example, the core of vision-based interaction should be the same for desktop environments, sign language recognition, robot navigation and also for VE.

(c) Computational Efficiency: Vision-based interaction generally requires real-time systems. The vision and learning techniques and algorithms used in vision-based interaction should be effective as well as cost efficient.

(d) User's Tolerance: The malfunctions or mistakes of vision-based interaction should be tolerated. When a mistake is made, it should not incur much loss. Users can be asked to repeat some actions, instead of letting the computer make more wrong decisions.

(e) Real-time performance: For real-time performance, the system must be able to process the image at the frame rate of the input video, so as to give the user instant feedback on the recognized gesture.

(f) User-independence: For user-independence, the framework should be able to work for different users rather than a specific user. The system should recognize gestures performed by people of different sizes and skin colors.

(g) Application independence: Application independence characterizes the ability of a recognition system to have an application independent gesture vocabulary set that can be mapped to the command set of different applications, irrespective of the number and type of the commands used in a particular application.

V. DISCUSSION AND ANALYSIS

Gesture recognition offers a natural, unobtrusive method of interaction, especially if the gesture set is selected with some knowledge of the nature of human hand movements. Vision techniques eliminate the need for contact based devices such as gloves or restraining cabling back to the computer, and so provide an unobtrusive interface. Examination of previous work in this area provided a basis for the development of a framework for a vision based gesture interface, as well as for the identification of promising techniques.

The comparative analysis of the two hand gesture recognition systems was done on the basis of the system performance parameters already discussed in Section IV. Although the results of the comparative analysis did not lean strongly towards either of the hand gesture recognition systems, the facts presented by the results were interesting and could be used to decide which system to adopt, depending on the characteristics of the system paired with the requirements of the application.

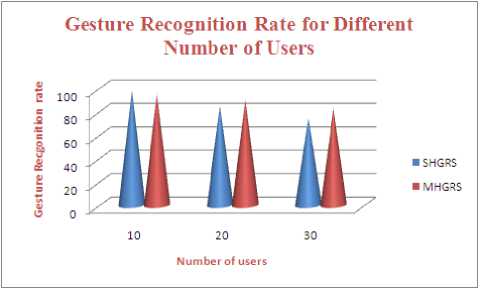

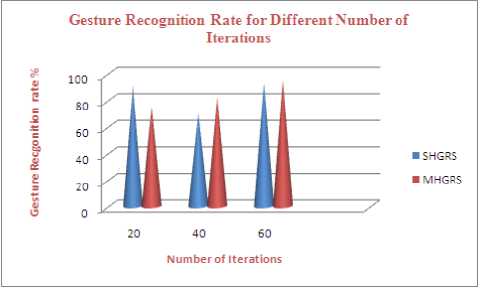

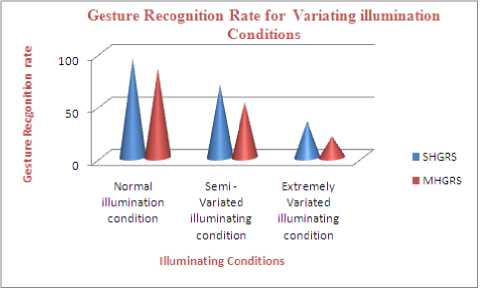

Recognition Rate: The recognition rates of SHGRS and MHGRS were compared while varying the following three parameters of the system:

a) The number of users: 10, 20 and 30

b) The number of iterations: 20, 40 and 60

c) Illumination conditions: one, two and three illuminating devices

a) Gesture Recognition rate for different Number of users

b) Gesture Recognition rate for different number of iterations from 20 to 40 to 60

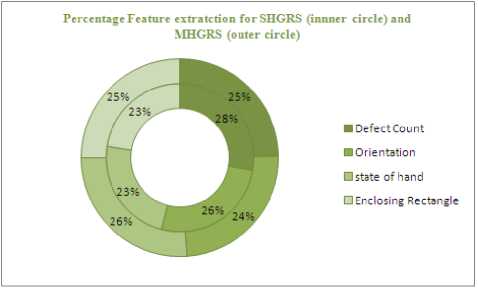

Fig. 7. Feature Extraction Percentage for different parameters of SHGRS and MHGRS

Feature Extraction: Feature extraction in SHGRS and MHGRS was compared for different values of the following parameters of the system:

• Defect count (0-5)

• Orientation (left/right)

• State of hand (open/closed)

• Enclosing rectangle (co-ordinates and width)

Net features := 6 * 2 * 2 = 24

Classes of gesture := 11
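The count of 24 net features follows from combining the six possible defect counts (0 to 5) with the two orientations and the two hand states. The enclosing rectangle does not enter the product stated in the text. The following minimal sketch enumerates these combinations; the tuple layout and label strings are assumptions for illustration.

```python
from itertools import product
from typing import List, Tuple

DEFECT_COUNTS = range(6)            # 0 to 5 defects
ORIENTATIONS = ("left", "right")
HAND_STATES = ("open", "closed")


def feature_combinations() -> List[Tuple[int, str, str]]:
    """All (defect_count, orientation, hand_state) combinations."""
    return list(product(DEFECT_COUNTS, ORIENTATIONS, HAND_STATES))
```

`len(feature_combinations())` evaluates to 24, matching the 6 * 2 * 2 product.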

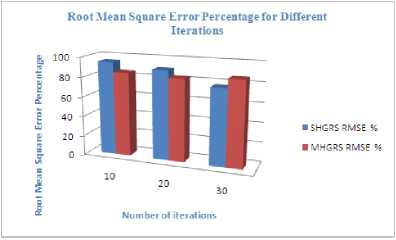

RMSE (Root mean square error): The two systems are compared on the average root mean square error percentage between the expected value and the system output value over the iterations of gesture recognition.

c) Gesture Recognition rate for different Illumination conditions

Fig. 6(a)(b)(c). Comparative gesture recognition rates

Fig. 8. Root mean square error percentage for different numbers of iterations

Robustness: The robustness of a recognition system depends heavily on the complexity of the algorithms used and on the input and output generation of the system. As far as the complexity of the implemented algorithms and the generated input and output are concerned, the SHGRS performs better than the MHGRS. With respect to real time dynamic environment applications, however, the MHGRS is more robust than the SHGRS.

Scalability: The scalability of the gesture recognition systems depends on the application and on the system configuration. The dependency on system configuration makes both recognition systems face the same set of problems, so their performance is comparable. Application dependent scalability favours the MHGRS over the SHGRS, because of the number of available permutations and combinations of gestures that can be paired with different sets of commands in the applications.

Computational Efficiency: The computational efficiency of the MHGRS is lower than that of the SHGRS because of the additional computations required for camera calibration and for synchronizing the gestures of both hands, which are the computational overheads of these systems.

User's Tolerance: The SHGRS outshines the MHGRS for user's tolerance. In the MHGRS both hands of the user are kept busy, which is inconvenient and less appreciated, while in the SHGRS the user has one hand free, which is more comfortable and closer to the habits formed with earlier interface devices that were based on the use of a single hand only.

Real-time performance: The real time performance of any gesture recognition system depends on many vital parameters related to the application and the system configuration, along with the environmental conditions in which the recognition system has to be installed. As we cannot simulate all the possible variations in system configuration, environmental conditions and applications for which the recognition system was implemented, we do not commit to the real time performance of either system. Still, the SHGRS has better real time performance due to the lower computational complexity associated with the system.

User-independence: Both the SHGRS and the MHGRS are very much user independent, as both systems provide a naturalistic and intuitive interface that can be used by a naïve user or an expert with the same ease.

Application independence: During the course of our research effort we implemented both the SHGRS and the MHGRS for a wide variety of applications, which makes both systems application independent, with robustness, scalability and real-time performance still to be worked upon and improved.
In the proposed hand gesture recognition systems, the approach for hand posture recognition has been applied to already cropped images. A scanning approach could be investigated to overcome this limitation. This method consists of scanning the image at different sizes and locations using a hand posture classifier. As this technique produces a lot of false detections, it could be used in conjunction with a skin color detector to discard them. For the task of hand gesture recognition, we are only dealing in this work with already segmented hand gestures. A next step would be the recognition of a sequence of hand gestures. This task, known as gesture spotting, consists of recognizing the hand gestures performed during the gesture sequence as well as finding the start and end positions of these hand gestures. Gesture spotting can also be extended to the recognition of known gestures and the rejection of unknown gestures. To use gestural HCI efficiently, it is necessary to be able to deal with bare-hand interaction. This task is related to hand tracking as well as two-handed gesture tracking. It is a very difficult problem, particularly when dealing with two-handed gestures. In that case, both hands are in the field of view of the camera(s) and thus very often occlude each other. Little research has been done so far to deal with this problem. Some work still needs to be done to track both hands efficiently without the help of colored gloves.
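The scanning approach can be sketched as a sliding window over the image at several sizes, with a skin color check used to discard false detections. In the sketch below, the posture classifier is a hypothetical callable supplied by the caller, the skin mask is assumed to be a non-empty 2D list of 0/1 values produced by some skin color detector, and the `min_skin_ratio` threshold is an illustrative parameter.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

Window = Tuple[int, int, int]  # (x, y, size) of a square window


def sliding_windows(width: int, height: int, sizes: Sequence[int], stride: int) -> Iterator[Window]:
    """Yield square windows of each size at every stride step inside the image."""
    for size in sizes:
        for y in range(0, height - size + 1, stride):
            for x in range(0, width - size + 1, stride):
                yield x, y, size


def detect_hands(
    skin_mask: Sequence[Sequence[int]],
    sizes: Sequence[int],
    stride: int,
    posture_classifier: Callable[[int, int, int], bool],
    min_skin_ratio: float = 0.5,
) -> List[Window]:
    """Keep windows accepted by the classifier whose skin pixel ratio is high enough."""
    height = len(skin_mask)
    width = len(skin_mask[0])
    detections: List[Window] = []
    for x, y, size in sliding_windows(width, height, sizes, stride):
        if not posture_classifier(x, y, size):
            continue
        skin_pixels = sum(
            skin_mask[row][col]
            for row in range(y, y + size)
            for col in range(x, x + size)
        )
        if skin_pixels / (size * size) >= min_skin_ratio:
            detections.append((x, y, size))
    return detections
```

Running the classifier first and the skin check second mirrors the text, where the skin color detector serves to reject the classifier's false detections. In practice the order could be swapped if the skin check is cheaper to evaluate.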

References

- Chaudhary A, Raheja JL, Das K, Raheja S (2011) Intelligent approaches to interact with machines using hand gesture recognition in natural way: a survey. Int J Comput Sci Eng Survey (IJCSES) 2(1):122–133

- Hardenberg CV, Berard F (2001) Bare-hand human–computer interaction. Proceedings of the ACM workshop on perceptive user interfaces. ACM Press, pp 113–120

- Shanis, J. and Hedge, A. (2003) Comparison of mouse, touchpad and multitouch input technologies. Proceedings of the Human Factors and Ergonomics Society 47th Annual Meeting, Oct. 13-17, Denver, CO, 746-750.

- Xiujuan Chai, Yikai Fang and Kongqiao Wang, “Robust hand gesture analysis and application in gallery browsing,” In Proceeding of ICME, New York, pp. 938-94, 2009.

- Pavlovic VI, Sharma R, Huang TS (1997) Visual interpretation of hand gestures for human–computer interaction:a review. Trans Pattern Anal Mach Intell 19(7):677–695

- José Miguel Salles Dias, Pedro Nande, Pedro Santos, Nuno Barata and André Correia, “Image Manipulation through Gestures,” In Proceedings of AICG’04, pp. 1-8, 2004.

- N. Liu and B. Lovell, “Mmx-accelerated Realtime Hand Tracking System,” In Proceedings of IVCNZ, 2001.

- Ayman Atia and Jiro Tanaka, “Interaction with Tilting Gestures in Ubiquitous Environments,” In International Journal of UbiComp (IJU), Vol.1, No.3, 2010.

- S.S. Rautaray and A. Agrawal, “A Novel Human Computer Interface Based On Hand Gesture Recognition Using Computer Vision Techniques,” In Proceedings of ACM IITM’10, pp. 292-296, 2010.

- Z. Xu, C. Xiang, W. Wen-hui, Y. Ji-hai, V. Lantz and W. Kong-qiao, “ Hand Gesture Recognition and Virtual Game Control Based on 3D Accelerometer and EMG Sensors,” In Proceedings of IUI’09, pp. 401-406, 2009.

- C. S. Lee, S. W. Ghyme, C. J. Park and K. Wohn, “The Control of avatar motion using hand gesture,” In Proceeding of Virtual Reality Software and technology (VRST), pp. 59-65, 1998.

- X. Zhang, X. Chen, Y. Li, V. Lantz, K. Wang and J. Yang, “A framework for Hand Gesture Recognition Based on Accelerometer and EMG Sensors,” IEEE Trans. On Systems, Man and Cybernetics- Part A: Systems and Humans, pp. 1-13, 2011.

- N. Conci, P. Cerseato and F. G. B. De Natale, “Natural Human- Machine Interface using an Interactive Virtual Blackboard,” In Proceeding of ICIP 2007, pp. 181-184, 2007.

- B. Yi, F. C. Harris Jr., L. Wang and Y. Yan, “Real-time natural hand gestures”, In Proceedings of IEEE Computing in science and engineering, pp. 92-96, 2005.