Wavelet Based Histogram of Oriented Gradients Feature Descriptors for Classification of Partially Occluded Objects

Authors: Ajay Kumar Singh, V. P. Shukla, Shamik Tiwari, Sangappa R. Biradar

Journal: International Journal of Intelligent Systems and Applications (IJISA)

Issue: vol. 7, no. 3, 2015.


Computer vision applications face various challenges in detecting and classifying real-world objects, such as large variations in appearance, cluttered backgrounds, noise, occlusion and low illumination. In this paper, Wavelet-based Histogram of Oriented Gradients (WHOG) feature descriptors are proposed to represent shape information by storing local gradients in the image, which enhances the representation of shape information. The performance of the feature descriptors is tested on a multiclass image dataset containing partially occluded, scaled and rotated object images, and WHOG-based object classification is compared with HOG-based classification. Test images are matched to their learned classes with a Back Propagation Neural Network (BPNN). The proposed features not only outperform HOG but also outperform wavelet, moment-invariant and curvelet features.

Keywords: HOG, WHOG, Multiclass, Occlusion, Neural Network, Wavelet

Short URL: https://sciup.org/15010668

IDR: 15010668


Published Online February 2015 in MECS

In computer vision, detecting and classifying objects in images or videos is one of the fundamental problems. Conventionally, an image is segmented into regions, and each region is then classified as belonging to one of the classes. These methods work well when the objects of interest have fairly uniform image attributes such as intensity, texture or color, e.g. face detection by segmenting skin-colored regions. When detecting objects such as humans, however, texture may not be uniform and can be arbitrarily complex because of varied clothing, which makes designing a segmentation algorithm quite difficult. When an image contains multiple objects, or is captured by the camera from a different viewpoint, the object of interest may be partially occluded by overlapping objects, and the problem becomes more difficult still.

Recognizing objects under partial occlusion has long been a difficult problem in computer vision applications. In robotic applications such as assembly lines, the presence of numerous objects, or the overlap of one object over another, causes partial occlusion, which makes identifying or locating the objects non-trivial. In the presence of occlusion, classification becomes more complicated because the nature of the occlusion is unknown: occluding objects can have any size and shape. Recognition and classification must therefore rely on the unoccluded portion of the object.

In [1], a statistical approach to object recognition with probabilistic models is presented to establish correspondence between scene features and object features; the authors model both the uncertainty of the object features and the probability that object features are occluded in the scene image. The survey in [2] describes Independent Component Analysis (ICA) as a decorrelation method based on higher-order moments of the input. A practical real-time pedestrian detection system in [3] extracts image edges, matches them against a set of learned objects and measures the distance between the two images. In our previous work [4], a method for classifying objects degraded by geometric distortion and radiometric degradation is presented. It is commonly accepted that contextual information plays a vital role in detecting and localizing objects under conditions such as cluttered backgrounds and occlusion, and several context-aware object detection methods have been proposed recently [5, 6, 7, 8].

A method based on Histogram of Oriented Gradients (HOG) features is presented in [9] for detecting humans in images. The HOG feature counts occurrences of gradient orientations in localized portions of an image. HOG describes an object so that the same object produces a feature descriptor that is as close as possible to the same when viewed under different conditions, such as the partial occlusion considered in this work. Here, these descriptors are arranged into a feature vector and used to train an artificial neural network.

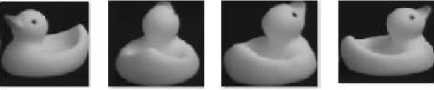

This work models a system for multiclass object classification under partial occlusion. The standard Columbia Object Image Library (COIL-100) dataset [10] is used to train and test the classification system. COIL-100 provides images against a black background with some degree of rotation, scaling and shifting, and partial occlusion is artificially added to it to test the performance of the system. To assess the proposed feature descriptors, a feed-forward neural network trained with the back-propagation algorithm is applied to the partially occluded image dataset. The experiments show that a better classification rate is achieved than with conventional HOG descriptors, wavelet features and curvelet features.

Fig. 1. Sample images of training images (Class 1 to Class 6)

Section II of this paper discusses the background concepts used: the Haar wavelet transform of an intensity image, the computation of HOG features and the feed-forward neural network classifier. Section III describes the proposed WHOG feature extraction method. Section IV describes the dataset used to evaluate the system and the experimental setup, followed by an analysis of the results of the two methods, traditional HOG [9] and the proposed WHOG feature extraction.

II. Background

This section discusses the theoretical background of the feature extraction methods used in this work.

A. Discrete Haar Wavelet Transform

The 2D discrete wavelet transform (DWT) is built from a separable orthogonal mother wavelet with a given regularity. In two dimensions, one two-dimensional scaling function, $\varphi(x,y)$, and three two-dimensional wavelets, $\psi^H(x,y)$, $\psi^V(x,y)$ and $\psi^D(x,y)$, are required [11]. Each is the product of two one-dimensional functions. The separable scaling function and the separable, directionally sensitive wavelets are:

$$\varphi(x,y) = \varphi(x)\,\varphi(y)$$

$$\psi^H(x,y) = \psi(x)\,\varphi(y) \qquad (2)$$

$$\psi^V(x,y) = \varphi(x)\,\psi(y) \qquad (3)$$

$$\psi^D(x,y) = \psi(x)\,\psi(y) \qquad (4)$$

The Haar scaling function $\varphi(x)$ has unit height and unit width:

$$\varphi(x) = \begin{cases} 1 & 0 \le x < 1 \\ 0 & \text{otherwise} \end{cases}$$

A family of functions can be derived from this basic scaling function by scaling and translation [11][12]:

$$\varphi_{i,j}(x) = 2^{i/2}\,\varphi(2^i x - j)$$

As a result, the scaling functions $\varphi_{i,j}(x)$ span the vector spaces $V^i$, which are nested as follows [11]:

$$V^0 \subset V^1 \subset V^2 \subset \cdots$$

The Haar wavelet function $\psi(x)$ [12] is given by:

$$\psi(x) = \begin{cases} 1 & 0 \le x < 1/2 \\ -1 & 1/2 \le x < 1 \\ 0 & \text{otherwise} \end{cases}$$

Scaling and translation of this mother wavelet produce the Haar wavelets:

$$\psi_{i,j}(x) = 2^{i/2}\,\psi(2^i x - j)$$

The Haar wavelets $\psi_{i,j}(x)$ span the vector space $W^i$, which is the orthogonal complement of $V^i$ in $V^{i+1}$:

$$V^{i+1} = V^i \oplus W^i$$
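A minimal pure-Python sketch of one level of the separable 2D Haar DWT described above, for a grayscale image stored as a list of rows. Each 2×2 pixel block contributes one coefficient to each of the four half-size sub-images; dividing by 2 corresponds to the orthonormal normalization (the product of two 1/√2 factors). The function name `haar_dwt2`, the sub-image ordering and the labels of the detail sub-images are our own choices, and sign conventions for the detail coefficients differ between libraries.

```python
def haar_dwt2(image):
    """One level of the 2D Haar DWT of a grayscale image (list of equal-length rows).

    Returns (approx, detail_lr, detail_tb, detail_diag); each sub-image has half
    the height and half the width of the input, i.e. one quarter of its size.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image height and width must be even")
    approx, detail_lr, detail_tb, detail_diag = [], [], [], []
    for r in range(0, rows, 2):
        a_row, lr_row, tb_row, d_row = [], [], [], []
        for c in range(0, cols, 2):
            tl, tr = image[r][c], image[r][c + 1]          # top-left, top-right
            bl, br = image[r + 1][c], image[r + 1][c + 1]  # bottom-left, bottom-right
            a_row.append((tl + tr + bl + br) / 2)   # low-pass in both directions
            lr_row.append((tl - tr + bl - br) / 2)  # left-right difference (vertical edges)
            tb_row.append((tl + tr - bl - br) / 2)  # top-bottom difference (horizontal edges)
            d_row.append((tl - tr - bl + br) / 2)   # diagonal difference
        approx.append(a_row)
        detail_lr.append(lr_row)
        detail_tb.append(tb_row)
        detail_diag.append(d_row)
    return approx, detail_lr, detail_tb, detail_diag
```

For example, `haar_dwt2([[1, 2], [3, 4]])` yields the sub-images `[[5.0]]`, `[[-1.0]]`, `[[-2.0]]` and `[[0.0]]`. Because the transform is orthonormal, the sum of squared coefficients (25 + 1 + 4 + 0) equals the sum of squared pixels (1 + 4 + 9 + 16).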


B. Histogram of Oriented Gradients

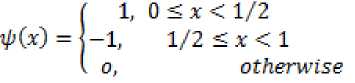

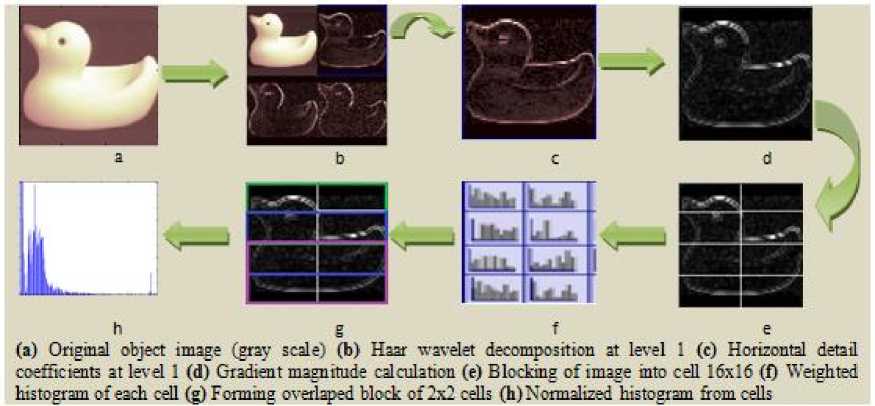

Histogram of Oriented Gradients (HOG) characterizes object appearance and shape well through the distribution of local intensity gradients in the image [9]. HOG features are extracted from an image through a chain of normalized local histograms of image gradient orientations computed on a dense grid [9]. An overview of the method proposed in [9] for calculating the feature descriptors is shown in Fig. 2.

Fig. 2. An overview of HOG feature descriptor calculation [9]

The method evaluates well-normalized local histograms of image gradient orientations on a dense grid. The image is divided into small regions called cells; each cell accumulates a local 1-D histogram of gradient directions (edge orientations) over its pixels, and the whole object is represented by combining these cell histograms. For better invariance to illumination, it is useful to contrast-normalize the local responses before using them. This is done by accumulating a measure of local histogram energy over somewhat larger spatial regions, termed blocks in this work. The normalized descriptor blocks are referred to as Histogram of Oriented Gradients (HOG) descriptors.

This work uses a cell size of 16×16 pixels and a block size of 2×2 cells to compute the HOG vector. Fig. 2 shows how the HOG feature vector of an intensity image is constructed. In the second step, the gradient magnitude at every pixel is computed; next, a histogram is computed for every cell. At the end of the process, overlapping blocks of 2×2 cells are formed and the histograms within each block are normalized. Normalization provides some invariance to changes in contrast, which can be thought of as multiplying every pixel in the block by some coefficient.
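A minimal pure-Python sketch of the HOG steps above, using the stated 16×16-pixel cells, 2×2-cell blocks and 9 orientation bins (the bin count is the one used in Section III). The centered [-1, 0, 1] gradient filter, unsigned orientations in [0°, 180°), hard (non-interpolated) bin voting, a block stride of one cell and L2 block normalization are our assumptions, loosely following [9]; the paper does not specify them, and the function name `hog_descriptor` is hypothetical.

```python
import math

def hog_descriptor(image, cell=16, block=2, bins=9, eps=1e-6):
    """Simplified HOG of a grayscale image given as a list of equal-length rows.

    Assumed details: centered [-1, 0, 1] gradients, unsigned orientations in
    [0, 180) degrees, hard bin voting, one-cell block stride, L2 normalization.
    """
    h, w = len(image), len(image[0])
    n_cy, n_cx = h // cell, w // cell
    # One orientation histogram per cell, indexed [cell_row][cell_col][bin].
    hist = [[[0.0] * bins for _ in range(n_cx)] for _ in range(n_cy)]
    for y in range(n_cy * cell):
        for x in range(n_cx * cell):
            # Centered differences; border pixels reuse the nearest neighbour.
            gx = image[y][min(x + 1, w - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, h - 1)][x] - image[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle / (180.0 / bins)), bins - 1)
            hist[y // cell][x // cell][b] += magnitude
    features = []
    # Overlapping blocks of block x block cells, moved one cell at a time.
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = [val for cy in range(by, by + block)
                 for cx in range(bx, bx + block)
                 for val in hist[cy][cx]]
            norm = math.sqrt(sum(val * val for val in v) + eps * eps)
            features.extend(val / norm for val in v)
    return features
```

For a 32×32 image whose left half is 0 and right half is 100, there are 2×2 cells and a single block, so the descriptor has 4 × 9 = 36 values; every cell votes only into the 0° bin, and each of those four entries normalizes to about 0.5.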


C. Neural Classifier

Many classification methods are available in the literature, each with its own strengths and weaknesses; no classifier performs best on all problems [13][14]. Neural networks simulate biological neurons [15][16].

Artificial neural networks are used for fast computation, learning, compression, blind signal separation and filtering, as well as for pattern classification and forecasting [14]. An artificial neural network (ANN) is first trained so that a given input leads to a specific output. Back propagation (BPNN) is one of the most popular algorithms for training an ANN because it is simple and effective: the nodes in the hidden and output layers adjust their weights depending on the classification error. BPNN gives robust prediction and classification, but it is slower than other learning algorithms.

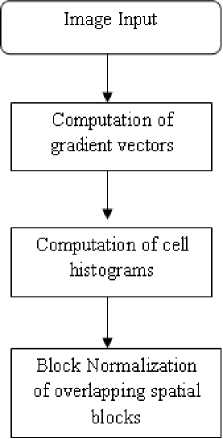

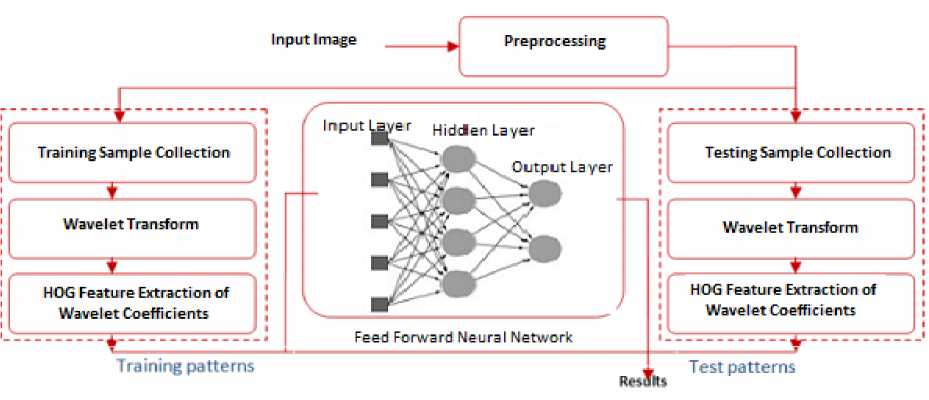

In this work, BPNN is used as the classifier, as in our previous works [4][17][18][19], where it produced good classification rates. A multilayer feed-forward BPNN-based classification system is shown in Fig. 3.

Fig. 3. An Image Classification System Structure

Back propagation is a supervised learning algorithm: it receives sample inputs and their associated outputs to train the network, and the error (the difference between the actual and expected outputs) is calculated. The algorithm minimizes this error until the network has learned the training data. The weights are updated as follows:

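$$\Delta W_{jk}(n+1) = \eta\,\delta_k\,O_j + \alpha\,\Delta W_{jk}(n) \qquad (10)$$

where $\eta$ is the learning rate, $\alpha$ is the momentum, $\delta_k$ is the error term of node $k$, $O_j$ is the output of node $j$ feeding node $k$, and $n$ is the iteration number.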
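A minimal sketch of update rule (10) in action. To stay short it trains a single sigmoid output unit on the toy OR problem with per-sample updates and a squared-error loss; the inputs plus a constant bias input play the role of $O_j$. The toy data, $\eta = 0.5$, $\alpha = 0.5$, the epoch count and all function names are illustrative assumptions, not the network or parameters used in the paper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def momentum_step(delta_k, o_j, prev_dw, eta, alpha):
    """Equation (10): dW_jk(n+1) = eta * delta_k * O_j + alpha * dW_jk(n)."""
    return eta * delta_k * o_j + alpha * prev_dw

def train_single_unit(samples, eta=0.5, alpha=0.5, epochs=5000):
    """Online back propagation with momentum for one sigmoid output unit k."""
    n_in = len(samples[0][0]) + 1          # extra input fixed at 1.0 acts as a bias
    w = [0.0] * n_in
    prev_dw = [0.0] * n_in                 # dW_jk(n) from the previous update
    for _ in range(epochs):
        for x, target in samples:
            o = list(x) + [1.0]            # outputs O_j feeding unit k
            y = sigmoid(sum(wj * oj for wj, oj in zip(w, o)))
            delta = (target - y) * y * (1.0 - y)  # error term of a sigmoid unit
            for j in range(n_in):
                prev_dw[j] = momentum_step(delta, o[j], prev_dw[j], eta, alpha)
                w[j] += prev_dw[j]
    return w

def predict(w, x):
    return sigmoid(sum(wj * oj for wj, oj in zip(w, list(x) + [1.0])))
```

The momentum term reuses a fraction $\alpha$ of the previous weight change, which smooths successive updates; a multilayer BPNN applies the same rule to every hidden-to-output and input-to-hidden weight.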

A survey of artificial neural network approaches to medical image classification is presented in [20], which addresses the application of intelligent computing techniques to biomedical image classification for diagnostic sciences. It also mentions several artificial intelligence methods that are commonly combined to solve specific medical problems. The survey focuses on SVMs and ANNs, since these are used in almost all medical image classification, whereas fuzzy techniques and their extensions focus on the segmentation of medical images.


III. Wavelet Histogram of Oriented Gradients

This section introduces wavelet-based Histogram of Oriented Gradients (WHOG) descriptors for intensity images, which give better classification results than standard HOG [9]. The idea is to enhance useful local high-frequency features before extracting the shape of an object for classification. To accomplish this, a discrete wavelet transform of the image is performed, with the Haar wavelet as the mother wavelet. The wavelet transform decomposes a grayscale image into four grayscale sub-images: one holds the low-frequency content of the original image, and the remaining three hold its high-frequency components at different orientations. Each sub-image is one quarter the size of the original.

After the image is decomposed into four sub-images, HOG feature descriptors are extracted from each sub-image separately and then concatenated into a single column vector. The WHOG feature vector of a grayscale image is therefore four times the length of the descriptor produced by one sub-image. For the parameters used in our implementation, one WHOG feature vector has 4 sub-images × 3 blocks × 4 histograms per block × 9 bins per histogram = 432 elements. The WHOG extraction process is depicted in Fig. 4.
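The length arithmetic above can be checked with a small sketch. It assumes, as in [9], that blocks overlap and slide by one cell, and that each Haar sub-image has half the height and half the width of the input. The paper does not state its input image size, so the 128×64 image below is purely hypothetical, chosen because its 64×32 sub-images yield the three blocks per sub-image quoted above; the function names are also ours.

```python
def hog_length(height, width, cell=16, block=2, bins=9, stride=1):
    """Number of HOG values for one image, assuming blocks slide by `stride` cells."""
    cells_y, cells_x = height // cell, width // cell
    if cells_y < block or cells_x < block:
        return 0  # image too small to hold a single block
    blocks_y = (cells_y - block) // stride + 1
    blocks_x = (cells_x - block) // stride + 1
    return blocks_y * blocks_x * block * block * bins

def whog_length(height, width, **hog_params):
    """WHOG concatenates the HOG vectors of the four half-size Haar sub-images."""
    return 4 * hog_length(height // 2, width // 2, **hog_params)
```

Here `whog_length(128, 64)` gives 4 × 108 = 432; under the same assumptions a 128×128 input would give 4 × 324 = 1296, so the vector length depends directly on the input size and block layout.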

Fig. 4. WHOG feature vector Extraction

IV. Experimental Setup

Here we introduce the database used to train and test the image classification system with HOG and WHOG feature descriptors. Both feature extraction methods are then compared under two conditions: without occlusion and with partial occlusion.


A. Data Set

The proposed model is evaluated on the Columbia Object Image Library (COIL-100) [21], a database of color images of 100 object classes.

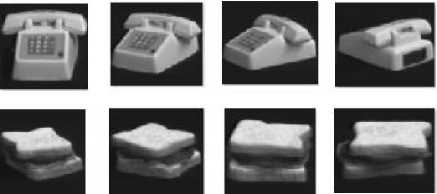

The objects were placed on a motor-controlled turntable against a black background. Objects from six categories are used in this research. The neural classification system is trained with 300 images, 50 images of each class. The classification system is assessed under two conditions: one with images of the objects captured from various angles, and one with images in which the object is partially occluded. For this purpose, 900 images (150 per class) are used in the first experiment and 600 partially occluded images (100 per class) in the second. The image database [21] is fabricated with partial occlusion to evaluate the efficiency of WHOG features.

The effect of occlusion is analyzed with both methods as follows.

B. HOG vs. WHOG

This section compares our proposed WHOG feature descriptors with HOG [9] on grayscale images. WHOG is not intended as a state-of-the-art or stand-alone solution to the image classification problem, but as an enhancement of HOG feature description. Two experiments are performed: the first evaluates performance without occlusion, and the second evaluates performance in the presence of partial occlusion.

1) Classification of object images without occlusion:

An image classification system involves four main steps: preprocessing, feature extraction, training of the classifier and testing of the classifier. The first step, preprocessing, consists of image manipulation operations such as RGB-to-grayscale conversion and histogram equalization. Fig. 5 shows sample training images of three classes from the COIL-100 library. Sample images with partial occlusion, used to test the performance of the feature descriptors, are shown in Fig. 6.

Fig. 5. Sample images for training

Fig. 6. Sample images with partial occlusion
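The preprocessing operations named above, RGB-to-grayscale conversion and histogram equalization, can be sketched in pure Python as follows. The ITU-R BT.601 luma weights and the global CDF-based equalization are standard choices we assume here; the paper does not say which variants it used, and the function names are hypothetical.

```python
def rgb_to_gray(rgb_image):
    """Convert rows of (r, g, b) tuples to 8-bit gray using BT.601 luma weights."""
    return [[int(0.299 * r + 0.587 * g + 0.114 * b + 0.5) for (r, g, b) in row]
            for row in rgb_image]

def equalize_histogram(gray, levels=256):
    """Global histogram equalization of an 8-bit grayscale image (list of rows)."""
    counts = [0] * levels
    for row in gray:
        for v in row:
            counts[v] += 1
    cdf, running = [0] * levels, 0
    for v in range(levels):
        running += counts[v]
        cdf[v] = running
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)   # CDF at the darkest occupied level
    if total == cdf_min:                      # constant image: nothing to stretch
        return [row[:] for row in gray]
    scale = (levels - 1) / (total - cdf_min)
    # Map each level through the normalized CDF, rounding to the nearest integer.
    return [[int((cdf[v] - cdf_min) * scale + 0.5) for v in row] for row in gray]
```

For instance, `equalize_histogram([[50, 50], [100, 200]])` stretches the three occupied levels to `[[0, 0], [128, 255]]`.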

Next, WHOG features are extracted from the object images following the steps in Fig. 4 and used for supervised training of the classifier; finally, the feature vectors of the test images are used to measure performance. Performance is evaluated with the following three metrics, and the results are presented in Table 1.

$$\text{Precision} = \frac{\text{True Positive}}{\text{True Positive} + \text{False Positive}} \times 100$$

$$\text{Recall} = \frac{\text{True Positive}}{\text{True Positive} + \text{False Negative}} \times 100$$

$$\text{Specificity} = \frac{\text{True Negative}}{\text{True Negative} + \text{False Positive}} \times 100$$

Precision is the fraction of the samples assigned to the positive class by the classifier that are truly positive; the higher the precision, the fewer false-positive errors the classifier commits. Recall, also called sensitivity or the True Positive Rate (TPR), is the fraction of actual members of the class that are correctly recognized as such; a high recall means that few positive samples are misclassified as negative. Recall is complemented by specificity, the true-negative rate, a measure commonly used in multiclass problems when one is more interested in a particular class.
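A minimal sketch of how the three metrics can be computed per class in a multiclass problem, treating each class one-vs-rest. The confusion-matrix layout (rows are true classes, columns are predicted classes) and the function name are assumptions. The per-class accuracy is the mean of precision and recall, which is how the result discussion describes the "Classif. Accur." columns of Tables 1 and 2.

```python
def per_class_metrics(confusion):
    """One-vs-rest (precision, recall, specificity, accuracy) in % for each class.

    confusion[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                       # class k predicted as another class
        fp = sum(confusion[i][k] for i in range(n)) - tp  # other classes predicted as k
        tn = total - tp - fn - fp
        precision = 100.0 * tp / (tp + fp) if tp + fp else 0.0
        recall = 100.0 * tp / (tp + fn) if tp + fn else 0.0
        specificity = 100.0 * tn / (tn + fp) if tn + fp else 0.0
        accuracy = (precision + recall) / 2  # per-class accuracy as used in the tables
        results.append((precision, recall, specificity, accuracy))
    return results
```

With the toy matrix `[[5, 1, 0], [0, 4, 1], [1, 0, 8]]`, class 0 has 5 true positives, 1 false negative, 1 false positive and 13 true negatives, so its precision and recall are both 5/6 (about 83.3 %) and its specificity is 13/14 (about 92.9 %).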

The proposed system is compared with the HOG feature descriptors of [9]. Two experiments are conducted on different datasets. The first investigates whether the features are invariant for images acquired from different viewing angles of an object; its outcome is shown in Table 1, where WHOG matches or exceeds the classification accuracy of HOG for every class.

Table 1. Performance analysis of the proposed system and HOG without occlusion (all values in %)

| Class | WHOG Precision | WHOG Recall | WHOG Specificity | WHOG Classif. Accuracy | HOG [9] Precision | HOG [9] Recall | HOG [9] Specificity | HOG [9] Classif. Accuracy |
|---|---|---|---|---|---|---|---|---|
| 1 | 99.6 | 100 | 98.4 | 99.8 | 98.6 | 100 | 99.3 | 99.3 |
| 2 | 97.3 | 98.6 | 99.3 | 97.95 | 94.0 | 87.5 | 90.7 | 90.7 |
| 3 | 95.9 | 98.2 | 97.8 | 97.05 | 95.9 | 97.2 | 96.55 | 96.55 |
| 4 | 94.5 | 95.8 | 98.4 | 95.15 | 94.5 | 95.8 | 95.15 | 95.15 |
| 5 | 93.2 | 94.4 | 97.4 | 93.8 | 93.2 | 94.4 | 93.8 | 93.8 |
| 6 | 100 | 93.1 | 99.2 | 96.55 | 89.0 | 90.3 | 89.65 | 89.65 |

2) Classification of object images under partial occlusion:

The second experiment examines how partial occlusion of the object affects the proposed features. Images from the original database are artificially modified with partial occlusion. Two neural classifiers are trained: one with the HOG feature descriptors of the original (unoccluded) images, and another with the WHOG feature matrix of the same unoccluded images. HOG and WHOG descriptors are then extracted from the partially occluded images to test each classifier, and the statistical measures are recorded in Table 2.
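The paper does not describe how the occlusion was fabricated. Purely as an illustration, one simple assumption is to paste a filled rectangle over part of the object, as in the sketch below; the rectangle shape, the fill value and both function names are hypothetical.

```python
def add_rectangular_occlusion(image, top, left, height, width, value=0):
    """Return a copy of a grayscale image with a filled rectangle pasted over it."""
    occluded = [row[:] for row in image]  # leave the original image untouched
    for y in range(max(top, 0), min(top + height, len(image))):
        for x in range(max(left, 0), min(left + width, len(image[0]))):
            occluded[y][x] = value
    return occluded

def occluded_fraction(original, occluded):
    """Fraction of pixels changed by the occluder."""
    changed = sum(a != b for ra, rb in zip(original, occluded) for a, b in zip(ra, rb))
    return changed / (len(original) * len(original[0]))
```

Covering a 4×4 patch of a uniform 8×8 image with a different value changes 16 of 64 pixels, i.e. an occluded fraction of 0.25; measuring this fraction is one way to report how severe a synthetic occlusion is.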


Table 2. Performance analysis of the proposed system and HOG with occlusion (all values in %)

| Class | WHOG Precision | WHOG Recall | WHOG Specificity | WHOG Classif. Accuracy | HOG [9] Precision | HOG [9] Recall | HOG [9] Specificity | HOG [9] Classif. Accuracy |
|---|---|---|---|---|---|---|---|---|
| 1 | 92.9 | 81.3 | 96.2 | 87.1 | 90.2 | 71.8 | 92.5 | 81 |
| 2 | 80.0 | 87.5 | 92.6 | 83.75 | 75.2 | 82.3 | 88.4 | 78.75 |
| 3 | 100 | 86.5 | 94.3 | 93.25 | 88.4 | 79.2 | 91.9 | 83.8 |
| 4 | 93.3 | 87.5 | 96.4 | 90.4 | 86.6 | 79.6 | 93.5 | 83.1 |
| 5 | 79.6 | 100 | 90.7 | 89.8 | 75.9 | 90.2 | 88.6 | 83.05 |
| 6 | 88.2 | 93.8 | 93.4 | 91 | 84.3 | 85.4 | 92.5 | 84.85 |

C. Result Discussion

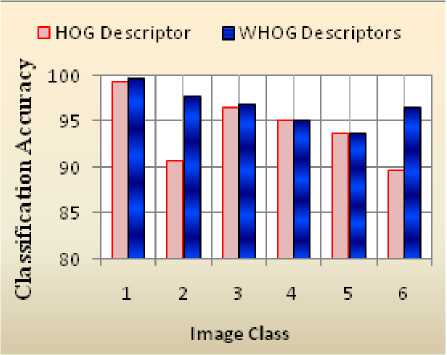

HOG Descriptors DWHOG Descriptors

(b) Performance graph for experiment 2

Fig. 7. Performance graphs for two Experiments

In this section, we discuss the comparative estimation of proposed WHOG feature descriptors and HOG descriptors [9] in two different datasets. The result presented in table 1 states that an average classification rate of 96.7 and 94.19 percent is achieved by WHOG and HOG in first experiment respectively. Similarly in second experiment classification accuracy of 89.21 and 82.45 percent is obtained by WHOG and HOG respectively.

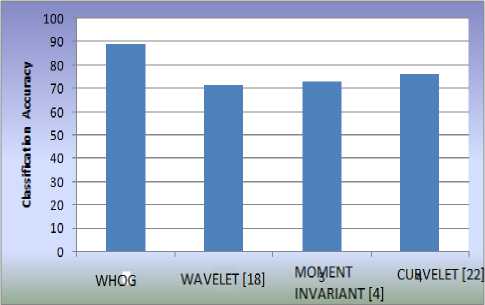

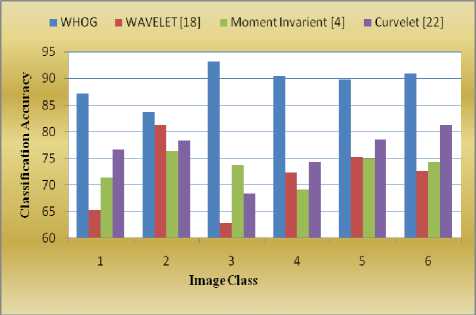

The graphs in Fig 7 represent the performance graph for the both experiments. Fig 7 (a) exhibits that WHOG based descriptors perform better for each of the class. Similarly Fig 7(b) also exhibits the robustness of the WHOG feature descriptors over HOG in presence of partial occlusion. Here the average classification accuracy of each of the image class is achieved by average of the Precision and Recall of corresponding class. The performance of WHOG features under partial occlusion dataset are also compared with the different feature extraction methods such as wavelet based feature extraction [18], moment invariant computation [4] and curvelet based feature extraction [22]. Partially occluded images are used to test the performance under different methods.

Fig. 8. Performance of various Feature Extraction Methods

The bar chart graph displayed in Fig 8 exhibits the comparison of average classification accuracy achieved through different feature extraction methods mentioned in above paragraph. It is clear from the chart that proposed method gives highest classification accuracy. Class wise performance comparison is displayed through bar graph in Fig 9.

(a) Performance graph for experiment 1

Fig. 9. Comparison of WHOG with previous works
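The average classification rates quoted in this section can be recomputed from the per-class accuracy columns of Tables 1 and 2, transcribed below. The means come to about 96.72 and 94.19 percent for Table 1, and about 89.22 and 82.4 percent for Table 2, within a few hundredths of a percentage point of the reported 89.21 and 82.45 percent. The dictionary and function names are ours.

```python
from statistics import mean

# Per-class "Classif. Accuracy" columns of Tables 1 and 2 (classes 1 to 6).
TABLE1 = {"WHOG": [99.8, 97.95, 97.05, 95.15, 93.8, 96.55],
          "HOG": [99.3, 90.7, 96.55, 95.15, 93.8, 89.65]}
TABLE2 = {"WHOG": [87.1, 83.75, 93.25, 90.4, 89.8, 91.0],
          "HOG": [81.0, 78.75, 83.8, 83.1, 83.05, 84.85]}

def average_accuracy(table):
    """Mean of the per-class classification accuracies for each descriptor."""
    return {name: mean(values) for name, values in table.items()}

for label, table in (("Table 1", TABLE1), ("Table 2", TABLE2)):
    for name, avg in average_accuracy(table).items():
        print(f"{label} {name}: {avg:.2f}%")
```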

This work presents a new image descriptor based on local features and shape, which is an enhancement over HOG features. WHOG feature descriptors for intensity images are introduced first. The performance of a classification system using these features is then examined under different conditions: objects rotated through different angles, and objects under partial occlusion. Experiments show that, on partially occluded object images, WHOG not only outperforms HOG feature descriptors but also outperforms wavelet, moment-invariant and curvelet-based features.

References

[1] W. M. Wells, "Statistical approaches to feature-based object recognition," International Journal of Computer Vision, vol. 21, no. 1/2, pp. 63–98, 1997.

[2] A. Hyvarinen, "Survey on independent component analysis," Neural Computing Surveys, vol. 2, pp. 94–128, 1999.

[3] D. M. Gavrila, J. Giebel and S. Munder, "Vision-based pedestrian detection: the PROTECTOR+ system," Proc. of the IEEE Intelligent Vehicles Symposium, Parma, Italy, 2004.

[4] A. K. Singh, V. P. Shukla, S. R. Biradar and S. Tiwari, "Performance analysis of wavelet & blur invariants for classification of affine and blurry images," Journal of Theoretical and Applied Information Technology, vol. 59, pp. 781–790, January 2014.

[5] A. Torralba, "Contextual priming for object detection," IJCV, vol. 53, no. 2, pp. 169–191, 2003.

[6] L. Wolf and S. Bileschi, "A critical view of context," IJCV, vol. 69, no. 2, pp. 251–261, 2006.

[7] M. Maire, S. Yu and P. Perona, "Object detection and segmentation from joint embedding of parts and pixels," in ICCV, 2011.

[8] M. Blaschko and C. Lampert, "Object localization with global and local context kernels," in BMVC, 2009.

[9] N. Dalal and B. Triggs, "Histograms of oriented gradients for human detection," IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 2005.

[10] S. A. Nene, S. K. Nayar and H. Murase, "Columbia Object Image Library (COIL-100)," Technical Report CUCS-006-96, February 1996.

[11] R. C. Gonzalez and R. E. Woods, Digital Image Processing, 3rd ed., Prentice Hall, 2008.

[12] S. Mallat, "A theory for multiresolution signal decomposition: the wavelet representation," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 11, no. 7, pp. 674–693, 1989.


[13] M. K. A. Mahmoud, A. Al-Jumaily and M. Takruri, "The automatic identification of melanoma by wavelet and curvelet analysis: study based on neural network classification," 11th International Conference on Hybrid Intelligent Systems (HIS), IEEE, pp. 680–685, 2012.

[14] H. T. Lau and A. Al-Jumaily, "Automatically early detection of skin cancer: study based on neural network classification," International Conference of Soft Computing and Pattern Recognition, 2011.

[15] J. A. Freeman and D. M. Skapura, Neural Networks: Algorithms, Applications, and Programming Techniques, Reading, MA: Addison-Wesley, 1992.

[16] A. B. Sankar, D. Kumar and K. Seethalakshmi, "Neural network based respiratory signal classification using various feed-forward back propagation training algorithms," European Journal of Scientific Research, vol. 49, no. 3, pp. 468–483, 2011.

[17] A. K. Singh, V. P. Shukla, S. R. Biradar and S. Tiwari, "Enhanced performance of multi class classification of anonymous noisy images," International Journal of Image, Graphics and Signal Processing, vol. 6, no. 3, pp. 27–34, 2014.

[18] A. K. Singh, S. Tiwari and V. P. Shukla, "Wavelet based multi class image classification using neural network," International Journal of Computer Applications, vol. 37, no. 4, 2012.

[19] A. K. Singh, S. Tiwari and V. P. Shukla, "An enhancement over texture feature based multiclass image classification under unknown noise," Broad Research in Artificial Intelligence and Neuroscience, vol. 4, no. 1-4, pp. 84–96, 2013.

[20] Deepa S. N. and A. D. B., "A survey on artificial intelligence approaches for medical image classification," Indian Journal of Science and Technology, vol. 4, no. 11, 2011.

[21] S. Nene, S. Nayar and H. Murase, "Columbia Object Image Library (COIL-100)," Dept. of Computer Science, Columbia University, New York, Tech. Rep. CUCS-006-96, 1996.

[22] A. K. Singh, V. P. Shukla, S. R. Biradar and S. Tiwari, "Curvelet based multiclass image classification under complex background using neural network," International Review on Computers and Software, March 2014.