A Gender Recognition Approach with an Embedded Preprocessing

Authors: Md. Mostafijur Rahman, Shanto Rahman, Emon Kumar Dey, Mohammad Shoyaib

Journal: International Journal of Information Technology and Computer Science (IJITCS) @ijitcs

Issue: No. 7, Vol. 7, 2015

Open access

Gender recognition from facial images has become an important practical problem. It is one of the main problems in computer vision, and research on it is ongoing. Although several techniques have been proposed, most of them focus on facial images captured under controlled conditions. Problems arise when classification is performed under uncontrolled conditions such as heavy noise or poor illumination. To overcome these problems, we propose a new gender recognition framework that first preprocesses and enhances the input images using Adaptive Gamma Correction with Weighting Distribution (AGCWD). For our experiments we used the Labeled Faces in the Wild (LFW) database, which contains real-life images captured under uncontrolled conditions. To measure the performance of the proposed method, we used the confusion matrix, precision, recall, F-measure, True Positive Rate (TPR), and False Positive Rate (FPR). For each feature extractor tested, the proposed framework outperforms the corresponding existing technique.

Short URL: https://sciup.org/15012319

IDR: 15012319

Full text of the article: A Gender Recognition Approach with an Embedded Preprocessing

Published Online June 2015 in MECS

Gender recognition from facial images has become an active research area in computer vision, with applications such as biometric authentication, surveillance systems, market analysis and security systems. Gender can be recognized in different ways, for example from human body shape, gait [29] or facial images [3-15, 28]. A number of gender recognition approaches exist, but none of them provides satisfactory results in some uncontrolled situations. Moreover, some facial images are so confusing that even human beings often fail to recognize the gender from them. Fig. 1 shows some images of this kind, taken from the LFW database [17]. There is therefore wide scope for improving the performance of gender recognition approaches. This paper presents a novel approach for gender recognition from facial images.


Fig. 1. Real life confusing image (a) male but looks like female, (b) female but looks like male

ears were taken as the external part. Notably, more essential information can be gained from the external facial portion. For their experiments, they used the FRGC database. Baluja et al. [10] proposed a method in which gender recognition is performed by boosting pixel comparisons. They used the FERET database and SVM on images of 20×20 pixels. Makinen et al. [11] proposed a gender recognition method evaluated on the FERET database. All of the above studies share a common limitation: they used the FERET database, in which images are captured under controlled conditions such as frontal faces and consistent lighting. However, in real-time gender recognition applications it is almost impractical to capture images under controlled conditions because of lack of sunlight, pose variation, presence of cloud, variation of illumination, differences in image capturing devices, etc. [30]. Real-time gender classification is therefore a challenging problem for which demand is increasing day by day. Although a few methods have been proposed for recognizing gender from real-life images, their performance is not as satisfactory as desired.

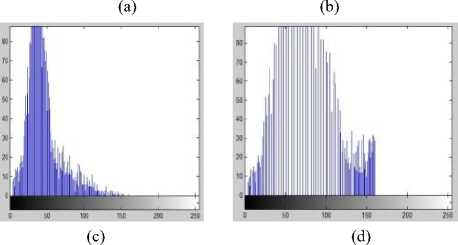

Regarding real-time gender recognition, Shakhnarovich et al. [13] collected over 3,500 facial images from websites, which are more challenging to recognize. The authors achieved 79.0% accuracy using Haar-like features with Adaboost and 75.5% accuracy using Haar-like features with SVM. Caifeng Shan [14] also investigated gender recognition using 7,443 real-life face images from the LFW database, which is captured under uncontrolled conditions. The author used boosted LBPH features with SVM and reported 94.81% accuracy. Although the author used the LFW database, he did not consider 5,790 difficult face images that are captured under uncontrolled conditions. Dey et al. [15] tried to remove noise from facial images using Histogram Equalization (HE). However, in some situations the over-enhancement caused by HE removes important features from a face image [1]. Fig. 2 shows the over-enhancement effect of HE on images from the LFW database. The authors experimented on 4,000 facial images using LBP features with DCT and obtained 79% accuracy.

Fig. 2. Problem of histogram equalization (a) Original images, (b) Images after applying HE

Table 1. Several Existing Gender Classification Techniques and their Recognition Rate

| Ref. | Features | Data set | No. of Samples | Classifier | Result (%) |
|---|---|---|---|---|---|
| [3] | Neural Network | Personal | 90 | N/A | 91.9 |
| [5] | Texture Normalization | FERET | 1,755 | Nonlinear SVM | 96.6 |
| [7] | N/A | FERET & PIE | 12,964 | LDA and SVM | 94.2 |
| [13] | Haar features | Web images | 3,500 | Adaboost | 79.0 |
| [13] | Haar features | Web images | 3,500 | SVM | 75.5 |
| [15] | LBP with HE | Personal Album | 4,000 | DCT | 79 |
| [14] | Boosted LBP | LFW | 7,443 | SVM | 94.83 |

In this paper, we use the cropped LFW database [17] for our experiments on real-life facial images. Because real-life images are captured under uncontrolled conditions, they may contain noise. Features may then not be clearly visible, which causes recognition to fail. Preprocessing is therefore the first step, to remove noise from the facial image. Many local feature based approaches have been proposed for feature extraction from facial images, such as local binary pattern (LBP) [18], local ternary pattern (LTP) [25] and local gradient pattern (LGP) [24]. Tieu and Viola [23] adapted the Adaboost algorithm for image analysis. A low-contrast or noisy image may lose the detail or the important characteristics that help to differentiate male from female faces. As a result, real-time gender classification becomes difficult. To mitigate this problem, we propose a framework whose first step is noise filtering and contrast enhancement. Noise reduction can be done in several ways, and different image contrast enhancement techniques can be employed. However, some of these techniques have an over-enhancement effect that may destroy detail in the image, and artifacts can also occur. Among the contrast enhancement and noise filtering techniques, we use Adaptive Gamma Correction with Weighting Distribution (AGCWD) to reduce the noise and make detail clearly visible.

The rest of the paper is structured as follows. Section II describes our proposed gender recognition technique, Section III presents our experiments, and Section IV contains concluding statements.

II. Proposed Method

Gender recognition broadly depends on face detection, feature extraction and classification. We used the cropped LFW database, which contains only the facial region. All faces are 64×64 pixels in size. Fig. 3 shows the proposed gender recognition framework. The input image is first passed through a preprocessing module, and then feature extractors are used to extract prominent features. Finally, an Adaboost classifier is used to classify male and female faces. The details of the proposed framework are described in the following subsections.


A. Contrast Enhancement

Gender classification from facial images uses different methods to extract distinctive features such as edges, corners and lines. Sometimes, however, distinctive features are not clearly visible because of noise. As a result, false detections occur. Noise must therefore be eliminated before feature extraction.

We use preprocessing in our proposed framework. Several image enhancement techniques are available, such as Histogram Equalization (HE) [1], Brightness Preserving Bi-Histogram Equalization (BBHE) [26], Exact Histogram Specification (EHS) [21], Recursively Separated and Weighted Histogram Equalization (RSWHE) [27], Locally Transformed Histogram [31] and Adaptive Gamma Correction with Weighting Distribution (AGCWD) [2]. Which of these techniques gives the desired result is an open question, because each has drawbacks: for example, HE sometimes over-enhances the image, and BBHE fails for non-symmetric distributions. Among the available image enhancement techniques, we use AGCWD for enhancing the contrast and reducing the noise of an image because it has some additional advantages.

Fig. 3. Proposed gender recognition framework

AGCWD enhances the contrast of an image by using gamma correction. Each pixel of the image is transformed relative to the maximum pixel intensity. As a result, over-enhancement and artifacts do not occur [30]. We therefore use AGCWD for enhancing the contrast and reducing the noise of an image. It enhances the brightness and preserves the available histogram information. In addition, the algorithm assigns more probability to infrequent gray levels, whereas a traditional transformation function does not [27]. The information carried by infrequent pixels is therefore retained. The transformation of the gamma correction (TGC) [2] is defined by (1).

$$T(l) = l_{max}\left(\frac{l}{l_{max}}\right)^{\gamma} \tag{1}$$

where $l_{max}$ is the maximum pixel intensity, $l$ is the intensity of each pixel, $T(l)$ [2] is the transformed pixel intensity, and $\gamma$ is calculated by (2).

$$\gamma = 1 - cdf_w(l) \tag{2}$$

Here, $cdf_w$ is the cumulative distribution function of the weighted distribution [2]. Fig. 4 shows an example of AGCWD.

Fig. 4. Contrast enhancement using AGCWD (a) Input image, (b) Image after applying AGCWD, (c) Input image histogram, (d) Output image histogram after applying AGCWD
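Equations (1) and (2) can be sketched in a few lines of dependency-free Python. This is an illustrative sketch, not the authors' implementation: the weighting step that produces the weighted distribution is taken from the AGCWD paper [2] rather than from the text above, the exponent `alpha=0.5` is an assumed default, and the function name `agcwd` and the list-of-rows image representation are hypothetical choices.

```python
def agcwd(image, alpha=0.5, l_max=255):
    """Enhance a grayscale image given as a list of rows of ints in [0, l_max].

    alpha is the weighting exponent described in [2]; 0.5 is an assumed default.
    """
    levels = l_max + 1
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    pdf = [h / total for h in hist]
    pdf_min, pdf_max = min(pdf), max(pdf)
    if pdf_max == pdf_min:  # perfectly flat histogram: nothing to reweight
        return [row[:] for row in image]

    # Weighting distribution (assumption from [2]): lifts infrequent gray levels.
    pdf_w = [pdf_max * ((p - pdf_min) / (pdf_max - pdf_min)) ** alpha for p in pdf]
    sum_w = sum(pdf_w)

    lut, running = [], 0.0
    for l in range(levels):
        running += pdf_w[l]
        gamma = max(0.0, 1.0 - running / sum_w)  # Eq. (2), clamped against rounding
        # Eq. (1); level 0 is kept at 0 explicitly to avoid the 0 ** 0 corner case.
        lut.append(0 if l == 0 else round(l_max * (l / l_max) ** gamma))
    return [[lut[v] for v in row] for row in image]
```

Because gamma stays between 0 and 1 and the normalized intensity is at most 1, every output level is at least as bright as its input level, which is consistent with the brightening behaviour described above.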


B. Feature Extraction

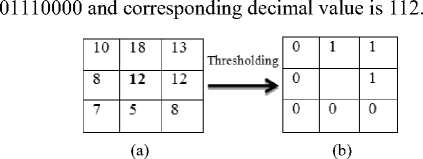

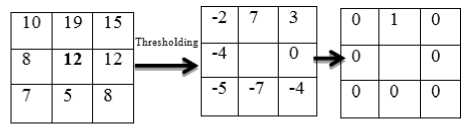

LBP, LTP, LGP and other feature extractors can effectively describe micro-patterns in a facial image. After enhancing the contrast of the images using AGCWD, we extract features from the facial image using LBP, LTP or LGP. The extracted feature histogram describes the local texture and global shape of face images. The basic concepts of LBP, LTP and LGP are presented below. Ojala et al. [22] introduced the basic LBP, which describes the basic structure of an image such as edges, corners and lines. LBP considers the 3×3 neighborhood of a pixel: a neighbor whose value is greater than or equal to the central pixel value is assigned the binary value ‘1’, and a neighbor whose value is less than the central pixel value is assigned the binary value ‘0’. The thresholding function $T(x)$ is defined by (3), where $x_i$ and $c_i$ represent the neighboring pixel value and the central pixel value respectively.

$$T(x) = \begin{cases} 1 & \text{if } x_i \ge c_i \\ 0 & \text{otherwise} \end{cases} \tag{3}$$

The binary values of the 8 neighbors are then converted to a decimal value, which is assigned as the new central pixel value. The new central pixel value is calculated by (4).

$$LBP(x_c, y_c) = \sum_{i=0}^{7} T(i) \times 2^{i} \tag{4}$$

where $(x_c, y_c)$ is the location of the central pixel and $T(i)$ is the binary value of the $i$th neighbor. Fig. 5 demonstrates basic LBP where central pixel value is 12, binary value is

Fig. 5. LBP of 3×3 neighborhood (a) 3×3 pixel matrix, (b) 3×3 binary value
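As a minimal sketch of equations (3) and (4), the following Python computes the LBP code of one 3×3 patch and of every interior pixel of an image. The neighbor ordering (clockwise from the top-left corner) is an assumption, since the text does not state which neighbor maps to which bit; the names `OFFSETS`, `lbp_code` and `lbp_image` are hypothetical.

```python
# Neighbour offsets (row, col), clockwise from the top-left corner.
# The ordering decides which neighbour gets which bit and is an assumption here.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(patch):
    """LBP code of a 3x3 patch given as a list of three rows."""
    center = patch[1][1]
    code = 0
    for i, (dr, dc) in enumerate(OFFSETS):
        if patch[1 + dr][1 + dc] >= center:  # thresholding, Eq. (3)
            code += 1 << i                   # weight 2^i, Eq. (4)
    return code


def lbp_image(image):
    """LBP codes for all interior pixels of a grayscale image (list of rows)."""
    h, w = len(image), len(image[0])
    return [
        [lbp_code([row[c - 1:c + 2] for row in image[r - 1:r + 2]])
         for c in range(1, w - 1)]
        for r in range(1, h - 1)
    ]
```

Border pixels are skipped in this sketch because they lack a full 3×3 neighborhood; padding the image first would be an equally reasonable choice.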

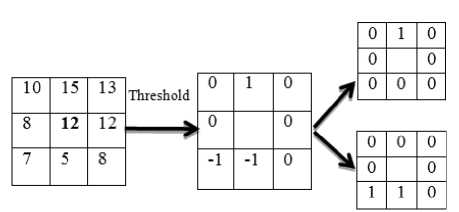

Tan et al. introduced the local ternary pattern (LTP) [25] for face recognition, which also uses a 3×3 neighborhood. In LTP, a neighbor whose value exceeds the central pixel value by 5 or more is assigned the value ‘+1’, a neighbor whose value is below the central pixel value by 5 or more is assigned the value ‘-1’, and every other neighbor is assigned the value ‘0’. The thresholding function $T(i)$ is defined by (5), where $c_i$ and $x$ represent the center and neighboring pixels respectively. Fig. 6 demonstrates LTP where the central pixel value is 12.

$$T(i) = \begin{cases} +1 & \text{if } (x - c_i) \ge +5 \\ -1 & \text{if } (x - c_i) \le -5 \\ 0 & \text{otherwise} \end{cases} \tag{5}$$


Fig.6. LTP of 3×3 neighborhood (a) 3×3 pixel matrix, (b) 3×3 ternary values (c) 3×3 binary values
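The ternary thresholding in (5) can be sketched as follows. Splitting the ternary pattern into an "upper" and a "lower" binary code follows the usual practice described by Tan and Triggs [25] and is an assumption here, as the text only mentions ternary and binary values; the neighbor ordering and the names `OFFSETS` and `ltp_codes` are likewise hypothetical.

```python
# Neighbour offsets (row, col), clockwise from the top-left corner (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def ltp_codes(patch, t=5):
    """Return (upper, lower) binary codes of a 3x3 patch for threshold t."""
    center = patch[1][1]
    upper = lower = 0
    for i, (dr, dc) in enumerate(OFFSETS):
        diff = patch[1 + dr][1 + dc] - center
        if diff >= t:        # ternary value +1 in Eq. (5)
            upper += 1 << i
        elif diff <= -t:     # ternary value -1 in Eq. (5)
            lower += 1 << i
        # otherwise the ternary value is 0 and neither code gets a bit
    return upper, lower
```

For example, with a central value of 12, a neighbor of 17 sets a bit in the upper code, a neighbor of 7 sets a bit in the lower code, and neighbors between 8 and 16 set no bit at all.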

LGP was introduced by Jun et al. [24]. It considers the 3×3 neighborhood of a pixel: a neighbor whose gradient is greater than or equal to the average of the eight neighboring gradients is assigned the binary value ‘1’, and every other neighbor is assigned the binary value ‘0’. The threshold function is defined by (6).

$$T(x) = \begin{cases} 1 & \text{if } x_g \ge \mu_g \\ 0 & \text{otherwise} \end{cases} \tag{6}$$

where $x_g$ is the neighboring gradient and $\mu_g$ is the average gradient. Fig. 7 demonstrates basic LGP where the average value is 4, the binary value is 01000000 and the corresponding decimal value is 64.


Fig.7. LGP of 3×3 neighborhood (a) 3×3 pixel matrix, (b) 3×3 gradient matrix (c) 3×3 binary matrix
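A minimal sketch of the LGP threshold in (6) is given below. The gradient of a neighbor is taken as the absolute difference between the neighbor and the central pixel, which follows Jun and Kim [24] and is an assumption with respect to the text above; the neighbor ordering and the names `OFFSETS` and `lgp_code` are hypothetical.

```python
# Neighbour offsets (row, col), clockwise from the top-left corner (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lgp_code(patch):
    """LGP code of a 3x3 patch given as a list of three rows."""
    center = patch[1][1]
    # Gradient of each neighbour: absolute difference to the centre (assumption from [24]).
    grads = [abs(patch[1 + dr][1 + dc] - center) for dr, dc in OFFSETS]
    mean = sum(grads) / len(grads)
    # Eq. (6): bit i is set when the i-th gradient reaches the average gradient.
    return sum(1 << i for i, g in enumerate(grads) if g >= mean)
```

Because the threshold is the local average gradient rather than the central intensity, the code is driven by local contrast rather than by absolute brightness.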

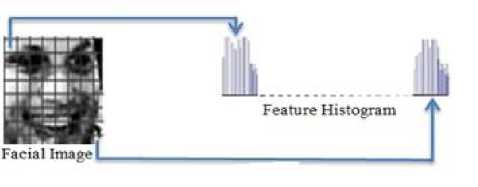

After extracting the features using any of the aforementioned feature extractors, we calculate the histogram of those features, which can be used as a feature or pattern descriptor. To capture shape information, we divide the whole image into several non-overlapping sub-blocks and compute a histogram for each. Fig. 8 illustrates an example of a facial image divided into 8×8 blocks and its histogram.

Fig.8. Sub-regions of 8×8 blocks
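The block-wise histogram descriptor can be sketched as below. The sketch assumes that "8×8 blocks" means an 8×8 grid of non-overlapping blocks and that the per-block histograms are concatenated in row-major order; the function name `block_histogram` and its parameters are hypothetical.

```python
def block_histogram(codes, grid=8, bins=256):
    """Concatenate per-block histograms of a code image (list of rows of ints).

    The image is split into grid x grid non-overlapping blocks; rows or columns
    left over when the size is not divisible by grid are ignored in this sketch.
    """
    h, w = len(codes), len(codes[0])
    bh, bw = h // grid, w // grid
    feature = []
    for by in range(grid):
        for bx in range(grid):
            hist = [0] * bins
            for r in range(by * bh, (by + 1) * bh):
                for c in range(bx * bw, (bx + 1) * bw):
                    hist[codes[r][c]] += 1
            feature.extend(hist)  # keeps the block position, hence coarse shape
    return feature
```

For a 64×64 code image this yields 64 blocks of 8×8 pixels and a feature vector of 64 × 256 entries.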


C. Classification

After generating the feature histogram using a feature descriptor such as LBP, LTP or LGP, we need to make a classification decision based on those features. For this purpose, an Adaboost classifier is employed, which iteratively boosts a sequence of weak classifiers, taking into account the errors of the previous learning rounds [23], and produces a strong classifier. The boosting is performed by (7).

$$H(x) = \sum_{t=1}^{T} \alpha_t h_t(x) \tag{7}$$

where $\alpha_t$ and $h_t(x)$ represent the boosting coefficient and the weak classifier respectively. We used Tieu and Viola’s adapted Adaboost algorithm for classification.
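To make (7) concrete, here is a small sketch of discrete AdaBoost with single-feature decision stumps as weak classifiers. It is a textbook variant written for illustration and is not claimed to match Tieu and Viola's adapted algorithm [23] in detail; the function names, the exhaustive threshold search and the labels in {-1, +1} are assumptions.

```python
import math


def stump(x, feature, threshold, polarity):
    """Weak classifier h_t: votes `polarity` when x[feature] >= threshold."""
    return polarity if x[feature] >= threshold else -polarity


def train_adaboost(X, y, rounds=10):
    """X: list of feature vectors, y: labels in {-1, +1}. Returns [(alpha, j, thr, pol)]."""
    n = len(X)
    w = [1.0 / n] * n  # sample weights, uniform at the start
    model = []
    for _ in range(rounds):
        best = None
        for j in range(len(X[0])):
            for thr in sorted({x[j] for x in X}):
                for pol in (1, -1):
                    err = sum(wi for wi, xi, yi in zip(w, X, y)
                              if stump(xi, j, thr, pol) != yi)
                    if best is None or err < best[0]:
                        best = (err, j, thr, pol)
        err, j, thr, pol = best
        err = min(max(err, 1e-10), 1 - 1e-10)      # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)    # boosting coefficient alpha_t
        # Increase the weight of misclassified samples, decrease the others.
        w = [wi * math.exp(-alpha * yi * stump(xi, j, thr, pol))
             for wi, xi, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
        model.append((alpha, j, thr, pol))
    return model


def predict(model, x):
    """Sign of H(x) in Eq. (7); ties are resolved towards +1."""
    score = sum(alpha * stump(x, j, thr, pol) for alpha, j, thr, pol in model)
    return 1 if score >= 0 else -1
```

Since each stump looks at a single histogram bin, the bins chosen over the boosting rounds also act as a form of feature selection.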


III. Experiment

This section presents our experiments, including the experimental settings and description of the dataset, the training and testing procedure, the experimental results and comparisons with other state-of-the-art methods. These are described in the following subsections.


A. Experimental Settings

We used the LFW database for our experiments [17]. LFW is a database of real-life face images collected from the web; the version used here consists of 13,230 facial images of 5,749 subjects (4,263 male, 1,486 female). The images are gender imbalanced: there are 10,255 male and 2,975 female samples. We divided the data set into five parts of similar size, keeping the same number of female and male samples in each part. Fig. 9 presents a few sample images from the LFW database, which are captured under uncontrolled conditions.


B. Training and Testing

We applied AGCWD before feature extraction. Five-fold cross-validation was performed in each case: four parts containing 10,584 facial images are used for training and the remaining 2,646 facial images are used for testing, and this process is repeated five times for all the methods. Adaboost is used for feature selection and classification. The top five hundred features selected by Adaboost are used for training and testing.

Fig. 9. A few sample male and female images from the LFW database. The first and third rows show male faces; the second and fourth rows show female faces.
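The five-way split with equal gender counts per part can be sketched as a stratified fold assignment. The shuffling, the seed and the function name `stratified_folds` are assumptions made for illustration; the text does not describe how samples were assigned to the five parts.

```python
import random


def stratified_folds(labels, k=5, seed=0):
    """Assign each sample a fold index in [0, k) so that every class is spread evenly."""
    rng = random.Random(seed)
    fold_of = [None] * len(labels)
    for cls in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            fold_of[i] = pos % k  # deal the shuffled samples round-robin into folds
    return fold_of


labels = ["M"] * 10255 + ["F"] * 2975
folds = stratified_folds(labels)
test_size = folds.count(0)            # one part is held out for testing
train_size = len(labels) - test_size  # the other four parts are used for training
```

With 10,255 male and 2,975 female samples, each part receives 2,051 male and 595 female images, which gives the 2,646 test images and 10,584 training images per round stated above.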

well as comparing with other existing systems. A confusion matrix makes it possible to visualize the performance of a method in supervised learning. Tables 2, 3 and 4 present the confusion matrices of gender recognition using LBP, LTP and LGP respectively, each with preprocessing (AGCWD) and without preprocessing.

Table 2. Confusion matrix for gender recognition without and with preprocessing in LBP

| Actual class | LBP: predicted Male | LBP: predicted Female | LBP + Preprocessing: predicted Male | LBP + Preprocessing: predicted Female |
|---|---|---|---|---|
| Male | 9,747 | 508 | 9,797 | 458 |
| Female | 784 | 2,191 | 745 | 2,230 |

Table 2 shows that using only LBP, 9,747 male and 2,191 female faces are correctly detected, while 508 male faces and 784 female faces are misclassified. LBP with the proposed preprocessing, on the other hand, recognizes 9,797 male and 2,230 female faces correctly; 458 male and 745 female faces are misclassified.


C. Experimental Result

To evaluate the performance of the proposed framework, we used True Positive Rate (TPR), False Positive Rate (FPR) and overall accuracy, defined by equations (8), (9) and (10) respectively.

$$TPR = \frac{\text{Male correctly classified}}{\text{Total male}} \tag{8}$$

Table 3. Confusion matrix for gender recognition without and with preprocessing in LTP

| Actual class | LTP: predicted Male | LTP: predicted Female | LTP + Preprocessing: predicted Male | LTP + Preprocessing: predicted Female |
|---|---|---|---|---|
| Male | 9,764 | 491 | 9,805 | 450 |
| Female | 783 | 2,192 | 731 | 2,244 |

$$FPR = \frac{\text{Female incorrectly classified}}{\text{Total female}} \tag{9}$$

$$accuracy = \frac{TP + TN}{TP + TN + FN + FP} \times 100\% \tag{10}$$

where TP (true positive) is a positive sample that the system recognizes as positive, TN (true negative) is a negative sample that the system recognizes as negative, FP (false positive) is a negative sample that is predicted as positive, and FN (false negative) is a positive sample that is predicted as negative. Accuracy is defined as the ratio of the number of correctly classified faces to the total number of faces. In addition, to illustrate the results more extensively, we adopt Precision, Recall and F-measure, which are defined by equations (11), (12) and (13) respectively.

Table 3 shows that LTP correctly detects 9,764 male and 2,192 female faces, while 491 male and 783 female faces are wrongly detected. LTP with preprocessing, on the other hand, recognizes 9,805 male and 2,244 female faces correctly; 450 male and 731 female face images are misclassified by the proposed technique.

Table 4. Confusion matrix for gender recognition without and with preprocessing in LGP

| Actual class | LGP: predicted Male | LGP: predicted Female | LGP + Preprocessing: predicted Male | LGP + Preprocessing: predicted Female |
|---|---|---|---|---|
| Male | 9,696 | 559 | 9,740 | 515 |
| Female | 849 | 2,126 | 803 | 2,172 |

$$Precision = \frac{TP}{TP + FP} \tag{11}$$

$$Recall = \frac{TP}{TP + FN} \tag{12}$$

$$F\text{-}measure = \frac{2 \times Precision \times Recall}{Precision + Recall} \tag{13}$$

F-measure [16] is used as an evaluation metric for measuring the classification performance of the proposed framework. It is the harmonic mean of precision and recall; a larger F-measure indicates higher classification quality. The system was evaluated on 10,255 male and 2,975 female faces.
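As a worked check of equations (8) to (13), the sketch below computes the metrics from the LBP confusion matrix counts reported in Table 2, treating male as the positive class. The function name `metrics` and the dictionary layout are hypothetical; only the counts come from the paper.

```python
def metrics(tp, fn, fp, tn):
    """Evaluation metrics from confusion-matrix counts (male = positive class)."""
    precision = tp / (tp + fp)                               # Eq. (11)
    recall = tp / (tp + fn)                                  # Eq. (12)
    return {
        "tpr": tp / (tp + fn),                               # Eq. (8)
        "fpr": fp / (fp + tn),                               # Eq. (9)
        "accuracy": 100.0 * (tp + tn) / (tp + tn + fn + fp), # Eq. (10)
        "precision": precision,
        "recall": recall,
        "f_measure": 2 * precision * recall / (precision + recall),  # Eq. (13)
    }


# Counts from Table 2: rows are actual classes, columns are predicted classes.
lbp = metrics(tp=9747, fn=508, fp=784, tn=2191)
lbp_prep = metrics(tp=9797, fn=458, fp=745, tn=2230)
```

With these counts the overall accuracy comes out at about 90.23% without preprocessing and about 90.91% with preprocessing, in line with the averages reported for LBP in Table 5.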

Besides these, three confusion matrices are created for evaluating the performance of the proposed system as

Table 4 shows that LGP correctly detects 9,696 male and 2,126 female faces, whereas LGP with preprocessing recognizes 9,740 male and 2,172 female faces correctly. 559 male faces are misclassified using only LGP, compared with 515 when preprocessing is applied before LGP. Female recognition with LGP is also improved by our method.

In summary, the above confusion matrices show that the female recognition rate of the existing methods is not as satisfactory as expected. One of the major reasons is that the textures of the facial images are not properly visible, as can be seen in Fig. 10. In addition, the number of male images in the LFW database is significantly larger than the number of female images, which might also affect the result. However, when we incorporate our method, the female recognition rate increases. It can therefore be concluded that the recognition performance of LBP, LTP and LGP increases after applying the AGCWD-based preprocessing technique.


Fig. 10. Images incorrectly recognized by the existing approach but correctly recognized by the proposed approach. (a) Original images, (b) Images after applying the proposed preprocessing

Tables 5, 6 and 7 present the accuracy, precision, recall and F-measure for each fold using LBP, LTP and LGP respectively, without and with preprocessing.

Table 5. Performance Analysis of Proposed Gender Recognition Technique (LBP)

| Fold | Accuracy (%): LBP | Accuracy (%): LBP + Prep. | Precision: LBP | Precision: LBP + Prep. | Recall: LBP | Recall: LBP + Prep. | F-measure: LBP | F-measure: LBP + Prep. |
|---|---|---|---|---|---|---|---|---|
| 1 | 89.68 | 91.01 | 0.927 | 0.933 | 0.940 | 0.953 | 0.934 | 0.943 |
| 2 | 91.49 | 91.61 | 0.924 | 0.928 | 0.970 | 0.966 | 0.946 | 0.947 |
| 3 | 88.21 | 89.04 | 0.909 | 0.915 | 0.942 | 0.946 | 0.925 | 0.931 |
| 4 | 91.27 | 91.69 | 0.935 | 0.937 | 0.954 | 0.957 | 0.944 | 0.947 |
| 5 | 90.51 | 91.20 | 0.932 | 0.934 | 0.946 | 0.954 | 0.939 | 0.944 |
| Avg. | 90.23 | 90.91 | 0.925 | 0.928 | 0.950 | 0.955 | 0.938 | 0.942 |

Table 6. Performance Analysis of Proposed Gender Recognition Technique (LTP)

| Fold | Accuracy (%): LTP | Accuracy (%): LTP + Prep. | Precision: LTP | Precision: LTP + Prep. | Recall: LTP | Recall: LTP + Prep. | F-measure: LTP | F-measure: LTP + Prep. |
|---|---|---|---|---|---|---|---|---|
| 1 | 90.82 | 91.50 | 0.930 | 0.934 | 0.953 | 0.958 | 0.941 | 0.946 |
| 2 | 89.04 | 91.31 | 0.910 | 0.929 | 0.953 | 0.961 | 0.931 | 0.945 |
| 3 | 89.23 | 90.02 | 0.921 | 0.924 | 0.942 | 0.950 | 0.931 | 0.937 |
| 4 | 91.84 | 91.95 | 0.937 | 0.938 | 0.960 | 0.960 | 0.948 | 0.949 |
| 5 | 90.93 | 90.59 | 0.931 | 0.928 | 0.953 | 0.952 | 0.942 | 0.940 |
| Avg. | 90.37 | 91.07 | 0.926 | 0.931 | 0.952 | 0.956 | 0.938 | 0.943 |

Table 7. Performance Analysis of Proposed Gender Recognition Technique (LGP)

| Fold | Accuracy (%): LGP | Accuracy (%): LGP + Prep. | Precision: LGP | Precision: LGP + Prep. | Recall: LGP | Recall: LGP + Prep. | F-measure: LGP | F-measure: LGP + Prep. |
|---|---|---|---|---|---|---|---|---|
| 1 | 89.30 | 90.40 | 0.923 | 0.928 | 0.941 | 0.950 | 0.932 | 0.939 |
| 2 | 89.72 | 90.36 | 0.915 | 0.923 | 0.956 | 0.955 | 0.935 | 0.939 |
| 3 | 87.57 | 88.51 | 0.911 | 0.916 | 0.931 | 0.938 | 0.921 | 0.927 |
| 4 | 90.63 | 90.44 | 0.929 | 0.926 | 0.952 | 0.953 | 0.940 | 0.939 |
| 5 | 89.57 | 90.48 | 0.920 | 0.926 | 0.948 | 0.954 | 0.934 | 0.939 |
| Avg. | 89.36 | 90.04 | 0.919 | 0.924 | 0.945 | 0.950 | 0.932 | 0.937 |

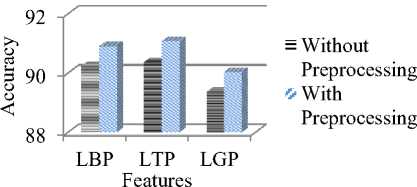

The above tables and discussion show that after applying the proposed preprocessing to LBP, LTP and LGP, the average accuracy and the average F-measure increase. Because both accuracy and F-measure increase on average, we conclude that the proposed framework outperforms the existing LBP, LTP and LGP based methods.

Fig.11. Accuracy (%) of different techniques

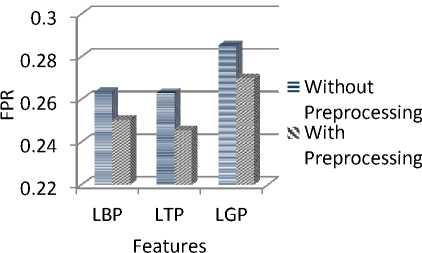

Table 8 presents the TPR and FPR of LBP, LTP and LGP with and without preprocessing. True positive rate and false positive rate are calculated by equations (8) and (9) respectively. A higher TPR and a lower FPR indicate higher recognition accuracy.

Table 8. TPR and FPR of Proposed Method using LBP, LTP and LGP

| Feature Types | TPR: Without Prep. | TPR: With Prep. | FPR: Without Prep. | FPR: With Prep. |
|---|---|---|---|---|
| Basic LBP | .95046 | .95534 | .26352 | .25042 |
| LTP | .95212 | .95612 | .26319 | .24571 |
| LGP | .94549 | .94978 | .28538 | .26992 |

Table 8 shows that TPR increases when preprocessing is applied before LBP, LTP and LGP, while FPR decreases. The TPR and FPR results therefore also indicate that the proposed framework performs better than the existing techniques. Fig. 12 and Fig. 13 plot the TPR and FPR results respectively.

Fig. 12. TPR of different techniques (x-axis: features LBP, LTP and LGP; series: without and with preprocessing)


D. Discussion

Tables 2-8 and Figs. 10-13 show that, by adopting a suitable preprocessing technique, this research increases the average accuracy, precision, recall and F-measure compared with current state-of-the-art gender recognition methods. In real-life images several kinds of noise may exist because of poor illumination, the quality of the image capturing devices, the presence of cloud, etc. As a result, prominent features cannot be effectively extracted from the facial images. To obtain distinguishing features from the images, it is also necessary to increase their brightness and contrast. We tried to choose a better preprocessing technique to improve the performance of gender recognition. The main reason for the improvement is that we adopted the AGCWD contrast enhancement technique, which does not over-enhance an image and also reduces noise.

Fig.13. FPR of different techniques

It also avoids the generation of unfavorable artifacts. Over-enhancement and artifact generation are the major problems of traditional image enhancement methods, and they reduce recognition performance. By using an effective preprocessing technique, our proposed gender recognition method therefore performs better than other existing methods based on LBP, LTP or LGP.


IV. Conclusion

In this paper, we reviewed state-of-the-art gender recognition techniques. All of these methods show competitive results and perform reasonably well. However, they may fail under illumination problems, which can be alleviated by using an image enhancement technique as a preprocessing step. This is demonstrated in detail in our experimental section. Our proposed method enhances the images, and existing feature extractors such as LBP, LTP or LGP are then applied to the preprocessed images. Cross-validation on the LFW dataset shows that the proposed method works better than the existing gender recognition methods. It is well known that illumination and pose variations are the major problems for feature based holistic approaches. In this paper, we address the illumination problem. A more sophisticated image preprocessing technique, together with dynamically sized blocks to accommodate pose variation, could further improve the overall accuracy; we will address this in future work.

References

[1] R. C. Gonzalez and R. E. Woods, Digital Image Processing. 3rd Edition, Prentice-Hall, Inc, Upper Saddle River, NJ, USA, 2006.

[2] Shih-Chia Huang, Fan-Chieh Cheng, and Yi-Sheng Chiu, "Efficient contrast enhancement using adaptive gamma correction with weighting distribution," In: IEEE Transactions on Image Processing, 2013, pp. 1032-1041.

[3] Golomb, B.A., Lawrence, D.T., Sejnowski, T.J., "Sexnet: a neural network identifies sex from human faces," In: Adv. Neural Inform. Process. Systems (NIPS), 1991, pp. 572-577.

[4] Brunelli, R., Poggio, T., "Hyperbf networks for gender classification," In: DARPA Image Understanding Workshop, 1992, pp. 311-314.

[5] Yang, Z., Li, M., Ai, H., "An experimental study on automatic face gender classification," In: International Conference on Pattern Recognition (ICPR), 2006, pp. 1099-1102.

[6] Moghaddam, B., Yang, M., "Learning gender with support faces," In: IEEE Trans. Pattern Anal. Machine Intell., 2002, pp. 707-711.

[7] BenAbdelkader, C., Griffin, P., "A local region-based approach to gender classification from face images," In: IEEE Conf. on Computer Vision and Pattern Recognition Workshop, 2005, pp. 52-52.

[8] Lapedriza, A., Marin-Jimenez, M.J., Vitria, J., "Gender recognition in non-controlled environments," In: Internat. Conf. on Pattern Recognition (ICPR), 2006, pp. 834-837.

[9] Dey, E.K., Muctadir, H.M., "Chest X-ray analysis to detect mass tissue in lung," Informatics, Electronics & Vision (ICIEV), 2014 International Conference on, vol. 1, no. 5, 2014, pp. 23-24.

[10] Baluja, S., Rowley, H.A., "Boosting sex identification performance," In: Internat. J. Computer Vision, 2007, pp. 111-119.

[11] Mäkinen, E., Raisamo, R., "Evaluation of gender classification methods with automatically detected and aligned faces," In: IEEE Trans. Pattern Anal. Machine Intell., 2008, pp. 541-547.

[12] Hadid, A., Pietikäinen, M., "Combining appearance and motion for face and gender recognition from videos," In: Pattern Recognition, 2009, pp. 2818-2827.

[13] Shakhnarovich, G., Viola, P.A., Moghaddam, B., "A unified learning framework for real time face detection and classification," In: IEEE Internat. Conf. on Automatic Face & Gesture Recognition (FG), 2002, pp. 14-21.

[14] Caifeng Shan, "Learning local binary patterns for gender classification on real-world face images," In: Pattern Recognition Letters, 2012, pp. 431-437.

[15] Dey, Emon Kumar, Mohsin Khan, and Md Haider Ali, "Computer Vision-Based Gender Detection from Facial Image," International Journal of Advanced Computer Science 3, no. 8 (2013).

[16] Powers, D.M.W., "Evaluation: from Precision, Recall and F-measure to ROC, Informedness, Markedness and Correlation," In: Journal of Machine Learning Technologies, 2011, pp. 37-63.

[17] Huang, G., Ramesh, M., Berg, T., Learned-Miller, E., "Labeled faces in the wild: A database for studying face recognition in unconstrained environments," In: Tech. Rep., University of Massachusetts, Amherst, 2007.

[18] Ahonen, T., Hadid, A., Pietikäinen, M., "Face recognition with local binary patterns," In: European Conf. on Computer Vision (ECCV), 2004, pp. 469-481.

[19] Sun, N., Zheng, W., Sun, C., Zou, C., Zhao, L., "Gender classification based on boosting local binary pattern," In: Internat. Symp. on Neural Networks, 2006, pp. 194-201.

[20] Lian, H., Lu, B., "Multi-view gender classification using multi-resolution local binary patterns and support vector machines," In: Internat. J. Neural Systems, 2007, pp. 479-487.

[21] Coltuc, P. Bolon, and J. M. Chassery, "Exact histogram specification," In: IEEE Transactions on Image Processing, 2006, pp. 1143-1152.

[22] Ojala, T., Pietikäinen, M., Mäenpää, T., "Multiresolution gray-scale and rotation invariant texture classification with local binary patterns," In: IEEE Trans. Pattern Anal. Machine Intell., 2002, pp. 971-987.

[23] K. Tieu and P. Viola, "Boosting image retrieval," In: Proc. of Computer Vision and Pattern Recognition, 2000, pp. 228-235.

[24] Jun, Bongjin, and Daijin Kim, "Robust face detection using local gradient patterns and evidence accumulation," In: Pattern Recognition, 2012, pp. 3304-3316.

[25] X. Tan and B. Triggs, "Enhanced local texture feature sets for face recognition under difficult lighting conditions," In: IEEE Transactions on Image Processing, 2010, pp. 1635-1650.

[26] Y.-T Kim, "Contrast enhancement using brightness preserving bi-histogram equalization," In: IEEE Transactions on Consumer Electronics, 1997, pp. 1-8.

[27] Mary Kim and Min Gyo Chung, "Recursively separated and weighted histogram equalization for brightness preservation and contrast enhancement," In: IEEE Transactions on Consumer Electronics, 2008, pp. 1389-1397.

[28] Tin, Hlaing Htake Khaung, "Perceived Gender Classification from Face Images," International Journal of Modern Education and Computer Science (IJMECS), vol. 4, no. 1, pp. 12-18, 2012.

[29] Arai, Kohei, and Rosa Andrie Asmara, "Gender Classification Method Based on Gait Energy Motion Derived from Silhouette Through Wavelet Analysis of Human Gait Moving Pictures," International Journal of Information Technology & Computer Science (IJITCS), vol. 6, no. 3, 2014.

[30] Rahman, Shanto, Md Mostafijur Rahman, Khalid Hussain, Shah Mostafa Khaled, and Mohammad Shoyaib, "Image Enhancement in Spatial Domain: A Comprehensive Study," International Conference on Computer and Information Technology (ICCIT), 2014.

[31] Khalid Hossain, Shanto Rahman, Shah Mostofa Khaled, M. Abdullah Al-Wadud, Dr. Mohammad Shoyaib, "Dark Image Enhancement by Locally Transformed Histogram," 8th International Conference on Software, Knowledge, Information Management and Applications (SKIMA), 2014.