A modification of Dai-Yuan’s conjugate gradient algorithm for solving unconstrained optimization


The spectral conjugate gradient method is an important generalization of the conjugate gradient method and one of the effective numerical methods for solving large-scale unconstrained optimization problems. We propose a new spectral Dai-Yuan (SDY) conjugate gradient method for solving nonlinear unconstrained optimization problems. The global convergence of the proposed method is established under appropriate conditions. Numerical tests on 65 benchmark problems assess its effectiveness in comparison with other methods, such as the AMDYN algorithm and the classical Dai-Yuan method.

Unconstrained optimization, conjugate gradient method, spectral conjugate gradient, sufficient descent, global convergence

Short URL: https://sciup.org/147238546

IDR: 147238546 | UDC: 519.6+517.972 | DOI: 10.14529/mmp220309

Text of the short communication

The conjugate gradient method (CGM) is one of the most widely used approaches for solving nonlinear optimization problems. We consider the following unconstrained optimization problem:

$$\min_{x \in \mathbb{R}^n} f(x), \qquad (1)$$

where $f : \mathbb{R}^n \to \mathbb{R}$ is continuously differentiable and bounded below. To solve problem (1), an iterative method of the form

$$x_{r+1} = x_r + \alpha_r d_r \qquad (2)$$

is employed, where $d_r$ is the search direction and $\alpha_r > 0$ is a step size obtained by a line search [1]. The strong Wolfe conditions are among the most widely used inexact line searches for ensuring the convergence of conjugate gradient methods; they require $\alpha_r$ to satisfy

$$f(x_{r+1}) - f(x_r) \le \delta \alpha_r \nabla f(x_r)^T d_r, \qquad (3a)$$

$$\left| g_{r+1}^T d_r \right| \le -\sigma g_r^T d_r, \qquad (3b)$$

where $g_r = \nabla f(x_r)$ and $0 < \delta < \sigma < 1$. More information may be found in [2]. The directions $d_r$ are calculated using the following rule:

$$d_0 = -g_0, \qquad d_{r+1} = -g_{r+1} + \beta_r d_r, \quad r \ge 0, \qquad (4)$$

where $\beta_r \in \mathbb{R}$ is the conjugacy coefficient. Different choices of $\beta_r$ give different conjugate gradient methods; see, for example, [3–8]. The main difference between the spectral conjugate gradient approach and the classical conjugate gradient method lies in how the search direction is computed. The search direction of the spectral method is

$$d_{r+1} = -\theta_{r+1} g_{r+1} + \beta_r s_r, \qquad (5)$$

where $s_r = \alpha_r d_r = x_{r+1} - x_r$ and $\theta_{r+1}$ is the spectral parameter. Spectral conjugate gradient methods are powerful tools for solving large-scale unconstrained optimization problems, and many such algorithms have been proposed; see [9–20] for details.
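As an illustration of conditions (3a)–(3b), the following minimal Python sketch checks whether a given trial step satisfies them. The function name `satisfies_strong_wolfe` is hypothetical, and the defaults δ = 10^-4 and σ = 0.9 are simply the values reported for the numerical experiments; it is a test for a candidate step, not a line search.

```python
import numpy as np


def satisfies_strong_wolfe(f, grad, x, d, alpha, delta=1e-4, sigma=0.9):
    """Return True if step alpha along d satisfies (3a) and (3b).

    Assumes 0 < delta < sigma < 1 and that d is a descent direction.
    """
    gd = grad(x) @ d                                   # g_r^T d_r (negative for descent)
    x_new = x + alpha * d
    sufficient_decrease = f(x_new) - f(x) <= delta * alpha * gd   # (3a)
    curvature = abs(grad(x_new) @ d) <= -sigma * gd               # (3b)
    return bool(sufficient_decrease and curvature)


# Usage on f(x) = ||x||^2 / 2 from x = (1, 1) along d = -g:
f = lambda x: 0.5 * x @ x
grad = lambda x: x
x0 = np.array([1.0, 1.0])
print(satisfies_strong_wolfe(f, grad, x0, -x0, 1.0))   # exact minimizer along d: True
print(satisfies_strong_wolfe(f, grad, x0, -x0, 2.0))   # no decrease: False
```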

1. Derivation of the New Parameter

The key idea is to equate the quasi-Newton search direction with the spectral conjugate gradient direction (5), $d_{r+1}^{QN} = d_{r+1}^{CG}$, which gives

$$-\rho_r H_{r+1} g_{r+1} = -\theta_{r+1} g_{r+1} + \beta_r s_r, \qquad (6)$$

where the matrix $H_{r+1}$ is symmetric and positive definite, and $\rho_r$ is the scaling parameter from [21], defined as

$$\rho_r = \frac{y_r^T y_r}{s_r^T y_r}, \qquad y_r = g_{r+1} - g_r. \qquad (7)$$

We use the DY conjugate gradient parameter because the DY method converges under the Wolfe line search; it shares its numerator, $\|g_{r+1}\|^2$, with FR [4] and its denominator with HS [3]. Taking $\beta_r = \beta_r^{DY} = \|g_{r+1}\|^2 / (s_r^T y_r)$ in (6) and using (7), we obtain

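$$-\frac{y_r^T y_r}{s_r^T y_r} H_{r+1} g_{r+1} = -\theta_{r+1} g_{r+1} + \frac{\|g_{r+1}\|^2}{s_r^T y_r} s_r. \qquad (8)$$

Multiplying (8) on the left by $y_r^T$ and using the quasi-Newton (secant) condition $H_{r+1} y_r = s_r$, which gives $y_r^T H_{r+1} = s_r^T$ because $H_{r+1}$ is symmetric, we get

$$-\frac{y_r^T y_r}{s_r^T y_r}\, s_r^T g_{r+1} = -\theta_{r+1}\, y_r^T g_{r+1} + \frac{\|g_{r+1}\|^2}{s_r^T y_r}\, y_r^T s_r = -\theta_{r+1}\, y_r^T g_{r+1} + \|g_{r+1}\|^2.$$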

Solving for $\theta_{r+1}$ gives the new spectral parameter

$$\theta_{r+1}^{SDY} = \frac{\|g_{r+1}\|^2}{y_r^T g_{r+1}} + \frac{y_r^T y_r}{s_r^T y_r}\,\frac{s_r^T g_{r+1}}{y_r^T g_{r+1}}, \qquad (9)$$

and the proposed search direction is defined as

$$d_{r+1} = -\left[\frac{\|g_{r+1}\|^2}{y_r^T g_{r+1}} + \frac{y_r^T y_r}{s_r^T y_r}\,\frac{s_r^T g_{r+1}}{y_r^T g_{r+1}}\right] g_{r+1} + \frac{\|g_{r+1}\|^2}{s_r^T y_r}\, s_r. \qquad (10)$$
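A minimal NumPy sketch of formulas (9) and (10) follows, assuming $s_r^T y_r > 0$ and $y_r^T g_{r+1} \ne 0$; the function name `sdy_direction` is hypothetical. Multiplying (10) by $y_r^T$ shows that the resulting direction satisfies $y_r^T d_{r+1} = -\rho_r s_r^T g_{r+1}$, which is exactly the relation used above to derive (9); the usage lines check this on small hand-picked vectors.

```python
import numpy as np


def sdy_direction(g_new, g_old, s):
    """Return (theta, beta, d) from (9)-(10).

    Assumes s^T y > 0 (guaranteed by the Wolfe conditions) and y^T g_new != 0.
    """
    y = g_new - g_old                            # y_r = g_{r+1} - g_r
    gg = g_new @ g_new                           # ||g_{r+1}||^2
    yg = y @ g_new                               # y_r^T g_{r+1}
    sy = s @ y                                   # s_r^T y_r
    rho = (y @ y) / sy                           # scaling parameter (7)
    theta = gg / yg + rho * (s @ g_new) / yg     # spectral parameter (9)
    beta = gg / sy                               # Dai-Yuan coefficient with s_r in place of d_r
    d = -theta * g_new + beta * s                # search direction (10)
    return theta, beta, d


# Usage with small hand-picked vectors (illustrative values, not from the paper):
g_new, g_old, s = np.array([1.0, 2.0]), np.array([0.5, 0.5]), np.array([1.0, 1.0])
theta, beta, d = sdy_direction(g_new, g_old, s)
y = g_new - g_old
rho = (y @ y) / (s @ y)
print(theta, beta, d)                            # theta ~ 2.5, beta = 2.5, d ~ [0, -2.5]
print(np.isclose(y @ d, -rho * (s @ g_new)))     # relation behind (9): True
```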

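Lemma 1. Suppose that the sequences $\{x_r\}$ and $\{d_r\}$ are generated by (2) and (10), where $\alpha_r$ is computed by the line search (3a)–(3b). Then the sufficient descent condition holds, that is, $d_{r+1}^T g_{r+1} \le -c\|g_{r+1}\|^2$ for some constant $c > 0$.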

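Proof. Write the spectral direction as

$$d_{r+1} = -\theta_{r+1}^{SDY} g_{r+1} + \beta_r^{DY} s_r,$$

where $\theta_{r+1}^{SDY}$ is defined in (9), that is,

$$d_{r+1} = -\left[\frac{\|g_{r+1}\|^2}{y_r^T g_{r+1}} + \frac{y_r^T y_r}{s_r^T y_r}\,\frac{s_r^T g_{r+1}}{y_r^T g_{r+1}}\right] g_{r+1} + \frac{\|g_{r+1}\|^2}{s_r^T y_r}\, s_r. \qquad (11)$$

Multiplying (11) by $g_{r+1}^T / \|g_{r+1}\|^2$, we get

$$\frac{d_{r+1}^T g_{r+1}}{\|g_{r+1}\|^2} = -\left[\frac{\|g_{r+1}\|^2}{y_r^T g_{r+1}} + \frac{y_r^T y_r}{s_r^T y_r}\,\frac{s_r^T g_{r+1}}{y_r^T g_{r+1}}\right] + \frac{\|g_{r+1}\|^2\, s_r^T g_{r+1}}{s_r^T y_r\, \|g_{r+1}\|^2}.$$

Since $y_r^T g_{r+1} \le \|y_r\|\,\|g_{r+1}\|$ and $s_r^T g_{r+1} < s_r^T y_r$, then

$$\frac{d_{r+1}^T g_{r+1}}{\|g_{r+1}\|^2} \le -\frac{\|g_{r+1}\|}{\|y_r\|} - \frac{\|y_r\|}{\|g_{r+1}\|} + \frac{s_r^T g_{r+1}}{s_r^T y_r}.$$

From the strong Wolfe condition, $s_r^T g_{r+1} \le -\sigma s_r^T g_r$, and since $s_r^T g_r \le -\frac{1}{1+\sigma}\, s_r^T y_r$, we get

$$\frac{d_{r+1}^T g_{r+1}}{\|g_{r+1}\|^2} \le -\frac{\|g_{r+1}\|}{\|y_r\|} - \frac{\|y_r\|}{\|g_{r+1}\|} + \frac{\sigma}{\sigma+1} \le -\frac{\|y_r\|}{\|g_{r+1}\|} + \frac{\sigma}{\sigma+1}.$$

Since $y_r = g_{r+1} - g_r$, we get

$$\frac{d_{r+1}^T g_{r+1}}{\|g_{r+1}\|^2} \le -\frac{\|g_{r+1} - g_r\|}{\|g_{r+1}\|} + \frac{\sigma}{\sigma+1} \le -\frac{\|g_{r+1}\|}{\|g_{r+1}\|} + \frac{\sigma}{\sigma+1}.$$

Letting $c = 1 - \frac{\sigma}{\sigma+1}$, we obtain

$$d_{r+1}^T g_{r+1} \le -c\,\|g_{r+1}\|^2.$$

□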
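The first step of the proof of Lemma 1 relies on the identity $d_{r+1}^T g_{r+1}/\|g_{r+1}\|^2 = -\theta_{r+1}^{SDY} + s_r^T g_{r+1}/(s_r^T y_r)$, obtained by multiplying (11) by $g_{r+1}^T/\|g_{r+1}\|^2$. The sketch below (hypothetical helper names) evaluates this ratio both directly and through the identity, and computes the constant $c = 1 - \sigma/(\sigma+1) = 1/(1+\sigma)$ from the end of the proof. It is a numerical illustration of these two formulas only, not a verification of the inequality chain.

```python
import numpy as np


def descent_ratio(g_new, g_old, s):
    """Return d^T g / ||g||^2 for the SDY direction, computed two ways."""
    y = g_new - g_old
    gg, yg, sy = g_new @ g_new, y @ g_new, s @ y
    theta = gg / yg + (y @ y / sy) * (s @ g_new) / yg   # theta^SDY from (9)
    d = -theta * g_new + (gg / sy) * s                  # direction (11)
    direct = (d @ g_new) / gg                           # ratio from the full direction
    via_identity = -theta + (s @ g_new) / sy            # simplified g^T (11) / ||g||^2
    return direct, via_identity


def lemma1_constant(sigma):
    """c = 1 - sigma / (sigma + 1), the constant at the end of the proof of Lemma 1."""
    return 1.0 - sigma / (sigma + 1.0)


direct, via = descent_ratio(np.array([1.0, 2.0]), np.array([0.5, 0.5]), np.array([1.0, 1.0]))
print(direct, via)              # both approximately -1.0
print(lemma1_constant(0.9))     # 1 / 1.9, about 0.526
```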

2. Convergence Analysis of the New Method

Assumption I. The following fundamental assumptions are used in this paper. (a) The level set $\Omega = \{x \in \mathbb{R}^n : f(x) \le f(x_0)\}$ is bounded. (b) The function $f$ is convex and continuously differentiable, and its gradient $g(x)$ is Lipschitz continuous, i.e., there exists a constant $L > 0$ such that $\|g(x) - g(y)\| \le L\|x - y\|$ for all $x, y \in \Omega$.

Lemma 2. Suppose that Assumption I holds, and consider any CG method in which the direction $d_{r+1}$ is a descent direction and the step size $\alpha_r$ satisfies (3a)–(3b). If

$$\sum_{r \ge 1} \frac{1}{\|d_{r+1}\|^2} = \infty, \qquad (12)$$

then

$$\liminf_{r \to \infty} \|g_r\| = 0.$$

Lemma 3. Suppose that Assumption I holds and that $d_{r+1}$ is defined by (10). Then the method satisfies (12).

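Proof. Since $\beta_r^{DY} > 0$ [7] and $0 < \beta_r^{DY} < 1$, there exists $\gamma_1 > 0$ such that

$$|\beta_r^{DY}| \le \gamma_1. \qquad (13)$$

For the spectral parameter (9),

$$|\theta_{r+1}^{SDY}| \le \frac{\|g_{r+1}\|^2}{|y_r^T g_{r+1}|} + \frac{\|y_r\|^2}{s_r^T y_r}\,\frac{|s_r^T g_{r+1}|}{|y_r^T g_{r+1}|},$$

where, by the strong Wolfe condition (3b), $|s_r^T g_{r+1}| \le -\sigma s_r^T g_r$, and

$$y_r^T g_{r+1} = \|g_{r+1}\|^2 - g_{r+1}^T g_r \ge \|g_{r+1}\|^2 - |g_{r+1}^T g_r|.$$

By the Powell restart criterion, the method restarts with $d_{r+1} = -g_{r+1}$ whenever $|g_{r+1}^T g_r| \ge 0.2\|g_{r+1}\|^2$; otherwise $|g_{r+1}^T g_r| < 0.2\|g_{r+1}\|^2$, and hence $y_r^T g_{r+1} \ge 0.8\|g_{r+1}\|^2$. Therefore

$$|\theta_{r+1}^{SDY}| \le \frac{\|g_{r+1}\|^2}{0.8\|g_{r+1}\|^2} + \frac{\|y_r\|^2}{s_r^T y_r}\,\frac{-\sigma s_r^T g_r}{0.8\|g_{r+1}\|^2} \le \gamma_2 \qquad (14)$$

for some $\gamma_2 > 0$. Since the level set $\Omega$ is bounded and $g$ is continuous, $\|g_{r+1}\|$ and $\|s_r\|$ are bounded. From (5), (13) and (14),

$$\|d_{r+1}\| \le \gamma_2\|g_{r+1}\| + \gamma_1\|s_r\| \le \gamma_3.$$

Hence

$$\sum_{r \ge 1} \frac{1}{\|d_{r+1}\|^2} \ge \frac{1}{\gamma_3^2} \sum_{r \ge 1} 1 = \infty.$$

□

3. Results and Discussion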

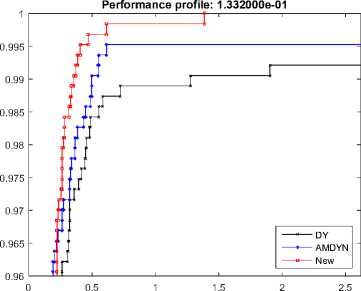

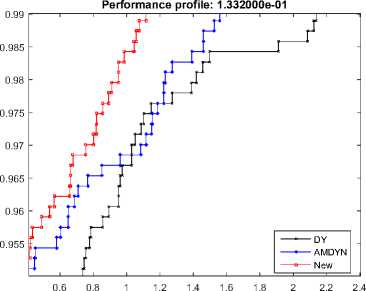

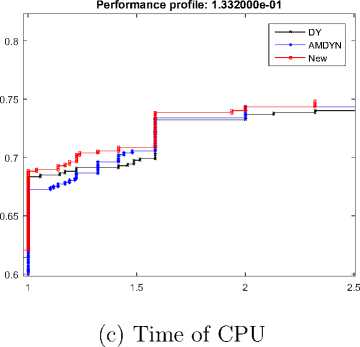

In this section, the new algorithm specified by (10) is compared with the classical DY algorithm [7] and with the AMDYN algorithm [11], which defines $\theta_{r+1}$ as follows:

$$\theta_{r+1} = \frac{1}{y_r^T g_{r+1}}\left[\|g_{r+1}\|^2 - \frac{\|g_{r+1}\|^2\,(s_r^T g_{r+1})}{y_r^T s_r} + s_r^T g_{r+1}\right].$$
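For concreteness, a compact Python sketch of the SDY iteration (2), (10) follows. It is not the authors' Fortran code. The bracketing/bisection line search for (3a)–(3b), the Powell restart test $|g_{r+1}^T g_r| \ge 0.2\|g_{r+1}\|^2$ (the criterion invoked in the proof of Lemma 3), the extra fallback to $-g_{r+1}$ when $s_r^T y_r \le 0$ or the direction fails to be a descent direction, and all function names and tolerances are assumptions made for illustration.

```python
import numpy as np


def strong_wolfe_step(f, grad, x, d, delta=1e-4, sigma=0.9, max_iter=60):
    """Bracketing + bisection search for a step satisfying (3a)-(3b).

    Simplified variant of the classical bracketing/zoom scheme (bisection
    instead of interpolation); an illustrative assumption only.
    """
    f0 = f(x)
    slope0 = grad(x) @ d                    # g_r^T d_r < 0 for a descent direction

    def phi(a):
        return f(x + a * d)

    def dphi(a):
        return grad(x + a * d) @ d

    def zoom(lo, hi):
        for _ in range(max_iter):
            a = 0.5 * (lo + hi)
            if phi(a) > f0 + delta * a * slope0 or phi(a) >= phi(lo):
                hi = a                      # (3a) fails: shrink the bracket
            else:
                da = dphi(a)
                if abs(da) <= -sigma * slope0:
                    return a                # (3a) and (3b) both hold
                if da * (hi - lo) >= 0:
                    hi = lo
                lo = a
        return 0.5 * (lo + hi)

    a_prev, a = 0.0, 1.0
    for i in range(max_iter):
        fa = phi(a)
        if fa > f0 + delta * a * slope0 or (i > 0 and fa >= phi(a_prev)):
            return zoom(a_prev, a)
        da = dphi(a)
        if abs(da) <= -sigma * slope0:
            return a
        if da >= 0:
            return zoom(a, a_prev)
        a_prev, a = a, 2.0 * a              # expand the trial step
    return a


def sdy_minimize(f, grad, x0, tol=1e-6, max_iter=1000, delta=1e-4, sigma=0.9):
    """SDY iteration (2), (10) with a Powell restart; returns (x, iterations)."""
    x = np.asarray(x0, dtype=float)
    g = grad(x)
    d = -g                                  # d_0 = -g_0
    for k in range(max_iter):
        if np.linalg.norm(g) <= tol:
            return x, k
        alpha = strong_wolfe_step(f, grad, x, d, delta, sigma)
        s = alpha * d                       # s_r = alpha_r d_r
        x_new = x + s
        g_new = grad(x_new)
        y = g_new - g
        gg, sy = g_new @ g_new, s @ y
        if abs(g_new @ g) >= 0.2 * gg or sy <= 0:
            d = -g_new                      # Powell restart (also guards s^T y <= 0)
        else:
            yg = y @ g_new                  # >= 0.8 ||g_new||^2 > 0 in this branch
            theta = gg / yg + (y @ y / sy) * (s @ g_new) / yg   # theta^SDY, (9)
            d = -theta * g_new + (gg / sy) * s                  # direction (10)
            if d @ g_new >= 0:              # hypothetical safeguard, not from the paper
                d = -g_new
        x, g = x_new, g_new
    return x, max_iter


# Usage on a small convex quadratic f(x) = sum(c_i x_i^2) / 2 (illustrative only):
c = np.arange(1.0, 6.0)
x_star, iters = sdy_minimize(lambda x: 0.5 * np.sum(c * x**2), lambda x: c * x, np.ones(5))
print(iters, np.linalg.norm(c * x_star))
```

The bisection-based line search trades speed for simplicity; a production code would use safeguarded interpolation, as in the line searches commonly paired with conjugate gradient methods.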

We chose 65 unconstrained optimization test problems, based on the generalized test functions of [22], with dimensions in the range $n = 1000, 2000, \ldots, 10000$. All algorithms use the Wolfe line search with $\sigma = 0.9$ and $\delta = 0.0001$. The codes are written in double-precision Fortran and are based on codes authored by Andrei [11, 23]. We use the performance profiles of Dolan and Moré [24] to assess efficiency: the higher a solver's curve lies, the better its performance, and the profiles indicate that the new algorithm (SDY) performs better than the classical DY. We also compare the results with AMDYN in terms of the number of iterations (NOI), the number of function and gradient evaluations (NOF & g), and CPU time (time). The numerical results are presented in the Figure.
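The performance profiles of Dolan and Moré [24] can be computed as in the following sketch. For each problem, the cost of each solver (NOI, NOF & g, or CPU time) is divided by the best cost on that problem, and $\rho_s(\tau)$ is the fraction of problems on which solver $s$ is within a factor $\tau$ of the best. Failures are encoded as `np.inf`. The function name and the toy cost matrix are hypothetical and are not the paper's data.

```python
import numpy as np


def performance_profile(T, taus):
    """Dolan-More performance profile.

    T[p, s] is the cost of solver s on problem p (np.inf marks a failure);
    problems on which every solver fails should be removed beforehand.
    Returns an array P with P[i, s] = fraction of problems whose
    performance ratio for solver s is <= taus[i].
    """
    T = np.asarray(T, dtype=float)
    best = T.min(axis=1, keepdims=True)      # best cost on each problem
    ratios = T / best                        # performance ratios r_{p,s} >= 1
    return np.array([(ratios <= tau).mean(axis=0) for tau in taus])


# Toy cost matrix: 4 problems x 2 solvers (hypothetical numbers)
T = [[10, 20], [30, 15], [5, 5], [8, np.inf]]
print(performance_profile(T, [1.0, 2.0]))    # [[0.75 0.5 ] [1.   0.75]]
```

The value at $\tau = 1$ is the fraction of problems on which a solver is the best, and the limit as $\tau$ grows is the fraction it solves at all, which is why the uppermost curve is read as the most efficient and robust solver.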

Conclusion

The aim of this work is to propose a new computational scheme for the spectral parameter $\theta_{r+1}^{SDY}$. With this choice, the search direction always possesses the sufficient descent property, and the resulting spectral conjugate gradient method is globally convergent under the strong Wolfe line search. Numerical comparisons with the DY and AMDYN conjugate gradient algorithms on a collection of 65 test problems show that the proposed algorithm outperforms these algorithms in computational performance.

Figure. Performance profiles: (a) NOI

References

- Alhawarat A., Salleh Z. Modification of Nonlinear Conjugate Gradient Method with Weak Wolfe-Powell Line Search. Abstract and Applied Analysis, 2017, vol. 2017, no. 2, pp. 1-6. DOI: 10.1155/2017/7238134

- Nocedal J., Yuan Y.-X. Analysis of a Self-Scaling Quasi-Newton Method. Mathematical Programming, 1993, vol. 61, no. 1, pp. 19-37. DOI: 10.1007/BF01582136

- Hestenes M.R., Stiefel E. Methods of Conjugate Gradients for Solving Linear Systems. Journal of Research of the National Bureau of Standards, 1952, vol. 49, no. 6, pp. 409-435. DOI: 10.6028/jres.049.044

- Fletcher R., Reeves C.M. Function Minimization by Conjugate Gradients. The Computer Journal, 1964, vol. 7, no. 2, pp. 149-154. DOI: 10.1093/comjnl/7.2.149

- Polak E., Ribière G. Note sur la convergence de méthodes de directions conjuguées. Mathematical Modelling and Numerical Analysis - Modélisation Mathématique et Analyse Numérique, 1969, vol. 3, no. 1, pp. 35-43. (in French)

- Polyak B.T. The Conjugate Gradient Method in Extremal Problems. Computational Mathematics and Mathematical Physics, 1969, vol. 9, no. 4, pp. 94-112. DOI: 10.1016/0041-5553(69)90035-4

- Dai Y.-H., Yuan Y. A Nonlinear Conjugate Gradient Method with a Strong Global Convergence Property. SIAM Journal on Optimization, 1999, vol. 10, no. 1, pp. 177-182.

- Dai Y.-H., Liao L.-Z. New Conjugacy Conditions and Related Nonlinear Conjugate Gradient Methods. Applied Mathematics and Optimization, 2001, vol. 43, no. 1, pp. 87-101. DOI: 10.1007/s002450010019

- Hassan B., Abdullah Z., Jabbar H. A Descent Extension of the Dai-Yuan Conjugate Gradient Technique. Indonesian Journal of Electrical Engineering and Computer Science, 2019, vol. 16, no. 2, pp. 661-668. DOI: 10.11591/ijeecs.v16.i2.pp661-668

- Guo J., Wan Z. A Modified Spectral PRP Conjugate Gradient Projection Method for Solving Large-Scale Monotone Equations and its Application in Compressed Sensing. Mathematical Problems in Engineering, 2019, vol. 2019, pp. 23-27. DOI: 10.1155/2019/5261830

- Andrei N. New Accelerated Conjugate Gradient Algorithms as a Modification of Dai-Yuan's Computational Scheme for Unconstrained Optimization. Journal of Computational and Applied Mathematics, 2010, vol. 234, no. 12, pp. 3397-3410. DOI: 10.1016/j.cam.2010.05.002

- Eman H., Rana A.Z., Abbas A.Y. New Investigation for the Liu-Story Scaled Conjugate Gradient Method for Nonlinear Optimization. Journal of Mathematics, 2020, vol. 2020, article ID: 3615208, 10 p. DOI: 10.1155/2020/3615208

- Yu G., Guan L., Chen W. Spectral Conjugate Gradient Methods with Sufficient Descent Property for Large-Scale Unconstrained Optimization. Optimization Methods and Software, 2008, vol. 23, no. 2, pp. 275-293. DOI: 10.1080/10556780701661344

- Ibrahim S.M., Yakubu U.A., Mamat M. Application of Spectral Conjugate Gradient Methods for Solving Unconstrained Optimization Problems. An International Journal of Optimization and Control: Theories and Applications, 2020, vol. 10, no. 2, pp. 198-205.

- Wang L., Cao M., Xing F., Yang Y. The New Spectral Conjugate Gradient Method for Large-Scale Unconstrained Optimisation. Journal of Inequalities and Applications, 2020, vol. 2020, no. 1, pp. 1-11. DOI: 10.1186/s13660-020-02375-z

- Jian J., Yang L., Jiang X., Liu P., Liu M. A Spectral Conjugate Gradient Method with Descent Property. Mathematics, 2020, vol. 8, no. 2, article ID: 280, 13 p. DOI: 10.3390/math8020280

- Danhausa A.A., Odekunle R.M., Onanaye A.S. A Modified Spectral Conjugate Gradient Method for Solving Unconstrained Minimization Problems. Journal of the Nigerian Mathematical Society, 2020, vol. 39, no. 2, pp. 223-237.

- Al-Arbo A., Al-Kawaz R. A Fast Spectral Conjugate Gradient Method for Solving Nonlinear Optimization Problems. Indonesian Journal of Electrical Engineering and Computer Science, 2021, vol. 21, no. 1, pp. 429-439. DOI: 10.11591/ijeecs.v21.i1.pp429-439

- Hassan B., Jabbar H. A New Spectral on the Gradient Methods for Unconstrained Optimization Minimization Problem. Journal of Zankoy Sulaimani, 2020, vol. 22, no. 2, pp. 217-224. DOI: 10.17656/jzs.10822

- Liu J.K., Feng Y.M., Zou L.M. A Spectral Conjugate Gradient Method for Solving Large-Scale Unconstrained Optimization. Computers and Mathematics with Applications, 2019, vol. 77, no. 3, pp. 731-739.

- Al-Bayati A.Y. A New Family of Self-Scaling Variable Metric Algorithms for Unconstrained Optimization. Journal of Education and Science, 1991, vol. 12, pp. 25-54.

- Andrei N. An Unconstrained Optimization Test Functions Collection. Advanced Modeling and Optimization, 2008, vol. 10, no. 1, pp. 147-161.

- Andrei N. New Accelerated Conjugate Gradient Algorithms for Unconstrained Optimization. Technical Report, 2008, vol. 34, pp. 319-330.

- Dolan E.D., Moré J.J. Benchmarking Optimization Software with Performance Profiles. Mathematical Programming, 2002, vol. 91, no. 2, pp. 201-213. DOI: 10.1007/s101070100263