A Pragmatic Approach for E-governance Evaluation Built over Two Streams (of Literature)

Author: Marya Butt

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: No. 8, Vol. 7, 2015.

Open access

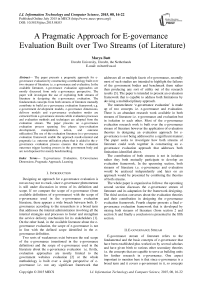

The paper presents a pragmatic approach to e-governance evaluation by constructing a methodology built over two streams of literature, i.e. e-governance and evaluation. In the available literature, e-governance evaluation approaches are mostly discussed from the e-governance perspective alone. The paper investigates the use of both streams of literature in designing the e-governance evaluation. The fundamental concepts from both streams mutually contribute to building an e-governance evaluation framework: e-government development models, e-governance dimensions, delivery models and e-governance evaluation modes are extracted from the e-governance stream, while evaluation processes and evaluation methods and techniques are adopted from the evaluation stream. The paper presents an e-governance evaluation process spanning four phases (pre-evaluation development, manipulation, action, and outcome utilization). The use of the evaluation literature in the e-governance evaluation framework makes the approach result-oriented and pragmatic, i.e. the outcome utilization phase added to the e-governance evaluation process ensures that the evaluation outcomes trigger a learning process in the government body and are not used merely for benchmarking.

E-governance Evaluation, E-Governance Dimensions, Pragmatic Approach, Learning

Short URL: https://sciup.org/15012353

IDR: 15012353

Full text of the article: A Pragmatic Approach for E-governance Evaluation Built over Two Streams (of Literature)

Published Online July 2015 in MECS

I. Introduction

Designing an approach for e-governance evaluation is no easy task, since the e-governance phenomenon is still under discussion in terms of its definition and scope. If we compare the scope of e-governance (from the available definitions) with the scope used in the e-governance evaluation literature, a wide gap appears between the two. According to researchers, e-governance is a broad term that addresses internal administration, involving all the internal strategies and processes that foster and strengthen the service delivery mechanism for its stakeholders [1]. In the available literature on e-governance evaluation, however, the scope of e-governance is not in line with the scope identified in the e-governance definition.

Two weaknesses separate the real scope of e-governance (as stated in its definition) from the scope used in the e-governance evaluation literature. Firstly, evaluation approaches are mostly limited to the evaluation of government websites [2], or the whole methodology is built over a single perspective of e-governance, i.e. there is no significant framework that addresses all or multiple facets of e-governance. Secondly, most such studies are intended to highlight the failures of government bodies and to benchmark them rather than to produce any utility out of the research results [3]. The paper presents an evaluation framework capable of addressing both limitations by devising a multidisciplinary approach.

The nomenclature ‘e-governance evaluation’ is made up of two concepts, i.e. e-governance and evaluation. Abundant research is available in both streams of literature, but in isolation from each other. Most e-governance evaluation research is built over the e-governance stream of literature, while the application of evaluation theories in designing an evaluation approach for e-governance has not been addressed in a significant manner. The paper investigates how both streams of literature could work together in constructing an e-governance evaluation approach that addresses both limitations identified above.

The two streams do not contribute in isolation; rather, they mutually participate in developing an evaluation framework. In the following sections, both streams of literature, i.e. e-governance and evaluation, are analyzed independently, and an approach is then presented by combining the theories of both streams.

The paper is organized as follows. The second section discusses the e-governance stream of literature and its adaptation for the framework design. The third section discusses the evaluation theories and their contribution to designing the e-governance evaluation framework. The fourth section presents the final e-governance evaluation framework, developed by combining both streams of literature (from sections 2 and 3), and the fifth section presents the conclusion.


II. E-governance Stream

The e-governance stream of literature refers to the fundamental concepts of e-governance that have been established and worked on by several scholars and have given rise to various secondary theories, i.e. the concepts that can serve as building units for further research in e-governance. One aspect worth mentioning here is that, since e-governance is a broad term that covers e-government, concepts related to both e-government and e-governance are used here.

Most of the literature on e-governance evaluation revolves around the e-governance stream of literature, yet this stream does not seem to be effectively exploited in the evaluation methodology, i.e. related studies are weak in addressing all the facets of e-governance.

Developing an approach that addresses all the possible facets of e-governance requires the effective assessment and manipulation of the e-governance fundamentals. The assessment of the e-governance literature paves the way for a multidisciplinary approach by highlighting the discrete components of e-governance for e-governance evaluation.

The most discussed theories of e-governance can be traced back to the time when the e-governance concept was in its infancy and various researchers came up with development models. According to Coursey and Norris [4], these models identify and track the various developmental phases of e-governance. Most of these development models comprise more or less the same components with slight changes in nomenclature; their overlap provides generic components that can be used for further processing. Apart from e-government development models, another significant concept of e-governance is its dimensions, i.e. the delivery facets of e-governance. E-governance is a multi-faceted phenomenon, and researchers [5], [6], [7], [8] have identified four main dimensions: e-services, e-management, e-commerce and e-democracy. These dimensions and the development models could contribute significantly to designing an evaluation approach for e-governance; their contribution is tabulated later in this section (Table 1).

Another well-known concept of e-governance is the delivery models, which refer to the types of digital interaction between the government and its various stakeholders. Government interacts and communicates at various tiers [9]. Six sorts of interactions are exhibited by a government body [10], including G2C (Government to Citizens), G2E (Government to Employees), G2G (Government to Governments), G2B (Government to Business), and C2B (Citizens to Business).

Apart from e-government delivery models, one simple yet very significant concept of e-governance, indispensable for designing an evaluation approach, is the evaluation modes. Evaluation modes are important to mention because, in the available literature, e-governance evaluation approaches emphasize only the external mode while the back-end mode is not significantly addressed. The delivery models and the evaluation modes help to define the scope and boundaries of the evaluation approach to be designed.

The two other concepts from the e-governance stream mentioned earlier in this section, i.e. e-government development models and e-governance dimensions, help to construct the building tiers of the evaluation approach, i.e. what is going to be evaluated and how. Table 1 presents the concepts picked from the e-governance stream, how they are manipulated for this research and what role they play in it.

Table 1. E-governance Stream Construction

E-Governance Stream (e-governance is a vast phenomenon that incorporates e-government, so fundamentals from both are exploited)

| Concepts | Manipulation for the evaluation design | Role |
|---|---|---|
| E-Government Development Models | Inter-mapping of the models reveals the generic components of e-government. | E-government development models and dimensions both contribute to developing a tiered approach, i.e. what is to be evaluated and how it is to be evaluated. |
| E-Governance Dimensions | E-governance dimensions and the components mutually contribute to identifying indicators for front-end and back-end evaluation. | E-government development models and dimensions both contribute to developing a tiered approach, i.e. what is to be evaluated and how it is to be evaluated. |
| E-Government Delivery Models | Among the various delivery models, the evaluation approach is intended for the G2C (government to citizens) delivery model. | E-government delivery models and evaluation modes both give scope and structure to the research. |
| E-Governance Evaluation Modes | The back-end and front-end evaluation modes are identified in the research, as e-governance evaluation has previously been carried out mostly in the front-end delivery mode. | E-government delivery models and evaluation modes both give scope and structure to the research. |

This section shed light on some fundamental concepts of e-governance that could play a symbiotic role in designing an e-governance evaluation approach. Their contribution remains incomplete, however, until the whole evaluation scheme is planned and the research outcomes are worked on, and this demands the contribution of evaluation-related theories. In the next section, the evaluation literature is assessed for the development of the e-governance evaluation framework.


III. Evaluation Stream

The term ‘evaluation’ is extensively discussed in terms of its types, methods and techniques, but the discussion has been limited to a few areas, e.g. educational and social programming, with a major focus on learning, efficiency and cost-effectiveness. The exploitation of evaluation theories and methods in other disciplines is not significantly observed. However, the effective use of evaluation theories in designing evaluation approaches for other disciplines increases the chances of meeting the expected outcomes. This section examines how the evaluation literature contributes to the development of the e-governance evaluation framework and what would be missing if the evaluation theories were left out.

The first and foremost concept of evaluation is the design of the evaluation process, which identifies the phases involved in carrying out an evaluation in any context. Abundant processes are available in the literature, and most of them overlap with slight changes. The slight change observed in some processes concerns outcome utilization. Almost all the processes start with a ‘pre-evaluation development’ phase and end with a ‘report and analysis’ phase, while fewer point towards outcome utilization. Outcome utilization stands for the effectiveness of the results generated by the evaluation, i.e. whether the evaluation produced results that could be exploited further for improvement or the study merely benchmarked the organization. According to Mark, Henry and Julnes [11], the evaluation outcomes must serve the betterment of society, and according to Patton [12] the real worth of any evaluation-based study is linked to outcome utilization.

Using an evaluation process in designing the e-governance evaluation framework provides the sequential phases to be followed for evaluation, with the last phase, ‘outcome utilization’, making the research a pragmatic approach. The outcome utilization element appears to be missing from the e-governance evaluation literature because evaluation theories have not previously been exploited in designing e-governance evaluation approaches. It is the use of the evaluation literature that ensures the study is result-oriented rather than merely benchmarking.
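The sequential character of the process can be illustrated with a small sketch. It assumes the four phase names that the paper gives for its own process (pre-evaluation development, manipulation, action, outcome utilization); the class and function names are hypothetical and chosen only for illustration.

```python
from enum import IntEnum


class Phase(IntEnum):
    """The four phases of the evaluation process, in execution order."""
    PRE_EVALUATION_DEVELOPMENT = 1
    MANIPULATION = 2
    ACTION = 3
    OUTCOME_UTILIZATION = 4


def next_phase(current):
    """Return the phase that follows `current`, or None after the last phase."""
    if current is Phase.OUTCOME_UTILIZATION:
        return None
    return Phase(current + 1)


def reaches_utilization(completed):
    """True only if the run went beyond reporting and utilized its outcomes."""
    return Phase.OUTCOME_UTILIZATION in completed
```

Under this reading, a study that stops after the action phase would be a benchmarking exercise, while one that also completes the last phase matches what the paper calls a pragmatic approach.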

Another important concept from the evaluation stream concerns the methods and techniques used to carry out evaluation. Abundant methods and techniques are available that could be used in combination, depending on the research scope and intention. Among them, contemporary researchers emphasize stakeholder evaluation methods, since these ensure a better evaluation design and improve evaluation quality [13]. Stakeholder evaluation ensures stakeholders' participation during various phases of the evaluation process, e.g. questionnaire design, planning, outcome delivery, and analysis.

Both concepts from the evaluation stream could add value to the e-governance evaluation literature, which so far has been limited to benchmarking government organizations. Using evaluation theories while designing the e-governance evaluation framework renders the approach pragmatic, since it triggers the learning process in the tested government bodies. According to the 4I framework [14], learning is a process that involves four developmental phases, i.e. intuiting, interpreting, integrating and institutionalizing. These four phases span three learning levels: the first is the individual level, which corresponds to the intuiting phase; the second is the group level, which spans the interpreting and integrating phases; and the third is the organizational level, which corresponds to the highest phase of learning, i.e. institutionalizing. The results of the evaluation must be handled in a way that paves the way for individual learning since, according to the 4I framework, all changes that become institutionalized start with individual learning. Table 2 presents an overview of the concepts chosen from the evaluation stream, their manipulation and their role in the research.

Table 2. Evaluation Stream Manipulation

Evaluation Stream

| Concepts | Manipulation for the evaluation design | Role |
|---|---|---|
| Evaluation Process | Assessment of the available evaluation processes from the literature to develop an evaluation process that marks out the sequential phases to be followed for the evaluation. | Both concepts give the e-governance evaluation framework a pragmatic approach with the intention of initiating learning in the government bodies, which has been ignored in the e-governance evaluation literature. |
| Evaluation Methods | Among the various available evaluation methods, the stakeholder method is selected, as it increases the probability of meeting the expected outcomes. | Both concepts give the e-governance evaluation framework a pragmatic approach with the intention of initiating learning in the government bodies, which has been ignored in the e-governance evaluation literature. |

Synopsis: Sections 2 and 3 show that both the e-governance and the evaluation streams carry fundamental concepts that, if exploited together to build an e-governance evaluation framework, could contribute significantly to the literature. The evaluation literature helps to develop a shell and a sequential flow in which e-governance evaluation can be carried out across a tiered approach (developed over the e-governance literature). The e-governance evaluation literature has so far lacked the use of evaluation theories, which is why such studies merely benchmark government organizations rather than triggering any learning opportunity for them that could strengthen the relationship between government and citizens.

In the next section, a combined approach is presented for e-governance evaluation.


IV. Combined Evaluation Approach (mix of both streams)

The evaluation process serves as a shell, and all the other methods and techniques are planned under it. The e-governance concepts help to determine what is to be evaluated and how it is to be evaluated. This section shows how the e-governance and evaluation literature go side by side to develop a combined approach. First of all, a complete evaluation cycle is needed that marks the sequence of the phases to be followed, so we start by discussing and designing the e-governance evaluation process.

The e-governance evaluation process refers to the steps or phases that must be followed to complete the evaluation of e-governance. Fig. 1 presents an evaluation process derived by assessing the available evaluation processes. The phases highlighted by the selected evaluation processes made it possible to work out a generic process that incorporates all the essential steps.

Fig. 1. E-governance evaluation process.

everyone (including people suffering from any sort of disability) is least addressed. So website evaluation is a dual sided activity i.e. usability and accessibility.

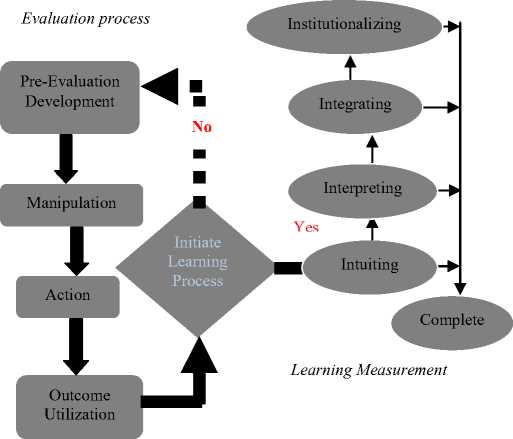

Evaluation process step 1: PRE-EVALUATION DEVELOPMENT (E-governance validation)

A. Pre-Evaluation Development

Scope:

Stakeholders

Government to citizens (G2C)

Government and Citizens. Government

means employees working in any capacity of egovernance e.g. web administrators, ICT managers, ICT advisors, ICT service assurance etc.

Evaluation Modes:

Evaluand:

end mode (internal organizational)

Website, internal systems and processes for e-

governance regulation.

Fig. 2. Pre-evaluation development.

Once pre-evaluation development is made, the next phase is the manipulation and that would be discussed in the upcoming section.

The process starts with a significant phase ‘preevaluation development’ this step is usually disguised under different nomenclatures but is present in almost all the evaluation processes. It refers to develop an understanding about the scope and the intention of the research which then facilitates to dispense the involved stakeholders within the research scope. The phase holds an imperative position as the success of the next phases is directly linked with this step.

E-Governance validation: For the development of egovernance evaluation framework, the pre-evaluation development would dispense the scope, research understanding and the potential stakeholders.

Scope: As a part of e-governance, government interacts with other government bodies (G2G), citizens (G2C), business (G2B) and employees (G2E). However for this research, the scope for the e-governance evaluation framework resides on Government to citizen’s (G2C) relationship.

Stakeholders: The scope of the research highlights two main potential stakeholders and i.e. government and the citizens.

The expansion of the G2C relationship highlights some important entities attached to it. These entities are taken out on following basis.

> The government to citizen’s relationship in egovernance is majorly established through website, so website holds a significant value.

> The government body itself is nothing it is made up of various elements e.g. employees, systems and processes etc. In e-governance stream the employees who work in any capacity with the e-governance are the stakeholders from the government side i.e. they could be web administrators, ICT managers, ICT advisors, ICT service assurance etc.

> Website evaluation is usually carried out across a performance measurement scale from general public, however its evaluation across its accessibility to

-

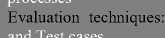

B. Manipulation

The manipulation phase develops the evaluation plan and strategy. This phase is the soul of an evaluation process, as it identifies the possible evaluation techniques and approaches and adapts them to meet the research objectives. The manipulation phase focuses on what is to be evaluated and how. On the basis of the development made in the previous phase, the final design is completed in the following ways.

Website evaluation at first tier: Website evaluation is carried out in two ways. At the first level, website accessibility is evaluated to check that the website is accessible to most groups of people, including people with physical limitations. To evaluate website accessibility, the international benchmark or standard is used, i.e. WCAG (Web Content Accessibility Guidelines) Level II, which is developed by the W3C (World Wide Web Consortium). To carry out the accessibility test of government websites, various online tools are available that can highlight the existing accessibility issues in a website; addressing those issues could make the website accessible to a wider group of people. The tools can be used in combination to identify the maximum number of addressable issues and so extend website accessibility on equitable grounds [15].
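Using several tools in combination amounts to taking the union of the issues they report while remembering which tool found each one. The following is a minimal sketch of that idea; the tool names and issue labels are hypothetical placeholders, not the output format of any real accessibility checker.

```python
from collections import defaultdict


def merge_tool_reports(reports):
    """Combine per-tool issue sets into one map: issue -> tools that found it.

    `reports` maps a tool name to the set of issue identifiers it reported.
    """
    found_by = defaultdict(set)
    for tool, issues in reports.items():
        for issue in issues:
            found_by[issue].add(tool)
    return dict(found_by)


# Hypothetical tool names and issue labels, for illustration only.
reports = {
    "tool_a": {"img-missing-alt", "low-contrast"},
    "tool_b": {"low-contrast", "form-missing-label"},
}
merged = merge_tool_reports(reports)
all_issues = sorted(merged)  # every distinct issue found by at least one tool
```

Keeping the set of reporting tools per issue also shows which issues only one tool detected, which is the practical reason for combining tools in the first place.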

Website evaluation at second tier: At the second tier, website evaluation is carried out from the citizens' perspective. Usually, citizens' responses for website evaluation are collected via an online questionnaire. According to various scholars, this is not an appropriate way, since several website measurement indicators (e.g. authenticity, accuracy, recency, integrity) require some guidance to obtain the right feedback. To avoid this problem, a group of respondents is formed (from different age groups and levels of internet proficiency), test cases with specific tasks are designed for each indicator, and the responses are collected through a solicited approach. For website evaluation, the e-governance dimensions and the various available website performance indicators contribute to developing an e-government website performance scale.
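One way to summarize the responses collected through such test cases is to average the ratings per indicator. The sketch below assumes a numeric rating scale (1 to 5) and a flat list of response tuples; both are assumptions made for illustration, since the paper does not specify the scale or the data layout.

```python
from statistics import mean


def indicator_scores(responses):
    """Average the ratings collected for each indicator.

    `responses` is a list of (respondent_id, indicator, rating) tuples; the
    1-5 rating scale is an assumption of this sketch.
    """
    by_indicator = {}
    for _respondent, indicator, rating in responses:
        by_indicator.setdefault(indicator, []).append(rating)
    return {name: mean(ratings) for name, ratings in by_indicator.items()}


# Hypothetical responses from two respondents on two indicators.
responses = [
    ("r1", "accuracy", 4),
    ("r2", "accuracy", 2),
    ("r1", "recency", 5),
    ("r2", "recency", 3),
]
scores = indicator_scores(responses)
```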

Back-end evaluation mode: The back-end delivery mode is very significant in e-governance service delivery, since e-governance, which appears to function externally through website portals, is in fact powered by back-end support and administration. For back-end mode evaluation, the internal elements of an organization identified by the McKinsey 7S model are used. These elements, along with the e-governance dimensions, help to identify back-end performance indicators validated for e-governance service delivery.
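A checklist built from the 7S elements and the four e-governance dimensions can be sketched as a map from (element, dimension) pairs to a yes/no answer. The example below is only an illustration of that structure: the keys are hypothetical, and the paper's actual back-end indicators are not reproduced here.

```python
# Element and dimension names as they appear in the paper's figures.
SEVEN_S = ("strategy", "structure", "system", "shared values", "skills", "style", "staff")
DIMENSIONS = ("e-services", "e-management", "e-commerce", "e-democracy")


def coverage_by_element(checklist):
    """Return, per 7S element, the fraction of its checklist items marked True.

    `checklist` maps (element, dimension) pairs to a bool.
    """
    totals = {}
    for (element, dimension), met in checklist.items():
        if element not in SEVEN_S or dimension not in DIMENSIONS:
            raise ValueError(f"unknown indicator key: {(element, dimension)}")
        hit, count = totals.get(element, (0, 0))
        totals[element] = (hit + int(met), count + 1)
    return {element: hit / count for element, (hit, count) in totals.items()}


# Hypothetical checklist answers, for illustration only.
checklist = {
    ("strategy", "e-services"): True,
    ("strategy", "e-democracy"): False,
    ("staff", "e-management"): True,
}
coverage = coverage_by_element(checklist)
```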

Evaluation process step 2: MANIPULATION (E-governance validation)

- Evaluation approach: Stakeholder evaluation. Stakeholders participate at this phase in identifying stakeholders, planning and the evaluation outcomes.
- Front-end evaluation mode
  - Evaluand: Website
  - Parameters: Accessibility; e-government website measurement scale (newly devised)
  - Evaluation techniques: Questionnaires, tool-based evaluation, and test cases
- Back-end evaluation mode
  - Evaluand: Organizational internal evaluation (elements identified in the McKinsey model)
  - Parameters: Internal elements along with the e-governance dimensions are synthesized into 33 performance indicators for back-end e-governance support
  - Evaluation technique: Checklist-based evaluation

Fig. 3. Manipulation.

C. Action

Once the design is made and agreed by the stakeholders, it is ready to be put into action, i.e. tested in the selected government bodies. The test run reveals the viability of the devised approach. The selected government bodies are tested across all the tiers identified in the manipulation phase, and the results are gathered, translated into comprehensive reports and delivered to the stakeholders for consideration.

Fig. 4 depicts the entities to be evaluated, classified as evaluands: websites for the front-end delivery mode, and internal processes and systems for the back-end delivery mode. Likewise, ‘test cases’ and ‘online tools’ are used for front-end evaluation, while a questionnaire is used for back-end evaluation. Testing ends with the generation of comprehensive reports at each tier for further consideration by the tested body.

Evaluation process step 3: ACTION (E-governance validation)

- Evaluation approach: Stakeholder evaluation. Stakeholders' participation is essential at this phase, as they are the ones to whom the results and suggestions are delivered by the end of this phase.
- Pre-requisite: The names of government organizations and employees are kept anonymous, since the study is not meant to benchmark any government body; its sole aim is to provide a pragmatic and result-oriented approach.
- Tasks:
  - Website accessibility evaluation of the selected government bodies across WCAG (Web Content Accessibility Guidelines)
  - Website evaluation from the citizens' perspective
  - Organizational internal evaluation (employees' perspective)
- Outcomes: Results across all three tiers or levels are compiled into comprehensive reports that are delivered back to the government bodies for consideration.

Fig. 4. Action.

D. Outcome Utilization

The results are analyzed, and the expected and unexpected outcomes are noted. The utility of the results is measured against the set objectives of the research. According to various researchers, the real worth of any evaluation-based study lies in its effectiveness in terms of the usefulness of the results it generates.

Evaluation process step 4: OUTCOME UTILIZATION (E-governance validation)

- Evaluation approach: Stakeholder evaluation
- Utilization: Stakeholders' involvement is made at these two phases to amplify the usability and effectiveness of the results. Responsibilities: data analysis, report generation, delivery to stakeholders.
- Learning measurement (E-governance validation): 4I framework (1999) and Program evaluation standards (2008)
- Parameters: To track what level of learning is initiated in the tested government body, i.e. individual, group or institutional
- Evaluation purpose: Results utilization to start the learning process
- Stakeholder: Government body

Fig. 5. Outcome utilization.

In this research, the expected outcome is to initiate learning and to measure the extent to which it is achieved. According to the 4I framework, the four processes involved in learning are intuiting, interpreting, integrating, and institutionalizing. Moreover, feedback on the usefulness of the evaluation results is collected from the stakeholders of the tested government body; to collect this feedback, a checklist is made by expanding the parameters identified by the program evaluation standards [16].
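Tracking the level of learning reached can be sketched as a lookup from the observed 4I phases to the paper's three learning levels (intuiting at the individual level, interpreting and integrating at the group level, institutionalizing at the organizational level). The function name and the idea of passing in a list of observed phases are assumptions made for illustration; how the phases are actually observed in a government body is outside this sketch.

```python
# Mapping of 4I phases to learning levels, as described in the text.
PHASE_TO_LEVEL = {
    "intuiting": "individual",
    "interpreting": "group",
    "integrating": "group",
    "institutionalizing": "organizational",
}
LEVEL_ORDER = ("individual", "group", "organizational")


def highest_learning_level(observed_phases):
    """Return the highest learning level implied by the observed 4I phases.

    Returns None when no phase was observed.
    """
    levels = {PHASE_TO_LEVEL[phase] for phase in observed_phases}
    for level in reversed(LEVEL_ORDER):
        if level in levels:
            return level
    return None
```

For example, a tested body in which intuiting and interpreting were observed would be recorded at the group level.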

Synopsis: In this section, the whole evaluation process of e-governance is presented stepwise, indicating the use of e-governance and evaluation theories across each phase. On the basis of the previous sections, Fig. 6 presents a pictorial view of the whole e-governance evaluation.

- E-governance dimensions (key parameters): E-Services, E-Management, E-Commerce, E-Democracy
- McKinsey hard elements: system, strategy, structure
- McKinsey soft elements: shared values, staff, skills, style
- Each dimension requires system, strategy and structure to deliver and is powered by shared values, staff, skills and style.
- Front-end mode (web portal evaluation)
  - Tier 1: Tool-based evaluation
  - Tier 2: Citizen perspective evaluation
- Back-end mode (internal organization evaluation)
  - Tier 3: Checklist-based evaluation, stakeholder evaluation
- EVALUATION PROCESS
  - Step 1: Pre-evaluation development (desktop study, scope (G2C), stakeholders' identification)
  - Step 2: Manipulation (design of the evaluation approach)
  - Step 3: Action (test running in the government bodies)
  - Step 4: Outcome utilization (evaluation usefulness, learning measurement)
- Evaluation approach: Stakeholder evaluation. Stakeholders are involved in manipulation and utilization to maximize results utility.

Fig. 6. Combined e-governance evaluation approach.

V. Conclusion

The paper presented the design of an e-governance evaluation framework built over two streams of literature, i.e. e-governance and evaluation. The need to develop an approach on both streams of literature stems from the available e-governance evaluation approaches, which are predominantly carried out to benchmark government bodies rather than to initiate any chance of improvement.

The evaluation approach developed in the paper addresses both limitations identified in the introduction, i.e. the approach is multidimensional, covering the front-end and back-end evaluation modes. The back-end evaluation mode for e-governance evaluation has not been effectively attended to in the literature; the paper therefore adds value to the literature by highlighting back-end indicators for e-governance evaluation.

The available literature on e-governance evaluation approaches is mostly intended to benchmark government bodies or to rate one government body over another, whereas this study presented an approach that emphasizes initiating a learning process in the government bodies. The results of the evaluation across all the tiers are translated into comprehensive reports, along with suggestions, that are delivered to the government bodies for consideration. Since the study is not aimed at benchmarking the tested government bodies, the names of the organizations, employees and all related entities are kept anonymous in order to receive genuine responses. This also encourages the stakeholders from the government bodies to participate in the evaluation process, which eventually raises the likelihood of outcome utilization.

The stakeholder evaluation approach made stakeholders (officials from government bodies) participate at various phases of the evaluation process. Since the evaluation is conducted for them, the results must be in line with their needs and requirements to trigger maximum learning.

For future or extended research, it is advised that the internal evaluation for e-governance be expanded by adding more tiers. This study provided a checklist evaluation approach; it could be enhanced to provide a broader picture of the back-end delivery mode if all the internal elements were evaluated in depth, and the results would then be able to trigger a higher level of learning as well.

References

- [1] A.V. Anttiroiko, “Democratic e-Governance – Basic Concepts, Issues and Future Trends”, Digest of Electronic Government Policy and Regulation 30, IOS Press, 2007, pp. 83–90.
- [2] K. Lenk, “Electronic service delivery – A driver of public sector modernization”, Information Polity 7, 2002, pp. 87–96.
- [3] M. Butt, “Pragmatic design of e-governance evaluation framework: building upon the e-governance literature”, European Journal of Social Sciences 42, 2014a, pp. 495–514.
- [4] D. Coursey and D. F. Norris, “Models of E-Government: Are They Correct? An Empirical Assessment”, Public Administration Review 68, 2008, pp. 524–536.
- [5] S.S. Dawes, “The Future of E-Government”, Center for Technology in Government, University at Albany/SUNY, 2002. [Retrieved June 14, 2013] http://www.ctg.albany.edu/publications/reports/future_of_egov/future_of_egov.pdf
- [6] M.E. Cook, M.F. LaVigne, C.M. Pagano, S.S. Dawes, and T.A. Pardo, “Making a Case for Local E-government”, Center for Technology in Government, 2002. [Retrieved June 24, 2013] http://www.ctg.albany.edu/publications/guides/making_a_case/making_a_case.pdf
- [7] M. Sakowicz, “Electronic Promise for Local and Regional Communities”, LGB Brief, Winter, 2003, pp. 24–28.
- [8] T. Yigitcanlar and S. Baum, “Benchmarking local E-Government”, in A.V. Anttiroiko (Ed.), Electronic Government: Concepts, Methodologies, Tools, and Applications, 2008. DOI: 10.4018/978-1-59904-947-2.ch033
- [9] A.N.H. Zaied, “An E-Services Success Measurement Framework”, I.J. Information Technology and Computer Science 4, 2012, pp. 18–25. DOI: 10.5815/ijitcs.2012.04.03
- [10] J.C. Hai @ Ibrahim, Fundamental of Development Administration, Selangor: Scholar Press, 2007. ISBN 978-967-5-04508-0.
- [11] M. M. Mark, G. T. Henry, and G. Julnes, Evaluation: An Integrated Framework for Understanding, Guiding, and Improving Policies and Programs, San Francisco, CA: Jossey-Bass, 2000.
- [12] M.Q. Patton, Utilization-Focused Evaluation, 4th edition, Thousand Oaks, CA: Sage, 2008.
- [13] A. H. Fine, C. E. Thayer, and A. Coghlan, Program Evaluation Practice in the Nonprofit Sector, Washington, DC: The Aspen Institute, 2000.
- [14] M. M. Crossan, H. W. Lane, and R. E. White, “An organizational learning framework: from intuition to institution”, Academy of Management Review 24(3), 1999, pp. 522–537.
- [15] M. Butt, “Result-Oriented Approach for Websites Accessibility Evaluation”, International Journal of Computer Science and Information Security 12, 2014b, pp. 22–30.
- [16] Joint Committee on Standards for Educational Evaluation, The Program Evaluation Standards: How to Assess Evaluations of Educational Programs, Thousand Oaks, CA: Sage Publications, 1994.