Augmented reality based campus guide application using feature points object detection

Authors: Dipti Pawade, Avani Sakhapara, Maheshwar Mundhe, Aniruddha Kamath, Devansh Dave

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: Vol. 10, No. 5, 2018.


Although GPS is now a very common navigation system, not everybody is able to use it, owing to a lack of technological awareness. Many people therefore follow the traditional method of asking local people for directions. Even after reaching the destination with such external guidance, a visitor may not understand the significance of the place. In this paper, we discuss the implementation of a Mobile Augmented Reality based application called “ARCampusGo”. With this application, one just has to scan a structure/monument to view details about it. The application also provides the names of nearby structures/monuments; one can select any of them, and the route from the current location to the selected structure/monument is rendered. The application offers a rich, easy and interactive visual experience to the user. The performance and usability of “ARCampusGo” are evaluated during the day and at night with different numbers of users, and the user experience and feedback are used for performance measurement and enhancement.

Feature point, object detection, Mobile Augmented Reality (MAR), campus guide

Short URL: https://sciup.org/15016265

IDR: 15016265 | DOI: 10.5815/ijitcs.2018.05.08


Published Online May 2018 in MECS

I. Introduction

Before the smartphone revolution, tourists all over the world relied on tour guides to find their way around a place. Now, with the advent of smartphones and the Global Positioning System (GPS) [1-2], the technologically savvy younger generation can use location tracking systems effectively. However, a lack of sophistication and the difficult maneuverability of the technology have resulted in various usability challenges, especially among older generations. One problem with GPS is that it cannot provide navigation within a particular location. Another is that it does not provide descriptive details of a structure/monument. Even though one can reach a point of interest (POI) using GPS, not every structure has a printed description board explaining what the structure is meant for. Sometimes such boards are present only at specific spots, and visitors may miss the spot where the board is placed. Hence, there is no guarantee that a visitor will learn the significance of a place while visiting it.

We have therefore introduced “ARCampusGo”, a Mobile Augmented Reality based application. We have tried to provide a simple, straightforward and amicable yet extensive solution for easy navigation. The goal of our application goes beyond providing static information on mobile devices: it gives end users a more interactive visual experience for informational and navigational purposes. As a prototype, the application provides insights into the Somaiya Vidyavihar Campus by scanning its structures (buildings and landmarks). The campus is located at Vidyavihar, Mumbai. It is spread over a wide area, and many colleges run there. Google Maps shows only the locations of buildings, but the campus has many other places, such as a cafeteria, offices, playgrounds, an auditorium, an open theater and many monuments. Also, each building houses various departments, laboratories, and administrative and facility sections. These places cannot be located appropriately using Google Maps. This motivated us to develop a Mobile Augmented Reality based application that can serve as a campus guide and provide a rich visual experience to the user.

The rest of the paper is organized as follows. Section 2 gives an overview of the different types of Augmented Reality and introduces the term Mobile Augmented Reality. Section 3 presents the literature survey. Section 4 presents the architecture and implementation of our application, ARCampusGo. Section 5 discusses our observations, and finally, Section 6 concludes by stating the value of our research.

II. Types of Augmented Reality (AR) and Mobile Augmented Reality (MAR)

In the constant effort to provide an enhanced and rich user experience, there has been huge growth in the development of Augmented Reality based applications [3]. Augmented Reality (AR) can be defined as an enhancement of the user's view of the physical world by adding digital information to it [4]. Augmented Reality can be mainly classified into two categories, namely

• Marker-based AR

• MarkerLess AR

Table 1. Types of AR

| | Marker Based AR [5-7] | MarkerLess AR [5-7] |
|---|---|---|
| Concept | | |
| Example | AR generated by scanning a QR code | AR generated by scanning an object |
| Advantages | … real world environment | • Any part of the real environment can be used as a target for tracking and placing the virtual object |
| Disadvantages | | • The tracking and detection algorithms are comparatively very complex • Requires sensors for tracking the real world environment |

These two types of AR are compared in Table 1. In the early years of AR development, mostly marker-based AR applications were developed. With the advent of smartphones, however, there has been a huge paradigm shift from marker-based to MarkerLess AR, and marker-based AR has almost faded away.

Furthermore, many AR based smartphone applications are now being developed; these are referred to as Mobile Augmented Reality (MAR) based applications. Mobile Augmented Reality can be defined as AR that users can carry with them [8], meaning that the hardware required to implement the AR is a mobile device such as a smartphone or tablet. The general steps in Mobile Augmented Reality are capturing the scene, processing and identifying the scene, and mixing the computer-generated information with the real-world view [9]. Most MAR applications are MarkerLess AR applications.

Table 2 lists some MAR applications along with their descriptions. From our study, it can be inferred that MAR applications were initially developed for gaming only. Of late, however, MAR applications have appeared in almost every domain, such as real estate, learning, furniture placement, room design, navigation, social media and so on. MAR is thus becoming an integral part of mobile application development, used to enhance the user's real-world experience.

Fig.1. An AR marker

Fig.2. Markerless AR using Snapchat application

Table 2. MAR Applications

| Application | Purpose | Description |
|---|---|---|
| Pokémon Go | Game | It uses GPS to locate the user and move the avatars, and uses the smartphone camera to display the Pokémon in the real world. |
| Ink Hunter | Human Tattoo | It is used to select a tattoo design and project it, in different orientations, onto any part of the human body. |
| WallaMe | Information Transmission | When users visit a place, they can capture the street or a wall using the smartphone camera, create a message with painting tools and associate it with that street or wall. Whenever another user visits the same place, that user can read the hidden message using the smartphone camera and the WallaMe app. It supports exchanging both private and public messages. |
| Amikasa | Room Décor | It helps to style and design a room: choosing the colors for the walls and deciding the placement of furniture in the room before actually buying the furniture. |
| Quiver for Fun | Coloring for children | The Quiver company provides special print packs of pictures on its website. After printing a picture, the child can color it with crayons; when the smartphone camera is pointed at the colored picture, the picture comes to life. |
| ROAR | Shopping | It helps users instantly find the prices of products by taking pictures of them. It also provides other people's reviews and finds discounts, if any, on the product. |
| Snapchat | Social Media Platform | Users communicate with each other by exchanging pictures. Snapchat lenses are graphics digitally overlaid on faces. It is also referred to as a social augmented reality platform. |

III. Related Work

The combination of smartphones and Internet services is the future trend in information services and software applications. Most of the early mobile tourist applications comprised features such as a virtual tour of important places, a voice-based information provider, a location identifier and a map-based path selection function for choosing the best path to a specified destination within the premises.

Some recent tourist applications are discussed here in detail. Sawsan [10] proposed a system consisting of two components, namely a website and a mobile application. The user first has to log in to the website and select the destination before commencing the actual tour. Based on the selected destination, the system generates two types of files:

• An XML file which contains information about the destination and nearby hotels. This file is the input for the mobile application module (J2ME and Android).

• Other files containing the supporting map images.

The web application generates a .jar or .apk file, depending on the preference given by the user at the time of registration, and the user has to install that application. The XML and image files are loaded onto the user's mobile device along with the application and can be used as a guide during the tour.

Advantage: The application can be used offline also.

Limitations:

• The mobile application has a large memory footprint, as it contains all the information and the related map images.

• As the application is built for a fixed destination, the destination cannot be customized within the same application.

Alexander et al. [11] designed a tourist assistant system called “TAIS”, based on the Smart-M3 platform [12], which extracts information from various web sources and recommends nearby locations. It also provides facilities such as the path to the destination, like/dislike options and the provision to rate a location. User reviews collected by this application can be used by other interested people to judge whether a particular place is worth visiting.

Huei-Ming Chiao et al. [13] introduced a 3D VR based tour guide system which provides a rich visual experience to the traveler, accompanied by entertainment.

Lin Hui et al. [14] demonstrated a smartphone application blended with mobile augmented reality which serves as a guide for Yilan tourism. The system has two modules:

1. The map module provides the facility to search for information about the hot springs; the corresponding information is presented using Google Maps.

2. The augmented reality module provides two functionalities. The first makes use of points of interest (POI) along with a 2D virtual image to guide the user to the destination. With the second, one can scan the Quick Response (QR) code for each POI and be redirected to the official site of Yilan. As additional functionality, the application also provides facilities such as capturing photos and recording video.

Cintya [15] developed a mobile application for cultural tourism named “AR City”. The architecture of the application is divided into a Service Consumer, related to the front end, and a Service Provider, related to the back end. Communication between the front end and the back end is carried out using JSON messages. The front end, back end and database engine are hosted on a PaaS service, and the application takes advantage of PaaS services to improve its performance. Finally, the results are validated using the quasi-experiment execution method.

F. Wedyan et al. [16] put forth “JoGuide”, an Android application. This application uses the phone camera to capture the surrounding scene. GPS needs to be turned on before the camera is initialized. Based on the captured scene, a request is sent to GPS and Foursquare [17], and the surrounding sites of interest are displayed.

Table 3 summarizes the work done in this area. From the literature survey, we observed that researchers initially focused only on providing information about the site of interest. Later, navigation services and possible transportation options were also considered. In the past few years, along with accurate location and precise information, a rich visual experience has also become a concern. Current research therefore emphasizes smartphone-based applications built on Mobile Augmented Reality.

Table 3. Summary of background work

| Author | Application description / features provided | Methodology |
|---|---|---|
| Sawsan [10] | Tourist guide system which can be used on a mobile device even when offline. However, the application is rigid and gives directions only to the pre-selected location. | According to the user's preferred destination, a .jar or .apk file is prepared, which can be installed on the mobile device and used for navigation. |
| Alexander et al. [11] | “TAIS” provides features like … | OpenStreetMap-based web mapping service. |
| Huei-Ming Chiao et al. [13] | Game-based Tourism Information Service | |
| Lin Hui et al. [14] | Mobile augmented reality based Yilan Hot Spring tourism guide smartphone application | |
| Cintya [15] | “AR City!” provides features like … | The focus is to study the impact on tourism when augmented reality is blended with a tourist guide mobile application. |
| F. Wedyan et al. [16] | Using “JoGuide”, one can explore nearby sites of interest just by scanning the surrounding scene. | • GPS and FourSquare [17] are used to locate nearby locations accurately. |

IV. Implementation Overview

“ARCampusGo”, a mobile augmented reality based informative guide application, is meant for informational and navigational purposes pertaining to places of attraction. An Internet connection is a prerequisite for using this application. The user just needs to install the application and scan a monument/structure on campus. Information related to that monument/structure is then displayed using augmented reality, along with nearby points of interest. On selecting a particular option, navigation details are displayed on the screen.

The application has four major modules:

1. Android application which serves as the client side

2. Server Side Service

3. Communication Interfaces

4. Mobile Augmented Reality based response generation for user queries (MAR module)

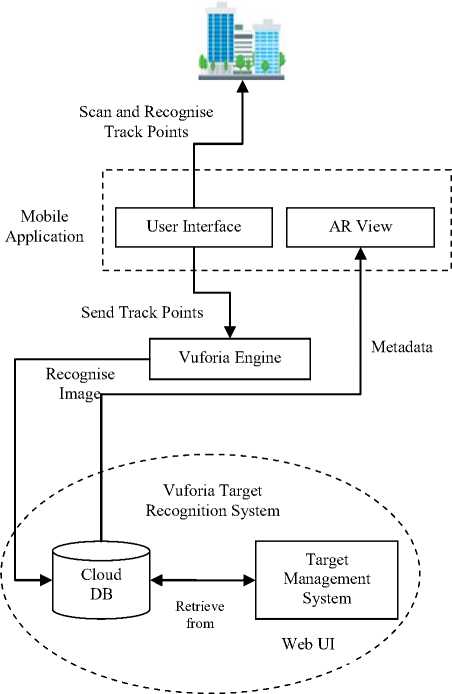

The architecture of the application is shown in Fig. 3. The client-side application is developed using the Android SDK. The user interface uses the smartphone's built-in camera to scan the monument/structure. As an Internet connection is mandatory, connectivity is validated and a warning is displayed if the Internet connection is unavailable. In addition to these necessary UI functions, a few additional features are provided, such as changing the font size and easy navigation through the available functional options in the application.

Fig.3. ARCampusGo application architecture

Next, Vuforia Cloud Service is used as the server-side service. To keep the application lightweight, the database is managed and target recognition is performed on the server side. The cloud storage development has two major parts:

• Vuforia license manager

• Vuforia target manager

To develop an application using Vuforia, one first needs to create a unique license key. Once this key is generated, the cloud database can be created using the Vuforia target manager. The Vuforia cloud database stores the targets, i.e. images of structures. The cloud database has two access keys:

• Client Access Key

• Server Access Key

The Client Access Key is passed to the Vuforia library within the application so that the application can authenticate itself with the server; this key is unique to the application. The Server Access Key is used to upload and manage images in the cloud target database via the REST interfaces. The database has the following attributes:

• Database Name: name of the database in which the target images are stored

• App Name: name of the application for which the database is created

• Database Type: whether the database is a cloud database or a device database (local to the device). We chose the cloud type in order to minimize the application footprint.

• Targets: number of target images stored in the database. Here, the target images are images of the various monuments/structures on campus.

• Date Modified: date on which the database was last modified.

After the database is created, one can upload as many target images as required, along with their associated metadata. Here, metadata refers to the informative description of the target image. Once a target is added, an ID is assigned to it, and the feature points of that target image are marked and stored in the cloud database.
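The upload step can also be scripted against the REST interface mentioned above. The sketch below only builds (and does not send) a signed "add target" request, following our understanding of the Vuforia Web Services (VWS) scheme: a JSON body carrying the target name, width, Base64-encoded image and Base64-encoded application metadata, plus an `Authorization: VWS <access key>:<signature>` header whose signature is an HMAC-SHA1 over the method, body MD5, content type, date and request path. The host, path, field names and the separate server secret key are assumptions to verify against the current Vuforia documentation; all key values and target names are hypothetical placeholders.

```python
import base64
import hashlib
import hmac
import json
from email.utils import formatdate
from typing import Optional

# Assumed VWS host and path for adding a target (verify against Vuforia docs).
VWS_HOST = "https://vws.vuforia.com"
TARGETS_PATH = "/targets"


def vws_signature(secret_key: str, method: str, body: bytes,
                  content_type: str, date: str, path: str) -> str:
    """Base64 HMAC-SHA1 over the request fields (assumed VWS signing scheme)."""
    content_md5 = hashlib.md5(body).hexdigest()
    string_to_sign = "\n".join([method, content_md5, content_type, date, path])
    digest = hmac.new(secret_key.encode("utf-8"),
                      string_to_sign.encode("utf-8"),
                      hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


def build_add_target_request(access_key: str, secret_key: str, name: str,
                             image_bytes: bytes, metadata: str,
                             width: float = 1.0,
                             date: Optional[str] = None) -> dict:
    """Return method, URL, headers and body for uploading one target image."""
    payload = {
        "name": name,
        "width": width,
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "active_flag": True,
        # Informative description of the structure, stored as target metadata.
        "application_metadata": base64.b64encode(metadata.encode("utf-8")).decode("ascii"),
    }
    body = json.dumps(payload).encode("utf-8")
    content_type = "application/json"
    date = date or formatdate(usegmt=True)  # RFC 1123 date in GMT
    signature = vws_signature(secret_key, "POST", body, content_type, date, TARGETS_PATH)
    return {
        "method": "POST",
        "url": VWS_HOST + TARGETS_PATH,
        "headers": {
            "Authorization": f"VWS {access_key}:{signature}",
            "Content-Type": content_type,
            "Date": date,
        },
        "body": body,
    }
```

For example, `build_add_target_request("SERVER_ACCESS_KEY", "SERVER_SECRET_KEY", "ganesha_statue", image_bytes, "Statue of Ganesha carved out of a single stone")` returns a dictionary that any HTTP client could send; keeping signing separate from transport makes the signature easy to check offline.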

The next module is the communication interface. The application communicates with the cloud database server using the smartphone's Internet connection. This communication takes the form of database requests to the server for feature point matching, with the server returning the result of the query to the client.

Finally, the Augmented Reality based rendering view is generated using the Unity 3D platform.

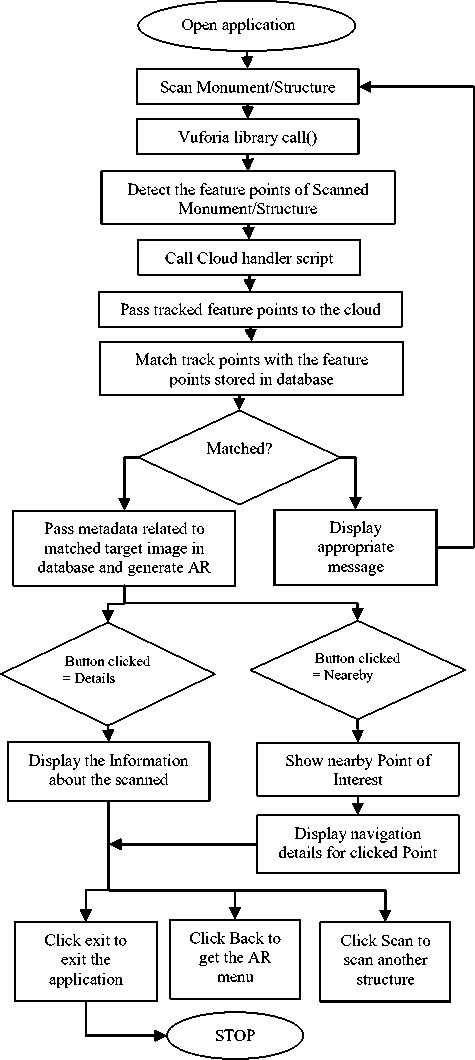

As shown in Fig. 4, the application works as follows:

1. The intended structure is scanned with the smartphone camera, and the Vuforia library is called.

2. The tracking points are marked and recorded by the “ARCampusGo” application, and the cloud handler script is called.

3. These tracking points are sent to the Vuforia Engine, which in turn sends them to the cloud database in the Vuforia Cloud Recognition System.

4. The built-in recognition system, using its target management and feature detection systems, matches the tracking points against the targets previously stored in the cloud database.

5. If a match is found, the related metadata is retrieved from the cloud database and sent to the Vuforia engine. If not, the intended structure is scanned again.

6. The engine then generates and renders an augmented view for the user, with options such as viewing information about the site of interest or navigating to nearby points of interest.

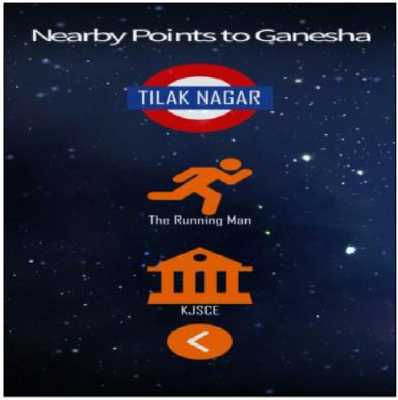

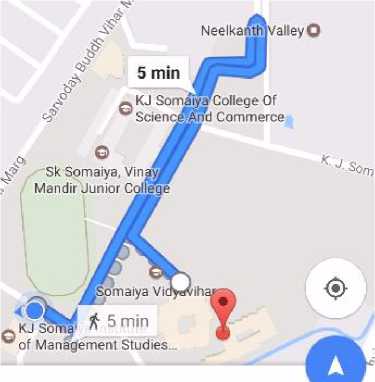

On selecting navigation to other nearby points, a list of nearby points is displayed. When the user selects a nearby point, its location is displayed on Google Maps.
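The paper does not state how the nearby points are chosen or how Google Maps is invoked. One plausible sketch, assuming each point of interest is stored with latitude/longitude coordinates, ranks the points by great-circle (haversine) distance from the recognized structure and builds a Google Maps directions URL in the `https://www.google.com/maps/dir/?api=1` form, which an Android app could open with a view intent. The point names and coordinates below are hypothetical.

```python
import math
from urllib.parse import urlencode

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres

# Hypothetical campus points of interest: name -> (latitude, longitude).
POIS = {
    "Cafeteria": (19.0730, 72.9000),
    "Auditorium": (19.0745, 72.8990),
    "Playground": (19.0710, 72.9020),
}


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_points(current, pois, k=3):
    """Return the k points closest to `current` as (name, distance_m) pairs."""
    lat, lon = current
    ranked = sorted(
        ((name, haversine_m(lat, lon, plat, plon)) for name, (plat, plon) in pois.items()),
        key=lambda item: item[1],
    )
    return ranked[:k]


def directions_url(origin, destination, mode="walking"):
    """Google Maps URL giving directions from origin to destination."""
    params = {
        "api": 1,
        "origin": f"{origin[0]},{origin[1]}",
        "destination": f"{destination[0]},{destination[1]}",
        "travelmode": mode,
    }
    return "https://www.google.com/maps/dir/?" + urlencode(params)
```

For example, `nearby_points((19.0728, 72.9002), POIS)` lists the hypothetical points nearest to a scanned structure, and passing the chosen point's coordinates to `directions_url` yields the link to open.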

The major processes of the implementation are described below:

A. Scanning intended structure/monument

B. Matching of Scanned image with target image

C. Displaying visually rich AR view

Fig.4. Working of application

A. Scanning intended structure/monument

1. Initialize_camera();

// Starts the device camera for scanning.

2. Scanline();

// Displays a green scan line to indicate that scanning is in progress.

3. arr_points[] = Detect_track_points();

/* Detect_track_points() detects track points using a feature detection algorithm and stores them in the arr_points[] array. */

4. Send(arr_points[]);

// Sends arr_points[] to the cloud database for matching.
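Steps 1 to 4 can be read as a capture loop: grab a frame, detect track points, and send them only once enough points have been found. Below is a minimal runnable sketch in which the camera, detector and sender are passed in as functions. The names `capture_frame`, `detect_track_points`, `send_points` and the thresholds `MIN_POINTS` and `MAX_ATTEMPTS` are hypothetical stand-ins (on the device these roles are played by the camera and the Vuforia feature detector), not the application's actual code.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]

MIN_POINTS = 50    # hypothetical minimum number of track points worth sending
MAX_ATTEMPTS = 10  # hypothetical number of frames to try before giving up


def scan_structure(capture_frame: Callable[[], object],
                   detect_track_points: Callable[[object], Sequence[Point]],
                   send_points: Callable[[List[Point]], object],
                   min_points: int = MIN_POINTS,
                   max_attempts: int = MAX_ATTEMPTS) -> Optional[object]:
    """Capture frames until enough track points are found, then send them.

    Returns whatever `send_points` returns (e.g. the cloud reply), or None
    if no frame yielded enough points. Step 2 (the green scan line) is pure
    UI and is not modelled here.
    """
    for _ in range(max_attempts):
        frame = capture_frame()                        # step 1: camera frame
        arr_points = list(detect_track_points(frame))  # step 3: feature detection
        if len(arr_points) >= min_points:
            return send_points(arr_points)             # step 4: send for matching
    return None
```

Gating on a minimum point count avoids spending a cloud query on a blurred or featureless frame, which matters because every query costs network time.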

B. Matching of Scanned image with target image in database

1. receive[] = arr_points[];

// The received arr_points[] are stored in the receive[] array for modularity.

2. If (receive[] matches stored_points[])

3. Then Send_output(AR_view_metadata);

/* stored_points[] are the track points stored in the cloud database. If the received track points match the stored points according to the Target Recognition system, AR_view_metadata is sent to the user as metadata. */

4. Else Send_output(error);
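The test in step 2 cannot be literal equality: track points extracted from two photographs of the same building are never identical, so recognizers match them approximately. Vuforia's recognizer is proprietary, so the sketch below substitutes a common textbook technique: binary descriptors (such as those produced by ORB-style detectors) compared by Hamming distance, Lowe's ratio test to keep only distinctive matches, and a target accepted when enough matches survive. Descriptors are modelled as Python integers, and `MAX_DISTANCE`, `RATIO` and `MIN_MATCHES` are illustrative values, not settings from the paper.

```python
from typing import Dict, List, Optional

MAX_DISTANCE = 64  # max Hamming distance (bits) for a candidate match
RATIO = 0.8        # Lowe's ratio test threshold
MIN_MATCHES = 10   # good matches needed to accept a target


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def count_good_matches(query: List[int], target: List[int]) -> int:
    """Count query descriptors whose nearest neighbour in `target` passes the ratio test."""
    good = 0
    for q in query:
        dists = sorted(hamming(q, t) for t in target)
        if not dists:
            break
        best = dists[0]
        second = dists[1] if len(dists) > 1 else float("inf")
        if best <= MAX_DISTANCE and best < RATIO * second:
            good += 1
    return good


def recognize(query: List[int], targets: Dict[str, List[int]],
              min_matches: int = MIN_MATCHES) -> Optional[str]:
    """Return the ID of the best-matching target, or None ("match not found")."""
    best_id, best_score = None, 0
    for target_id, descriptors in targets.items():
        score = count_good_matches(query, descriptors)
        if score > best_score:
            best_id, best_score = target_id, score
    return best_id if best_score >= min_matches else None
```

Returning `None` corresponds to the `Send_output(error)` branch, i.e. the "match not found" message described below.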

C. Displaying visually rich AR view

After scanning and matching, a menu is rendered. Then, depending on the button selected, one of three different types of output is displayed.

1. AR_display = receive_output();

// receive_output() receives the output sent by the Vuforia target recognition system.

2. Render(AR_menu_display);

3. If (Details_button_clicked == true) Then Render(AR_display_details);

4. Else If (Directions_button_clicked == true) Then Render(open_google_maps());

5. Else If (About_button_clicked == true) Then Render(AR_display_about);

6. After rendering, to return to the menu: If (Back_button_clicked == true) Then Render(AR_menu_display);
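The menu logic of part C is a small state machine: the menu is shown after recognition, each button switches to one view, and Back returns to the menu. A minimal sketch follows. The view names mirror the pseudocode, while the `MenuState` class, the `render` callback and the rule that buttons other than Back are ignored outside the menu are hypothetical illustrations, not the application's Unity code.

```python
from typing import Callable, Dict

# Views reachable from the AR menu, keyed by button (names follow the pseudocode).
VIEWS: Dict[str, str] = {
    "Details": "AR_display_details",
    "Directions": "open_google_maps",
    "About": "AR_display_about",
}
MENU = "AR_menu_display"


class MenuState:
    """Tracks which AR view is currently rendered."""

    def __init__(self, render: Callable[[str], None]):
        self.render = render
        self.current = MENU
        self.render(MENU)  # step 2: show the menu after recognition

    def click(self, button: str) -> str:
        if button == "Back":
            self.current = MENU            # step 6: return to the menu
        elif self.current == MENU and button in VIEWS:
            self.current = VIEWS[button]   # steps 3 to 5
        # Any other click is ignored and the current view stays on screen.
        self.render(self.current)
        return self.current
```

Keeping the button-to-view mapping in one dictionary means a new menu option needs only one new entry rather than another `Else If` branch.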

When the user exits the application, we ask for feedback and a rating. In the initial stages, this feedback played an important role in improving the application's accuracy and precision. As this is a customized application for the Somaiya Vidyavihar Campus, the database is also customized and was built by us, consisting solely of the structures and significant places within the campus. Initially, therefore, if someone scanned a structure on campus that was not present in the database, a “match not found” message was displayed. We maintained a log of the locations for which no match was found. Through rigorous analysis of the unsatisfactory feedback and the failure messages, we identified the missing structures and places within the Somaiya Vidyavihar Campus and added them to the database. After a few iterations, the failure rate was reduced to zero.

We also observed that the response time for AR rendering grows with the number of target images in the cloud database: the more target images there are, the longer target recognition takes, and hence the longer AR rendering takes. To reduce this overhead, we created a list of 10 buildings, referred to as prime locations. For each prime location, the images of the building and its entrance form its primary image set. When matching tracking points for internal structures within these buildings, the first scan is compared against the primary image sets; for subsequent scans, only the set of the recognized building is compared instead of the whole database. In the end, we have approximately 250 images, categorized into 10 sets.
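The two-stage lookup over prime locations can be sketched as follows. The data layout is an assumption: each prime location holds a primary set (building and entrance images) and a list of internal-structure images, and `match` is a hypothetical stand-in for the cloud recognizer that returns the matched image ID or None. With about 250 images in 10 sets, a later scan inside a recognized building is compared against roughly 25 images on average instead of 250.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class PrimeLocation:
    primary: List[str]                                 # building and entrance images
    internal: List[str] = field(default_factory=list)  # structures inside the building


class TwoStageRecognizer:
    """First scan: search the primary images of all prime locations.
    Later scans: search only the recognized building's images."""

    def __init__(self, locations: Dict[str, PrimeLocation],
                 match: Callable[[object, List[str]], Optional[str]]):
        self.locations = locations
        self.match = match                 # returns a matched image ID or None
        self.building: Optional[str] = None

    def candidates(self) -> List[str]:
        if self.building is None:
            return [img for loc in self.locations.values() for img in loc.primary]
        loc = self.locations[self.building]
        return loc.primary + loc.internal

    def scan(self, query) -> Optional[str]:
        image_id = self.match(query, self.candidates())
        if image_id is not None and self.building is None:
            # Remember which prime location the matched primary image belongs to.
            for name, loc in self.locations.items():
                if image_id in loc.primary:
                    self.building = name
                    break
        return image_id

    def reset(self) -> None:
        """Forget the current building, e.g. when the user walks elsewhere."""
        self.building = None
```

The `reset` method is our addition; the paper does not say how the application notices that the user has left a building.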

V. Results and Discussion

Fig. 5 shows the scanning of a monument. After a successful scan, an AR view is rendered to the user with options such as About us, Details and Directions, as shown in Fig. 6. When a user clicks ‘Details’, information about that particular structure/monument is provided, as shown in Fig. 7. If one clicks ‘Directions’, navigation options to the points near that structure are displayed, as shown in Fig. 8. If the user clicks any of the nearby points displayed, the Google Maps application opens and provides directions from the current location to the selected nearby point, as shown in Fig. 9.

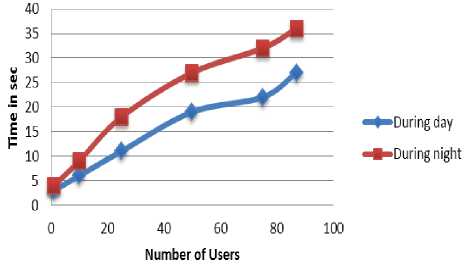

Fig.5. Scanning monument

Fig.6. AR view with menu options

Fig.7. Nearby points of interest

Fig.8. Nearby points of interest

Fig.9. Google map showing direction

In order to measure the performance of “ARCampusGo”, we asked people to use the application during the day and at night. The users belonged to different age groups, had different smartphone models and used different Internet services. The experiments were carried out in batches of different sizes (1, 10, 25, 50, 75 and 87 users per batch). The time taken by the application to scan an object and render the result was recorded for each user in a batch. Table 4 gives the average time (rounded) taken by the application during the day and at night.

Table 4. Average Time of AR Rendering During Day and Night

| Number of users | Average time (s) to scan object and render AR view, day | Average time (s) to scan object and render AR view, night |
|---|---|---|
| 1 | 3 | 4 |
| 10 | 6 | 9 |
| 25 | 11 | 18 |
| 50 | 19 | 27 |
| 75 | 22 | 32 |
| 87 | 27 | 36 |

From the graph shown in Fig. 10, it is observed that the time required to render the result is lower during the day than at night. This is because light intensity and clarity are much better during the daytime, so identifying tracking points requires less computation time. It is also observed that the response time increases with the number of concurrent users: the more concurrent users there are, the greater the query-handling load on the cloud, and thus the more time is taken to process each query.

Fig.10. Relation between number of users and response time of application

The usability testing was carried out through feedback ratings. We asked the following questions to 87 users, and each question was rated on a scale of 0 to 10.
The final rating for a question is calculated as the average of the ratings given by all 87 users for that question, where 0 is the worst rating and 10 is the best.

Table 5. Analysis of user feedback

| QN | Question | Rating |
|---|---|---|
| 1 | The user interface of the application is easy to use. | 8.16 |
| 2 | The response time of the application is good. | 6.03 |
| 3 | The application is bulky in size. | 8.70 |
| 4 | The application frequently crashes/hangs. | 7.80 |
| 5 | The application runs well with a low-speed Internet connection. | 5.42 |
| 6 | The application can recognize the structure by scanning it from any angle. | 9.98 |
| 7 | The AR view rendered by the application is interesting and has a rich visual impact. | 8.91 |
| 8 | The description provided by the application about the structure/monument is worthwhile for learning about it. | 9.66 |
| 9 | The application helps to explore nearby sites of interest. | 9.20 |
| 10 | The application is useful for navigating effortlessly through the campus. | 9.57 |

From Table 5, the rating for response time (Question 2) is around 6, and the rating for crashing/hanging frequency (Question 4) is 7.80. This is because the response time depends heavily on factors such as Internet speed, processing power and the free internal memory of the smartphone, as well as on the number of applications running on it. The rating for performance with a low-speed Internet connection (Question 5) is low. The main reason is that structure recognition is carried out on the server side and the target database is maintained in the cloud; with poor connectivity, processing the query and retrieving the results take longer, and intermittent connectivity increases the query processing time further. Overall, the results indicate that “ARCampusGo” facilitates easy navigation through the campus. It is a user-friendly, lightweight application that serves as an effective guide for campus exploration.

VI. Conclusion

This paper discusses the development of “ARCampusGo”, a Mobile Augmented Reality based application specifically designed to give insight into the Somaiya Vidyavihar Campus. The informational and navigational requirements are met with the help of the Unity 3D platform and Vuforia Cloud Services. The application can be used effectively in low light intensity. The use of Mobile Augmented Reality helps to improve the visuals rendered to the user. As far as performance is concerned, the only constraint is Internet speed. The application can be extended to support navigation by scanning arbitrary surroundings rather than a specific structure or monument. Additional functionality, such as details of upcoming and current events, can be provided as buzz. The application discussed here is just a prototype; along similar lines, applications can be designed for shopping malls, Special Economic Zones (SEZ), railway stations, airports, etc. Such an application not only helps with navigation but also provides easy access to information related to the location.

References

- Omisore M. O., Ofoegbu E. O., Fayemiwo M. A., Olokun F. R., Babalola A. E., “Intelligent Mobile Application for Route Finding and Transport Cost Analysis”, International Journal of Information Technology and Computer Science (IJITCS), Vol.8, No.9, pp. 73-80, 2016. DOI: 10.5815/ijitcs.2016.09.09

- Shaveta Bhatia, “Design and Development of New Application for Mobile Tracking”, International Journal of Information Technology and Computer Science (IJITCS), Vol.8, No.11, pp.54-60, 2016. DOI: 10.5815/ijitcs.2016.11.07.

- Adi Ferliyanto Waruwu, I Putu Agung Bayupati and I Ketut Gede Darma Putra, “Augmented Reality Mobile Application of Balinese Hindu Temples: DewataAR”, International Journal of Computer Network and Information Security, Vol. 7, No. 2, pp.59-66, 2015. DOI: 10.5815/ijcnis.2015.02.07

- Julie Carmigniani and Borko Furht, “Augmented Reality: An Overview”, in J. Carmigniani and B. Furht, Eds., Handbook of Augmented Reality, Springer, New York, 2011, pp 3-46

- Harry E. Pence, “Smartphones, Smart Objects, and Augmented Reality”, The Reference Librarian, Volume 52, Issue 1-2, 2010, pp 136-145

- Anuroop Katiyar, Karan Kalra, and Chetan Garg, “Marker Based Augmented Reality”, Advances in Computer Science and Information Technology (ACSIT), Volume 2, Number 5, April-June 2015, pp 441-445

- Daniel Lelis Baggio, Shervin Emami, David Millan Escrivá, Khvedchenia Ievgen, Naureen Mahmood, Jason Saragih, Roy Shilkrot, “Mastering OpenCV with Practical Computer Vision Projects”, 1st ed., Packt Publishing, 2012, pp 47-127.

- Alan B. Craig, “Understanding Augmented Reality”, 1st ed., Elsevier Inc, 2013, pp 209-220.

- Abrar Omar Alkhamisi, Muhammad Mostafa Monowar, “Rise of Augmented Reality: Current and Future Application Areas”, International Journal of Internet and Distributed Systems (IJIDS), Volume 1, No. 4, 2013, pp 25-34

- Sawsan Alshattnawi, “Building Mobile Tourist Guide Applications using Different Development Mobile Platforms”, International Journal of Advanced Science and Technology (IJAST), Volume 54, May 2013, pp 13-22

- Alexander Smirnov, Alexey Kashevnik, Nikolay Shilov, Nikolay Teslya, Anton Shabaev, “Mobile Application for Guiding Tourist Activities: Tourist Assistant – TAIS” IEEE Proceedings of Sixteenth Conference of Open Innovations Association FRUCT, Oulu, Finland, 27-31 October 2014, pp 95-100

- Jukka Honkola, Hannu Laine, Ronald Brown, Olli Tyrkkö, “Smart-M3 Information Sharing Platform”, IEEE Proceedings of IEEE Symposium on Computers and Communications (ISCC’10), Riccione, Italy, 22-25 June 2010, pp 1041-1046

- Huei-Ming Chiao, Wei-Hsin Huang, Ji-Liang Doong, “Innovative Research on Development Game-based Tourism Information Service by Using Component-based Software Engineering” IEEE Proceedings of the 2017 International Conference on Applied System Innovation IEEE-ICASI 2017, Sapporo, Japan, 13-17 May 2017, pp 1565-1567

- Lin Hui, Fu Yi Hung, Yu Ling Chien, Wan Ting Tsai, Jeng Jia Shie, “Mobile Augmented Reality of Tourism-Yilan Hot Spring”, IEEE Proceedings of Seventh International Conference on Ubi-Media Computing and Workshops (UMEDIA), Ulaanbaatar, Mongolia,12-14 July 2014, pp 209-214

- Cintya de la Nube Aguirre Brito, “Augmented reality applied in tourism mobile applications”, IEEE Proceedings of Second International Conference on eDemocracy & eGovernment (ICEDEG), Quito, Ecuador, 8-10 April 2015, pp 120-125.

- Fadi Wedyan, Reema Freihat, Ibrahim Aloqily, Suzan Wedyan, “JoGuide: A mobile augmented reality application for locating and describing surrounding sites”, Proceedings of The Ninth International Conference on Advances in Computer-Human Interactions (ACHI 2016), Venice, Italy, April 2016, pp 88-94

- “FourSquare,” URL: http://www.foursquare.com (Last Accessed Date: 4 January 2018)