Facial Expression Classification Using Artificial Neural Network and K-Nearest Neighbor

Authors: Tran Son Hai, Le Hoang Thai, Nguyen Thanh Thuy

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: Vol. 7, No. 3, 2015

Open access

Facial expression is a key component in evaluating a person's feelings, intentions and characteristics. It is an important part of human-computer interaction, where it has the potential to play an equally important role. The aim of this paper is to bring together two areas, Artificial Neural Network (ANN) and K-Nearest Neighbor (K-NN), and apply them to facial expression classification. We propose the ANN_KNN model, which uses an ANN classifier and a K-NN classifier. ICA is used to extract facial features. The ratio features are the input of the K-NN classifier. We apply the ANN_KNN model to the classification of seven basic facial expressions (anger, fear, surprise, sad, happy, disgust and neutral) on the JAFFE database. The classification precision of 92.38% shows the feasibility of the proposed model.

Facial Expression Classification, Artificial Neural Network (ANN), K-Nearest Neighbor (K-NN), Independent Component Analysis (ICA)

Short URL: https://sciup.org/15012259

IDR: 15012259

Full text of the article: Facial Expression Classification Using Artificial Neural Network and K-Nearest Neighbor

Published Online February 2015 in MECS

A facial expression is a visible manifestation of a person's emotional state, cognitive activity, intention and character [1]. Mehrabian [2] showed that in emotional communication 55% of the message is conveyed by facial expression, while only 7% is conveyed by language and 38% by intonation. Facial expressions are an important component of human-computer communication. There are many approaches to the facial expression classification problem, such as AdaBoost, K-NN, Support Vector Machine (SVM) and Artificial Neural Network (ANN).

AdaBoost combines weak classifiers and can be used in conjunction with other machine learning algorithms to improve their performance. The k-nearest neighbor (k-NN) method is a common tool in image classification, but its sequential implementation is slow and computationally expensive because of the large representation space of images.

SVM can be applied to pattern classification even with a large representation space. In this approach, hyperplanes must be defined to separate the patterns, and the number of hyperplanes is proportional to the number of classes. Elements of unseen classes can be identified by calculating posterior probabilities in 1-vs-1 or 1-vs-rest binary SVMs (Platt's method) or in multiclass SVMs [3].

An ANN is trained on the patterns to find the set of weights used in the classification process. By applying a suitable threshold during classification, an ANN overcomes the weakness of SVM in handling outside patterns: if a pattern does not belong to any of the L given classes, the ANN identifies it and reports it as lying outside the given classes. ANN has therefore been applied to facial expression classification by many researchers [4, 10, 11, 12, 13].

In addition, some fusion models combine several soft computing techniques for classification. For example, ANN_SVM [4] brings together Artificial Neural Network and Support Vector Machine for facial expression classification.

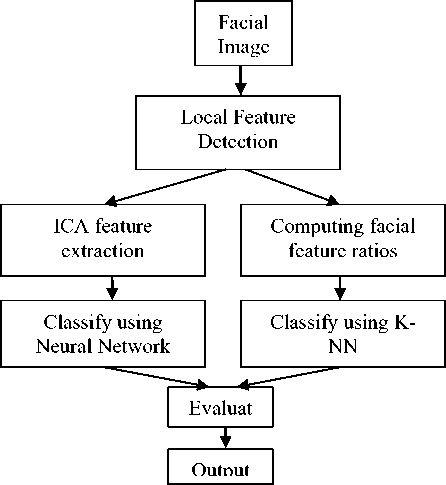

The aim of this paper is to bring together two areas, Artificial Neural Network (ANN) and K-Nearest Neighbor (K-NN), and apply them to facial expression classification. We therefore propose the model for facial expression classification using ANN and K-NN shown in Fig. 1.

In the Fig. 1 model, we use two classifiers, ANN and K-NN. The input of the ANN classifier is the set of features extracted by ICA. The input of the K-NN classifier is the geometrical features and their ratios. Finally, we combine the outputs of the ANN and K-NN classifiers to give the final classification result.

In the first phase, the facial expression is classified by ANN. In the second phase, we calculate the width of the left eye, the width of the right eye, the width of the mouth, the distance from the left eyebrow to the right eyebrow, the height of the left eye, the height of the right eye, the height of the mouth, the distance from the left eye to the left eyebrow, and the distance from the right eye to the right eyebrow. After that, we use K-NN to classify the facial expression based on the ratios of these distances.

Fig. 1. Facial Expression Classification Process

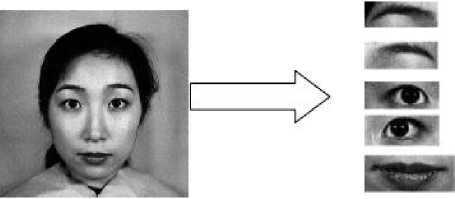

Where K: the number dimension of feature space. The above vector yface is the global feature vector. Detecting and extracting eyebrows, eyes and mouth features, we get 5 local feature vectors (y left_eye , y left_eyebrow , y right_eye , y right_eyebrow , y mouth ) below:

x /eft _ eyebrow = ( X 1 , X 2 ’.", X 450 )

x rght _ eyebrow ( x 1 , X 2 ,

...

, x450 )

xlefi_eye (X1’ X2,"., X450)

x right _ eye ( X 1 ’ X 2 ’ . ..’ X 45o )

xmouth (x1, X2,-", x800)

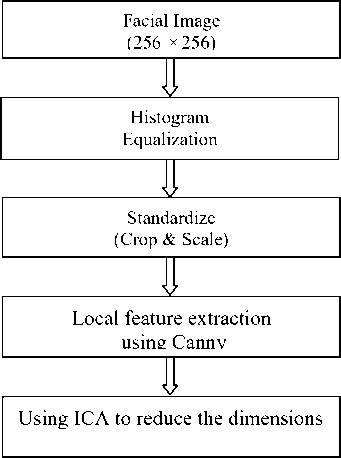

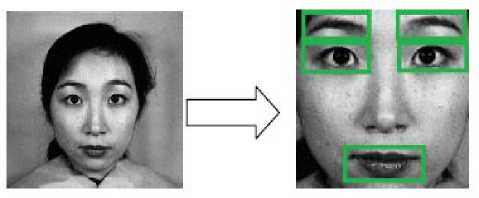

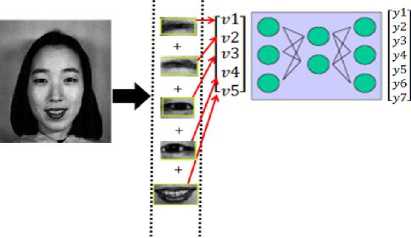

Local feature analysis in facial expression is very significant for facial expression analysis. Canny is used to detect local region such as: left-right eyes, mouth, leftright eyebrows [5]. In first phase, we use ICA to present local features in small presenting space. Our local feature extraction below:

Fig. 3. Eyebrow, eye and mouth detection

The local features are projected to K dimensions feature space. There are 5 local feature vectors below:

y /eft _ eyebrow = ( y 1 , y 2 ,”., y K )

y right _ eyebrow = ( y 1 , y 2 ,

...

,yK )T

yefft _ eye = (y1, y 2,---, yK )

yright _ eye = (y1, y 2,..., Ук ))

ymouh = (У1, У 2,.-., Ук )

Combining local and global feature vector:

face

y left _ eyebrow

|

Fig. 2. Facial Feature Extraction Process |

y comp |

y right _ eyebrow |

(4) |

|

After facial image had equalized histogram, it would be standardized to 30x30 size and map to feature space: |

y left _ eye y right _ eye |

||

|

xface = ( x 1 , X 2 ,..., X 900 ) |

_ y mouth _ |

||

|

Ф (1) |

y^ce = (У1, y 2,..., Ук )

ICA

yface _ ICA

y -— . y yleft _ eyebrow Уleft _ eyebrow _ ICA

Fig. 4. Local and global feature extraction

ICA yright _ eyebrow yright _ eyebrow _ ICA

ICA yleft _ eye — ^ yiejt _ eye _ ICA

У -—^ У

У right _ eye У right _ eye _ ICA

B. Artificial Neural Network for facial expression

classification

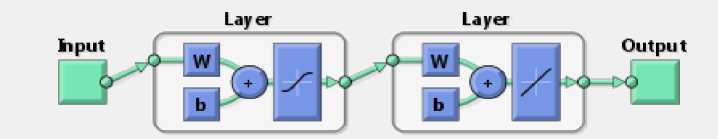

Multi-Layer Perceptron (MLP) [7] is a function

-

III. Facial Expression Classification using ANN and K-NN

-

A. Independent Component Analysis for feature extraction

Global and local features are extracted by ICA [6] in order to reduce the number dimensions of feature space:

y = MLP ( x , W ) , with x = ( X ;, x 2,..., xn ) and y = ( y i ,y 2 ,...,y m )

W is the set of parameters

{ w y ,w> Lo} v i,j,L

Fig. 5. Multi-Layer Perceptron structure

The MLP is trained with the gradient back-propagation algorithm, which updates W. The transfer function is $y^L = f(s)$, where

$$f(t) = \frac{1}{1 + e^{-t}} \qquad (6)$$

Number of input layer neurons: n = 200 input nodes, corresponding to the total dimension of the five feature vectors in the V set. In the input layer (L = 0):

$$y_i^0 = x_i, \quad i = 1..200$$

Number of hidden layers: 1. The number of hidden layer neurons is determined from the experimental results. In the hidden layer (L = 1):

$$s_j^1 = \sum_i y_i^0 \, w_{ij}^1 + w_{j0}^1 \quad \text{and} \quad y_j^1 = f(s_j^1) \qquad (7)$$

Number of output layer neurons: m = 7 output nodes, corresponding to the seven basic facial expressions: anger, fear, surprise, sad, happy, disgust and neutral. Each output node gives the estimated probability of one expression; the first output node, for example, gives the estimated probability of anger. In the output layer (L = 2):

$$y_i^2 = y_i, \quad i = 1..7 \qquad (8)$$


Fig. 6. Structure of MLP Neural Network
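The forward pass described by Eqs. (6) to (8) can be sketched in plain Python. This is only an illustration under stated assumptions, not the authors' implementation: the hidden-layer size of 20, the random initial weights, the zero biases and the use of the sigmoid on the output layer are all hypothetical choices, since the paper determines the hidden size experimentally and does not list these details. The names `layer_forward`, `mlp_forward` and `random_params` are introduced here for illustration.

```python
import math
import random


def sigmoid(t):
    """Logistic transfer function f(t) = 1 / (1 + e^(-t)), as in Eq. (6)."""
    return 1.0 / (1.0 + math.exp(-t))


def layer_forward(inputs, weights, biases):
    """One fully connected layer: y_j = f(sum_i y_i * w_ij + w_j0)."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(x, params):
    """Input layer (L=0) -> one hidden layer (L=1) -> output layer (L=2)."""
    hidden = layer_forward(x, params["w1"], params["b1"])
    return layer_forward(hidden, params["w2"], params["b2"])


def random_params(n_in=200, n_hidden=20, n_out=7, seed=0):
    """Small random weights; n_hidden=20 is an assumed value."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        "w1": matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
        "w2": matrix(n_out, n_hidden), "b2": [0.0] * n_out,
    }


params = random_params()
x = [0.5] * 200            # placeholder for a 200-dimensional feature vector
y = mlp_forward(x, params)  # seven scores, one per expression
```

With untrained weights the seven outputs carry no meaning; training by back-propagation, as the text describes, would be needed before the largest output could be read as the predicted expression.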

Table 1. Output node corresponding to anger, fear, surprise, sad, happy, disgust and neutral

| Feeling | Max output node |
|---|---|
| Anger | Y1 |
| Fear | Y2 |
| Surprise | Y3 |
| Sad | Y4 |
| Happy | Y5 |
| Disgust | Y6 |
| Neutral | Y7 |

-

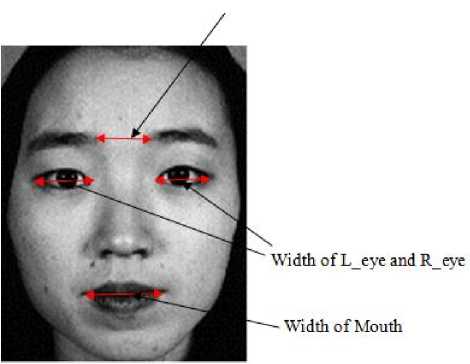

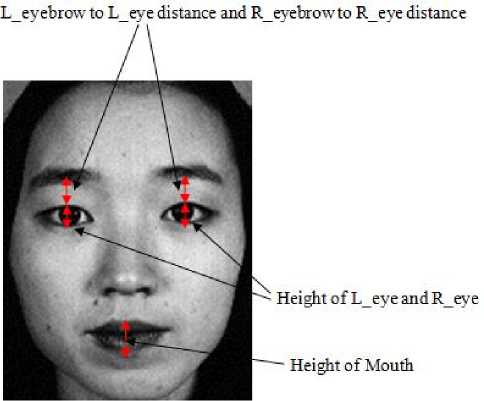

C. K-NN Classification using Ratios local features

Facial ratio features: The primary facial features are located in order to compute the ratios used for facial expression classification. Nine ratios are calculated for each face in the database, which comprises anger, fear, surprise, sad, happy, disgust and neutral expressions. Figures 7 and 8 illustrate the measurements from which the ratios are formed: the l_eyebrow to r_eyebrow distance, width of l_eye, width of r_eye, width of mouth, l_eyebrow to l_eye distance, r_eyebrow to r_eye distance, height of l_eye, height of r_eye and height of mouth.

Fig. 7. Ratio1, Ratio2 and Ratio3

Fig. 8. Ratio4, Ratio5, Ratio6, Ratio7, Ratio8 and Ratio9

Ratio1 to ratio9 are computed using Eq. (9) below:

$$
\begin{aligned}
ratio_1 &= \frac{\text{L eyebrow to R eyebrow distance}}{\text{width of L eye}} \\
ratio_2 &= \frac{\text{L eyebrow to R eyebrow distance}}{\text{width of R eye}} \\
ratio_3 &= \frac{\text{L eyebrow to R eyebrow distance}}{\text{width of mouth}} \\
ratio_4 &= \frac{\text{L eyebrow to L eye distance}}{\text{height of L eye}} \\
ratio_5 &= \frac{\text{R eyebrow to R eye distance}}{\text{height of R eye}} \\
ratio_6 &= \frac{\text{L eyebrow to L eye distance}}{\text{height of mouth}} \\
ratio_7 &= \frac{\text{R eyebrow to R eye distance}}{\text{height of mouth}} \\
ratio_8 &= \frac{\text{height of L eye}}{\text{height of mouth}} \\
ratio_9 &= \frac{\text{height of R eye}}{\text{height of mouth}}
\end{aligned}
\qquad (9)
$$
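As a minimal sketch, the nine ratios of Eq. (9) can be computed from the nine measurements as follows. The `FaceMeasurements` container, its field names and the sample values are hypothetical and introduced only for illustration; the paper does not describe how the measurements are stored or which units are used.

```python
from dataclasses import dataclass


@dataclass
class FaceMeasurements:
    """Nine distances measured on one face (field names are illustrative)."""
    brow_to_brow: float      # L eyebrow to R eyebrow distance
    width_l_eye: float
    width_r_eye: float
    width_mouth: float
    l_brow_to_l_eye: float   # L eyebrow to L eye distance
    r_brow_to_r_eye: float   # R eyebrow to R eye distance
    height_l_eye: float
    height_r_eye: float
    height_mouth: float


def ratio_features(m):
    """Return [ratio1, ..., ratio9] in the order given by Eq. (9)."""
    return [
        m.brow_to_brow / m.width_l_eye,       # ratio1
        m.brow_to_brow / m.width_r_eye,       # ratio2
        m.brow_to_brow / m.width_mouth,       # ratio3
        m.l_brow_to_l_eye / m.height_l_eye,   # ratio4
        m.r_brow_to_r_eye / m.height_r_eye,   # ratio5
        m.l_brow_to_l_eye / m.height_mouth,   # ratio6
        m.r_brow_to_r_eye / m.height_mouth,   # ratio7
        m.height_l_eye / m.height_mouth,      # ratio8
        m.height_r_eye / m.height_mouth,      # ratio9
    ]


# Made-up pixel measurements, not taken from the paper's data.
sample = FaceMeasurements(
    brow_to_brow=60.0, width_l_eye=30.0, width_r_eye=30.0, width_mouth=50.0,
    l_brow_to_l_eye=12.0, r_brow_to_r_eye=12.0,
    height_l_eye=10.0, height_r_eye=10.0, height_mouth=20.0,
)
ratios = ratio_features(sample)
```

Because every feature is a ratio of two distances, the vector does not change when the face image is uniformly rescaled. A zero denominator (for example a mouth height of 0) would raise `ZeroDivisionError` and would need separate handling.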

The ratio features are the input to the K-NN classifier, which uses the Mahalanobis distance measure.
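A K-NN classifier with the Mahalanobis distance might look like the sketch below. Several points are assumptions, because the paper does not state them: k = 3, a simple majority vote, and a single sample covariance matrix estimated from all training vectors. The toy 2-D data stand in for the 9-D ratio vectors and are not taken from the paper.

```python
from collections import Counter


def covariance(rows):
    """Sample covariance matrix of the rows (one sample per row)."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    return [
        [sum((r[a] - mean[a]) * (r[b] - mean[b]) for r in rows) / (n - 1)
         for b in range(d)]
        for a in range(d)
    ]


def invert(m):
    """Gauss-Jordan inverse with partial pivoting (for small matrices)."""
    d = len(m)
    aug = [list(row) + [float(i == j) for j in range(d)]
           for i, row in enumerate(m)]
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(d):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * c for v, c in zip(aug[r], aug[col])]
    return [row[d:] for row in aug]


def mahalanobis_sq(u, v, inv_cov):
    """Squared Mahalanobis distance (u - v)^T S^-1 (u - v)."""
    diff = [a - b for a, b in zip(u, v)]
    n = len(diff)
    return sum(diff[i] * inv_cov[i][j] * diff[j]
               for i in range(n) for j in range(n))


def knn_predict(train_x, train_y, query, k=3):
    """Majority label among the k training vectors nearest to the query."""
    inv_cov = invert(covariance(train_x))
    order = sorted(range(len(train_x)),
                   key=lambda i: mahalanobis_sq(train_x[i], query, inv_cov))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Toy 2-D feature vectors (illustrative only).
train_x = [[2.0, 0.5], [2.1, 0.6], [1.9, 0.45],
           [1.2, 0.9], [1.1, 1.0], [1.3, 0.85]]
train_y = ["happy", "happy", "happy", "sad", "sad", "sad"]
label_a = knn_predict(train_x, train_y, [2.05, 0.55])
label_b = knn_predict(train_x, train_y, [1.2, 0.92])
```

Unlike the Euclidean distance, the Mahalanobis distance rescales each direction by the spread of the data, so ratios with large numeric ranges do not dominate the neighbor search.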

-

D. Fusion of ANN and KNN for Facial Expression Classification

The output of the ANN classifier:

$$y_{ANN} = (y_{ANN\_1}, y_{ANN\_2}, y_{ANN\_3}, y_{ANN\_4}, y_{ANN\_5}, y_{ANN\_6}, y_{ANN\_7})$$

The output of the K-NN classifier:

$$y_{KNN} = (y_{KNN\_1}, y_{KNN\_2}, y_{KNN\_3}, y_{KNN\_4}, y_{KNN\_5}, y_{KNN\_6}, y_{KNN\_7})$$

The final output of the integrated classifier is computed with the minimum function, applied component-wise:

$$Y_{final\_l} = \min(1 - y_{ANN\_l},\ y_{KNN\_l}) \qquad (10)$$

where l = 1..7 corresponds to the seven basic facial expressions: anger, fear, surprise, sad, happy, disgust and neutral.
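Eq. (10) can be written as a short component-wise function. The sketch below implements the formula exactly as stated and nothing more: the function name `fuse_min` and the input scores are hypothetical, and the final decision step (which component of the fused vector selects the class) is deliberately left out because the paper does not spell it out beyond Table 2.

```python
EXPRESSIONS = ["anger", "fear", "surprise", "sad", "happy", "disgust", "neutral"]


def fuse_min(y_ann, y_knn):
    """Component-wise fusion of Eq. (10): Y_l = min(1 - y_ANN_l, y_KNN_l)."""
    if len(y_ann) != len(y_knn):
        raise ValueError("ANN and K-NN outputs must have the same length")
    return [min(1.0 - a, k) for a, k in zip(y_ann, y_knn)]


# Made-up scores for the seven expressions, in the order of EXPRESSIONS.
y_ann = [0.25, 0.5, 1.0, 0.0, 0.75, 0.5, 0.25]
y_knn = [0.5, 0.5, 0.25, 0.5, 0.5, 1.0, 0.0]
y_final = fuse_min(y_ann, y_knn)
fused_by_expression = dict(zip(EXPRESSIONS, y_final))
```

Taking the minimum means a component of the fused vector is large only when both terms of Eq. (10) are large for that expression.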

Table 2. Output node corresponding to seven basic facial expressions

| Feeling | Max output |
|---|---|
| Anger | Y final_1 |
| Fear | Y final_2 |
| Surprise | Y final_3 |
| Sad | Y final_4 |
| Happy | Y final_5 |
| Disgust | Y final_6 |
| Neutral | Y final_7 |

In the table above, Y final_1 represents the degree to which the facial image belongs to the class 'anger', Y final_2 the degree to which it belongs to the class 'fear', and so on up to Y final_7, which represents the degree to which it belongs to the class 'neutral'.

-

IV. Experimental Results

We apply the proposed method to seven basic facial expressions on the JAFFE database, which consists of 213 images posed by 10 Japanese female models. The classification results are shown in the table below:

Table 3. Seven basic facial expression classification

| Feeling | ICA_ANN | Ratios_KNN | ICA_ANN + Ratios_KNN |
|---|---|---|---|
| Anger | 93.33% | 93.33% | 93.33% |
| Fear | 86.67% | 86.67% | 86.67% |
| Surprise | 93.33% | 86.67% | 93.33% |
| Sad | 93.33% | 93.33% | 93.33% |
| Happy | 93.33% | 86.67% | 93.33% |
| Disgust | 86.67% | 93.33% | 93.33% |
| Neutral | 93.33% | 93.33% | 93.33% |
| Average | 91.43% | 90.48% | 92.38% |

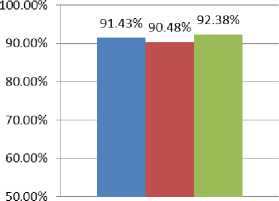

Chart: comparison of the classification rates of the methods (ICA_ANN, Ratios_KNN, ICA_ANN + Ratios_KNN)

Fig. 9. Comparison of classifying methods

As Figure 9 shows, combining ANN and K-NN increases the average classification accuracy to 92.38%, compared with 91.43% for ICA_ANN and 90.48% for Ratios_KNN.
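The averages in Table 3 can be checked with a few lines of Python. The per-class rates are copied from the table (in the order anger, fear, surprise, sad, happy, disgust, neutral); the averaging itself, an unweighted mean over the seven classes, is an assumption about how the "Average" row was obtained.

```python
# Per-class rates in percent, copied from Table 3.
rates = {
    "ICA_ANN":            [93.33, 86.67, 93.33, 93.33, 93.33, 86.67, 93.33],
    "Ratios_KNN":         [93.33, 86.67, 86.67, 93.33, 86.67, 93.33, 93.33],
    "ICA_ANN+Ratios_KNN": [93.33, 86.67, 93.33, 93.33, 93.33, 93.33, 93.33],
}

# Unweighted mean over the seven expression classes, rounded to two decimals.
averages = {
    method: round(sum(values) / len(values), 2)
    for method, values in rates.items()
}
```

The unweighted means reproduce the reported 91.43%, 90.48% and 92.38%. Per class, the fused model matches the better of the two individual classifiers for every expression in the table.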

-

V. Conclusion

In this paper, we propose a model for facial expression classification. Six feature vectors are extracted from the facial image: one global feature vector representing the whole face and five local feature vectors representing the eyebrows, eyes and mouth. All feature vectors processed by ICA are the input of the ANN classifier. The distance ratios of the local regions of the face are the input of the K-NN classifier. The minimum function is used to combine the outputs of the ANN and K-NN classifiers. The proposed ANN_KNN model thus uses ANN and K-NN, each with features suited to it, for classification.

We apply the ANN_KNN model to the classification of seven basic facial expressions (anger, fear, surprise, sad, happy, disgust and neutral) on the JAFFE database. The classification precision is 92.38%. This experimental result shows the feasibility of the proposed model.

References

1. G. Donato, M. S. Bartlett, J. C. Hager, P. Ekman, T. J. Sejnowski, "Classifying facial actions", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 21, No. 10, pp. 974-989, 1999.

2. A. Mehrabian, "Communication Without Words", Psychology Today, Vol. 2, No. 4, pp. 53-56, 1968.

3. Ting-Fan Wu, Chih-Jen Lin, Ruby C. Weng, "Probability Estimates for Multi-class Classification by Pairwise Coupling", Journal of Machine Learning Research, Vol. 5, pp. 975-1005, 2004.

4. L. H. Thai, T. S. Hai, N. T. Thuy, "Image Classification using Support Vector Machine and Artificial Neural Network", International Journal of Information Technology and Computer Science, Vol. 4, No. 5, pp. 32-38, 2012, DOI: 10.5815/ijitcs.2012.05.05.

5. L. H. Thai, N. D. T. Nguyen, T. S. Hai, "Facial Expression Classification System Integrating Canny, Principal Component Analysis and Artificial Neural Network", 3rd International Conference on Machine Learning and Computing, ICMLC Proceedings, Vol. 4, pp. 306-309, 2011.

6. Zhang Qiang, Chen Chen, Zhou Changjun, Wei Xiaopeng, "Independent Component Analysis of Gabor Features for Facial Expression Recognition", International Symposium on Information Science and Engineering (ISISE '08), Vol. 1, pp. 84-87, 2008, DOI: 10.1109/ISISE.2008.323.

7. H. M. Ebeid, "Using MLP and RBF neural networks for face recognition: An insightful comparative case study", International Conference on Computer Engineering & Systems (ICCES), Proceedings, pp. 123-128, 2011.

8. Ying-Li Tian, T. Kanade, J. F. Cohn, "Recognizing action units for facial expression analysis", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 23, No. 2, pp. 97-115, 2001, DOI: 10.1109/34.908962.

9. P. S. Hiremath, Manjunatha Hiremath, "Depth and Intensity Gabor Features Based 3D Face Recognition Using Symbolic LDA and AdaBoost", International Journal of Image, Graphics, and Signal Processing, Vol. 6, No. 1, pp. 24-31, 2013, DOI: 10.5815/ijigsp.2014.01.05.

10. G. W. Cottrell, J. Metcalfe, "EMPATH: face, gender and emotion recognition using holons", Advances in Neural Information Processing Systems, Morgan Kaufmann, San Mateo, CA, Vol. 3, pp. 564-571, 1991.

11. Zhengyou Zhang, M. Lyons, M. Schuster, S. Akamatsu, "Comparison between geometry-based and Gabor-wavelets-based facial expression recognition using multi-layer perceptron", Third IEEE International Conference on Automatic Face and Gesture Recognition, Proceedings, pp. 454-459, 1998.

12. D. Huang, C. Shan, M. Ardabilian, Y. Wang, L. Chen, "Local Binary Patterns and Its Application to Facial Image Analysis: A Survey", IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, Vol. 41, No. 6, pp. 765-781, 2011, DOI: 10.1109/TSMCC.2011.2118750.

13. Y. Cheon, D. Kim, "A Natural Facial Expression Recognition Using Differential-AAM and k-NNS", Tenth IEEE International Symposium on Multimedia (ISM 2008), Proceedings, pp. 220-227, 2008.

14. Andras Lorincz, Laszlo Attila Jeni, Zoltan Szabo, Jeffrey F. Cohn, Takeo Kanade, "Emotional Expression Classification Using Time-Series Kernels", IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 889-895, 2013.

15. B. Fasel, Juergen Luettin, "Automatic facial expression analysis: a survey", Pattern Recognition, Vol. 36, No. 1, pp. 259-275, ISSN 0031-3203, http://dx.doi.org/10.1016/S0031-3203(02)00052-3, 2003.