Intelligent Application for Textual Content Authorship Identification based on Machine Learning and Sentiment Analysis

Автор: Dmytro Uhryn, Victoria Vysotska, Lyubomyr Chyrun, Sofia Chyrun, Cennuo Hu, Yuriy Ushenko

Журнал: International Journal of Intelligent Systems and Applications @ijisa

Статья в выпуске: 2 vol.17, 2025 года.

Бесплатный доступ

During the development and implementation of the software system for text analysis, attention was focused on the morphological, syntactic and stylistic levels of the language, which made it possible to develop detailed profiles of authorship for various writers. The main goal of the system is to automate the process of identifying authorship and detecting plagiarism, which ensures the protection of intellectual property and contributes to the preservation of cultural heritage. The scientific novelty of the research was manifested in the development of specific algorithms adapted to the peculiarities of the natural language, as well as in the use of advanced technologies, such as deep learning and big data. The introduction of the interdisciplinary approach, which combines computer science, linguistics, and literary studies, has opened up new perspectives for the detailed analysis of scholarly works. The results of the work confirm the high efficiency and accuracy of the system in authorship identification, which can serve as an essential tool for scientists, publishers, and law enforcement agencies. In addition to technical aspects, it is vital to take into account ethical issues related to confidentiality and copyright protection, which puts under control not only the technological side of the process but also moral and legal norms. Thus, the work revealed the importance and potential of using modern text processing methods for improving literary analysis and protecting cultural heritage, which makes it significant for further research and practical use in this area.

Machine Learning Methods, Text Analysis, Authorship Identification, Sentiment Analysis, NLP, SVM, LSTM, CNN, RNN

Короткий адрес: https://sciup.org/15019774

IDR: 15019774 | DOI: 10.5815/ijisa.2025.02.05

Текст научной статьи Intelligent Application for Textual Content Authorship Identification based on Machine Learning and Sentiment Analysis

Published Online on April 8, 2025 by MECS Press

The topic of text analysis for the original authorship of works of literature is essential and relevant for several reasons [1]. In today's world, where information is spread rapidly through the Internet, the problem of plagiarism and copyright arises [2]. Literary analysis allows us not only to determine the authenticity of the text but also to preserve cultural heritage, particularly in the case of national literature, such as English or Ukrainian. Identifying original authorship is key to ensuring intellectual property and recognition of writers' work. Scientific interest in the analysis of texts goes beyond literary studies and finds application in jurisprudence, history, and cultural studies. The development of technologies in the field of natural language processing (NLP) and machine learning opens up new opportunities for the study of literary texts [3-6]. Automation of the author identification process allows the analysis of large volumes of data with high accuracy and speed, which is indispensable in modern scientific and commercial conditions. In addition, the question of authorship is particularly relevant in the literature context, where historical vicissitudes such as Soviet censorship often led to the loss of documents or changes in authorship for ideological purposes [7-9]. Restoring historical justice and accurately attributing works to their authors helps restore national identity and maintain cultural heritage. On a practical level, the development of adequate tools for the analysis of authorship can contribute to the fight against literary piracy, which is a significant problem in Ukraine. Such tools allow publishing houses, libraries and educational institutes to protect the rights of writers and promote the development of the literary market [10-12]. Recognition and protection of authorship are also essential for maintaining the creative climate and stimulating new literary talents.

The goal of creating a software system for text analysis for the original authorship of works of literature is to develop an effective tool that will automate the process of determining the authorship of literary works. It is essential in the context of ensuring the protection of intellectual property, maintaining copyright and detecting possible cases of plagiarism. The system aims not only to determine the author of the text but also to provide an analysis of literary styles and the use of linguistic features and, ultimately, to contribute to the preservation of cultural heritage. The system faces the following tasks to achieve this goal:

-

• Development of the methodology for authorship identification. The system should use machine learning and natural language processing algorithms to analyse texts. It includes training the models on historical data that contains samples of the works of famous authors to create a reliable basis for comparison.

-

• Creation of the database of literary works. For the effective operation of the system, an extensive database is required, which includes the texts of works of various genres and periods. It will help in the analysis and identification of literary techniques and styles that may be characteristic of specific authors.

-

• Use of linguistic features. The system should analyse linguistic features such as syntax, vocabulary, stylistic figures and other elements of the text that may indicate a specific author.

-

• User interface design. The interface should be intuitive and user-friendly so that users with different levels of technical skills can use the system effectively. It also assumes the presence of functionality for entering text, analysing it and obtaining results.

-

• Ensuring confidentiality and data security. Since the system will process texts that may have a copyright, it is essential to ensure that this data is protected from unauthorized access.

Thanks to the fulfilment of these tasks, a software system for analysing text for original authorship can significantly contribute to the development of literary science, as well as provide practical tools for publishing houses, scientific institutions and law enforcement agencies to fight plagiarism and support copyright.

The object of research in the analysis of the text for the original authorship of works of literature is literary texts written in the natural language in the context of determining their authorship. The research focuses on the use of linguistic, stylistic and other textual characteristics that help to identify authors of works or establish cases of literary plagiarism. Primary attention is paid to the analysis and comparison of the linguistic features of the texts, which includes a deep understanding of the individual and characteristic features of the writers [12-15].

-

• Literary texts . First of all, the text of literature itself is the main object of research. It can be novels, poetry, plays, essays, etc. The study of these texts includes the analysis of their structure, vocabulary, and syntax, as

well as unique stylistic devices that may indicate a specific author. Each author has a unique style of writing, which is reflected in the use of particular terminology, metaphors, rhythm, and other linguistic elements.

-

• An author's style analysis is an integral part of determining authorship. Studying how particular authors use language helps create "authorship profiles" that can be used to compare and identify the authors of unknown or disputed works. It includes researching their previous works and identifying the unique features that distinguish one author from another.

-

• Linguistic analysis involves the detailed study of language and its components used in literary works. It covers grammatical structure, word choice, and phrasing, as well as more subtle aspects such as rhythm and meter in poetry. Such an analysis can reveal the use of linguistic patterns typical for a specific author.

-

• The subject of research in text analysis for the original authorship of literature works focuses on the use of specific methods and techniques that allow establishing the author of a literary work or detecting plagiarism. These methods cover both linguistic and stylistic analysis and include the following main aspects [15-21]:

-

• Linguistic analysis consists of a detailed study of the linguistic features of the text. It includes analysis of syntax, morphology, semantics and phonetics. It is essential to study the use of grammatical structures, word frequency, sentence formation and characteristic language repetitions that may indicate a particular author. The use of advanced natural language processing and machine learning techniques makes it possible to analyse these characteristics on large volumes of data.

-

• Stylistic analysis focuses on the study of individual features of the author's style, such as the use of metaphors, symbols, and irony, as well as features of text construction. The stylistic analysis allows the reveal of unique author's "signatures", which may not always be evident at first glance. Research can also include the analysis of themes and motifs that reveal a deeper connection between the works of the same author.

-

• Use of statistical methods . Authorship analysis also uses statistical methods for processing and interpreting textual data. It may include frequency analysis, clustering, principal component analysis, and other statistical procedures that help identify statistically significant features of texts associated with particular authors.

-

• Software and algorithms . A separate role in the research subject is played by the development and implementation of specialized software and algorithms for automating the analysis process. The development of efficient algorithms that can quickly process large volumes of text and determine authorship with high accuracy is key to the practical application of text analysis.

-

• The scientific novelty of text analysis research on the original authorship of works of literature consists of the application of advanced methods of text analysis and natural language processing to solve specific problems of authorship identification. This direction can have a number of innovative aspects that will make a significant contribution to literary studies, computational linguistics, and jurisprudence.

-

• Development of specific algorithms for the natural language . Most modern text analysis and language processing technologies are focused on English and other widely used languages. In the context of literature, novelty can consist of the adaptation of existing algorithms or the development of new ones that take into account the unique features of the natural language, such as morphological structure, syntax and vocabulary. It may include the development of specialized tools for morphological analysis, semantic analysers and algorithms that detect stylistic features specific to texts.

-

• Using deep learning . Innovations may also include the application of deep learning techniques to the analysis of literary texts. Neural networks such as LSTM (Long Short-Term Memory) and transformers can be trained on large corpora of texts to detect complex language patterns and stylistic features that indicate authorship. This approach can significantly increase the accuracy of author identification and plagiarism detection.

-

• Analysis of big data . With the help of big data technologies, it is possible to analyse vast volumes of literary works, which would previously have been impossible due to the limitation of human resources. It allows us not only to determine authorship more quickly but also to study changes in literary styles and currents on a scale inaccessible to traditional research methods.

-

• Interdisciplinary approach . Scientific novelty can also consist of a multidisciplinary approach that combines the methods of literary studies, computer science, and statistics. It allows the create complex analysis models that take into account both linguistic and contextual aspects, providing a deeper and more comprehensive understanding of texts.

-

• Ethical aspects in text analysis . Research may also include the development of a moral framework for the use of analytical tools, ensuring the protection of personal data and copyright in the process of text analysis. Ethical consideration is key to creating technologies that respect individual rights and cultural identities.

Thus, scientific innovation in text analysis for the original authorship of works of literature has the potential to make a significant contribution to expanding the possibilities of modern literary studies and natural language processing, providing new tools for the analysis and interpretation of literary texts. The problem of text analysis for the original authorship of works of literature is multifaceted and includes a number of challenges arising from literary, linguistic, technical and legal aspects. This problem becomes especially relevant in the context of modern requirements for the protection of intellectual property and challenges related to the digitization of textual information [15-21].

• Literary and linguistic aspects. The scientific problem lies in the difficulties of identifying the authorship of texts, where the individual style of the writer must be revealed and analysed with great precision. The author's style may include unique lexical choices, syntactic constructions, stylistic figures and other linguistic features that require detailed analysis. There are also cases when authors consciously adapt or modify their style, which calls into question the possibility of unambiguous attribution of texts.

• Technical challenges. The technical side of the problem is the need to develop and implement sophisticated algorithms for machine learning and natural language processing that can effectively process large volumes of text and distinguish subtle nuances of speech style. The importance of accuracy in algorithms is critical, as errors can lead to incorrect attribution of authorship, with serious consequences.

2. Related Works

The goal of creating a software system for text analysis for the original authorship of works of literature is to develop an effective tool that will automate the process of determining the authorship of literary works. It is essential in the context of ensuring the protection of intellectual property, maintaining copyright and detecting possible cases of plagiarism. The system aims not only to determine the author of the text but also to provide an analysis of literary styles and the use of linguistic features and, ultimately, to contribute to the preservation of cultural heritage.

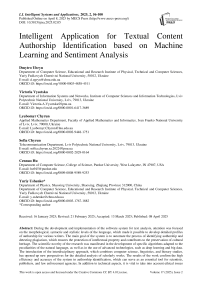

Since no analogues were explicitly found for literature, there is a need to consider programs that are primarily used for English literature. It is also essential to know that models are divided into the following examples according to their method of creation [1-3]:

Fig.1. Authorship attribution models

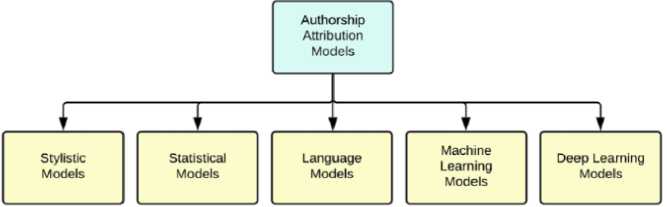

JGAAP (Java Graphical Authorship Attribution Program). Authorship is a study in the field of stylometry. It addresses the problem of determining the most likely author of an unknown text from a pool of potential authors. Tools such as JGAAP (Java Graphical Authorship Attribution Program), Stylometry with R, and the stylo Python package allow quantitative analysis of authorship based on stylistic and linguistic features. They can be trained or tuned for Ukrainian texts if a sufficient dataset is available.

Fig.2. Java graphical authorship attribution program

Recent developments in machine learning and corpus linguistics have shown the possibility of automatic authorship determination using statistics; the NSF-funded JGAAP (Java Graphical Authorship Attribution Program) system was part of these developments. JGAAP has helped foster a new authorship community and create a valuable tool for a wide range of scientific specialities. Although JGAAP contains thousands of possible methods, there are many more proposed but not thoroughly tested methods in the literature. Benchmarking on a large scale will require the development of new methods and test corpora. In addition, many key issues need to be addressed to meet the needs of the community, such as the open class issue, the adversarial issue, and the co-authorship issue. Finally, it is necessary to look at the application of JGAAP and similar systems to key areas of linguistic profiling, such as gender, education, native language, psychological profiling, health status, age (of document or author) or even attempts at deception. Again, by applying a rigorous testing method to these new problems and corpora, the project can establish accuracy benchmarks for different methods (under various test conditions), find new combinations that lead to improved methods, and create "best practice" recommendations. Improved attribution will be of immediate benefit to both academics and broader social contexts, such as law enforcement and forensics, where there is a direct demand for this type of security technology. Historical/social analysis will also provide better access to the related disciplines of digital humanities, sociology, history, and computer science, creating a basis for a better understanding of traditional humanities issues. Profiling can help doctors and psychologists by providing a non-invasive method of identifying certain aspects of the human psyche. The developed software (and the planned development/distribution process) will help improve the effectiveness of digital humanities and computer science, primarily through the establishment of standards and software validation processes. In particular, by providing direct evidence of the conditions and expected error rates involved in different methods, the resulting information will help establish authorship to meet Daubert's criteria for expert evidence, allowing authorship designations to be used in formal legal contexts. Finally, funding for this research will help support Duquesne University's unique interdisciplinary computational mathematics program, providing greater access to unusual and atypical audiences for technology education.

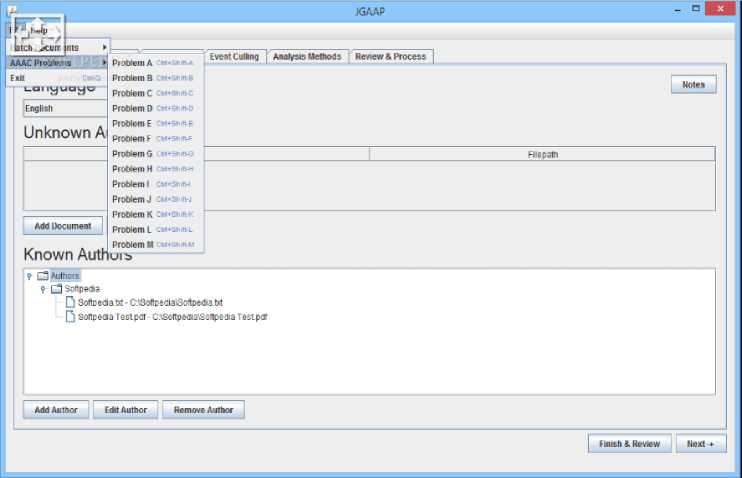

General Architecture for Text Engineering, or GATE, is a Java toolkit originally developed at the University of Sheffield. It is now used worldwide by a vast community of academics, companies, educators, and students for many natural languages processing tasks, including information extraction in many languages.

Fig.3. General architecture for text engineering

The Natural Language Toolkit, or more commonly NLTK, is a set of symbolic and statistical natural language processing (NLP) libraries and programs for the English language written in the Python programming language. NLTK contains both datasets and graphics. The package includes a book that explains the basic concepts of the language processing tasks supported by the toolkit, as well as examples of how to use the package. NLTK is designed to support research and teaching courses related to NLP and related fields, including empirical linguistics, cognitive science, artificial intelligence, information retrieval, and machine learning. NLTK has been successfully used as a learning tool and a platform for prototyping and building research systems. In the US and 25 other countries, 32 universities use NLTK in their courses. NLTK supports classification, tokenization, stemming, tagging, parsing, and semantic reasoning functionality.

Advantages of the developed product. The development of a software product for text analysis for the original authorship of works of Ukrainian and English literature has several significant benefits that significantly strengthen its practical application and scientific value.

-

• Convenient functionality . One of the key advantages is a convenient and intuitive user interface. It means that users, regardless of their technical experience, can effectively use the system to analyse texts. The interface makes it easy to load text data, perform analysis, and view results in an easy-to-understand format. The inclusion of such functions as user hints and a contextual help menu makes the process of interacting with the program as simple and effective as possible.

-

• Availability of Ukrainian and English literature datasets . A great advantage is the creation and integration of a specialized dataset of Ukrainian and English literature, which includes texts of various genres and periods. The presence of a large and diverse body of texts allows the system to more accurately analyse and recognize the author's features. This dataset not only improves the quality of authorship analysis but also makes it possible to carry out complex studies of the natural literary language and its evolution and transformations.

-

• Innovative technologies . The development includes the application of advanced technologies in the field of natural language processing and machine learning, such as neural networks, deep learning algorithms and statistical analysis. These technologies provide high accuracy and speed of data processing, allowing the complex analysis of large volumes of text to be performed for the purpose of identifying the author's style. Innovative solutions embodied in the product also include algorithms for identifying plagiarism and ensuring copyright, which is especially relevant in today's digital world.

These advantages make the developed product convenient for users, effective for research in the field of literature, and essential for ensuring the cultural and intellectual heritage of Ukraine. Such innovations contribute to scientific progress and the support of the literary sphere in the conditions of globalization and digitalization, opening up new opportunities for the analysis, preservation and development of Ukrainian and English literature.

Disadvantages of the developed product. Despite numerous advantages, the developed product for text analysis for the original authorship of works of Ukrainian and English literature has several shortcomings that may affect its effectiveness and acceptability for end users.

-

• Data limitations . One of the main problems is the limited availability and volume of Ukrainian literature datasets, which are necessary for training machine learning algorithms. Although the development involves the creation of a specialized corpus of texts, there is a risk that this corpus will not be fully representative or large enough to ensure high accuracy of the analysis. Incomplete data can lead to incorrect interpretation of results or errors in determining authorship.

-

• Technical limitations . The natural language processing and machine learning technologies used in the product, while advanced, have certain technical limitations. The complexity of the algorithms requires significant computing resources, which can be problematic for users with limited technical capabilities. Also, high dependence on the quality of input data can complicate the versatility and reliability of the product.

-

• Ethical and legal issues . Developing such a product presents researchers with ethical and legal challenges. Using literary texts to train algorithms raises questions about copyright and data privacy. There is a risk that without proper regulation and control, the system could be used to infringe copyright or to intrude on the privacy of authors.

-

• Dependence on linguistic features . Another problem is the high dependence of the analysis results on the peculiarities of the natural language. The presence of dialects, historical changes in the language, and stylistic differences can make it difficult to determine the exact authorship, especially for historical texts where linguistic norms differ significantly from modern ones.

These shortcomings require a careful approach to improving technological aspects, proper legal regulation and ensuring ethical responsibility when using the system. These challenges not only identify potential design weaknesses but also outline directions for further research and product improvement that can lead to greater market adoption and adoption.

General comparison. It is necessary to conduct a general comparison of the product we developed with existing analogues on the market by carefully analysing the main parameters, such as the effectiveness of detecting artificially created texts and the accuracy of the results. Based on this comparison, it is possible to find out the advantages and disadvantages of our product compared to competitors, as well as identify its competitive advantages and opportunities for further improvement.

Table 1. The advantages and disadvantages of our product compared to competitors

|

The name of the program or site |

Convenient functionality |

Availability of the Ukrainian language |

Advanced machine learning technologies |

|

JGAAP |

Yes |

No |

Yes |

|

General Architecture for Text Engineering |

Yes |

No |

Yes |

|

Natural Language Toolkit |

No |

No |

Yes |

From the results of the table, it is concluded that the best results have the programs JGAAP and General Architecture for Text Engineering.

Prospects for product development. The prospects of developing a product for text analysis for the original authorship of works of Ukrainian and English literature open up new opportunities for the development of the science of literature, cultural preservation, education and technologies. This product can have a significant impact in several key areas:

-

• Scientific research . The product can become an essential tool for academic research, allowing scholars to analyse literary texts more deeply and identify stylistic features of different authors. It can contribute to a better understanding of literary trends and the evolution of language, as well as help to resolve academic disputes about the authorship of works.

-

• Education . In the field of education, such a product can be used to teach students to analyse literary works,

develop critical thinking and improve literacy. Text analysis tools can become part of the learning process in Ukrainian and English literature classes, allowing students to experiment and learn through hands-on experience.

• Protection of intellectual property. In the context of the growing challenges of plagiarism and copyright, development can serve as an essential tool for publishers, legal authorities and independent authors to protect their intellectual property. The system can help identify and document cases of copyright infringement, as well as provide an evidentiary basis in legal cases.

• Expansion of technological capabilities. Technological innovations based on artificial intelligence and machine learning continue to develop, and the development of such a product can contribute to the further development of natural language processing and deep learning algorithms. It opens the door to new tools capable of analysing text at a much deeper level, including emotional content, subtext, and contextual meanings.

• Cultural preservation. The product can be necessary for the preservation of culture, especially in the context of modern globalization and cultural homogenization. Preservation of unique linguistic and stylistic features through accurate analysis of authorship will help future generations to understand and appreciate the contribution of previous generations of writers.

• Development at the international level. Since literature is becoming more and more famous and popular abroad, there is a significant potential for the use of such a product at the global level, especially in areas where it is necessary to determine the authorship of translations or verify the text within the framework of literary studies. Such a tool can contribute to greater visibility of Ukrainian and English literature and its analysis in world academic circles.

3. Material and Methods

3.1. System Analysis of the Research Object and Subject Area

Based on the analysis of the current state of development of a software product for text analysis for the original authorship of works of literature, it can be concluded that this project is essential for both the academic community and the cultural sphere. The use of advanced technologies of natural language processing and machine learning allows for an increase in the accuracy and speed of text analysis, which opens up new opportunities for research in the field of literary studies and the fight against plagiarism. Convenient functionality and the availability of a specialized dataset ensure the availability and effectiveness of the product for a wide range of users, including researchers, educators and law enforcement agencies. It contributes not only to the in-depth analysis of literary works but also to the preservation of the national cultural heritage. However, the project has some drawbacks, such as limited data and high requirements for technical resources, which may limit its application in certain conditions. It is also important to consider ethical and legal issues related to the use of literary works.

The systematic analysis of the object of research and the subject area for the analysis of the text for the original authorship of works of literature focuses on a comprehensive approach to the study of literary texts with the aim of identifying their authors [1-3]. This analysis covers several key aspects: the definition of the research object, the analysis of the subject area, and the implementation of systematic methods and tools for the study of texts.

-

• Object of research. The object of research in this context is literary texts written in Ukrainian or English. It includes poetry, prose, drama and other forms of scholarly works. The primary purpose of the analysis is to establish the authorship of these works, which can be especially important in cases where the text is attributed to different authors or when the authorship remains unknown or disputed.

-

• Subject area. The subject area covers literary studies, linguistics, computer science, and, in some aspects, legal studies. It includes the methods of stylometry, which allow analysis of the stylistic features of the text and the use of specific verbal forms and structures that may indicate a particular author. Natural language processing and machine learning methods are also used to analyse large volumes of text data.

-

• System methods and tools. System analysis involves the use of various tools and technologies. The main ones are databases for storing texts, analytical tools for studying linguistic features, and software for automating the processes of analysis and classification. The use of statistical methods and artificial intelligence makes it possible to develop models capable of determining the authorship of texts with high accuracy.

-

• Impact and prospects. The study of system analysis in this area opens up new opportunities for the development of literature and culture. It also has practical implications in the areas of plagiarism detection, copyright protection, and academic integrity.

-

3.2. Goal Tree

The tree of goals for the project "Text analysis for original authorship of works of literature" can be considered as a hierarchical structure of goals, which allows for detailed planning and organization of all key aspects of the project. This tree includes the primary goal, sub-goals, and specific tasks that must be accomplished to achieve the ultimate goal. A description of the goal tree will detail each level of the hierarchy.

The main goal of the project is to develop an effective tool for analysing texts with the aim of identifying the original authorship of works of literature. This tool should be able to accurately identify the authors of texts based on linguistic and stylistic features.

Sub-goals

-

1) Development of a comprehensive database of literary works:

-

• A collection of texts of various genres and eras;

-

• Creating a structured database for analysis;

o Collect more than 10,000 works;

o Digitize archival texts;

o Develop mechanisms for constant updating of the database;

-

2) Development of text analysis algorithms:

-

• Implementation of machine learning and natural language processing techniques;

-

• Development of algorithms for the identification of stylistic and linguistic features characteristic of specific authors;

o Setting up neural networks for text analysis;

o Testing algorithms for accuracy and reliability;

o Optimization of algorithms for fast processing of large volumes of data;

-

• Setting up neural networks for text analysis;

-

3) Integration of the tool into academic and educational processes:

-

• Development of user-friendly interfaces in educational and research institutions;

-

o Development of a user interface that includes intuitive tools for determining authorship;

-

o Organization of workshops and demonstrations for educators and researchers;

-

• Data visualization support.

The implementation of the goals mentioned above will allow not only to increase the quality and accuracy of the analysis of literary works but also to significantly expand the possibilities of their use for academic and educational purposes. The introduction of the product can contribute to a better understanding of the cultural heritage of Ukraine and support the preservation of the literary language. The discovery of these opportunities can also increase the interest of the international audience in literature, providing greater visibility and recognition of authors on the world stage.

However, there are challenges to consider:

-

• Ensuring sufficient funding and support for project development;

-

• Overcoming technical difficulties associated with the processing and analysis of large volumes of textual information;

-

• Compliance with ethical standards and copyright protection in the process of using literary works.

-

3.3. Selection of Alternative Options and Selection of the Best Option

When developing a platform for text analysis for the original authorship of works of literature, it is essential to consider several alternative options. The choice of the best option should be based on a careful analysis of the capabilities of each solution, their effectiveness, cost, and the possibility of integration with existing systems. Alternatives for platform development:

-

1) Development of own platform "from scratch"

-

• Advantages . Complete control over the functionality and the ability to fine-tune the system to the specific

needs of the project.

-

• Disadvantages . High development cost, long development time, high risks of delays and technical problems.

-

2) Use of open libraries and tools

-

• Advantages . Lower development costs use of time-tested tools.

-

• Disadvantages . Limitations in functionality and possible difficulties with the integration of various

components.

-

3) Partnership with technological companies

-

• Advantages . Access to advanced technologies support from experienced developers.

-

• Disadvantages . Dependence on a third party, potential limitation in control over the final product.

-

4) Adaptation of existing commercial solutions

-

• Advantages . Fast implementation, no need to develop own tools.

-

• Disadvantages . High licensing costs and possible limitations in customization.

To choose the best option, it is necessary to conduct a detailed analysis of costs, potential risks, and the time required to implement each of the possibilities. It is also essential to involve project stakeholders in discussing possible solutions to ensure their support and understanding of key requirements. Considering the specificity of the task of analysing the authorship of texts, it may be beneficial to choose a combination of approaches. For example, one might consider developing a basic platform "from scratch" with the integration of open-source libraries for specific tasks that require deep specialization, such as machine learning and natural language processing. It will balance cost and efficiency while providing high adaptability and control over the final product.

In the process of developing software or other information systems, a key stage is the identification, analysis and documentation of system functionality requirements. Use cases in the Unified Modelling Language (UML) methodology are an effective tool for this. They allow to describe how the system interacts with its users or external agents to achieve specific goals or perform certain tasks.

User → Run the program → Download data (add sources, references, and test input) → Select an analysis method → Select an analysis metrics → Configure an analysis method → Run an analysis → View results → Export results

|

Author |

0... |

TextDocument |

°- |

Analysis |

... 1 |

Report |

|

|

authorjd: int name: string bio: string |

documentjd: int title: string content: string authorjd :int |

analysisjd: int documentjd: int analysis_date: date result: string |

reportjd: int analysisjd: int report_date: date repon_content: string |

||||

|

addAuthoro updateAuthor() deleteAuthorQ |

|||||||

|

uploadDocument() editDocument() deleteDocument() |

performAnalysis() updateAnatysis() deleteAnalysis() |

generateReportf) viewReport() deleteReportf) |

Fig.4. UML class diagram

User → Text Document (uploadDocument → saveDocument → getDocument) → Analysis (analyseDocument → getAnalysis) → Report (createReport → saveReport) → User

Workflow algorithm:

Adding data → Pre-process text → Extract features → Train model → Generate reports

-

3.4. Statement and Justification of the Problem

For the system of text analysis for the original authorship of works of literature, the following key functions and tasks, the primary goals, and an analysis of the importance and necessity of its implementation can be outlined. The text analysis system for authorship is designed to perform several main tasks:

-

• Author identification . The system has algorithms that allow the author of the text to be determined based on linguistic and stylistic features.

-

• Plagiarism detection . The ability of the system to analyse the text for its originality allows it to identify potential cases of plagiarism.

-

• Statistical analysis . The system can collect and analyse statistical data on the frequency of use of certain verbal forms and structures that are characteristic of specific authors.

-

• Educational tool . Providing opportunities for educational institutions to use the system in the process of studying literature.

The main goals of the system:

-

• Improving the understanding of literary heritage. The system helps researchers and students to better

-

• understand the style and other features of the works of famous authors.

-

• Copyright protection . The system helps protect intellectual property by identifying copyrights for textual works.

-

• Supporting academic integrity . Providing tools to check academic papers for plagiarism increases the level of academic integrity in educational institutions.

Implementing a text analysis system for authorship is essential for several reasons:

-

• Cultural significance . The preservation and proper attribution of literary works are of great importance for national culture and identity.

-

• Academic value . The system will help the academic community conduct research and provide access to reliable tools for checking texts for authorship. It is especially relevant in the conditions of the modern information space, where originality of content is key to academic and creative activity.

-

• Legal significance . Determining authorship is critical to protecting intellectual property. Having practical authorship analysis tools can facilitate the proper resolution of copyright and plagiarism disputes.

-

• Technological importance . The development of natural language processing and machine learning technologies through the implementation of such projects stimulates innovation and improves technical capabilities in general.

-

3.5. Application Area of the System

Scope and users. The text analysis system for the original authorship of works of literature can be applied in various areas, including:

-

1) Academic Institutions . Teachers and researchers can use the system to analyse literary works, study the author's style, and check student works for plagiarism.

-

2) Publishers . To verify the originality of works before publication and ensure compliance with copyright.

-

3) Law enforcement agencies . In cases where it is necessary to determine the authorship of works as part of investigations into copyright violations.

-

4) Libraries and Archives . For cataloguing and attribution of texts, especially old or anonymous manuscripts.

Application context. The system can be applied in the following contexts:

-

1) Educational . Supporting the learning process through the study of literary works and the education of academic integrity among students.

-

2) Scientific . Conducting complex literary studies with the aim of analysing literary styles and identifying literary periods or influences.

-

3) Legal . Use in court cases to confirm authorship of texts or identify copyright violations.

Potential application benefits:

-

1) Increasing the accuracy of attribution of works . The use of modern technologies makes it possible to accurately determine authorship, reducing the risk of errors in attribution.

-

2) Effective management of rights to content . Automating the authorship identification process contributes to more effective management of intellectual property rights.

-

3) Preservation of cultural heritage . The possibility of archiving and accurate identification of literary works contributes to the preservation of national culture.

Problems that the system solves:

-

1) Detection of plagiarism . The system is able to identify cases of copying of texts, which is especially important for the educational environment and publishing activities.

-

2) Differentiation of similar authoring styles . Thanks to deep analysis of textual characteristics, the system can distinguish even very similar authoring styles, helping to accurately identify authors under challenging cases.

-

3) Support for the study of literary heritage . The system provides tools for scholarly researchers to identify connections and influences between different authors and works, contributing to a deeper understanding of literary trends.

-

3.6. Justification of System Development and Implementation

The justification for the development and implementation of a text analysis system for the original authorship of works of literature is based on several key factors that highlight the necessity and significance of such a system in the modern context. These aspects include the cultural, educational, legal and technological importance of the system.

-

• Cultural significance . Literature is not only an artistic expression but also an essential part of the cultural identity and history of Ukraine. The development of an authorship analysis system will help scientists and cultural scientists to more deeply explore the creative heritage of writers, restore forgotten or little-known works, and discover unpublished manuscripts. It will also contribute to the preservation and popularization of literature, supporting the cultural diversity and multidimensionality of the national culture.

-

• Educational value . An authorship analysis system can become an indispensable tool in the academic environment, where it will allow teachers and students to use advanced technologies for the study of literature. It can serve as a platform for teaching text analysis, stylistic consideration, and historical contextualization of literary works, thereby improving the quality of education and research.

-

• Legal significance. From a legal point of view, the system will contribute to the protection of intellectual property and copyright, which is extremely important in the conditions of growing digitalization. A clear definition of authorship will help resolve disputes related to plagiarism and misuse of textual materials, ensuring fairness in the literary and media industries.

-

• Technological significance . The development of such a system stimulates innovative development in the field of natural language processing and machine learning. It not only improves the existing methodologies of text analysis but also opens up possibilities for the application of artificial intelligence in many spheres of society. These technologies have the potential to radically change approaches to data analysis, particularly in literary studies and other humanities.

-

• General rationale for system implementation . The rationale for the development and implementation of a text analysis system for the authorship of works of literature stems from the current needs of cultural, educational, legal and technological development. The system is aimed at raising the level of literary education, scientific research, intellectual property protection and cultural heritage support. It can play an essential role in preserving national identity, popularizing literature, and in the fight against academic fraud and plagiarism.

-

3.7. Expected Effects of System Implementation

The expected effects of the implementation of the text analysis system on the original authorship of works of literature can be large-scale and influential, covering various fields from education and culture to law and technology. A detailed consideration of the potential effects provides a better understanding of the meaning and importance of such a tool.

-

• Increasing the level of education and scientific research . One of the most important effects of the implementation of the system is its impact on the educational process and scientific research. The system will allow teachers and students to study literary texts more effectively, using in-depth text analysis to determine the author's styles and techniques. It not only promotes critical thinking but also helps students understand how different historical and cultural contexts influence literary works. In a scientific context, the system can assist researchers in determining authorship and in plagiarism analysis, providing more accurate and informed conclusions.

-

• Protection of intellectual property . The system will significantly improve the possibilities of intellectual property protection, particularly in the field of literature. With a precise definition of authorship, authors and publishers will have a more solid basis for protecting their rights in cases of illegal use or plagiarism of their

works. It not only promotes fairness but also encourages creativity and innovation, knowing that their rights will be protected.

-

• Promotion of cultural preservation . The system will help preserve and popularize literary heritage, allowing us to identify and catalogue works that may have been forgotten or lost. It will ensure the accessibility of these works to a broader audience and contribute to a deeper understanding of cultural and literary history.

-

3.8. Development of the Conceptual Model of the System

The development of the text analysis system for the original authorship of works of literature includes careful planning and structuring of input and output data, algorithms, system functions, and requirements for it. Here is a detailed description of these aspects:

-

• Input data are Text files (the system will accept texts in formats such as .txt, .docx, and .pdf for analysis) and Metadata (information about works, such as publication date, author, and genre, that can be used to improve the accuracy of the analysis).

-

• Output data are Authorship report (identification of the author or possible authors of the work), Statistical data (analysis of the frequency of use of words, stylistic figures, etc.) and Visualizations (graphs and diagrams reflecting the stylistic features of the texts).

The system will include the following key components:

-

• Data download module . Interface for downloading text files and metadata.

-

• Text processing module . Convert texts from different formats into a single standard format for analysis.

-

• Text analysis module . Machine learning algorithms were used to identify the author's features.

-

• Output module . Presentation of analysis results in a clear and visually appealing format.

-

• User interface . Simple and intuitive interface for interacting with the system.

Determination of requirements for the system and other formal models:

-

• Technical requirements are Compatibility (the system must be compatible with the central operating systems such as Windows, macOS, and Linux), Scalability (the ability to process large amounts of data without sacrificing performance) and security (ensuring the protection of input data and confidentiality of information).

-

• Functional requirements are Accuracy (high accuracy of authorship identification and plagiarism detection), Speed (ensuring fast data processing, essential for large text corpora) and Accessibility (easy access to the system through the web interface or desktop applications).

-

• Machine learning algorithms are Stylometry (the use of stylometric analyses to determine the characteristic features of the author's style), Neural Networks (development of deep neural networks that can learn to detect sub-textual patterns and stylistic signatures specific to individual authors) and Clustering (using clustering techniques to group works by stylistic similarities, which can help determine authorship).

The system should have the ability to process text files of various formats, such as PDF, DOCX, and TXT, which will allow users to easily download materials for analysis without the need for additional conversion.

The user interface should be intuitive and user-friendly for users with different levels of technical skills. The main elements of the interface include:

-

• Download management . Simple tools for downloading and organizing text files.

-

• Control panel . Visual representation of the analysis process and results, with the ability to sort and filter data.

-

• Feedback . Tools for sending feedback and getting support from developers.

The output results of the analysis should be presented in an understandable format, including:

-

• Reports . Detailed analysis reports are exportable to popular formats such as PDF or Excel.

-

• Visualizations . Graphs, diagrams, and other visualizations are used to visually present the stylistic and linguistic features of texts.

-

• Recommendations . Automated recommendations for further research or action based on analysis results.

Expected effects of system implementation:

-

• Cultural influence . The system allows for more profound research and preservation of literary heritage. With its help, it is possible to restore forgotten or unknown works, ensuring their availability to a broad audience. It also contributes to understanding the evolution of language and literary styles, which is essential for cultural identity.

-

• Educational influence . The system can significantly improve the quality of education by providing teachers and students with modern tools for analysing literature. It promotes the development of students' critical thinking, allowing them to analyse literary works at a deeper level and understand the nuances of the author's style.

-

• Legal impact . The system improves copyright protection by providing tools to accurately determine authorship and identify plagiarism. It helps prevent misuse of creative works by ensuring that authors are fairly compensated for their work.

It can be concluded that the development and implementation of a text analysis system for the original authorship of works of literature is a significant and promising project. The system provides solutions to a number of tasks and reveals the potential for influence in various areas.

-

• Cultural enrichment . The system will contribute to the better preservation and popularization of literary heritage, allowing for a deeper study of the work of authors and ensuring accurate attribution of scholarly works.

-

• Educational opportunities . The use of the system in the educational process will help teachers and students expand their analytical skills through access to advanced technologies of text analysis, increasing the quality of teaching and research.

-

• Copyright protection . The system plays a key role in detecting plagiarism and ensuring compliance with intellectual property, which is essential in today's world of rapid information dissemination.

-

• Technological progress . The development of such a system stimulates innovation in the fields of natural language processing and machine learning, opening new opportunities for the application of these technologies in other disciplines and industries.

-

• Social significance . The system can contribute to the strengthening of national identity through a deeper awareness of cultural and literary achievements and fostering respect for the author's work.

-

3.9. Selection and Justification of Methods for Solving the Task

This project has the potential not only to solve specific practical tasks but also to make a significant contribution to the development of culture, education, law and technology, thereby contributing to social and cultural progress.

For the system to work effectively, practical methods and tools are needed to help them distinguish between texts and make appropriate decisions. In this context, it is essential to choose and justify the methods and means of presenting knowledge in the decision-making system, the description of the mechanisms of logical deduction in the system and the justification of decision-making algorithms [1-9].

Methods and means of presenting knowledge in the decision-making system:

-

1) Text vectorization is the process of converting text into numerical vectors that machine algorithms can process. Popular methods include:

-

• Bag of Words (BoW). The text is represented as a set of words, where each word in the text is associated with its frequency.

-

• Term Frequency-Inverse Document Frequency (TF-IDF). Used to determine the importance of a word in the context of a document, taking into account its frequency in the entire corpus.

-

• Word Embeddings (e.g. Word2Vec, GloVe). Creates word vectors that represent semantic and syntactic relationships between words.

-

2) Stylistic Signs features are often used to analyse authorship, which characterizes the author's unique writing style:

-

• Syntactic features . Length of sentences, use of punctuation, frequency of use of different parts of speech (for example, adjectives, verbs).

-

• Lexical features . Variety of vocabulary, frequency of use of certain phrases or specific words.

-

• Semantic features . Themes and concepts that often appear in the author's works.

-

3) Neural networks , especially deep learning models, are able to automatically detect important features for authorship classification:

-

• Convolutional Neural Networks (CNN). Effective for detecting patterns in extensive text data.

-

• Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM): Used to analyse text sequences, in particular, to model context and dependencies in the text.

Description of logical output mechanisms in the system:

-

1) Data processing and analysis stages . Logical inference mechanisms begin with data collection and preparation: • Text pre-processing . Text normalization (noise removal, spelling standardization), token segmentation, stop

word removal, and lemmatization.

-

• Text vectorization . Converting text into vector representations such as TF-IDF or word vectors.

-

• Selection of features . Identification and use of features that most influence the determination of authorship, for

example, stylistic features (sentence length, use of punctuation), lexical features (frequency of phrases), and syntactic features (phrase structure).

-

2) Machine learning models :

-

• Classification models . Training algorithms such as naive Bayes classifier, SVM, random forests, or neural networks based on a training dataset with known authorship.

-

• Deep learning . Using recurrent or convolutional neural networks to detect complex patterns in textual data that may indicate the style of a particular author.

Description and justification of decision-making algorithms:

-

1) Naive Bayesian classifier .

-

• Description . This algorithm uses the principle of Bayesian statistics and assumes independence of features. It calculates the probabilities of each class (author) based on the input features (words, phrases, stylistic elements) and classifies the text by the author with the highest probability.

-

• Rationale . Naïve Bayes is simple to implement and efficient with large amounts of data. It works well even when the assumption of independence of features does not hold. A significant advantage is its ability to handle many classes, which is ideal for systems where there may be many potential authors.

-

2) Support vector method (SVM).

-

• Description . SVM searches for the hyperplane that best separates classes of data in a multidimensional space. In the context of authorship analysis, classes are different authors, and features are stylistic characteristics of texts.

-

• Rationale . SVM is effective in high-dimensional data, as is often the case in word problems, and is able to efficiently solve multiclass problems through strategies such as one-vs-one. It makes it an excellent choice for authorship analysis, as there may be many input features and the need to distinguish between writing styles accurately.

-

3) Random forests .

-

• Description . Random Forests is an ensemble method that uses multiple decision trees to classify data. Each tree is trained on a randomly selected subset of features and data, and the classification results are aggregated to obtain a final decision.

-

• Rationale . Random forests work well with complex data structures and provide high accuracy while reducing overtraining due to the ensemble nature. This method also determines the importance of features, which can help analyze which stylistic elements have the most significant impact on author identification.

-

4) Deep learning (e.g. LSTM, CNN).

-

• Description . Deep learning uses complex neural network structures to model data. LSTM (Long Short-Term Memory) is excellent for analyzing sequences of data, such as texts, where context and word order are essential. CNNs (Convolutional Neural Networks) can be used to detect patterns in text at different levels of abstraction.

-

• Rationale . Deep learning models can automatically detect complex patterns in data, making them ideal for tasks that require the recognition of subtle nuances in writing style. They require significant computing resources and large amounts of data for practical training but provide high accuracy and flexibility in simulation.

-

3.10. Main Components of the System

-

1) User interface (UI)

-

• Loading text for analysis (txt, pdf, docx).

-

• Selecting a model for author attribution.

-

• Displaying results: predicted author, model confidence level.

-

2) NLP module

-

• Text preprocessing: tokenization, lemmatization, stopword removal.

-

• Text vectorization: TF-IDF, Word2Vec, BERT embeddings.

-

• Analysis of stylistic features of the text.

-

3) Machine learning modules

-

• Using models: Naïve Bayes, SVM, LSTM for authorship classification.

-

• Training models on text corpora.

-

• Generating a forecast and deriving metrics (accuracy, F1-score, etc.).

-

4) Results processing module are Generating reports, Visualizing results in the form of a confusion matrix and Integration with external services (e.g., plagiarism databases).

System operation algorithm:

Stage 1. The user uploads text.

Stage 2. The NLP module cleans text and extracts features.

Stage 3. Text vectorization.

Stage 4. Machine learning model predicts authorship.

Stage 5. Results are displayed in the UI.

This structure allows the system to work efficiently with large amounts of text and supports real-time authorship analysis.

-

3.11. Justification for the Choice of Naive Bayes and SVM in the Study

The study uses several machines learning methods, including Naive Bayes (naive Bayes classifier) and SVM (support vector method). Their choice is based on efficiency, computational speed, accuracy, and the ability to work with high-dimensional text data. Justification for the choice:

-

1) The Naive Bayes classifier is based on Bayes' theorem and the assumption of independence of features. It calculates the probability that the text belongs to a particular author using words, phrases, and stylistic elements. Bayes' formula:

Р(Л|В)= ^( ^^ ) 4 1 J Р(В)

where P(A|B) is the probability that the author is A, given text B; P(BIA) is the probability of obtaining text В given that it was written by A; P(A) is the prior probability of authorship of A; P(B) is the total probability of encountering text В in the data corpus.

Table 2. Analysis of the naive bayes classifier

|

N |

Name |

Explanation |

|

1 |

Advantages |

Speed of learning and prediction – the algorithm calculates probabilities in constant time, making it one of the fastest methods. Minimal computational requirements are efficient when working with large amounts of text data. Works well with text data, even if the assumption of feature independence is not met. |

|

2 |

Disadvantages |

Independence assumption – in real-world texts, words are often dependent on each other, which can reduce accuracy. It does not take into account word order, which can be critical to the author’s style. |

|

3 |

Reason for choice |

High speed is vital for analyzing large text corpora. Sufficient accuracy in stylometry tasks is about 80% on the test corpus. Works well on sparse text data (for example, when using TF-IDF). |

-

2) SVM is a linear or nonlinear classifier that searches for the optimal hyperplane to separate data in a multidimensional space:

min-||w|| 2 provided yt(w ■ xt + b) > 1,4i w,b 2

where w is weight vector, b is bias, xt is input feature vector, yt is class label (+1 or -1).

Table 3. Analysis of the SVM classifier

|

N |

Name |

Explanation |

|

1 |

Advantages |

It works well with high-dimensional data and is ideal for TF-IDF and Word2Vec. High accuracy (≈ 79-81%) – especially for text classification. Can work in multi-class classification using "one-vs-one" and "one-vs-all" methods. Good generalization – less prone to overtraining than neural networks. |

|

2 |

Disadvantages |

Slow learning – may be less efficient than Naive Bayes for large amounts of data. Difficult to interpret – SVM models are less transparent than tree models. Kernel selection is required, which requires parameter tuning (linear, polynomial, RBF kernel). |

|

3 |

Reason for choice |

It works better on text features with high dimensionality (e.g. 300-dimensional Word2Vec). High accuracy is for author attribution (≈ 81.26% for English and 75.05% for Ukrainian). Support for nonlinear solutions – can take into account complex dependencies between words. |

Naive Bayes is chosen for fast and straightforward text classifications. SVM is used where higher accuracy and the ability to handle complex text patterns are required. In the study, they are combined: Naive Bayes works for fundamental analysis and SVM for deeper analysis of the authors' style.

Table 4. Comparative analysis of methods

|

Criterion |

Naive Bayes |

SVM |

|

Learning speed |

Very high |

Slow |

|

Prediction speed |

High |

Medium |

|

Accuracy |

75-80% |

79-81% |

|

Computational complexity |

Low |

High |

|

Support for non-linear solutions |

None |

Yes |

|

Interpretability |

High |

Low |

Naive Bayes is suitable for fast and efficient text analysis, especially when working with large amounts of data. SVM provides high accuracy, especially when using TF-IDF or Word2Vec. The combined use of NB and SVM allows you to achieve a balance between speed and accuracy of author attribution. SVM has a higher computational complexity, which may limit its application on large corpora. The results of this analysis confirm that both algorithms were chosen reasonably based on the characteristics of text data, accuracy and computational efficiency.

-

3.12. Selection and Justification of Means of Solving the Task

It was decided to use the Naive Bayesian classifier.

-

• Description . This algorithm is based on the principles of Bayesian statistics and assumes independence of features among themselves. It calculates the probabilities of each class (author) based on the input features (words, phrases, stylistic elements) and classifies the text by the author with the highest probability.

-

• Rationale . Naïve Bayes is easy to implement and efficient, especially with large amounts of data. It works well even when the assumption of independence of features does not hold.

The Naive Bayes classifier is often used for authorship analysis tasks due to several key advantages, especially compared to other machine learning algorithms such as the support vector method (SVM), random forests, or deep learning (e.g., LSTM, CNN). Here is a detailed rationale for choosing Naive Bayes for this task:

-

1) Learning and forecasting speed:

-

• Naive Bayes is one of the fastest algorithms because it only requires the computation of class probabilities based on independent features. It is significantly faster compared to algorithms that require complex calculations, such as SVMs with non-linear kernels or deep learning.

-

• Other methods . SVM, random forests, and deep learning require more time to train, especially on large or high-dimensional datasets.

-

2) Need for resources:

-

• Naive Bayes . Efficient in terms of memory and computing resources. It is essential when processing large volumes of text data, where other models may require significant computing power.

-

• Other methods . Deep learning, for example, requires significant computing resources and time to train and optimize the model, which may be impractical for some applications.

-

3) Ease of implementation:

-

• Naive Bayes . Easy to implement and configure, even for developers with a basic understanding of machine learning. This makes it an affordable choice for rapid prototyping.

-

• Other methods . SVM and deep learning require a deeper understanding of machine learning theory and parameterization for effective implementation.

Each of these classes follows Python's OOP principles by using abstract methods to ensure that any AnalysisMethod subclass implements the required functionality. This design makes it easy to extend and customize the document analysis methods in the system. In the process of developing the given project, various techniques and tools for the analysis of textual data were carefully selected and substantiated.

Analysis Method class

-

1) Purpose . Serves as a base class for various parsing methods that learn on known documents and predict the labels of unknown documents.

-

2) Attributes

-

• distance (stores the distance function used in subclasses);

-

• _variable_options (dictionary for storing variable options);

-

• _global_parameters (dictionary for storing global parameters);

-

• _NoDistanceFunction_ (a flag indicating the need for a distance function);

-

3) Methods

-

• __init__ (constructor initializes options based on _variable_options);

-

• after_init (placeholder for initialization tasks to be defined in subclasses);

-

• train (an abstract method for training a model using known documents);

-

• analyze (an abstract method for analysing unknown documents);

-

• displayName (abstract method to return the name of the method);

-

• displayDescription (abstract method to return the method description);

-

• set_attr (method for dynamically setting attributes);

-

• validate_parameter (validates parameters against predefined options);

-

• get_train_data_and_labels (prepare data for training and labels);

-

• get_test_data (preparation of test data);

-

• get_results_dict_from_matrix (converts scores to a dictionary of results by class);

-

• setDistanceFunction (sets the distance function);

Centroid Driver class

-

1) Purpose . Implements a centroid-based approach where the mean (centroid) is calculated for each known author of the data.

-

2) Methods :

-

• train (computes the mean by author from the training data);

-

• analyze (analyzes unknown documents by calculating distances to centroids);

-

• displayName (returns the name of the method);

-

• displayDescription (describes the method);

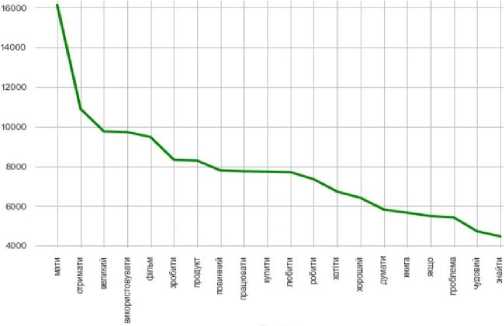

The K Nearest Neighbor class

-

1) Purpose . Implements the K-Nearest Neighbours algorithm for document classification;

-

2) Attributes :

-

• k (number of nearest neighbours considered);

-

• tie_breaker (method for resolving conflicts as 'average' or 'minimum');

-

3) Methods :

• train (stores embeds of known documents);

• analyze (classifies unknown documents based on k-nearest neighbours in the feature space);

• displayName (returns the name of the method);

• displayDescription (describes the method, including details about conflict resolution).

4. Experiments

4.1. Detailed Description of the Dataset used in the Study

The most essential tools used in the work are Count Vectorizer and the naive Bayesian classifier method. Count Vectorizer will be used to efficiently vectorize text, that is, convert text data into numeric vectors that would allow machine learning models to work with them. The Naive Bayesian classifier method was chosen to classify the text based on this vectorized data. Both of these tools would show effective results when working with textual data. The Tkinter library was also analysed to create an interactive user interface. It can provide convenient interaction with the program and make the process of text analysis more accessible to users. In addition, other libraries, such as pandas for data processing, as well as docx and PyPDF2 for working with documents in DOCX and PDF formats, will be used to fully process text data from various sources. All these tools and methods together will form a functional and practical system for text analysis using machine learning methods that can be used in real projects for different purposes and tasks.

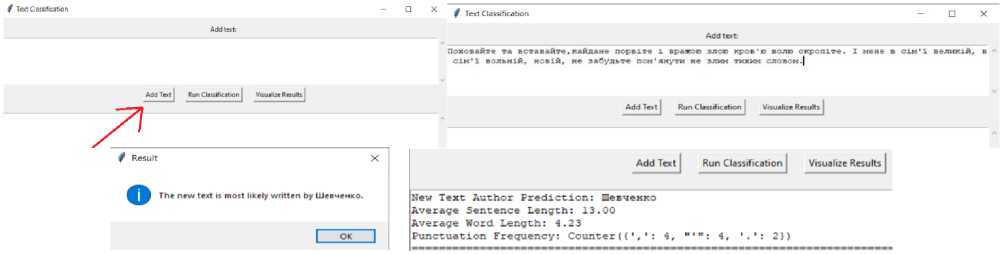

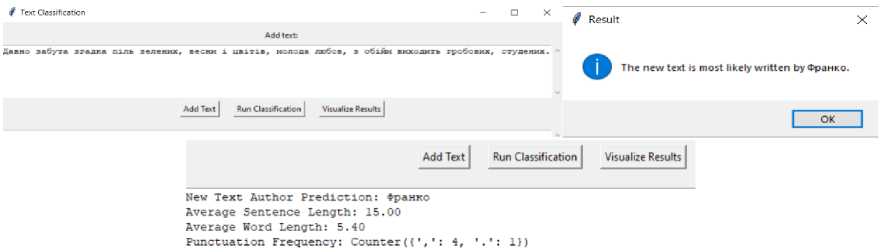

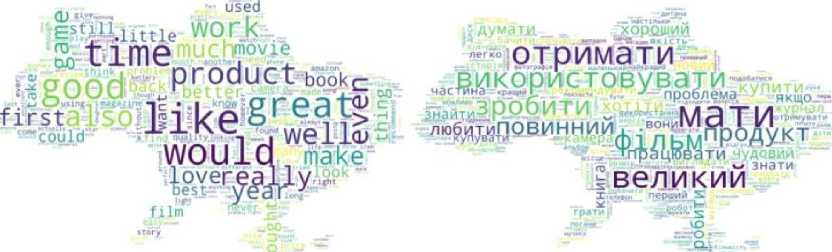

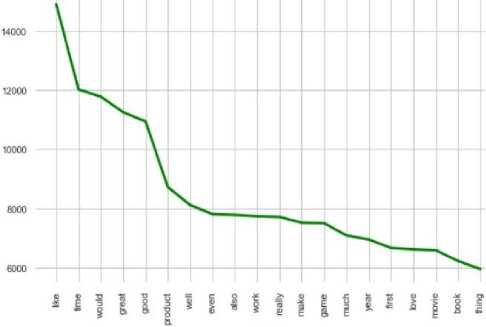

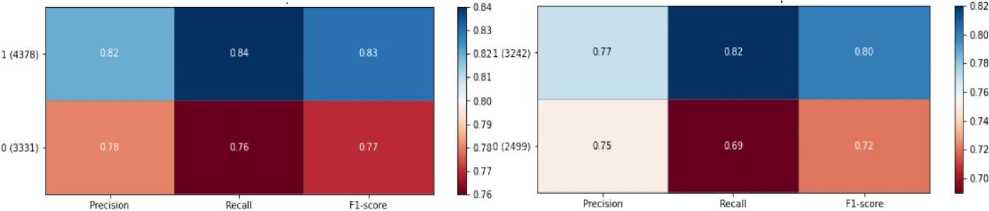

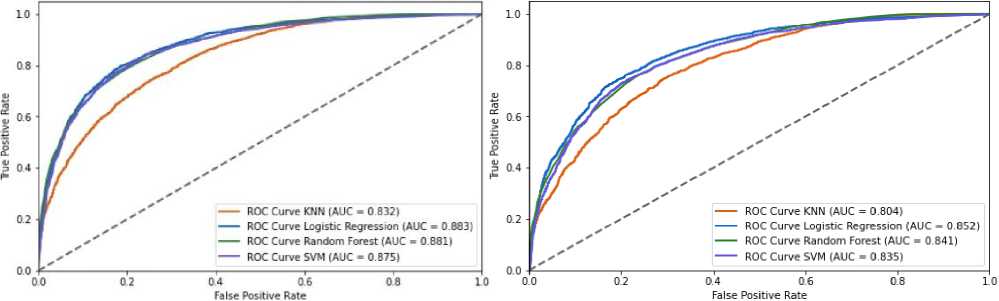

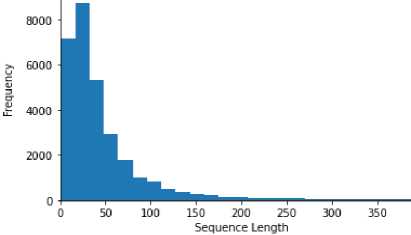

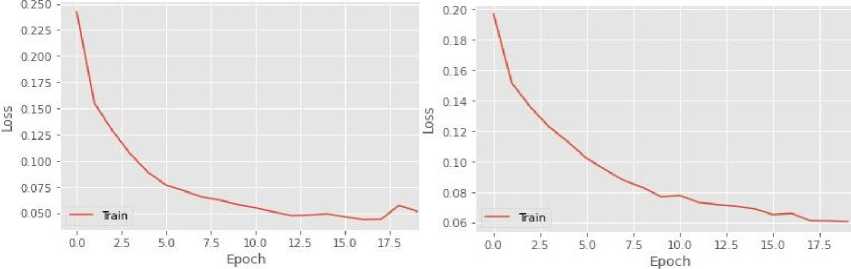

The dataset used in the study contains texts for authorship identification, presented in two languages: English and Ukrainian. The study includes original and translated texts, which makes it possible to test the system in different conditions. Characteristics of the dataset

-

• Data volume. Thirty thousand text fragments were used for training and testing the model. Texts by authors of classical Ukrainian and English literature (Franko, Shevchenko, Kulish, L. Ukrainka). English texts were translated into Ukrainian (via Google Translate API).

-

• Data diversity. Texts of different genres were used: poetry, prose, and journalism. Other periods were also

used: works from the 19th to the 21st centuries. Frequency analysis and analysis of syntactic structures were used to identify authorial styles.

-

• Data quality. Text normalization was performed (removal of stop words and special characters). The maximum sentence length was determined to be 100 words (optimization for the model). Analysis of syntactic features allowed for the improvement of the quality of author attribution.

Stages of data preprocessing

Stage 1. Conversion to a convenient format. XML conversion to DataFrame (convenient format for analysis).

Stage 2. Text preprocessing

Step 1. Removed stop words (a custom list for Ukrainian was used).

Step 2. Tokenization (breaking the text into words).

Step 3. Lemmatization (reducing words to their original form).

Step 1. Using Word2Vec for vectorization (300-dimensional vectors).

Stage 3. Class balancing. Since some authors are represented by a smaller number of texts, augmentation techniques (paraphrasing, synonym selection) were used.

Stage 4. Distribution into samples: 70% training sample, 20% test, 10% validation. Cross-validation to avoid overtraining.

A large corpus of texts (30,000 excerpts) provided a sufficient sample for training models. Text optimization (lemmatization, tokenization) significantly improved the accuracy of the model. The use of Word2Vec and GloVe allowed us to improve the processing of text semantics. The main challenge is that translated texts may differ from the originals, which affects the accuracy of author attribution. This dataset is unique due to its support for the Ukrainian language and the possibility of testing on different genres and periods.

-

4.2. Description of the Created Program Entry

The program uses the natural language for data analysis. A dataset of authors of literature was created independently for the program. We add the link:

The program uses ML algorithms to improve analysis accuracy and supports various text formats. The program identifies unique stylistic features of the text, such as word frequency, syntactic structures, and other language patterns.

-

• Comparison of texts . AuthorshipAnalyzer compares texts with each other to determine similarities and possible joint authorship.

-

• Using machine learning . The program uses machine learning methods to train models on large data sets, which improves the accuracy of authorship determination.

-

• Support for various text formats . AuthorshipAnalyzer supports the analysis of texts in multiple formats, such as TXT, DOC, and PDF, which makes the program versatile for different users.

-

• Intuitive interface . The program has a convenient and easy-to-use interface that allows you to quickly download texts and receive analysis results.

Fields of application

-

• Literary studies . Determining the authorship of unknown or disputed texts.

-

• Journalism . Analysis of texts to confirm the authorship of articles.

-

• Forensics . Use in investigations to identify the author of anonymous texts.

-

• Education . Use to teach students methods of stylometric analysis.

The basis of the work of AuthorshipAnalyzer is a detailed analysis of the stylistic characteristics of the text. The program analyses lexical features such as frequency of word usage, unique word forms and general vocabulary of the text. Syntax analysis allows us to determine grammatical structures, such as the frequency of use of different parts of speech, the length of sentences, and the use of punctuation. In addition, AuthorshipAnalyzer is able to detect unique stylistic patterns of text, such as rhythm, paragraph structure, and use of literary devices. It allows the create a comprehensive text profile that can be used to identify the author.

-

• Comparative analysis of texts . One of the key functions of AuthorshipAnalyzer is the ability to compare texts with each other. The program allows one to determine the degree of similarity between different texts, which can be helpful in detecting possible borrowings or plagiarism. In addition, the program can determine the joint authorship of texts by analysing stylistic intersections between different parts of the text.

-

• Machine learning models . AuthorshipAnalyzer uses machine learning algorithms to improve authorship accuracy. The program trains the model on large data sets, which allows for a wide range of stylistic features to be taken into account. These models can adapt to new data, allowing the program to continuously improve its results.

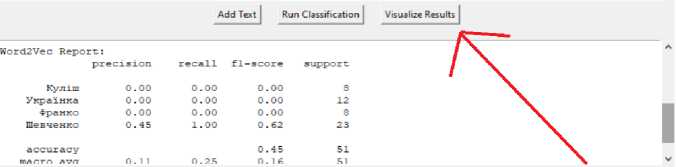

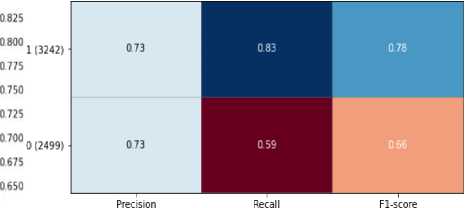

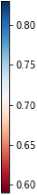

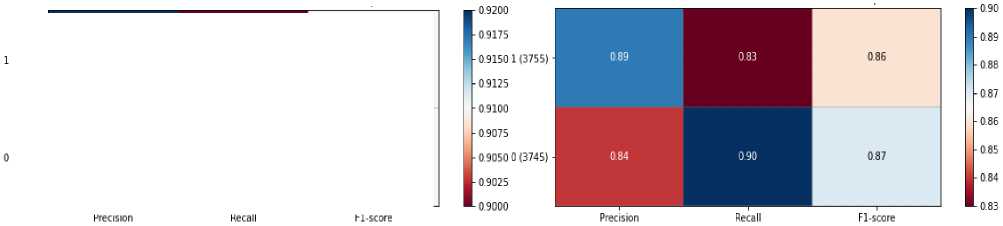

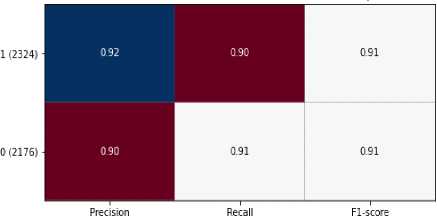

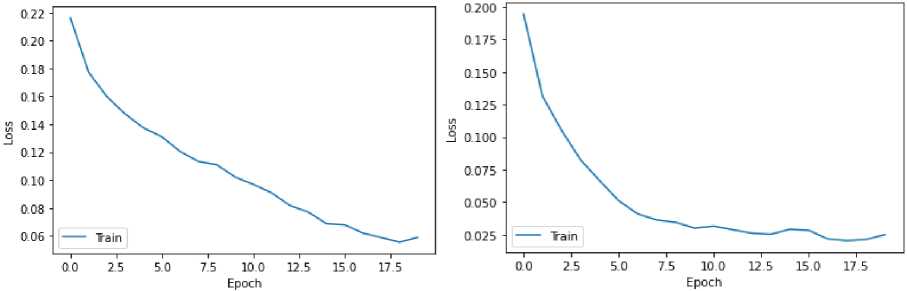

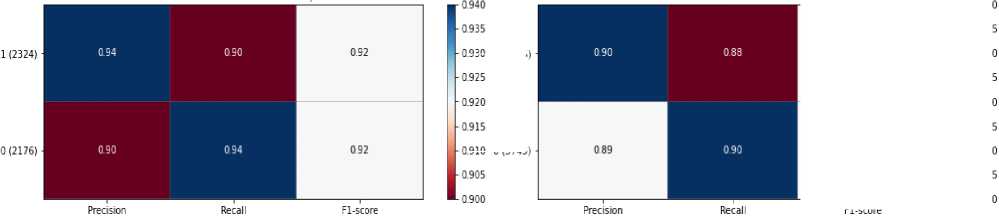

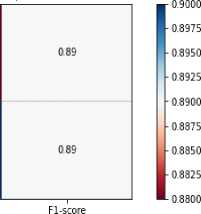

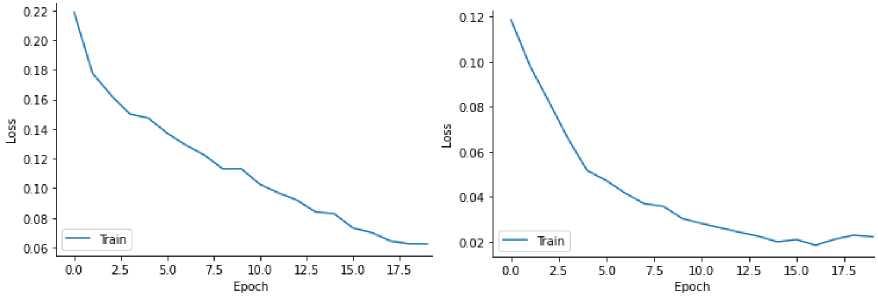

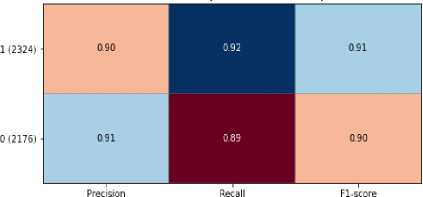

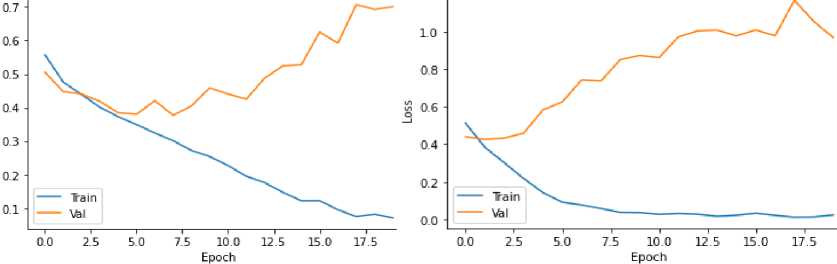

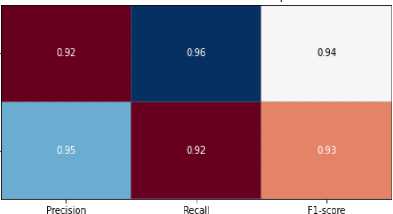

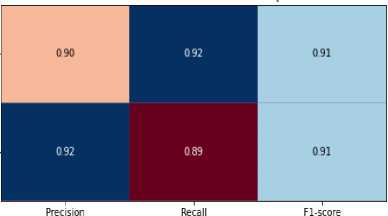

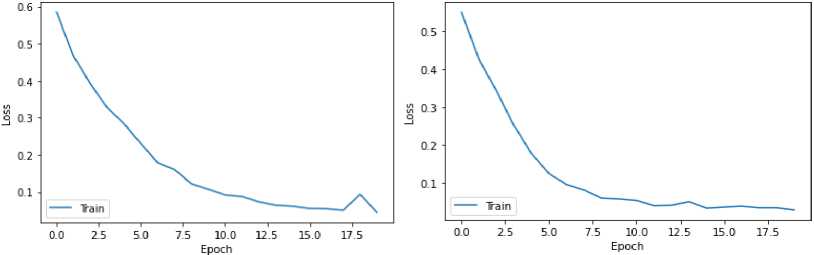

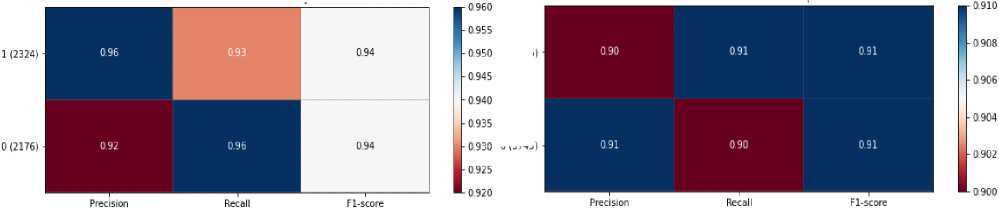

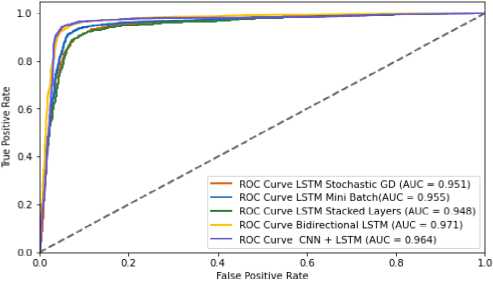

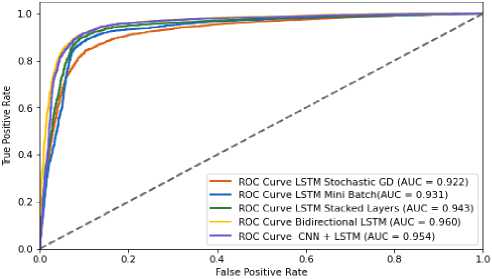

-