PCA based Multimodal Biometrics using Ear and Face Modalities

Authors: Snehlata Barde, A S Zadgaonkar, G R Sinha

Journal: International Journal of Information Technology and Computer Science (IJITCS)

Issue: No. 5, Vol. 6, 2014.

Automatic person identification is an important task in computer vision and related applications. Multimodal biometrics combines two or more modalities. This work implements person identification by fusing the face and ear biometric modalities. A PCA based neural network classifier is used for feature extraction from the images. These features are fused and used for identification. PCA was found to perform better when the modalities were combined. Identification was carried out using Eigen faces, Eigen ears and their features, and the approach was tested on a self-created database.

Biometrics, Principal Component Analysis (PCA), Eigen Faces, Eigen Ears, Euclidean Distance, Hierarchical Matching, Calligraphic Retrieval, Skeleton Similarity

Short URL: https://sciup.org/15012080

IDR: 15012080

Published Online April 2014 in MECS

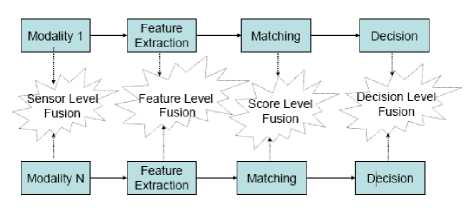

I. Introduction

New applications and services each employ their own authentication method and use different credentials. Biometrics deals with the identification of a person based on biometric traits such as face, fingerprint and iris. However, biometric recognition using a single biometric trait or modality may not be robust, and it has limited ability to withstand spoofing. Biometric technologies can be combined to provide enhanced security over a unimodal system; such a combination is called a multimodal biometric system [1]. A multimodal biometric system integrates multiple sources of information obtained from different biometric sources. Fusion in a multimodal biometric system can take place at various levels, namely the sensor level, feature level, matching score level, decision level and rank level [2-4].

For an identity management system, providing authorized users with secure and easy access to information and services across a wide variety of networked systems is a challenging task. Biometrics refers to the use of physiological or biological characteristics to measure the identity of an individual [5]. These features are unique to each individual and remain unaltered during a lifetime. Many problems arise because of variation in parameters such as scale, lighting and illumination conditions and other environmental parameters [6, 7]. Usually, biometric techniques are developed to give binary decisions that accept authorized persons and reject impostors or unauthorized entities. Two main types of error occur in biometric systems: false acceptance (FA) errors, which let an impostor in, and false rejection (FR) errors, which keep authorized personnel out.

Fig. 1 shows a generalized block diagram of a multimodal biometric system.

Fig. 1: Multimodal biometric system

In this paper, we evaluate and compare the false acceptance rate (FAR) and the false rejection rate (FRR) of multimodal biometric prototypes. The system error of a multimodal biometric system is a combination of the FAR and the FRR of the different biometric technologies. Unlike the case of a single biometric measurement, the combination of several measurements makes it harder to analyze the accuracy of a multimodal biometric system.
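The two error rates can be made concrete with a small sketch. The Python snippet below is an illustration only, not the evaluation code used in this work: it assumes distance-type scores (smaller means more similar), a hypothetical decision threshold and made-up score lists, and the function name `far_frr` is likewise an assumption.

```python
def far_frr(genuine, impostor, threshold):
    """Return (FAR, FRR) in percent for distance-type scores.

    A probe is accepted when its distance is <= threshold.
    genuine:  distances from attempts by authorized persons
    impostor: distances from attempts by impostors
    """
    false_accepts = sum(1 for d in impostor if d <= threshold)
    false_rejects = sum(1 for d in genuine if d > threshold)
    far = 100.0 * false_accepts / len(impostor)
    frr = 100.0 * false_rejects / len(genuine)
    return far, frr


# Hypothetical distances, for illustration only.
genuine_scores = [0.8, 1.1, 1.4, 2.6, 0.9]
impostor_scores = [2.2, 3.1, 1.9, 3.5, 2.8]

far, frr = far_frr(genuine_scores, impostor_scores, threshold=2.0)
print(f"FAR = {far:.1f}%, FRR = {frr:.1f}%")
```

Raising the threshold in such a scheme lowers the FRR at the cost of a higher FAR, which is why the two rates are usually reported together.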

The remainder of this paper is organized as follows: Section 2 describes related research in this field, Section 3 gives a brief description of Principal Component Analysis (PCA), and Section 4 discusses the steps involved in recognizing face and ear images and the implementation of PCA. Section 5 covers the combination of the face matching score and the ear matching score; the proposed method has been tested on a self-created database, and the experimental results are analyzed there. Conclusions are reported in Section 6.

II. Related Research

In recent years, multimodal biometrics has received significant attention from both the research community and the biometrics market, and numerous research contributions can be found in the current literature. Because sensor level fusion consolidates information at a very early stage, it is expected to retain more information than any other level of fusion. The existing literature reports several works on sensor level fusion [8-16]. Sensor level fusion arises in multi-sample systems that capture multiple snapshots of the same biometric. Heo et al. (2004) suggested a weighted image fusion of visible and thermal face images in which the weights are assigned empirically after the images are decomposed using the wavelet transform [17].

Gyaourova et al. (2004) employed a genetic algorithm for feature selection and fusion, in which groups of wavelet features from visible and thermal face images are selected and fused to form a single image; this scheme leaves no scope for weighting [13]. Vatsa et al. (2008) proposed a weighted image fusion using 2ν-SVM, where the weights are assigned by finding the activity level of the visible and thermal face images [18]. Kisku et al. (2009) proposed a sensor level fusion scheme for face and palm print biometrics in which the face and palm print images are decomposed using the Haar wavelet and the averaged wavelet coefficients are fused; an inverse wavelet transform then produces the fused image of face and palm print.

Feature level fusion involves consolidating the evidence presented by two biometric feature sets of the same individual. Compared with match score level or decision level fusion, the feature level therefore carries a richer set of information. Most of the work reported on feature level fusion relates to multimodal biometric systems. Feng et al. (2004) proposed the feature level fusion of face and palm print, in which Principal Component Analysis (PCA) and Independent Component Analysis (ICA) are used for feature extraction [10]. Zhao et al. (2000) implemented a multimodal biometric system using face and palm print at feature level. Here, the Gabor features of face and palm print are obtained individually and then analyzed using a linear projection scheme such as PCA to obtain the dominant principal components of face and palm print separately [6]. Jing et al. (2007) employed the Gabor transform for feature extraction and concatenated the Gabor features to form a fused feature vector; to reduce the dimensionality of the fused vector, a non-linear transformation technique, Kernel Discriminative Common Vectors, is employed [7]. Ross et al. (2003) proposed a multimodal biometric system using face and hand geometry at feature level. The face is represented using PCA and LDA, while 32 distinct features of hand geometry are extracted and concatenated with the face features to form a fused feature vector. Sequential Forward Floating Selection (SFFS) is then employed to select the most useful features from the fused feature space [12].

Most of the work reported on multimodal biometrics is confined to score level fusion. Score level fusion techniques can be divided into three categories: (1) transformation based methods, (2) classifier based methods and (3) density based score fusion. In transformation based methods, the scores obtained from the different modalities are normalized so that they lie in the same range, and weights are calculated according to the individual performance of the modalities. In classifier based score fusion, a pattern classifier is used to indirectly learn the relationship between the vectors of match scores provided by the K biometric matchers. Heo et al. (2004) used classical K-means clustering, fuzzy clustering and median Radial Basis Function (RBF) for fusion at match score level [17]. Sinha et al. (2010) proposed a modified PCA based noise reduction of CFA images [21].
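A transformation based method of the kind described above can be sketched in a few lines. The following Python example is an assumption-laden illustration, not a method taken from any of the cited works: it uses min-max normalization and a weighted sum, and the function names, the weights (0.4 and 0.6) and the score values are all hypothetical.

```python
def min_max_normalize(scores):
    """Map raw match scores linearly onto the range [0, 1]."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def weighted_sum_fusion(score_lists, weights):
    """Fuse per-modality score lists (one score per enrolled identity)."""
    total = sum(weights)
    normalized = [min_max_normalize(s) for s in score_lists]
    n_ids = len(score_lists[0])
    return [
        sum(w * norm[i] for w, norm in zip(weights, normalized)) / total
        for i in range(n_ids)
    ]


# Hypothetical distances of one probe to three enrolled identities.
face_distances = [120.0, 80.0, 200.0]
ear_distances = [15.0, 5.0, 10.0]

fused = weighted_sum_fusion([face_distances, ear_distances], weights=[0.4, 0.6])
# The scores are distances, so the smallest fused value is the best match.
best = min(range(len(fused)), key=fused.__getitem__)
print(fused, best)
```

Normalizing before weighting matters here because the two matchers produce distances on very different scales; without it the modality with the larger raw values would dominate the sum.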

III. Principal Component Analysis

Principal Component Analysis (PCA) is one of the most popular face recognition algorithms. The database for the proposed work contains training subjects and test subjects. To test the biometric system, face or ear images of the test subjects (the same persons as in the training set) were used. The first step is to train the PCA using the training set in order to generate the eigenvectors. The mean image of the training data is computed as:

$$\Psi_{Train} = \frac{1}{M}\sum_{n=1}^{M}\Gamma_n \qquad (1)$$

The mean image is subtracted from each training image:

$$\Phi_i = \Gamma_i - \Psi_{Train}, \qquad i = 1, 2, \dots, M \qquad (2)$$

This set of very large vectors is then subjected to principal component analysis, which seeks a set of M orthonormal vectors $U_n$. The kth vector, $U_k$, is chosen such that the following quantity is a maximum:

$$\lambda_k = \frac{1}{M}\sum_{n=1}^{M}\left(U_k^{T}\Phi_n\right)^2 \qquad (3)$$

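The vectors $U_k$ and scalars $\lambda_k$ are the eigenvectors and eigenvalues, respectively, of the following covariance matrix (CM), where the matrix $A = [\Phi_1\ \Phi_2\ \dots\ \Phi_M]$ collects the mean-subtracted images as columns: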

$$C = \frac{1}{M}\sum_{n=1}^{M}\Phi_n\Phi_n^{T} = AA^{T} \qquad (4)$$

The mean image Ψ of the gallery set is computed and subtracted from each image. The mean-subtracted image $\Phi_i$ is then projected onto the "face space" or "ear space" spanned by the M eigenvectors derived from the training set [4]. This gives:

$$\omega_k = U_k^{T}\Phi_i, \qquad k = 1, \dots, M$$

The Euclidean distance is calculated to classify the new face or ear image as follows:

$$d_k = \lVert \Omega - \Omega_k \rVert$$

where $\Omega$ is the weight vector of the input image, $\Omega_k$ is a vector describing the kth face or ear class, and $d_k$ is the distance between them.

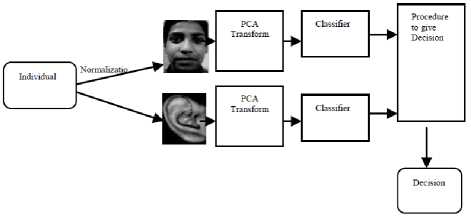

Each face in the training set is transformed into the face space and its components are stored in memory. For an input face, the system computes the Euclidean distance to all the stored faces. Fig. 2 shows a general view of the identification process.

Fig. 2: A general view of the identification process
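The training, projection and matching steps of this section can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: the data are synthetic random "images", the function names are hypothetical, and the eigenvectors are obtained from the small M × M matrix $A^{T}A$ (a standard shortcut for eigenfaces, since $A^{T}Av = \mu v$ implies that $Av$ is an eigenvector of $AA^{T}$) rather than from the full covariance matrix.

```python
import numpy as np


def train_pca(images, num_components):
    """images: (M, p*q) array, one flattened training image per row."""
    mean = images.mean(axis=0)                 # mean image
    A = (images - mean).T                      # columns are the Phi_i
    # Decompose the small M x M matrix instead of the (p*q) x (p*q) one.
    small = A.T @ A / images.shape[0]
    eigvals, eigvecs = np.linalg.eigh(small)   # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:num_components]
    U = A @ eigvecs[:, order]                  # map back to image space
    U /= np.linalg.norm(U, axis=0)             # unit-length eigenvectors
    return mean, U


def project(image, mean, U):
    """Weight vector of a flattened image in the eigen space."""
    return U.T @ (image - mean)


def identify(probe, gallery_weights, mean, U):
    """Index of the nearest stored weight vector and its distance."""
    omega = project(probe, mean, U)
    distances = np.linalg.norm(gallery_weights - omega, axis=1)
    k = int(np.argmin(distances))
    return k, float(distances[k])


# Synthetic stand-in data: 6 "images" of 8 x 8 pixels.
rng = np.random.default_rng(0)
train = rng.random((6, 64))
mean, U = train_pca(train, num_components=4)
gallery = (train - mean) @ U                   # stored weights, shape (6, 4)

probe = train[2] + 0.01 * rng.standard_normal(64)   # noisy copy of image 2
print(identify(probe, gallery, mean, U))
```

The number of retained components (4 here) is an arbitrary choice for the toy data; with M mean-subtracted training images at most M − 1 components carry information.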

IV. Implementation of PCA

Usually, a face or ear image of size p × q pixels is represented by a vector in a p·q-dimensional space. In practice, however, these p·q-dimensional spaces are too large to allow robust and fast object recognition. A common way to address this problem is to use dimension reduction techniques. One of the most popular techniques for this purpose is Principal Component Analysis (PCA).

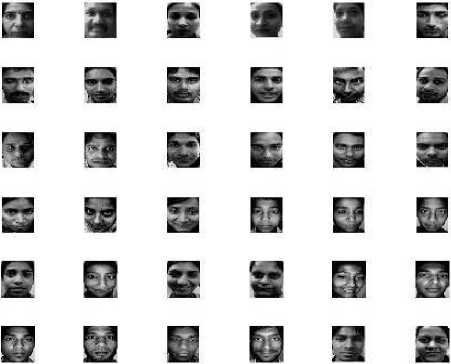

The PCA implementation classifies the faces of 50 people, including both girls and boys. The information extracted from each face is represented by its distances to the 50 people. For each person, a large input vector (the facial image) can be handled by using only its small weight vector in the face space. The first step is to train the PCA using the training set; each face in the training set is then transformed into the face space and its components are stored in memory. Fig. 3 shows the training set of face images. A two-dimensional image is treated as a vector by concatenating its rows or columns. Each classifier has its own representation of the basis vectors of the high-dimensional face vector space. The dimension is reduced by projecting the face vector onto the basis vectors, and the projection is used as the feature representation of each face image [8-12]. In the face recognition system, a feature extraction technique such as PCA is applied to the original input face image first. Fig. 4 shows the normalized training set of face images. Fig. 5 shows the mean image, which is computed by adding all training images and dividing the sum by the number of images.

Training set

Fig. 3: Training set of faces

Normalized Training Set

Fig. 4: Normalized Training set of faces

Mean Image

Fig. 5: Mean image

Fig. 6 shows the Eigen faces, which can be viewed as a feature set. When a particular face is projected onto the face space, its vector in the face space describes the importance of each of those features in the face. The face is expressed in the face space by its Eigen face coefficients (or weights).

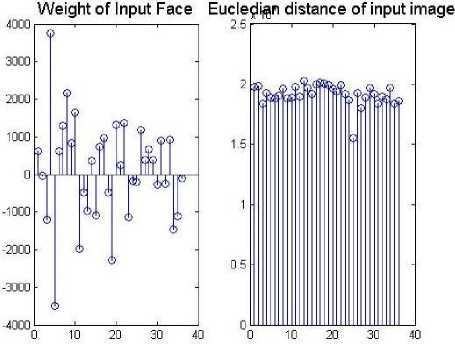

Fig. 8: Weight of input face and Euclidian distance

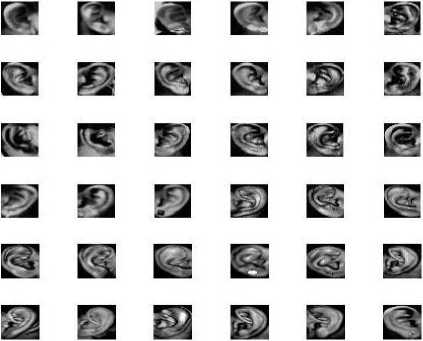

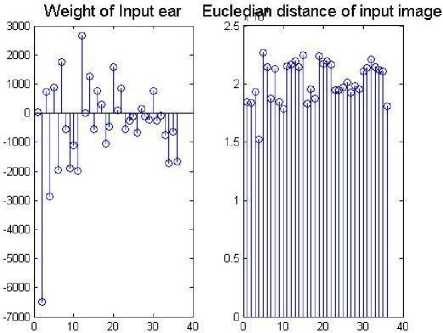

Fig. 9 to Fig. 14 present results for ear images.

Eigenfaces

Training set

Fig. 6: Eigen faces

Fig. 9: Training set of ear images

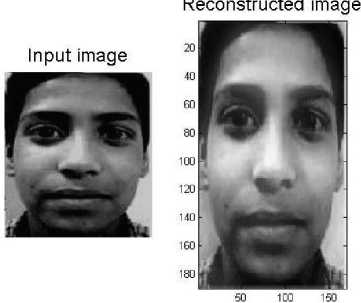

Fig. 7 shows the input image and the reconstructed image. Fig. 8 shows the weights of the input face and the Euclidean distance of the input image; the weights are stored. Acceptance or rejection is determined by a Euclidean distance comparison, and the resulting distances are listed in Table 1.

Fig. 7: Input image and reconstructed image
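Reconstruction from the stored weights and the accept/reject decision can be illustrated as below. This is a sketch only: the data are synthetic, the threshold value is hypothetical (no threshold is stated in the text), the function names are assumptions, and the orthonormal basis is obtained with an SVD of the centred training set, which spans the same space as the leading covariance eigenvectors.

```python
import numpy as np

rng = np.random.default_rng(1)
train = rng.random((5, 36))                    # 5 synthetic 6 x 6 "images"
mean = train.mean(axis=0)

# Orthonormal basis from the SVD of the centred data; keep 3 components.
_, _, vt = np.linalg.svd(train - mean, full_matrices=False)
U = vt[:3].T                                   # shape (36, 3)


def reconstruct(image, mean, U):
    """Return the image rebuilt from its weights, and the weights."""
    weights = U.T @ (image - mean)
    return mean + U @ weights, weights


def accept(image, stored_weights, mean, U, threshold):
    """Accept when the nearest stored weight vector is close enough."""
    _, w = reconstruct(image, mean, U)
    distance = float(np.linalg.norm(stored_weights - w, axis=1).min())
    return distance <= threshold, distance


stored = (train - mean) @ U                    # weights of the training set
recon, w = reconstruct(train[0], mean, U)
print("reconstruction error:", np.linalg.norm(train[0] - recon))
print("decision:", accept(train[0], stored, mean, U, threshold=0.5))
```

Because only three components are kept, the reconstruction is an approximation of the input; the error shrinks as more components are retained.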

Normalized Training Set

Fig. 10: Normalized training set of ears

Fig. 11: Mean image

V. Results

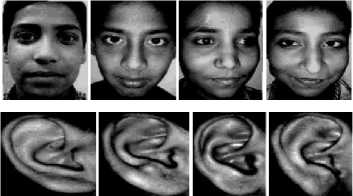

A database of faces and ears was created, consisting of face and ear images of 50 persons captured with a high-quality camera under sufficient light. Fig. 15 shows cropped and resized data samples of faces and ears.

Eigenfaces

Fig. 12: Eigen image set of ears

Fig. 15: Cropped and resized data sample of faces and ears

Reconstructed image

Fig. 13: Input image and reconstructed ear image

Before recognition based on the fusion of ear and face is performed, the input images are pre-processed and normalized. First, the face region or the ear region in the profile face image is cropped. Both face and ear images are filtered and resized to 170×190 pixels. The intensity values of the images are redistributed using histogram equalization, producing an image with approximately uniformly distributed intensity values. The training images of face or ear for each person are used as the gallery, and the test images are used as the probes. The dimension of the training sample space of face or ear is reduced by applying PCA. A minimum-distance classifier is used for classification. The Euclidean distances for faces and ears are shown in Table 1.
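The histogram equalization step can be sketched in plain Python. The snippet is an illustrative assumption rather than the pre-processing code used here: it treats an 8-bit grey-scale image as a list of rows, uses the common cumulative-distribution mapping, and the function name and the tiny 3 × 3 sample image are hypothetical.

```python
def equalize_histogram(image, levels=256):
    """Histogram-equalize a grey-scale image given as a list of rows."""
    pixels = [p for row in image for p in row]
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution of the intensity values.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:                 # constant image: nothing to spread out
        return [row[:] for row in image]

    # Lookup table that stretches the occupied intensities over the full range.
    lut = [
        round(max(c - cdf_min, 0) / (n - cdf_min) * (levels - 1))
        for c in cdf
    ]
    return [[lut[p] for p in row] for row in image]


# Tiny hypothetical low-contrast image.
sample = [
    [52, 55, 61],
    [59, 79, 61],
    [85, 76, 62],
]
for row in equalize_histogram(sample):
    print(row)
```

After equalization the darkest occupied intensity maps to 0 and the brightest to 255, so the low-contrast input is spread over the full grey range before PCA is applied.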

Fig. 14: Weight of input ear and distance of input image

Table 1: Euclidean distance for faces and ears

| Faces | Minimum Euclidean distance for faces | Ears | Minimum Euclidean distance for ears |
|---|---|---|---|
| Pf1 | 1.5488E+04 | PE1 | 1.5343E+04 |
| Pf2 | 1.5485E+04 | PE2 | 1.5307E+04 |
| Pf3 | 1.5483E+04 | PE3 | 1.5274E+04 |
| Pf4 | 1.5504E+04 | PE4 | 1.5265E+04 |
| Pf5 | 1.5488E+04 | PE5 | 1.5257E+04 |
| Pf6 | 1.5495E+04 | PE6 | 1.5252E+04 |
| Pf7 | 1.5492E+04 | PE7 | 1.5244E+04 |
| Pf8 | 1.5515E+04 | PE8 | 1.5247E+04 |
| Pf9 | 1.5496E+04 | PE9 | 1.5247E+04 |
| Pf10 | 1.5502E+04 | PE10 | 1.5254E+04 |
| Pf11 | 1.5511E+04 | PE11 | 1.5241E+04 |
| Pf12 | 1.5514E+04 | PE12 | 1.5260E+04 |
| Pf13 | 1.5516E+04 | PE13 | 1.5249E+04 |
| Pf14 | 1.5512E+04 | PE14 | 1.5224E+04 |
| Pf15 | 1.5517E+04 | PE15 | 1.5229E+04 |
| Pf16 | 1.5519E+04 | PE16 | 1.5254E+04 |
| Pf17 | 1.5507E+04 | PE17 | 1.5241E+04 |
| Pf18 | 1.5516E+04 | PE18 | 1.5253E+04 |
| Pf19 | 1.5486E+04 | PE19 | 1.5256E+04 |
| Pf20 | 1.5501E+04 | PE20 | 1.5260E+04 |
| Pf21 | 1.5502E+04 | PE21 | 1.5248E+04 |
| Pf22 | 1.5485E+04 | PE22 | 1.5182E+04 |
| Pf23 | 1.5468E+04 | PE23 | 1.5175E+04 |
| Pf24 | 1.5502E+04 | PE24 | 1.5163E+04 |
| Pf25 | 1.5498E+04 | PE25 | 1.5159E+04 |
| Pf26 | 1.5501E+04 | PE26 | 1.5192E+04 |
| Pf27 | 1.5497E+04 | PE27 | 1.5152E+04 |
| Pf28 | 1.5494E+04 | PE28 | 1.5152E+04 |
| Pf29 | 1.5506E+04 | PE29 | 1.5104E+04 |
| Pf30 | 1.5512E+04 | PE30 | 1.5214E+04 |
| Pf31 | 1.5502E+04 | PE31 | 1.5227E+04 |
| Pf32 | 1.5500E+04 | PE32 | 1.5223E+04 |
| Pf33 | 1.5501E+04 | PE33 | 1.5191E+04 |
| Pf34 | 1.5505E+04 | PE34 | 1.5197E+04 |
| Pf35 | 1.5498E+04 | PE35 | 1.5175E+04 |
| Pf36 | 1.5509E+04 | PE36 | 1.5239E+04 |

First, the face and ear algorithms are tested individually; the individual weight is found to be 92% for face and 96% for ear, as shown in Table 2. To increase the accuracy of the biometric system as a whole, the individual results are then combined at the matching score level: in the second stage of the experiment, the matching scores from the individual traits are combined and the final weight is calculated. The overall performance of the system increases, giving a combined weight for face and ear of 98.24% with an FAR of 1.06 and an FRR of 0.93.

Table 2: Individual and combined accuracy

| Traits | Algorithm | Weight (%) | FAR | FRR |
|---|---|---|---|---|
| Faces | PCA | 92 | 1.08 | 0.98 |
| Ears | PCA | 96 | 1.17 | 0.99 |
| Combined | PCA | 98 | 1.06 | 0.93 |

VI. Conclusion

Several recent contributions have been made in the field of biometrics, and investigations have been carried out in the domain of multimodal biometrics. Optimal identification results are obtained through a good combination of multiple biometric traits and fusion methods. In this paper, PCA based multimodal biometrics using the ear and face modalities has been presented for a self-created database. Multimodal biometrics resulted in improved performance in terms of recognition accuracy, FAR and FRR.

References

[1] A. Ross, A. K. Jain, et al. Multimodal Biometrics: An Overview [C]. Proceedings of 12th European Signal Processing Conference, 2004: 1221-1224 (Vienna, Austria).

[2] A. Ross, A. K. Jain, et al. Information fusion in biometrics. Pattern Recognition Letters, 2003, 24(13): 2115-2125.

[3] A. K. Jain, A. Ross, S. Prabhakar, et al. An introduction to biometric recognition [J]. IEEE Transactions on Circuits and Systems for Video Technology, 2004, 14(1): 4-20.

[4] M. Turk, A. Pentland, et al. Eigenfaces for recognition [J]. Journal of Cognitive Neuroscience, 1991, 3(1): 71-86.

[5] D. R. Kisku, J. K. Singh, M. Tistarelli, et al. Multisensor biometric evidence fusion for person authentication using wavelet decomposition and monotonic decreasing graph [C]. Proceedings of 7th International Conference on Advances in Pattern Recognition (ICAPR-2009): 205-208 (Kolkata, India).

[6] W. Zhao, R. Chellappa, A. Rosenfeld, P. J. Phillips, et al. Face Recognition [J]. ACM Computing Surveys, 2003, 35(4): 399-458.

[7] X. Y. Jing, Y. F. Yao, J. Y. Yang, M. Li, D. Zhang, et al. Face and palmprint pixel level fusion and kernel DCV-RBF classifier for small sample biometric recognition [J]. Pattern Recognition, 2007, 40(3): 3209-3224.

[8] P. Xiuqin, X. Xiaona, L. Yong, C. Youngcun, et al. Feature fusion of multimodal recognition based on ear and profile face [J]. Proceedings of SPIE, 2008.

[9] A. Rattani, M. Tistarelli, et al. Robust multimodal and multiunit feature level fusion of face and iris biometrics [C]. International Conference of Biometrics, Springer, 2009: 960-969.

[10] G. Feng, K. Dong, D. Hu, D. Zhang, et al. When faces are combined with palmprints: a novel biometric fusion strategy [C]. First International Conference on Biometric Authentication (ICBA), 2004: 701-707.

[11] J. Kittler, E. F. Roli, et al. Decision-level fusion in fingerprint verification. Springer Verlag, 2001.

[12] A. Ross, A. K. Jain, et al. Information fusion in biometrics. Pattern Recognition Letters, 2003, 24(13): 2115-2125.

[13] S. Singh, A. Gyaourova, G. Bebis, Pavlidis, et al. Infrared and visible image fusion for face recognition. Proceedings of SPIE Defense and Security Symposium, 2004, 41: 585-596.

[14] R. Snelick, U. Uludag, A. Mink, M. Indovina, A. K. Jain, et al. Large-scale evaluation of multimodal biometric authentication using state-of-the-art systems [J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2005, 27(3): 450-455.

[15] Y. Wang, T. Tan, A. K. Jain, et al. Combining Face and Iris Biometrics for Identity Verification [C]. Proceedings of 4th International Conference on Audio and Video based Biometric Person Authentication, 2003, 150(6): 402-408 (Guildford, UK).

[16] R. Brunelli, D. Falavigna, et al. Person identification using multiple cues [J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1995, 17(10): 955-965.

[17] J. Heo, S. Kong, B. Abidi, M. Abidi, et al. Fusion of visible and thermal signatures with eyeglass removal for robust face recognition. IEEE Workshop on Object Tracking and Classification Beyond the Visible Spectrum, in conjunction with CVPR-2004, 2004: 94-99 (Washington, DC, USA).

[18] R. Singh, M. Vatsa, A. Noore, et al. Integrated multilevel image fusion and match score fusion of visible and infrared face images for robust face recognition [J]. Pattern Recognition, 2008, 41(3): 880-893.

[19] A. Ross, R. Govindarajan, et al. Feature level fusion using hand and face biometrics [C]. Proceedings of SPIE Conference on Biometric Technology for Human Identification, 2004, 5779: 196-204 (Orlando, USA).

[20] L. Hong, A. K. Jain, et al. Integrating Faces and Fingerprints for Personal Identification [J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1998, 20(12): 1295-1307.

[21] G. R. Sinha, Kavita Thakur, et al. Modified PCA based Noise reduction of CFA images [J]. Journal of Science, Technology & Management, 2010, 1(2): 60-67.