Design of an audio and video editing system based on OpenCV

Authors: Yuehang Song, Borun Chen, Xiaobin Liu, Hu Weijun, Xie Xianyu, Yan Yuqi

Journal: Informatics. Economics. Management (Информатика. Экономика. Управление)

Section: Informatics, computer engineering

Issue: 1 (2), 2022

Free access

With the rapid development of the Internet, audio and video, a new medium through which people perceive the world and communicate with one another, is steadily gaining popularity worldwide. The development of multimedia and artificial intelligence technologies marked a milestone in the maturing of audio and video technology. Short-video platforms in particular are gradually becoming a new online venue for media promotion. During the epidemic especially, audio and video are increasingly valued as a channel for understanding the world. The public places higher demands on the content and presentation of audio and video material. It is therefore particularly important to produce high-quality audio and video that meets the demands of the time, which is impossible without an effective audio and video editing system. In addition, following earlier research and practice, the application of artificial intelligence in the visual domain has matured, including some entertainment-oriented applications. Applying artificial intelligence to video editing can raise editing efficiency, make video content more engaging, and let creators concentrate on developing content instead of spending too much time and energy on editing operations, resulting in higher-quality video. The proposed system uses OpenCV as its core technology together with a front-end stack of JavaScript, React and Electron to implement basic video editing and video filters, along with a user-friendly interactive interface. The basic video editing module and the video filter module are both built on OpenCV. In this project, basic video editing implements panning, scaling and rotation of video, and the video filter module is implemented by modifying the values of the image's RGB channels. Operations on a video can be decomposed into operations on each of its frames, and OpenCV provides the means to implement them. The article closes with the shortcomings identified in the system and an outlook on next research steps and promising directions.

Computer vision, OpenCV, front-end technology, audio and video editing

Short URL: https://sciup.org/14124346

IDR: 14124346 | UDC: 004.4'27 | DOI: 10.47813/2782-5280-2022-1-2-0101-0120


In perceiving the world, human beings rely most on hearing and sight, and sound and image are the most direct means of conveying information. With the progress of technology and the rapid development of the mobile Internet, audio and video have become a new carrier through which people perceive the world and communicate with each other, making communication and interaction more vivid and emotional. The emergence of multimedia technology and the maturing of artificial intelligence technology are milestones for the storage and editing of audio and video. In particular, as network technology matures, short videos, which are pushed to users at high frequency, have gradually become a hot spot of mobile Internet development [1-2], and major companies have launched their own short-video services. Short-video platforms have gradually become a new venue for media publicity and promotion [3-4]. Short-video users in China account for more than 80% of all Internet users, and as a "new species" of video, short video is showing its vitality and vigor.

According to the "2021 Youth Employment and Career Planning Report" released by the People's Data Research Institute of People's Daily Online and Global Youth Vine, self-media is a popular choice for young people starting a side business. As one of the important communication carriers of self-media, audio and video play an irreplaceable role. With the continuous development of science and technology and the improvement of living standards, people's demands on audio and video quality keep rising. At a time when the epidemic is reducing outbound travel, understanding the world through audio and video is receiving more and more attention. It is particularly important to produce quality audio and video that meets the requirements of the times, which requires a practical audio and video editing system. Using deep learning to process audio and video is the icing on the cake: it not only reduces the learning cost and shortens the production cycle of audio and video, but also enriches their content [4].

Current status of audio and video editing system research

With the development of digital technology and the establishment of audio and video compression standards, non-linear audio and video editing systems have replaced traditional linear editing methods, which were time-consuming, repetitive and inefficient [3]. Non-linear editing systems support the user's creative ideas through a "what you see is what you get" approach: they help inspire the creator, let the creator's intention be realized immediately, and are easy to operate, which saves equipment and manpower and improves efficiency. Non-linear audio and video editing systems include video and audio track processing, special effects, subtitling and other functions [7].

FFmpeg was first launched by Fabrice Bellard as a multimedia processing tool that provides solutions for audio and video streaming. Thanks to its extensive codec support, FFmpeg can convert audio and video flexibly and efficiently according to user-specified parameters, and it can also easily separate and combine video and audio streams [21].

Both professional editing software, such as Adobe Premiere Pro, Final Cut Pro and Vegas Pro, and mobile editing tools that have emerged in China, such as Cut Image, Must Cut, and BuGoo Edit, use deep learning technology to improve the efficiency of creation and enrich the creative content [14].

OVERALL ARCHITECTURE DESIGN OF AUDIO AND VIDEO EDITING SYSTEM

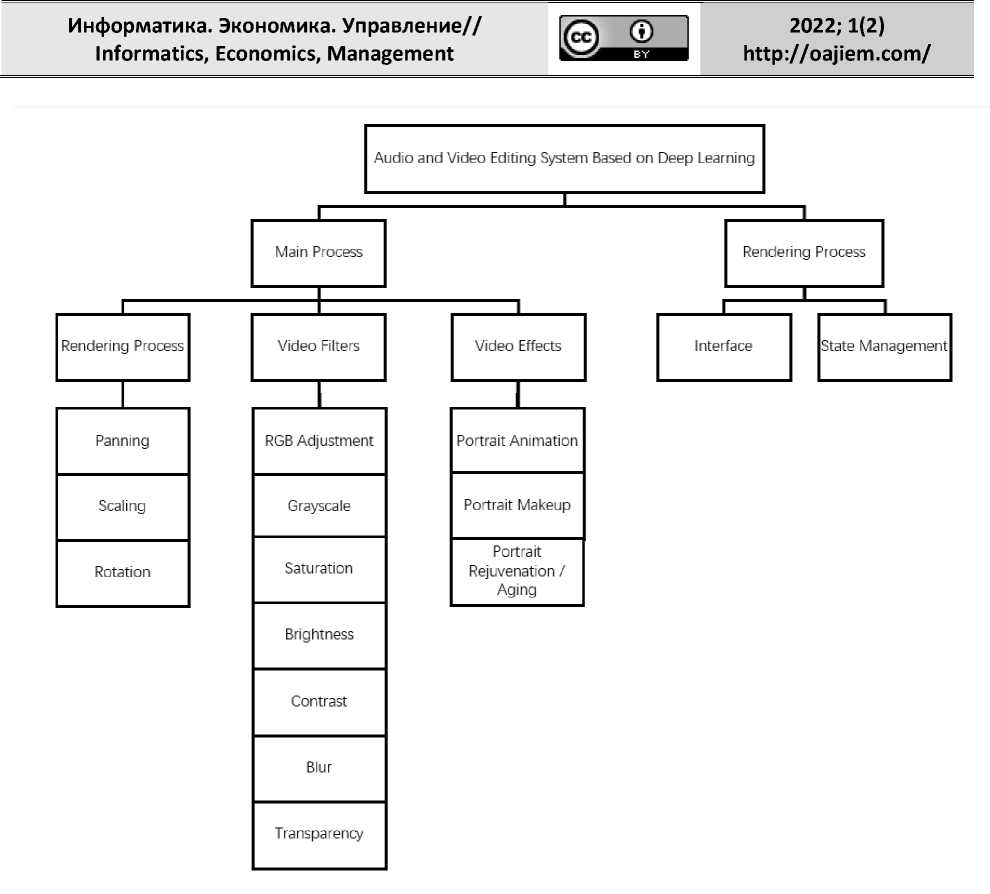

The deep learning based audio and video editing system combines deep learning, OpenCV and a front-end technology stack to realize three video editing modules: basic video editing, video filters and video effects [15].

The basic video editing and video filters are implemented with OpenCV. Basic video editing includes video panning, zooming and rotating [10], while the video filters process the video by adjusting the three RGB channels of the image and also cover grayscale, color saturation, brightness, contrast, blur and transparency. The video effects module implements three effects: Pixel2Pixel-based portrait cartoonization, PSGAN-based portrait makeup, and StyleGAN2-based youthful/aging face generation [23].

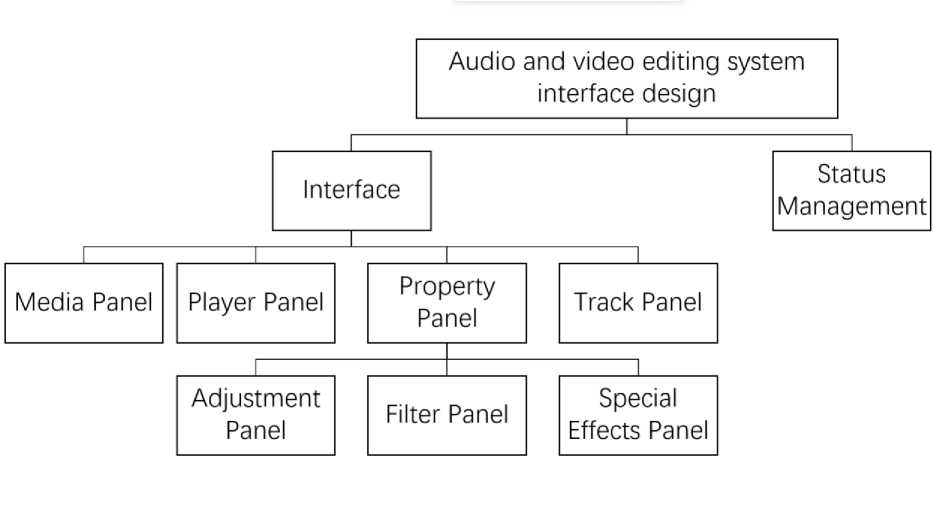

The overall design of this system is shown in Figure 1.

The system consists of a main process, which calls other modules to process the video, and a rendering process, which handles user interaction and updates the system interface. The rendering process manages the interface of the system and the states of its components; when the user manipulates the interface, it changes the component states and triggers an interface update.

Figure 1. System design diagram.

When the video needs to be processed, the rendering process sends a message to the main process describing the operation to be performed. The main process receives the message, calls the specified module to process the video, and notifies the rendering process when the processing is completed [16].
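The request and notification flow described above can be sketched as a small dispatcher. This is only an illustration of the pattern, written in Python for consistency with the other sketches in this text; the actual system uses Electron's inter-process messaging in JavaScript, and the message fields (`id`, `op`, `params`), the `HANDLERS` registry and the placeholder `handle_pan` handler are all hypothetical names introduced here.

```python
from typing import Any, Callable, Dict

# Hypothetical registry: operation name -> function that processes the video.
Handler = Callable[[Dict[str, Any]], Dict[str, Any]]
HANDLERS: Dict[str, Handler] = {}


def register(op: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler under an operation name."""
    def decorator(fn: Handler) -> Handler:
        HANDLERS[op] = fn
        return fn
    return decorator


@register("pan")
def handle_pan(params: Dict[str, Any]) -> Dict[str, Any]:
    # Placeholder: a real handler would call the OpenCV panning module here.
    return {"output": params["input"] + ".pan.mp4"}


def handle_request(message: Dict[str, Any]) -> Dict[str, Any]:
    """Main-process side: look up the requested module, run it, and build
    the completion notice that is sent back to the rendering process."""
    op = message.get("op")
    handler = HANDLERS.get(op)
    if handler is None:
        return {"id": message.get("id"), "status": "error",
                "error": f"unknown operation: {op}"}
    try:
        result = handler(message.get("params", {}))
    except Exception as exc:  # report the failure instead of crashing
        return {"id": message.get("id"), "status": "error", "error": str(exc)}
    return {"id": message.get("id"), "status": "done", "result": result}
```

Keeping the operation name in the message lets new processing modules be added by registering another handler, without changing the dispatch code.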

BASIC VIDEO EDITING AND VIDEO FILTER IMPLEMENTATION

In the system design of this paper, the basic video editing and video filtering are implemented based on OpenCV.

Introduction to OpenCV

OpenCV (Open Source Computer Vision Library) is a cross-platform, open source software library for image processing and computer vision. OpenCV is written in C++, implements many of the general and practical algorithms currently used in image processing and computer vision, and provides programming interfaces for Python, Java, MATLAB and other languages. It is widely used in the design and development of real-time image processing, computer vision, and pattern recognition applications [5].

Basic video editing implementation

Video panning

Video panning is the operation of shifting a video frame to a specified position. The procedure is to read the video, shift each frame with the warpAffine function provided by OpenCV, and finally save the result as a new video.

Figure 2. Horizontal movement operation.

Figure 3. Vertical shift operation.

As shown in Figure 2 and Figure 3, the original image is in the middle. In Figure 2, the image on the left is panned 50% to the left and the image on the right is panned 50% to the right; in Figure 3, the image on the left is panned 50% up and the image on the right is panned 50% down.

Video scaling

Video scaling is the operation of scaling a video frame to a specified size. The procedure is to read the video, use the resize function provided by OpenCV to scale each frame to the specified size, and finally save it as a new video.

Figure 4. Zoom operation.

As shown in Figure 4, the middle is the original image, the left is the image with 50% reduction, and the right is the image with 50% enlargement.

Video rotation

Video rotation is the operation of rotating a video frame by a specified angle. The procedure is to read the video, rotate each frame by the specified angle using the rotation matrix produced by the getRotationMatrix2D function provided by OpenCV, and finally save the result as a new video.

Figure 5. Rotation operation.

As shown in Figure 5, the middle is the original image, the left is the image rotated by 90° counterclockwise, and the right is the image rotated by 90° clockwise.


Video filter implementation

The video filters adjust the RGB channels, grayscale, saturation, brightness and contrast of the image, as well as its blur and transparency.

RGB Channels

To adjust the RGB channels, first split the R, G, and B channels using the split function provided by OpenCV, then adjust each channel separately, and finally merge the modified three channels into one image using the merge function provided by OpenCV.

Figure 6. Adjusting the red channel.

Figure 7. Adjusting the green channel.

Figure 8. Adjusting the blue channel.

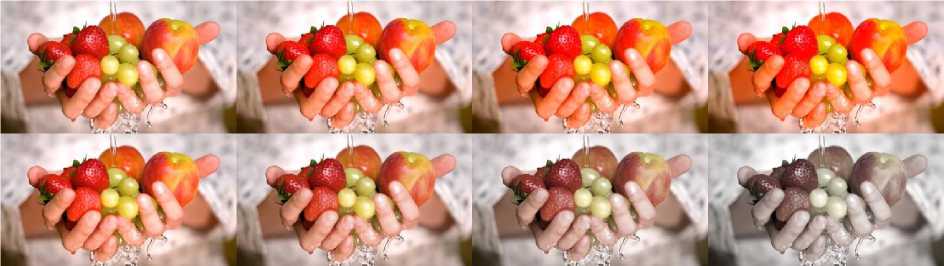

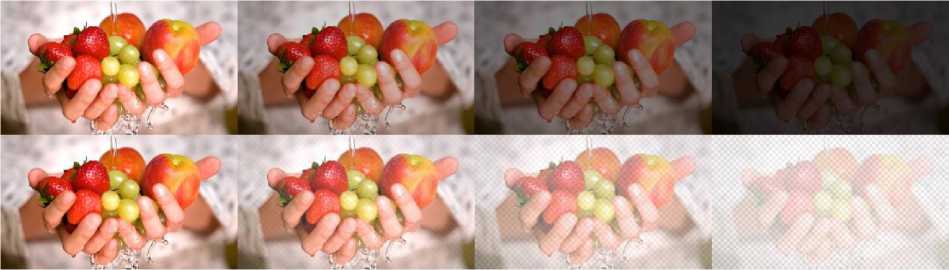

As shown in Figure 6, Figure 7, and Figure 8, the first column is the original image. For Figure 6, in the first row, the second column shows a 20% increase in the red channel, the third column shows a 50% increase in the red channel, and the fourth column shows an 80% increase in the red channel; in the second row, the second column shows a 20% decrease in the red channel, the third column shows a 50% decrease in the red channel, and the fourth column shows an 80% decrease in the red channel. Figure 7 and Figure 8 follow the same layout for the green and blue channels [6-9].

Grayscale

Adjusting the image grayscale is achieved by adjusting the S channel in the HLS color space. The color space of the image is first converted to HLS, the H, L, and S channels are split using the split function provided by OpenCV, the S channel is adjusted, and finally the modified three channels are merged into one image using the merge function provided by OpenCV.

Figure 9. Adjusting Gray Scale.

As shown in Figure 9, among the five images, the first one is the original image, the second one is the image with the gray scale set to 20%, the third one is the image with the gray scale set to 50%, the fourth one is the image with the gray scale set to 80%, and the last one is the image with the gray scale set to 100%, which is the black and white image.

Saturation

Adjusting image saturation is achieved by adjusting the channels in the HLS color space. First, we convert the image color space to HLS and split the H, L, and S channels using the split function provided by OpenCV, then adjust the channels, and finally merge the three modified channels into one image using the merge function provided by OpenCV [10-14].

Figure 10. Adjusting Saturation.

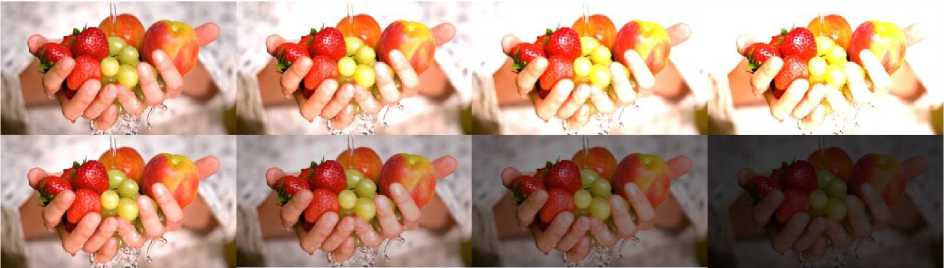

As shown in Figure 10, among the four images in the first row, the first is the original image, the second is the image with a 20% increase in saturation, the third is the image with a 50% increase in saturation, and the last is the image with an 80% increase in saturation; among the four images in the second row, the first is the original image, the second is the image with a 20% decrease in saturation, the third is the image with a 50% decrease in saturation, and the last is the image with an 80% decrease in saturation.

Brightness

To adjust the image brightness, apply the addWeighted function provided by OpenCV to each frame, and finally save the result as a new video.

Figure 11. Adjusting Brightness.

As shown in Figure 11, in the first row, the first image is the original image, the second is the image with 20% increase in brightness, the third is the image with 50% increase in brightness, and the last is the image with 80% increase in brightness; in the second row, the first image is the original image, the second is the image with 20% decrease in brightness [15-17], the third is the image with 50% decrease in brightness, and the last is the image with 80% decrease in brightness.

Contrast

To adjust the image contrast, use the addWeighted function provided by OpenCV to adjust each frame and save it as a new video.

Figure 12. Adjusting Contrast.

As shown in Figure 12, in the first row, the first image is the original image, the second is the image with 20% increase in contrast, the third is the image with 50% increase in contrast, and the last is the image with 80% increase in contrast; in the second row, the first image is the original image, the second is the image with 20% decrease in contrast, the third is the image with 50% decrease in contrast, and the last is the image with 80% decrease in contrast.

Blur

To blur the image, apply the blur function provided by OpenCV to each frame, and finally save the result as a new video.

Figure 13. Blur operation.

As shown in Figure 13, the first one is the original image, the second one is the image with blur set to 20%, the third one is the image with blur set to 50%, and the last one is the image with blur set to 80%.

Transparency

To adjust the transparency of the image, apply the addWeighted function provided by OpenCV to each frame, and finally save the result as a new video.

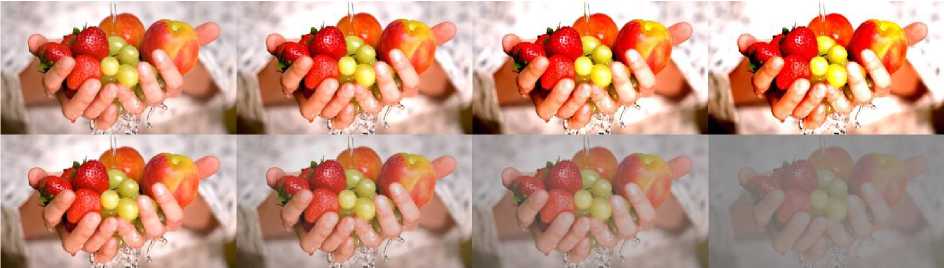

Figure 14. Adjusting Transparency.

As shown in Figure 14, the first column is the original image, the second column is the image with transparency set to 20%, the third column is the image with transparency set to 50%, and the last column is the image with transparency set to 80%. The grid in the second row represents transparency.

DEEP LEARNING BASED AUDIO AND VIDEO EDITING SYSTEM INTERFACE DEVELOPMENT

In the system design of this paper, the system interface is built using the React framework and the desktop application is developed using Electron.

Introduction to React

React is a JavaScript library developed by Facebook, Inc. for building user interfaces quickly. It keeps direct interaction with DOM elements to a minimum by maintaining a virtual representation of the DOM and using a diff algorithm to determine which elements need to be updated. Development starts by building simple components that manage their own state, and then combining these encapsulated components to form more complex user interfaces.

Ant Design is an open source component library for React from Ant Group. It implements a large number of highly reusable components, reducing the cost of development and allowing developers to focus on improving the user experience.

Introduction to Electron

Electron is a framework for building cross-platform desktop GUI applications using JavaScript, HTML, and CSS. It is compatible with Windows, macOS, and Linux, so applications for all three platforms can be built from the same code base. Electron embeds Chromium and Node.js, and its APIs make it possible to build desktop applications in JavaScript. Desktop applications built with Electron include a main process, which is responsible for the main business logic, and a rendering process, which is responsible for rendering updates to the interface [17-19].

Overall design of the audio and video editing system interface

The overall design of the audio and video editing system interface is shown in Figure 15. The interface contains a media panel, a player panel, a property panel and a track panel. The property panel includes an adjustment panel, a filter panel and an effects panel, and the state of all components is managed in a unified manner.

Figure 15. Overall design of audio and video editing system interface.

Introduction to the system interface

The interface is shown in Figure 16.

Figure 16. System Interface.

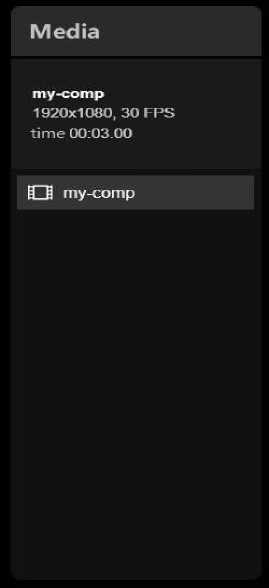

Media Panel

The Media panel is used to display the clips used in this video editing, as shown in Figure 17.

Figure 17. Media Panel.

The panel also shows the resolution, frame rate and duration of the video.

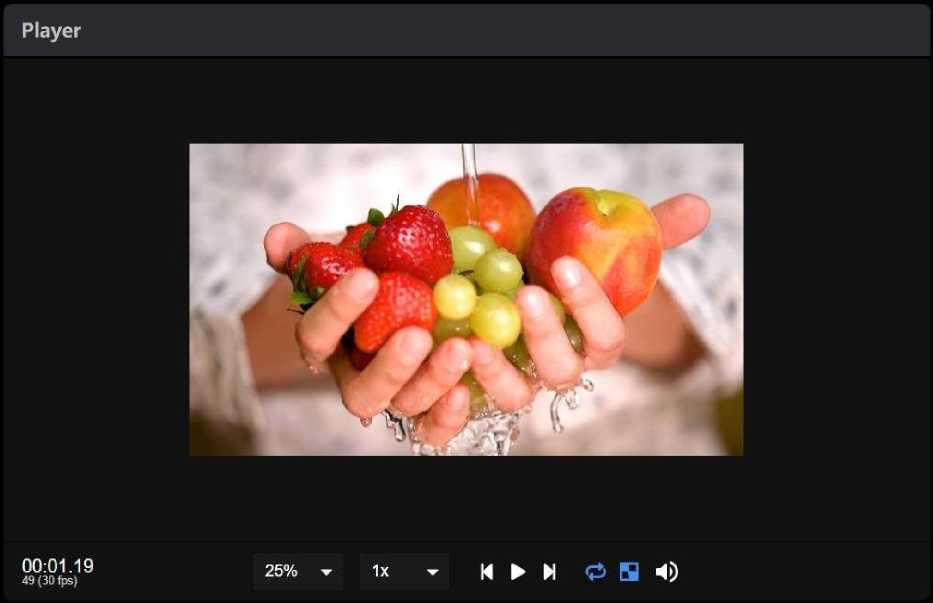

Player Panel

The player panel is used to display the video, as shown in Figure 18.

Figure 18. Player Panel.

The panel also implements a video control bar that lets the user play, pause, loop, fast forward and rewind the video, and set its playback speed and display size.

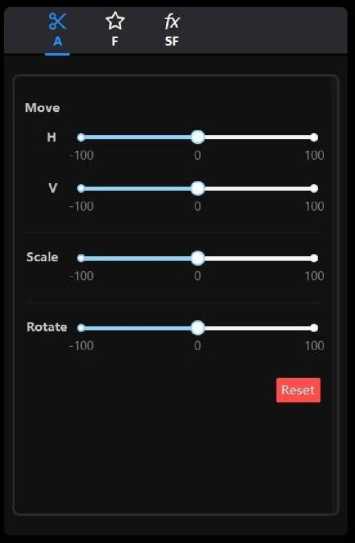

Properties panel

The Properties panel consists of the Adjustments panel, the Filters panel, and the Effects panel.

Adjustment panel

The adjustment panel is used to pan, zoom and rotate the video as shown in Figure 19.

Figure 19. Adjustment Panel.

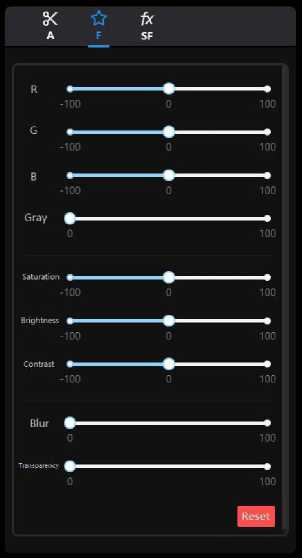

Filter panel

The Filter panel is used to perform RGB channel adjustment operations on the video, as shown in Figure 20.

Figure 20. Filter panel.

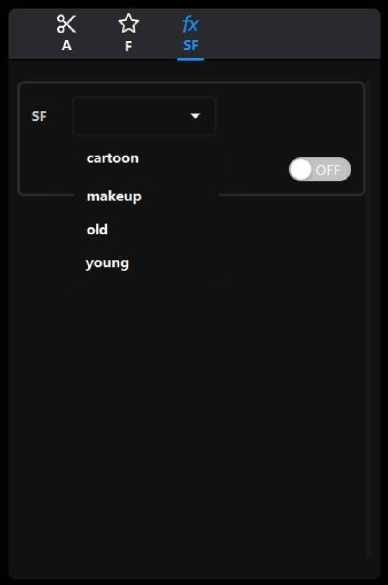

Special effects panel

The Special Effects panel is used to add special effects to the video, as shown in Figure 21.

Figure 21. Special Effects Panel.

Track panel

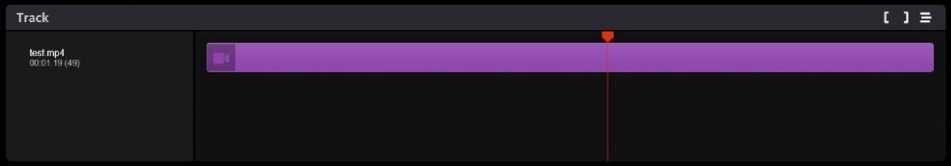

The Tracks panel is used to display the video clips used, as shown in Figure 22.

Figure 22. Track Panel.

In this panel, you can also select the playback interval of the video.
