The Role of Artificial Intelligence in Judicial Systems

Authors: Žaklina Spalević, Srđan Milosavljević, Dobrivoje Dubljanin, Gradimirka Popović, Miloš Ilić

Journal: International Journal of Cognitive Research in Science, Engineering and Education @ijcrsee

Section: Original research

Issue: vol. 12, no. 3, 2024.

The problem addressed in this paper is the application of artificial intelligence in the legal and judicial system. As artificial intelligence (AI) increasingly takes precedence in various areas of human life and work, the legal and judicial systems have not been left out either. For this reason, we first reviewed the current use of artificial intelligence in the judicial system, as well as potential new solutions. Beyond the current state, we focused on the application of artificial intelligence in the legal and judicial system, especially in the domain of decision-making. In this regard, we propose the use of explainable artificial intelligence, which is increasingly adopted in systems where the precision and clarity of the parameters on which a decision is based are of great importance. Considering the advantages of explainable artificial intelligence in the decision-making process, such models appear able to provide the necessary and sufficient conditions for the legal system to accept the use of artificial intelligence in judicial decision-making.

Keywords: Artificial Intelligence, Explainable Artificial Intelligence, AI in Courtroom, Legal System, Decision Making

Short URL: https://sciup.org/170206560

IDR: 170206560 | UDC: 004.421:347.95 | DOI: 10.23947/2334-8496-2024-12-3-561-569

Introduction

The development of computer technologies, recent innovations associated with the use of large data sets, as well as the use of artificial intelligence contribute to changes at all levels of human life and work. Artificial intelligence refers to a collection of scientific approaches, principles, and methods designed to enable machines to replicate human cognitive abilities (Nakad, et al., 2015). Recent advancements aim to enable machines to carry out complex tasks that were once performed by humans. Currently, the primary use of artificial intelligence involves machine learning (ML), which depends on large volumes of data to identify patterns and make predictions, potentially driving significant innovations in institutions and society.

As part of human society, the judicial system and related court processes have also inevitably undergone a series of changes made possible by recently developed technologies (Shi, et al., 2021). Artificial intelligence is becoming an increasingly important topic in legal studies, and researchers emphasize that the judicial system cannot ignore technological advances. The introduction of artificial intelligence into judicial processes is already considered inevitable. However, some experts are concerned about possible access to sensitive data, including personal information, and point out that no system can fully guarantee the security of such data. There are also fears that algorithmic decision-making may violate the constitutional right to a fair trial, especially if the parties cannot challenge the conclusions of artificial intelligence or have no possibility of appeal. Despite these challenges, artificial intelligence shows great potential to facilitate court processes, reduce costs and speed up the resolution of cases, especially simpler ones (Laptev and Feyzrakhmanova, 2024).

© 2024 by the authors. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

From the perspective of the judiciary, the users of artificial intelligence can be divided into three groups: administrators of the law (judges, legislators), practitioners of the law (lawyers) and those subject to the law (people and organizations). Several authors argue that a key issue with using artificial intelligence in the courtroom is that it cannot replace judges, as it cannot replicate essential human qualities such as creativity, empathy, and strategic thinking (Tamošiūnienė, et al., 2024). Although artificial intelligence can communicate and respond to questions, it lacks emotional intelligence; it has only logical-mathematical intelligence, which is insufficient for making complex legal decisions. On the other hand, a number of researchers believe that the correct use of artificial intelligence models can help considerably in the decision-making process, some even to the extent that mechanisms exist to base the entire decision-making process on artificial intelligence.

The contribution of artificial intelligence to the judicial system is still negligible today. Despite the uncertainty that comes with anything new, and the view that artificial intelligence cannot replace the judge in the courtroom, it is undeniable that adequate AI-based tools can greatly facilitate work in the judiciary. The first goal of this paper is to highlight the advantages and disadvantages of some applications of artificial intelligence in the judicial system, as identified through an analysis of the existing scientific literature. In addition, the paper proposes the application of newer artificial intelligence models, such as explainable artificial intelligence, as a step towards one of the main goals: replacing the judge in the decision-making process. Accordingly, the second goal of this paper is to point out the advantages of explainable artificial intelligence models over the artificial intelligence models used so far.

The paper is organized as follows. The first part of the second section presents findings from the scientific literature and court practice through examples of the current use of artificial intelligence, listing the advantages and disadvantages of these applications. The second part of the second section presents the main postulates of explainable artificial intelligence, focusing on its use in the judicial system. The third section presents the proposed model, which is based on the use of explainable artificial intelligence in the judicial system. The fourth section presents the main conclusions.

Materials and methods

Current use of artificial intelligence in judicial systems

At present, the most common applications of artificial intelligence in the judicial system can be divided into three main groups: legal research and analysis, predictive policing, and risk assessment.

One of the basic applications of artificial intelligence in the judicial system is research and data analysis. Legal research and analysis involve the process of gathering, evaluating, and interpreting legal information to support legal decision-making, case preparation, and the practice of law. Legal professionals, such as attorneys, paralegals, and law students, traditionally invested a considerable amount of time and effort in libraries or legal databases to collect information ( Faghiri, 2022 ). With the advent of technology, legal research has shifted from manual methods to computer-assisted methods. Electronic resources allowed faster and more efficient searches, but the process still required human interpretation and analysis.
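
Computer-assisted legal research ultimately comes down to ranking documents by their relevance to a query. The following is a minimal sketch of that step, assuming a toy corpus of case summaries (the case names and texts are hypothetical) and classic TF-IDF weighting with cosine similarity; it is not the method of any particular legal research product.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_index(docs: dict[str, str]) -> tuple[dict[str, dict[str, float]], dict[str, float]]:
    """Return a TF-IDF vector per document together with the IDF table."""
    tokenized = {name: tokenize(text) for name, text in docs.items()}
    n_docs = len(docs)
    # Document frequency: the number of documents in which each term occurs.
    df = Counter(term for tokens in tokenized.values() for term in set(tokens))
    # Smoothed IDF: rare terms weigh more, terms found everywhere still weigh 1.
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}
    vectors = {
        name: {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        for name, tokens in tokenized.items()
    }
    return vectors, idf


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, docs: dict[str, str]) -> list[tuple[str, float]]:
    """Rank documents by their cosine similarity to the query, best first."""
    vectors, idf = build_index(docs)
    # Query terms that never occur in the corpus are ignored.
    query_vec = {t: tf * idf[t] for t, tf in Counter(tokenize(query)).items() if t in idf}
    scores = [(name, cosine(query_vec, vec)) for name, vec in vectors.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical case summaries, invented for illustration only.
cases = {
    "Case A": "Tenant sued landlord for unpaid security deposit after the lease ended.",
    "Case B": "Defendant appealed a theft conviction citing unlawful search of the vehicle.",
    "Case C": "Landlord evicted tenant for breach of the lease and unpaid rent.",
}

for name, score in search("tenant lease deposit dispute", cases):
    print(f"{name}: {score:.3f}")
```

Case A ranks first because it shares the rare term "deposit" as well as "tenant" and "lease", while Case B shares no query term and scores zero. A real system would need much more than this, for example handling of legal citations, synonyms, and multilingual sources.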

The second application of artificial intelligence in the judicial system is predictive policing. Predictive policing is at the forefront of law enforcement innovation, leveraging advanced analytics and artificial intelligence to enhance crime prevention and resource allocation (Berk, 2021). This approach moves beyond traditional reactive strategies, aiming to forecast and proactively address potential criminal activity. At its core, predictive policing employs machine learning algorithms to analyze historical crime data, identifying patterns and trends that may indicate where future incidents are likely to occur (Storbeck, 2022). This data-driven approach allows law enforcement agencies to optimize their resources and deploy officers more effectively to areas with higher predicted crime rates.

Risk assessment, the third large group of AI applications, uses machine learning algorithms to analyze various factors and predict the likelihood that a defendant will reoffend or fail to appear in court. Predictive algorithms are a key aspect: they analyze historical data, including criminal records, demographics, and socio-economic factors, to generate risk scores. These scores are intended to help judges and parole boards make more informed decisions about bail, sentencing, and parole. Because the artificial intelligence systems used in risk assessment depend largely on data to detect patterns and correlations, ensuring the quality and representativeness of that data is crucial to avoid reinforcing existing biases and disparities within the criminal justice system (Souza, Amilton and Nascimento, 2022).

The applications within these three large groups rely on different tools, and the neural networks behind them can vary considerably. The neural networks most commonly used in applications for the judicial system, together with their use cases, are given in Table 1. Some of the most important advantages and disadvantages of using artificial intelligence in the judicial system are given in Table 2.
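
To make the notion of a risk score concrete, here is a minimal sketch of a logistic scoring model. The factors, coefficients, and band thresholds are hypothetical and are not taken from any deployed risk-assessment instrument; the sketch only shows how historical factors can be combined into a single score that a judge or parole board might see.

```python
import math
from dataclasses import dataclass


@dataclass
class Defendant:
    prior_convictions: int
    prior_failures_to_appear: int
    age: int


# Hypothetical coefficients for illustration only. A real instrument would
# estimate them from historical data and audit them for bias; protected
# attributes are deliberately left out of this toy feature set.
INTERCEPT = -2.0
WEIGHTS = {
    "prior_convictions": 0.35,
    "prior_failures_to_appear": 0.6,
    "age": -0.03,
}


def risk_score(d: Defendant) -> float:
    """Combine the factors linearly and squash the sum into (0, 1) with the logistic function."""
    z = INTERCEPT + sum(w * getattr(d, field) for field, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def risk_band(score: float) -> str:
    """Map the continuous score to a coarse band (thresholds are hypothetical)."""
    if score < 0.3:
        return "low"
    if score < 0.6:
        return "medium"
    return "high"


for d in (Defendant(0, 0, 40), Defendant(4, 2, 25), Defendant(8, 3, 20)):
    s = risk_score(d)
    print(d, f"score={s:.2f}", risk_band(s))
```

Even in this toy form, the score is only as good as the data behind the coefficients. Omitting protected attributes does not by itself remove bias, because other factors can act as proxies for them, which is why data quality and representativeness matter so much in risk assessment.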

Table 1. Neural networks that can be used in applications for the judicial system

| Neural network | Use case |
|---|---|
| Recurrent neural networks | Predicting case outcomes based on historical data or analyzing the evolution of legal arguments over time |
| Long short-term memory networks | Document classification, legal language translation, and context-driven analysis of case law |
| Convolutional neural networks | Classifying legal documents, extracting features, or identifying entities (such as parties or legal statutes) from case files |
| Graph neural networks | Mapping relationships between legal precedents or analyzing networks of legal citations |
| Feedforward neural networks | Predicting the likelihood of crime occurrences based on historical data such as time, location, and type of crime |
| Bayesian neural networks | Providing probabilistic assessments of crime predictions to help law enforcement understand the uncertainty associated with different forecasts |
| Transformers | Enhancing crime prediction models by incorporating context and temporal features relevant to crime occurrences |
| Autoencoders | Detecting anomalies in criminal activity data or identifying cases that deviate from typical patterns |

Table 2. Advantages and disadvantages of applying artificial intelligence in tools for legal research and analysis, predictive policing, and risk assessment in criminal justice

| Advantages | Disadvantages |
|---|---|
| Efficiency and speed | Reliability and trust issues |
| Accuracy and consistency | Complexity and understanding |
| Enhanced search capabilities | Privacy and confidentiality concerns |
| Cost-effectiveness | Integration and adoption challenges |
| Comprehensive data analysis | Legal and ethical considerations |
| Crime prevention and resource allocation | Bias and discrimination |
| Data analysis and pattern recognition | Privacy and civil liberties concerns |
| Improved public safety | Reliability and accuracy |
| Enhanced investigations | Community trust and relations |
| Rapid processing | Bias and fairness |
| Objective analysis | Transparency and accountability |
| Enhanced predictive accuracy | Over-reliance on technology |
| Resource optimization | Privacy and ethical concerns |
| Improved public safety | Implementation challenges |

Integrating these neural network models into legal research and court practice can enhance efficiency, improve accuracy, and support more informed decision-making in the judicial process. However, using neural networks for different purposes in the judicial system requires careful consideration of ethical implications, such as potential biases in the data and the impact of predictions on communities. Effective models should be transparent, explainable, and subject to oversight to minimize adverse outcomes. To achieve this, the models must be trained carefully to avoid bias and ensure fairness in the legal context.

Explainable artificial intelligence

Given the limitations of applying artificial intelligence in the judicial system described above, some of the biggest problems are the transparency of the process itself and the comprehensibility of the results. To address this issue, explainable artificial intelligence (XAI) techniques have recently been introduced. Initially applied in various other fields, explainable artificial intelligence has also found its place in law and judicial practice. These techniques aim to develop machine learning models that strike a good balance between interpretability and accuracy. By applying explainable artificial intelligence principles, it is possible to create white-box or gray-box machine learning models that are interpretable by design while maintaining high accuracy. Alternatively, when white-box or gray-box models cannot achieve an acceptable level of accuracy, black-box models can be made at least minimally interpretable. Explainable artificial intelligence techniques are essential for understanding the decision-making processes of neural network models and for ensuring that their outcomes are comprehensible to humans (Sajid, et al., 2023).
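
The difference between an interpretable-by-design (white-box) model and a black-box model can be illustrated with a deliberately simple example. The sketch below assumes a hypothetical linear model for estimating case duration in days; the baseline, features, and coefficients are invented. Because the prediction is just a sum of per-feature contributions, the explanation shown to the user is the model itself rather than a post-hoc approximation.

```python
# Hypothetical white-box model for estimating case duration in days.
# Baseline and coefficients are invented for illustration only.
BASELINE_DAYS = 90.0
COEFFICIENTS = {
    "hearings": 20.0,        # days added per scheduled hearing
    "parties": 10.0,         # days added per party to the proceedings
    "expert_witness": 45.0,  # days added when an expert report is required (0 or 1)
}


def predict_with_explanation(case: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the prediction and each feature's exact contribution to it."""
    contributions = [(name, coef * case.get(name, 0.0)) for name, coef in COEFFICIENTS.items()]
    prediction = BASELINE_DAYS + sum(value for _, value in contributions)
    # Report the most influential factors first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return prediction, contributions


estimate, reasons = predict_with_explanation({"hearings": 3, "parties": 2, "expert_witness": 1})
print(f"Estimated duration: {estimate:.0f} days (baseline {BASELINE_DAYS:.0f})")
for feature, days in reasons:
    print(f"  {feature}: {days:+.0f} days")
```

For this hypothetical case the estimate is 215 days, and the printed breakdown (hearings +60, expert witness +45, parties +20) adds up exactly to the difference from the 90-day baseline. A black-box model would need an additional explanation technique to produce a comparable breakdown, and that breakdown would typically only approximate the model's behaviour.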

Explainable artificial intelligence is increasingly being integrated into judicial systems to improve transparency, accountability, and fairness in legal processes. Here are some key points regarding its use:

• Enhanced Decision Making: Explainable artificial intelligence can assist judges and legal professionals by providing data-driven insights and recommendations while ensuring that the rationale behind those recommendations is clear and understandable.

• Transparency: Explainable artificial intelligence helps demystify the decision-making process of AI systems, allowing legal professionals to understand how certain conclusions were reached. This transparency is crucial in the judicial context, where decisions can significantly affect individuals' lives.

• Bias Detection: Explainable artificial intelligence techniques can be employed to analyze and identify potential biases in judicial decisions or within the AI algorithms themselves. Once these biases are understood, steps can be taken to mitigate unfair outcomes.

• Legal Research: Explainable artificial intelligence can accelerate legal research by summarizing case law, providing relevant precedents, and suggesting legal arguments while explaining the logic behind its suggestions.

• Predictive Analytics: Courts may use explainable artificial intelligence to predict case outcomes, helping to manage caseloads and allocate resources more efficiently. However, it is vital to ensure that these predictions are made transparently and do not inadvertently reinforce existing biases.

• Public Trust: As artificial intelligence technologies are integrated into the judiciary, explainable artificial intelligence can enhance public trust by offering clear explanations for AI-driven decisions. This helps users understand how and why automated systems reach specific conclusions.

• Compliance with Regulations: Many jurisdictions are focusing on the ethical implications of artificial intelligence, and the transparency offered by explainable artificial intelligence can help meet regulatory requirements concerning fairness and accountability.

• Training and Development: Judges and legal practitioners can benefit from explainable artificial intelligence tools that provide educational resources and training, allowing them to better understand both the capabilities and the limitations of AI systems.
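
As an illustration of the bias-detection point above, the following sketch compares how often a model flags cases as high risk in different groups. It assumes a hypothetical audit sample with anonymized group labels and uses a simple rate-ratio check rather than any specific XAI library; the 0.8 threshold is the "four-fifths" rule of thumb from US employment-discrimination practice, used here purely for illustration.

```python
from collections import defaultdict


def flag_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of cases the model flagged (e.g. as high risk) within each group."""
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical audit sample: (group label, flagged as high risk by the model).
sample = [("A", True)] * 30 + [("A", False)] * 70 + [("B", True)] * 50 + [("B", False)] * 50
rates = flag_rates(sample)
ratio = disparity_ratio(rates)
print(rates, f"ratio={ratio:.2f}")
if ratio < 0.8:  # "four-fifths" rule of thumb, used only as an illustrative threshold
    print("Potential disparity: review the features and training data")
```

In this sample, group A is flagged in 30% of cases and group B in 50%, giving a ratio of 0.6. Such a check only signals a disparity; explaining where it comes from, for example which features drive the difference, is where explainable artificial intelligence techniques come in.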

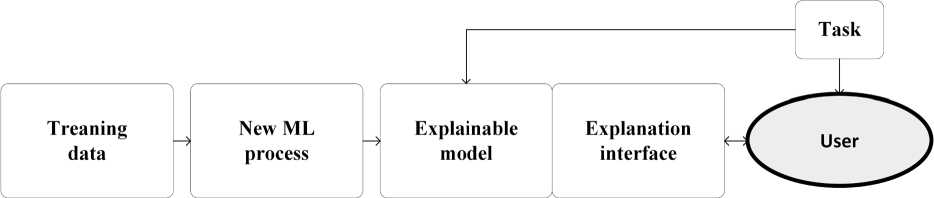

Explainable artificial intelligence is one of the key components of current research programs that are expected to enable third-wave AI systems. In this phase, machines are designed to understand the context and environment in which they operate and, over time, to build explanatory models that allow them to characterize real-world phenomena. The resulting explainable artificial intelligence concept is shown in Figure 1.

Figure 1. Explainable artificial intelligence concept

If the user is viewed as the party to whom the model delivers a decision derived from the input data set, several advantages of explainable artificial intelligence models over traditional artificial intelligence models can be identified. A comparative analysis is given in Table 3.

Table 3. Comparison of current artificial intelligence models and explainable artificial intelligence models from the user's perspective

| Current artificial intelligence approach | Explainable artificial intelligence |
|---|---|
| The user does not know why the model does something | The user understands why |
| The user does not know why the model did not apply something else | The user understands why not |
| The user does not know when the model has succeeded | The user knows when the model succeeds |
| The user does not know when the model fails | The user knows when the model fails |
| The user does not trust the obtained results | The user trusts the obtained results |
| The user does not know how to correct errors and improve results | The user knows why the model produced an error |

When tools and models based on the principles of artificial intelligence are applied in the judicial system, the most important thing is to gain the trust of judges and other participants in the process in the results obtained. The results themselves must therefore be as accurate as possible. However, for decisions made in court proceedings, the participants must also have the greatest possible trust in the artificial intelligence model itself. This is why applying an explainable artificial intelligence model brings benefits in terms of trust: the explainability of the model and its transparency during training, as well as during prediction or decision-making, help overcome the barrier of mistrust and lack of privacy.

Results

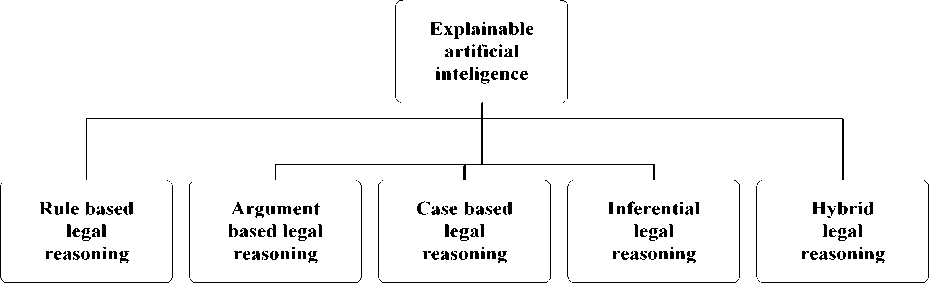

By reviewing the available literature on applying explainable artificial intelligence methods in various fields, we singled out methods that are equally applicable in judicial practice (Richmond, 2023). These methods can be used both to process large data sets and assist in processing case materials, and to support decision-making, which creates the conditions for applying artificial intelligence to reach a decision in a judicial process. For well-known ethical reasons, such a decision would only assist the judge, who would remain free to adopt it in full, in part, or not at all. The selected methods of explainable artificial intelligence are shown in Figure 2.

Figure 2. Classification of explainable artificial intelligence models for legal and judicial purposes

Each of these models can be further broken down into different types of frameworks applicable in specific cases. Rule-based legal reasoning remains the most common form of legal AI system. These systems model deductive reasoning by applying a rule of law to a specific problem to derive an answer, A; the system outputs A on the basis of the legal principle established by the relevant authority. The core of legal reasoning involves determining which rules should be applied and how they should be interpreted (Mowbray et al., 2023). Rule-based systems consist of three key components: a set of rules (rule base), a fact base (knowledge base), and a rule interpreter (inference engine). The rules, which reflect the content of knowledge sources, are matched against the facts by the inference engine to derive conclusions. The reasoning process relies on a series of “if–then” rule statements, which are used to express specific patterns in a given domain, such as legal norms (El Ghosh et al., 2017). One such system, RuleRS, was first demonstrated in a case study based on an online child care management system, ChildSafeOMS, to automate the early identification of children at risk. Its authors claim that RuleRS provides an efficient and flexible solution to this problem by using defeasible inference (Richmond, 2023).
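
The three components named above (rule base, fact base, and inference engine) can be sketched as follows. This is a minimal forward-chaining engine under simplifying assumptions: the rules and facts are hypothetical and only loosely modelled on a theft offence, and inference is strict and monotonic, unlike the defeasible inference used by RuleRS. The trace of fired rules doubles as the explanation of the conclusion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset[str]  # "if" part: facts that must all be known
    conclusion: str             # "then" part: fact added when the rule fires


def forward_chain(rules: list[Rule], facts: set[str]) -> tuple[set[str], list[str]]:
    """Inference engine: fire rules until no new fact is derived; keep a trace."""
    known = set(facts)  # working copy of the fact base
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(f"{rule.name}: {sorted(rule.conditions)} -> {rule.conclusion}")
                changed = True
    return known, trace


# Hypothetical rule base, loosely modelled on a theft offence; not real statute text.
rule_base = [
    Rule("R1", frozenset({"took_property", "without_consent"}), "unlawful_taking"),
    Rule("R2", frozenset({"unlawful_taking", "intent_to_deprive"}), "theft"),
]
fact_base = {"took_property", "without_consent", "intent_to_deprive"}

conclusions, explanation = forward_chain(rule_base, fact_base)
print("theft" in conclusions)
for step in explanation:
    print(step)
```

If the fact intent_to_deprive is removed from the fact base, the trace ends after R1, so a reader can see that the conclusion theft was never reached and which rule could not fire.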

Argument-based legal reasoning is one of the most common reasoning methods in the legal domain. It is built around the construction of arguments and counter-arguments, followed by the selection of the most acceptable among them. Argumentation, as opposed to deduction, is an appropriate mode of reasoning with inconsistent knowledge, since it is based on the construction and comparison of arguments. It allows reasoning under uncertainty and can identify solutions when confronted with conflicting information. In particular, this approach should make it possible to express the reasons supporting a putative fact in the form of arguments, and to combine these arguments to evaluate the level of certainty.

Argumentation has strong explanatory capabilities, as it can translate the decision of an AI system into an argumentation process that shows step by step how the system reaches its result (Vassiliades et al., 2021).
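
A common formal basis for this kind of reasoning is Dung's abstract argumentation framework, in which arguments attack one another and an argument is accepted if every attack on it is defeated. The sketch below computes the grounded (most skeptical) extension; the choice of this semantics and the contract dispute in the example are assumptions made for illustration, not taken from the cited survey.

```python
def grounded_extension(arguments: set[str], attacks: set[tuple[str, str]]) -> set[str]:
    """Least fixed point of Dung's characteristic function.

    Start from the empty set and repeatedly accept every argument whose
    attackers are all attacked by an already accepted argument.
    """
    attackers = {a: {x for (x, y) in attacks if y == a} for a in arguments}
    accepted: set[str] = set()
    while True:
        defeated = {y for (x, y) in attacks if x in accepted}
        defended = {a for a in arguments if attackers[a] <= defeated}
        if defended == accepted:
            return accepted
        accepted = defended


# Hypothetical dispute over a contract (labels invented for illustration):
# A: "The contract is valid because both parties signed it."
# B: "The contract is void because one party was a minor."       (attacks A)
# C: "A birth certificate shows that party was already an adult." (attacks B)
arguments = {"A", "B", "C"}
attacks = {("B", "A"), ("C", "B")}
print(sorted(grounded_extension(arguments, attacks)))  # ['A', 'C']
```

Argument A is accepted because its only attacker, B, is itself defeated by the unattacked argument C; this chain is the step-by-step explanation described above. Where two arguments attack each other and nothing else decides between them, the grounded semantics accepts neither.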

Legal case-based reasoning applies a problem-solving approach that does not rely on strict rules (Keane and Kenny, 2019). Works in this category follow the analogical reasoning of the courtroom, in which a judge reasons with the instant case and prior cases (drawn from a case base), finding similarities and differences between them. In legal case-based reasoning, a set of domain-specific, legally significant features is defined, and a previous case is considered relevant (and potentially binding) according to the degree of similarity between its features and those of the current case. Legal case-based reasoning (LCBR) has been formalized for the purposes of computer reasoning as a three-step process: retrieve, revise and retain. Newer LCBR methods have brought modifications and advances in LCBR modelling. For example, Zheng et al. (2021) proposed a logical comparison approach that logically generalizes the formulas involved in case comparison and in identifying analogies, distinctions, and relevance. The approach is further extended to HYPO-style comparisons (where distinctions and relevance are not separately characterized) and to the temporal dynamics of case-based reasoning, modelling real-world cases. Notably, the authors claimed that such a case-based formalism is capable of refining the comparisons inherent to case-based reasoning.
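
The retrieve step, together with the identification of analogies and distinctions, can be sketched as follows. The sketch rests on simplifying assumptions: each case is reduced to a set of hypothetical binary features, and similarity is the Jaccard overlap of those sets, whereas HYPO-style systems use dimensions, weighting, and richer comparisons.

```python
from dataclasses import dataclass


@dataclass
class Comparison:
    similarity: float        # Jaccard overlap of legally significant features
    analogies: list[str]     # features shared by both cases
    distinctions: list[str]  # features present in only one of the two cases


def compare(current: set[str], prior: set[str]) -> Comparison:
    shared = current & prior
    union = current | prior
    return Comparison(
        similarity=len(shared) / len(union) if union else 1.0,
        analogies=sorted(shared),
        distinctions=sorted(union - shared),
    )


def retrieve(current: set[str], case_base: dict[str, tuple[set[str], str]]) -> tuple[str, str, Comparison]:
    """Retrieve step: return the most similar prior case, its outcome, and the comparison."""
    best = max(case_base, key=lambda name: compare(current, case_base[name][0]).similarity)
    features, outcome = case_base[best]
    return best, outcome, compare(current, features)


# Hypothetical case base: feature sets and outcomes are invented for illustration.
case_base = {
    "Prior 1": ({"written_contract", "late_delivery", "notice_given"}, "claimant succeeds"),
    "Prior 2": ({"oral_agreement", "late_delivery"}, "claim dismissed"),
}
current_case = {"written_contract", "late_delivery"}

best, outcome, why = retrieve(current_case, case_base)
print(best, "->", outcome)
print("analogies:", why.analogies, "distinctions:", why.distinctions)
```

The retrieved precedent's outcome is only a proposal; in the revise step a legal professional would weigh the listed distinction (here, whether notice was given) before relying on it.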

Inferential legal reasoning seeks to reason using evidential data, that is, primary sources of evidence whose existence cannot reasonably be disputed, such as witness statements given in court or forensic expert reports presented to the fact-finder. According to some authors, “inferential processes, broadly understood, are simply those cognitive processes in which beliefs are formed or maintained on the basis of the information possessed”. These inferential processes are usually considered equivalent to reasoning, as reasoning describes “the making or granting of assumptions called premises (starting points) and the process of moving toward conclusions”. Regarding the relation between inference/reasoning and argumentation, Walton (1990) claims that reasoning occurs within discourse or argument, whereas other scholars maintain that reasoning is discourse or argument. In either case, inferential reasoning is a prerequisite for argumentative discourse and at the same time appears within it. This approach thus justifies a view of argumentation as a dynamic and dialectical type of reasoning occurring in certain contexts of dialogue. From a practitioner's perspective, legal reasoning and legal argumentation are relatively straightforward to describe and define: they involve the reasoning lawyers use to solve a legal problem, advise a client, or justify a legal decision. Some might argue that it is simply ordinary reasoning applied within a legal context.

Hybrid legal reasoning integrates two or more different knowledge representation methods. The underlying assumption is that complex problems can be solved more easily with hybrid systems (Hamdani et al., 2021; Marques Martins, 2020). One of the most widely used types of integration in the legal domain combines rule-based and case-based reasoning. The rule-based approach has the advantage of structuring the explanation according to the underlying statute or legal doctrine, but it tends to be rather prescriptive and requires considerable knowledge engineering effort to construct the rule base. Rule-based systems solve problems from the ground up, while case-based systems rely on pre-existing situations to address similar new cases, so integrating the two approaches is logical and frequently beneficial. Another novel hybrid model of legal reasoning was proposed by Bex and Verheij (2011): by broadening the argumentative approach to evidential reasoning, it covers the entire reasoning process in a case, from evidence and facts to legal implications. It extends the authors' proposed hybrid theory of reasoning with evidence. The authors argue that the process from evidence to established facts, and from established facts to legal implications, cannot be isolated from the factual component of legal reasoning. This shows that the categories of AI-powered legal reasoning are not discrete but leave room for hybrid forms that fit the different facets of legal reasoning.
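
One way to combine the two approaches is to consult the rule base first and fall back to the most similar precedent when no rule applies. The sketch below follows that rules-first strategy; the strategy, the rules, the precedents, and the facts are all hypothetical choices made for illustration rather than a reconstruction of the cited systems. The returned explanation records which route produced the answer.

```python
# Hypothetical rule base: (required facts, outcome). Not actual legal rules.
RULES = [
    (frozenset({"written_contract", "signed_by_both"}), "contract enforceable"),
]

# Hypothetical precedents used when no rule applies.
CASES = {
    "Prior 1": (frozenset({"oral_agreement", "partial_performance"}), "contract enforceable"),
    "Prior 2": (frozenset({"oral_agreement", "no_performance"}), "contract unenforceable"),
}


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def decide(facts: frozenset[str]) -> tuple[str, str]:
    """Return (outcome, explanation): rule-based first, case-based as a fallback."""
    for conditions, outcome in RULES:
        if conditions <= facts:
            return outcome, f"rule applied; required facts {sorted(conditions)}"
    name = max(CASES, key=lambda n: jaccard(facts, CASES[n][0]))
    features, outcome = CASES[name]
    return outcome, f"analogy to {name}; shared facts {sorted(facts & features)}"


print(decide(frozenset({"written_contract", "signed_by_both"})))
print(decide(frozenset({"oral_agreement", "partial_performance", "deposit_paid"})))
```

The first query is settled by the rule, while the second, which no rule covers, is resolved by analogy to the closest precedent, and the explanation string says so.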

Different neural network architectures can be used for explainable artificial intelligence in judicial case studies. Each architecture has its own performance characteristics and is suited to particular data sets and circumstances. For explainable artificial intelligence it is particularly important that such architectures can form specific layers of a larger network; in that case, all parameters of importance to the system user would be generated in that part of the network.
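
The idea of an explainable layer within a larger network can be sketched with an attention-style weighting layer that records the weight it assigns to each input feature. Everything in the sketch is hypothetical: the feature names, the fixed relevance values that stand in for trained parameters, and the downstream network, which is not shown. Whether attention-style weights faithfully explain a network's predictions is itself debated in the XAI literature, so this illustrates where explanatory parameters could be exposed rather than guaranteeing explainability.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ExplainableWeightingLayer:
    """Hypothetical attention-style layer that exposes its feature weights.

    `relevance` stands in for learned parameters; in a real network they would
    be trained together with the layers that consume this layer's output.
    """

    def __init__(self, feature_names: list[str], relevance: list[float]):
        self.feature_names = feature_names
        self.relevance = relevance
        self.last_weights: dict[str, float] = {}

    def forward(self, inputs: list[float]) -> list[float]:
        weights = softmax([r * x for r, x in zip(self.relevance, inputs)])
        # Keep the weights so they can be shown to the user as the explanation.
        self.last_weights = dict(zip(self.feature_names, weights))
        # The weighted inputs would be passed on to the rest of the network.
        return [w * x for w, x in zip(weights, inputs)]


layer = ExplainableWeightingLayer(
    ["prior_rulings", "statute_match", "filing_delay"], [1.0, 0.5, -0.5]
)
outputs = layer.forward([2.0, 1.0, 1.0])
for feature, weight in sorted(layer.last_weights.items(), key=lambda kv: -kv[1]):
    print(f"{feature}: {weight:.2f}")
```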

Conclusions

Artificial intelligence is increasingly becoming a key component of modern justice systems, bringing potential benefits such as increased efficiency, faster resolution of disputes and reduced costs, although the judiciary adapts to technological innovations more slowly than other sectors. Artificial intelligence enables the analysis of vast amounts of data and the use of advanced algorithms for faster and more accurate decision-making, which streamlines administrative tasks and eases the workload of courts. Despite these advantages, there are significant challenges, including the risk of algorithmic bias, violations of fundamental rights, and issues of transparency and ethics. These problems can be addressed by applying the principles and techniques of explainable artificial intelligence. Such techniques make it possible to create tools that will lead to the widespread use of artificial intelligence in courtrooms and legislative systems.

Author Contributions

Conceptualization, Z.S. and S.M.; methodology, Z.S. and G.P.; software, D.D. and M.I.; formal analysis, Z.S. and S.M.; writing—original draft preparation, Z.S. and G.P.; writing—review and editing, D.D. and S.I. All authors have read and agreed to the published version of the manuscript.

Conflict of interests

The authors declare no conflict of interest.

Acknowledgments

The authors would like to express their gratitude to the respondents who participated in the research and the reviewers whose constructive suggestions significantly enhanced the quality of this work.

References

- Berk, R. (2021). Artificial Intelligence, Predictive Policing, and Risk Assessment for Law Enforcement. Annual Review of Criminology, 4, 209-237. https://doi.org/10.1146/annurev-criminol-051520-012342

- Bex, F., & Verheij, B. (2011). Legal shifts in the process of proof. Proceedings of the 13th International Conference on Artificial Intelligence and Law. https://doi.org/10.1145/2018358.2018360

- El Ghosh, M., Naja, H., Abdulrab, H., & Khalil, M. (2017). Towards a legal rule-based system grounded on the integration of criminal domain ontology and rules. Procedia Computer Science, 112, 632–642. https://doi.org/10.1016/j.procs.2017.08.109

- Faghiri, A. (2022). The Use Of Artificial Intelligence In The Criminal Justice System (A Comparative Study). Webology, 19(5), 593-613.

- Francisco, H., & Storbeck, M. (2022). Artificial intelligence and predictive policing: risks and challenges. EUCPN, 1-20.

- Hamdani, R. E., Mustapha, M., Amariles, D. R., Troussel, A., Meeus, S., & Krasnashchok, K. (2021). A combined rule-based and machine learning approach for automated GDPR compliance checking. Proceedings of the Eighteenth International Conference on Artificial Intelligence and Law, 40-49. https://doi.org/10.1145/3462757.3466081

- Keane, M. T., & Kenny, E. M. (2019). How case-based reasoning explains neural networks: A theoretical analysis of XAI using post-hoc explanation-by-example from a survey of ANN-CBR twin-systems. In: Bach, K., Marling, C. (eds) Case-Based Reasoning Research and Development. ICCBR 2019. Lecture Notes in Computer Science, 11680, 155-171. https://doi.org/10.1007/978-3-030-29249-2_11

- Laptev, V.A., & Feyzrakhmanova, D.R. (2024). Application of Artificial Intelligence in Justice: Current Trends and Future Prospects. Human-Centric Intelligent Systems, 4, 394-405. https://doi.org/10.1007/s44230-024-00074-2

- Marques M. J. (2020). A system of communication rules for justifying and explaining beliefs about facts in civil trials. Artificial Intelligence and Law, 28, 135–150. https://doi.org/10.1007/s10506-019-09247-y

- Mowbray, A., Chung, P., & Greenleaf, G. (2023). Explainable AI (XAI) in Rules as Code (RaC): The DataLex approach. Computer Law & Security Review, 48, 105771. https://doi.org/10.1016/j.clsr.2022.105771

- Nakad, H., Jongbloed, T., Salem, A.B.M., & Van Den Herik, H.J. (2015). Digitally Produced Judgements in Modern Court Proceedings. International Journal of Digital Society (IJDS), 6(4), 1102-1112. https://doi.org/10.20533/ijds.2040.2570.2015.0135

- Richmond, K. M., Muddamsetty, S. M., Gammeltoft Hansen, T., Olsen, H. P., & Moeslund, T. B. (2023). Explainable AI and Law: An Evidential Survey. Digital Society, 3(1), 1-33. https://doi.org/10.1007/s44206-023-00081-z

- Rocha, C., & Carvalho, J.A. (2023). Artificial Intelligence in the Judiciary: Uses and Threats. Computer Science and Engineering. https://ceur-ws.org/Vol-3399/paper17.pdf

- Sajid, A., Tamer, A., Shaker, E., Khan, M., Jose M. A., Roberto, C., Guidotti, R., Javier D. S., & Díaz-Rodríguez, N. (2023). Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Information Fusion, 99, 101805. https://doi.org/10.1016/j.inffus.2023.101805

- Souza R. de O., Amilton S. R., & Nascimento, S. E. G. (2022). Predicting the number of days in court cases using artificial intelligence. PLoS ONE, 17(5), e0269008. https://doi.org/10.1371/journal.pone.0269008

- Shi, C., Sourdin, T., & Li, B. (2021). The Smart Court – A New Pathway to Justice in China? International Journal for Court Administration 4, 12(1). https://doi.org/10.36745/ijca.367

- Tamošiūnienė, E., Terebeiza, Ž., & Doržinkevič, A. (2024). The Possibility of Applying Artificial Intelligence in the Delivery of Justice by Courts. Baltic Journal of Law & Politics, 17(1). https://doi.org/10.2478/bjlp-2024-0010

- Vassiliades, A., Bassiliades, N., & Patkos, T. (2021). Argumentation and explainable artificial intelligence: A survey. The Knowledge Engineering Review, 36, e5. https://doi.org/10.1017/S0269888921000011

- Zheng, H., Grossi, D., & Verheij, B. (2021). Logical comparison of cases. AI Approaches to the Complexity of Legal Systems XI-XII: AICOL International Workshops 2018 and 2020: AICOL-XI@JURIX 2018, AICOL-XII@JURIX 2020, XAILA@JURIX 2020. Lecture Notes in Computer Science, Vol. 13048 (Lecture Notes in Artificial Intelligence), Springer, 125-140. https://doi.org/10.1007/978-3-030-89811-3_9