Gender Classification Method Based on Gait Energy Motion Derived from Silhouette Through Wavelet Analysis of Human Gait Moving Pictures

Authors: Kohei Arai, Rosa Andrie Asmara

Journal: International Journal of Information Technology and Computer Science (IJITCS) @ijitcs

Issue: No. 3, Vol. 6, 2014.


A gender classification method based on Gait Energy Motion (GEM) derived through wavelet analysis of human gait moving pictures is proposed. Experiments with human gait moving pictures show that features extracted from the wavelet coefficients of silhouette images improve gender classification accuracy. The proposed method also shows the best classification performance, with a correct classification ratio of 97.63%.

Keywords: Gender Classification, Human Gait, Gait Energy Motion, Wavelet Analysis

Short URL: https://sciup.org/15012054

IDR: 15012054

Full text of the article: Gender Classification Method Based on Gait Energy Motion Derived from Silhouette Through Wavelet Analysis of Human Gait Moving Pictures

Published Online February 2014 in MECS DOI: 10.5815/ijitcs.2014.03.01

In recent decades, there has been increased awareness of the need to identify individuals effectively in order to prevent terrorist attacks. Many biometric technologies have emerged for identifying and verifying individuals by analyzing the face, iris, gait, fingerprint, palm print, or a combination of these traits [1].

Human gait classification and recognition offer some advantages over other recognition systems. A gait recognition system does not require the observed subject's attention or cooperation, and it can capture gait at a distance without requiring physical information from the subject [3][4][5].

As a recognition system, human gait recognition is divided into three main parts: image preprocessing, feature extraction and reduction, and classification.

There are two kinds of preprocessing methods: model-based and model-free. The model-based approach obtains a set of static or dynamic body parameters from a model of the human body. It tracks the motion of body components such as the head, legs, hips, and thighs. Gait signatures derived from these model parameters are then used to identify and recognize the subject. This benefit is significant for practical implementation, because reference and test sequences are unlikely to be captured from the same viewpoint. Model-free approaches focus on silhouette image sequences or on the motion of the whole human body. Model-free methods are not very sensitive to the quality of the silhouettes. One of their benefits is processing time: they require relatively small computer resources compared with model-based approaches. However, they are not robust to changes in viewpoint and scale [3].

This paper proposes a gender classification method based on a model-free approach, using the wavelet coefficients of the Gait Energy Motion derived from silhouette images, which are in turn extracted from the time series of gait moving pictures. The following section describes the proposed method, followed by experimental results with the CASIA data of the Chinese Academy of Sciences. Finally, conclusions are given together with some discussion.

II. Proposed Method

2.1 Preprocessing

Model-free methods focus on the shapes of silhouettes and/or the entire movement of the body; consequently, model-free approaches are usually not sensitive to the quality of the extracted silhouettes. Some researchers eliminate the view- and scale-dependence disadvantage by using multi-view cameras [25][26]. Another disadvantage of the model-free approach is that it requires relatively large computer resources.

Gait data are provided as video files, for which resolution and frame rate are the important parameters determining the data amount [2]. We implement both a model-free method and a model-based method and compare them to determine the most appropriate method for gait recognition or classification. Both the model-free and the model-based image representations use silhouettes in their preprocessing.

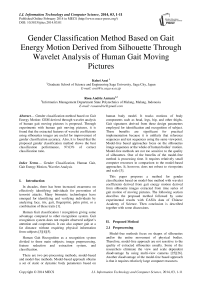

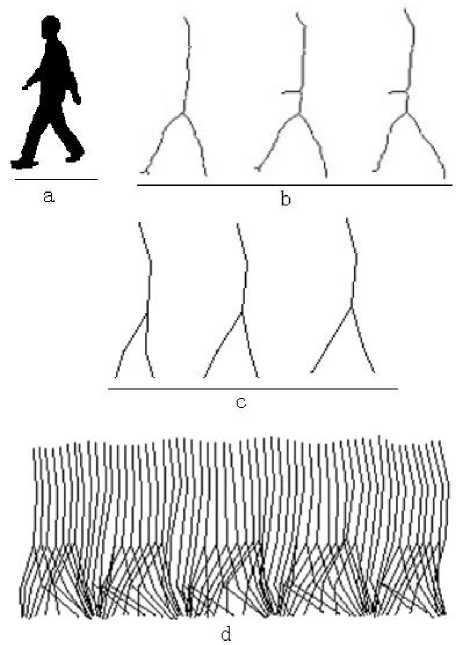

Fig. 1: Background subtraction for silhouettes images

Fig. 1 shows a schematic diagram of silhouette creation from the acquired video data of human gait. It assumes that (1) there are no holes inside the silhouette and (2) there are no shadows. First, background subtraction is used to obtain the silhouette images: the Red and Green color components are normalized by the sum of all the RGB color components, and the resulting images are binarized with Otsu's threshold. Second, background subtraction is used to remove shadows, which are extracted through a color-component analysis of each pixel followed by binarization with a threshold obtained from Otsu's method.

The proposed algorithm for silhouettes creation is as follows:

1) Read the current image and the background image: Image_current, Image_background.

2) Read the image size (the current and background images must have the same size): Image_row, Image_column, Image_dimension.

3) Determine the threshold manually, based on the experiments conducted. From the experiments, a threshold value of 10 was determined: work_thresh.

4) Sum the R, G, and B color components of the background image: $Im_{backsum} = Im_{back}(R) + Im_{back}(G) + Im_{back}(B)$.

5) If $Im_{backsum}$ contains a 0 value, change that value to a small number, e.g., 1e-30.

6) Normalize the R and G components of the background image and set the B component to zero:

   a) $Im_{backnorm}(R) = Im_{back}(R) / Im_{backsum}$

   b) $Im_{backnorm}(G) = Im_{back}(G) / Im_{backsum}$

   c) $Im_{backnorm}(B) = 0$

7) Repeat steps 4)–6) for the current image to obtain $Im_{currsum}$ and $Im_{currnorm}(R, G, B)$.

8) Calculate the absolute difference $A = |Im_{backnorm}(R, G, B) - Im_{currnorm}(R, G, B)|$.

9) Find the threshold of A automatically using Otsu's method: O_thresh.

10) If a value of A is smaller than O_thresh, change it to 0; otherwise, change it to 1.

11) $B = \left(|Im_{back}(R) - Im_{curr}(R)| > \text{work\_thresh}\right) \lor \left(|Im_{back}(G) - Im_{curr}(G)| > \text{work\_thresh}\right) \lor \left(|Im_{back}(B) - Im_{curr}(B)| > \text{work\_thresh}\right)$

12) $C(R) = A(R) \cdot B$; $C(G) = A(G) \cdot B$; $C(B) = A(B) \cdot B$

13) Convert C to a binary image.
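The thirteen steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: images are H × W × 3 RGB arrays, `work_thresh` defaults to 10 (reading step 3 as a single threshold of 10), Otsu's threshold in step 9 is computed over a 256-bin histogram of all values of A, the product in step 12 is taken as a logical AND, and step 13 marks a pixel as foreground if any channel of C is set. The function names are hypothetical.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Otsu's threshold for a real-valued array, using a `bins`-bin histogram."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    p = hist / hist.sum()                       # probability of each bin
    centers = (edges[:-1] + edges[1:]) / 2
    omega = np.cumsum(p)                        # class-0 probability per split
    mu = np.cumsum(p * centers)                 # class-0 first moment per split
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b2 = np.nan_to_num(sigma_b2, nan=0.0, posinf=0.0)  # empty class -> 0
    return edges[np.argmax(sigma_b2) + 1]       # values >= this are class 1


def normalized_rg(image):
    """Steps 4-6: r = R/(R+G+B), g = G/(R+G+B), and the B plane set to 0."""
    img = np.asarray(image, dtype=np.float64)
    total = img.sum(axis=2)
    total[total == 0] = 1e-30                   # step 5: avoid division by zero
    norm = np.zeros_like(img)
    norm[..., 0] = img[..., 0] / total
    norm[..., 1] = img[..., 1] / total          # norm[..., 2] stays 0 (step 6c)
    return norm


def silhouette(current, background, work_thresh=10):
    """Steps 1-13: boolean foreground mask from an RGB frame and background."""
    current = np.asarray(current)
    background = np.asarray(background)
    if current.shape != background.shape:       # step 2
        raise ValueError("current and background images must have the same size")
    # Steps 7-8: absolute difference of the normalized images.
    a = np.abs(normalized_rg(background) - normalized_rg(current))
    a = a >= otsu_threshold(a)                  # steps 9-10: binarize A
    # Step 11: any raw channel differs by more than work_thresh.
    diff = np.abs(background.astype(np.int32) - current.astype(np.int32))
    b = (diff > work_thresh).any(axis=2)
    c = a & b[..., np.newaxis]                  # step 12: C = A AND B per channel
    return c.any(axis=2)                        # step 13: single binary image
```

For example, a gray background with a red rectangle pasted into the current frame yields a mask covering exactly that rectangle. The raw-difference mask B guards against Otsu picking a meaningless threshold when A is nearly constant.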

The algorithm for Otsu's threshold method is:

1) Compute the histogram and the probability of each intensity level.

2) Set up the initial class probabilities $\omega_i(0)$ and class means $\mu_i(0)$.

3) Step through all possible thresholds $t = 1, \ldots,$ maximum intensity:

   a) Update $\omega_i$ and $\mu_i$.

   b) Compute the between-class variance $\sigma_b^2(t) = \omega_0(t)\,\omega_1(t)\,[\mu_0(t) - \mu_1(t)]^2$.

4) The desired threshold corresponds to the maximum $\sigma_b^2(t)$.
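A direct NumPy transcription of the four steps above for 8-bit grayscale images is sketched below; the function name is illustrative. It accumulates pixel counts and converts them to the class probabilities ω₀, ω₁ and class means μ₀, μ₁ at each candidate threshold.

```python
import numpy as np


def otsu_threshold_uint8(gray):
    """Otsu's method for an 8-bit grayscale image, following steps 1)-4).

    Returns the threshold t that maximizes the between-class variance;
    class 0 holds intensities < t and class 1 intensities >= t.
    """
    gray = np.asarray(gray)
    if gray.dtype != np.uint8:
        raise TypeError("expected an 8-bit (uint8) image")
    # Step 1: histogram of intensity levels 0..255 (probabilities = counts / n).
    counts = np.bincount(gray.ravel(), minlength=256)
    n = gray.size
    total_sum = float(np.dot(np.arange(256), counts))

    # Step 2: class 0 starts empty (omega_0 = 0); class 1 holds every pixel.
    n0, sum0 = 0, 0.0
    best_t, best_var = 0, -1.0

    # Step 3: step through thresholds t = 1 .. 255.
    for t in range(1, 256):
        n0 += int(counts[t - 1])             # 3a) move level t-1 into class 0
        sum0 += (t - 1) * float(counts[t - 1])
        n1 = n - n0
        if n0 == 0 or n1 == 0:
            continue                         # one class is empty: skip t
        omega0, omega1 = n0 / n, n1 / n
        mu0, mu1 = sum0 / n0, (total_sum - sum0) / n1
        var_b = omega0 * omega1 * (mu0 - mu1) ** 2   # 3b) sigma_b^2(t)
        if var_b > best_var:
            best_t, best_var = t, var_b
    # Step 4: the threshold with the maximum between-class variance.
    return best_t
```

For an image that is half intensity 50 and half intensity 200, every t from 51 to 200 gives the same maximal variance, and the strict comparison keeps the first one, t = 51.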

2.2 Model Based


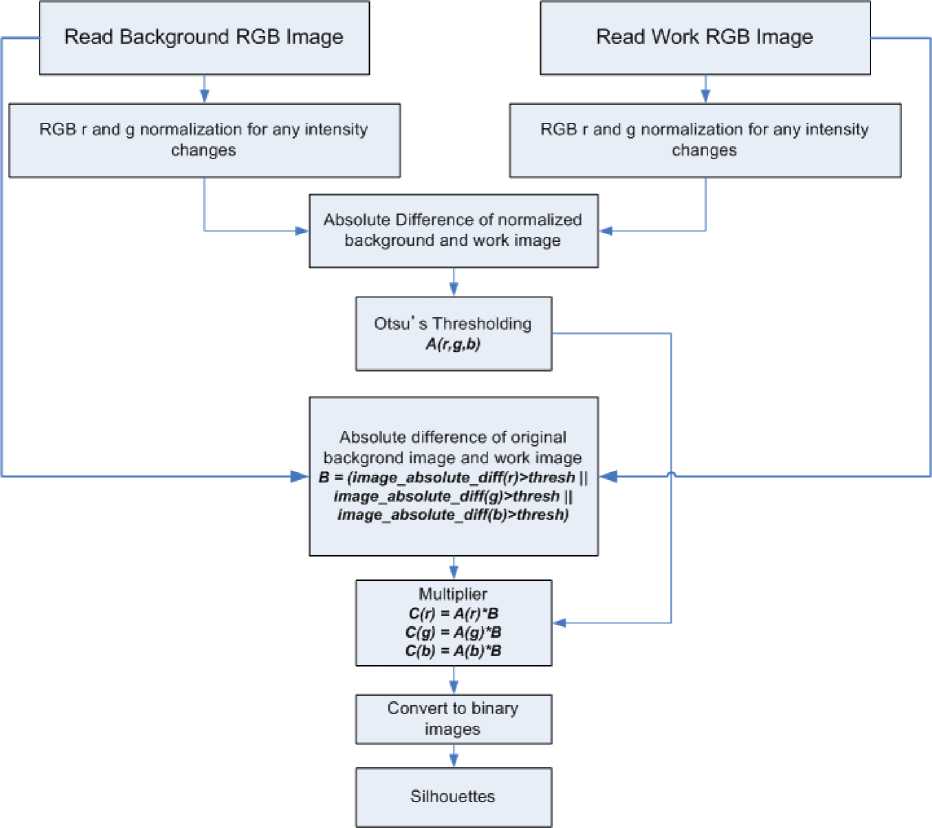

Fig. 2 shows the silhouette images obtained with the proposed preprocessing method.

After obtaining the silhouettes, we create the skeleton using morphological image-processing operations: dilation, erosion, and thinning. Dilation is applied three times and erosion six times, each with a structuring element of ones. The thinning algorithm is as follows:

1) Divide the image into two distinct subfields in a checkerboard pattern.

2) In the first subiteration, remove pixel p from the first subfield if conditions G1, G2, and G3 are all fulfilled.

3) In the second subiteration, remove pixel p from the second subfield if conditions G1, G2, and G3′ are all fulfilled.
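A minimal NumPy sketch of the checkerboard, two-subiteration thinning described above follows. The conditions G1, G2, G3, and G3′ are not spelled out in the text; the sketch assumes the Guo–Hall conditions as documented for MATLAB's `bwmorph(BW, 'thin')`, with neighbours x1 to x8 ordered counter-clockwise starting from the east neighbour. It is an illustration, not the authors' implementation, and it covers only thinning (the dilation and erosion passes are omitted).

```python
import numpy as np


def _neighbours(img):
    """x1..x8 = E, NE, N, NW, W, SW, S, SE neighbours as shifted boolean arrays."""
    padded = np.pad(img, 1, constant_values=False)
    h, w = img.shape
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1),
               (0, -1), (1, -1), (1, 0), (1, 1)]
    return [padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w] for dr, dc in offsets]


def thin(mask):
    """Two-subiteration checkerboard thinning of a binary image (sketch)."""
    img = np.asarray(mask, dtype=bool).copy()
    rr, cc = np.indices(img.shape)
    subfields = [(rr + cc) % 2 == 0, (rr + cc) % 2 == 1]   # step 1: checkerboard
    changed = True
    while changed:
        changed = False
        for second, field in enumerate(subfields):           # steps 2 and 3
            x = _neighbours(img)
            x.append(x[0])                                   # x9 = x1
            # G1: X_H(p) == 1, with b_i = ~x(2i-1) & (x(2i) | x(2i+1))
            xh = sum(~x[2 * i] & (x[2 * i + 1] | x[2 * i + 2]) for i in range(4))
            # G2: 2 <= min(n1, n2) <= 3
            n1 = sum(x[2 * k] | x[2 * k + 1] for k in range(4))
            n2 = sum(x[2 * k + 1] | x[2 * k + 2] for k in range(4))
            m = np.minimum(n1, n2)
            g1_g2 = (xh == 1) & (m >= 2) & (m <= 3)
            if second:   # G3': (x6 | x7 | ~x4) & x5 == 0
                g3 = ~((x[5] | x[6] | ~x[3]) & x[4])
            else:        # G3: (x2 | x3 | ~x8) & x1 == 0
                g3 = ~((x[1] | x[2] | ~x[7]) & x[0])
            delete = img & field & g1_g2 & g3
            if delete.any():
                img[delete] = False
                changed = True
    return img
```

Condition G2 requires at least two occupied neighbour groups, so line endpoints and isolated pixels are never deleted; a filled rectangle therefore shrinks to a thin line rather than vanishing.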

The created skeleton is then corrected and reconstructed to obtain a better skeleton representation. To do so, we use the average proportions of the human body to determine the positions of the major points needed for reconstruction: the head point, neck point, hip point, both knee points, and both ankle points.

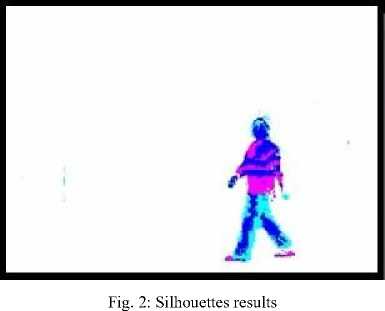

Fig. 3: Proportional average size of human body

We refer to the average proportions of the human body measured by Wilfrid Dempster et al. [6]. As shown in Fig. 3, the hip lies at the middle of the body height. The hip–knee length is about 25% of the total body height, the knee–ankle length about 27%, the hip–neck length 32%, and the neck–top-of-head length about 15%.

The procedure is as follows:

1) Read an image.
2) Create the silhouette.
3) Create the skeleton.
4) Determine the body height.
5) Determine the hip point (middle of the height).
6) Determine the neck point using the hip point as a reference.
7) Determine the top-of-head point using the neck point as a reference.
8) Determine the knee points using the hip point as a reference.
9) Determine the ankle points using the knee points as a reference.
10) Connect the points to one another with one-pixel-wide lines.
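Steps 4) to 9) can be sketched for the vertical positions of the major points. This is a minimal NumPy sketch, assuming a binary silhouette mask whose row index increases downward and taking the body height as the row span of the silhouette; the function and constant names are hypothetical, and locating the column positions of the left and right limbs is not covered. With the quoted fractions, the hip-to-ankle distance (25% + 27% = 52% of the height) slightly exceeds the half height below the hip, so the sketch clips the ankle to the lowest silhouette row.

```python
import numpy as np

# Segment lengths as fractions of total body height, as quoted above [6].
HIP_TO_KNEE = 0.25
KNEE_TO_ANKLE = 0.27
HIP_TO_NECK = 0.32
NECK_TO_HEAD_TOP = 0.15


def key_point_rows(mask):
    """Return the image row of each major point for a binary silhouette.

    Rows grow downward, so points above the hip get smaller row indices.
    """
    rows = np.flatnonzero(np.asarray(mask, dtype=bool).any(axis=1))
    if rows.size == 0:
        raise ValueError("empty silhouette")
    top, bottom = int(rows[0]), int(rows[-1])
    height = bottom - top                               # body height in pixels
    hip = top + height / 2                              # hip at mid-height
    neck = hip - HIP_TO_NECK * height                   # neck from hip
    head_top = neck - NECK_TO_HEAD_TOP * height         # head top from neck
    knee = hip + HIP_TO_KNEE * height                   # knee from hip
    ankle = min(knee + KNEE_TO_ANKLE * height, bottom)  # ankle, clipped
    points = {"head_top": head_top, "neck": neck, "hip": hip,
              "knee": knee, "ankle": ankle}
    return {name: int(round(row)) for name, row in points.items()}
```

For a silhouette spanning rows 0 to 200, this gives head top 6, neck 36, hip 100, knee 150, and ankle 200 (clipped from 204).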

Fig. 4(c) shows an example of the skeleton per frame, and Fig. 4(d) shows an example of the skeleton per frame sequence.

Fig. 4: (a) Skeleton image per frame, (b) skeleton image per video sequence, (c) skeleton per frame, (d) skeleton per frame sequence

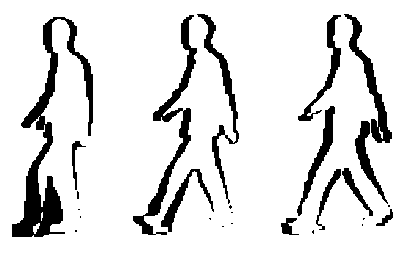

2.3 Model-Free Based

The model-free method uses the motion parameter per frame. We obtain the motion by applying background subtraction to the silhouettes, both per frame and per video sequence. Fig. 5(a) shows an example of human body motion per frame, and Fig. 5(b) shows an example of human motion per video sequence.
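The text does not spell out how motion is computed from the silhouettes. The sketch below assumes, purely for illustration, that the per-frame motion is the set of pixels whose binary silhouette value changes between consecutive frames, and that the per-sequence motion image is the fraction of frame transitions in which each pixel changed. This is a hypothetical reading, not the GEM definition of [27], and the function names are illustrative.

```python
import numpy as np


def motion_frames(silhouettes):
    """Per-frame motion (hypothetical): pixels that change between consecutive
    binary silhouettes. Input shape (T, H, W); output shape (T-1, H, W)."""
    s = np.asarray(silhouettes, dtype=bool)
    if s.ndim != 3 or s.shape[0] < 2:
        raise ValueError("expected at least two frames of shape (H, W)")
    return s[1:] ^ s[:-1]                    # XOR: pixel switched on or off


def motion_sequence(silhouettes):
    """Per-sequence motion image: fraction of transitions in which each pixel moved."""
    return motion_frames(silhouettes).mean(axis=0)
```

A single pixel moving one column to the right per frame produces two changed pixels per transition, and in the sequence image the pixel it passes through gets the value 1.0 while the start and end positions get 0.5.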


Fig. 5: (a) Motion image per frame, (b) motion image per video sequence

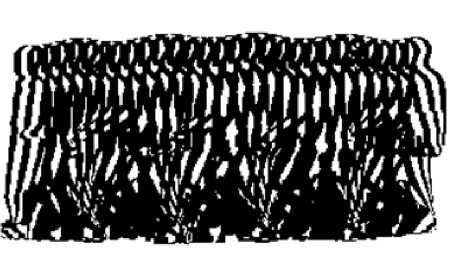

2.4 Discrete Wavelet Transform

The discrete wavelet transform (DWT) represents an image in terms of a set of wavelet functions at different locations and scales [7], [8]. Many researchers have used the 2D DWT, for example for image compression and digital watermarking [12], [13], [15], and some also use it for feature dimensionality reduction and feature extraction [10]. The 2D DWT decomposes an image into sub-band images. The decomposition of an image into wavelets involves a pair of waveforms: the high frequencies correspond to the detailed parts of the image and the low frequencies to its smooth parts. The DWT of an image, treated as a 2D signal, is derived from the 1D DWT. According to the characteristics of the DWT decomposition, a 1-level 2D DWT decomposes an image into four sub-band images, as shown in Fig. 6. These four sub-band images correspond to LL (approximation), HL (vertical), LH (horizontal), and HH (diagonal), respectively.
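As a worked illustration, assuming the orthonormal Haar filters (one common convention; the sign conventions and the naming of the horizontal and vertical sub-bands vary between texts and libraries), one level of the 2D Haar DWT maps each 2 × 2 pixel block to one coefficient in each of the four sub-bands:

$$\begin{pmatrix} p & q \\ r & s \end{pmatrix} \;\mapsto\; A = \tfrac{1}{2}(p+q+r+s), \quad H = \tfrac{1}{2}(p+q-r-s), \quad V = \tfrac{1}{2}(p-q+r-s), \quad D = \tfrac{1}{2}(p-q-r+s)$$

Here H takes differences between rows (horizontal edges) and V takes differences between columns (vertical edges). For the block with p = 1, q = 2, r = 3, s = 4:

$$A = 5,\quad H = -2,\quad V = -1,\quad D = 0, \qquad A^2 + H^2 + V^2 + D^2 = 30 = 1^2 + 2^2 + 3^2 + 4^2$$

The transform preserves the energy of the block, which is why sub-band energies can be compared as shares of a total.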

Approximation and detail coefficients are the results of the DWT decomposition of a given signal. A given function f(t) can be represented using the following formula:

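$$f(t) = \sum_{j=1}^{L}\sum_{k=-\infty}^{\infty} d(j,k)\,2^{-j/2}\,\varphi\!\left(2^{-j}t - k\right) + \sum_{k=-\infty}^{\infty} a(L,k)\,2^{-L/2}\,\theta\!\left(2^{-L}t - k\right)$$

where L is the decomposition level, j is the scale index, and k is the translation index. The approximation and detail coefficients can be expressed as follows,

$$a(L,k) = \frac{1}{\sqrt{2^{L}}}\int_{-\infty}^{\infty} f(t)\,\theta\!\left(2^{-L}t - k\right)dt$$

$$d(j,k) = \frac{1}{\sqrt{2^{j}}}\int_{-\infty}^{\infty} f(t)\,\varphi\!\left(2^{-j}t - k\right)dt$$

Fig. 6: 1-Level Decomposition 2D DWT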

Wavelets from different families can be constructed by choosing the mother wavelet φ(t) and the scaling function θ(t) [7]. We use the Haar wavelet, which is identical to the Daubechies wavelet of order 1, with level-1 decomposition. To make the characteristics of the wavelet coefficients easier to see, we use the energy of each coefficient and create a 2D scatter plot for every combination of coefficients. The energies of the coefficients over a frame sequence (video) are given by the following formulas:

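$$E_a = \sum_{i=1}^{N_{\text{video}}} \sqrt{\sum_{k=1}^{N_{\text{frame}}} \left|a_i(L,k)\right|^2}, \qquad E_d = \sum_{i=1}^{N_{\text{video}}} \sqrt{\sum_{k=1}^{N_{\text{frame}}} \left|d_i(j,k)\right|^2}$$

where $N_{\text{video}}$ is the number of frames in the video, $N_{\text{frame}}$ is the number of coefficients of a sub-band in one frame, and $a_i$ and $d_i$ are the approximation and detail coefficients of frame i.

Then we normalize the energy using the formulas below,

$$E_{\text{Total}} = E_a + E_d, \qquad \%E_a = \frac{100 \times E_a}{E_{\text{Total}}}, \qquad \%E_d = \frac{100 \times E_d}{E_{\text{Total}}}$$

The percentage ratio of the total energy is expressed as follows,

$$100\%\ \text{Energy} = \%E_a + \%E_d \tag{5}$$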
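A minimal NumPy sketch of these energy features follows, assuming a one-level orthonormal Haar DWT written out by hand (PyWavelets' `pywt.dwt2(x, 'haar')` offers the same decomposition, possibly with different sign conventions). For each sub-band, the per-frame energy is the square root of the sum of squared coefficients, summed over the frames of a video. As an assumption, the total energy is the sum over all four sub-bands (A, H, V, D), generalizing E_Total = E_a + E_d, and odd-sized frames are cropped to an even size. Function names are illustrative.

```python
import numpy as np


def haar_dwt2(image):
    """One-level orthonormal 2D Haar DWT; returns the A, H, V, D sub-bands."""
    x = np.asarray(image, dtype=np.float64)
    h, w = x.shape
    x = x[: h - h % 2, : w - w % 2]        # crop to even size (assumption)
    a, b = x[0::2, 0::2], x[0::2, 1::2]     # top-left, top-right of each block
    c, d = x[1::2, 0::2], x[1::2, 1::2]     # bottom-left, bottom-right
    return {
        "A": (a + b + c + d) / 2,           # approximation (low-low)
        "H": (a + b - c - d) / 2,           # horizontal detail: row differences
        "V": (a - b + c - d) / 2,           # vertical detail: column differences
        "D": (a - b - c + d) / 2,           # diagonal detail
    }


def energy_percentages(frames):
    """Per-band energy summed over frames, as a percentage of the total."""
    energy = {"A": 0.0, "H": 0.0, "V": 0.0, "D": 0.0}
    for frame in frames:
        for band, coeffs in haar_dwt2(frame).items():
            energy[band] += np.sqrt(np.sum(np.abs(coeffs) ** 2))
    total = sum(energy.values())
    if total == 0:
        raise ValueError("all frames are zero")
    return {band: 100.0 * e / total for band, e in energy.items()}
```

A pair such as `(pct["H"], pct["V"])` gives one point of the eHeV scatter plots discussed in Section 3.2; averaging instead of summing over frames leaves the percentages unchanged, because the common factor cancels.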

Normalization is then applied to the energy data using a simple normalization technique. Using these formulas, we evaluate four different preprocessing procedures:

1) Apply the wavelet transform to each individual skeleton frame and calculate the mean of all the energies in one video (using the Fig. 4(c) preprocessing data). This has a high computational cost.

2) Apply the wavelet transform to the skeleton frame sequence (using the Fig. 4(d) preprocessing data). This has a high computational cost.

3) Apply the wavelet transform to each single motion frame and average all the energies in one video (using the Fig. 5(a) preprocessing data). This has a low computational cost.

4) Apply the wavelet transform to the motion frame sequence and average all the energies in one video (using the Fig. 5(b) preprocessing data). This has a low computational cost.

3.2 Determination of Wavelet Coefficients used

III. Experimental Results3.1 Data Used

There are some gait datasets used widely by researchers today. Some research group had created human gait datasets for the last one decade. Some of the widely used datasets are the University of South Florida (USF) Gait Dataset, Southampton University (SOTON) Gait Dataset, and Chinese Academy of Sciences (CASIA) Gait Dataset. This paper reviews CASIA Gait Dataset to see their characteristics. CASIA Gait dataset has four kinds of datasets: Dataset A (perpendicular view dataset), Dataset B (multi-view dataset), Dataset C (infrared dataset), and Dataset D (foot pressure measurement dataset) [9]. As a beginning, we use the B class dataset in 90 degrees point of view.

Fig. 7: Frame sample of CASIA Human Gait Dataset

This paper also reviews the database using 2D DWT for the feature reduction and extraction. Fig.7 shows two examples of CASIA B class Human Gait Dataset male and female.

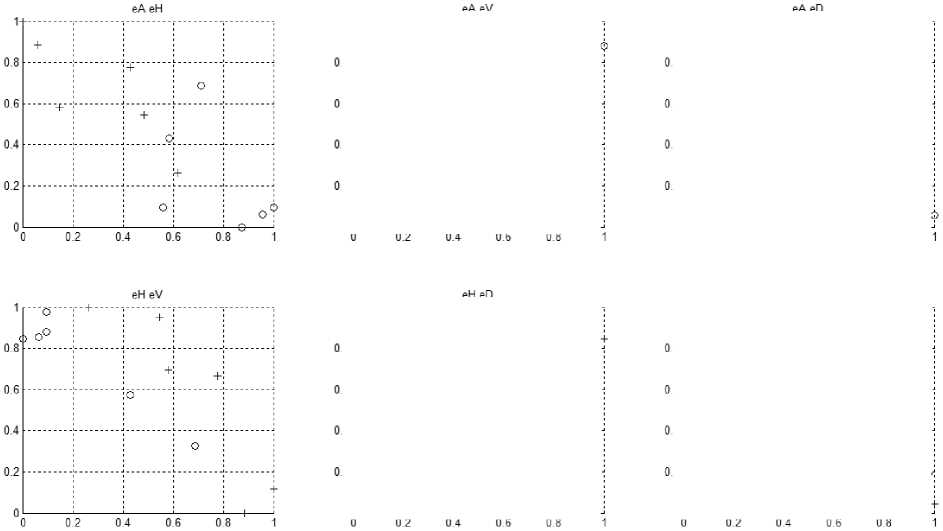

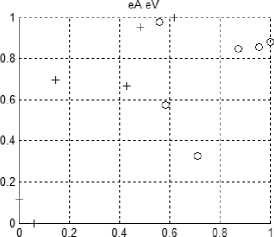

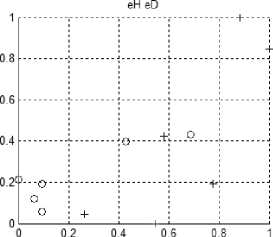

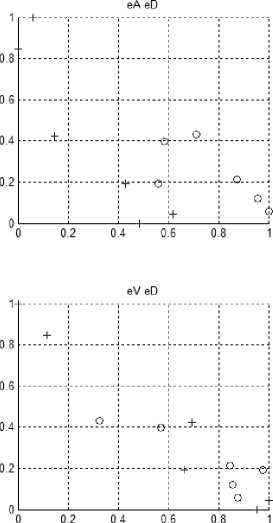

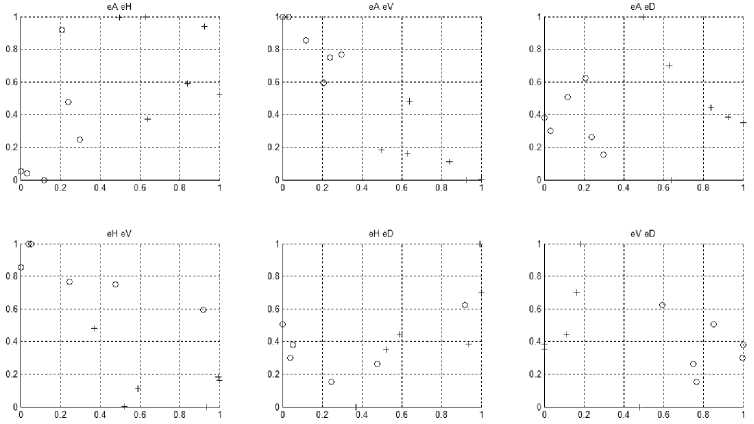

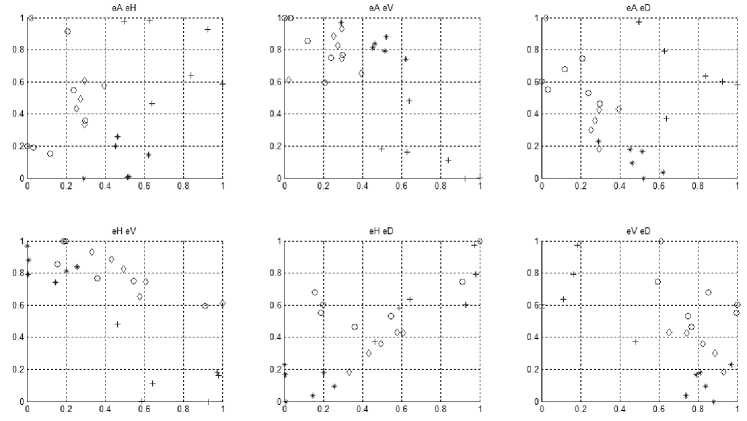

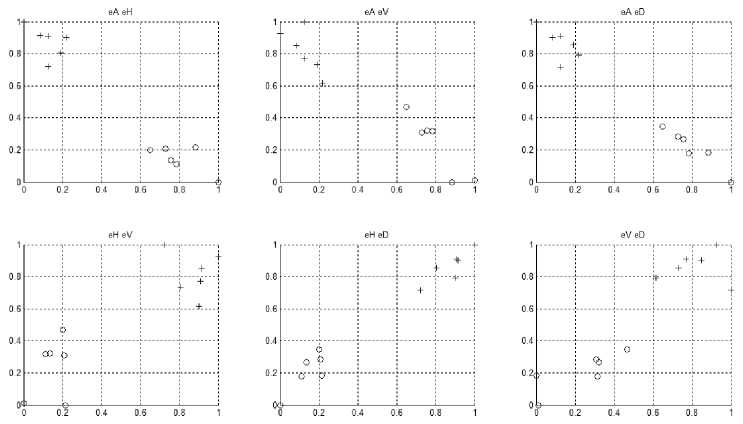

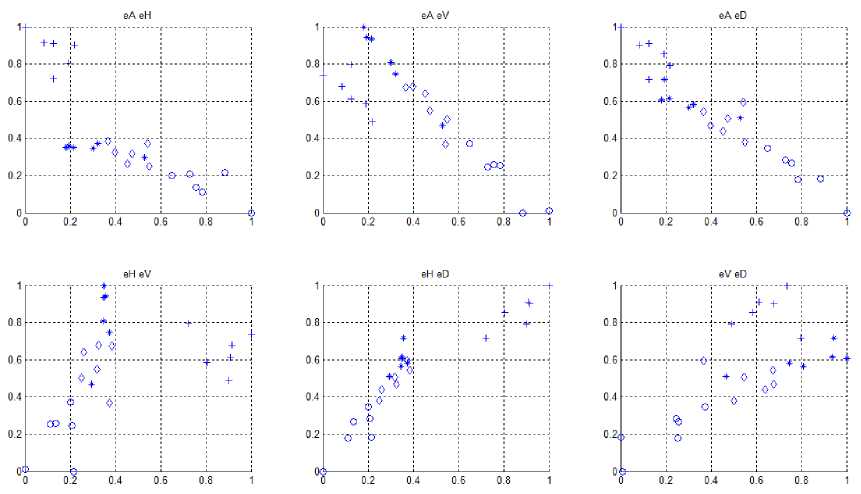

Fig.8 to 13 shows experimental results to determine which wavelet coefficient used. Those figures show the state for every combination and every procedure. One plotted data point in every figure represents data of one person in one video. One color represents one gait person video dataset. All the processes are applied with Haar wavelet at 1-level decomposition. All the possible combination is as follows:

1) eAeH (energy from Approximation and Horizontal Detail),

2) eAeV (energy from Approximation and Vertical Detail),

3) eAeD (energy from Approximation and Diagonal Detail),

4) eHeV (energy from Horizontal and Vertical Detail),

5) eHeD (energy from Horizontal and Diagonal Detail),

6) eVeD (energy from Vertical and Diagonal Detail).

Fig. 8 shows all possible energy combinations per frame for 2 persons. The eHeV combination shows the best clustering and separates the data, whereas the other combinations show overlapping data. This implies that eHeV is the best energy combination compared with the others.

Fig. 9 shows all possible energy combinations per sequence for 2 persons. Classification is easier here, except with the eHeD combination. It becomes difficult when more data are added, as shown in Fig. 10, where data for 2 more persons are added for a total of 4 persons: no energy combination can then be used for classification.

Fig. 11 shows that the result is better than the result in Fig. 7 for two persons. All energy combinations appear usable for classification, but the best is eHeD. As shown in Fig. 12, eHeD is also the best combination when data for 4 persons are used. In Fig. 13, also with data for 4 persons, the best energy combination is eHeV, and the eHeV result in Fig. 13 is even better than the eHeD result in Fig. 12.

These figures show that the best energy combination from the wavelet decomposition is that of the Horizontal and Vertical detail coefficients.

Fig. 8: Result from single skeleton frame of 2 persons

Fig. 9: Result from skeleton frame sequence of 2 persons

Fig. 10: Result from skeleton frame sequence of 4 persons

Fig. 11: Result from single motion frame of 2 persons

Fig. 12: Result from single motion frame of 4 persons

Fig. 13: Result from motion frame sequence of 4 persons

3.3 Classification Performance Comparison

This paper extracts motion features from all the frames using Gait Energy Motion (GEM) [27].

Gender classification performance evaluated on CASIA data is summarized in Table 1. Lee and Grimson [28] and Huang and Wang [25] evaluated classification performance with 25 males and 25 females from the CASIA data, whereas Li et al. [19] and we evaluated 31 males and 31 females. The proposed GEM-based method shows the best gender classification performance among these methods.

Table 1: Comparison of Gender Classification Performances Among the Proposed Method and the Conventional Methods

| Method | Dataset | CCR |
|---|---|---|
| Lee and Grimson [28] | 25 males & 25 females | 85.0% |
| Huang and Wang [25] | 25 males & 25 females | 85.0% |
| Li et al. [19] | 31 males & 31 females | 93.28% |
| Proposed (GEM with 2D DWT) | 31 males & 31 females | 97.63% |

3.4 Effectiveness of Features

As shown in Fig. 13, the extracted Gait Energy Motion (GEM) is concentrated at the upper left leg, followed by the top of the head. These features differ between males and females; therefore, GEM is useful for gender classification.

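Fig. 14, which shows the F value of the extracted GEM, also indicates that the GEM difference between males and females is concentrated at the left foot, followed by the hip and the right hand. The F value is defined as follows,

$$F = \frac{\dfrac{1}{c-1}\sum_{i=1}^{c} n_i\left(\bar{x}_i - \bar{x}\right)^2}{\dfrac{1}{n-c}\sum_{i=1}^{c}\sum_{j=1}^{n_i}\left(x_{ij} - \bar{x}_i\right)^2}$$

where $x_{ij}$ is the j-th sample of the extracted feature (a wavelet coefficient of GEM) in class i, $\bar{x}_i$ is the mean of class i, $\bar{x}$ is the overall mean, $n_i$ is the number of samples in class i, n is the total number of samples, and c is the number of classes. The F value therefore reflects the effectiveness of a feature: a large F value implies high classification performance.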
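The F value above is the one-way analysis-of-variance (ANOVA) statistic. A minimal NumPy sketch for a single feature, with one array of samples per class (c = 2 for gender), is shown below; the function name `f_value` is illustrative, and `scipy.stats.f_oneway` computes the same statistic if SciPy is available.

```python
import numpy as np


def f_value(groups):
    """One-way ANOVA F statistic for one feature.

    groups: sequence of 1-D sample arrays, one per class (e.g. male, female).
    """
    groups = [np.asarray(g, dtype=np.float64) for g in groups]
    c = len(groups)                                   # number of classes
    n = sum(g.size for g in groups)                   # total number of samples
    grand_mean = np.concatenate(groups).mean()
    # Between-class mean square: sum_i n_i (mean_i - mean)^2 / (c - 1)
    between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups) / (c - 1)
    # Within-class mean square: sum_i sum_j (x_ij - mean_i)^2 / (n - c)
    within = sum(((g - g.mean()) ** 2).sum() for g in groups) / (n - c)
    return between / within
```

For the classes [1, 2, 3] and [4, 5, 6], the between-class mean square is 13.5 and the within-class mean square is 1, so F = 13.5. For a feature matrix, the statistic can be computed per column (per wavelet coefficient) and the columns ranked by F.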

The difference in human gait between males and females is thus located at the left foot, hip, and right hand. Gender classification performance therefore improves when these features are used for classification. The wavelet coefficients reflect these features, which improves gender classification performance accordingly.

IV. Conclusion

From experiments on the CASIA gait data using the proposed gender classification method with a 2D DWT (Haar wavelet, 1-level decomposition), we conclude the following:

1) The best preprocessing data is motion in a frame sequence.

2) The best combination of parameters for classification is the Horizontal Detail and the Vertical Detail.

3) A human gait classification requires a number of energy features and decomposition levels.

This research is the first stage toward identifying a person from human gait data. In this research, we use a small number of energy features to create the feature vector, and these energy features are found to be effective for human gait classification.

Acknowledgment

References

[1] X. Qinghan, "Technology review – Biometrics Technology, Application, Challenge, and Computational Intelligence Solutions", IEEE Computational Intelligence Magazine, vol. 2, pp. 5-25, 2007.

[2] Jin Wang, Mary She, Saeid Nahavandi, Abbas Kouzani, "A Review of Vision-based Gait Recognition Methods for Human Identification", 2010 International Conference on Digital Image Computing: Techniques and Applications, IEEE Computer Society, pp. 320-327, 2010.

[3] N. V. Boulgouris, D. Hatzinakos, and K. N. Plataniotis, "Gait recognition: a challenging signal processing technology for biometric identification", IEEE Signal Processing Magazine, vol. 22, pp. 78-90, 2005.

[4] M. S. Nixon and J. N. Carter, "Automatic Recognition by Gait", Proceedings of the IEEE, vol. 94, pp. 2013-2024, 2006.

[5] Y. Jang-Hee, H. Doosung, M. Ki-Young, and M. S. Nixon, "Automated Human Recognition by Gait using Neural Network", First Workshops on Image Processing Theory, Tools and Applications, pp. 1-6, 2008.

[6] Wilfrid Taylor Dempster, George R. L. Gaughran, "Properties of Body Segments Based on Size and Weight", American Journal of Anatomy, vol. 120, no. 1, pp. 33-54, January 1967.

[7] Gilbert Strang and Truong Nguyen, Wavelets and Filter Banks, Wellesley-Cambridge Press, MA, 1997, pp. 174-220, 365-382.

[8] I. Daubechies, Ten Lectures on Wavelets, Philadelphia, PA: SIAM, 1992.

[9] CASIA Gait Database, http://www.cbsr.ia.ac.cn/English/index.asp

[10] Edward Wong Kie Yih, G. Sainarayanan, Ali Chekima, "Palmprint Based Biometric System: A Comparative Study on Discrete Cosine Transform Energy, Wavelet Transform Energy and Sobel Code Methods", Biomedical Soft Computing and Human Sciences, vol. 14, no. 1, pp. 11-19, 2009.

[11] Dong Xu, Shuicheng Yan, Dacheng Tao, Stephen Lin, and Hong-Jiang Zhang, "Marginal Fisher Analysis and Its Variants for Human Gait Recognition and Content-Based Image Retrieval", IEEE Transactions on Image Processing, vol. 16, no. 11, November 2007.

[12] Hui-Yu Huang, Shih-Hsu Chang, "A lossless data hiding based on discrete Haar wavelet transform", 10th IEEE International Conference on Computer and Information Technology, 2010.

[13] Kiyoharu Okagaki, Kenichi Takahashi, Hiroaki Ueda, "Robustness Evaluation of Digital Watermarking Based on Discrete Wavelet Transform", Sixth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, 2010.

[14] Bogdan Pogorelc, Matjaž Gams, "Medically Driven Data Mining Application: Recognition of Health Problems from Gait Patterns of Elderly", IEEE International Conference on Data Mining Workshops, 2010.

[15] B. L. Gunjal, R. R. Manthalkar, "Discrete Wavelet Transform based Strongly Robust Watermarking Scheme for Information Hiding in Digital Images", Third International Conference on Emerging Trends in Engineering and Technology, 2010.

[16] Turghunjan Abdukirim, Koichi Niijima, Shigeru Takano, "Design of Biorthogonal Wavelet Filters Using Dyadic Lifting Scheme", Bulletin of Informatics and Cybernetics, Research Association of Statistical Sciences, vol. 37, 2005.

[17] Seungsuk Ha, Youngjoon Han, Hernsoo Hahn, "Adaptive Gait Pattern Generation of Biped Robot based on Human's Gait Pattern Analysis", World Academy of Science, Engineering and Technology, 34, 2007.

[18] Maodi Hu, Yunhong Wang, Zhaoxiang Zhang and Yiding Wang, "Combining Spatial and Temporal Information for Gait Based Gender Classification", International Conference on Pattern Recognition, 2010.

[19] Xuelong Li, Stephen J. Maybank, Shuicheng Yan, Dacheng Tao, and Dong Xu, "Gait Components and Their Application to Gender Recognition", IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, vol. 38, no. 2, March 2008.

[20] Shiqi Yu, Tieniu Tan, Kaiqi Huang, Kui Jia, Xinyu Wu, "A Study on Gait-Based Gender Classification", IEEE Transactions on Image Processing, vol. 18, no. 8, August 2009.

[21] M. Hanmandlu, R. Bhupesh Gupta, Farrukh Sayeed, A. Q. Ansari, "An Experimental Study of different Features for Face Recognition", International Conference on Communication Systems and Network Technologies, 2011.

[22] S. Handri, S. Nomura, K. Nakamura, "Determination of Age and Gender Based on Features of Human Motion Using AdaBoost Algorithms", 2011.

[23] Massimo Piccardi, "Background Subtraction Techniques: Review", http://www-staff.it.uts.edu.au/~massimo/BackgroundSubtractionReview-Piccardi.pdf

[24] B. Bakshi, "Multiscale PCA with application to MSPC monitoring", AIChE J., vol. 44, pp. 1596-1610, 1998.

[25] G. Huang, Y. Wang, "Gender Classification Based on Fusion of Multi-view Gait Sequences", Proceedings of the Asian Conference on Computer Vision, 2007.

[26] W. Kusakunniran et al., "Multi-view Gait Recognition Based on Motion Regression Using Multilayer Perceptron", Proceedings of the IEEE International Conference on Pattern Recognition, pp. 2186-2189, 2010.

[27] Kohei Arai, Rosa Andrie, "Human Gait Gender Classification in Spatial and Temporal Reasoning", International Journal of Advanced Research in Artificial Intelligence, vol. 1, no. 6, 2012.

[28] L. Lee, W. E. L. Grimson, "Gait Analysis for Recognition and Classification", Proceedings of the Fifth IEEE International Conference on Automatic Face and Gesture Recognition, pp. 148-155, 2002.